【Abstract】 As container deployments grow in scale and business scenarios become more complex, container networking, the core component that supports microservice communication, service discovery, and traffic management, has become increasingly important and faces numerous challenges. In this context, choosing a CNI plugin suited to one's business scenario has become a key decision for enterprises adopting cloud-native technology. This article explores and compares the most popular CNI plugins (Flannel, Calico, and Cilium) and analyzes how bare metal and virtualized environments affect container network performance.

【Author】Fu Cheng, Banking Technology Manager, responsible for the operation and maintenance of online analytical processing applications, as well as the management of foundational software and cloud-native technologies.

Introduction

In cloud computing, compute is fundamental, storage is crucial, and networking is complex. Container networking is a critical component of a Kubernetes cluster, and many solutions for it have emerged in the form of CNI plugins. CNI (Container Network Interface) is a standard that defines how a container runtime invokes a plugin to configure a container's network when the container is created and to clean it up when the container is destroyed.
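
To make the interface concrete: under the CNI specification, the runtime executes the plugin binary, passes the operation and container details in environment variables (such as CNI_COMMAND, CNI_IFNAME, and CNI_NETNS) and the network configuration as JSON on stdin, and reads a JSON result from stdout. The Python sketch below models only that contract and configures nothing. The `handle` function and the hard-coded address are hypothetical; real plugins such as Flannel, Calico, and Cilium also create interfaces, program routes, and call their IPAM.

```python
import json

SUPPORTED_VERSIONS = ["1.0.0"]

def handle(env: dict, stdin_text: str) -> dict:
    """Model the CNI contract: operation in env vars, network config JSON on stdin, result JSON out.

    Nothing is configured here; the address below is a hypothetical stand-in
    for what a real plugin would obtain from its IPAM.
    """
    command = env.get("CNI_COMMAND")
    if command == "VERSION":
        return {"cniVersion": "1.0.0", "supportedVersions": SUPPORTED_VERSIONS}

    conf = json.loads(stdin_text)  # e.g. {"cniVersion": ..., "name": ..., "type": ...}
    if conf.get("cniVersion") not in SUPPORTED_VERSIONS:
        raise ValueError(f"unsupported cniVersion: {conf.get('cniVersion')!r}")
    if command == "ADD":
        # A real plugin would create a veth pair inside env["CNI_NETNS"] here.
        return {
            "cniVersion": conf["cniVersion"],
            "interfaces": [{"name": env["CNI_IFNAME"], "sandbox": env["CNI_NETNS"]}],
            "ips": [{"address": "10.244.1.5/24", "gateway": "10.244.1.1", "interface": 0}],
        }
    if command in ("DEL", "CHECK"):
        return {}  # success: a real plugin exits 0 and prints nothing
    raise ValueError(f"unsupported CNI_COMMAND: {command!r}")

if __name__ == "__main__":
    env = {"CNI_COMMAND": "ADD", "CNI_IFNAME": "eth0", "CNI_NETNS": "/var/run/netns/demo"}
    conf = json.dumps({"cniVersion": "1.0.0", "name": "demo-net", "type": "demo"})
    print(json.dumps(handle(env, conf), indent=2))
```

The sketch deliberately leaves out all kernel-side work so that only the runtime-to-plugin handshake is visible.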

1. Background Introduction

Amid the rapid development of cloud computing and cloud-native technology, containerization has become an important path in our digital transformation. From real-time trading systems in finance to high-concurrency traffic on e-commerce platforms and edge computing for IoT devices, container technology, with its lightweight footprint and fast deployment, is reshaping IT infrastructure across industries. However, as container deployments grow in scale and business scenarios become more complex, container networking, the core component that supports microservice communication, service discovery, and traffic management, has become increasingly important and faces numerous challenges:

(1) Diverse Industry Scenario Requirements

Financial industry: Requires extremely low network latency and strong security, including support for strict network isolation policies (e.g., millisecond-level latency requirements for securities trading systems).

E-commerce and Internet: Must absorb sudden bursts of high-concurrency traffic, so the network plugin needs high throughput and low resource overhead to avoid becoming a performance bottleneck.

Manufacturing and IoT: In edge computing scenarios, communication across geographically dispersed nodes must balance flexibility and stability while fitting into hybrid cloud architectures.

(2) Technical Challenges and Value

Performance and cost balance: Overlay networks (e.g., VXLAN) enable communication across network segments, but the cost of encapsulating and decapsulating every packet can hurt critical business workloads.

Security and compliance: Industries such as finance and healthcare must meet compliance requirements through fine-grained network policies.

Scalability: As an enterprise grows from hundreds to thousands of nodes, the routing efficiency of the network plugin directly determines operational complexity.
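
Part of the encapsulation cost is visible as header overhead. Assuming IPv4 and a 1500-byte underlay MTU, IP-in-IP adds a 20-byte outer IPv4 header, and VXLAN consumes 50 bytes of the underlay MTU (outer IPv4 20 + UDP 8 + VXLAN 8 + the encapsulated inner Ethernet header 14), which is why Flannel's VXLAN device typically runs with an MTU of 1450. The sketch below computes the resulting pod MTU and the share of a full-size packet spent on tunnel headers; the per-packet CPU cost of encapsulation is not modeled.

```python
# Per-packet header bytes that count against the underlay MTU (IPv4 assumed).
OVERHEAD_BYTES = {
    "none": 0,                 # native routing, e.g. Calico BGP mode or Flannel host-gw
    "ipip": 20,                # outer IPv4 header
    "vxlan": 20 + 8 + 8 + 14,  # outer IPv4 + UDP + VXLAN + inner Ethernet = 50
}

def pod_mtu(underlay_mtu: int, encap: str) -> int:
    """Largest inner IP packet that fits in one underlay packet without fragmentation."""
    return underlay_mtu - OVERHEAD_BYTES[encap]

def header_share(underlay_mtu: int, encap: str) -> float:
    """Fraction of a full-size underlay packet consumed by tunnel headers."""
    return OVERHEAD_BYTES[encap] / underlay_mtu

for mode in ("none", "ipip", "vxlan"):
    print(f"{mode:6s} pod MTU={pod_mtu(1500, mode)}  header share={header_share(1500, mode):.1%}")
```

With a 1500-byte underlay this prints pod MTUs of 1500, 1480, and 1450.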

In this context, selecting a suitable CNI plugin for one’s business scenario has become a key decision for enterprises implementing cloud-native solutions. In this article, we will focus on exploring and comparing the most popular CNI plugins: Flannel, Calico, and Cilium, as well as analyzing the impact of bare metal and virtualized environments on container network performance.

2. Introduction to Network Plugins

(1) Flannel

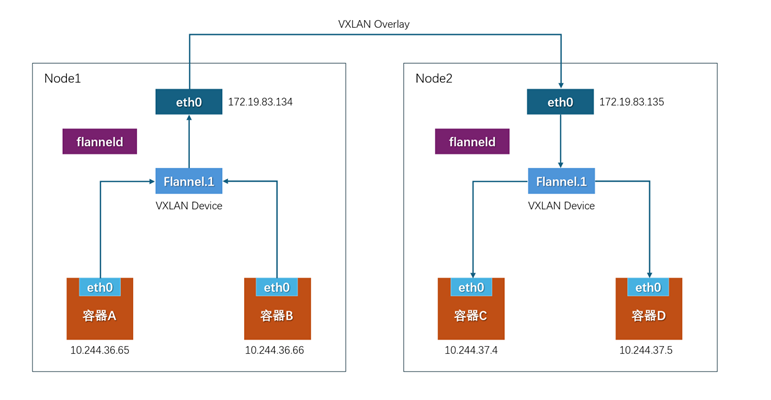

Flannel is a simple overlay network solution that is easy to understand and deploy. It supports several backends:

UDP: Early versions of Flannel encapsulated packets in UDP to forward them across hosts, which had some security and performance shortcomings.

VXLAN: The Linux kernel gained VXLAN support in version 3.7, released at the end of 2012, so newer versions of Flannel replaced the UDP backend with VXLAN. VXLAN is a tunneling protocol that builds a virtual Layer 2 network on top of a Layer 3 network. Flannel's network model is now based on VXLAN overlays, and VXLAN is the recommended backend.

Host-gw: The host-gateway backend forwards packets by installing, on each node, a route to every other node's pod subnet with that node as the next hop. This requires all nodes to be in the same local area network (Layer 2 network), which makes it unsuitable for large or frequently changing network environments, but it performs better than the overlay backends.

Flannel components:

cni0: A bridge device. For each pod, a veth pair is created: one end is eth0 inside the pod and the other end is a port on the cni0 bridge, so traffic sent from the pod's eth0 arrives at that bridge port. The cni0 device is assigned the first address of the subnet allocated to the node.

flannel.1: The overlay device that encapsulates and decapsulates VXLAN packets. Pod traffic between nodes is tunneled to the peer node through this device.

Advantages:
1. Simple and easy to use: suitable for users who are new to container networking.
2. Multiple backends: UDP, VXLAN, and host-gw.
3. Broad community support: a large community makes it easy to find solutions and tutorials.

Disadvantages:
1. Limited features: Flannel itself does not implement Kubernetes network policies.
2. Performance loss: overlay mode adds encapsulation/decapsulation overhead.
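
The host-gw behavior can be sketched as follows: each node installs one route per remote node, sending that node's pod subnet via the remote node's host IP. Because the next hop must be directly reachable, the sketch rejects peers outside the local subnet, which is the same-LAN requirement described above. The node addresses and pod subnets are hypothetical, the `host_gw_routes` helper is illustrative, and the output only imitates `ip route` syntax; Flannel programs these routes itself.

```python
import ipaddress

def host_gw_routes(local_if: str, nodes: dict[str, str]) -> list[str]:
    """Build host-gw style routes for one node.

    local_if: this node's address in CIDR form, e.g. "192.168.10.11/24" (hypothetical).
    nodes: every node's host IP -> the pod subnet Flannel leased to that node.
    """
    local = ipaddress.ip_interface(local_if)
    routes = []
    for host_ip, pod_subnet in sorted(nodes.items()):
        gateway = ipaddress.ip_address(host_ip)
        if gateway == local.ip:
            continue  # local pods are reached through the cni0 bridge, not a gateway
        if gateway not in local.network:
            # host-gw cannot use a next hop outside the shared Layer 2 segment
            raise ValueError(f"{host_ip} is not in {local.network}; host-gw needs a shared L2 network")
        routes.append(f"{ipaddress.ip_network(pod_subnet)} via {gateway}")
    return routes

nodes = {
    "192.168.10.11": "10.244.0.0/24",
    "192.168.10.12": "10.244.1.0/24",
    "192.168.10.13": "10.244.2.0/24",
}
for route in host_gw_routes("192.168.10.11/24", nodes):
    print(route)
```

Every node runs the same computation from its own point of view, so a cluster of N nodes carries N - 1 such routes per node, which is one reason host-gw suits smaller, stable networks.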

(2) Calico

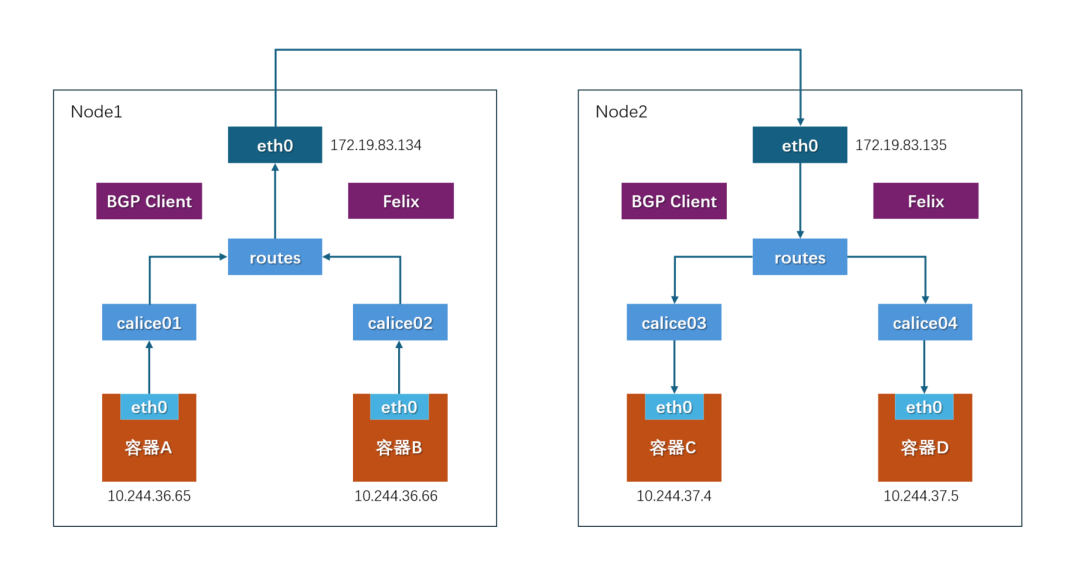

Calico is known for its powerful network policy capabilities and uses BGP routing, which makes it suitable for performance-sensitive scenarios. Its core components are:

Felix: An agent process that runs on every host and is mainly responsible for interface management and monitoring, routing, ARP management, ACL management and synchronization, and status reporting.

etcd: A distributed key-value store that keeps Calico's network metadata consistent and its network state accurate; it can be shared with Kubernetes.

BGP client (BIRD): Calico runs a BGP client on every host, implemented with BIRD, an independent open-source project that implements dynamic routing protocols such as BGP, OSPF, and RIP. In Calico, BIRD picks up the routes that Felix injects on the host and advertises them to the other nodes over BGP, so that pods on different hosts can reach each other.

Advantages:
1. Powerful network policies: access rules can combine multiple conditions.
2. Good performance: low overhead in non-overlay mode.
3. Broad integration: works with a variety of ecosystems and deployment environments.

Disadvantages:
1. Relatively complex configuration: a steep learning curve for beginners.
2. Dependency on additional components: increases system complexity and maintenance cost.

(3) Cilium

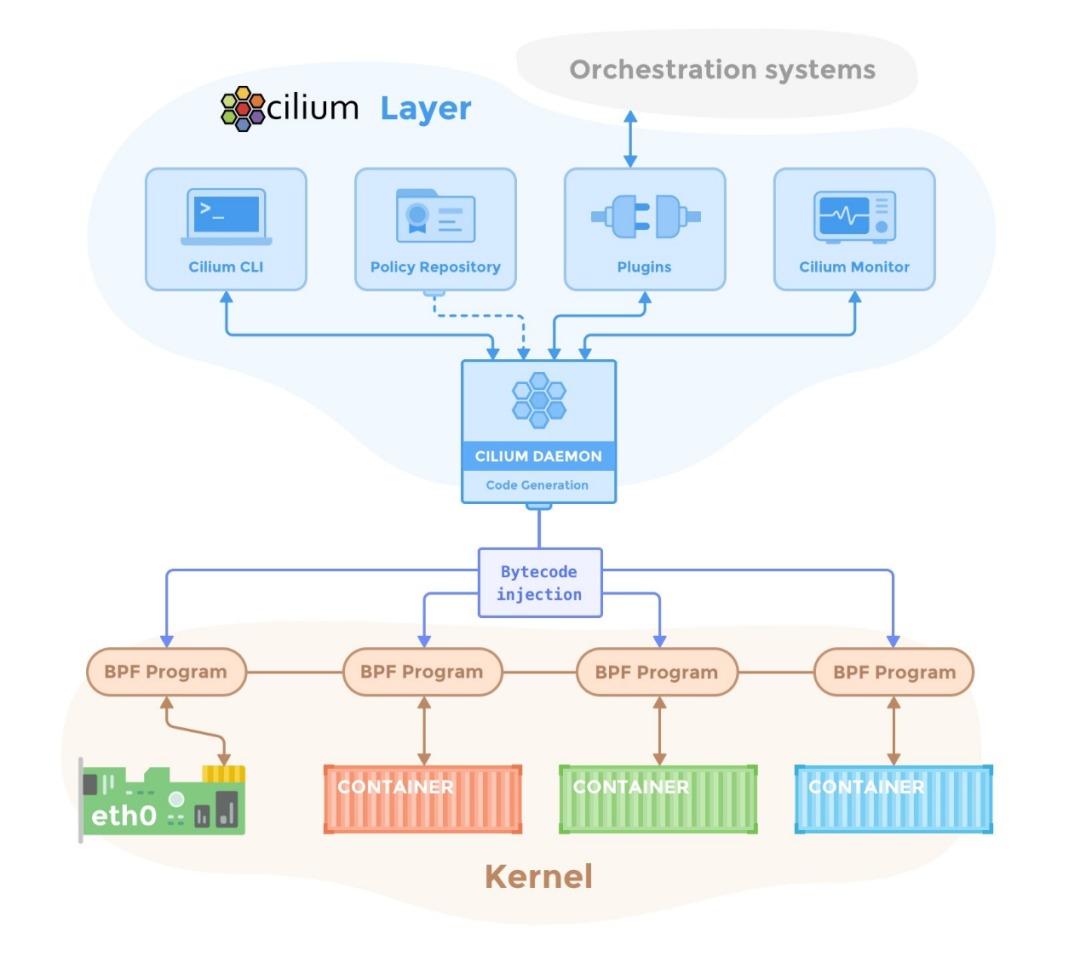

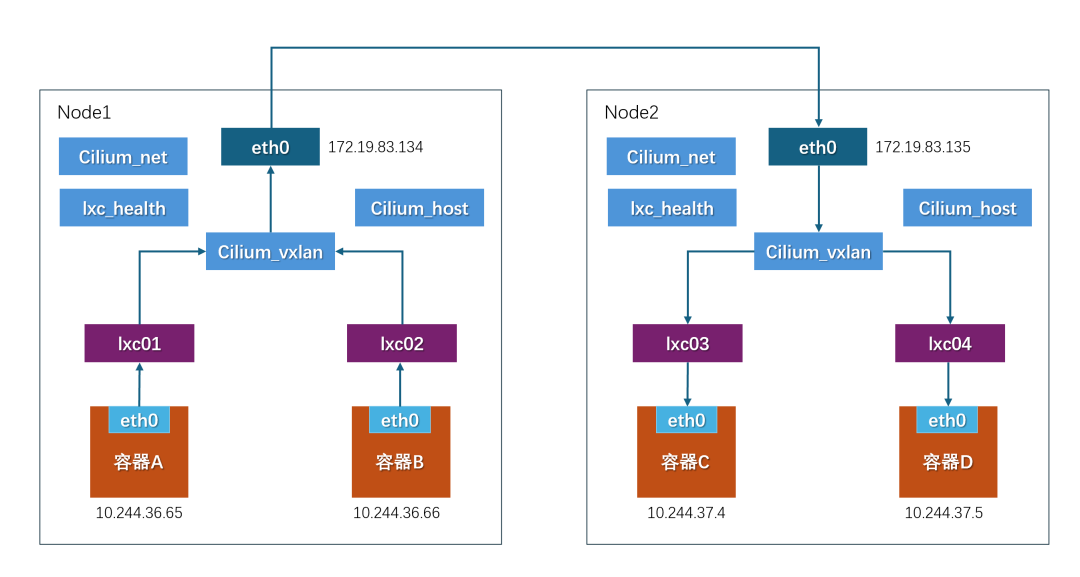

Cilium is based on eBPF and provides high-performance networking and deep observability. It sits between the container orchestration system and the Linux kernel: above, it receives network and security configuration for containers from the orchestration platform; below, it attaches eBPF programs in the Linux kernel to control how container traffic is forwarded and to enforce security policies.

Besides a key-value store, Cilium's architecture consists mainly of the Cilium Agent, the Cilium Operator, and a command-line client, the Cilium CLI.

The Cilium Agent is the core component. It runs on every host as a privileged container deployed through a DaemonSet. Acting as a user-space daemon, it interacts with the container runtime and the orchestration system through plugins to configure networking and security for the containers on its node, and it exposes an API for other components.

The Cilium Agent implements networking and security with eBPF programs: it generates eBPF programs based on container identities and the relevant policies, compiles them into bytecode, and loads them into the Linux kernel.

Advantages:
1. High performance based on eBPF: low latency and high throughput.
2. Deep observability: helps in understanding container network behavior.
3. Flexible network policies: enables fine-grained security policies.

Disadvantages:
1. High technical threshold: requires knowledge of kernel programming and eBPF.
2. Compatibility issues: may run into compatibility problems across kernel versions and operating system environments.
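
"Based on container identities" refers to Cilium's identity model: endpoints that share the same set of security-relevant labels are given the same numeric identity, and policy is evaluated against identities rather than pod IP addresses. The toy Python model below illustrates only that idea; the `IdentityAllocator` class, the numbering, and the `(source, destination, port)` rule format are hypothetical simplifications, and in Cilium the equivalent lookup is done by eBPF programs against kernel maps.

```python
from itertools import count

class IdentityAllocator:
    """Give every distinct label set one numeric identity (toy model, not Cilium's allocator)."""

    def __init__(self, first_id: int = 256):
        self._next = count(first_id)
        self._by_labels: dict[frozenset, int] = {}

    def identity_for(self, labels: dict[str, str]) -> int:
        key = frozenset(labels.items())
        if key not in self._by_labels:
            self._by_labels[key] = next(self._next)
        return self._by_labels[key]

def allowed(policy: set[tuple[int, int, int]], src_id: int, dst_id: int, port: int) -> bool:
    """Default deny: traffic passes only if (source identity, destination identity, port) is allowed."""
    return (src_id, dst_id, port) in policy

alloc = IdentityAllocator()
frontend = alloc.identity_for({"app": "frontend"})
backend = alloc.identity_for({"app": "backend"})
# Another frontend replica with the same labels reuses the identity, whatever its pod IP is.
assert alloc.identity_for({"app": "frontend"}) == frontend

policy = {(frontend, backend, 8080)}  # "frontend may call backend on port 8080"
print(allowed(policy, frontend, backend, 8080))  # True
print(allowed(policy, backend, frontend, 8080))  # False
```

Keying policy on identities means that scaling a deployment or rescheduling a pod to a new IP does not require rewriting rules, only mapping the new endpoint to its existing identity.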

Calico outperforms Flannel and is widely adopted, while Cilium offers the highest performance of the three but currently has fewer production deployments. Calico is the network plugin in wide use at our company.

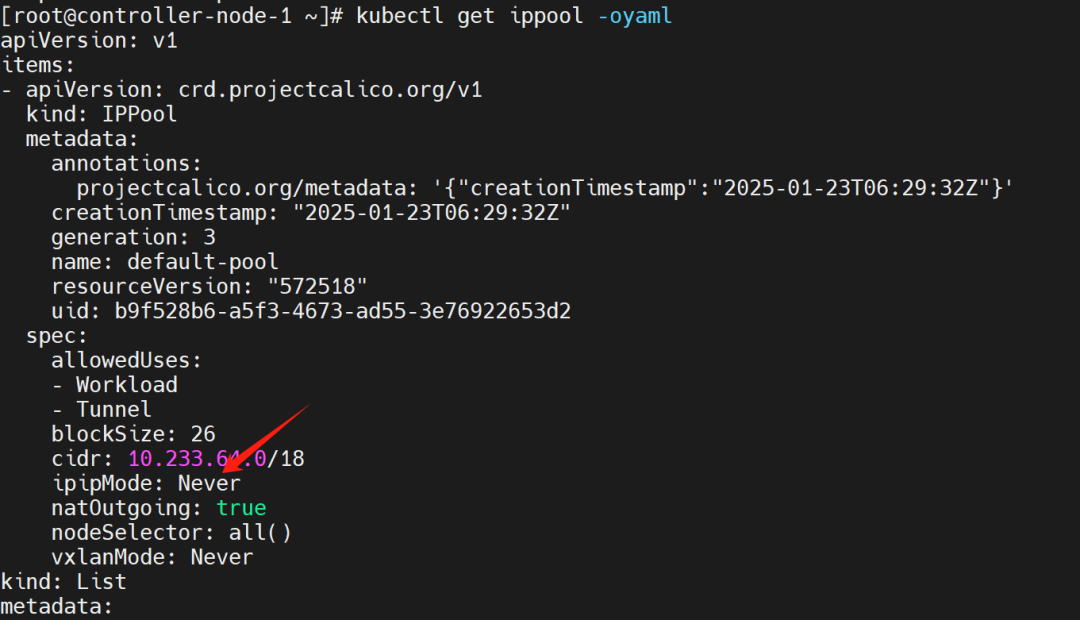

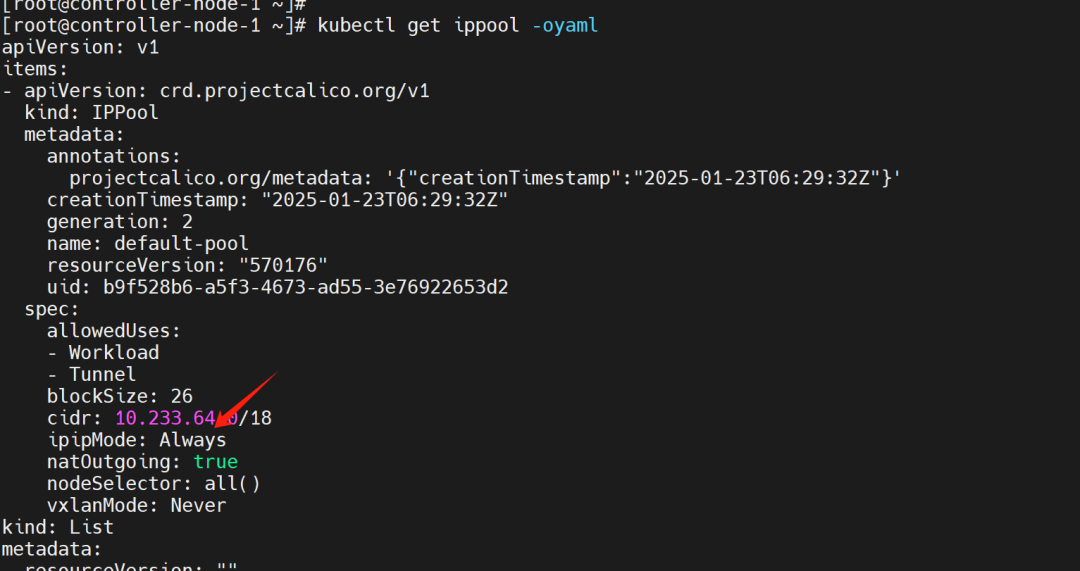

3. Comparison of Calico Network Modes

Calico does not use a bridge. Instead, it creates a veth pair for each container, with one end inside the container and the other end on the host, and packet forwarding relies on the routing rules maintained by Calico.
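
To illustrate what such routing rules can look like on a node without a bridge: each local pod gets a host route pointing at its host-side veth (Calico names these interfaces with a `cali` prefix), and each remote node's pod address block (Calico allocates IPv4 pod addresses in /26 blocks by default) is reached via that node's IP, as learned over BGP through BIRD. The sketch below generates such a table; the `calico_style_routes` helper, interface names, and addresses are hypothetical, and the strings only imitate `ip route` output.

```python
import ipaddress

def calico_style_routes(local_pods: dict[str, str], remote_blocks: dict[str, str]) -> list[str]:
    """Sketch of one node's routing table in Calico BGP mode (no bridge).

    local_pods: pod IP -> host-side veth name (hypothetical names with the "cali" prefix).
    remote_blocks: remote pod address block (CIDR) -> IP of the node that owns it.
    """
    routes = []
    for pod_ip, veth in sorted(local_pods.items()):
        # One host route per local pod, delivered straight into the pod's veth pair.
        host_route = ipaddress.ip_network(f"{pod_ip}/32")
        routes.append(f"{host_route} dev {veth} scope link")
    for block, node_ip in sorted(remote_blocks.items()):
        # Remote blocks are learned from BGP peers and installed by BIRD.
        routes.append(f"{ipaddress.ip_network(block)} via {node_ip} proto bird")
    return routes

for line in calico_style_routes(
    {"10.100.1.2": "cali1a2b3c4d5e6", "10.100.1.3": "cali7f8e9d0c1b2"},
    {"10.100.2.0/26": "10.1.2.15"},
):
    print(line)
```

Because every hop is a plain L3 route, no bridge or tunnel device sits on the data path in pure BGP mode.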

(1) Mode Comparison

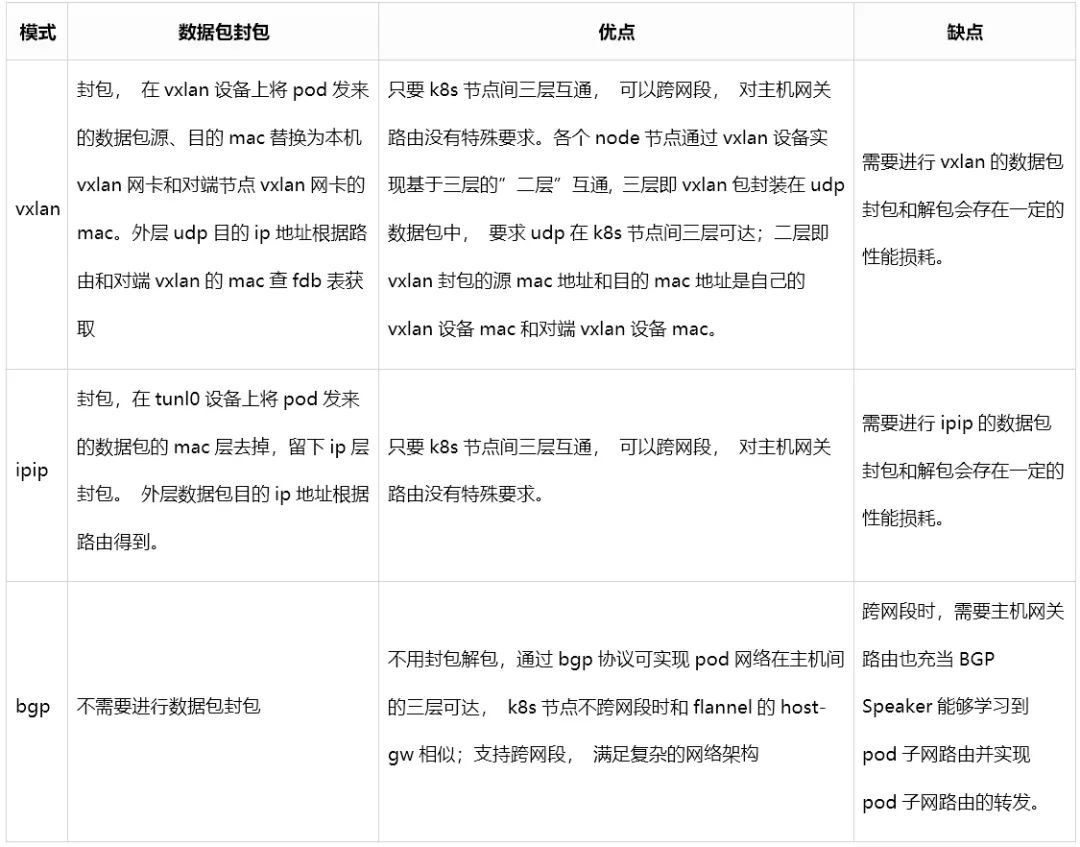

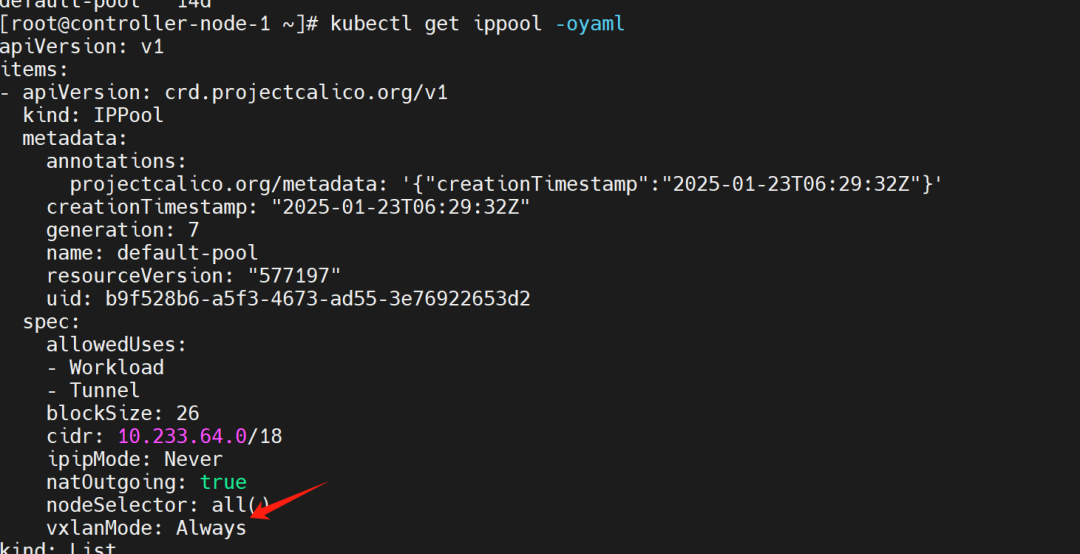

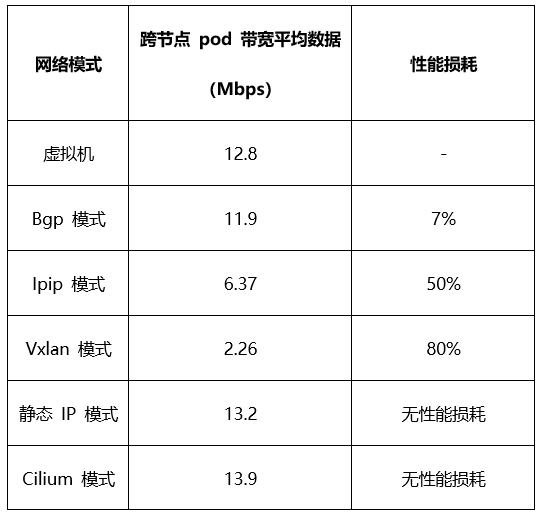

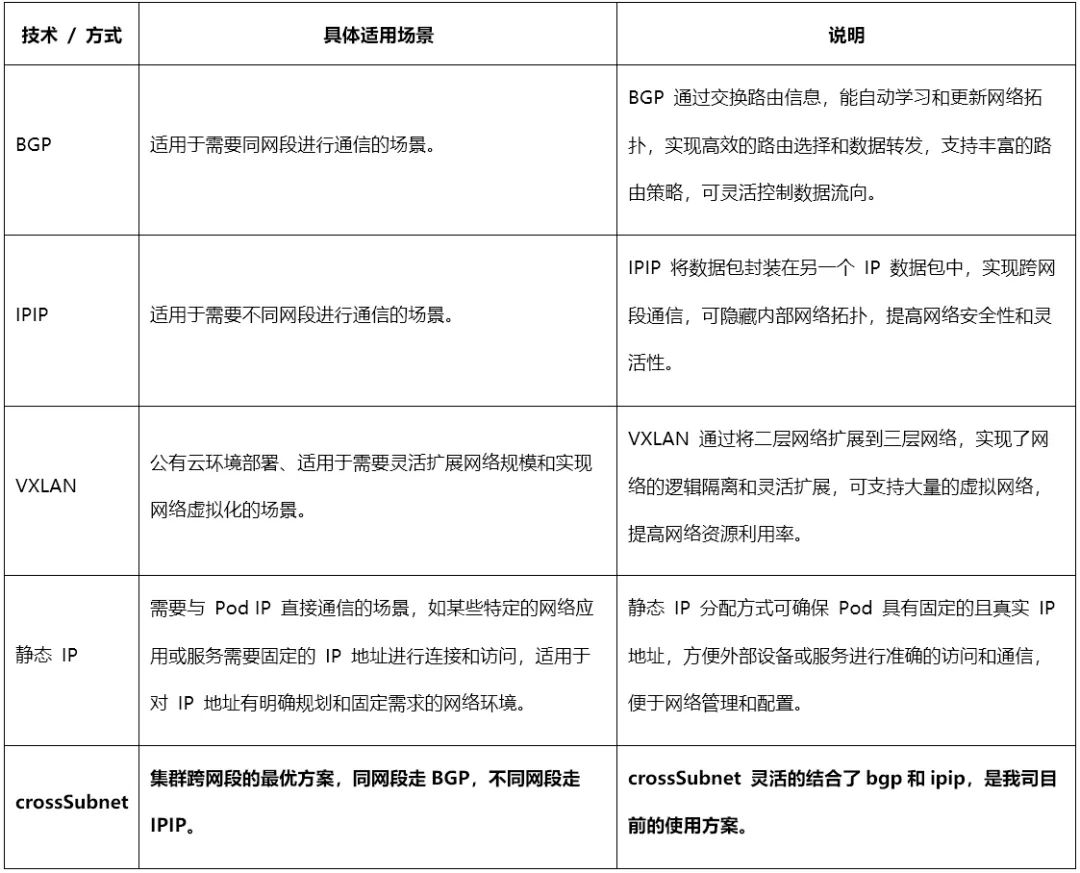

The technical characteristics of various modes of Calico are compared in the table below:

Both VXLAN mode and IPIP mode can be set to CrossSubnet. In that case, VXLAN or IPIP encapsulation is used only for traffic between pods on Kubernetes nodes in different subnets, while traffic between pods on nodes in the same subnet is routed directly as in BGP mode: Kubernetes nodes in the same subnet act as BGP speakers and exchange the routes for their pod subnets, so they can reach each other without encapsulation.
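
The CrossSubnet decision can be sketched as a simple subnet test. The mode names below mirror the values accepted by the `ipipMode` and `vxlanMode` fields of a Calico IP pool (`Always`, `CrossSubnet`, `Never`); the `pod_traffic_path` function itself is a hypothetical illustration, not Calico's code, and it assumes node addresses are known together with their prefix length.

```python
import ipaddress

def pod_traffic_path(src_node: str, dst_node: str, encap: str, mode: str) -> str:
    """Decide how cross-node pod traffic is carried under an IP pool's encapsulation mode.

    src_node/dst_node: node addresses with prefix length, e.g. "10.1.2.14/24".
    encap: "IPIP" or "VXLAN"; mode: "Always", "CrossSubnet" or "Never".
    """
    if mode == "Never":
        return "routed"  # plain BGP routing, no encapsulation
    if mode == "Always":
        return encap
    if mode == "CrossSubnet":
        src = ipaddress.ip_interface(src_node)
        dst = ipaddress.ip_interface(dst_node)
        return "routed" if dst.ip in src.network else encap
    raise ValueError(f"unknown mode: {mode}")

print(pod_traffic_path("10.1.2.14/24", "10.1.2.15/24", "IPIP", "CrossSubnet"))    # routed
print(pod_traffic_path("10.1.2.14/24", "10.29.12.212/24", "IPIP", "CrossSubnet"))  # IPIP
```

The first call uses the two test nodes from this section, which share a subnet, so their pod traffic would not be encapsulated.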

(2) Comparison Testing

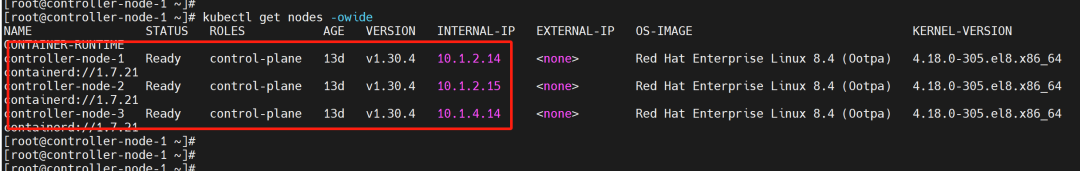

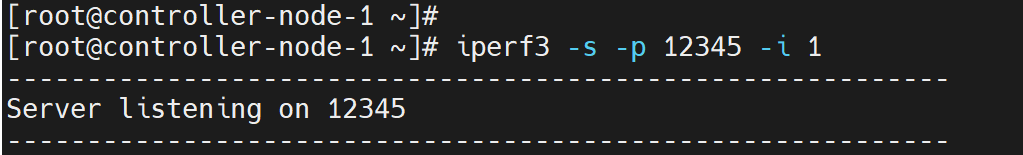

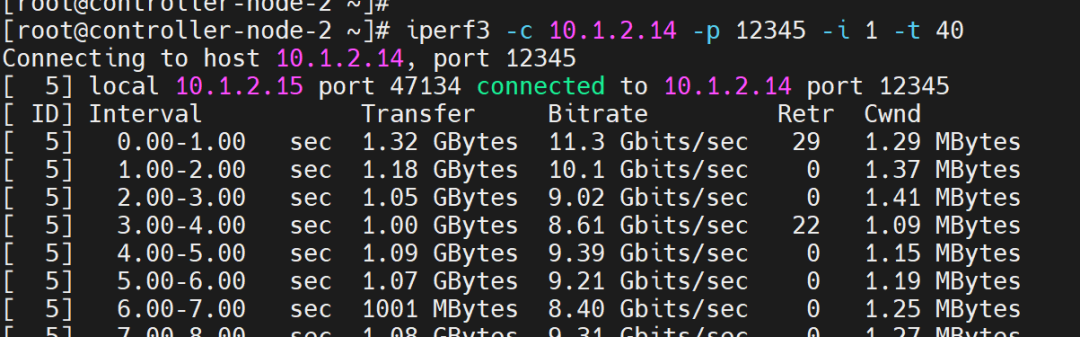

1. Virtual Machine Bandwidth Testing

Cluster situation:

Server side: 10.1.2.14

Client side: 10.1.2.15

Test results:

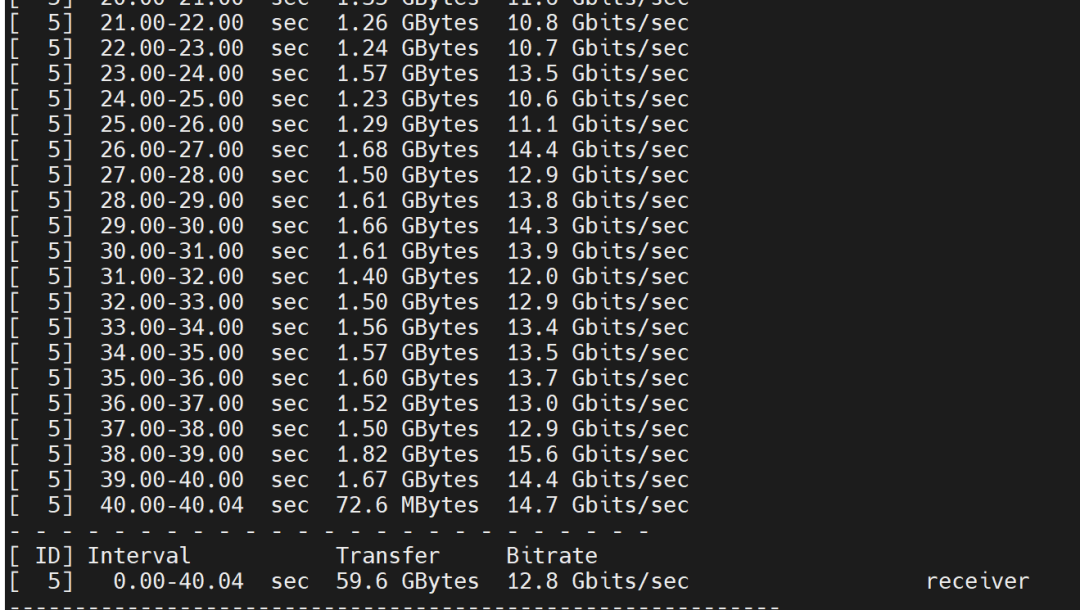

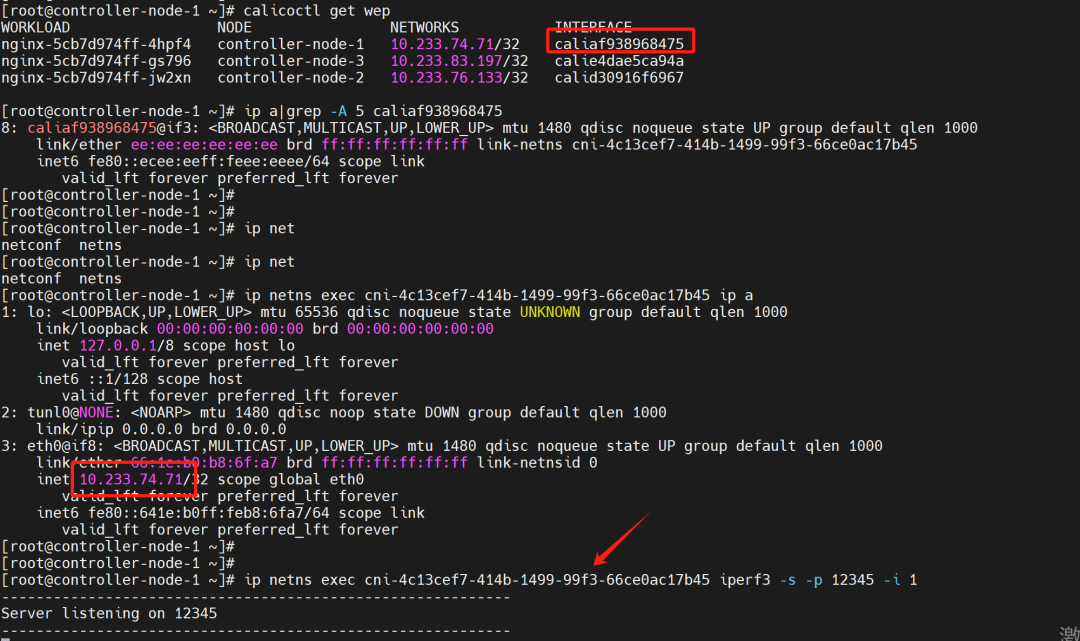

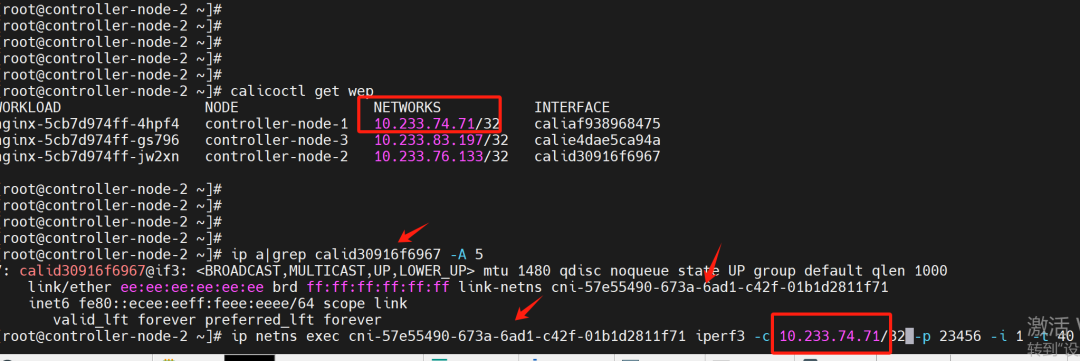

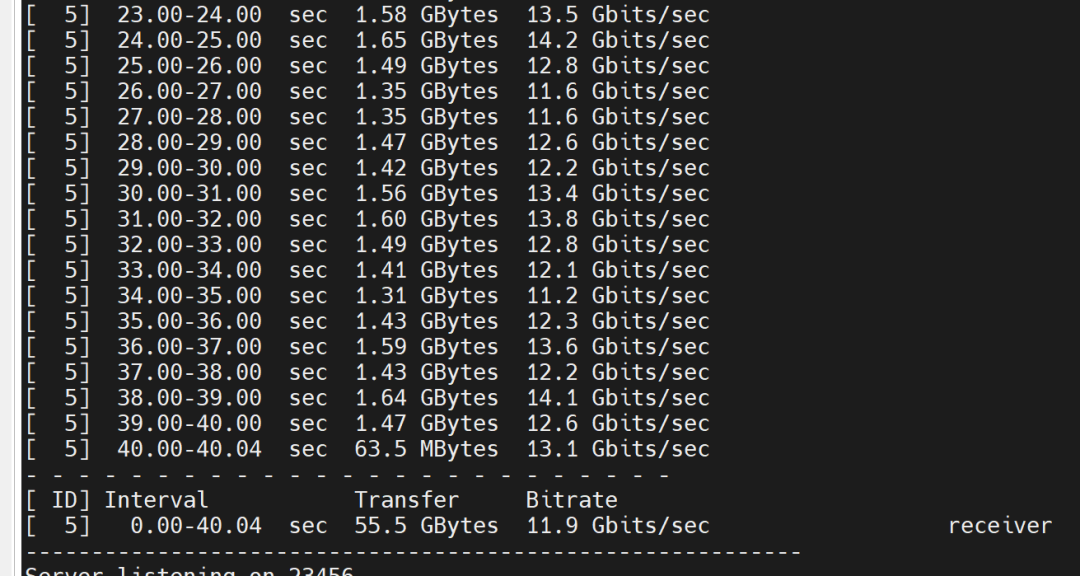

2. Bandwidth Testing Between Pods in BGP Mode

Server side: Pod on node 10.1.2.14

Client side: Pod on node 10.1.2.15

Test results:

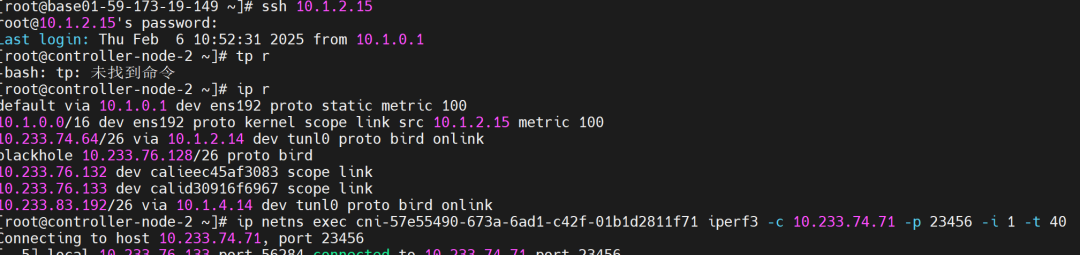

3. Bandwidth Testing Between Pods in IPIP Mode

Server side: Pod on node 10.1.2.14

Client side: Pod on node 10.1.2.15

Test results:

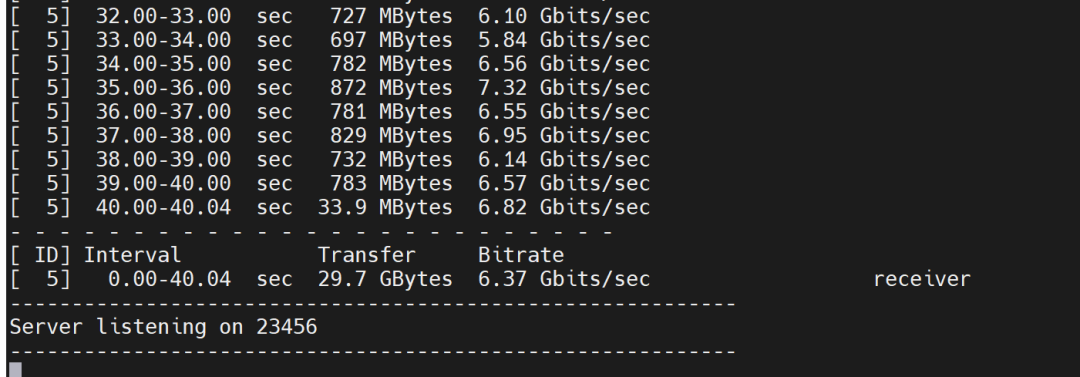

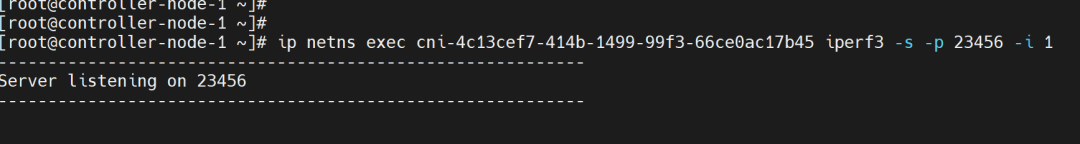

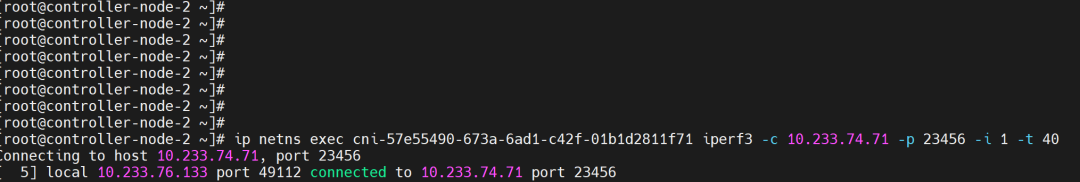

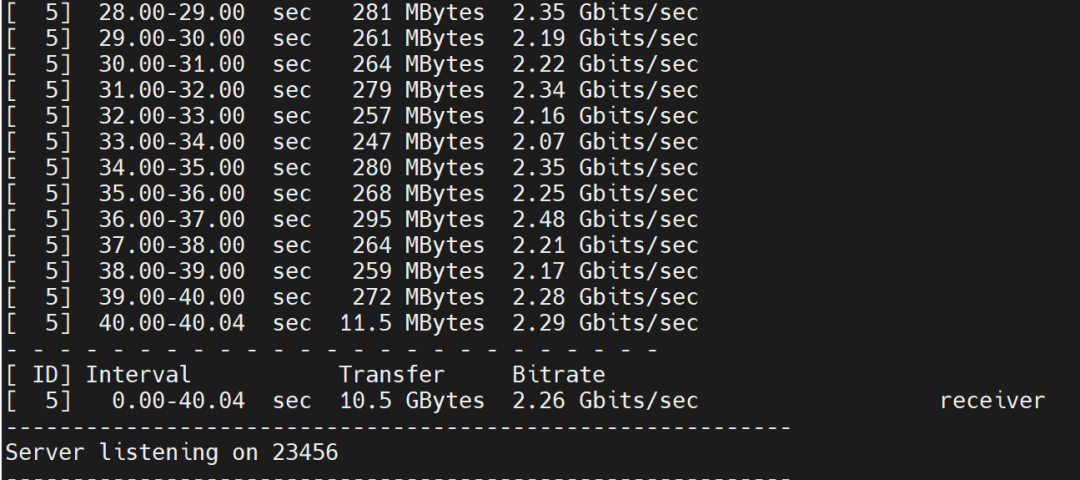

4. Bandwidth Testing Between Pods in VXLAN Mode

Server side: Pod on node 10.1.2.14

Client side: Pod on node 10.1.2.15

Test results:

5. Static IP Mode (i.e., Macvlan) Bandwidth Testing Between Pods

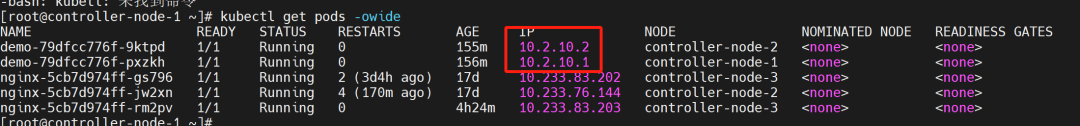

Server side: Pod IP address is 10.2.10.1

Server side: Pod IP address is 10.2.10.1 Client side: Pod IP address is 10.2.10.2

Client side: Pod IP address is 10.2.10.2  Test results:

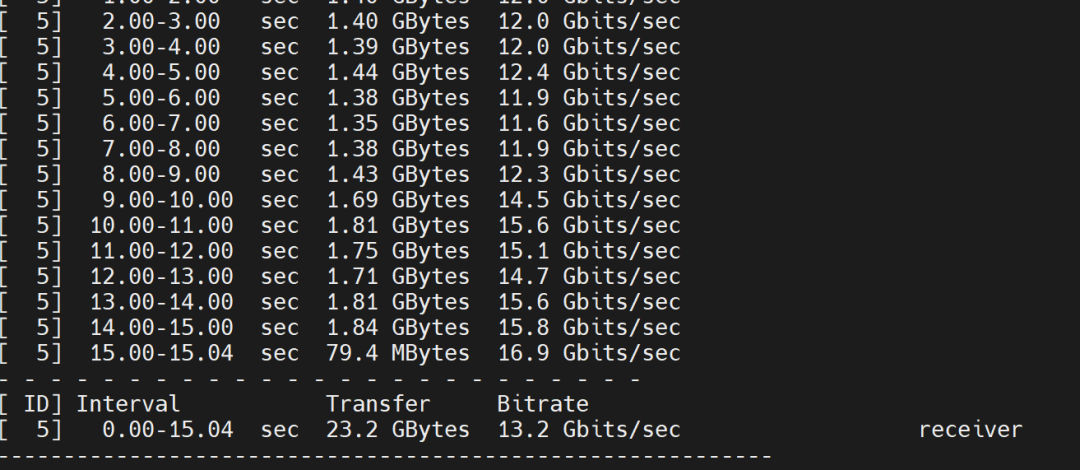

Test results:

(3) Testing Conclusions

Based on the above comparison tests, the following results can be drawn:

(4) Applicable Scenarios

4. Bare Metal vs Virtualization

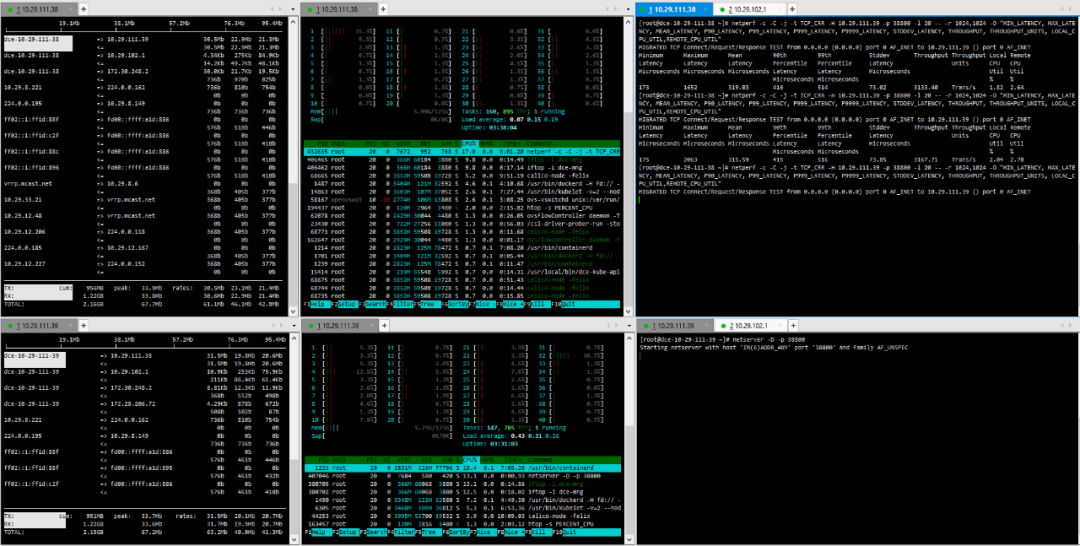

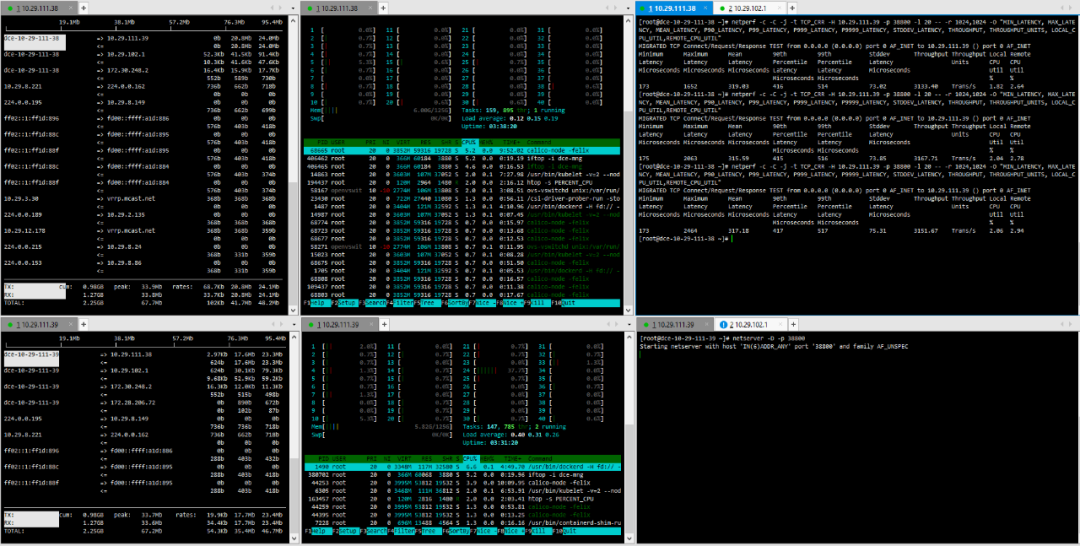

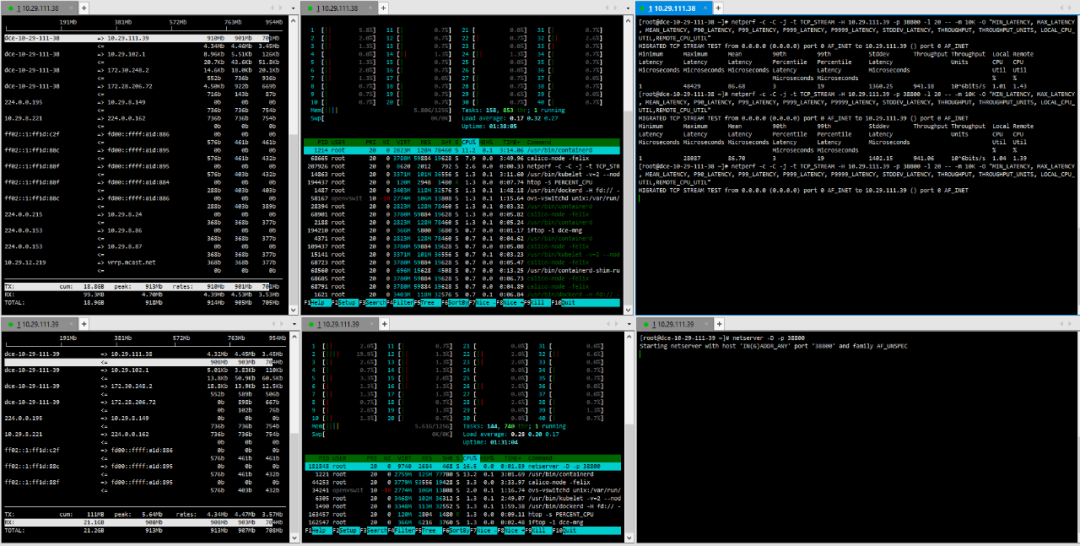

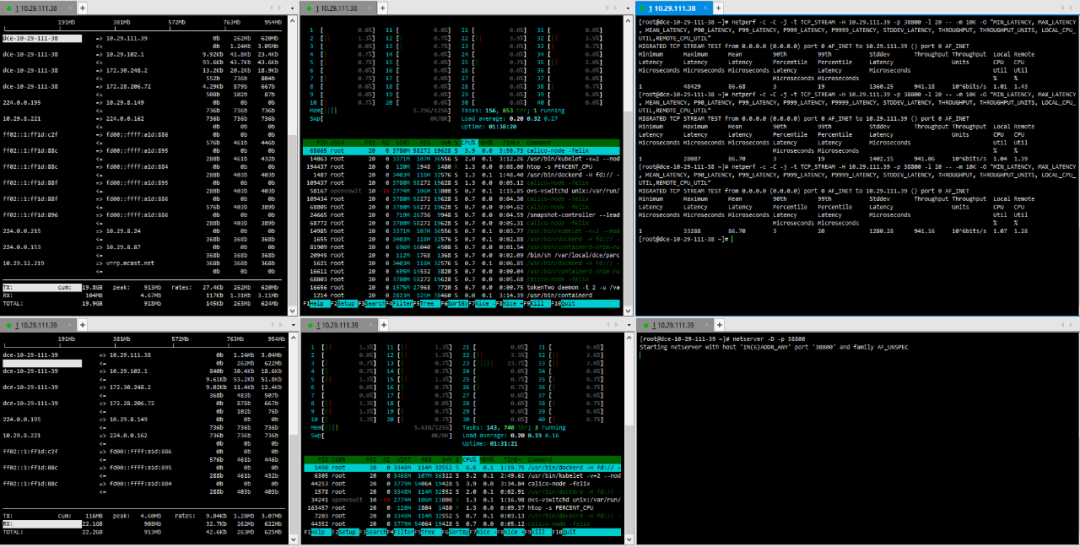

Using Calico, the plugin currently running in our production environment, as an example, we tested the performance differences between bare metal and virtualized environments.

(1) Testing Environment

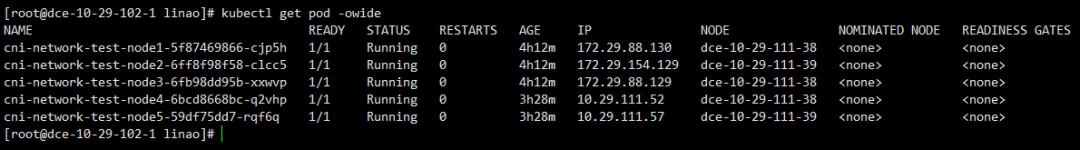

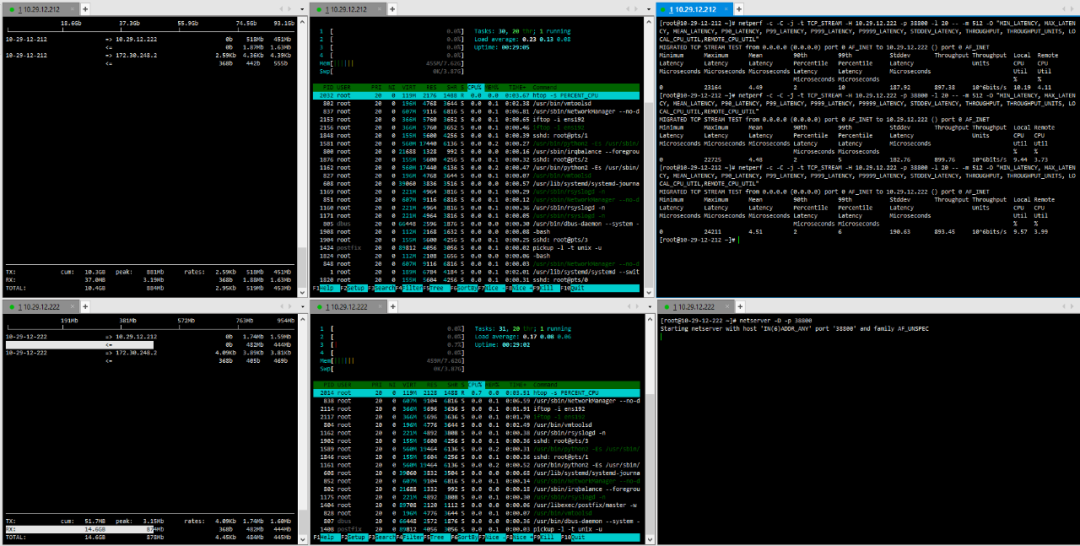

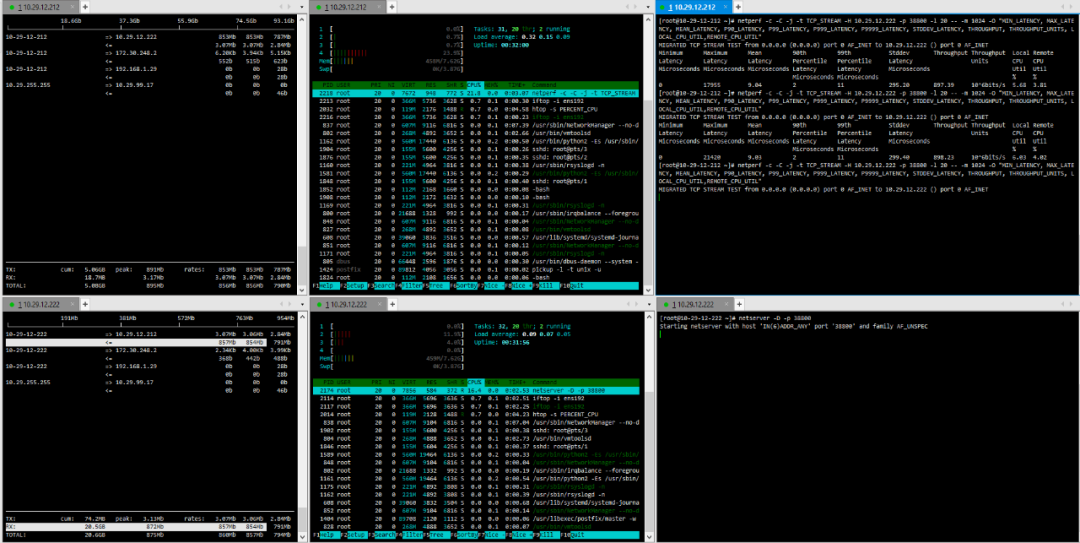

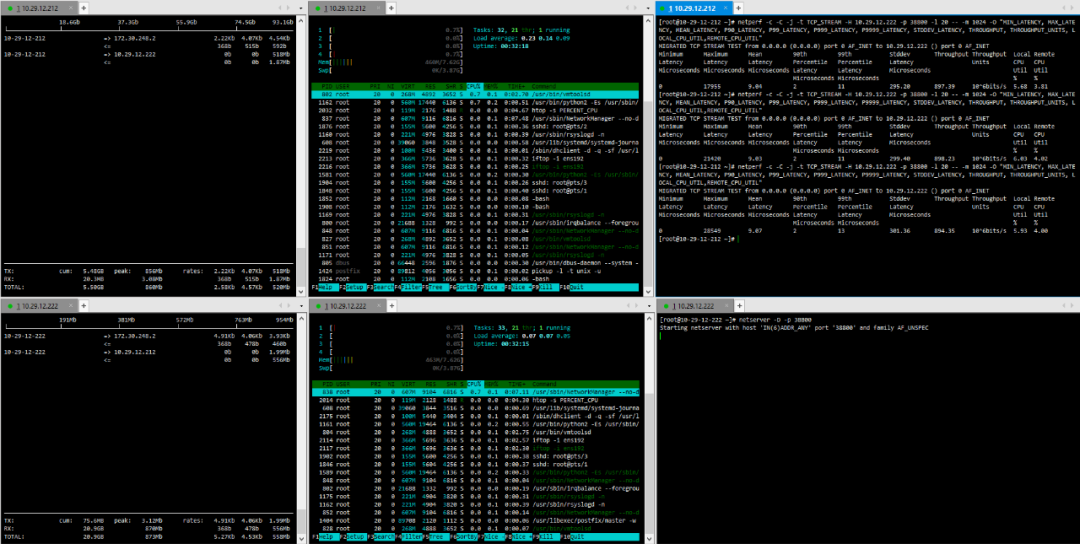

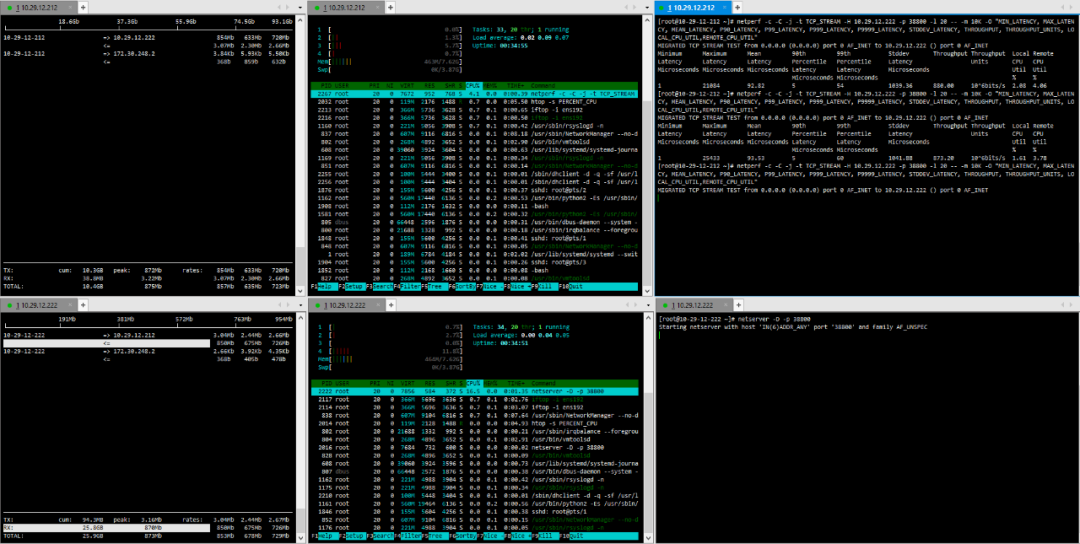

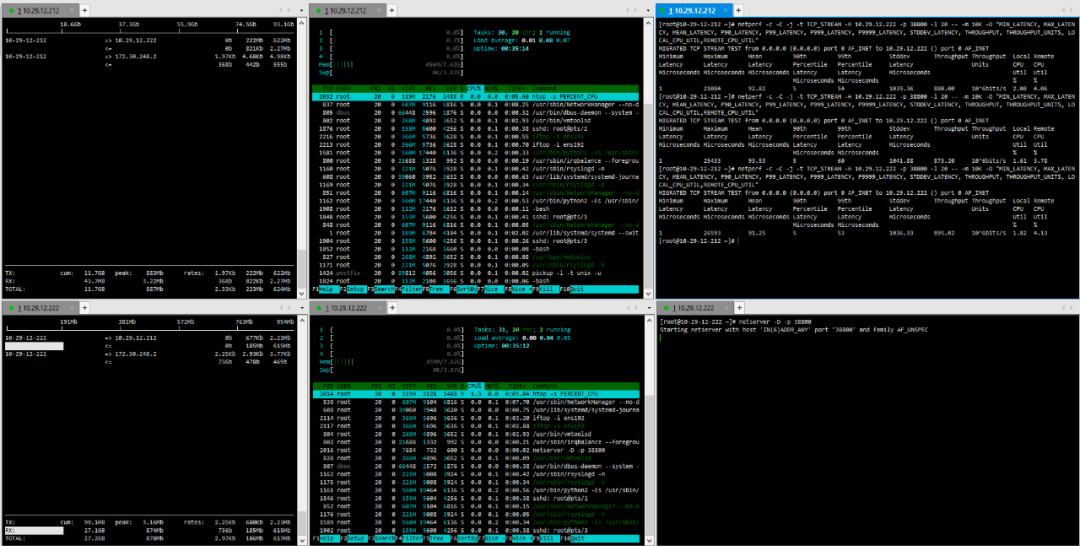

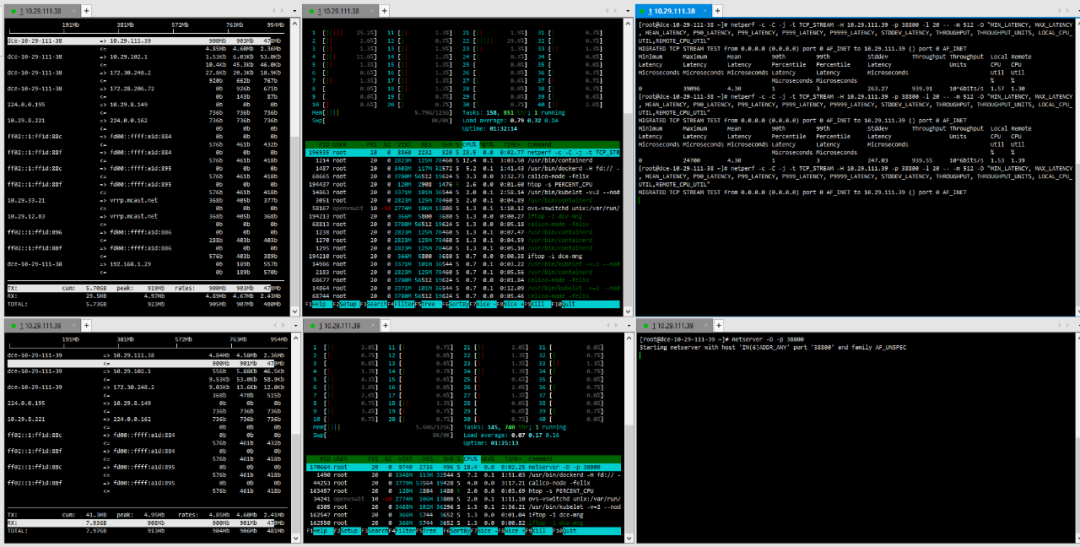

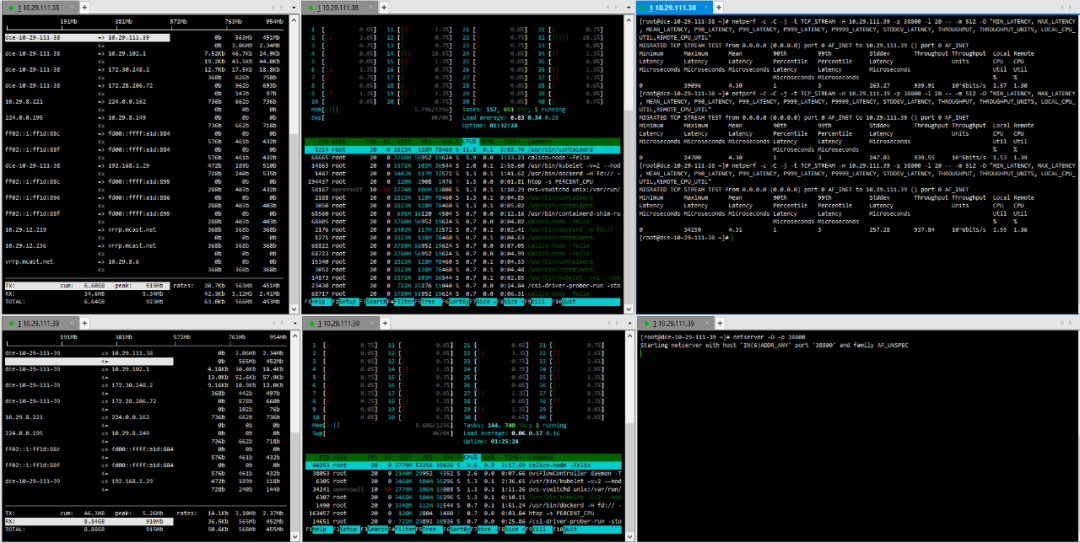

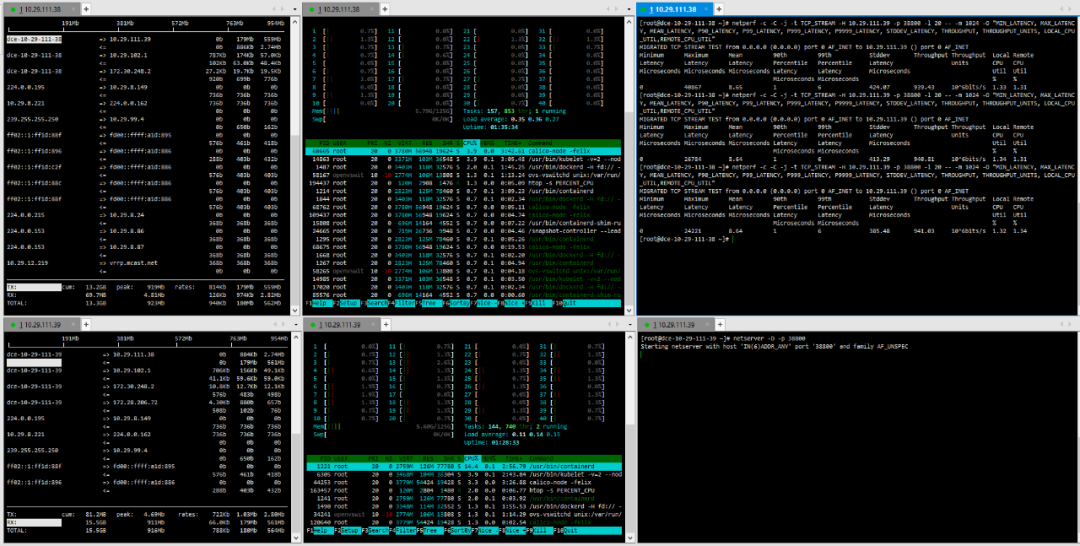

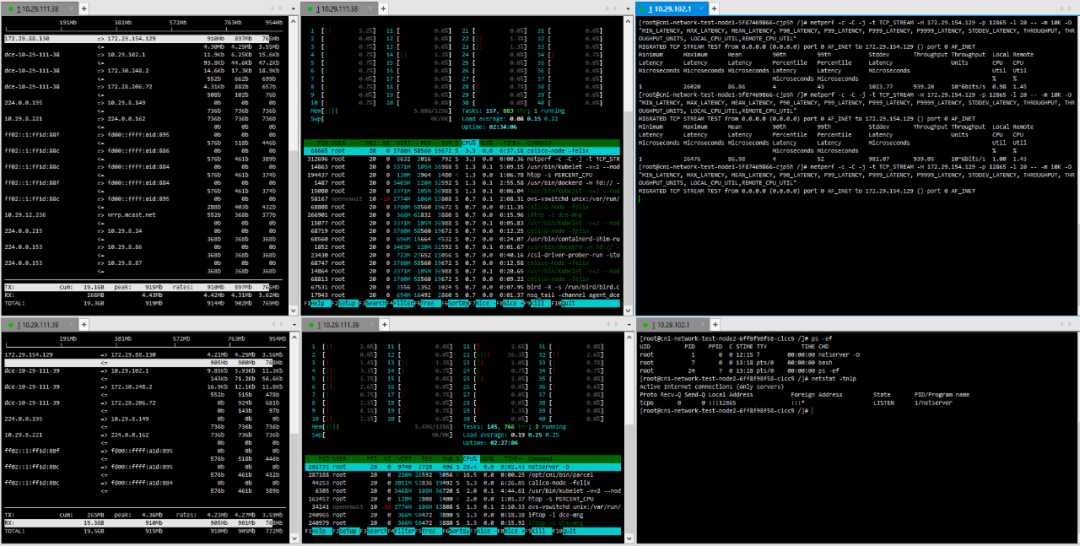

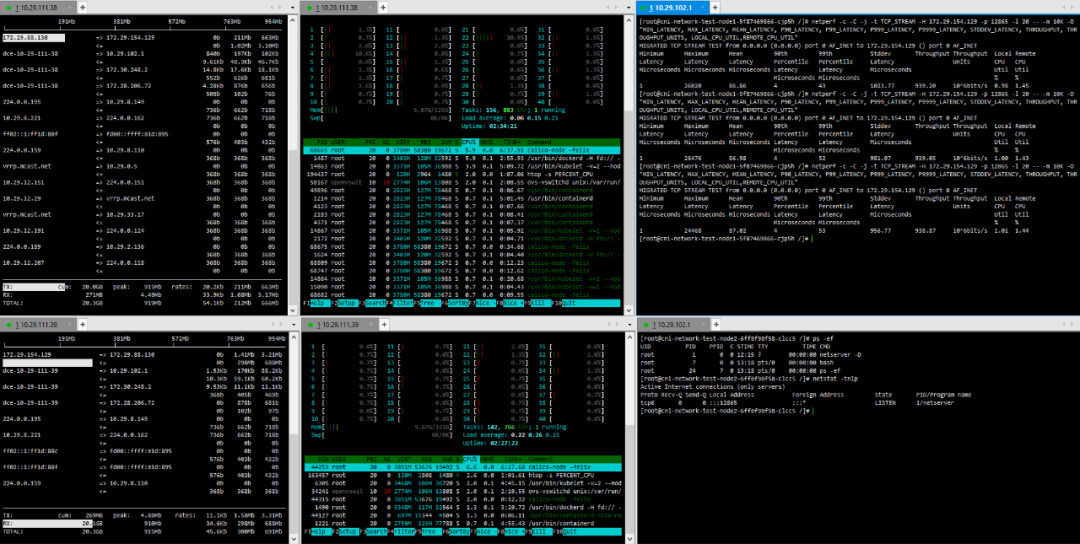

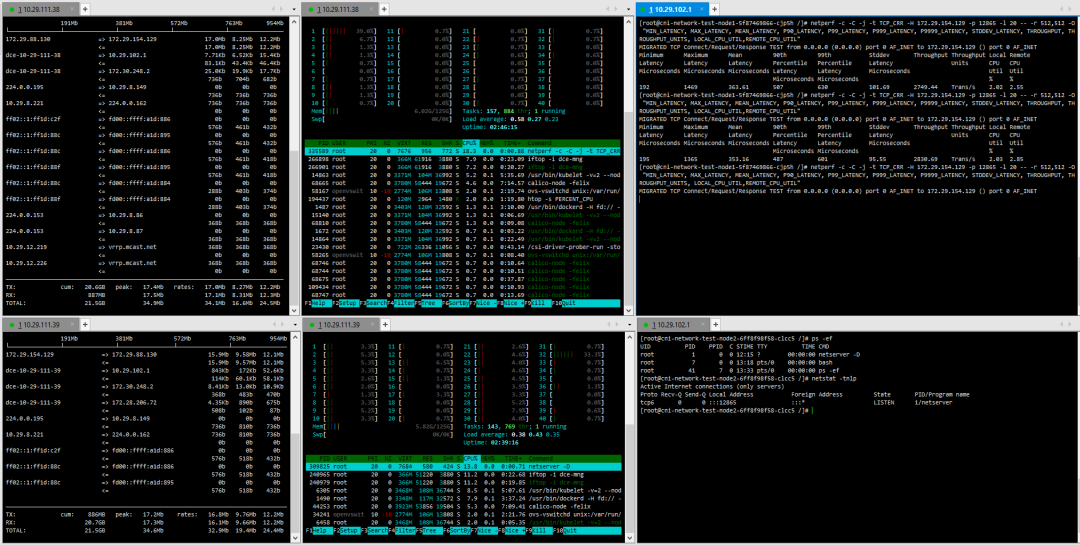

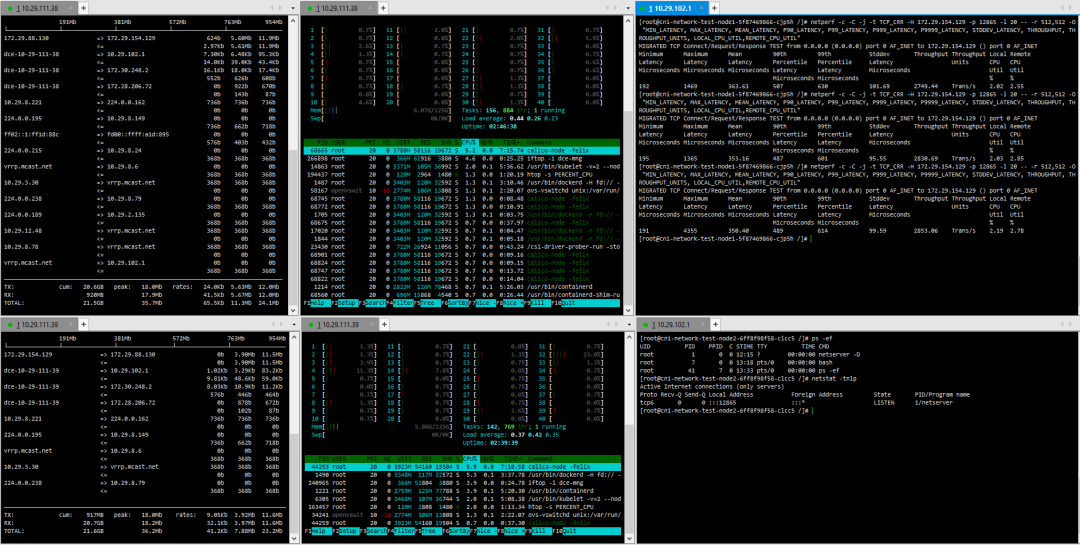

Bare metal: 10.29.111.38, 10.29.111.39

Virtual machines: 10.29.12.212, 10.29.12.222

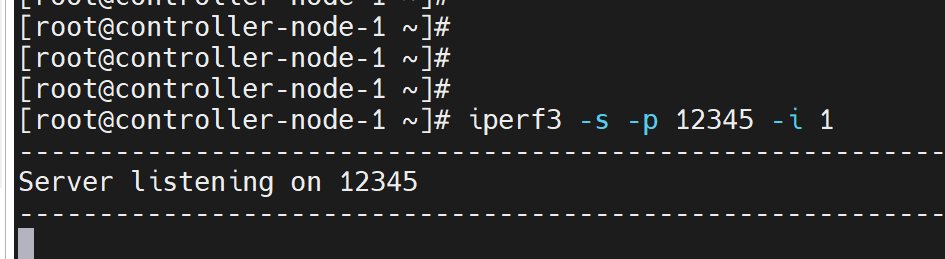

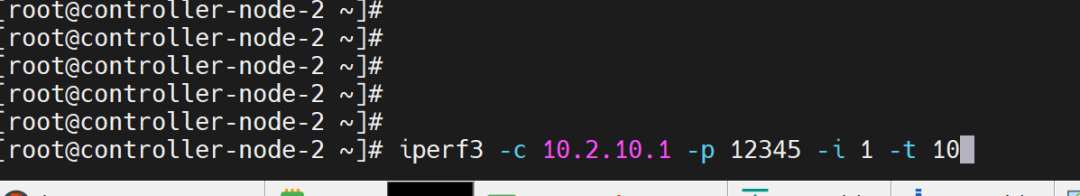

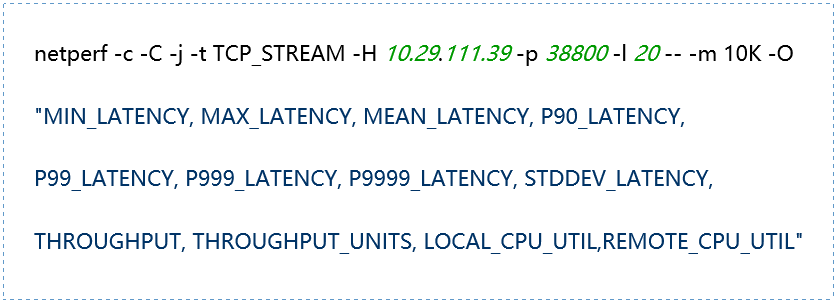

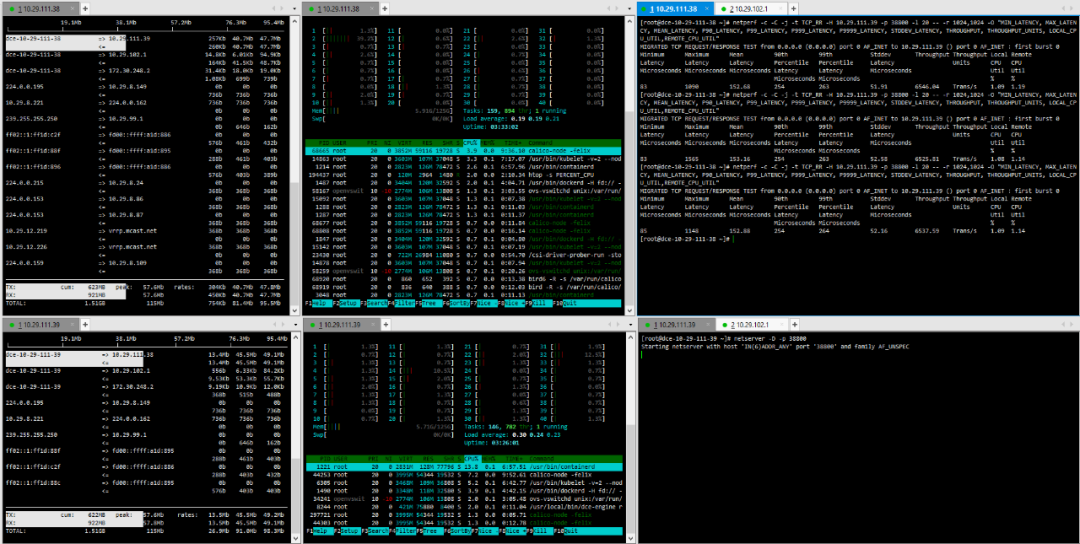

(2) Testing Method

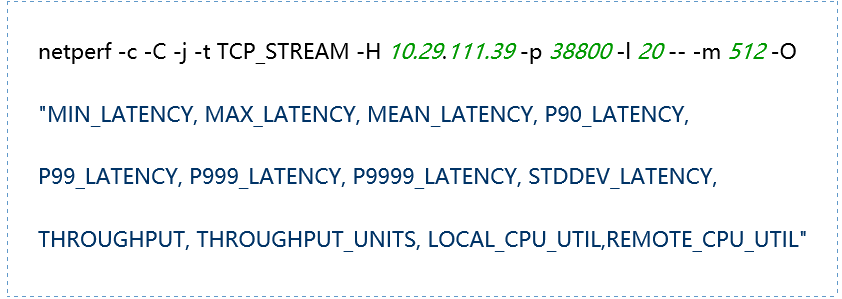

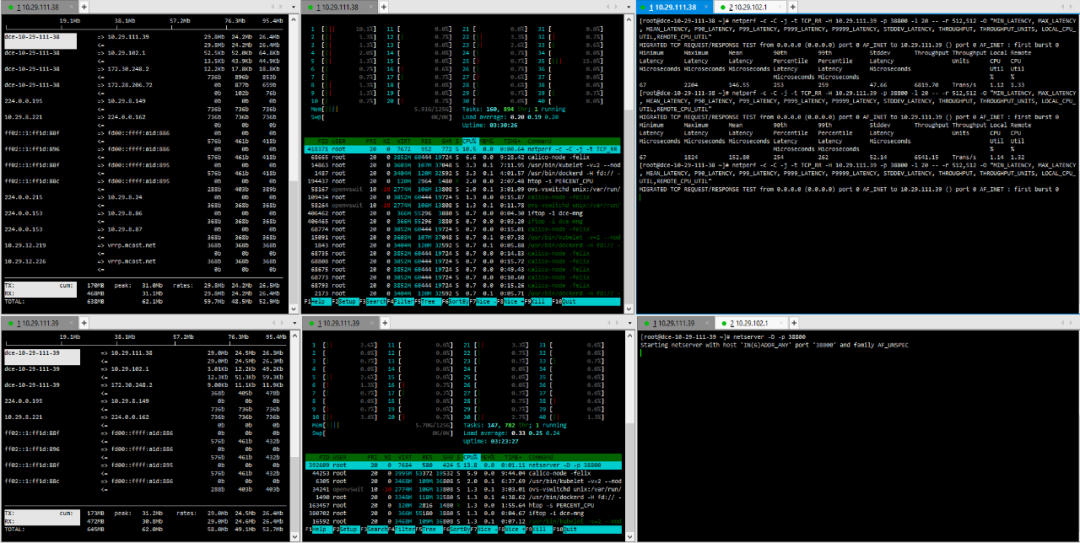

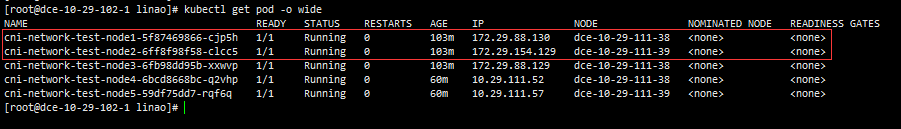

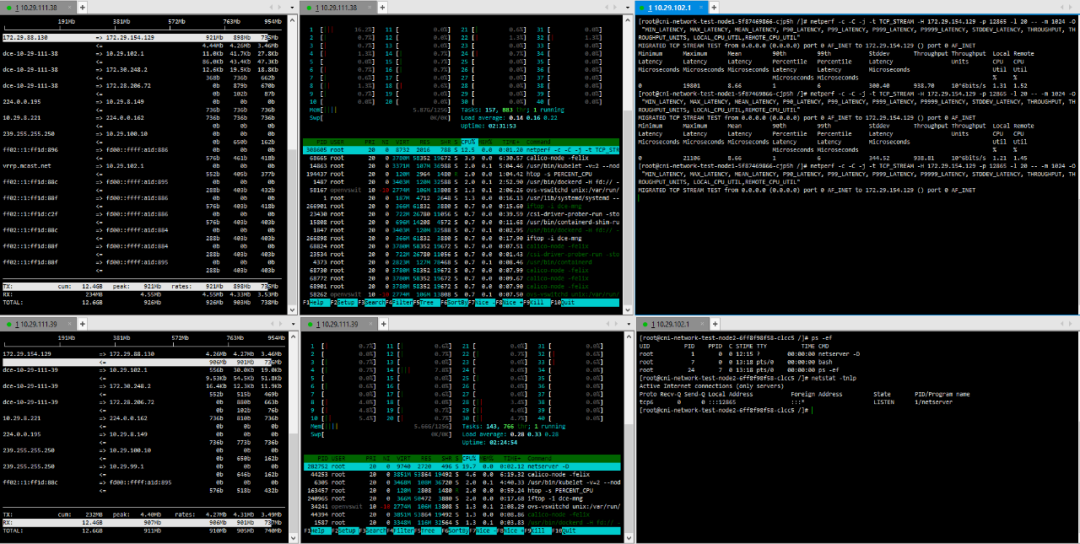

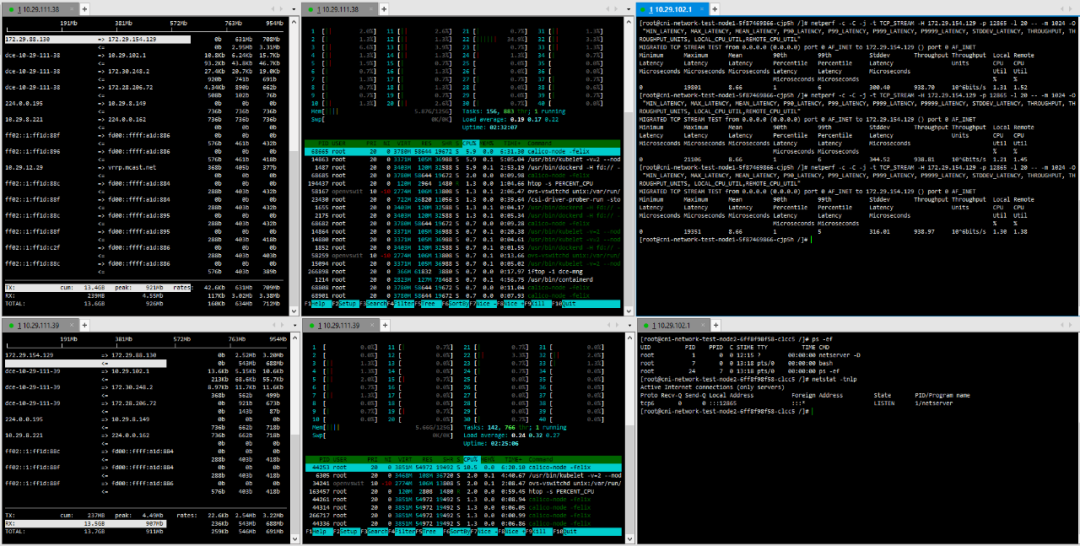

Tests are run with netperf directly between pods created on the two hosts. The distribution of pods in the physical environment is shown in the following diagram:

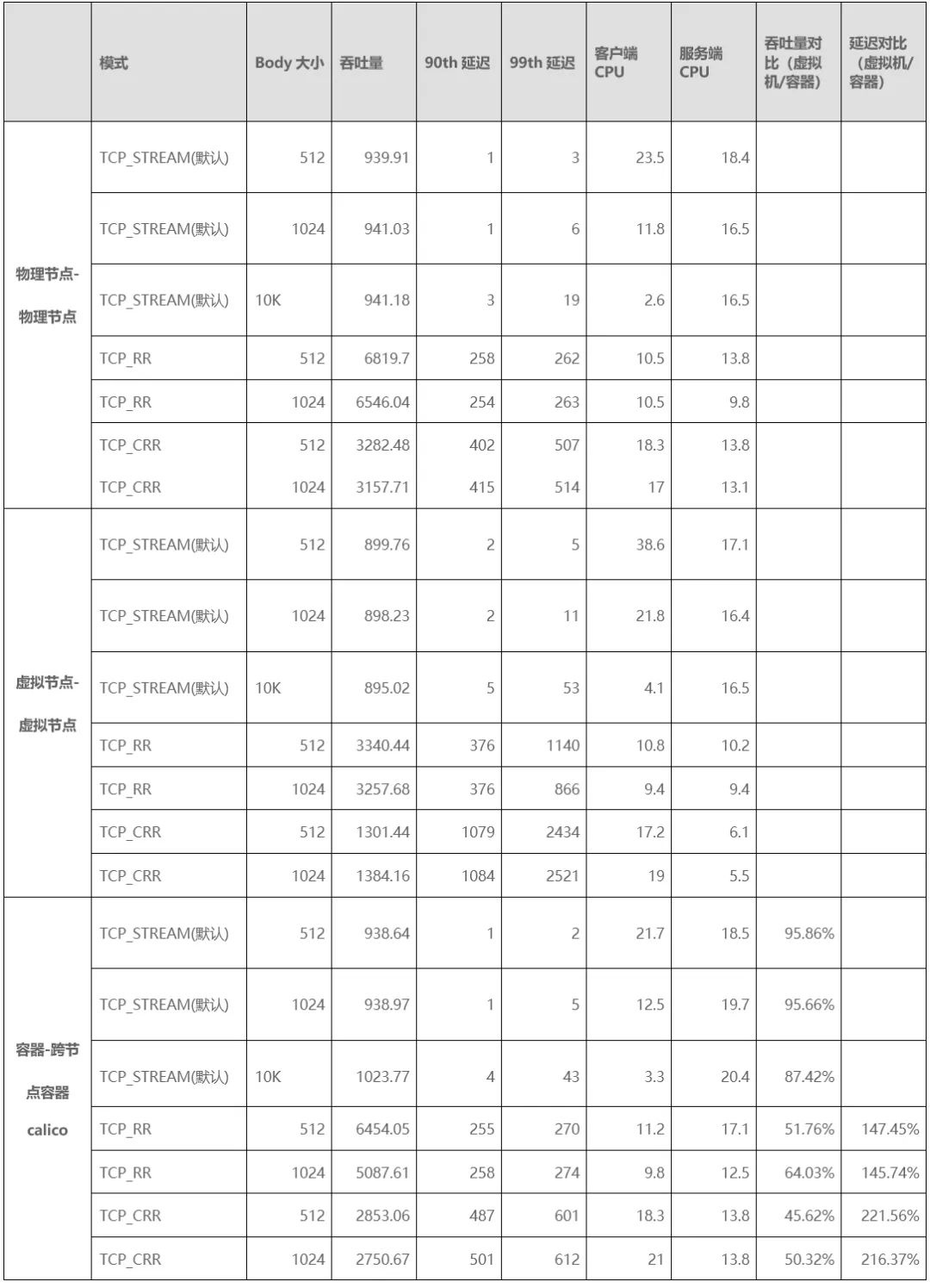

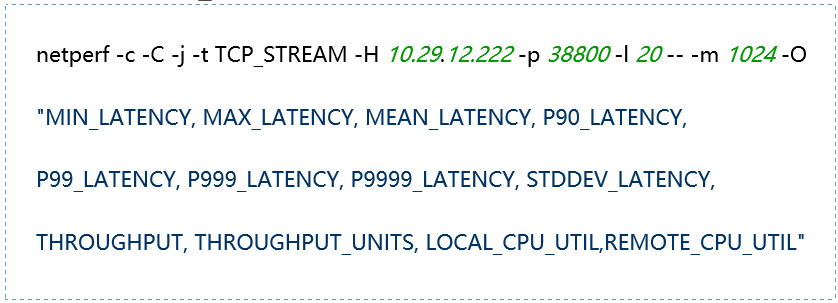

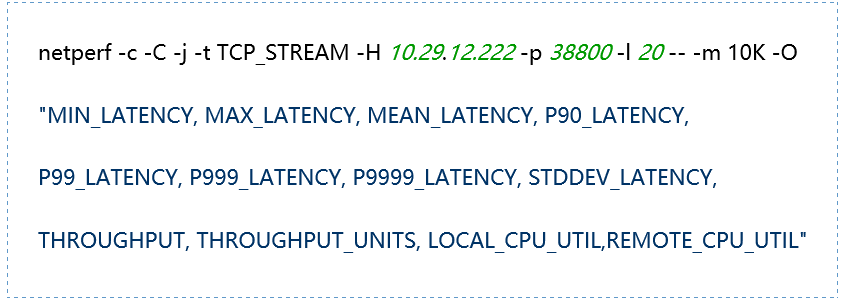

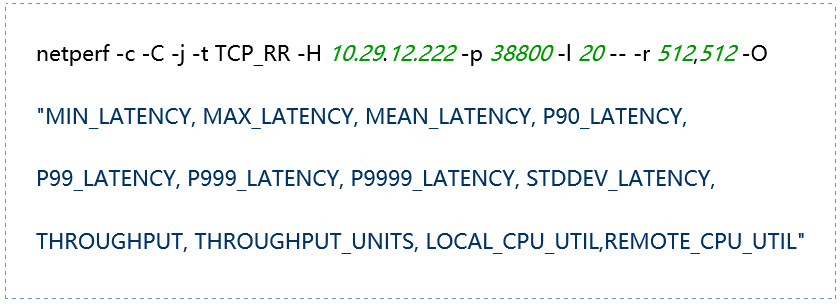

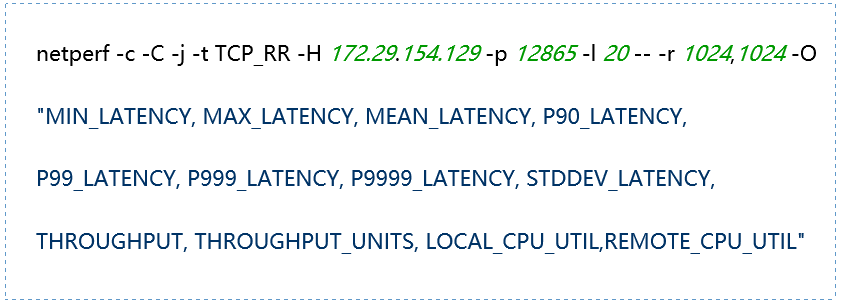

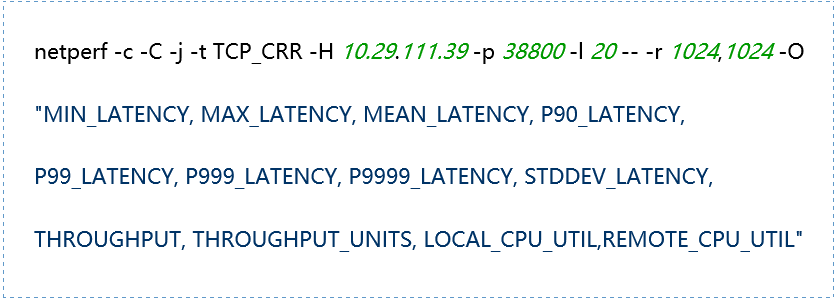

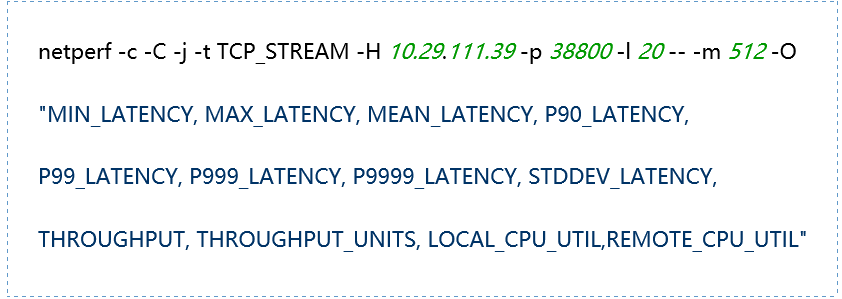

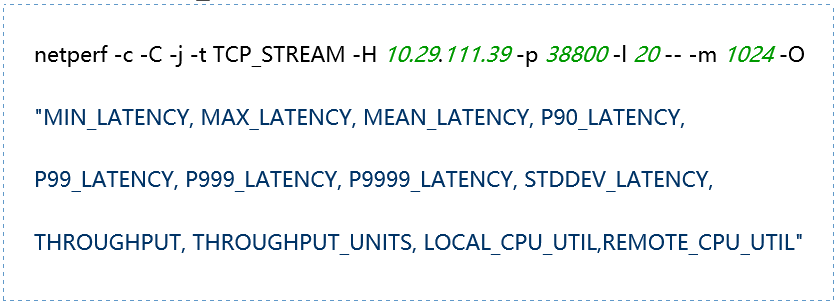

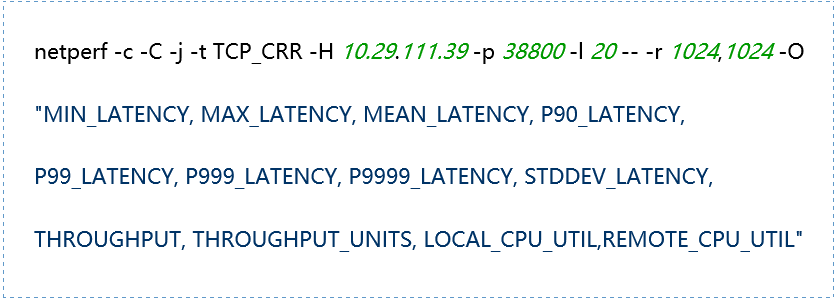

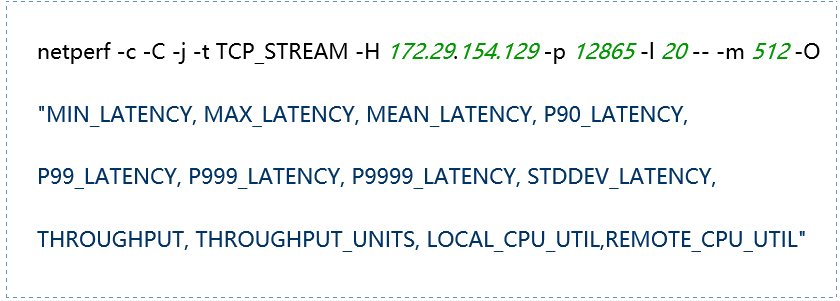

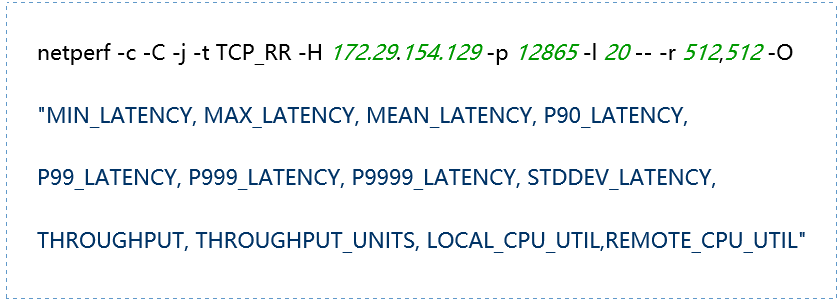

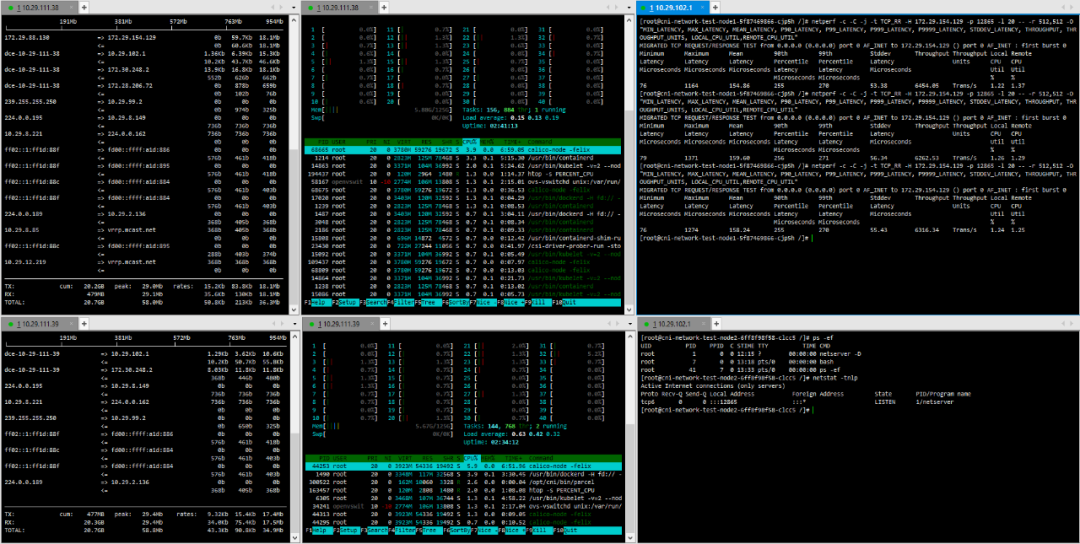

(3) Testing Tool: netperf

1. Test Modes:

| Mode | Description |
| --- | --- |
| TCP_STREAM | TCP-based bulk transfer |
| UDP_STREAM | UDP-based bulk transfer |
| TCP_RR | TCP request/response; multiple requests and responses are exchanged over the same connection |
| TCP_CRR | TCP request/response; a new connection is established for each request |
| UDP_RR | UDP-based request/response |

2. Common Parameters:

Options marked "test-specific" are placed after `--` on the netperf command line.

| Option | Scope | Description |
| --- | --- | --- |
| -H host | global | IP address of the remote server running netserver |
| -l testlen | global | Test duration in seconds |
| -t testname | global | Test mode: TCP_STREAM, UDP_STREAM, TCP_CRR, TCP_RR, UDP_RR |
| -s size | test-specific | Local socket send and receive buffer size |
| -S size | test-specific | Remote socket send and receive buffer size |
| -m size | test-specific | Size of each send during the test |
| -M size | test-specific | Size of each receive on the remote system |
| -p port | global | Port used to connect to netserver |
| -r req,resp | test-specific | Request and response sizes in request/response tests |
| -O string | test-specific | Custom output selectors, e.g. latency, 90th and 99th percentile latency, throughput, CPU utilization |
| -c | global | Measure and report client (local) CPU utilization |
| -C | global | Measure and report server (remote) CPU utilization |
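
The test records in this section run seven netperf cases per environment. The sketch below shows one way those invocations might be assembled, assuming a 60-second duration (the article does not state it) and reading "10K" as 10240 bytes. `netperf_cmd` is a hypothetical helper; it uses only the global options -H, -l, and -t and the test-specific -m (bulk tests) and -r (request/response tests). The server address 10.29.111.39 is one of the bare-metal hosts listed above; for pod tests it would be replaced by the server pod's IP.

```python
import shlex

def netperf_cmd(server: str, test: str, size: int, seconds: int = 60) -> list[str]:
    """Build a netperf argv; options after "--" are test-specific."""
    cmd = ["netperf", "-H", server, "-l", str(seconds), "-t", test, "--"]
    if test in ("TCP_STREAM", "UDP_STREAM"):
        cmd += ["-m", str(size)]         # bytes per send call
    elif test in ("TCP_RR", "TCP_CRR", "UDP_RR"):
        cmd += ["-r", f"{size},{size}"]  # request and response sizes
    else:
        raise ValueError(f"unsupported test: {test}")
    return cmd

# Test matrix from the test records; 10K is assumed to mean 10240 bytes.
CASES = [("TCP_STREAM", 512), ("TCP_STREAM", 1024), ("TCP_STREAM", 10240),
         ("TCP_RR", 512), ("TCP_RR", 1024), ("TCP_CRR", 512), ("TCP_CRR", 1024)]

for test, size in CASES:
    print(shlex.join(netperf_cmd("10.29.111.39", test, size)))
```

Generating the matrix from one list keeps the three environments (physical, virtual, and cross-node pod) on identical parameters, which the comparison depends on.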

Reference: https://www.netperf.org/

(4) Test Case Description

1. Physical Node – Physical Node

Tests are conducted on two physical machines: one runs the netperf server (netserver) and the other runs the netperf client in each test mode.

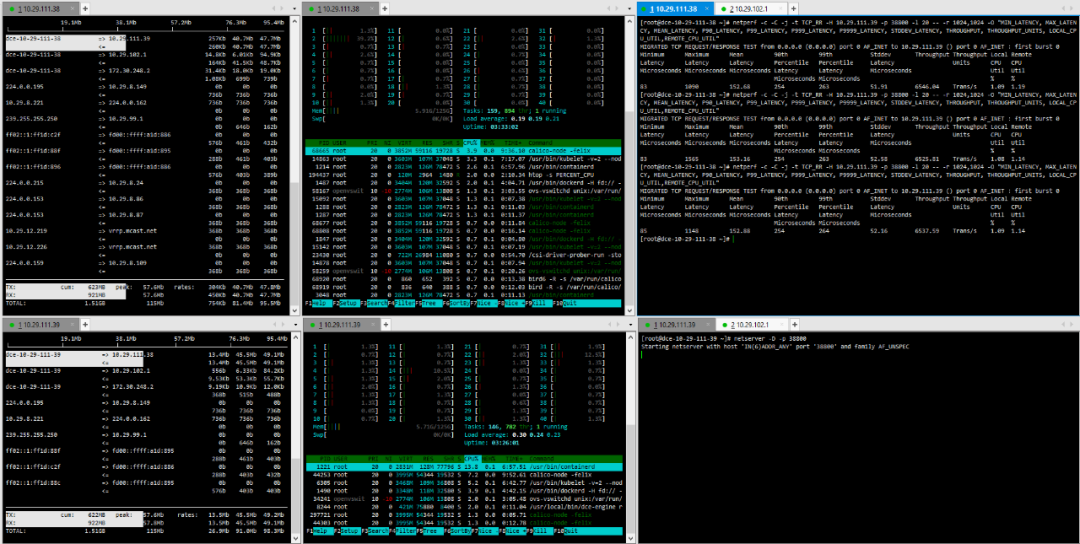

2. Virtual Node – Virtual Node

Tests are conducted on two virtual machine nodes: one runs the netperf server (netserver) and the other runs the netperf client in each test mode.

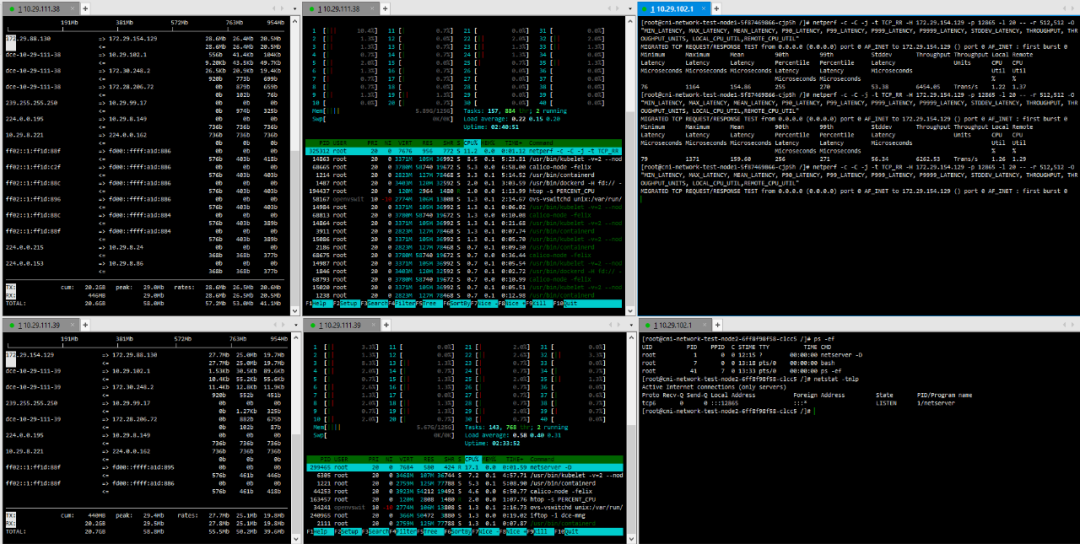

3. Container – Cross-Node Container Calico

One Calico-networked pod runs on each of the two physical nodes: one pod runs the netperf server (netserver) and the other runs the netperf client in each test mode.

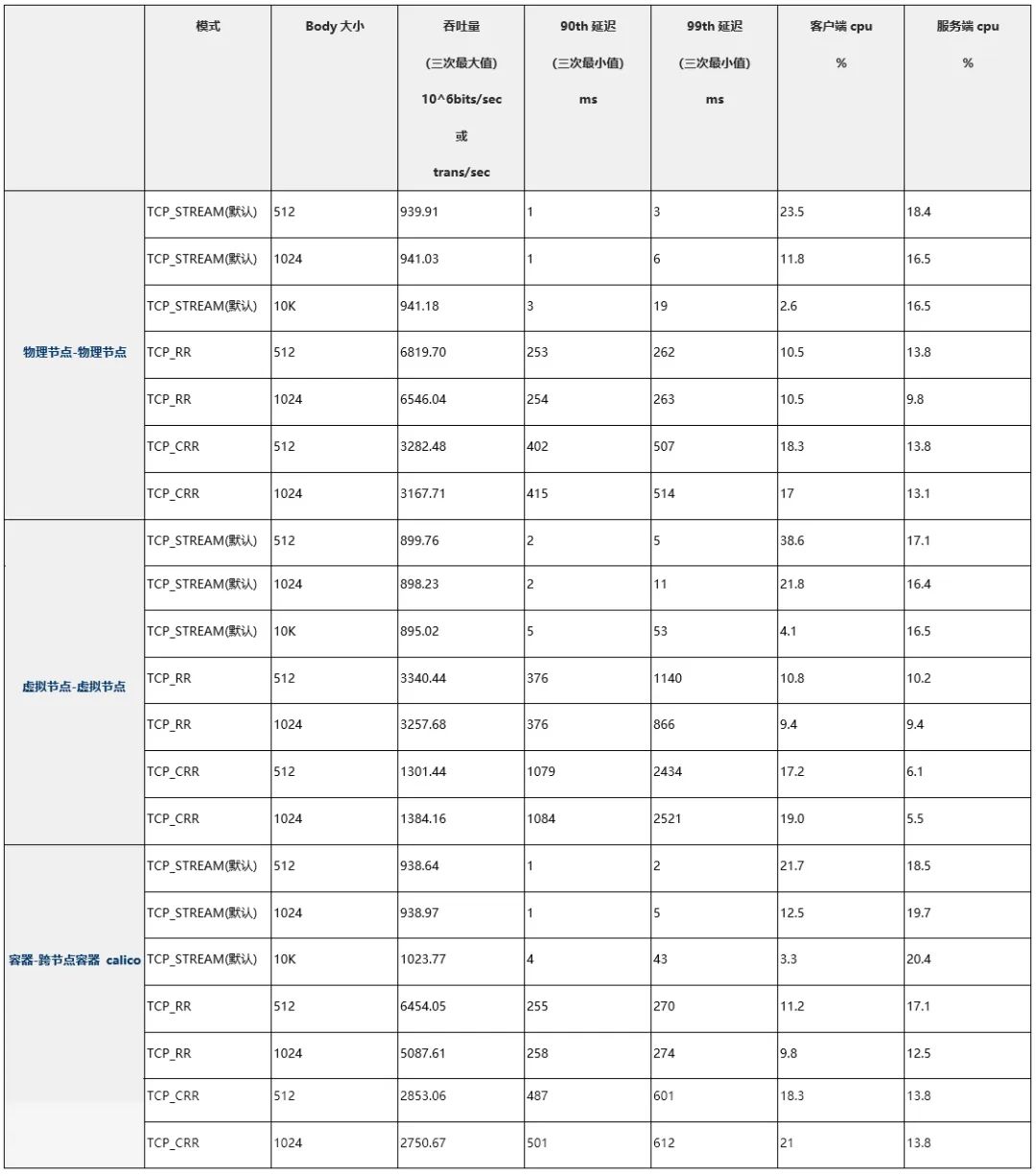

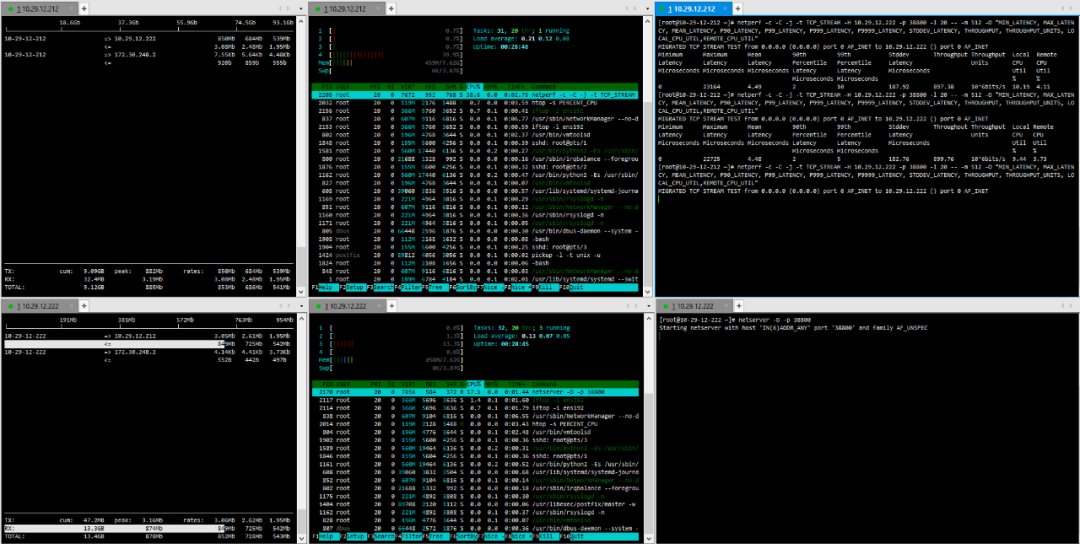

(5) Summary of Test Results

(6) Comparison of Test Results

(7) Summary of Test Results

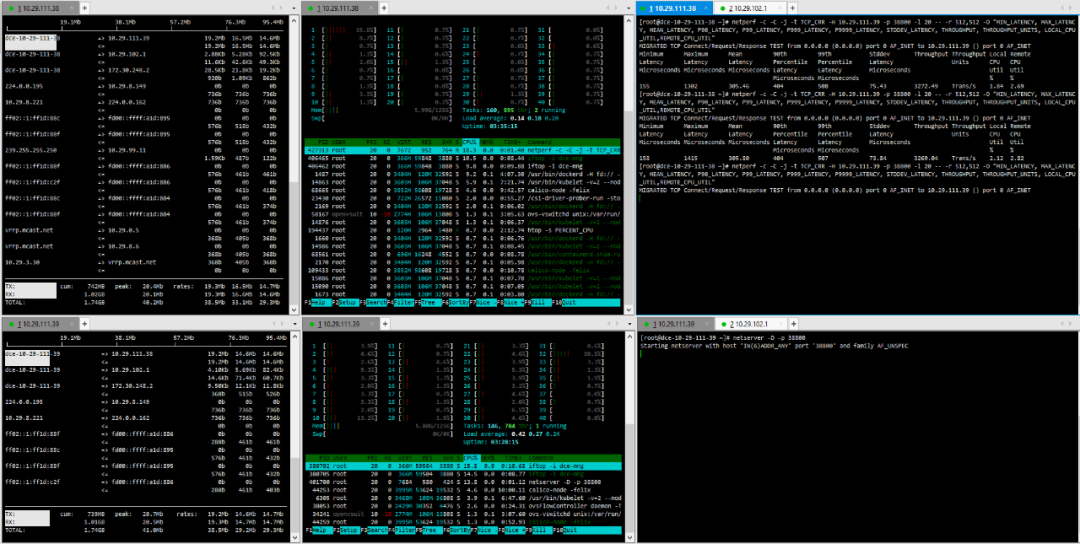

Compared with Calico-networked pods on physical nodes, those on virtual machines show an average throughput loss of 47% and an average latency increase of 83%.
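
The article does not say how these averages were aggregated. One plausible reading, sketched below purely as an assumption, is to compute the relative change for each test case (throughput loss = 1 - VM/physical, latency increase = VM/physical - 1) and take the arithmetic mean across cases. Averaging per-case ratios weights every case equally, whereas comparing summed values would let the highest-throughput cases dominate. The function names are hypothetical, and no measured values are reproduced here.

```python
from statistics import mean

def _paired(physical: list[float], virtual: list[float]):
    if len(physical) != len(virtual):
        raise ValueError("each test case needs both a physical and a virtual result")
    return zip(physical, virtual)

def avg_throughput_loss(physical: list[float], virtual: list[float]) -> float:
    """Mean per-case throughput loss of the virtual setup relative to the physical one."""
    return mean(1 - v / p for p, v in _paired(physical, virtual))

def avg_latency_increase(physical: list[float], virtual: list[float]) -> float:
    """Mean per-case latency increase of the virtual setup relative to the physical one."""
    return mean(v / p - 1 for p, v in _paired(physical, virtual))
```

Feeding the per-case results from the test records into these functions, in the same case order for both environments, would reproduce the summary figures only if this aggregation assumption matches the author's method.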

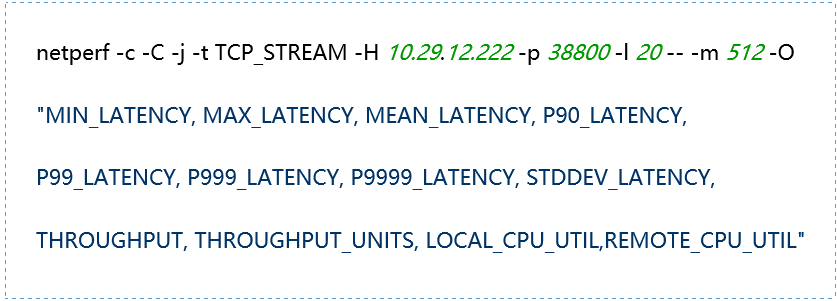

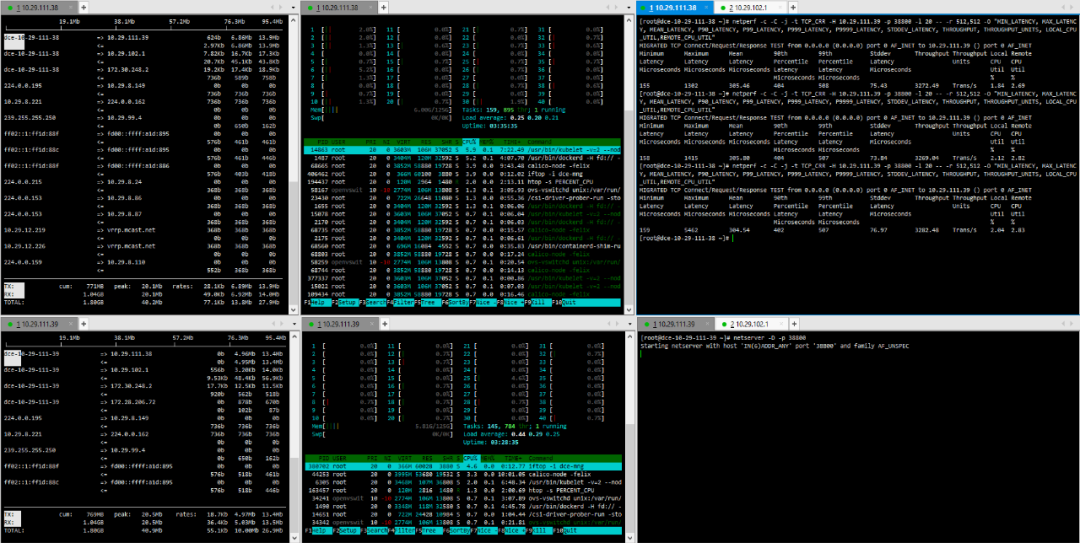

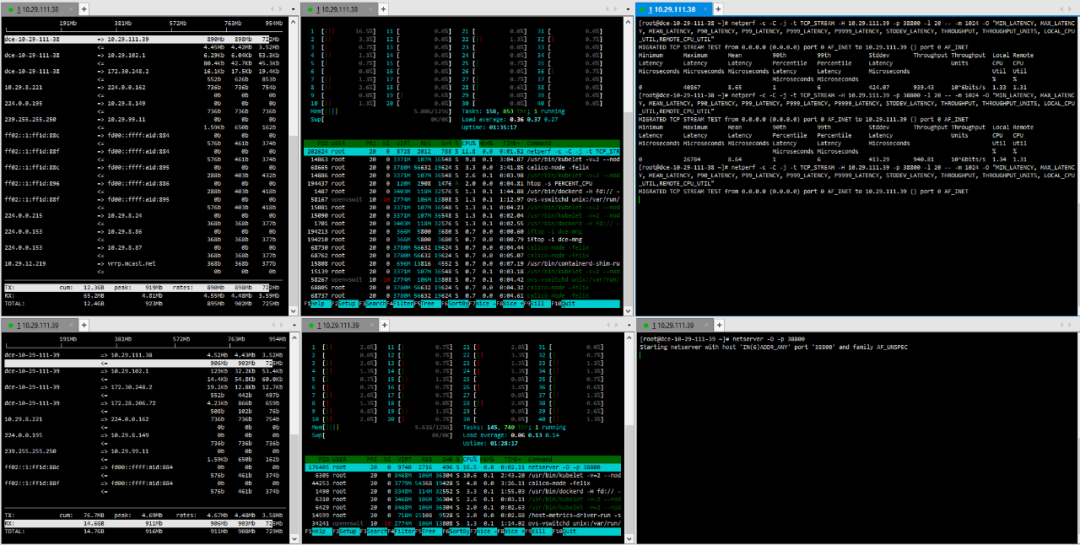

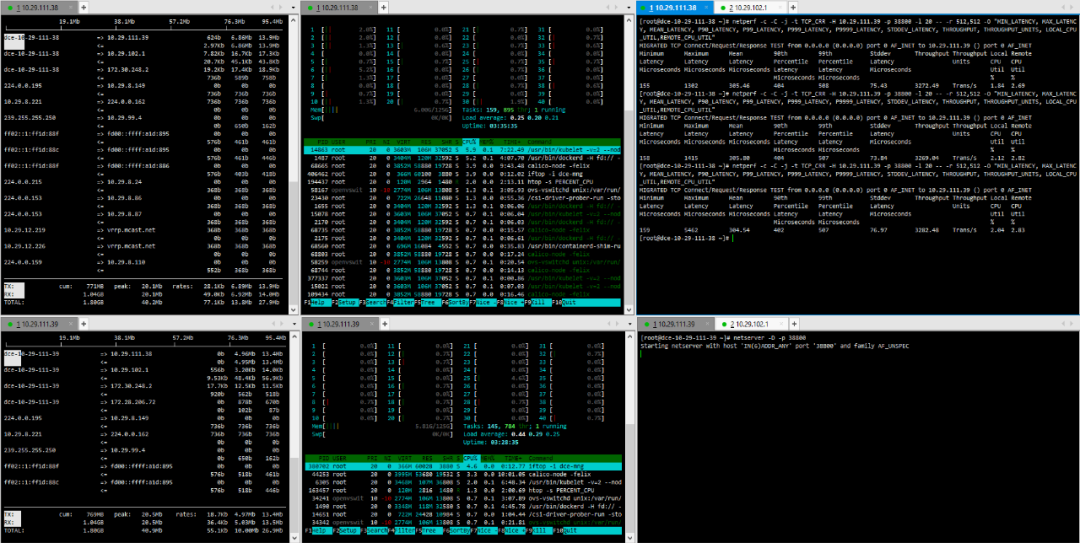

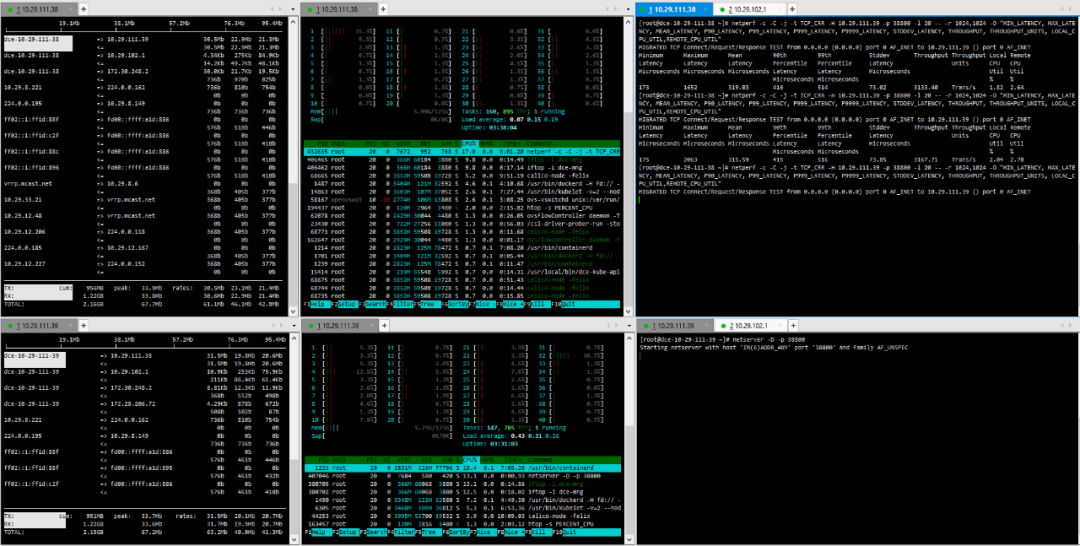

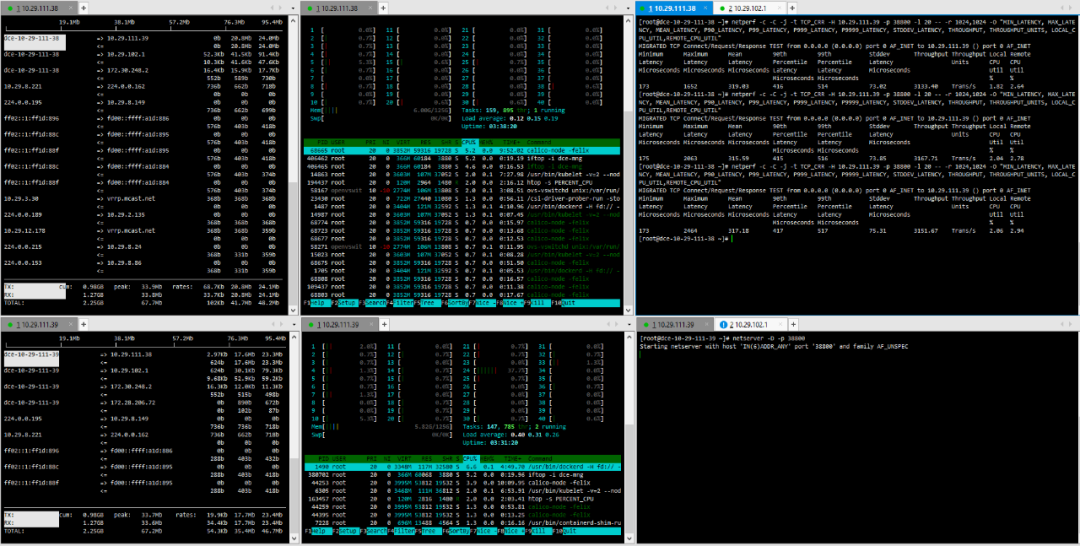

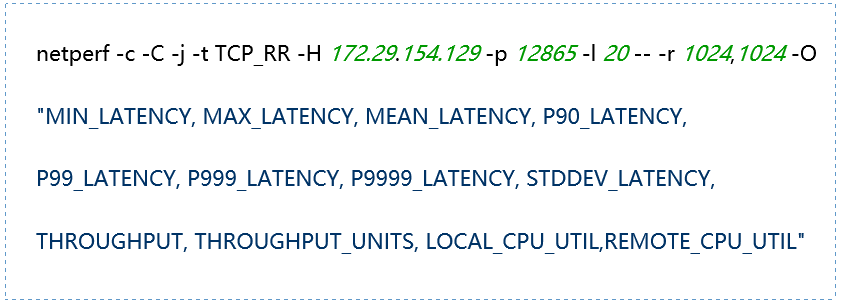

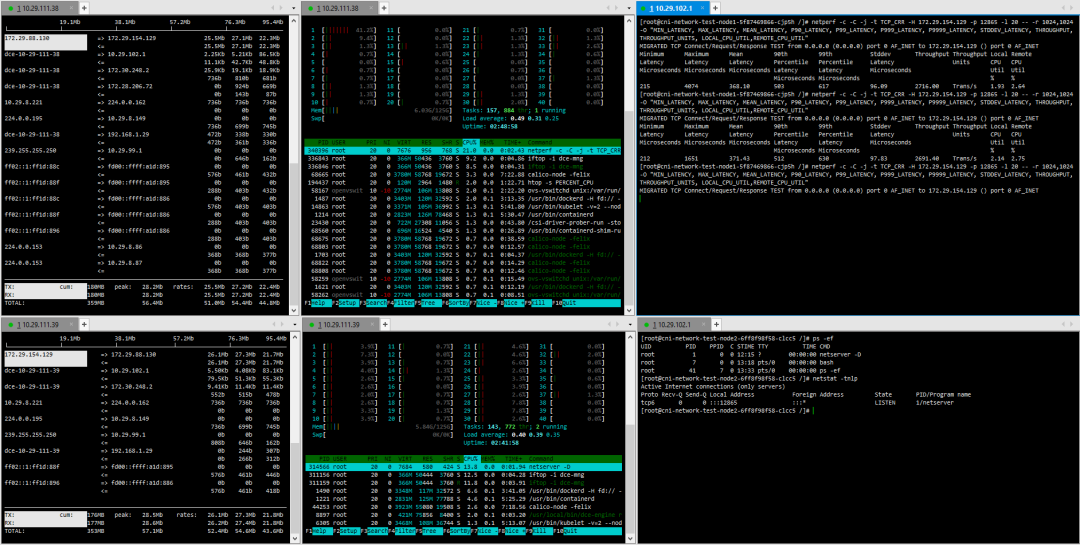

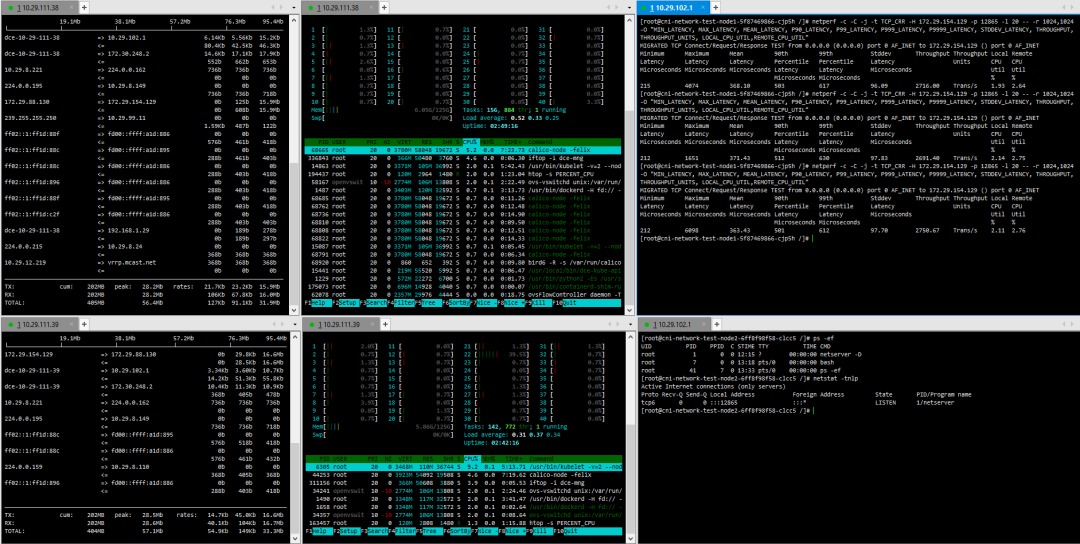

(8) Test Records

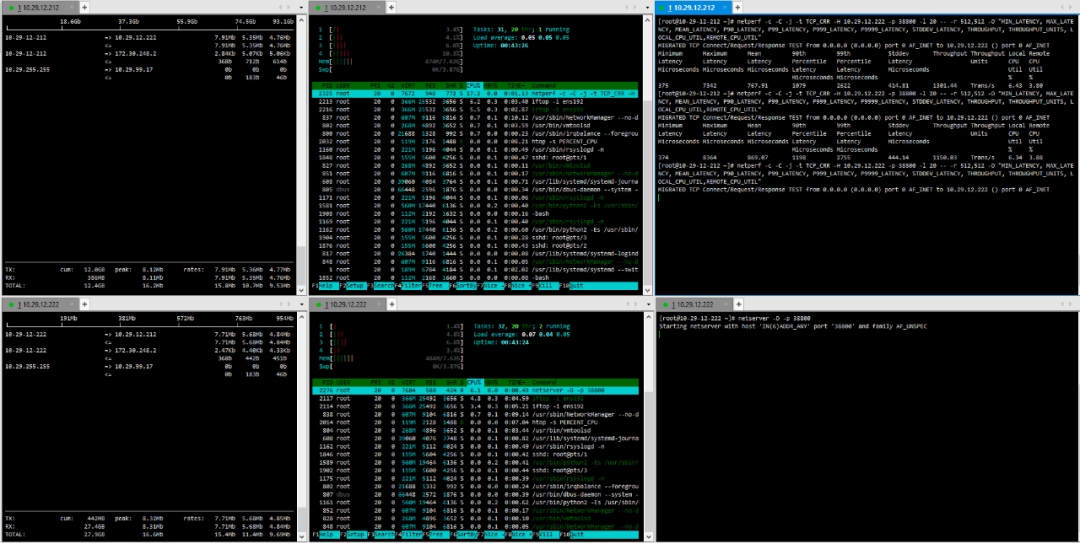

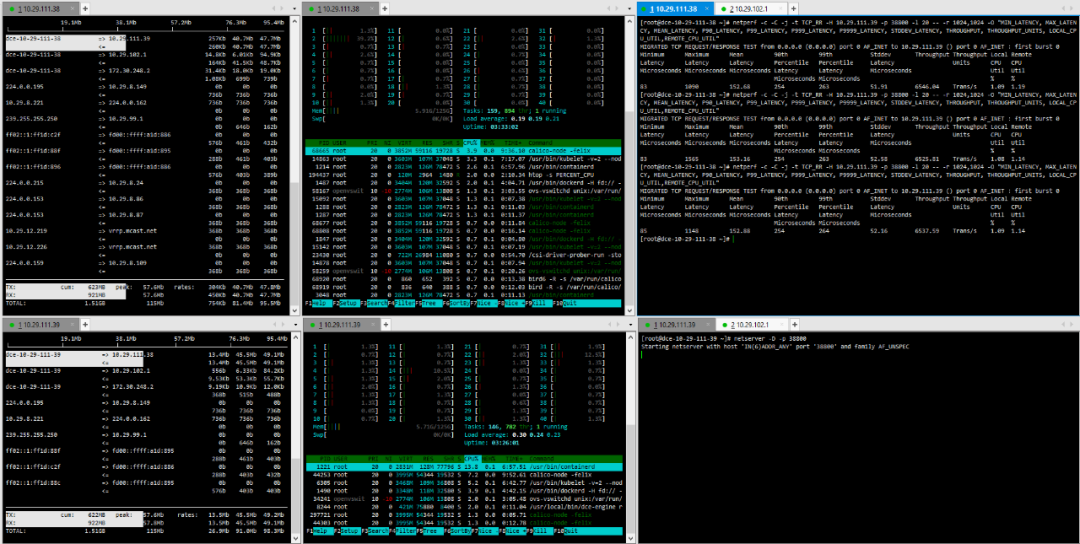

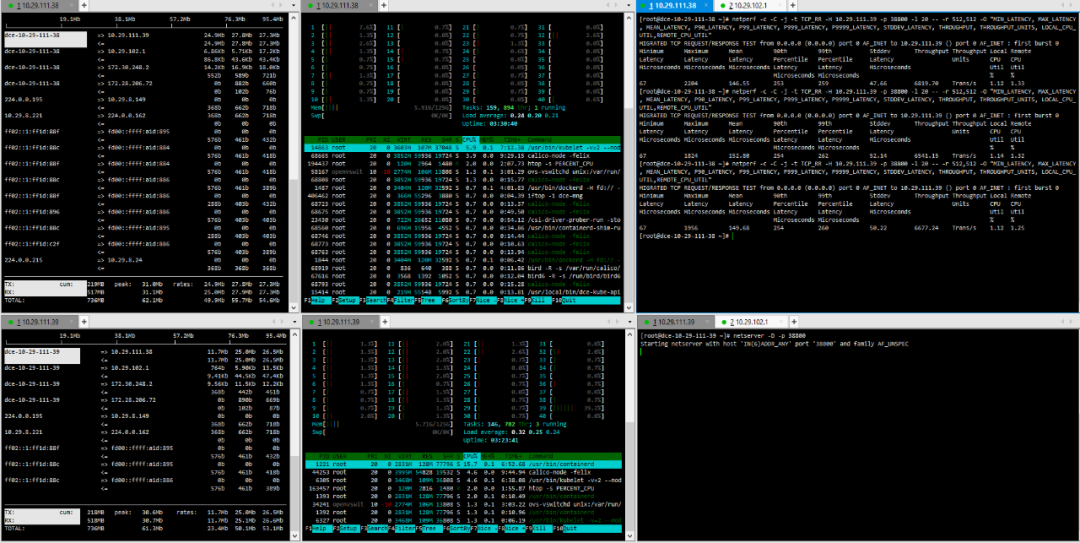

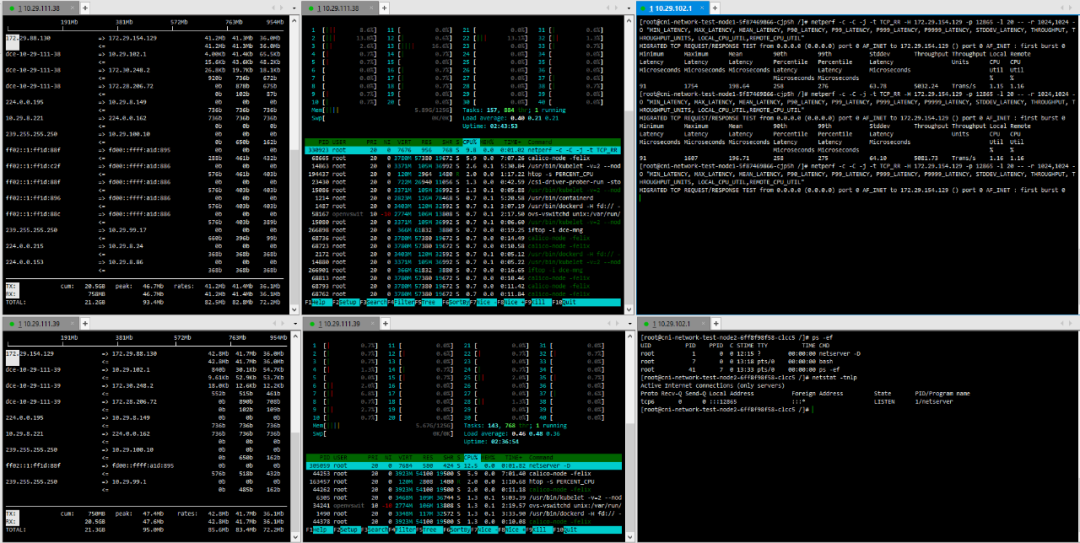

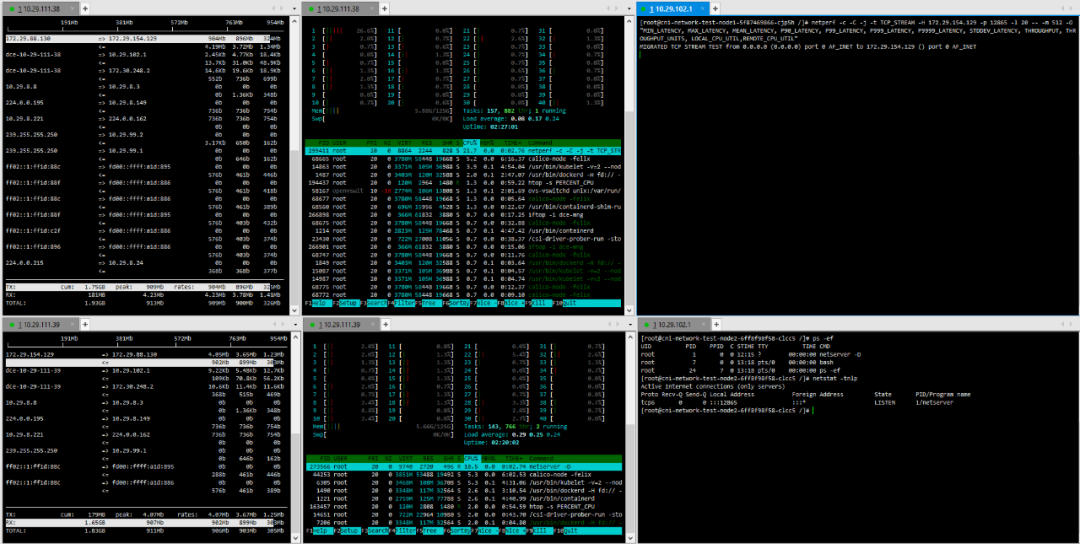

1. Virtual Node – Virtual Node

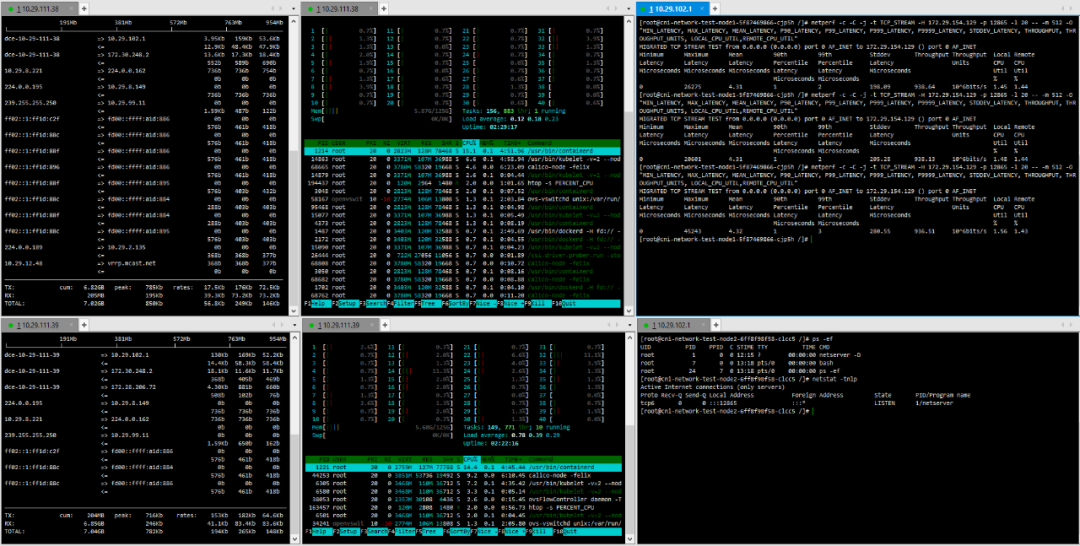

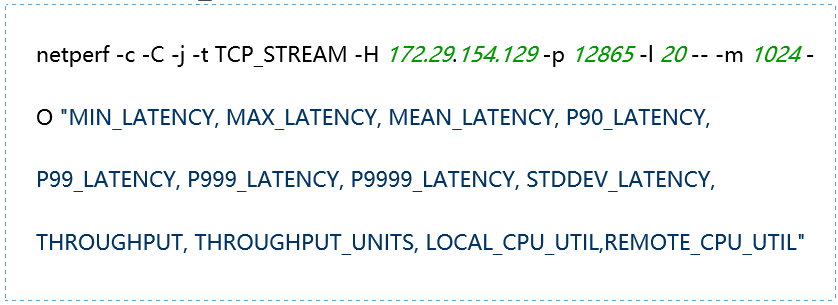

(1) TCP_STREAM (default) Message Body 512

(2) TCP_STREAM (default) Message Body 1024

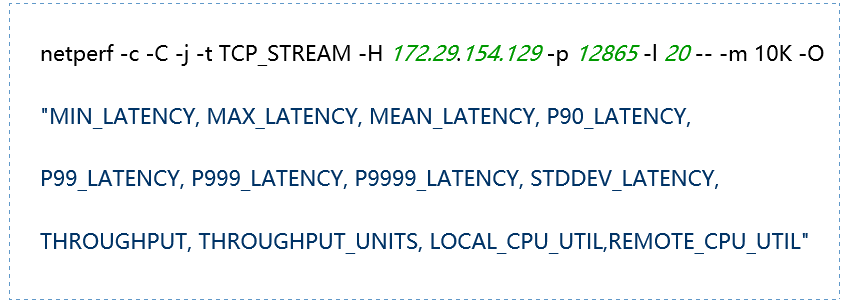

(3) TCP_STREAM (default) Message Body 10K

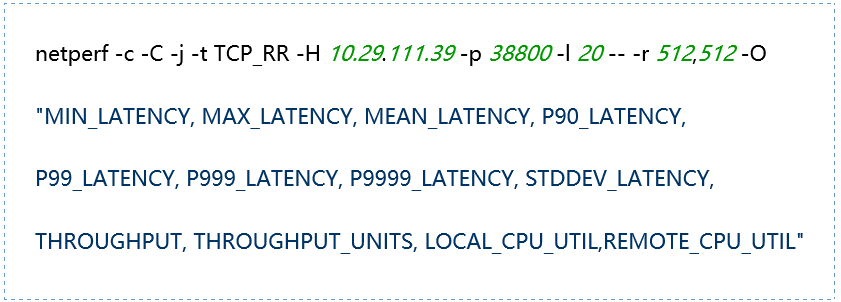

(4) TCP_RR Message Body 512

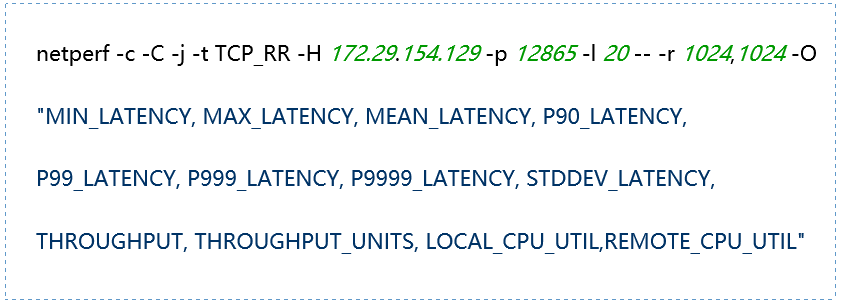

(5) TCP_RR Message Body 1024

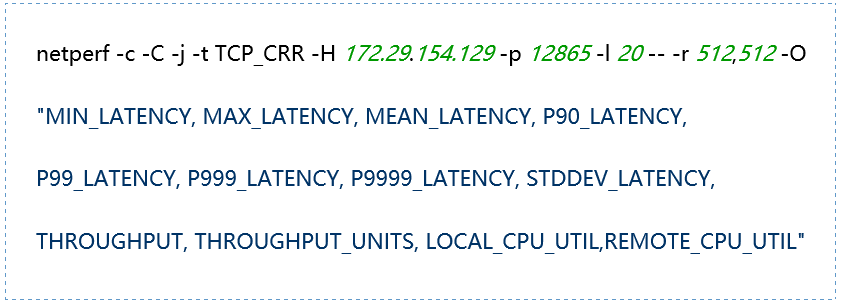

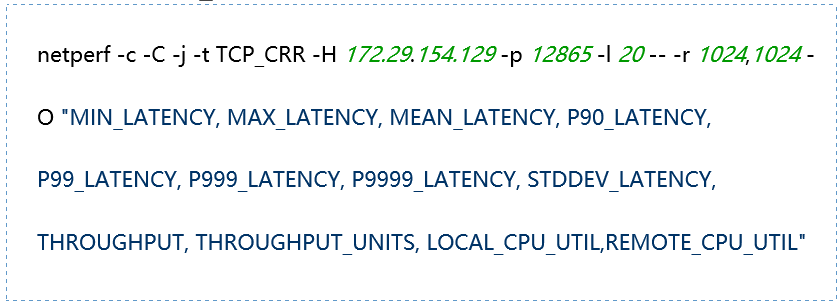

(6) TCP_CRR Message Body 512

(7) TCP_CRR Message Body 1024

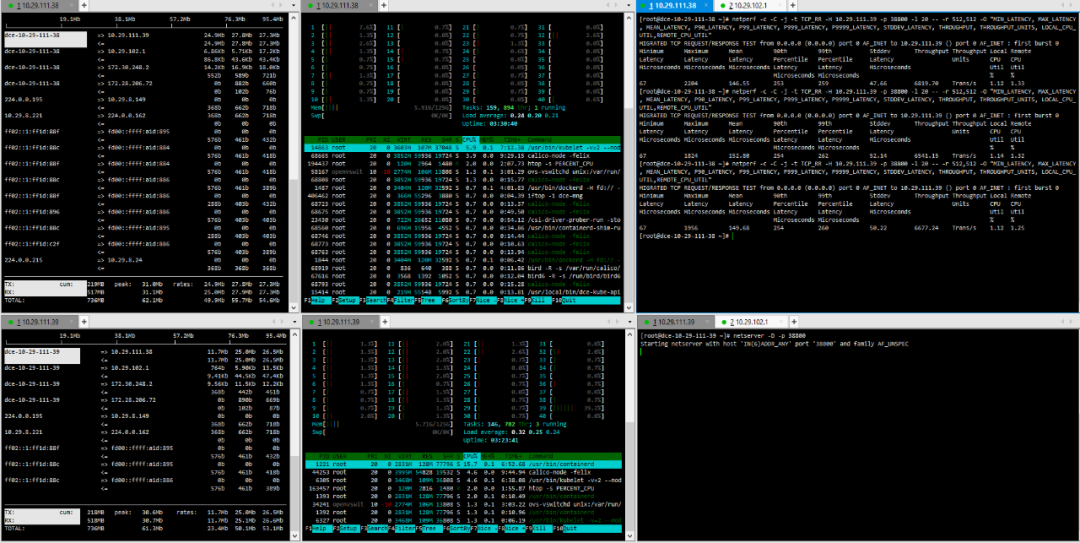

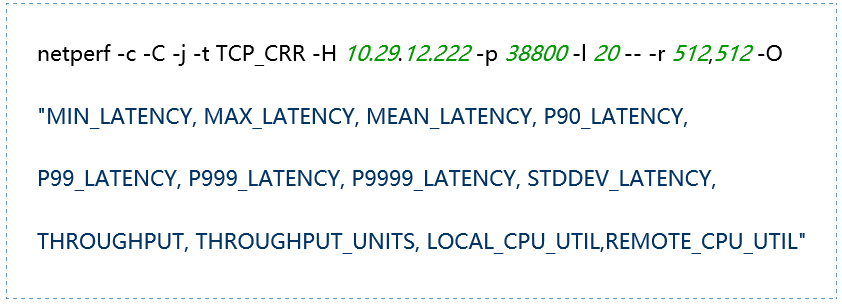

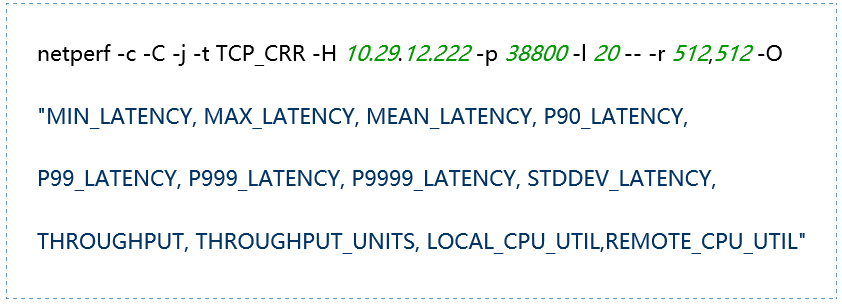

2. Physical Node – Physical Node

(1) TCP_STREAM (default) Message Body 512

(2) TCP_STREAM (default) Message Body 1024

(3) TCP_STREAM (default) Message Body 10K

(4) TCP_RR Message Body 512

(5) TCP_RR Message Body 1024

(6) TCP_CRR Message Body 512

(7) TCP_CRR Message Body 1024

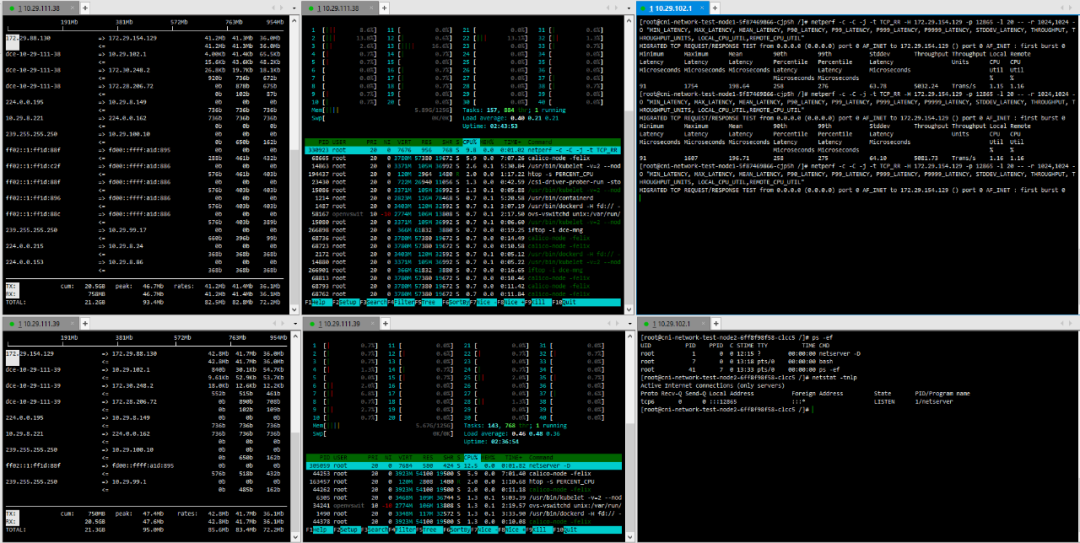

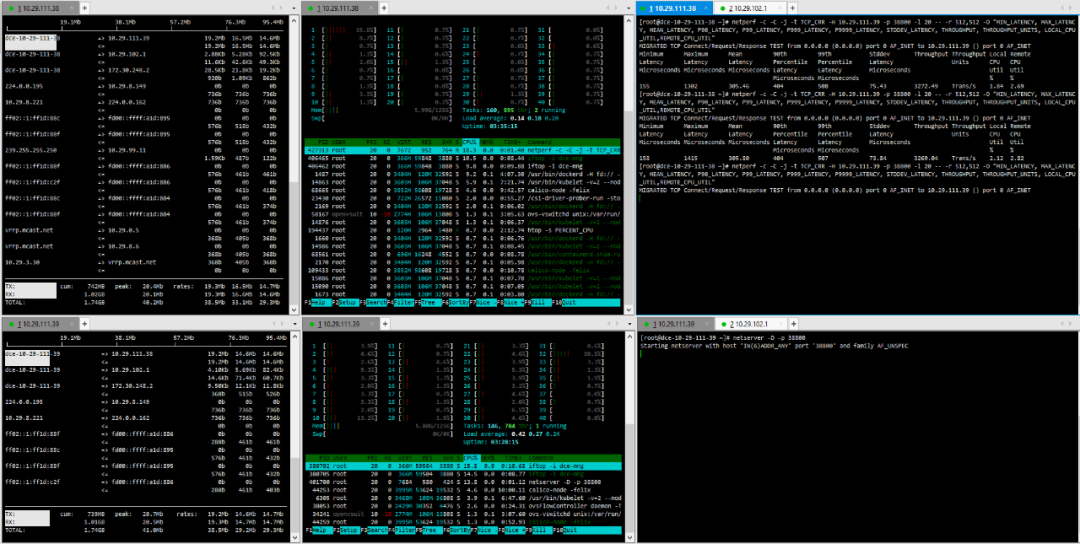

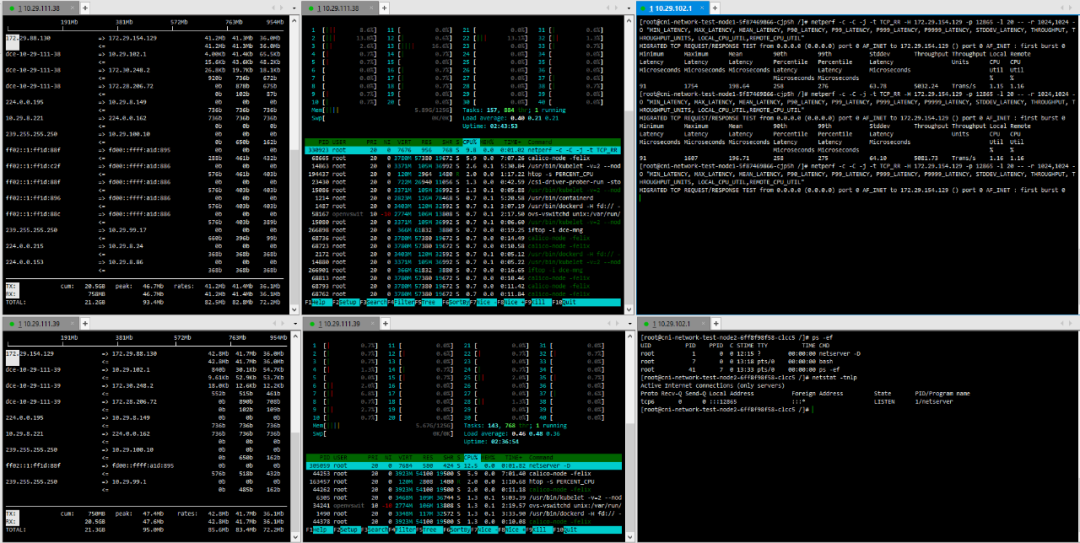

3. Pod – Cross-Host Pod Calico

(1) TCP_STREAM (default) Message Body 512

(2) TCP_STREAM (default) Message Body 1024

(3) TCP_STREAM (default) Message Body 10K

(4) TCP_RR Message Body 512

(5) TCP_RR Message Body 1024

(6) TCP_CRR Message Body 512

(7) TCP_CRR Message Body 1024

5. Summary: Dual Validation of Industry Scenarios and Test Data

By comparing the characteristics of the three major CNI plugins (Flannel, Calico, and Cilium) and combining this with Calico's performance tests in bare metal and virtualized environments, we draw the following conclusions:

(1) Industry Scenario Adaptation Recommendations

Calico: Suitable for scenarios with high requirements for both network policy and performance, such as financial trading systems and online education platforms. On bare metal, its BGP mode achieves throughput close to that of the physical network (only a 7% loss), and it supports flexible configuration for cross-subnet communication (such as IPIP mode), meeting the needs of hybrid cloud and multi-site deployments.

Static IP: Suitable for systems with extreme performance requirements, such as the core business system of Hangzhou Bank, which runs on bare metal nodes and uses static IPs to communicate with systems outside the cluster. However, this approach consumes a large number of physical IP addresses.

Cilium: Offers high performance based on eBPF, but carries kernel compatibility risks and has few production cases; our company is still in a wait-and-see and research phase.

Flannel: Suitable for small and medium-sized teams or test environments because it is quick to set up and easy to maintain, but it may become a performance bottleneck in production, so we do not recommend it there.

(2) Deployment Environment Selection

Bare metal environment: In our tests, Calico's pod-to-pod communication performance loss on bare metal was only 7%, making it suitable for latency-sensitive core business (such as securities business and core banking systems).

Virtualized environment: Although the average throughput loss reaches 47%, resources can be used more flexibly, which suits development and test environments or scenarios with highly elastic resource needs (such as temporary scale-out during e-commerce promotions).

Our company currently deploys customer transaction systems on bare metal and some internal management systems on virtual machines.

(3) Optimal Practices for Cluster Deployment

Calico's CrossSubnet mode (BGP within the same subnet, IPIP across subnets) balances performance and flexibility. In our production practice, however, we do not deploy Kubernetes clusters across subnets; instead, we deploy an independent Kubernetes cluster in each subnet, which keeps traffic efficient within a single subnet and avoids IPIP mode altogether.

(4) Future Trends

As eBPF technology matures and the kernel ecosystem improves, Cilium's potential in observability and security policy will be further realized. Our company will continue to track its community progress to build up technical capabilities for scenarios with high concurrency and high security demands.

Welcome to follow the community “Cloud Computing” technology theme which will continuously update high-quality materials and articles. Address:

https://www.talkwithtrend.com/Channel/77/

*The content published by this public account only represents the author’s views and does not represent the community’s position