Introduction

As demand for computing power grows, so does the energy consumption of modern computing systems, making it hard to sustain the future development of artificial intelligence. This energy problem stems largely from the classic von Neumann architecture used by traditional digital computers, in which data is processed and stored in different locations. The human brain, in contrast, processes and stores data in the same place, and does so massively in parallel. Brain-inspired computing and neuromorphic systems take their cue from biology: they can process unstructured data, perform image recognition, classify noisy and uncertain datasets, and help build better learning and inference systems, fundamentally changing how we process signals and data in terms of both energy efficiency and the handling of real-world uncertainty. Yet attention to and investment in neuromorphic computing currently lag far behind digital AI and quantum technologies. How can we unlock the potential of neuromorphic computing? A recent article published in Nature argues that brain-inspired computing urgently needs a grand blueprint.

Research Areas: Brain-Inspired Computing, Neuroscience, Artificial Intelligence

A. Mehonic & A. J. Kenyon | Authors

Ren Kana | Translation

JawDrin | Proofreading

Deng Yixue | Editing

Paper Title:

Brain-inspired computing needs a master plan

Paper Link:

https://www.nature.com/articles/s41586-021-04362-w

Abstract

Brain-inspired computing (also called neuromorphic computing) paints a promising vision: processing information in fundamentally different ways and with extremely high energy efficiency, while handling the ever-growing volume of unstructured and noisy data that humans generate. To realize this vision, we urgently need a grand blueprint that provides funding and research focus and coordinates the different research groups. We took this path yesterday with digital technology and are taking it today with quantum technology; will we take it tomorrow with brain-inspired computing?

Background

Modern computing systems consume too much energy to serve as sustainable platforms for the widespread development of artificial intelligence technologies. We have focused solely on speed, accuracy, and the number of parallel operations per second, while neglecting sustainability, especially in cloud-based systems. The habit of retrieving information instantly has made us forget the energy and environmental costs of providing it. For example, every Google search is supported by data centers that together consume about 200 terawatt-hours of energy annually, a figure expected to grow by an order of magnitude by 2030. Similarly, high-end AI systems such as DeepMind’s AlphaGo and AlphaGo Zero, which can defeat human experts in complex strategy games, require thousands of parallel processing units, each consuming about 200 watts, far more than the roughly 20 watts consumed by the human brain.

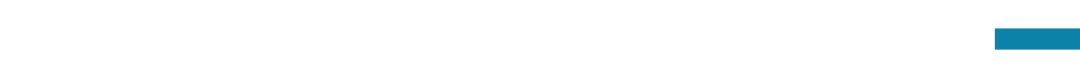

Not all data-intensive computation requires AI and deep learning, but we should still be concerned about the environmental cost of the increasingly widespread use of deep learning. We should also consider applications such as the Internet of Things (IoT) and autonomous robotic agents, which may not always need compute-intensive deep learning algorithms but must still reduce their energy consumption. The IoT depends on countless devices, and it becomes impractical if their energy demands are too high. Analyses show that the surging demand for computing power far outpaces the improvements delivered by Moore’s Law: demand now doubles every two months. Combining smart architecture with hardware-software co-design has produced increasingly significant progress. For example, the performance of NVIDIA’s graphics processing units (GPUs) has improved 317-fold since 2012, far beyond what Moore’s Law would predict, although over the same period the power consumption of these processors rose from 25 W to 320 W. Remarkable performance gains demonstrated at the research and development stage suggest that we can do better still. Unfortunately, traditional computing solutions alone cannot meet long-term needs, as the astonishingly high cost of training the most complex deep learning models makes especially clear. We therefore need new pathways.
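The comparison with Moore’s Law can be made concrete with a few lines of arithmetic. The sketch below uses only the figures quoted above (a 317-fold improvement, 25 W to 320 W, demand doubling every two months). The nine-year window (2012 to 2021) and the two-year doubling period for Moore’s Law are assumptions made here for illustration; the article does not state them.

```python
# Figures quoted in the text.
gpu_speedup = 317.0           # GPU performance gain since 2012
power_before_w = 25.0         # processor power at the start (watts)
power_after_w = 320.0         # processor power at the end (watts)
demand_doubling_months = 2.0  # compute demand doubles every two months

# Assumptions made for this sketch (not stated in the article).
years_elapsed = 2021 - 2012   # assumed comparison window
moore_doubling_years = 2.0    # assumed Moore's Law doubling period

# Improvement expected from Moore's Law alone over the window.
moore_factor = 2.0 ** (years_elapsed / moore_doubling_years)

# How much of the GPU gain was paid for with extra power.
power_growth = power_after_w / power_before_w
perf_per_watt_gain = gpu_speedup / power_growth

# Growth in demand over one year if it doubles every two months.
demand_growth_per_year = 2.0 ** (12.0 / demand_doubling_months)

print(f"Moore's Law over {years_elapsed} years: x{moore_factor:.1f}")
print(f"GPU speedup: x{gpu_speedup:.0f}, power growth: x{power_growth:.1f}")
print(f"Performance per watt gain: x{perf_per_watt_gain:.1f}")
print(f"Demand growth per year: x{demand_growth_per_year:.0f}")
```

Under these assumptions, Moore’s Law alone would give roughly a 23-fold gain, so the 317-fold figure is indeed far ahead of it. Once the 12.8-fold rise in power is factored in, however, the gain in performance per watt is closer to 25-fold, while demand doubling every two months corresponds to a 64-fold increase per year.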

The energy problem stems largely from the classic von Neumann architecture of digital computing systems, in which data is processed and stored in different locations, so the processor spends most of its time and energy moving data. Fortunately, biology offers a different approach: storing and processing data in the same place, encoding information in an entirely different way or operating directly on signals, and employing massive parallelism (see Box 1). Energy efficiency and advanced functionality can in fact coexist, and our brain is proof of this. There is, of course, still much to learn about how the brain achieves both. Our goal is not merely to mimic biological systems but to find pathways inspired by the significant advances in neuroscience and computational neuroscience over the past few decades. We already understand enough about the brain to draw inspiration from it.

Biological Inspiration

In biology, data storage is not separate from data processing. The human brain, composed primarily of neurons and synapses, performs both functions in a massively parallel and adaptive structure. It contains on average 10^11 neurons and 10^15 synapses and consumes about 20 watts of power; in contrast, a digital simulation of an artificial neural network of about the same size consumes 7,900 kilowatts. This gap of roughly six orders of magnitude is a challenge for us. The brain processes noisy signals directly and with high efficiency, in sharp contrast to the signal-to-data conversion and high-precision computation in traditional computer systems, which cost significant time and energy. Even the most powerful digital supercomputers cannot match the brain’s capabilities. Brain-inspired computing systems (also referred to as neuromorphic computing) are therefore expected to fundamentally change how we process signals and data, both in energy efficiency and in handling real-world uncertainty.
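The size of this gap is easy to check from the figures quoted above. The following sketch simply divides the two power values and, as an extra illustration that is not in the article, spreads the brain’s 20 watts evenly over its synapses.

```python
import math

# Figures quoted in the text.
brain_power_w = 20.0           # human brain, watts
simulation_power_w = 7900e3    # digital simulation, 7,900 kilowatts in watts
synapse_count = 1e15           # average number of synapses in the brain

# Ratio between the simulation and the brain, and its order of magnitude.
power_ratio = simulation_power_w / brain_power_w
orders_of_magnitude = math.log10(power_ratio)

# Illustrative only: average power budget per synapse if the 20 W
# were shared equally (an assumption, not a claim from the article).
power_per_synapse_w = brain_power_w / synapse_count

print(f"Power ratio: {power_ratio:.3g}")
print(f"Orders of magnitude: {orders_of_magnitude:.2f}")
print(f"Average power per synapse: {power_per_synapse_w:.1e} W")
```

The ratio comes out at about 4 x 10^5, or about 5.6 on a logarithmic scale, which rounds to the six orders of magnitude cited in the text.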

This idea has a traceable history in the development of science. The term “neuromorphic”, which describes devices and systems that mimic some functions of biological neural systems, was coined by Carver Mead at the California Institute of Technology in the late 1980s. The inspiration came from decades of work modeling the nervous system as equivalent circuits and building analog electronic devices and systems that provide similar functions.

Box 1: What do we mean by neuromorphic systems?

Taking inspiration from the brain lets us process information in a completely different way from existing conventional computing systems. Different brain-inspired (neuromorphic) computing platforms combine methods that set them apart from von Neumann computers: analog data processing, asynchronous communication, massively parallel processing, or spike-based information representation, among others.

The term “neuromorphic” covers at least three broad research communities, distinguished by their goals: simulating neural functions (reverse-engineering the brain), simulating neural networks (developing new computational methods), and designing new electronic devices.

(1) Simulating Neural Functions

Neuromorphic engineering studies how the brain uses the physical properties of biological structures such as synapses and neurons to perform computation. Neuromorphic engineers exploit the physics of analog electronics, such as carrier tunneling, charge retention on silicon floating gates, and the exponential dependence of various device or material properties on field, to emulate the functions of biological neurons and synapses and to define the basic operations needed for audio and video processing or smart sensors. More detail can be found in reference [41] (Indiveri et al., 2011).

(2) Simulating Neural Networks

Brain-inspired computing looks to biology for new ways of processing data; it can also be understood as the computational science of neuromorphic systems. Research in this direction focuses on simulating the structure or the function of biological neural networks: performing storage and processing in the same place, as the brain does, or adopting an entirely different form of computation based on voltage spikes that model the action potentials of biological systems.
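To illustrate what computation based on voltage spikes can look like, here is a minimal sketch of a leaky integrate-and-fire neuron. This is a standard simplified neuron model, not one described in the article, and the function name `simulate_lif` and all parameter values are illustrative assumptions.

```python
def simulate_lif(input_current, dt=1e-3, tau=20e-3, resistance=1.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Simulate a leaky integrate-and-fire neuron with forward Euler steps.

    input_current: sequence of input values, one per time step.
    Returns (spike_steps, voltage_trace).
    """
    v = v_rest
    spike_steps = []
    voltage_trace = []
    for step, current in enumerate(input_current):
        # Membrane equation: tau * dv/dt = -(v - v_rest) + R * I
        v += (dt / tau) * (-(v - v_rest) + resistance * current)
        if v >= v_threshold:
            # The neuron emits a spike and its membrane voltage is reset.
            spike_steps.append(step)
            v = v_reset
        voltage_trace.append(v)
    return spike_steps, voltage_trace


# A strong constant input drives regular spiking; a weak one never
# reaches the threshold, so the neuron stays silent.
spikes_strong, _ = simulate_lif([1.5] * 200)
spikes_weak, _ = simulate_lif([0.5] * 200)

print("Spikes for strong input:", len(spikes_strong))
print("Spikes for weak input:", len(spikes_weak))
```

In this picture the information is carried by when and how often the neuron spikes rather than by a high-precision number, which is the contrast with conventional digital processing drawn in the text.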

(3) Designing New Electronic Devices

Of course, a skilled craftsman cannot work without materials. We need the devices and materials to support the realization of biomimetic functions. Recently developed electronic and photonic components with customizable features can help us mimic biological structures such as synapses and neurons. These neuromorphic tools provide exciting new technologies that can expand the capabilities of neuromorphic engineering and computing.

The most important of these new components are memristors: two-terminal electronic devices whose resistance is a function of their history. Because of their complex dynamic electrical response, memristors can serve as digital memory elements, variable weights in artificial synapses, cognitive processing elements, optical sensors, and devices that emulate biological neurons. They may reproduce some functions of real dendrites, and their dynamic response can produce brain-like oscillatory behavior operating at the edge of chaos. They may also be linked with biological neurons in a single system, and they can do all this with minimal energy.
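The idea that a memristor’s resistance depends on its history can be sketched with a toy model, loosely based on the linear ion-drift picture often used to introduce these devices. The class name `Memristor`, the resistance bounds, and the lumped `drift_rate` constant are hypothetical values chosen for illustration; they do not describe any device discussed in the article.

```python
class Memristor:
    """Toy memristor: an internal state in [0, 1] sets the resistance."""

    def __init__(self, r_on=100.0, r_off=16000.0, state=0.1, drift_rate=1e4):
        self.r_on = r_on              # resistance when state == 1 (ohms)
        self.r_off = r_off            # resistance when state == 0 (ohms)
        self.state = state            # internal state variable
        self.drift_rate = drift_rate  # state change per coulomb (assumed)

    @property
    def resistance(self):
        # Linear mix of the two limiting resistances.
        return self.r_on * self.state + self.r_off * (1.0 - self.state)

    def apply_voltage(self, voltage, duration, dt=1e-4):
        """Apply a constant voltage for `duration` seconds (Euler steps)."""
        for _ in range(int(round(duration / dt))):
            current = voltage / self.resistance
            # The state drifts in proportion to the charge that has flowed.
            self.state += self.drift_rate * current * dt
            self.state = min(1.0, max(0.0, self.state))
        return self.resistance


device = Memristor()
r_initial = device.resistance
r_after_write = device.apply_voltage(1.0, 0.1)    # positive pulse
r_after_rest = device.apply_voltage(0.0, 0.1)     # no voltage applied
r_after_erase = device.apply_voltage(-1.0, 0.1)   # negative pulse

print(f"Initial: {r_initial:.0f} ohm")
print(f"After positive pulse: {r_after_write:.0f} ohm")
print(f"After rest: {r_after_rest:.0f} ohm")
print(f"After negative pulse: {r_after_erase:.0f} ohm")
```

The positive pulse lowers the resistance, the value is retained while no voltage is applied, and the negative pulse raises it again. This retained, adjustable resistance is what lets such a device act as a memory element or as a variable synaptic weight.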

A note on data. We use the term “data” to describe information encoded in the physical response of an analog signal or a sensor, or the more standard digital information of purely theoretical computation. When we say that the brain “processes data”, we mean a whole set of signal-processing tasks that do not rely on signal digitization in the conventional sense. Brain-inspired computing systems can be imagined operating at different levels, from analog signal processing to the processing of massive databases: in the former case, we can avoid generating large datasets in the first place; in the latter, we can greatly improve processing efficiency by moving away from the von Neumann model.

There are good reasons why a digital representation of signals is needed in many scenarios: high precision, reliability, and determinism. However, digital abstraction discards much of the information contained in the physics of transistors and keeps only the smallest quantum of information: a single bit. In doing so, we pay an enormous energy cost, trading efficiency for reliability. Because AI applications are inherently probabilistic, we must ask whether this trade makes sense. The computational tasks that underpin AI applications are highly compute-intensive, and therefore energy-hungry, when run on traditional von Neumann computers. Analog or hybrid systems that use spike-based signal representation, however, may be able to perform similar tasks with high energy efficiency. Advances in AI systems and the emergence of new devices have therefore rekindled interest in neuromorphic computing. These new devices offer exciting new ways to emulate some capabilities of biological neural systems, as detailed in Box 1.

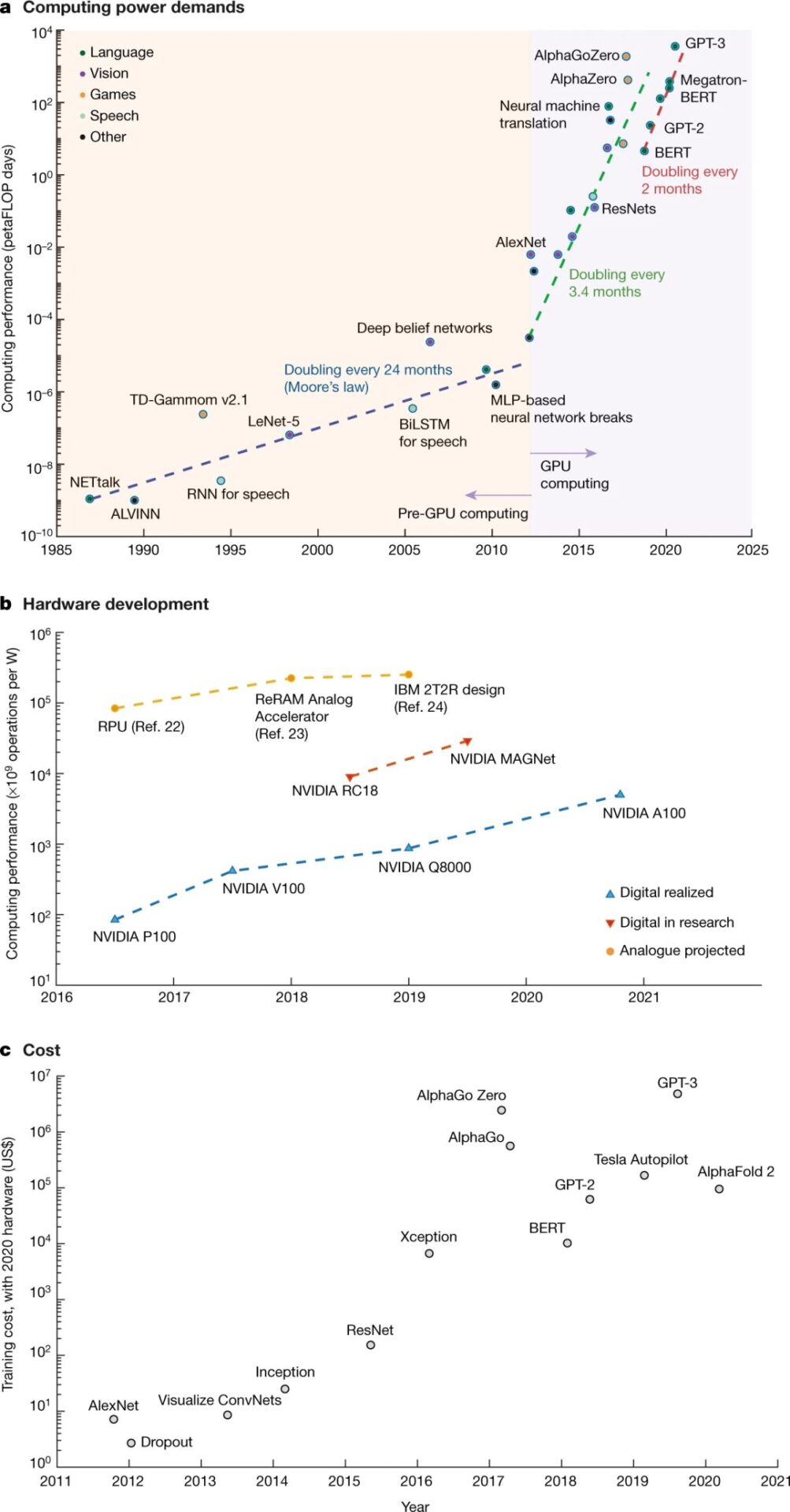

Definitions of “neuromorphic” vary. In short, it is a story about hardware: brain-inspired chips aim to integrate and exploit useful features of the brain, including in-memory computing, spike-based information processing, fine-grained parallelism, signal processing that is resilient to noise and randomness, adaptability, learning in hardware, asynchronous communication, and analog processing. There is no consensus on how many of these features a system needs in order to count as neuromorphic, but it is clearly a form of AI implemented differently from mainstream computing systems. We should not get lost in terminology, however, but focus on whether the methods work.

Current approaches to neuromorphic technology lie on a spectrum between two poles, analysis and synthesis: analysis attempts to reverse-engineer the deep connection between the brain’s structure and its function, while synthesis accepts our limited understanding of the brain and takes inspiration from what we do know. At the analysis end, perhaps the most important effort is the Human Brain Project (HBP), a high-profile and ambitious ten-year initiative funded by the EU since 2013. The HBP adopted two existing brain-inspired computing platforms, SpiNNaker at the University of Manchester and BrainScaleS at Heidelberg University, and is developing them further as open-access platforms. Both implement highly complex silicon models of brain structure to help us better understand how the biological brain works. Synthesis is equally demanding, with many teams using brain-inspired methods to enhance the performance of digital or analog electronic devices. Figure 2 summarizes existing neuromorphic chips, sorting them into four categories according to their position on the analysis-synthesis spectrum and their technology platform. More importantly, we must recognize that neuromorphic engineering is not only for advanced cognitive systems; it can also deliver benefits in energy, speed, and security in small edge devices with limited cognitive capabilities (at least by eliminating the need for continuous communication with the cloud).

References related to brain-inspired chips:

- Neurogrid: Benjamin, B. V. et al. Neurogrid: a mixed-analog–digital multichip system for large-scale neural simulations. Proc. IEEE 102, 699–716 (2014).
- BrainScaleS: Schmitt, S. et al. Neuromorphic hardware in the loop: training a deep spiking network on the BrainScaleS wafer-scale system. In 2017 Intl Joint Conf. Neural Networks (IJCNN) https://doi.org/10.1109/ijcnn.2017.7966125 (IEEE, 2017).
- MNIFAT: Lichtsteiner, P., Posch, C. & Delbruck, T. A 128 × 128 120 dB 15 μs latency asynchronous temporal contrast vision sensor. IEEE J. Solid-State Circuits 43, 566–576 (2008).
- DYNAP: Moradi, S., Qiao, N., Stefanini, F. & Indiveri, G. A scalable multicore architecture with heterogeneous memory structures for dynamic neuromorphic asynchronous processors (DYNAPs). IEEE Trans. Biomed. Circuits Syst. 12, 106–122 (2018).
- DYNAP-SEL: Thakur, C. S. et al. Large-scale neuromorphic spiking array processors: a quest to mimic the brain. Front. Neurosci. 12, 891 (2018).
- ROLLS: Qiao, N. et al. A reconfigurable on-line learning spiking neuromorphic processor comprising 256 neurons and 128K synapses. Front. Neurosci. 9, 141 (2015).
- Spirit: Valentian, A. et al. in 2019 IEEE Intl Electron Devices Meeting (IEDM) 14.3.1–14.3.4 https://doi.org/10.1109/IEDM19573.2019.8993431 (IEEE, 2019).
- ReASOn: Resistive Array of Synapses with ONline Learning (ReASOn) Developed by NeuRAM3 Project https://cordis.europa.eu/project/id/687299/reporting (2021).
- DeepSouth: Wang, R. et al. Neuromorphic hardware architecture using the neural engineering framework for pattern recognition. IEEE Trans. Biomed. Circuits Syst. 11, 574–584 (2017).
- SpiNNaker: Furber, S. B., Galluppi, F., Temple, S. & Plana, L. A. The SpiNNaker Project. Proc. IEEE 102, 652–665 (2014). An example of a large-scale neuromorphic system as a model for the brain.
- IBM TrueNorth: Merolla, P. A. et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 345, 668–673 (2014).
- Intel Loihi: Davies, M. et al. Loihi: a neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99 (2018).
- Tianjic: Pei, J. et al. Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature 572, 106–111 (2019).
- ODIN: Frenkel, C., Lefebvre, M., Legat, J.-D. & Bol, D. A 0.086-mm2 12.7-pJ/SOP 64k-synapse 256-neuron online-learning digital spiking neuromorphic processor in 28-nm CMOS. IEEE Trans. Biomed. Circuits Syst. 13, 145–158 (2018).
- Intel SNN chip: Chen, G. K., Kumar, R., Sumbul, H. E., Knag, P. C. & Krishnamurthy, R. K. A 4096-neuron 1M-synapse 3.8-pJ/SOP spiking neural network with on-chip STDP learning and sparse weights in 10-nm FinFET CMOS. IEEE J. Solid-State Circuits 54, 992–1002 (2019).

Future Outlook

Seizing Opportunities

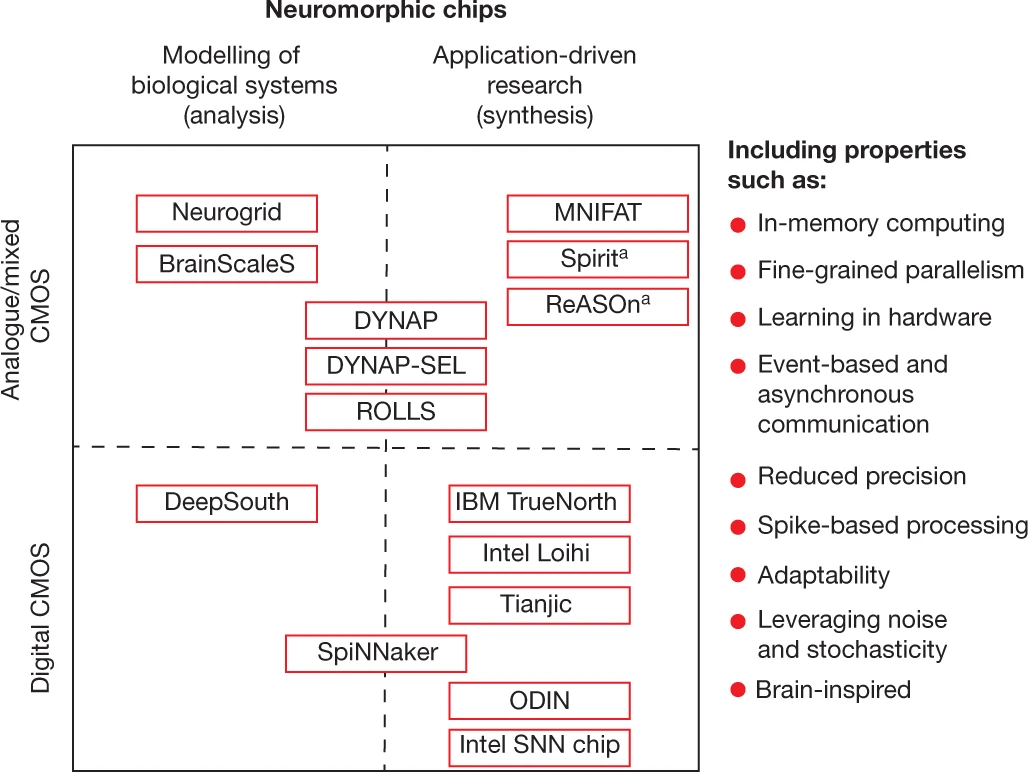

Prospects for AI Financing

Conclusion

References

1. Jones, N. How to stop data centres from gobbling up the world’s electricity. Nature https://doi.org/10.1038/d41586-018-06610-y (12 September 2018).
2. Wu, K. J. Google’s new AI is a master of games, but how does it compare to the human mind? Smithsonian https://www.smithsonianmag.com/innovation/google-ai-deepminds-alphazero-games-chess-and-go-180970981/ (10 December 2018).
3. Amodei, D. & Hernandez, D. AI and compute. OpenAI Blog https://openai.com/blog/ai-and-compute/ (16 May 2018).
4. Venkatesan, R. et al. in 2019 IEEE Hot Chips 31 Symp. (HCS) https://doi.org/10.1109/HOTCHIPS.2019.8875657 (IEEE, 2019).
5. Venkatesan, R. et al. in 2019 IEEE/ACM Intl Conf. Computer-Aided Design (ICCAD) https://doi.org/10.1109/ICCAD45719.2019.8942127 (IEEE, 2019).
6. Wong, T. M. et al. 10^14. Report no. RJ10502 (ALM1211-004) (IBM, 2012). The power consumption of this simulation of the brain puts that of conventional digital systems into context.
7. Mead, C. Analog VLSI and Neural Systems (Addison-Wesley, 1989).
8. Mead, C. A. Author Correction: How we created neuromorphic engineering. Nat. Electron. 3, 579–579 (2020).
9. Hodgkin, A. L. & Huxley, A. F. A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 117, 500–544 (1952). More complex models followed, but this seminal work remains the clearest and an excellent starting point, developing equivalent electrical circuits and circuit models for the neural membrane.
10. National Nanotechnology Initiative. US National Nanotechnology Initiative https://www.nano.gov (National Nanotechnology Coordination Office, accessed 18 August 2021).
11. About Groningen Cognitive Systems and Materials. University of Groningen https://www.rug.nl/research/fse/cognitive-systems-and-materials/about/ (accessed 9 November 2020).
12. Degree programs: Neuroengineering. Technical University of Munich https://www.tum.de/en/studies/degree-programs/detail/detail/StudyCourse/neuroengineering-master-of-science-msc/ (accessed 18 August 2021).
13. Course catalogue: 227-1033-00L Neuromorphic Engineering I. ETH Zürich http://www.vvz.ethz.ch/Vorlesungsverzeichnis/lerneinheit.view?lerneinheitId=132789&semkez=2019W&ansicht=KATALOGDATEN&lang=en (accessed 9 November 2020).
14. Brains in Silicon. http://web.stanford.edu/group/brainsinsilicon/ (accessed 16 March 2022).
15. Neuromorphs. Instituto de Microelectrónica de Sevilla http://www2.imse-cnm.csic.es/neuromorphs (accessed 9 November 2020).
16. Neurotech. https://neurotechai.eu (accessed 18 August 2021).
17. Chua Memristor Center: Members. Technische Universität Dresden https://cmc-dresden.org/members (accessed 9 November 2020).
18. Subcommittee on Quantum Information Science. National Strategic Overview for Quantum Information Science. https://web.archive.org/web/20201109201659/https://www.whitehouse.gov/wp-content/uploads/2018/09/National-Strategic-Overview-for-Quantum-Information-Science.pdf (US Government, 2018; accessed 17 March 2022).
19. Smith-Goodson, P. Quantum USA vs. quantum China: the world’s most important technology race. Forbes https://www.forbes.com/sites/moorinsights/2019/10/10/quantum-usa-vs-quantum-china-the-worlds-most-important-technology-race/#371aad5172de (10 October 2019).
20. Gibney, E. Quantum gold rush: the private funding pouring into quantum start-ups. Nature https://doi.org/10.1038/d41586-019-02935-4 (2 October 2019).
21. Le Quéré, C. et al. Temporary reduction in daily global CO2 emissions during the COVID-19 forced confinement. Nat. Clim. Change 10, 647–653 (2020).
22. Gokmen, T. & Vlasov, Y. Acceleration of deep neural network training with resistive cross-point devices: design considerations. Front. Neurosci. 10, https://doi.org/10.3389/fnins.2016.00333 (2016).
23. Marinella, M. J. et al. Multiscale co-design analysis of energy latency area and accuracy of a ReRAM analog neural training accelerator. IEEE J. Emerg. Selected Topics Circuits Systems 8, 86–101 (2018).
24. Chang, H.-Y. et al. AI hardware acceleration with analog memory: microarchitectures for low energy at high speed. IBM J. Res. Dev. 63, 8:1–8:14 (2019).
25. ARK Invest. Big Ideas 2021 https://research.ark-invest.com/hubfs/1_Download_Files_ARK-Invest/White_Papers/ARK–Invest_BigIdeas_2021.pdf (ARK Investment Management, 2021; accessed 27 April 2021).
26. Benjamin, B. V. et al. Neurogrid: a mixed-analog–digital multichip system for large-scale neural simulations. Proc. IEEE 102, 699–716 (2014).
27. Schmitt, S. et al. Neuromorphic hardware in the loop: training a deep spiking network on the BrainScaleS wafer-scale system. In 2017 Intl Joint Conf. Neural Networks (IJCNN) https://doi.org/10.1109/ijcnn.2017.7966125 (IEEE, 2017).
28. Lichtsteiner, P., Posch, C. & Delbruck, T. A 128 × 128 120 dB 15 μs latency asynchronous temporal contrast vision sensor. IEEE J. Solid-State Circuits 43, 566–576 (2008).
29. Moradi, S., Qiao, N., Stefanini, F. & Indiveri, G. A scalable multicore architecture with heterogeneous memory structures for dynamic neuromorphic asynchronous processors (DYNAPs). IEEE Trans. Biomed. Circuits Syst. 12, 106–122 (2018).
30. Thakur, C. S. et al. Large-scale neuromorphic spiking array processors: a quest to mimic the brain. Front. Neurosci. 12, 891 (2018).
31. Qiao, N. et al. A reconfigurable on-line learning spiking neuromorphic processor comprising 256 neurons and 128K synapses. Front. Neurosci. 9, 141 (2015).
32. Valentian, A. et al. in 2019 IEEE Intl Electron Devices Meeting (IEDM) 14.3.1–14.3.4 https://doi.org/10.1109/IEDM19573.2019.8993431 (IEEE, 2019).
33. Resistive Array of Synapses with ONline Learning (ReASOn) Developed by NeuRAM3 Project https://cordis.europa.eu/project/id/687299/reporting (2021).
34. Wang, R. et al. Neuromorphic hardware architecture using the neural engineering framework for pattern recognition. IEEE Trans. Biomed. Circuits Syst. 11, 574–584 (2017).
35. Furber, S. B., Galluppi, F., Temple, S. & Plana, L. A. The SpiNNaker Project. Proc. IEEE 102, 652–665 (2014). An example of a large-scale neuromorphic system as a model for the brain.
36. Merolla, P. A. et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 345, 668–673 (2014).
37. Davies, M. et al. Loihi: a neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99 (2018).
38. Pei, J. et al. Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature 572, 106–111 (2019).
39. Frenkel, C., Lefebvre, M., Legat, J.-D. & Bol, D. A 0.086-mm2 12.7-pJ/SOP 64k-synapse 256-neuron online-learning digital spiking neuromorphic processor in 28-nm CMOS. IEEE Trans. Biomed. Circuits Syst. 13, 145–158 (2018).
40. Chen, G. K., Kumar, R., Sumbul, H. E., Knag, P. C. & Krishnamurthy, R. K. A 4096-neuron 1M-synapse 3.8-pJ/SOP spiking neural network with on-chip STDP learning and sparse weights in 10-nm FinFET CMOS. IEEE J. Solid-State Circuits 54, 992–1002 (2019).
41. Indiveri, G. et al. Neuromorphic silicon neuron circuits. Front. Neurosci. 5, 73 (2011).
42. Mehonic, A. et al. Memristors—from in‐memory computing, deep learning acceleration, and spiking neural networks to the future of neuromorphic and bio‐inspired computing. Adv. Intell Syst. 2, 2000085 (2020). A review of the promise of memristors across a range of applications, including spike-based neuromorphic systems.
43. Li, X. et al. Power-efficient neural network with artificial dendrites. Nat. Nanotechnol. 15, 776–782 (2020).
44. Chua, L. Memristor, Hodgkin–Huxley, and edge of chaos. Nanotechnology 24, 383001 (2013).
45. Kumar, S., Strachan, J. P. & Williams, R. S. Chaotic dynamics in nanoscale NbO2 Mott memristors for analogue computing. Nature 548, 318–321 (2017).
46. Serb, A. et al. Memristive synapses connect brain and silicon spiking neurons. Sci Rep. 10, 2590 (2020).
47. Rosemain, M. & Rose, M. France to spend $1.8 billion on AI to compete with U.S., China. Reuters https://www.reuters.com/article/us-france-tech-idUSKBN1H51XP (29 March 2018).
48. Castellanos, S. Executives say $1 billion for AI research isn’t enough. Wall Street J. https://www.wsj.com/articles/executives-say-1-billion-for-ai-research-isnt-enough-11568153863 (10 September 2019).
49. Larson, C. China’s AI imperative. Science 359, 628–630 (2018).
50. European Commission. A European approach to artificial intelligence. https://ec.europa.eu/digital-single-market/en/artificial-intelligence (accessed 9 November 2020).
51. Artificial intelligence (AI) funding investment in the United States from 2011 to 2019. Statista https://www.statista.com/statistics/672712/ai-funding-united-states (accessed 9 November 2020).
52. Worldwide artificial intelligence spending guide. IDC Trackers https://www.idc.com/getdoc.jsp?containerId=IDC_P33198 (accessed 9 November 2020).
53. Markets and Markets.com. Neuromorphic Computing Market https://www.marketsandmarkets.com/Market-Reports/neuromorphic-chip-market-227703024.html?gclid=CjwKCAjwlcaRBhBYEiwAK341jS3mzHf9nSlOEcj3MxSj27HVewqXDR2v4TlsZYaH1RWC4qdM0fKdlxoC3NYQAvD_BwE. (accessed 17 March 2022).
