Machine Heart reports

Editor: Danjiang

Generally, the deployment of large language models adopts a “pre-training – then fine-tuning” approach. However, when fine-tuning the base model for numerous tasks (such as personalized assistants), the training and service costs can become extremely high. Low-Rank Adaptation (LoRA) is a parameter-efficient fine-tuning method, typically used to adapt the base model to various tasks, resulting in many LoRA adapters derived from a single base model.

This approach provides numerous opportunities for batch inference during the service process. Research on LoRA indicates that fine-tuning only the adapter weights can achieve performance comparable to full-weight fine-tuning. While this method allows for low-latency inference of individual adapters and serial execution across adapters, it significantly reduces overall service throughput and increases total latency when serving multiple adapters simultaneously. In summary, the issue of how to serve these fine-tuned variants on a large scale remains unresolved.

In a recent paper, researchers from UC Berkeley, Stanford, and other institutions proposed a new fine-tuning method called S-LoRA.

-

Paper link: https://arxiv.org/pdf/2311.03285.pdf

-

Project link: https://github.com/S-LoRA/S-LoRA

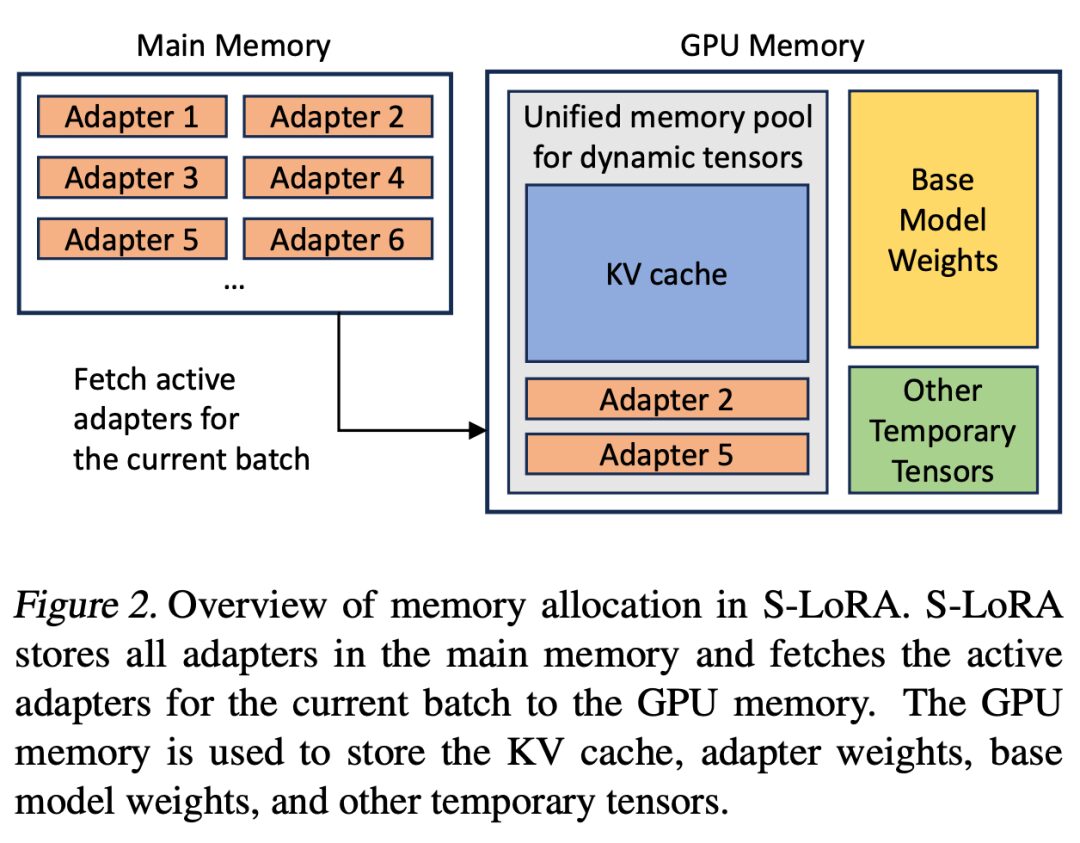

S-LoRA is a system designed for the scalable service of numerous LoRA adapters, storing all adapters in main memory and loading the adapters used for the current running query into GPU memory.

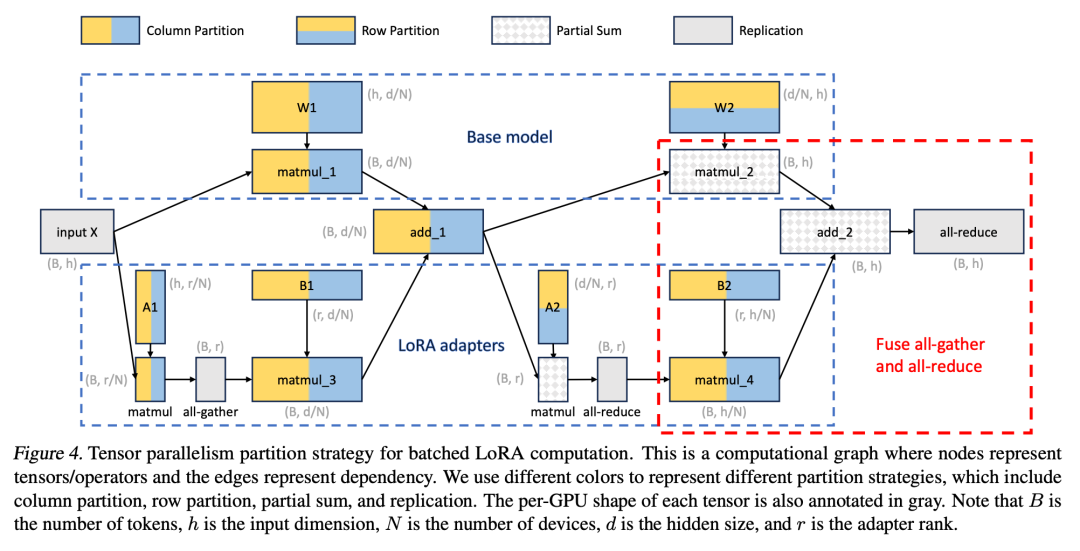

S-LoRA proposes a Unified Paging technology, which uses a unified memory pool to manage dynamically adapting adapter weights of different levels and KV cache tensors of different sequence lengths. Additionally, S-LoRA employs new tensor parallel strategies and highly optimized custom CUDA kernels to achieve heterogeneous batch processing of LoRA computations.

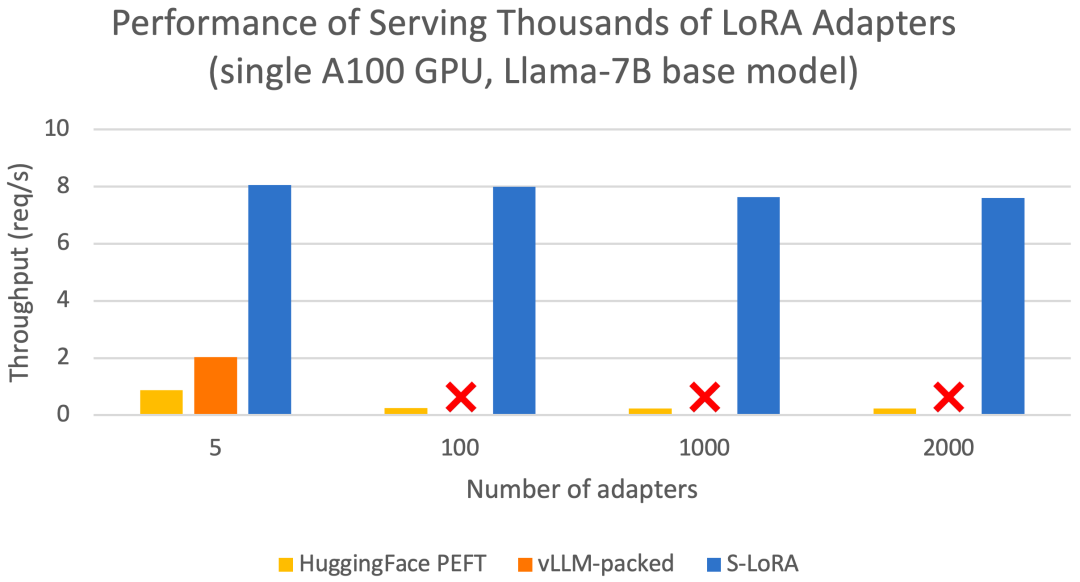

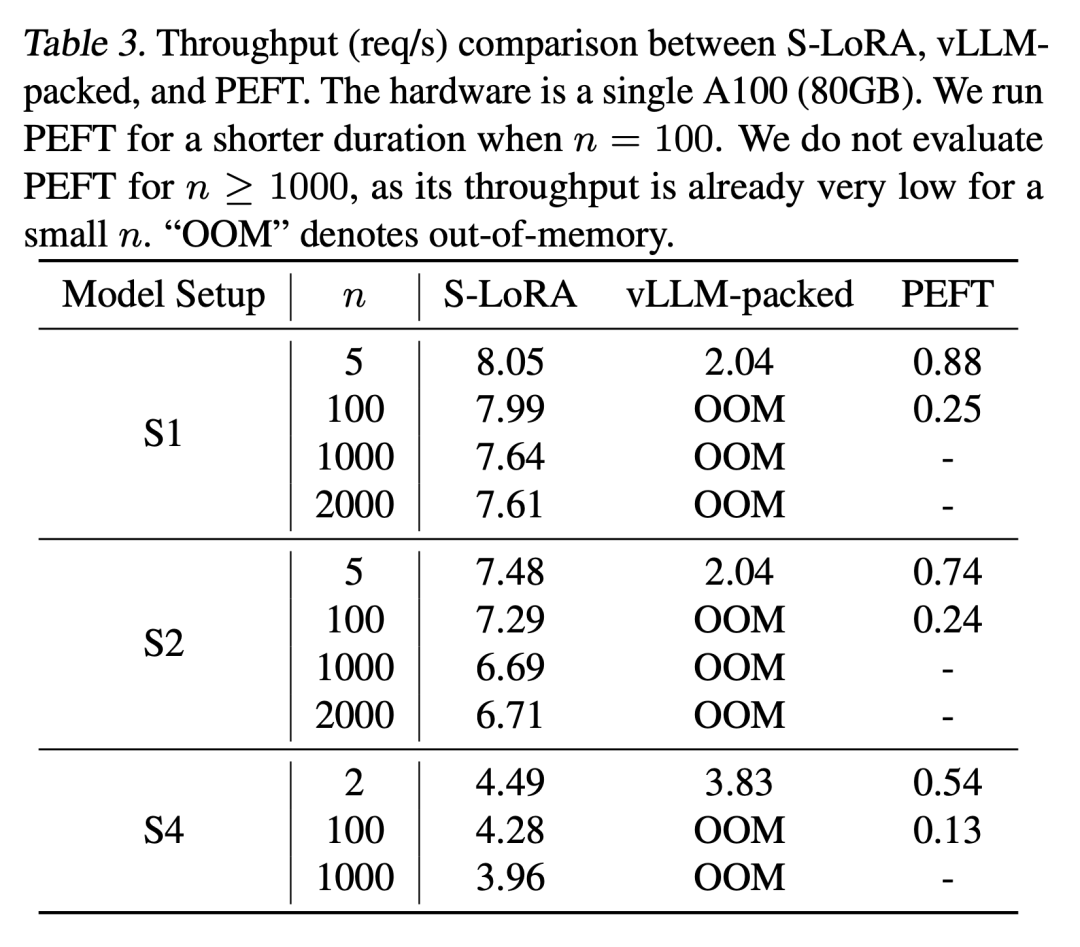

These features enable S-LoRA to serve thousands of LoRA adapters (serving 2000 adapters simultaneously) on a single or multiple GPUs with minimal overhead, reducing the increased LoRA computational burden to a minimum. In contrast, vLLM-packed requires maintaining multiple weight copies and can only serve fewer than 5 adapters due to GPU memory limitations.

Compared to state-of-the-art libraries such as HuggingFace PEFT and vLLM (which only supports LoRA services), S-LoRA can improve throughput by up to 4 times and increase the number of served adapters by several orders of magnitude. Therefore, S-LoRA provides scalable service for fine-tuned models for many specific tasks and offers potential for large-scale customized fine-tuning services.

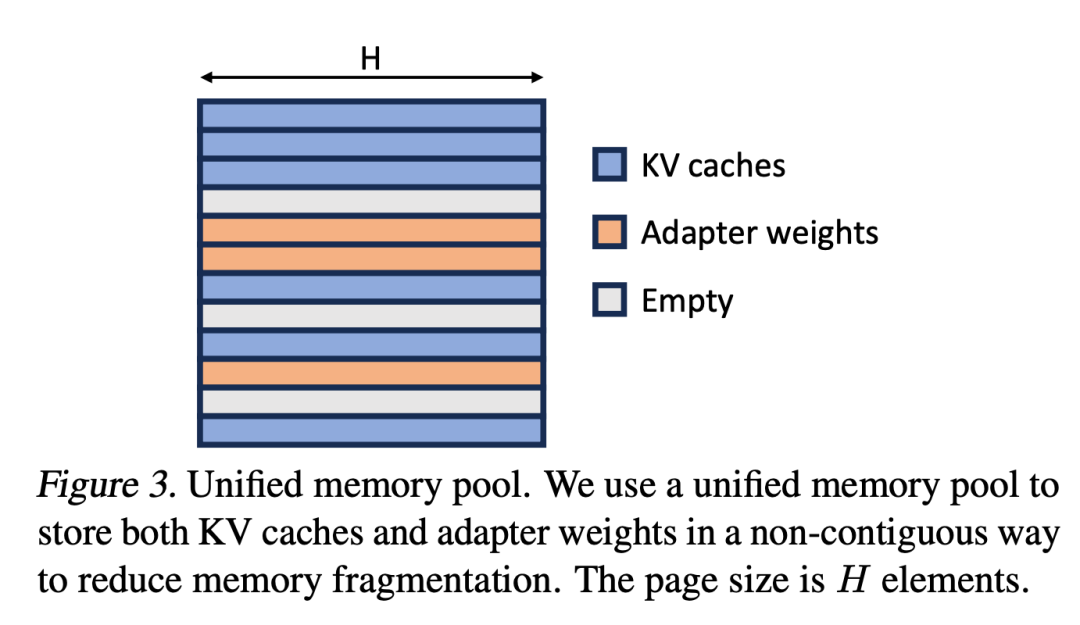

S-LoRA comprises three main innovative parts. Section 4 of the paper introduces a batching strategy that decomposes the computation between the base model and LoRA adapters. Furthermore, the researchers address the challenges of demand scheduling, including adapter clustering and admission control. The batching capability across concurrent adapters presents new challenges for memory management. In Section 5, the researchers extend PagedAttention to Unified Paging, supporting dynamic loading of LoRA adapters. This method uses a unified memory pool to page store KV caches and adapter weights, reducing fragmentation and balancing the dynamic size changes of KV caches and adapter weights. Finally, Section 6 introduces a new tensor parallel strategy that efficiently decouples the base model and LoRA adapters.

The following are the key points:

Batching

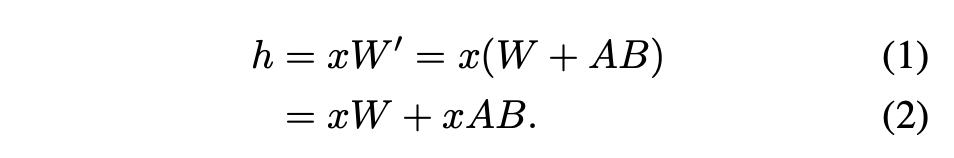

For a single adapter, the method recommended by Hu et al., 2021, is to merge the adapter weights into the base model weights to create a new model (see Formula 1). The benefit of this approach is that there is no additional adapter overhead during inference since the number of parameters in the new model is the same as that of the base model. In fact, this is also a prominent feature of the original LoRA work.

This article points out that merging LoRA adapters into the base model is inefficient for high-throughput service settings with multiple LoRAs. Instead, the researchers suggest computing LoRA computation xAB in real-time (as shown in Formula 2).

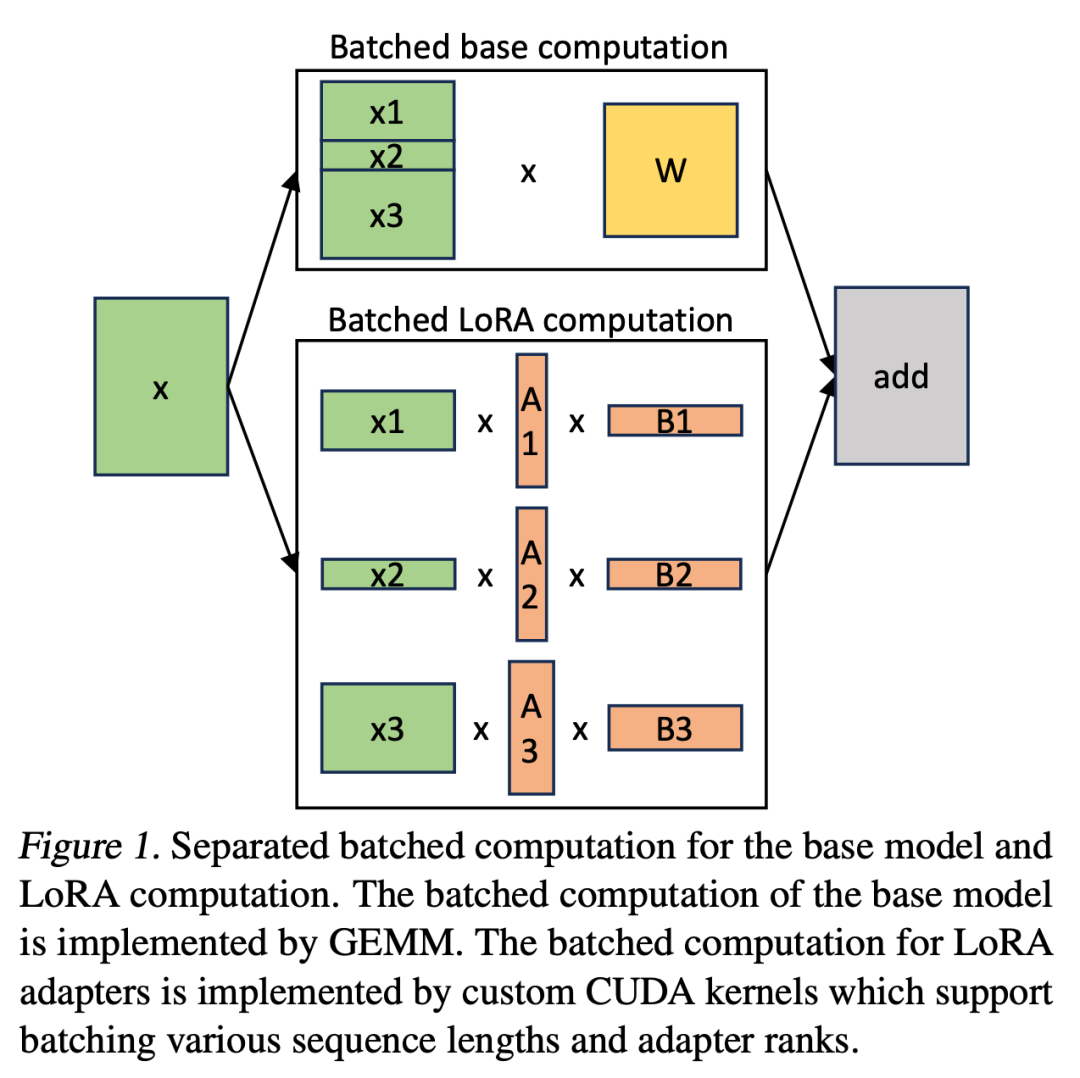

In S-LoRA, the computation of the base model is batched, and then all adapters’ additional xAB are executed separately using custom CUDA kernels. This process is illustrated in Figure 1. The researchers do not use padding and batch GEMM kernels from the BLAS library to compute LoRA, but instead implement custom CUDA kernels for more efficient computation without using padding, with implementation details discussed in Section 5.3.

If the LoRA adapters are stored in main memory, their number can be quite large, but the number of LoRA adapters required for the current running batch is manageable since the batch size is limited by GPU memory. To leverage this advantage, the researchers store all LoRA adapter cards in main memory and load only the required LoRA adapter cards into GPU RAM for the currently running batch during inference. In this case, the maximum number of adapters that can be served is limited by the size of the main memory. Figure 2 illustrates this process. Section 5 also discusses techniques for efficient memory management.

Memory Management

Serving multiple LoRA adapter cards simultaneously presents new challenges for memory management compared to serving a single base model. To support multiple adapters, S-LoRA stores them in main memory and dynamically loads the required adapter weights for the current running batch into GPU RAM.

In this process, there are two obvious challenges. The first is memory fragmentation, caused by dynamically loading and unloading adapter weights of different sizes. The second is the latency overhead brought by loading and unloading the adapters. To effectively address these challenges, the researchers proposed Unified Paging and overlapped I/O with computation through prefetching adapter weights.

Unified Paging

The researchers extend the idea of PagedAttention to Unified Paging, which manages not only the KV cache but also the adapter weights. Unified Paging uses a unified memory pool to jointly manage the KV cache and adapter weights. To achieve this, they first statically allocate a large buffer for the memory pool, using all available space except for the space occupied by base model weights and temporary activation tensors. Both KV cache and adapter weights are stored in a paged manner in the memory pool, with each page corresponding to an H vector. Thus, a KV cache tensor of sequence length S occupies S pages, while an R-level LoRA weight tensor occupies R pages. Figure 3 shows the layout of the memory pool, where KV cache and adapter weights are stored in an interleaved and non-contiguous manner. This approach significantly reduces fragmentation and ensures that different levels of adapter weights coexist with dynamic KV caches in a structured and systematic way.

Tensor Parallelism

Moreover, the researchers designed a novel tensor parallel strategy for batch LoRA inference to support multi-GPU inference of large Transformer models. Tensor parallelism is the most widely used parallel method because its single program multiple data model simplifies implementation and integration with existing systems. Tensor parallelism can reduce memory usage and latency per GPU when serving large models. In the context of this paper, the additional LoRA adapters introduce new weight matrices and matrix multiplications, necessitating the formulation of new partitioning strategies for these added items.

Evaluation

Finally, the researchers evaluate S-LoRA by serving Llama-7B/13B/30B/70B.

Results indicate that S-LoRA can serve thousands of LoRA adapters on a single or multiple GPUs with minimal overhead. Compared to the state-of-the-art parameter-efficient fine-tuning library Huggingface PEFT, S-LoRA can improve throughput by up to 30 times. Compared to high-throughput service systems vLLM that support LoRA services, S-LoRA can increase throughput by 4 times and increase the number of served adapters by several orders of magnitude.

For more research details, please refer to the original paper.

© THE END

For reprints, please contact this public account for authorization

Submissions or inquiries: [email protected]