1. Overview

With the surge of artificial intelligence and the widespread application of AI algorithms, deep learning has become a key focus of current AI research. In autonomous driving, environmental perception, sensor fusion, and control decision-making all involve deep learning to some extent, so the performance of an autonomous driving system depends directly on the quality of its deep learning algorithms. To handle massive amounts of parallel computation efficiently and reliably, the computing platform that runs these algorithms must provide sufficient acceleration: the AI chip needs adequate computing power while keeping other factors, such as power consumption, within limits. The higher the energy efficiency (performance per watt), the better. An AI chip typically relies on a CPU, for example an ARM core, for scheduling and control, while large-scale parallel computing is handled by GPUs, FPGAs, or ASICs. ASICs come in various architectures, including Google’s TPU, Horizon’s BPU, and the NPUs launched by Cambricon and Huawei.

I previously read an article about how AI chips such as GPUs process deep learning and I will summarize it here to reinforce my memory.

Both CPUs and GPUs are general-purpose chips, although in recent years GPUs have strengthened their parallel computing units specifically for AI algorithms. Alongside CPUs, chips such as GPUs, NPUs, and FPGAs therefore serve as hardware accelerators for AI algorithms, each with its own strengths in different application scenarios and deep learning algorithms. Let’s discuss them one by one.

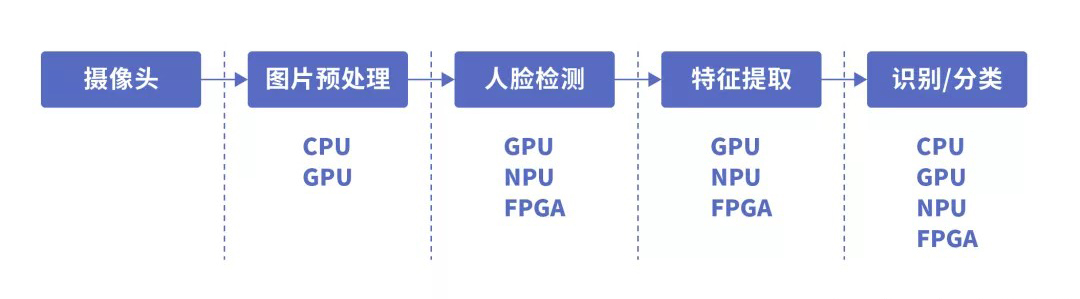

In autonomous driving, perception algorithms are the foundation for all downstream control processing. Environmental perception relies mainly on cameras and LiDAR, with a strong emphasis on camera-based visual processing. Taking face recognition as an example of a camera-based deep learning algorithm, the basic processing flow and the computing power required by each functional module are distributed as follows:

In a visual algorithm, the camera first captures images, which the CPU or GPU then preprocesses, making basic judgments and allocating tasks. A substantial amount of data computation and analysis follows, and this is where AI chips such as GPUs, NPUs, or FPGAs come into play. To see why, it helps to understand the structural differences between general-purpose chips like CPUs and AI computing chips like GPUs.

2. Comparison of CPU and GPU Principles/Architectures

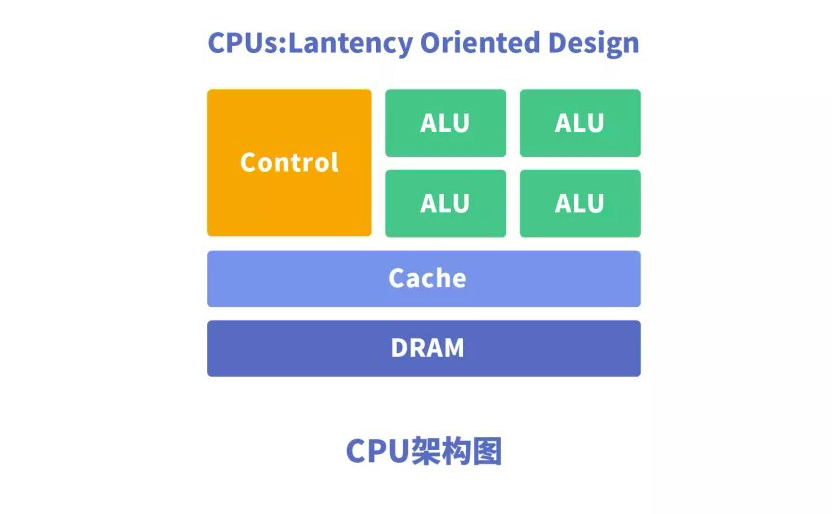

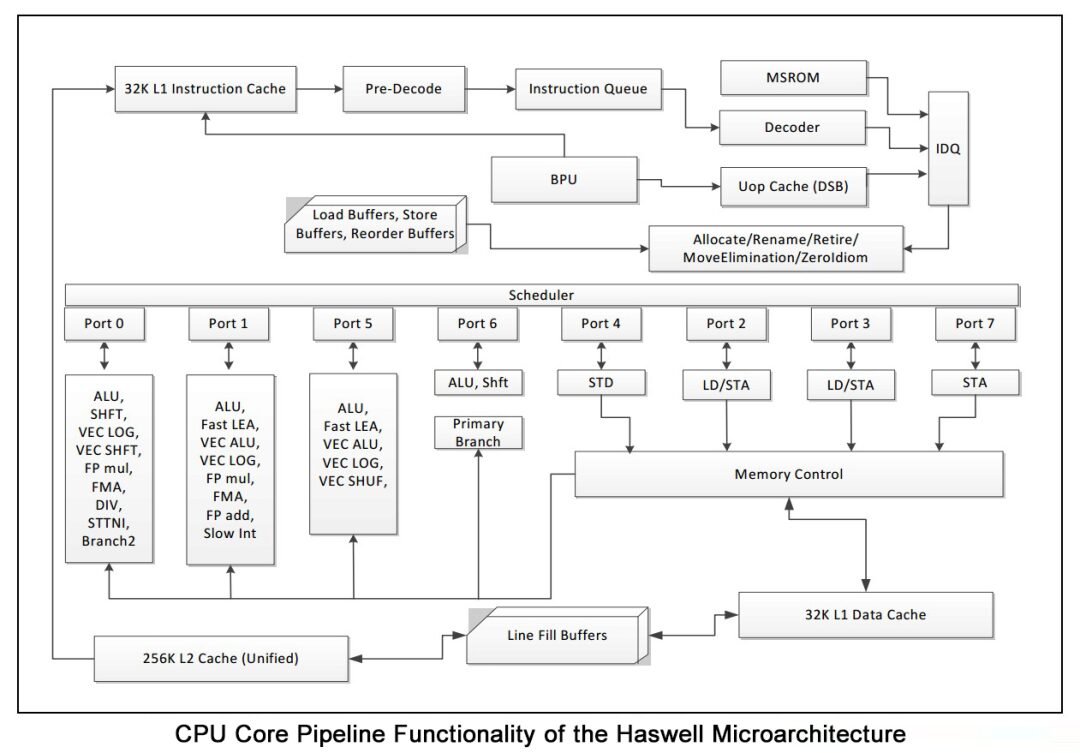

The CPU (Central Processing Unit) is a large-scale integrated circuit, and its main logical architecture includes the Control Unit, ALU (Arithmetic Logic Unit), and Cache, along with the data, control, and status buses that connect them. In simple terms, it consists of computation units, control units, and storage units.

The architecture diagram is shown below:

CPUs follow the von Neumann architecture, which is centered around storing programs/data and executing them in a serial order. Therefore, the architecture of the CPU requires a significant amount of space to accommodate storage units (Cache) and control units, whereas the computation unit (ALU) occupies a relatively small portion. This limitation makes the CPU less adept at large-scale parallel computing, making it more suitable for handling logical control.

While CPUs cannot perform extensive parallel calculations, GPUs can.

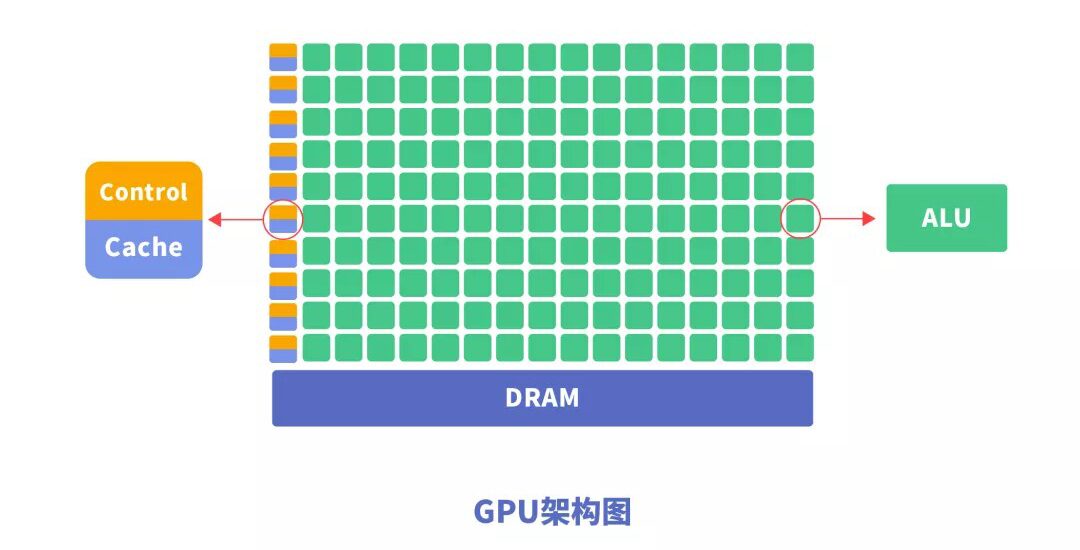

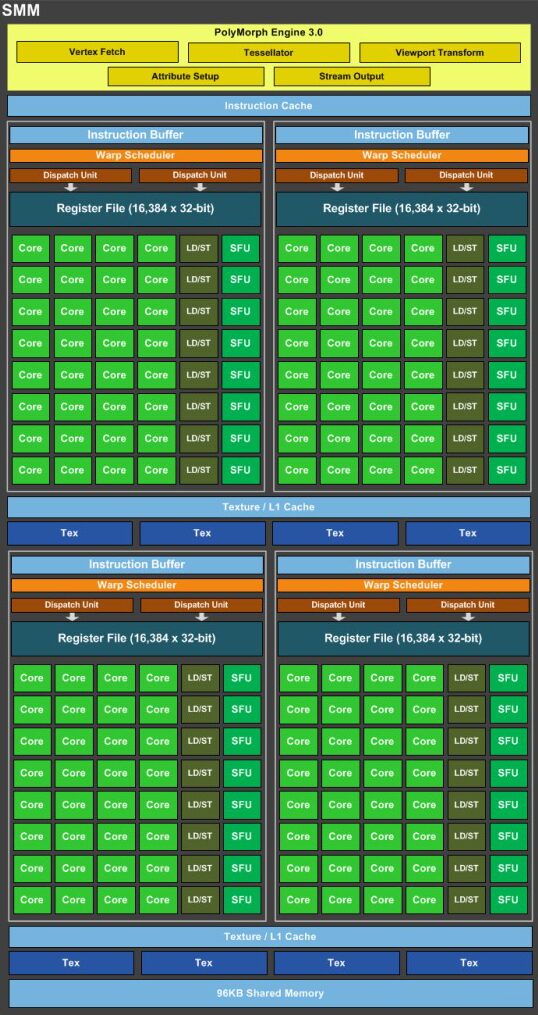

The GPU (Graphics Processing Unit) is a massively parallel computing architecture composed of many computation units. It originally split off from the CPU as a chip dedicated to the parallel computation involved in image processing, and it is optimized for handling many parallel computing tasks at once.

GPUs also contain basic computation units, control units, and storage units, but their architecture differs significantly from that of CPUs, as shown in the architecture diagram below.

On a CPU chip, ALUs occupy less than 20% of the area, whereas on a GPU chip they occupy more than 80%. GPUs therefore have far more ALUs available for data-parallel processing, which is why they possess such powerful parallel computing capabilities.

From a hardware architecture perspective, CPUs and GPUs appear similar: both contain memory, cache, ALUs, and control units (CUs), and both have many cores. However, CPU cores are relatively heavy. Each has few computation units (ALUs) and devotes much of its logic to complex control, branch prediction, out-of-order execution, and multi-stage pipelines. GPU cores, in contrast, are lighter and are optimized for data-parallel tasks with simple control logic, with a focus on maximizing the throughput of parallel programs.

In simple terms, CPU cores excel at completing multiple complex tasks, focusing on logic and serial programming; whereas GPU cores excel at completing tasks with simple control logic, focusing on computation and parallel processing.

Next, let’s take a look at a publicly released architecture diagram of NVIDIA GPUs.

The diagram shows an NVIDIA GPU with 4 SMs (streaming multiprocessors), each with 4*8=32 cores, for a total of 4*4*8=128 cores. By comparison, the microarchitecture diagram of a Haswell CPU core shows more than 20 types of execution units, such as ALU, FMA, FP add, and FP mul, each handling different instructions. Taking one of these types as an example, a CPU core has 2 such units, so a six-core CPU has 12. In terms of execution units, the ratio is therefore 128:12.
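The 128:12 comparison is simple arithmetic on the counts quoted above. A quick check, using only those figures (the variable names are illustrative and not from the original article):

```python
# Counts taken from the text above; names are illustrative only.
sms = 4                      # streaming multiprocessors in the GPU diagram
cores_per_sm = 4 * 8         # 32 cores per SM
gpu_cores = sms * cores_per_sm

cpu_cores = 6                # six-core CPU
units_per_cpu_core = 2       # comparable execution units per core, per the text
cpu_units = cpu_cores * units_per_cpu_core

print(f"GPU cores: {gpu_cores}, CPU execution units: {cpu_units}")
print(f"ratio: {gpu_cores}:{cpu_units} (about {gpu_cores / cpu_units:.1f}x)")
```

The ratio only compares unit counts; it says nothing about clock speed or per-unit capability.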

(For reference only, the cores in the GPU do not equate to the cores in the CPU structure diagram; they can only be compared to an “Execution Unit” in the CPU microarchitecture.)

The comparison between CPU and GPU can be metaphorically described as follows: the CPU is an excellent leader with multiple functions, excelling in “scheduling” rather than pure computation; while the GPU can be seen as an employee with “massive computing power” who follows the CPU’s scheduling.

In summary, GPUs have the following characteristics:

1) Multi-threading, providing a structural foundation for multi-core parallel computing, with a very high number of cores that can support massive data parallel computations, processing neural network data far more efficiently than CPUs.

2) Higher memory access speed.

3) Greater floating-point operation capability.

Thus, GPUs are more suitable for handling large training datasets, extensive matrices, and convolution operations in deep learning.

Although GPUs exhibit significant advantages in parallel computing capabilities, they cannot function independently and require the collaborative processing of CPUs for constructing neural network models and transferring data streams.

However, GPUs also have inherent drawbacks, namely high power consumption, large size, and expensive pricing.

The higher the performance of a GPU, the larger its size, the higher its power consumption, and the more expensive it becomes, making it unsuitable for small or mobile devices.

As a result, a specialized ASIC chip known as NPU (Neural Processing Unit) was born, characterized by its small size, low power consumption, high computational performance, and high efficiency.

3. NPU Principles and AI Computing Advantages

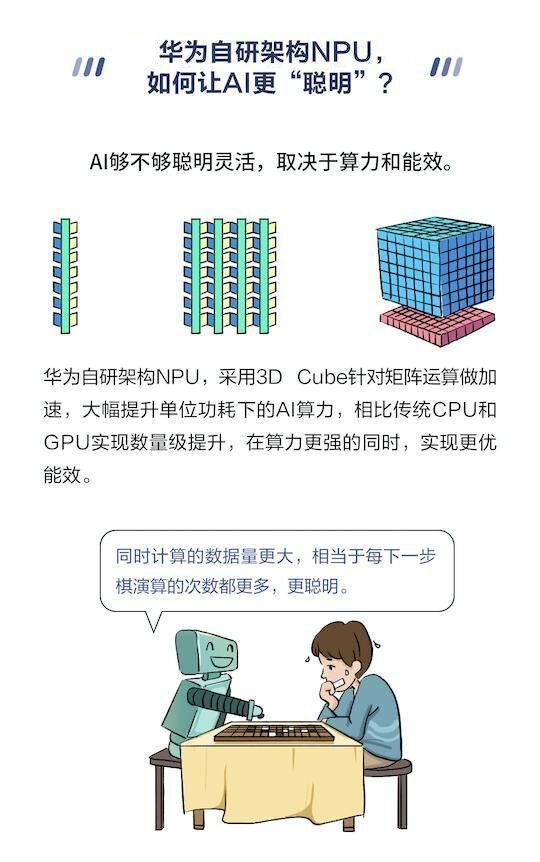

An NPU (Neural Processing Unit) is a processor specialized for neural networks. It works by simulating human neurons and synapses at the circuit level and processing large numbers of neurons and synapses directly with a deep learning instruction set, so that a single instruction completes the processing of a group of neurons. Compared to CPUs and GPUs, NPUs integrate storage and computation through synaptic weights, which improves operational efficiency.

In China, Cambricon was the earliest company to research NPUs, and Huawei’s Kirin 970 adopted Cambricon’s NPU architecture. Since 2018, however, Huawei has released its own Ascend chips, built on its self-developed Da Vinci architecture.

NPUs are built to mimic biological neural networks. Where CPUs and GPUs need thousands of instructions to process neurons, an NPU can do the same work with one or a few instructions, which gives it a significant advantage in deep learning processing efficiency.

Reportedly, at the same power consumption, an NPU delivers 118 times the performance of a GPU. (Data source: online reports)

Like GPUs, NPUs also require collaborative processing with CPUs to complete specific tasks.

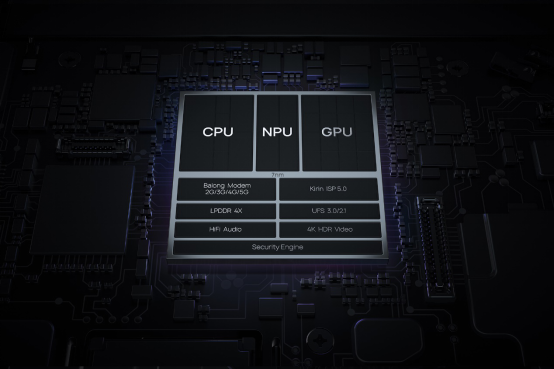

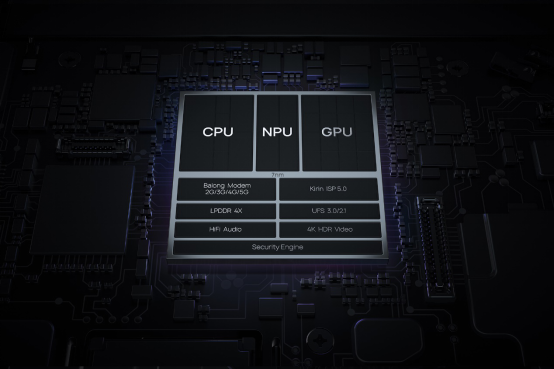

At this point, it is worth mentioning mobile phone chips, which are specialized SoCs for mobile devices that integrate CPUs, GPUs, and NPUs.

For example, the Kirin 990 5G version has a total of 8 CPU cores, consisting of two 2.86GHz A76 architecture big cores, two 2.36GHz A76 architecture medium cores, and four 1.95GHz A55 architecture small cores. In terms of GPU, it uses a 16-core Mali-G76 GPU, and for NPU, it integrates 2 big cores and 1 small core, using Huawei’s self-developed Da Vinci architecture NPU.

The CPU is responsible for smooth application switching on the phone, the GPU supports rapid loading of game graphics, while the NPU (Neural Processing Unit) is specifically responsible for implementing AI calculations and applications. In other words, the CPU handles computation and overall coordination, the GPU deals with graphics-related parts, and the NPU is responsible for AI-related tasks. The workflow is that any task must first go through the CPU, which then allocates it based on the nature of the task. If it involves graphics computation, it will be assigned to the GPU; if it involves AI computation, it will be assigned to the NPU.
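The routing described above can be sketched in a few lines. This is only an illustration of the idea that every task enters through the CPU and is forwarded according to its nature; the `Kind` enum, the `ROUTES` table, and `dispatch` are hypothetical names, not part of any real SoC scheduler.

```python
from enum import Enum

class Kind(Enum):
    GENERAL = "general"
    GRAPHICS = "graphics"
    AI = "ai"

# Hypothetical routing table: which unit the CPU hands each kind of work to.
ROUTES = {Kind.GENERAL: "CPU", Kind.GRAPHICS: "GPU", Kind.AI: "NPU"}

def dispatch(task_name: str, kind: Kind) -> str:
    """Every task enters through the CPU, which forwards it by kind."""
    target = ROUTES[kind]
    if target == "CPU":
        return f"{task_name}: CPU"
    return f"{task_name}: CPU -> {target}"

tasks = [
    ("switch app", Kind.GENERAL),
    ("render frame", Kind.GRAPHICS),
    ("recognize face", Kind.AI),
]
for name, kind in tasks:
    print(dispatch(name, kind))
```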

This article draws on Huawei’s official comic series “Understanding Chips Made Easy” to introduce Huawei’s self-developed Da Vinci architecture NPU. In contrast to traditional scalar and vector operation modes, the Da Vinci NPU uses a 3D Cube approach to accelerate matrix operations. This lets it process more data per unit of time and deliver more AI computing power per unit of power consumption, an order-of-magnitude improvement over traditional CPUs and GPUs and therefore better energy efficiency.
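To get a feel for why a cube-style unit helps with matrix operations, the sketch below counts idealized instructions for one matrix product under the three modes. The tile edge `width = 16` is an assumption made for illustration (the article gives no figure), and the counts ignore memory access, pipelining, and everything else a real chip must deal with.

```python
import math

def instruction_counts(m: int, k: int, n: int, width: int = 16) -> dict:
    """Idealized instruction counts for an (m x k) @ (k x n) matrix product.

    width is an assumed lane/tile edge; the article does not give one.
    """
    macs = m * k * n                               # total multiply-accumulates
    return {
        "scalar": macs,                            # one MAC per instruction
        "vector": m * n * math.ceil(k / width),    # `width` MACs per instruction
        "cube": math.ceil(m / width) * math.ceil(k / width) * math.ceil(n / width),
    }

counts = instruction_counts(64, 64, 64)
for mode, count in counts.items():
    print(f"{mode:>6}: {count} instructions")
```

Under these assumptions, each cube instruction covers a `width` x `width` x `width` block of multiply-accumulates, which is where the large reduction in instruction count comes from.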

It can be seen that in mobile SoCs, the CPU is a crucial component. Similarly, in the autonomous driving industry, GPUs and NPUs need to collaborate with CPUs to unleash their performance advantages.

4. How CPUs Assist GPUs in Acceleration

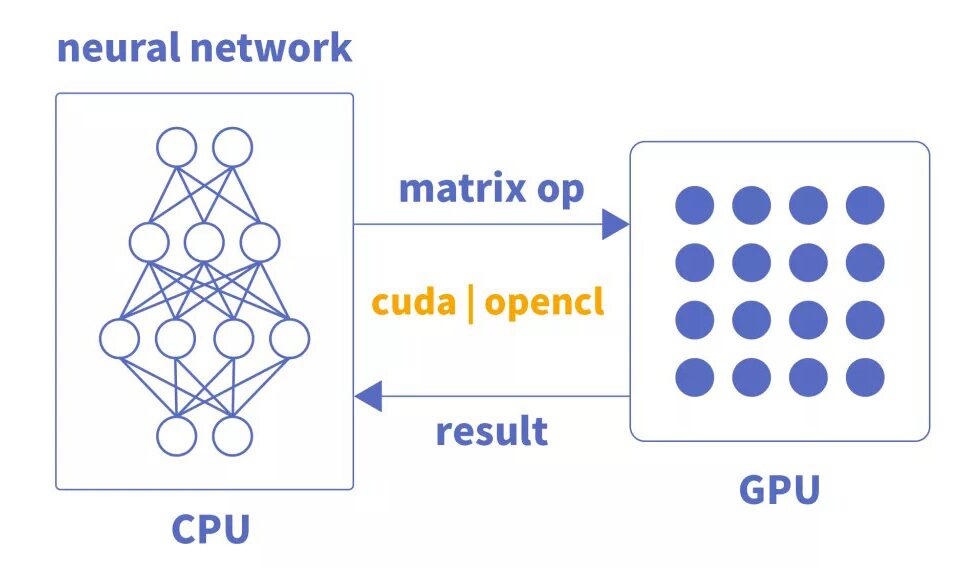

Currently, GPUs are primarily used for parallel matrix multiplication and addition operations, while the construction of neural network models and data flow transmission still occurs on CPUs.

The CPU loads the weight data, constructs the neural network model according to the code, and passes each layer’s matrix operations to the GPU for parallel computation through frameworks such as CUDA or OpenCL, then receives the results. The CPU then schedules the matrix computations for the next layer of neurons, and so on until the output layer has been computed and the final result is obtained.

CPU and GPU interaction process:

1) Retrieve GPU information and configure GPU ID

2) Load neuron parameters to GPU

3) GPU accelerates neural network calculations

4) Receive GPU computation results
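The four steps above can be sketched with a CPU-only mock. `MockGPU`, `load_weights`, and `matvec` are hypothetical names invented for this illustration; a real program would issue the per-layer work through CUDA or OpenCL instead. The point is the control flow: the host loops over layers and hands each layer's matrix work to the device.

```python
class MockGPU:
    """Stand-in for a GPU; a real program would call CUDA or OpenCL here."""

    def __init__(self, device_id: int):
        self.device_id = device_id
        self.weights = []            # "device memory" holding layer weights

    def load_weights(self, layers):
        # Copy each weight matrix from the host into device memory.
        self.weights = [list(map(list, w)) for w in layers]

    def matvec(self, layer_index, x):
        # On real hardware each row's dot product would run in parallel.
        w = self.weights[layer_index]
        return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

def relu(v):
    return [max(0.0, e) for e in v]

def forward(gpu, x):
    # The CPU walks the layers and schedules one device call per layer.
    for i in range(len(gpu.weights)):
        x = relu(gpu.matvec(i, x))   # the result returns to the host each time
    return x

gpu = MockGPU(device_id=0)                       # 1) pick and configure the GPU
gpu.load_weights([                               # 2) load neuron parameters
    [[1.0, -1.0], [0.5, 0.5]],
    [[1.0, 1.0]],
])
output = forward(gpu, [2.0, 1.0])                # 3) GPU computes each layer
print(output)                                    # 4) host receives the result
```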

5. How CPUs Assist NPUs in Acceleration

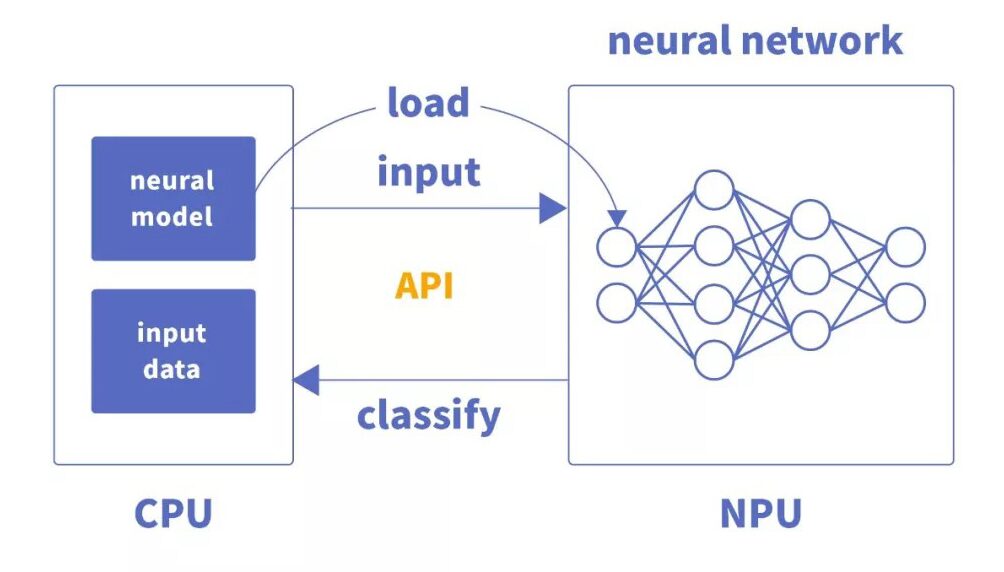

Unlike GPU acceleration, NPU acceleration does not write the results of each layer of neurons back to main memory. Instead, it passes them directly to the next layer for continued computation, following the connections of the neural network. This significantly improves both computational performance and power consumption.
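A rough way to see the benefit is to count how often activations cross the main-memory boundary during one inference. The model below is deliberately idealized and is an assumption of this sketch, not a measurement: it ignores weight traffic and assumes every layer's activations fit on chip.

```python
def main_memory_transfers(num_layers: int, keep_on_chip: bool) -> int:
    """Count activation transfers to or from main memory for one inference.

    Idealized model: weights are ignored and every layer's activations fit on chip.
    """
    if keep_on_chip:
        return 2                  # read the input once, write the output once
    return 2 * num_layers         # each layer reads its input and writes its output

layers = 50
per_layer = main_memory_transfers(layers, keep_on_chip=False)
fused = main_memory_transfers(layers, keep_on_chip=True)
print(f"layer-by-layer: {per_layer} transfers, on-chip chaining: {fused} transfers")
```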

The CPU loads the compiled neural network model file and weight files onto the dedicated chip, completing hardware programming.

Throughout the operation, the CPU mainly facilitates data loading and controls the business process. Its interaction process is as follows:

1) Open the dedicated NPU chip device

2) Pass in the model file to obtain the model task

3) Retrieve the input and output information of the task

4) Copy input data to model memory

5) Run the model to obtain output data
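The five steps above map naturally onto a small host-side program. The sketch below uses a mock in place of a vendor runtime: `MockNPU`, `MockTask`, and all of their methods are hypothetical and do not correspond to any real NPU SDK, and the "model" is just a scale factor standing in for a compiled network.

```python
class MockNPU:
    """Hypothetical stand-in for a vendor NPU runtime; not a real API."""

    def __init__(self):
        self.is_open = False

    def open(self):
        self.is_open = True

    def load_model(self, model):
        if not self.is_open:
            raise RuntimeError("device not open")
        return MockTask(model)

class MockTask:
    def __init__(self, model):
        self.scale = model["scale"]               # stands in for compiled weights
        self.input_size = model["input_size"]
        self.input_buffer = [0.0] * self.input_size

    def io_info(self):
        return {"input_size": self.input_size, "output_size": self.input_size}

    def copy_input(self, data):
        if len(data) != self.input_size:
            raise ValueError("input size mismatch")
        self.input_buffer[:] = data

    def run(self):
        # A real NPU would execute the whole network on chip here.
        return [self.scale * v for v in self.input_buffer]

npu = MockNPU()
npu.open()                                              # 1) open the device
task = npu.load_model({"scale": 2.0, "input_size": 3})  # 2) model file -> task
info = task.io_info()                                   # 3) query input/output info
task.copy_input([1.0, 2.0, 3.0])                        # 4) copy input to model memory
result = task.run()                                     # 5) run and fetch output
print(info, result)
```

Note that the host issues a single `run` call for the whole model, in contrast to the per-layer scheduling used with a GPU.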

In addition to NPUs, FPGAs also compete in terms of power consumption and computational capability.

6. FPGA Principles and AI Computing Advantages

Having discussed GPUs and NPUs along with their leader, the CPU, let’s take a look at another popular chip: FPGA.

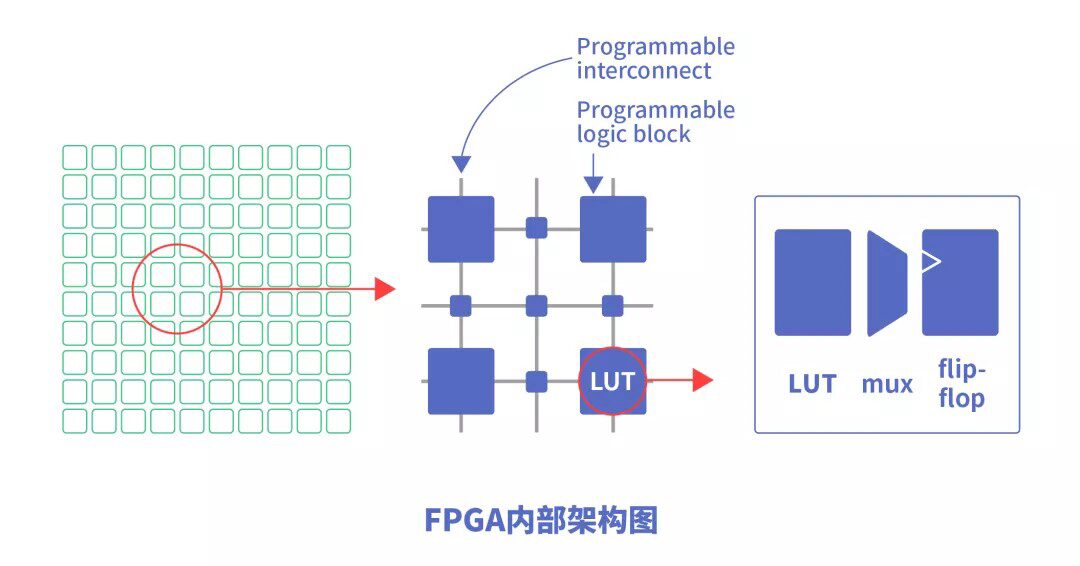

FPGA (Field-Programmable Gate Array) is a type of chip that can be repeatedly programmed according to user needs. Compared to CPUs and GPUs, it features high performance, low power consumption, and hardware programmability.

The basic principle of an FPGA is to integrate a large number of basic digital logic gates and memory on the chip and let users define the connections between them by loading (“burning”) an FPGA configuration file. This is not a one-time process; the chip can be rewritten and reconfigured repeatedly.

The internal structure of FPGA is shown in the diagram below:

FPGA’s programmable logic blocks contain many functional units, composed of LUTs (Look-up Tables) and flip-flops. FPGA directly implements user algorithms through these gates without the need for instruction system translation, resulting in higher execution efficiency.
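A look-up table is easy to model in software: "programming" it means storing a truth table, and evaluating it means using the input bits as an address. The sketch below is a simplified illustration; `make_lut` and `lut_eval` are names made up here, and real FPGA LUTs are configured through vendor toolchains rather than Python.

```python
from itertools import product

def make_lut(func, num_inputs: int):
    """'Program' a LUT by storing the truth table of func, one bit per input pattern."""
    return [int(func(*bits)) for bits in product((0, 1), repeat=num_inputs)]

def lut_eval(table, *bits):
    """Evaluate the LUT: the input bits form the address of the stored output bit."""
    address = 0
    for b in bits:
        address = (address << 1) | b
    return table[address]

# Configure a 3-input LUT as the carry-out of a full adder (majority function).
carry_lut = make_lut(lambda a, b, c: (a & b) | (a & c) | (b & c), 3)
print(carry_lut)
print(lut_eval(carry_lut, 1, 0, 1))
```

Storing a different truth table in the same structure, for example `make_lut(lambda a, b, c: a ^ b ^ c, 3)` for the sum bit, changes the function without changing the hardware, which is the essence of reconfigurability.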

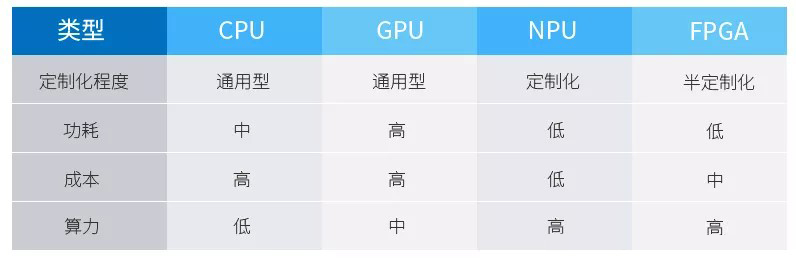

Let’s compare the characteristics of CPUs, GPUs, NPUs, and FPGAs:

7. Summary of Chip Architecture Characteristics

CPU: About 70% of the transistors are used to build cache, some go to control units, and only a few to computation units, which makes the CPU suited to logic and control.

GPU: Processing follows a single-instruction, multiple-data model. Most transistors are used to build computation units, each of low computational complexity, which makes the GPU suited to large-scale parallel computing. It is primarily applied in big data, backend servers, and image processing. GPUs excel at accelerating image-related computation, but they cannot operate independently and must be controlled by a CPU. CPUs can operate independently and handle complex logic and varied data types, and when a large amount of data needs uniform processing they can call on GPUs for parallel computing.

NPU: NPUs simulate neurons at the circuit level and integrate storage and computation through synaptic weights, so a single instruction completes the processing of a group of neurons, which improves operational efficiency. They are primarily applied in communications, big data, and image processing. As a type of application-specific integrated circuit (ASIC), an NPU is a custom chip designed to meet specific requirements. NPUs have advantages in power consumption, reliability, and size, particularly in high-performance, low-power mobile applications.

FPGA: FPGAs offer programmable logic, high computational efficiency, and closer access to low-level I/O, and they achieve logic editability through redundant transistors and connections. They essentially operate without instructions and do not require shared memory, so their computational efficiency is higher than that of CPUs and GPUs. They are primarily applied in smartphones, portable devices, and automobiles. Implementing complex algorithms on FPGAs can be challenging, and their relatively high cost is a drawback. Compared with a GPU, an FPGA does not route data through the memory and control logic used for storing and reading, which makes it faster. For the same reason it consumes less power, although its overall computational capacity is smaller.

As the most general-purpose component, the CPU collaborates with other processors to complete different tasks. GPUs are suited to training on large datasets and to the matrix and convolution operations of deep learning. NPUs and FPGAs have significant advantages in performance, area, and power consumption, which lets them accelerate neural network computation more effectively. FPGAs, however, must be developed in hardware description languages, so their development threshold is higher than that of GPUs and NPUs.

ASIC chips are fully customized chips that are suitable for artificial intelligence in the long term. Many companies working on AI algorithms are entering the market from this perspective. As algorithm complexity increases, a dedicated chip architecture is increasingly necessary to correspond with it. ASICs customized based on AI algorithms show promising development prospects. Brain-like chips represent the ultimate development model of artificial intelligence, but commercialization is still far away.

It can be said that every type of processor has its advantages and disadvantages, and in different application scenarios, it is essential to weigh the pros and cons based on demands and choose the appropriate chip.
