Abstract:

This article is authorized for publication by Zosi Automotive Research, authored by Zhou Yanwu.

Following last week’s analysis of Toyota’s mass-produced LiDAR, this article continues with Toyota’s autonomous driving computing system. The main system is the ADS ECU, with the ADX as a backup, and a second battery system is added as well. For positioning, it combines absolute positioning based on GPS with QZSS and relative positioning based on long-range cameras. If you want to know more, don’t miss this article.

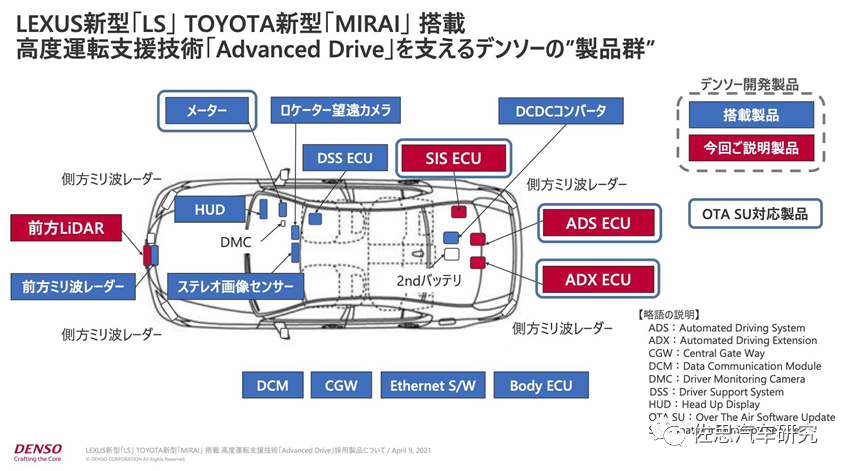

On April 12, 2021, Toyota launched the new Mirai and the new Lexus LS in the Japanese market, both equipped with the Advanced Drive system, which is marketed as Level 2 autonomous driving. The former starts at 8.6 million yen (approximately 515,000 RMB), the latter at 17.94 million yen (approximately 1,075,000 RMB). Such a price would not be high for a Mercedes S-Class, but it is quite high for a Lexus.

Although it is labeled Level 2, the system is in fact significantly superior to Tesla’s FSD, and calling it Level 4 is not an exaggeration. Honda had earlier launched the world’s first Level 3 vehicle, but produced only 100 units, which were available for rent only. Only Toyota has a truly mass-produced vehicle, but the price is high and sales are likely to be low, which Toyota is surely aware of. Annual sales are estimated at only a few thousand units, or even a few hundred. That could be a good thing, since it allows Toyota to use semiconductor components that are not yet in volume production.

Many people may doubt that Toyota’s Level 2 is being undersold, but on closer examination it becomes clear that it is essentially Level 4, or at least that Toyota’s hardware already meets the requirements for Level 4. The most significant difference between Level 4 and Level 3 is that Level 4 is fail-operational: it has two completely different autonomous driving computing systems, one serving as a backup so that autonomous driving continues even when the main system fails. Level 3, by contrast, is fail-safe: when the system fails, a human must take over, and the system only has to keep the vehicle safe during the failure.

Companies like Tesla or NVIDIA, which install two identical main chips, only increase computing power and do not provide a real backup. Whatever caused the main system to fail can equally affect an identical copy, so only a system completely different from the main one is a true backup that achieves real fail-operational behavior.

Toyota’s autonomous driving system, as shown in the image above, backs up not only the computing system but also the power supply, adding a second battery system. The main system is the ADS ECU, with the ADX as a backup. Both run simultaneously: the ADS handles the majority of tasks and ensures basic safety, while the ADX enhances the system, making the vehicle not only safe but also intelligent.

This setup adds approximately 660,000 yen in hardware costs, about 40,000 RMB. In the future Toyota may offer it as an option on other vehicles, at an estimated option price of 200,000 RMB. Combined with the dual-camera (stereo) system already standard on the Lexus LS 500h, it forms a complete Level 4 autonomous driving system. The forward main LiDAR is estimated to be the most expensive component, at around 10,000 to 15,000 RMB; the two side LiDARs together cost about 5,000 to 8,000 RMB, and the three ECUs an estimated 12,000 to 14,000 RMB. The software cost is difficult to estimate and will depend on sales volume.

In terms of sensors, the main forward LiDAR is developed by Denso. Its design is similar to Valeo’s Scala, but it cleverly converts a unidirectional design into a bidirectional one. Denso’s unit may be at the level of the second-generation Scala, with 16 lines, or possibly higher, with 32 lines. The side LiDAR is Continental’s HFL-110 Flash LiDAR. The core dual-camera system is also developed by Denso. Millimeter-wave radar is still necessary: there are five in total, all developed and produced by Denso.

Toyota’s supply chain aims for vertical integration, producing as many components in-house as possible to ensure good cost-performance and rapid product iteration while keeping the supply chain stable. In contrast, manufacturers in Europe and China tend to outsource as much as possible.

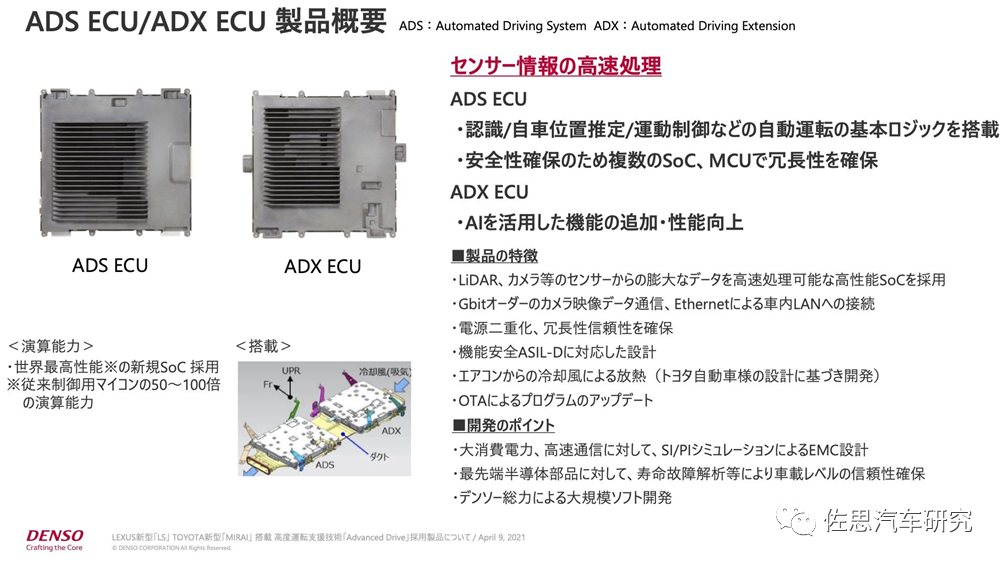

The ADS uses high-grade automotive components and employs traditional, non-deep-learning algorithms, which are deterministic and interpretable. The ADX primarily uses deep learning algorithms, but deep learning lacks determinism and interpretability, which makes it impossible to pass functional safety certification. However, to make the vehicle intelligent rather than merely safe, deep learning is essential. The ADX can handle 90% of autonomous driving tasks but cannot guarantee 100% safety, while the ADS ensures safety. Combining the two provides intelligence while still keeping autonomous driving running normally if the main system fails, although this period of autonomous driving cannot last too long. This is where Toyota differs from most manufacturers: Toyota places greater emphasis on safety, while others focus more on intelligence.

In the ADS, Toyota uses a Renesas SoC as the main chip, while the ADX uses NVIDIA’s Xavier. Why Renesas? First, Renesas is a traditional automotive-grade chip manufacturer that places greater emphasis on automotive standards and safety. Second, Renesas has a close relationship with Toyota, and the autonomous driving chip was developed in collaboration with Toyota.
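The interplay of a deterministic channel and a deep learning channel can be illustrated with a small sketch. This is not Toyota’s arbitration logic, which the source does not describe. The `Plan` type, the `arbitrate` function, the speed-ceiling check, and the 10-second fallback limit are all hypothetical, chosen only to show the idea of two diverse channels with a time-limited fallback.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Plan:
    source: str          # which channel produced the plan (hypothetical label)
    target_speed: float  # requested speed in m/s


def arbitrate(ads_plan: Optional[Plan],
              adx_plan: Optional[Plan],
              fallback_elapsed_s: float,
              max_fallback_s: float = 10.0) -> Plan:
    """Pick a plan from two diverse channels; None means that channel has failed.

    Assumption: the deterministic channel's target speed acts as a safety ceiling.
    """
    if ads_plan is not None and adx_plan is not None:
        # Both healthy: accept the learned plan only inside the deterministic limit.
        if adx_plan.target_speed <= ads_plan.target_speed:
            return adx_plan
        return ads_plan
    if ads_plan is not None:
        # Learned channel lost: continue on the deterministic channel alone.
        return ads_plan
    if adx_plan is not None and fallback_elapsed_s < max_fallback_s:
        # Deterministic channel lost: keep driving on the other one, but only briefly.
        return adx_plan
    # Nothing trustworthy left, or the fallback window has expired: stop.
    return Plan("stop", 0.0)


print(arbitrate(Plan("ADS", 20.0), Plan("ADX", 18.0), 0.0).source)
print(arbitrate(None, Plan("ADX", 18.0), 12.0).source)
```

The time limit in the sketch mirrors the remark above that the period of autonomous driving after a failure cannot last too long.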

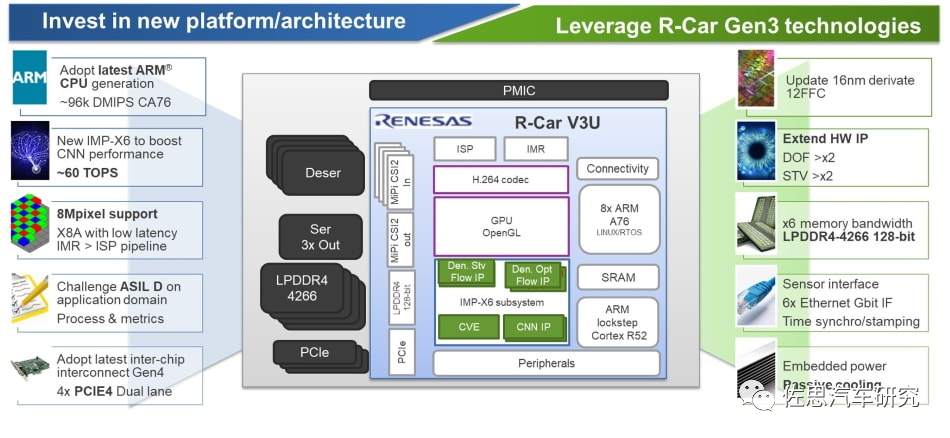

The table above shows Renesas’ shareholder list. Denso, which is controlled by Toyota (it was formerly Toyota’s electronics and thermal exchange division), is the second-largest shareholder. Initially Denso held only 0.5% of the shares, but it later invested billions of dollars to raise its stake to 8.95%. Toyota itself holds 2.92%. The largest shareholder is the Innovation Network Corporation of Japan (INCJ), a government-backed investment company established by the Japanese government and 19 private enterprises, Toyota among them. The Ministry of Finance holds 95.3% of INCJ’s shares, which makes Renesas a quasi-state-owned enterprise in Japan. Early on, INCJ held over 50% of Renesas’ shares; it later transferred about 8% to Denso. In 2017, Toyota, Renesas, and Denso established an autonomous driving cooperation alliance.

Toyota’s ADS may use Renesas’ latest R-CAR V3U. Some may object that, according to the schedule, the R-CAR V3U will not enter mass production until 2023. That milestone, however, refers to a scale of over 10,000 units per month. Given the small shipment volume of Toyota’s autonomous driving system and the close cooperation between Toyota and Renesas, the ADS could well use the R-CAR V3U, since a few hundred units per month before large-scale production would be sufficient. Incidentally, China’s FAW Hongqi has also decided to use the R-CAR V3U as the main chip for its autonomous driving system, but mass production will not begin until the second half of 2023. The Volkswagen Group’s autonomous driving system is also likely to use the R-CAR V3U, as the two parties already cooperate closely in the cockpit domain, with Volkswagen Germany and SAIC Volkswagen having set up joint laboratories with Renesas.

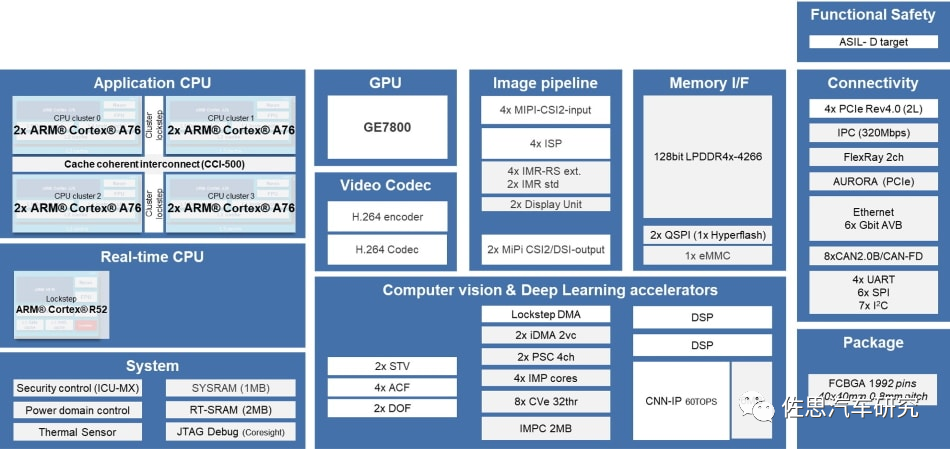

The internal architecture of the V3U is shown in the image above. It features eight Cortex-A76 cores, but it is not a simple stack of cores like Tesla’s 12 Cortex-A72s: it uses ARM’s CoreLink CCI-500, which provides full cache coherency between processor clusters such as Cortex-A76 and Cortex-A55 and enables big.LITTLE processing. It also provides I/O coherency for other devices (such as Mali GPUs, network interfaces, and accelerators). The real-time lockstep CPU is ARM’s Cortex-R52, which NVIDIA’s latest 1000 TOPS chip, Atlan, also uses.

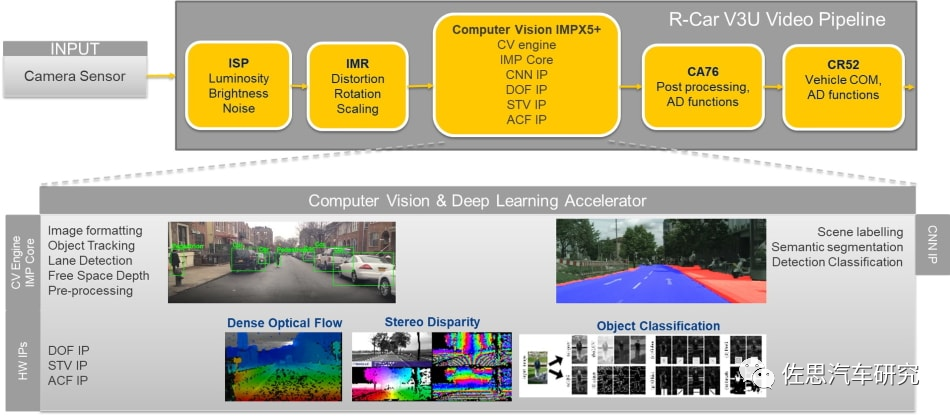

The V3U visual pipeline is shown in the image above. The V3U has many hardware-accelerated computer vision modules, including stereo disparity, dense optical flow, CNN, DOF, STV, and ACF. The functions covered include image formatting, object tracking, lane detection, free-space depth, scene labeling, semantic segmentation, and detection and classification, similar to Mobileye’s fully closed algorithm stack.

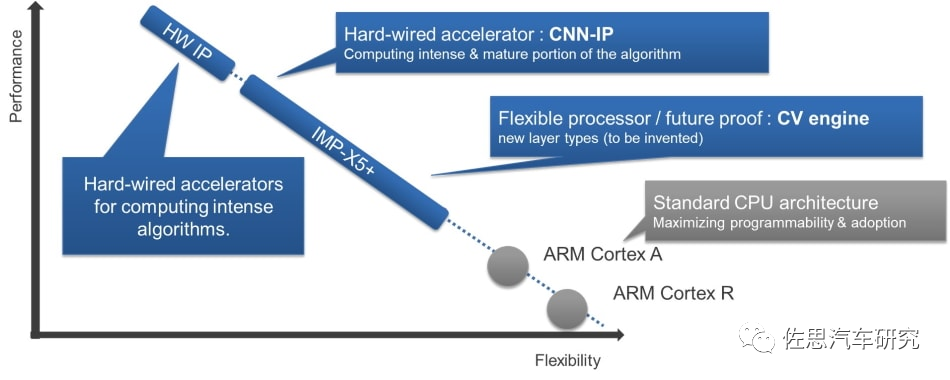

Image processing is primarily handled by the IMP-X5+, which should offer more flexibility than Mobileye. Because the chip is so narrowly targeted, and to save cost and reduce power consumption, Renesas did not use an expensive GPU but simply added a low-power one: Imagination Technologies’ PowerVR GE7400, which has one shader cluster and 32 ALU cores and delivers only 38.4 GFLOPS at 600 MHz.
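The 38.4 GFLOPS figure can be reproduced with simple arithmetic. The sketch below assumes each ALU core retires one fused multiply-add per cycle, counted as 2 floating-point operations. That factor is an assumption, not something the source states, and the function name `peak_gflops` is made up for illustration.

```python
def peak_gflops(alu_cores: int, clock_mhz: float, flops_per_alu_per_cycle: int = 2) -> float:
    """Theoretical peak throughput in GFLOPS.

    Assumption: one fused multiply-add per ALU per cycle, counted as 2 FLOPs.
    """
    return alu_cores * flops_per_alu_per_cycle * clock_mhz / 1000.0


# 32 ALU cores at 600 MHz, as quoted for the PowerVR GE7400 above
print(round(peak_gflops(32, 600), 1))  # 38.4
```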

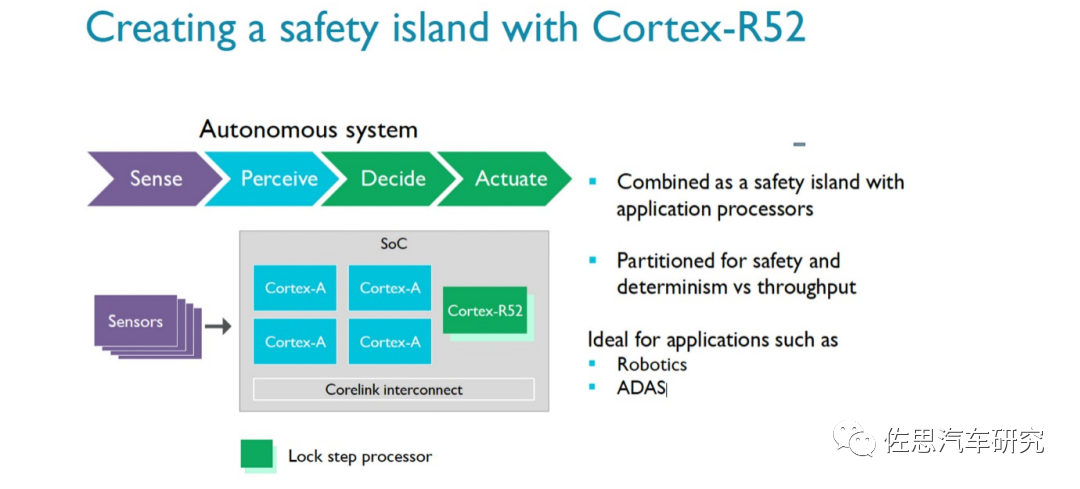

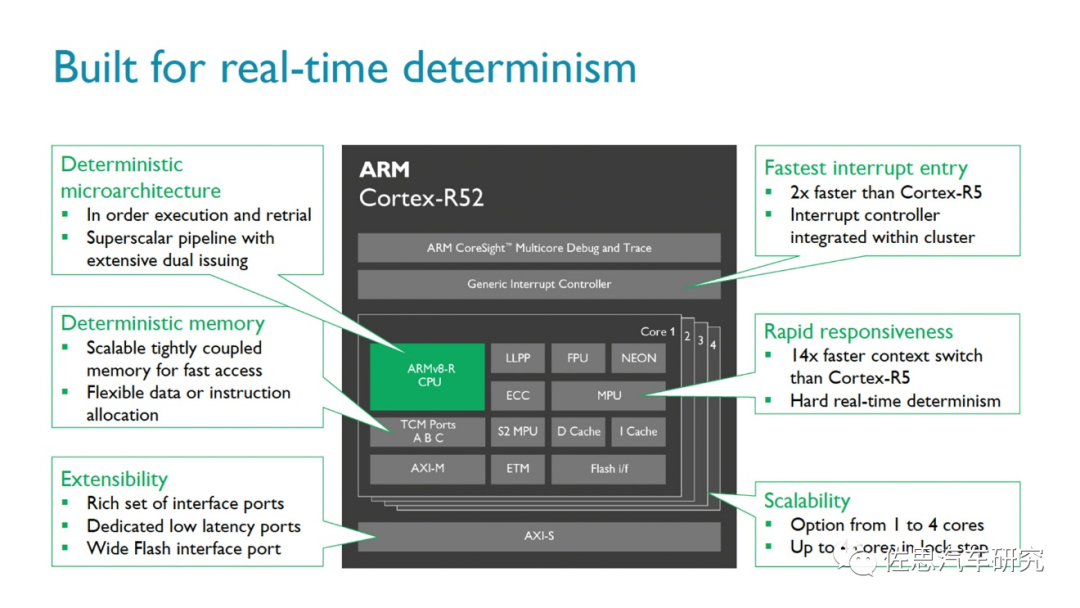

The Cortex-R52 is an ARM core released in 2016 specifically for the autonomous driving safety market. It supports up to 4-core lockstep and offers a 35% performance improvement over the Cortex-R5, with context switching 14 times faster and entry preemption 2 times faster, and it supports hardware virtualization. According to ARM, simple central control systems can use the Cortex-R52 directly, but for industrial robots and ADAS (Advanced Driver Assistance Systems) it is recommended to pair it with Cortex-A cores, Mali GPUs, and so on to increase overall compute. The Cortex-R52 has also passed multiple safety standard certifications, including IEC 61508 (industrial), ISO 26262 (automotive), IEC 60601 (medical), EN 50129 (railway), and RTCA DO-254 (avionics). In March 2021, the R52+ was launched as well, supporting up to 8-core lockstep.

The R52 provides three major functions:

• Software isolation: Hardware-enforced software isolation means that software functions do not interfere with each other. For safety-related tasks, this also means that less code needs certification, saving time, cost, and effort.

• Support for multiple operating systems: With virtualization, developers can run multiple operating systems on a single CPU to consolidate applications. This makes it easier to add functions without increasing the number of electronic control units.

• Real-time performance: The high-performance multi-core cluster of the Cortex-R52+ provides real-time response for deterministic systems, with the lowest latency among all Cortex-R products.
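The lockstep idea mentioned above can be sketched in a few lines. Real lockstep is implemented in hardware and compares the cores cycle by cycle, so the following is only a software analogy. The functions `run_in_lockstep`, `healthy_core`, and `faulty_core` are hypothetical names for illustration.

```python
from typing import Callable, Tuple


def run_in_lockstep(core_a: Callable[[int], int],
                    core_b: Callable[[int], int],
                    value: int) -> Tuple[int, bool]:
    """Run the same computation on two cores and report whether the outputs agree."""
    out_a = core_a(value)
    out_b = core_b(value)
    return out_a, out_a == out_b


def healthy_core(x: int) -> int:
    return 3 * x + 1


def faulty_core(x: int) -> int:
    # Simulate a single bit flip in the result of an otherwise identical core.
    return healthy_core(x) ^ 0b100


print(run_in_lockstep(healthy_core, healthy_core, 7))  # (22, True)
print(run_in_lockstep(healthy_core, faulty_core, 7))   # (22, False)
```

A mismatch does not say which core is wrong, only that the result cannot be trusted, which is enough for a safety system to react.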

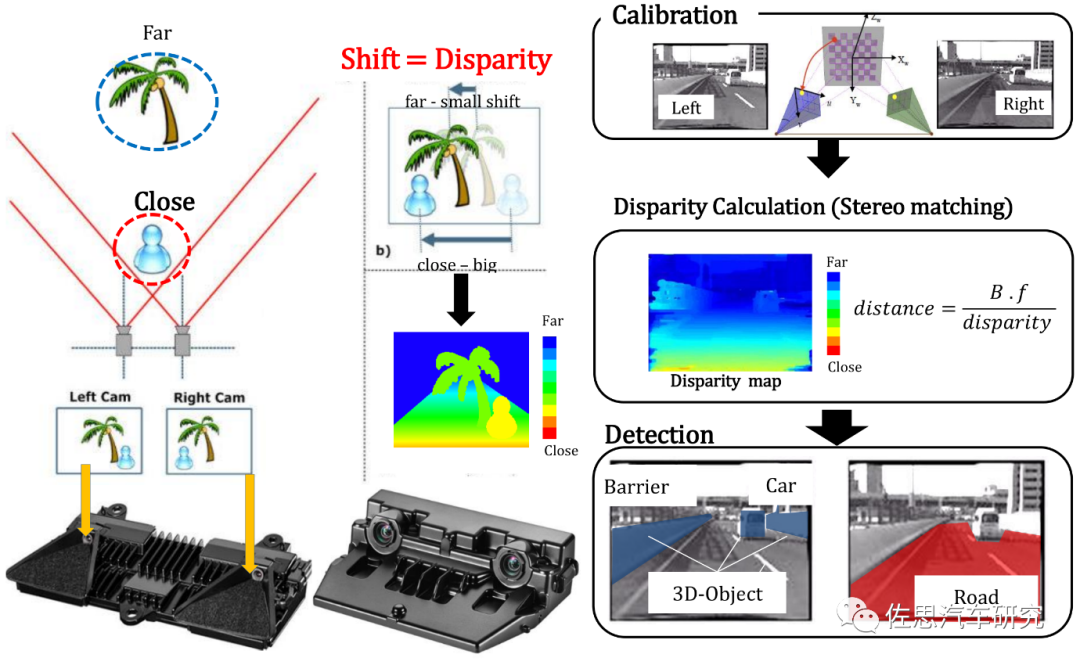

For cost reasons, Renesas did not use cutting-edge 7nm technology but opted for a 12nm process, moving from the 16nm FinFET process of the Renesas R-CAR H3 to a 12nm FFC process, which keeps one-time expenses minimal. In terms of AI performance, however, it is not inferior to 5nm chips: Renesas claims that the V3U achieves an astonishing energy efficiency of 13.8 TOPS/W, six times that of the top-tier EyeQ6.

Like Mercedes, Toyota has always regarded stereo vision as the core of autonomous driving. Other supporters of stereo vision include Denso, Bosch, Jaguar Land Rover, Subaru, Honda, Continental Automotive, Huawei, DJI, Suzuki, and the electric vehicle brand Rivian, which is backed by Amazon and Ford. Both the Renesas V3H and V3U have dedicated hardware for stereo vision, and NVIDIA’s Xavier has this capability as well.

Compared to monocular and trinocular 2D systems, the greatest advantage of stereo vision is that it can obtain 3D information about targets without needing to recognize them, which makes it better at tracking targets and predicting their position. The fatal flaw of monocular and trinocular cameras is that target recognition (classification) and detection are inseparable: recognition must occur first before any information about the target can be obtained. Because deep learning works by enumeration, missed detections are inevitable, which means the 3D model is incomplete. The cognitive range of deep learning comes from its dataset, and the dataset is limited, so deep learning is prone to missed detections and to ignoring obstacles ahead. If a target cannot be recognized, the system assumes that there is no obstacle ahead and does not decelerate, which is the cause of many Tesla accidents.

Even if Tesla’s HW4.0 (FSD Beta) has high computing power, it is still Level 2 and cannot avoid missed detections. Moreover, FSD Beta still has limitations in recognizing and alerting to objects: it is unable to identify static objects, vehicles that emerge suddenly, construction zones, and complex intersections. Another drawback of monocular and trinocular systems is their slow response to static targets.

Most stereo vision algorithms are traditional geometric algorithms rather than deep learning algorithms, so they are deterministic and interpretable. The computing power figures currently quoted for autonomous driving all refer to deep learning convolution; however high they are, they have nothing to do with safety. For stereo vision, the heaviest computational load is stereo matching, which is generally done in hardware.

The disadvantages of stereo vision include difficult calibration, which takes a long period of exploration to master. There is a severe shortage of algorithm talent and the ecosystem is thin, unlike monocular and trinocular systems, for which free resources are abundant. Stereo vision requires cultivating talent internally, a process that can take over 10 years. This is not something manufacturers like Tesla can manage. BMW has struggled too: it followed Mercedes in using stereo vision but with poor results, worse than monocular and trinocular systems, and it has recently all but abandoned the stereo vision route.
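The reason stereo vision needs no recognition step is that depth follows directly from geometry. For a rectified camera pair, depth is Z = f · B / d, where f is the focal length in pixels, B the baseline between the cameras, and d the disparity of a matched point. The sketch below uses made-up camera parameters (1000 px focal length, 0.5 m baseline); they are illustrative assumptions, not the values of Denso’s camera.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters of a matched point for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero disparity means a point at infinity")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 1000 px focal length, 0.5 m baseline
for d in (50.0, 10.0, 5.0):
    print(d, depth_from_disparity(1000.0, 0.5, d))
```

Nothing in this calculation depends on what the object is, only on finding the same point in both images, which is the stereo matching step that the text says is usually done in hardware.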

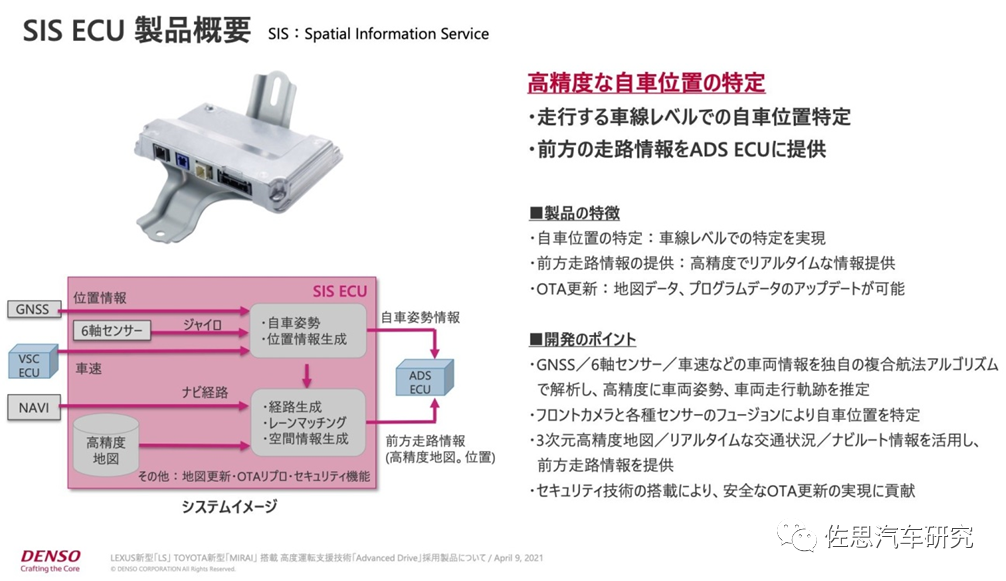

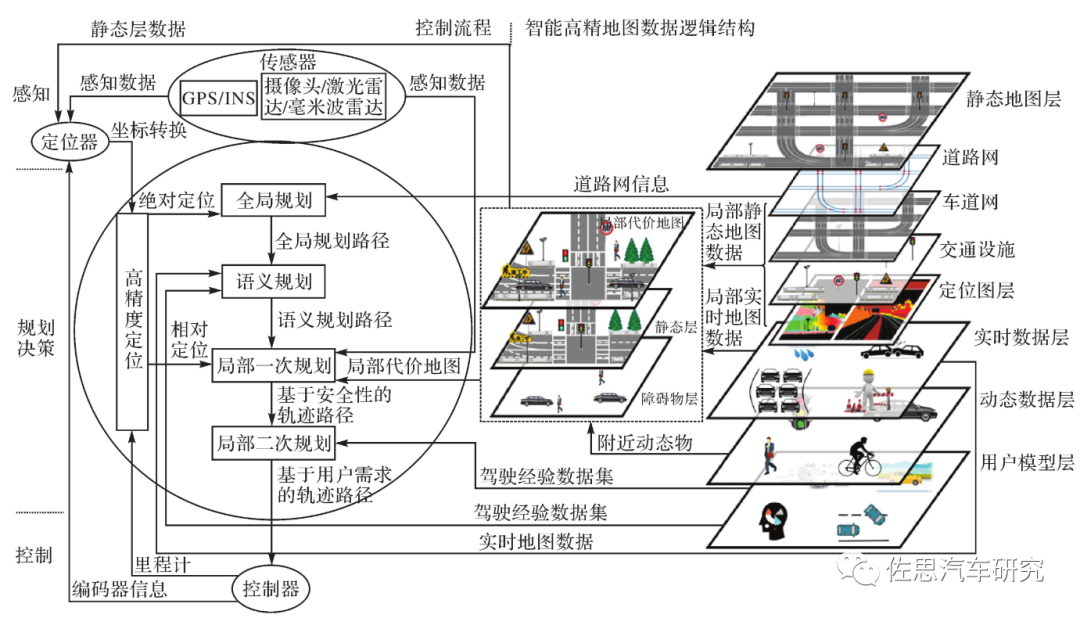

Unlike most autonomous driving systems, Toyota specifically highlights its positioning system, referred to as the SIS ECU.

There are two types of positioning for autonomous driving. The first is absolute positioning, which does not rely on any reference points or prior information and directly provides the vehicle’s coordinates relative to the Earth, that is, in the WGS84 coordinate system. These are expressed as (B, L, H), where B is latitude, L is longitude, and H is the geodetic height, the height above the WGS84 ellipsoid.

The second is relative positioning, which relies on reference points or prior information. There are visual crowd-sourced positioning systems such as Mobileye’s REM, which are very sensitive to changes in light; light changes constantly, making data consistency nearly impossible. Backlit and front-lit scenes look completely different, and the ADAS system of a certain domestic car fails almost completely in backlighting, resulting in very low accuracy. There are also LiDAR-based positioning systems with extremely high accuracy, but they are very costly and cannot be used over large areas (hundreds of kilometers). Furthermore, relative positioning cannot be used together with standard high-precision maps, because the coordinate systems, data formats, interfaces, and timelines are completely different; standard, traditional high-precision maps require absolute positioning.
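To make the (B, L, H) representation concrete, the following sketch converts WGS84 geodetic coordinates to Earth-centered, Earth-fixed (ECEF) Cartesian coordinates using the standard closed-form formulas and the WGS84 ellipsoid constants. It is a generic illustration and says nothing about how the SIS ECU works internally; the function name `geodetic_to_ecef` is an arbitrary choice.

```python
import math

WGS84_A = 6378137.0                  # semi-major axis in meters
WGS84_F = 1.0 / 298.257223563        # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F) # first eccentricity squared


def geodetic_to_ecef(lat_deg: float, lon_deg: float, height_m: float):
    """Convert (B, L, H) on the WGS84 ellipsoid to ECEF (X, Y, Z) in meters."""
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    # Prime vertical radius of curvature at this latitude
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)
    x = (n + height_m) * math.cos(lat) * math.cos(lon)
    y = (n + height_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + height_m) * math.sin(lat)
    return x, y, z


# A point on the equator at the prime meridian lies one semi-major axis from the center
print(geodetic_to_ecef(0.0, 0.0, 0.0))
```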

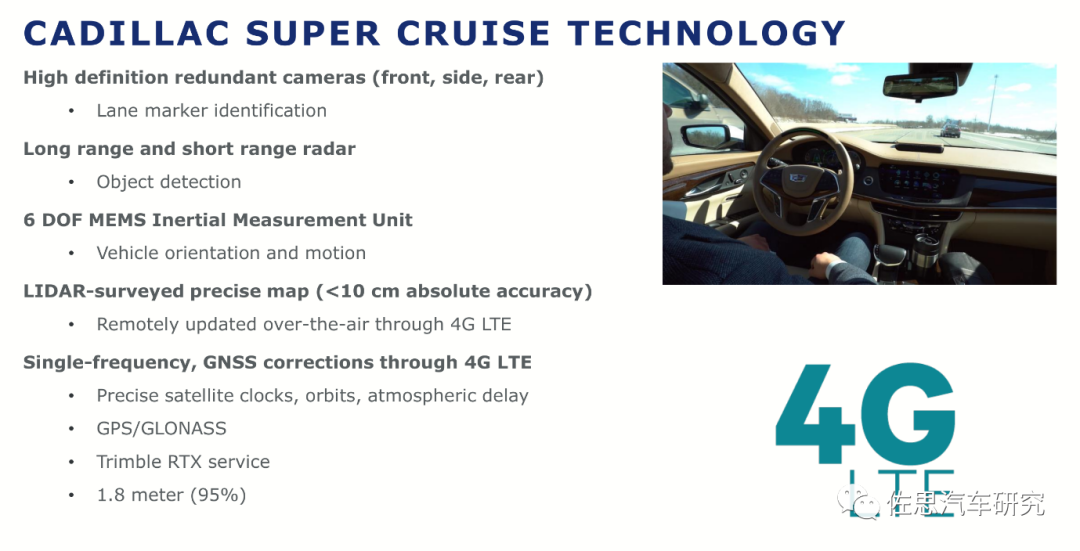

Absolute positioning is indispensable, especially for global planning. Currently, absolute positioning can only be achieved through satellite positioning, and aside from QZSS, no other system can achieve lane-level positioning for autonomous driving. This is one of the bottlenecks for Level 3 and Level 4.

This is why Tesla is considered a standard Level 2 intelligent driving system: it simply cannot achieve lane-level positioning. Currently, the only system capable of lane-level positioning is Cadillac’s Super Cruise, which uses Trimble’s RTX service and requires an annual service fee.

Trimble’s RTX global tracking network has around 120 tracking stations deployed worldwide, which track and store GNSS observations in real time and send them to control centers in Europe and the United States. These control centers model the precise satellite orbits, clock errors, and atmospheric conditions for the entire constellation to derive global precision positioning corrections. The corrections are broadcast to authorized end users via L-band satellites (Trimble’s own satellites) or over the network.

Tesla’s positioning system uses only a single GPS receiver. The GPS module is the NEO-M8L-01A-81, with a horizontal accuracy of 2.5 meters circular error probable (CEP), which improves to 1.5 meters with SBAS assistance. CEP and RMS are measures of GPS positioning accuracy (commonly referred to as precision). A CEP of 2.5 m means that if you draw a circle with a radius of 2.5 m, a position fix has a 50% chance of falling within it; in other words, the GPS is accurate to 2.5 m with 50% probability. Expressed as the corresponding RMS (66.7%) and 2DRMS (95%) values, the accuracy in 95% of cases is 6 meters, or 3.6 meters with SBAS assistance, which already exceeds the width of a lane. The cold start time is 26 seconds, the hot start time is 1 second, and the assisted start time is 3 seconds. The simple built-in 6-axis IMU has a refresh rate of only 20 Hz, and its cost is unlikely to exceed 80 cents.

Japan’s GPS is a special kind of GPS, augmented by QZSS.
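The 6-meter and 3.6-meter figures follow from the CEP values if the horizontal error is assumed to be a circular Gaussian, an assumption the source does not state explicitly. Under it, CEP = σ·√(2 ln 2) and 2DRMS = 2√2·σ, so 2DRMS is roughly 2.4 times CEP. The function names below are made up for illustration.

```python
import math


def cep_to_sigma(cep_m: float) -> float:
    """Per-axis standard deviation for a circular Gaussian error with the given CEP."""
    return cep_m / math.sqrt(2.0 * math.log(2.0))


def cep_to_2drms(cep_m: float) -> float:
    """2DRMS (twice the distance RMS) under the same circular Gaussian assumption."""
    return 2.0 * math.sqrt(2.0) * cep_to_sigma(cep_m)


print(round(cep_to_2drms(2.5), 1))  # 6.0 (standalone GPS)
print(round(cep_to_2drms(1.5), 1))  # 3.6 (with SBAS)
```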

Trimble’s RTX global tracking base station network has deployed around 120 tracking stations worldwide, which track and store GNSS observation values in real-time, sending them to control centers located in Europe and the United States. These control centers model the precise satellite orbits, clock errors, and atmospheric conditions for the entire constellation, obtaining global precision positioning correction values. These global precision positioning correction values are broadcast to authorized terminal users via L-band satellites (Trimble’s own satellites) or through network methods.The positioning system in Tesla only uses a single GPS. The GPS module is the NEO-M8L-01A-81, with a horizontal accuracy circular error probability (CEP) of 2.5 meters, which can be improved to 1.5 meters with SBAS assistance. CEP and RMS are units of GPS positioning accuracy (commonly referred to as precision). For example, a CEP of 2.5M means that if you draw a circle with a radius of 2.5M, there is a 50% chance that a point will fall within that circle, meaning that the GPS positioning has a 50% probability of being accurate to 2.5M. The corresponding RMS (66.7%) and 2DRMS (95%) values indicate that in 95% of cases, the accuracy is 6 meters, and with SBAS assistance, it is 3.6 meters, which already exceeds a lane’s width. The cold start time is 26 seconds, the hot start time is 1 second, and the assisted start time is 3 seconds. The built-in simple 6-axis IMU has a refresh rate of only 20Hz, and the cost is unlikely to exceed 80 cents.Japan’s GPS is a special type of GPS, augmented by QZSS. As early as 1972, the then Japan Radio Research Laboratory (now the National Institute of Information and Communications Technology) proposed the concept of the Quasi-Zenith Satellite System (QZSS), demonstrating that such a system is well-suited for Japan, a country located in the mid-latitudes with a long and narrow territory. 
On November 1, 2002, a new satellite business company, Advanced Space Business Corporation (ASBC), was formally established with investment from 43 companies, including Mitsubishi Electric, Hitachi, and Toyota, which together held 77% of the shares. The project did not go smoothly, however, and was ultimately taken over by the Japanese government’s Ministry of Internal Affairs. After the takeover, the first satellite, Michibiki, was launched on September 11, 2010, and navigation services officially began on June 1, 2011. The second satellite followed on June 1, 2017, the third on August 19, 2017, and the fourth on October 10, 2017. Japan plans to expand the QZSS constellation to seven satellites by 2023, at which point it will no longer depend on the US GPS and will be able to provide positioning independently.

From 2023 to 2026, the signal-in-space ranging error is expected to be 2.6 meters without ground-based augmentation and around 0.8 meters with it. From 2027 to 2036, the error without ground-based augmentation is to fall to 1 meter, and after 2036 to 0.3 meters. QZSS receivers require no additional hardware cost, only software, and Apple’s phones already support QZSS. QZSS is inexpensive and efficient, and because it is a broadcast service it has no bandwidth bottleneck or latency, which makes it the most suitable technology for autonomous driving. The catch is that Japan’s long, narrow territory can be fully covered by just seven satellites, with coverage to spare, whereas large countries such as China and the US might need dozens of low-orbit satellites. Infrastructure on that scale would likely take decades to decide on, approve, and build.

In addition to absolute positioning, Toyota has also provided for relative positioning. For this it has added a long-range camera, the one mounted above the stereo camera, which is used mainly to detect lane markings; combined with prior information from the high-precision map, this yields relative, lane-level positioning.

As for the IMU, Toyota may be using one of TDK’s IMUs developed specifically for autonomous driving, the IAM-20685 or the IAM-20680HP, both standalone external high-precision IMUs. The IAM-20685 meets ASIL-B, with a gyroscope range of ±2949 dps and an accuracy of ±300 dps. The accelerometer range can be ±16 g, ±32 g, or ±65 g, with accuracies of ±8 g, ±20 g, and ±36 g, respectively. Naturally, the price is not low: the IAM-20680HP costs about $10 (in orders of 5,000 units) and the IAM-20685 about $15, which is comparable to the price of Tesla’s entire GPS module, and that is for the IMU alone.

The competition in autonomous driving among China, the US, and Japan is fierce. Japan has always insisted on a high degree of product completeness, which gives the impression that it is lagging behind. But who will ultimately prevail? Time will tell.
