We are at the early stages of a major transformation in artificial intelligence (AI). With natural language processing, multimodal large models, and generative AI evolving rapidly, AI is reshaping industries at an unprecedented pace. IDC forecasts that global data volume will grow from 159.2 ZB in 2024 to more than 384.6 ZB by 2028, a compound annual growth rate (CAGR) of 24.4%. By 2028, IDC expects 37% of this data to be generated directly in the cloud, with the rest generated by edge and endpoint devices.
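As a quick sanity check on the IDC figures, CAGR is (end / start)^(1/n) - 1. The sketch below applies it to the two quoted endpoints over the four years from 2024 to 2028. It gives about 24.7%, close to the quoted 24.4%; the small gap may come from rounding or from a different base period in IDC's underlying model, which the article does not specify.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


# IDC endpoints quoted above, in zettabytes (ZB)
START_ZB, END_ZB = 159.2, 384.6
rate = cagr(START_ZB, END_ZB, years=2028 - 2024)
print(f"Implied CAGR 2024-2028: {rate:.1%}")  # prints "Implied CAGR 2024-2028: 24.7%"
```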

Faced with this surge in edge data, efficient data processing, low-latency transmission, and intelligent, secure storage have become key priorities for the industry. Future computing architectures must not only deliver more computing power but also integrate more closely with storage systems, so that AI models run efficiently while data management and access are optimized.

Looking at current trends in AI development, large models are, on one hand, evolving toward artificial general intelligence (AGI), exploring new directions such as multimodal and physical AI, and continually pushing the limits of computing power. On the other hand, to deploy large models broadly, the industry is moving toward deep optimization and vertical customization, so that large models can reach more industries and adapt to deployment scenarios ranging from mobile and edge computing to the cloud.

The launch of DeepSeek has had a profound impact on the global AI market. As an open, innovative technology, it demonstrates the optimization potential of AI training and inference and significantly improves the efficiency of large-scale deployment, showing that models can run stably at lower cost and with higher efficiency. This is an important step toward the large-scale adoption of AI in enterprise applications and edge computing.

Arm Computing Platform: Driving Optimized AI Deployment from Cloud to Edge

In the early stages of AI development, data centers, as the core sites for model training and initial inference, face unprecedented challenges. Traditional general-purpose chips struggle with compute-intensive AI workloads and cannot meet the AI era's demands for high performance, low power consumption, and flexible scalability. Against this backdrop, the Arm computing platform opens up a new paradigm for next-generation AI cloud infrastructure. From Arm Neoverse Compute Subsystems (CSS) and the Arm Total Design ecosystem initiative to the Chiplet System Architecture (CSA), Arm has built an integrated portfolio spanning technology and ecosystem. It provides efficient, flexible, and scalable solutions for AI data center workloads while helping partners focus on product differentiation and shorten time-to-market.

AI inference is the key to unlocking the value of AI, and it is rapidly expanding from the cloud to the edge, reaching every corner of the world. In edge AI, Arm continues to innovate on the strength of its technology and ecosystem, ensuring that the smart IoT and consumer electronics ecosystems can run the right workloads at the right time and in the most suitable location.

To meet rising AI workload demands at the edge, Arm recently launched an edge AI computing platform built around the new Armv9 ultra-efficient Cortex-A320 CPU and the Ethos-U85 AI accelerator, which natively supports Transformer networks. The platform tightly integrates the CPU and the AI accelerator. Compared with last year's platform, which paired the Cortex-M85 with the Ethos-U85, it delivers eight times the machine learning (ML) performance, a significant step up in AI capability that enables edge AI devices to run models with more than 1 billion parameters.

Figure: Arm’s edge AI computing platform supports running edge AI models with over 1 billion parameters

The new ultra-efficient Cortex-A320 gives the Ethos-U85 access to greater memory capacity and bandwidth, improving how large models execute on the accelerator. It also supports a larger addressable memory space and can manage latency across multiple levels of memory more flexibly. Together, the Cortex-A320 and Ethos-U85 are well suited to the memory capacity and bandwidth challenges of running large models and other edge AI tasks.
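Why memory capacity and bandwidth dominate for models of this size can be seen with back-of-envelope arithmetic. The sketch below computes the weight footprint of a 1-billion-parameter model at a few common precisions. It also computes a first-order ceiling on token-generation speed, assuming decoding is memory-bound and every weight is read once per generated token. The bandwidth value `ASSUMED_BANDWIDTH_GB_S` is hypothetical, not an Arm figure, and the model ignores caches, activations, the KV cache, and compute limits.

```python
# Back-of-envelope sizing for a 1-billion-parameter model (illustrative only).
PARAMS = 1_000_000_000  # "over 1 billion parameters", per the text

# Common storage widths per weight; the actual format depends on the deployment.
BITS_PER_WEIGHT = {"fp16": 16, "int8": 8, "int4": 4}

# Hypothetical memory bandwidth for an edge SoC; not an Arm-published figure.
ASSUMED_BANDWIDTH_GB_S = 8.0


def weight_bytes(params: int, bits: int) -> int:
    """Bytes to hold the weights alone (ignores activations and KV cache)."""
    return params * bits // 8


def max_tokens_per_s(model_bytes: int, bandwidth_gb_s: float) -> float:
    """Memory-bound ceiling, assuming every weight is read once per generated token."""
    return bandwidth_gb_s * 1e9 / model_bytes


for name, bits in BITS_PER_WEIGHT.items():
    size = weight_bytes(PARAMS, bits)
    ceiling = max_tokens_per_s(size, ASSUMED_BANDWIDTH_GB_S)
    print(f"{name}: {size / 1e9:.1f} GB of weights, at most ~{ceiling:.0f} tokens/s")
```

Halving the bits per weight halves both the footprint and the bytes streamed per token. For this reason, quantization and memory bandwidth tend to matter as much as raw compute on edge devices.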

The Cortex-A320 also takes full advantage of Armv9's enhanced AI compute features, along with security features such as Secure EL2, Pointer Authentication/Branch Target Identification (PACBTI), and the Memory Tagging Extension (MTE). These features are already widely used in other markets, and Arm is now bringing them to IoT and edge AI computing. They combine strong, flexible AI performance with better isolation between software workloads and protection against memory-safety errors, improving overall system security.
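MTE works as a lock-and-key check. Memory carries a 4-bit allocation tag for each 16-byte granule, and each pointer carries a 4-bit logical tag in address bits [59:56]. An access whose pointer tag does not match the memory's tag faults. The toy software model below only illustrates that check; it is not how the hardware implements it. The class and method names are hypothetical. The example shows a one-byte buffer overflow into an adjacent, differently tagged buffer being caught.

```python
GRANULE = 16     # MTE assigns one tag per 16-byte granule of memory
TAG_SHIFT = 56   # the pointer's logical tag sits in address bits [59:56]
TAG_MASK = 0xF   # tags are 4 bits wide


class TagMismatch(Exception):
    """Stands in for the fault real hardware raises on a tag check failure."""


class ToyTaggedMemory:
    """Software toy of MTE's lock-and-key check; not how hardware implements it."""

    def __init__(self, size: int):
        self.data = bytearray(size)
        self.tags = [0] * (size // GRANULE)  # one allocation tag ("lock") per granule

    def tag_region(self, addr: int, length: int, tag: int) -> int:
        """Tag a granule-aligned region and return a pointer carrying the same tag ("key")."""
        if addr % GRANULE or length % GRANULE:
            raise ValueError("region must be granule-aligned")
        for g in range(addr // GRANULE, (addr + length) // GRANULE):
            self.tags[g] = tag & TAG_MASK
        return ((tag & TAG_MASK) << TAG_SHIFT) | addr

    def _check(self, ptr: int) -> int:
        """Compare the pointer's tag with the memory's tag; return the untagged address."""
        addr = ptr & ((1 << TAG_SHIFT) - 1)
        key = (ptr >> TAG_SHIFT) & TAG_MASK
        lock = self.tags[addr // GRANULE]
        if key != lock:
            raise TagMismatch(f"pointer tag {key:#x} != memory tag {lock:#x} at {addr:#x}")
        return addr

    def store(self, ptr: int, value: int) -> None:
        self.data[self._check(ptr)] = value

    def load(self, ptr: int) -> int:
        return self.data[self._check(ptr)]


mem = ToyTaggedMemory(64)
a = mem.tag_region(0, 32, tag=0x3)   # buffer a: bytes 0-31
b = mem.tag_region(32, 32, tag=0x5)  # adjacent buffer b: bytes 32-63
mem.store(a + 31, 0xAA)              # last byte of a: tags match, store succeeds

caught = False
try:
    mem.store(a + 32, 0xBB)          # one past the end of a, inside b's granule
except TagMismatch as err:
    caught = True
    print("overflow caught:", err)
```

With only 4-bit tags, two unrelated allocations can share a tag by chance. Allocators therefore typically give adjacent allocations different tags, as in the example.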

Storage Development in the AI Era: Comprehensive Upgrades in Storage, Computing, and Security Capabilities

As demand for AI computing grows, the cloud-edge-endpoint paradigm raises requirements not only for computing power but also for the performance, density, real-time capability, and power consumption of storage systems. Traditional architectures separate storage from compute, with storage devices serving merely as data repositories. Data must then move frequently between storage and compute nodes, creating a bottleneck between the two. In the AI era, real-time data analysis, intelligent data management, and efficient access make it crucial to place storage closer to compute units, or to give storage devices computing capabilities of their own, so that AI tasks run efficiently in the most appropriate location.
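The cost of treating storage as a plain repository shows up in the bytes that cross the storage-to-host link. The toy model below compares two approaches for a selective query. In the first, every record is shipped to the host and filtered there. In the second, the filter runs next to the storage and only matching records are shipped. Record sizes, counts, and the query are hypothetical, and the model ignores the compute cost on the storage side.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Record:
    key: int
    payload: bytes


def host_side_filter(records: List[Record], pred: Callable[[Record], bool]) -> Tuple[List[Record], int]:
    """Storage as a plain repository: every record crosses the link, the host filters."""
    moved = sum(len(r.payload) for r in records)
    return [r for r in records if pred(r)], moved


def near_storage_filter(records: List[Record], pred: Callable[[Record], bool]) -> Tuple[List[Record], int]:
    """Compute next to storage: the filter runs on the storage side, only matches cross."""
    matches = [r for r in records if pred(r)]
    moved = sum(len(r.payload) for r in matches)
    return matches, moved


def is_wanted(r: Record) -> bool:
    """Hypothetical selective query: 1 in 100 records match."""
    return r.key % 100 == 0


records = [Record(k, bytes(4096)) for k in range(1000)]  # 1000 hypothetical 4 KiB records
host_hits, host_bytes = host_side_filter(records, is_wanted)
near_hits, near_bytes = near_storage_filter(records, is_wanted)
print(f"host-side filter: {host_bytes} bytes moved")     # 4096000
print(f"near-storage filter: {near_bytes} bytes moved")  # 40960
```

Both paths return the same ten records, but the near-storage path moves 1% of the data. The more selective the query, the larger the saving.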

AI computing from cloud to edge places varying demands on storage throughput, latency, energy consumption, and security, as well as on host-manageability enhancements such as Open-Channel SSD. Storage controllers, and the firmware running on the Arm CPUs inside them, play a critical role in meeting these differentiated AI storage needs.

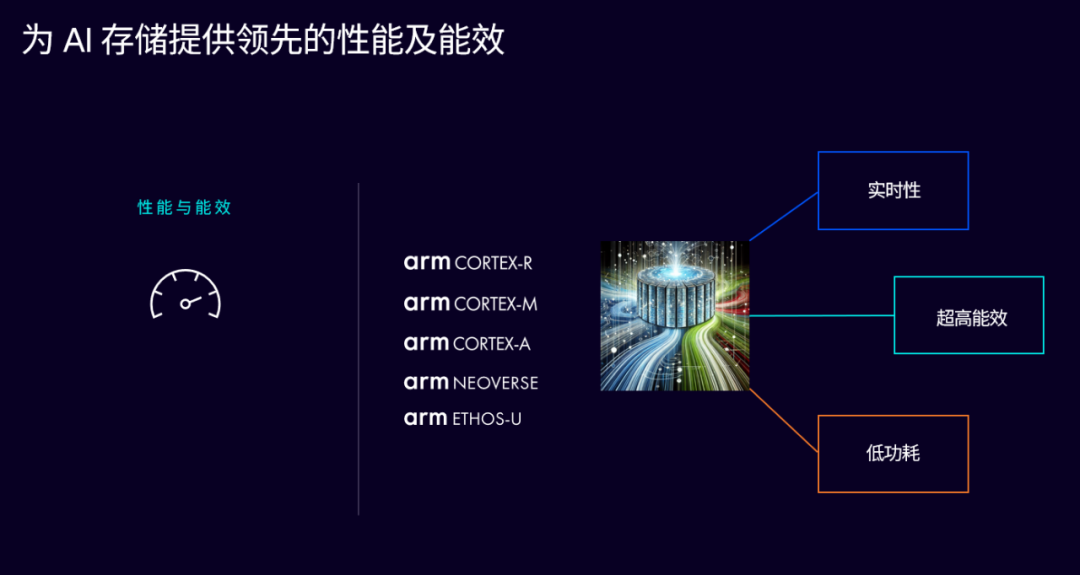

Figure: Arm’s rich IP platform solutions provide leading performance and energy efficiency for AI storage

In fact, Arm technology has long been a cornerstone of data storage and network control, providing high-performance, low-power, and secure solutions for storage controllers and devices worldwide, including:

• Arm Cortex-R series real-time processors, which offer low interrupt latency and fast real-time response, are widely used across storage devices;

• Arm Cortex-M series embedded processors are popular choices for back-end flash and media control; their support for custom instructions lets customers differentiate through deep optimization for their specific NAND media;

• Arm Cortex-A series application processors, built on high-throughput pipeline architectures, deliver the highest processing performance and are backed by a strong ecosystem for ML, data-processing software, and rich operating systems;

• Arm Ethos-U AI accelerators, such as the Ethos-U85, natively accelerate Transformer networks in configurations of up to 2048 MACs per cycle, making storage controllers smarter;

• Arm Neoverse solutions are tailored for data centers. Innovative CXL (Compute Express Link) designs are beginning to combine the Arm Coherent Mesh Network (CMN) with Neoverse to enable composable memory expansion and bring in near-storage computing, reducing data movement.
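Accelerator throughput such as the Ethos-U85's MAC count is specified per clock cycle. Converting it to peak operations per second therefore requires a clock frequency. The minimal sketch below counts each multiply-accumulate as two operations and assumes a hypothetical 1 GHz clock. The MAC counts are illustrative powers of two up to the 2048-MAC maximum. Real designs run at different clocks, and sustained throughput is usually below this peak.

```python
def peak_tops(macs_per_cycle: int, clock_hz: float) -> float:
    """Peak throughput in TOPS, counting each MAC as 2 operations (multiply + add)."""
    return 2 * macs_per_cycle * clock_hz / 1e12


ASSUMED_CLOCK_HZ = 1e9  # assumed 1 GHz accelerator clock; real designs vary

for macs in (128, 256, 512, 1024, 2048):
    print(f"{macs:>4} MACs/cycle -> {peak_tops(macs, ASSUMED_CLOCK_HZ):.3f} TOPS")
```

At the 2048-MAC configuration and the assumed 1 GHz clock, this gives roughly 4 TOPS.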

Collaborating with the Ecosystem to Build the Future of AI Computing and Storage

While delivering leading technologies and products, Arm is also working closely with ecosystem partners to advance the storage industry. Leading storage companies are widely adopting Arm-based platforms to optimize their storage solutions. For example, Solidigm's latest 122TB PCIe SSD, the Solidigm™ D5-P5336, significantly improves energy efficiency, storage density, and performance in AI data centers; its storage controller uses Arm Cortex-R CPUs to improve real-time read/write performance and latency determinism. Silicon Motion's SM2508 controller for AI PCs uses the Arm Cortex-R8 and Cortex-M0 to deliver gains in energy efficiency and data throughput. The company's SM2264XT-AT, the industry's first automotive PCIe Gen4 controller, uses enhanced virtualization to give mixed-criticality workloads access to data while cutting energy consumption by 30%. Jiangbolong's XP2300, ORCA 4836, and UNCIA 3836 solid-state drives, built on Arm Cortex-R CPUs, are widely used in AI PCs, servers, cloud computing, distributed storage, and edge computing, meeting the need to deploy AI locally.

In the Chinese storage market, leading companies such as DaPu Microelectronics, LianYun Technology, YiXin Technology, Tenafly, DeYi Microelectronics, and YingRun Technology have also widely adopted Arm technology in their SSD controller chips and device solutions.

To date, nearly 20 billion storage devices based on Arm architecture and platforms have been deployed, including cloud and enterprise SSDs, automotive SSDs, consumer SSDs, hard disk drives, and embedded flash devices. Arm-based storage devices continue to ship at a rate of approximately 3 million units per day.

With its technology, broad ecosystem, and deep experience in the storage industry, Arm continues to drive innovation in computing and storage for the AI era. It will keep working with partners to build that future on secure, efficient Arm computing platforms.
