Solving nonlinear equations is one of the core problems in scientific and engineering computing and arises in fields such as physical modeling, machine learning, and financial analysis. C++ is a common choice for such problems due to its high performance and low-level control, but implementing solvers efficiently still poses many challenges. This article systematically outlines best practices for solving nonlinear equations in C++ along four dimensions: algorithm selection, tool application, stability optimization, and performance enhancement.

1. Specialized Mathematical Libraries: Fast Implementation and Engineering Solutions

1.1 tomsolver: Symbolic Computation and Automatic Differentiation

The tomsolver library stands out with its extremely simple interface design and powerful symbolic processing capabilities. Its core advantages include:

- Symbolic Expression Parsing: Directly input mathematical expressions (e.g., `exp(-exp(-(x1 + x2)))`) without manually writing function code.

- Automatic Jacobian Matrix Generation: Automatically compute derivatives through `Jacobian(f)`, avoiding manual derivation errors.

- Multi-Algorithm Support: Built-in Newton’s method, Levenberg-Marquardt (LM) algorithm, etc., to adapt to different scenario needs.

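The example code demonstrates how to define and solve a system of equations in about ten lines. Header and configuration accessor names may differ between tomsolver releases, so check them against the version you install:

```cpp
#include <iostream>
#include <tomsolver/tomsolver.hpp>

using namespace tomsolver;

int main() {
    // Two equations in the unknowns x1 and x2, parsed from strings
    SymVec f = {Parse("exp(-exp(-(x1 + x2))) - x2*(1 + x1^2)"),
                Parse("x1*cos(x2) + x2*sin(x1) - 0.5")};
    GetConfig().initialValue = 0.0;  // Global initial value setting
    VarsTable ans = Solve(f);        // Automatically select algorithm to solve
    std::cout << ans << std::endl;   // Structured output result
}
```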

This library is particularly suitable for quickly validating algorithms or handling complex forms of equations involving exponentials, trigonometric functions, etc.

1.2 Ceres Solver: A Tool for Large-Scale Optimization

Google’s open-source Ceres Solver is designed specifically for nonlinear least squares problems, with advantages including:

- Automatic Differentiation Support: Automatically generate derivatives through template metaprogramming, improving development efficiency.

- Parallel Computing Optimization: Accelerate Jacobian matrix computation using multithreading, suitable for problems with millions of variables.

- Robust Configuration: Provides parameter adjustments such as line search strategies and trust region methods.
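The idea behind automatic differentiation of templated code can be illustrated without Ceres. The sketch below uses a hypothetical, standard-library-only `Dual` type (it is not Ceres's own implementation) that carries a value and a derivative through a function written once as a template. The names `Dual`, `residual`, and `residual_with_derivative` are assumptions made for illustration.

```cpp
#include <cmath>

// Hypothetical dual number: a value plus its derivative w.r.t. one input.
struct Dual {
    double val;  // f(x)
    double der;  // f'(x)
};

inline Dual operator+(Dual a, Dual b) { return {a.val + b.val, a.der + b.der}; }
inline Dual operator-(Dual a, Dual b) { return {a.val - b.val, a.der - b.der}; }
inline Dual operator*(Dual a, Dual b) {
    return {a.val * b.val, a.der * b.val + a.val * b.der};  // product rule
}
inline Dual sin(Dual a) { return {std::sin(a.val), std::cos(a.val) * a.der}; }
inline Dual exp(Dual a) {
    const double e = std::exp(a.val);
    return {e, e * a.der};  // chain rule
}

// Residual written once as a template; it works for double and for Dual.
template <typename T>
T residual(T x) {
    using std::exp;  // plain doubles use the standard overloads,
    using std::sin;  // Dual arguments pick the overloads above
    return exp(x) * sin(x) - x;
}

// Evaluate the residual and its derivative at x in a single pass.
inline Dual residual_with_derivative(double x) {
    return residual(Dual{x, 1.0});  // seed: dx/dx = 1
}
```

Calling `residual_with_derivative(0.5)` returns both the residual and its exact derivative at `x = 0.5`, with no hand-written derivative code.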

A typical workflow includes:

- Define a cost function, either as a class inheriting from `SizedCostFunction` (analytic derivatives) or as a templated functor wrapped in `AutoDiffCostFunction` (automatic differentiation).

- Use `Problem::AddResidualBlock()` to construct the optimization problem.

- After configuring iteration counts, convergence thresholds, and other parameters in `Solver::Options`, call `Solve()`.

1.3 Boost.Math and GSL: Classic Solution Comparison

- Boost.Math: Provides template functions like `newton_raphson_iterate` for single-variable equations, requiring users to implement function values and their derivatives, suitable for scenarios with custom code control needs.

- GNU Scientific Library (GSL): Supports various algorithms for systems of equations through solvers like `gsl_multiroot_fsolver_hybrids`, which needs only the function values; the derivative-based `gsl_multiroot_fdfsolver` variants additionally require a hand-maintained Jacobian matrix. GSL is more suitable for migrating existing FORTRAN/C legacy code.
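The value-plus-derivative calling convention can be sketched with the standard library alone. The helper `newton_bracketed` below is a hypothetical stand-in written for this article, not Boost's implementation: the user-supplied functor returns the pair `{f(x), f'(x)}`, and the iterate is kept inside a caller-provided interval `[lo, hi]`.

```cpp
#include <cmath>
#include <utility>

// Hypothetical stand-in for a library routine: Newton iteration on a scalar
// function, kept inside [lo, hi]. f(x) must return {f(x), f'(x)}.
template <typename F>
double newton_bracketed(F f, double guess, double lo, double hi,
                        double tol = 1e-12, int max_iter = 50) {
    double x = guess;
    for (int i = 0; i < max_iter; ++i) {
        const std::pair<double, double> fd = f(x);
        if (std::fabs(fd.first) < tol) break;    // residual small enough
        if (fd.second == 0.0) break;             // flat tangent: cannot step
        double next = x - fd.first / fd.second;  // Newton update
        if (next < lo) next = 0.5 * (x + lo);    // step left the interval:
        if (next > hi) next = 0.5 * (x + hi);    // move halfway to the bound instead
        x = next;
    }
    return x;
}

// Example functor: the cube root of 27 as the root of x^3 - 27.
struct CubeRoot27 {
    std::pair<double, double> operator()(double x) const {
        return {x * x * x - 27.0, 3.0 * x * x};
    }
};
```

A call such as `newton_bracketed(CubeRoot27{}, 5.0, 0.0, 27.0)` starts from the guess 5 and searches within `[0, 27]`.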

2. Manual Implementation of Newton’s Method: Principles and Optimization

2.1 Basic Newton Iteration Method

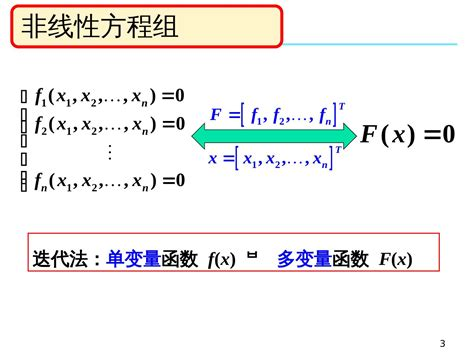

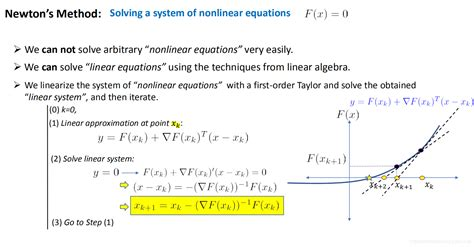

The core of Newton’s method lies in the iteration formula:

where is the Jacobian matrix, and is the function of the system of equations. Implementation steps include:

- Function and Derivative Implementation: Write C++ functions to compute and .

- Matrix Inversion Optimization: Use LU decomposition from the Eigen library instead of directly computing the inverse matrix.

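```cpp
#include <Eigen/Dense>

using Eigen::MatrixXd;
using Eigen::VectorXd;

// User-supplied: F(x) and its Jacobian J(x)
VectorXd compute_function(const VectorXd& x);
MatrixXd compute_jacobian(const VectorXd& x);

VectorXd newton_solve(const VectorXd& x0, int max_iter = 50, double tol = 1e-10) {
    VectorXd x = x0;
    for (int i = 0; i < max_iter; ++i) {
        VectorXd F = compute_function(x);
        if (F.norm() < tol) break;       // test the residual of the current iterate
        MatrixXd J = compute_jacobian(x);
        x -= J.partialPivLu().solve(F);  // solve J*dx = F by LU, no explicit inverse
    }
    return x;
}
```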

This method’s advantage lies in code transparency, but care must be taken regarding potential singularity issues with the Jacobian matrix.
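For a concrete, dependency-free illustration, the following sketch applies the same iteration to the two-equation system from section 1.1, using a hand-derived Jacobian and Cramer's rule in place of Eigen's LU solver. The names `eval_F`, `eval_J`, and `newton2` are assumptions made for this example, and the determinant threshold is an arbitrary choice for detecting a near-singular Jacobian.

```cpp
#include <array>
#include <cmath>

using Vec2 = std::array<double, 2>;
using Mat2 = std::array<std::array<double, 2>, 2>;

// F(x) for the example system in the unknowns x1 = x[0], x2 = x[1].
inline Vec2 eval_F(const Vec2& x) {
    return {std::exp(-std::exp(-(x[0] + x[1]))) - x[1] * (1.0 + x[0] * x[0]),
            x[0] * std::cos(x[1]) + x[1] * std::sin(x[0]) - 0.5};
}

// Hand-derived Jacobian J(x) of eval_F.
inline Mat2 eval_J(const Vec2& x) {
    const double e = std::exp(-(x[0] + x[1]));
    const double g = std::exp(-e) * e;  // d/ds exp(-exp(-s)) with s = x1 + x2
    Mat2 J;
    J[0][0] = g - 2.0 * x[0] * x[1];
    J[0][1] = g - (1.0 + x[0] * x[0]);
    J[1][0] = std::cos(x[1]) + x[1] * std::cos(x[0]);
    J[1][1] = -x[0] * std::sin(x[1]) + std::sin(x[0]);
    return J;
}

inline double norm2(const Vec2& v) { return std::hypot(v[0], v[1]); }

// Newton iteration on x (updated in place). Returns false if the Jacobian
// is (nearly) singular or the residual does not drop below tol.
inline bool newton2(Vec2& x, double tol = 1e-12, int max_iter = 50) {
    for (int k = 0; k < max_iter; ++k) {
        const Vec2 F = eval_F(x);
        if (norm2(F) < tol) return true;
        const Mat2 J = eval_J(x);
        const double det = J[0][0] * J[1][1] - J[0][1] * J[1][0];
        if (std::fabs(det) < 1e-14) return false;  // singular Jacobian guard
        // Solve J * dx = F by Cramer's rule (reasonable for 2x2 only).
        const double dx0 = (F[0] * J[1][1] - J[0][1] * F[1]) / det;
        const double dx1 = (J[0][0] * F[1] - J[1][0] * F[0]) / det;
        x[0] -= dx0;
        x[1] -= dx1;
    }
    return norm2(eval_F(x)) < tol;
}
```

Starting from `x = {0.0, 0.0}`, the iteration settles near `x1 ≈ 0.353`, `x2 ≈ 0.606`. The explicit determinant check makes the singularity risk visible instead of letting a division by a tiny number corrupt the iterate silently.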

2.2 Affine Invariance Improvement

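When the variables differ significantly in scale (for example, one unknown of order $10^6$ next to another of order $10^{-3}$), the Jacobian can become badly conditioned and the basic Newton method may encounter convergence issues. Introducing a diagonal scaling matrix $D$ and iterating in the scaled variables $y = D^{-1}x$ modifies the iteration to:

$$x_{k+1} = x_k - D\,\bigl(J(x_k)\,D\bigr)^{-1} F(x_k)$$

where the diagonal elements of $D$ are typically taken as the absolute values of the variables’ initial values (with a nonzero fallback such as 1 when an initial value is zero) to enhance numerical stability. In exact arithmetic this step equals the unscaled Newton step; the benefit is that the linear system with $J D$ is better conditioned.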
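The mechanics of a diagonally scaled step can be sketched for a 2x2 system: scale the columns of the Jacobian by the diagonal entries, solve the scaled linear system, then map the result back to the original variables. The helper names `make_scale` and `scaled_newton_step` are hypothetical, and the fallback to 1 for a zero initial value is an assumption of this sketch.

```cpp
#include <array>
#include <cmath>

using Vec2 = std::array<double, 2>;
using Mat2 = std::array<std::array<double, 2>, 2>;

// Scaling factors d_i = |x0_i|, falling back to 1 when x0_i is zero
// (assumption: a zero scale would make the scaled Jacobian singular).
inline Vec2 make_scale(const Vec2& x0) {
    Vec2 d;
    for (int i = 0; i < 2; ++i) d[i] = (x0[i] != 0.0) ? std::fabs(x0[i]) : 1.0;
    return d;
}

// Newton step computed in scaled variables:
// solve (J*D) dy = F, then return dx = D*dy.
inline Vec2 scaled_newton_step(const Mat2& J, const Vec2& F, const Vec2& d) {
    // Column scaling: (J*D)[i][j] = J[i][j] * d[j].
    const double a = J[0][0] * d[0], b = J[0][1] * d[1];
    const double c = J[1][0] * d[0], e = J[1][1] * d[1];
    const double det = a * e - b * c;
    const double dy0 = (F[0] * e - b * F[1]) / det;  // Cramer's rule, 2x2 only
    const double dy1 = (a * F[1] - c * F[0]) / det;
    return {d[0] * dy0, d[1] * dy1};
}
```

The returned `dx` is subtracted from the current iterate exactly as in the unscaled method. For larger systems the same column scaling would be applied before handing the matrix to an LU solver.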

3. Special Problem Handling: Stability and Automation

3.1 Numerical Stability of Exponential Functions

Equations containing `exp` terms are prone to iteration divergence due to numerical overflow. Solutions include:

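- Logarithmic Transformation: Rewrite the equation in logarithmic form so that `exp` is never evaluated, for example, transforming `exp(x) - y = 0` into `x - log(y) = 0` (valid when `y > 0`).

- Adaptive Step Size: Introduce a step size factor `alpha` during iterations and adjust it dynamically with an Armijo-type backtracking rule:

```cpp
double alpha = 1.0;
// Halve the step until the residual norm decreases sufficiently;
// the lower bound on alpha prevents an endless loop.
while (alpha > 1e-8 &&
       residual(x - alpha * dx) > (1 - 0.5 * alpha) * residual(x)) {
    alpha *= 0.5;
}
```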
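To see the effect of step-size control on an `exp` equation, consider `exp(x) - y = 0` with `y = 2` and the starting point `x0 = -5`. An undamped Newton step from there overshoots to about `x ≈ 291` and then moves back by roughly one unit per iteration. The sketch below adds backtracking; the function name `damped_newton_exp`, its tolerances, and the lower bound on `alpha` are assumptions of this example.

```cpp
#include <cmath>

// Damped Newton for exp(x) - y = 0 with a backtracking line search.
// Updates x in place and returns the number of iterations used
// (max_iter if the tolerance was not reached).
inline int damped_newton_exp(double& x, double y, double tol = 1e-12,
                             int max_iter = 100) {
    auto f = [y](double t) { return std::exp(t) - y; };
    for (int k = 0; k < max_iter; ++k) {
        const double fx = f(x);
        if (std::fabs(fx) < tol) return k;
        const double dx = fx / std::exp(x);  // Newton step: f(x) / f'(x)
        double alpha = 1.0;
        // Halve alpha until |f| decreases sufficiently. Overflowed (inf)
        // or NaN trial values fail the comparison and are rejected.
        while (alpha > 1e-10) {
            const double trial = std::fabs(f(x - alpha * dx));
            if (trial <= (1.0 - 0.5 * alpha) * std::fabs(fx)) break;
            alpha *= 0.5;
        }
        x -= alpha * dx;
    }
    return max_iter;
}
```

With `double x = -5.0; damped_newton_exp(x, 2.0);` the first step is shortened enough to land near the root, after which full Newton steps are accepted and `x` approaches `log(2)`.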

3.2 Symbolic Differentiation Techniques

Manually deriving the Jacobian matrix is prone to errors and time-consuming. tomsolver automatically generates derivative expressions through symbolic differentiation:

```cpp
SymVec f = {Parse("x1^2 + sin(x2)"), Parse("x1*x2 - 3")};
SymMat J = Jacobian(f);  // Automatically computes {{2*x1, cos(x2)}, {x2, x1}}
```

This method not only avoids manual errors but also generates compilable and efficient C++ code.
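Whichever way a Jacobian is obtained, it is cheap to cross-check it against finite differences. The sketch below hard-codes the symbolic result quoted above, `{{2*x1, cos(x2)}, {x2, x1}}`, and compares it with a central-difference approximation. The function names and the step size `h` are assumptions of this example.

```cpp
#include <array>
#include <cmath>

using Vec2 = std::array<double, 2>;
using Mat2 = std::array<std::array<double, 2>, 2>;

// f1 = x1^2 + sin(x2), f2 = x1*x2 - 3
inline Vec2 eval_f(const Vec2& x) {
    return {x[0] * x[0] + std::sin(x[1]), x[0] * x[1] - 3.0};
}

// Symbolic Jacobian: {{2*x1, cos(x2)}, {x2, x1}}
inline Mat2 analytic_jacobian(const Vec2& x) {
    Mat2 J;
    J[0][0] = 2.0 * x[0];
    J[0][1] = std::cos(x[1]);
    J[1][0] = x[1];
    J[1][1] = x[0];
    return J;
}

// Central-difference approximation of the Jacobian, one column per variable.
inline Mat2 numeric_jacobian(const Vec2& x, double h = 1e-6) {
    Mat2 J;
    for (int j = 0; j < 2; ++j) {
        Vec2 xp = x, xm = x;
        xp[j] += h;
        xm[j] -= h;
        const Vec2 fp = eval_f(xp), fm = eval_f(xm);
        for (int i = 0; i < 2; ++i) J[i][j] = (fp[i] - fm[i]) / (2.0 * h);
    }
    return J;
}

// Largest absolute entrywise difference between two 2x2 matrices.
inline double max_abs_diff(const Mat2& A, const Mat2& B) {
    double m = 0.0;
    for (int i = 0; i < 2; ++i)
        for (int j = 0; j < 2; ++j)
            m = std::fmax(m, std::fabs(A[i][j] - B[i][j]));
    return m;
}
```

A large value of `max_abs_diff(analytic_jacobian(x), numeric_jacobian(x))` at a few sample points usually indicates a sign or index mistake in the derivative code.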

4. Advanced Performance Optimization Strategies

4.1 Memory Preallocation and Sparsity

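- Matrix Preallocation: Preallocate memory for Eigen matrices outside of loops to reduce dynamic allocation overhead:

```cpp
MatrixXd J(2, 2);  // Allocate once, outside the iteration loop
J.setZero();       // Reuse the same memory in every iteration
```

- Sparse Matrices: For cases where the Jacobian matrix has many zero elements, use `Eigen::SparseMatrix` combined with a sparse solver such as `Eigen::SparseLU` or `Eigen::BiCGSTAB` to reduce computational complexity. The Conjugate Gradient solver (`Eigen::ConjugateGradient`) applies only when the matrix is symmetric positive definite, which a general Jacobian is not.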

4.2 Parallel Computing Acceleration

- OpenMP Parallelization: Parallelize function evaluations for multiple equations:

```cpp
#pragma omp parallel for
for (int i = 0; i < n; ++i) {
    f[i] = compute_component(i, x);  // assumes components share no mutable state
}
```

- GPU Acceleration: Utilize CUDA to offload Jacobian matrix computations to the GPU, which can achieve over 10x speedup for problems with dimensions exceeding 1000, depending on the hardware and on the cost of each Jacobian entry.

5. Solution Selection Guide

| Scenario Features | Recommended Solution | Key Advantages |
|---|---|---|
| Rapid Prototyping | tomsolver | Symbolic input, automatic differentiation, concise syntax |
| Large-Scale Nonlinear Least Squares | Ceres Solver | Automatic differentiation, parallel computing, industrial-grade optimization |
| Teaching and Small-Scale Problems | Manual Newton’s Method + Eigen | Algorithm transparency, easy to understand principles |
| Complex Function / High-Dimensional Sparse Problems | tomsolver symbolic differentiation + GPU | Avoid manual derivation errors, leverage hardware acceleration |

By reasonably selecting tools and optimization strategies, developers can achieve efficient solutions from rapid validation to production deployment in C++. In practical projects, it is recommended to prioritize mature libraries and then customize optimizations for bottlenecks to balance development efficiency and runtime performance.
