Authors: Li Xin Ma, Xin Cheng Tian

Abstract:

Autonomous intelligent unmanned systems are one of the important application scenarios of artificial intelligence, and autonomy and intelligence are their two most important characteristics. By reasonably and effectively integrating the disciplines of control engineering and software engineering, this paper studies the adaptive structural model, operational mechanisms, learning methods, and adaptive strategies of software systems, in an attempt to find an engineering development method, based on adaptive software systems, for achieving the autonomy and intelligence of autonomous intelligent unmanned systems.

Keywords:

Autonomous Intelligent Systems; Software Adaptation; Adaptive Software Systems

1 Overview of Autonomous Intelligent Systems

Intelligent systems are generally considered to be computer systems that can produce human-like intelligent behavior. They can operate autonomously and adaptively on traditional von Neumann architecture computers as well as on new-generation non-von Neumann architecture computers. The meaning of “intelligence” is broad and its essence remains to be explored, so it is difficult to give a complete and precise definition of the term. A common current view holds that intelligence is a manifestation of the higher-level activities of the human brain and should at least include the ability to automatically acquire and apply knowledge, the ability to think and reason, the ability to solve problems, and the ability to learn autonomously.

Autonomous intelligent unmanned systems are complex systems that integrate technologies such as mechanics, control, computing, communication, and materials, and artificial intelligence is undoubtedly one of the key technologies for developing them. Applying artificial intelligence techniques such as image recognition (intelligent perception), human-computer interaction, cognition-based learning and reasoning, intelligent decision-making, and autonomous control is an effective way to achieve, and continually improve, autonomy and intelligence, the two most important characteristics of these systems. Advances in these techniques enable humans to build unmanned systems with higher levels of autonomy and intelligence, and in some respects such systems may approach human intelligence in the future. Research on autonomous intelligent unmanned systems has therefore become a new focus of international competition in artificial intelligence and a new driving force for the new generation of information technology, and its research and application will have a significant impact on social development and human life.

As early as the beginning of this century, the U.S. Department of Defense published multiple versions of the “U.S. Unmanned Aerial Vehicle Roadmap,” providing an overall strategic guide for the development of unmanned systems. In August 2018, the Department released the “Unmanned Systems Integrated Roadmap (2017-2042),” the 8th edition of its comprehensive roadmap for unmanned aerial vehicles and unmanned systems published since 2001. It aims to guide the comprehensive development of military unmanned aerial vehicles, unmanned underwater vehicles, unmanned surface vessels, unmanned ground vehicles, and other unmanned systems, and it reflects the trend toward intelligence, collaboration, and safety in unmanned systems. In 2016, the European Union launched the “Horizon 2020” robotics program, investing nearly 100 million euros in the development and application of robotics. Meanwhile, China has also rapidly entered the era of intelligent unmanned systems. On July 8, 2017, the State Council issued the “New Generation Artificial Intelligence Development Plan,” which called for significant progress in foundational theories and core technologies such as data intelligence, cross-media intelligence, collective intelligence, hybrid-augmented intelligence, and autonomous intelligent systems. The 3rd Global Unmanned Systems Conference was held in Zhuhai on November 22, 2020, where more than 80 domestic and international industry guests, over 500 enterprises, and more than 700 professionals gathered to discuss development trends and key issues in global unmanned systems. It is clear that national and industry authorities attach great importance to the research and development of intelligent unmanned systems.

2 Autonomous, Intelligent, and Adaptive Software Systems

2.1 Limitations and Vulnerabilities of Traditional Automation Systems

Autonomous intelligent unmanned systems have evolved from traditional automation systems, which perform target tasks as programmed, with consistency, reliability, and predictability as premises. The challenge is that such programmed actions generally suit only constrained situations (i.e., situations anticipated by designers and programmed accordingly by software developers), and they are limited to the measurements of a limited set of sensors used to perceive and understand the environment. In other words, traditional automation systems can complete target tasks within the scope of their design and programming but require human intervention to handle situations outside that scope. The “automation” in automation systems is thus based on pre-programmed tasks; it lacks autonomous learning and decision-making capabilities, let alone adaptive capabilities. Intelligent decision-making, one of the key supporting technologies of autonomous intelligent unmanned systems, requires exactly these capabilities: it must precisely perceive and accurately understand (e.g., recognize and classify) detected targets, and at the same time establish situational relationships and, more importantly, system goals. This is challenging for intelligent systems, especially when they encounter unexpected objects, events, or situations that lie outside the pre-designed scope. Therefore, the “autonomy” of autonomous intelligent unmanned systems is knowledge-driven, and “autonomy and intelligence” refer to the dynamic process of “perception-analysis-reasoning-decision-making (control)-execution” that can respond to unpredictable emergencies.

2.2 Characteristics of Autonomy

Autonomy and intelligence are concepts of different categories. Autonomy refers to the manner of behavior: a behavior completed through self-decision is termed “autonomous.” Intelligence refers to the ability to carry out the behavioral process, that is, whether the methods, strategies, and approaches used conform to natural laws or to the behavioral rules of humans (or of certain groups), so that reasonable “paths and methods” for completing a task are found in a constantly changing environment. Intelligence is therefore hierarchical and graded, and grading it naturally requires a frame of reference.

Autonomy is the foundation, and intelligence is an extension and upgrade of it; the two complement each other, and intelligence can in turn “enhance” autonomy. The level of intelligence depends on the level of autonomy: intelligence combines autonomy with knowledge and its application. Intelligence is generally generated on the basis of autonomy by comprehensively using capabilities such as scope of authority, proactive perception, data fusion, self-learning, cognitive understanding, and iterative optimization: proactively perceiving and extracting information; accumulating, summarizing, and applying knowledge; inductively refining characteristics; and enhancing the knowledge structure so as to reach objectives that conform to natural laws.

Intelligence is relative, with different “individuals” exhibiting differences in intelligence. These differences arise both from the intelligence endowed at the time of “birth” and from the intelligence acquired through postnatal learning and improvement.

2.3 Automation and Autonomy of Autonomous Intelligent Unmanned Systems

In autonomous intelligent unmanned combat systems, “autonomy” typically refers to “performing tasks with a higher level of automation under broader combat conditions and environmental factors, and for more diverse tasks or actions, using more sensors and more complex software.” Autonomy is usually reflected in the degree to which a system completes task objectives independently. In other words, an autonomous system must be able to handle, and ideally eliminate, external interference under highly uncertain environmental conditions; repair system faults or resolve problems caused by that interference even when communication is absent or poor; and keep operating well over an extended period.

Achieving autonomy requires a set of intelligence-driven capabilities that enable the system to respond to situations not planned or predicted in its design (i.e., decision-based responses). Autonomous systems should achieve a certain degree of self-management and self-guidance. In software design, this means relying not only on computational logic (commonly called “rule-based” design) but also on computational intelligence (such as fuzzy logic, neural networks, and Bayesian networks), with goals achieved (tasks completed) through communication and collaboration among intelligent systems. Furthermore, learning algorithms enable self-learning, so that intelligent systems can adapt to dynamic environments. Autonomy can thus be seen as an important, intelligence-driven extension and upgrade of automation, capable of successfully executing high-level, task-oriented instructions in a variety of unpredictable environments (conditions). In this sense, autonomy can also be understood as well-designed automation with a high level of intelligence.
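To make the contrast between rule-based logic and computational intelligence concrete, the following is a minimal Python sketch assuming a hypothetical obstacle-avoidance speed command; the distance thresholds, membership shapes, and speed values are illustrative assumptions, not taken from the paper. The crisp rule switches abruptly at one threshold, while the fuzzy version blends two rules by their degrees of membership (a zero-order Sugeno-style weighted average).

```python
def rule_based_speed(distance_m: float) -> float:
    """Crisp rule (assumed threshold): stop below 10 m, otherwise cruise at 5 m/s."""
    return 0.0 if distance_m < 10.0 else 5.0


def near_degree(distance_m: float) -> float:
    """Membership of 'near': 1 at or below 5 m, 0 at or above 15 m, linear in between."""
    if distance_m <= 5.0:
        return 1.0
    if distance_m >= 15.0:
        return 0.0
    return (15.0 - distance_m) / 10.0


def fuzzy_speed(distance_m: float) -> float:
    """Two fuzzy rules combined as a weighted average of their outputs.

    IF distance is near THEN speed = 0 m/s
    IF distance is far  THEN speed = 5 m/s
    """
    near = near_degree(distance_m)
    far = 1.0 - near  # 'far' is assumed to be the complement of 'near'
    rules = [(near, 0.0), (far, 5.0)]
    total = sum(weight for weight, _ in rules)
    return sum(weight * speed for weight, speed in rules) / total


if __name__ == "__main__":
    for d in (4.0, 9.9, 10.0, 12.0, 20.0):
        print(d, rule_based_speed(d), round(fuzzy_speed(d), 2))
```

Around 10 m the crisp rule jumps from 0 to 5 m/s, whereas the fuzzy version changes the speed gradually (2.5 m/s at exactly 10 m), which is one reason computational intelligence copes better with situations between the cases a designer enumerated.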

2.4 Autonomy and Adaptive Systems

Any deeper discussion of how “autonomy” is realized must address adaptability and adaptive systems. As mentioned above, to achieve autonomy a system must be able to learn by itself and adapt to dynamic environments (highly uncertain conditions). Adaptive systems change their behavior in response to changes in environmental conditions; that is, they can modify their internal rules based on external stimuli. They exhibit high levels of perception, adaptability, and automation: they monitor their own state and correct and improve themselves when the environment changes or errors arise, typically using feedback control to modify or reorganize their own programs or subsystems.

Current adaptive software systems can collect real-time information on changes in the system and its environment during operation and, when necessary, automatically adjust their structure or behavior according to pre-established strategies, so as to better adapt to changes in the external environment and in requirements. Such adjustments, guided by control theory, can target different aspects of the software system (e.g., attributes, components). The adaptation of an adaptive software system is viewed as a closed-loop feedback process comprising four basic activities: monitoring, to acquire raw change data; discovery, to analyze the data; decision-making, to determine how to adjust; and implementation, to execute the adjustment actions. Adaptability of software systems thus manifests at two levels: the adaptability of individual software entities and the adaptability of the structure formed by those entities; that is, both the adaptive software entities and the overall system modify their behavior or change their structure to meet functional and quality requirements. Adaptive software systems have four characteristics: 1) An open resident environment. Resources in the environment change continuously, typically without defined boundaries. 2) Sensitivity to change. The system can perceive environmental changes and respond to relevant events occurring in the environment. 3) System dynamics. The system operates through continual interaction and change between itself and its environment. 4) Correct and effective assessment. System changes must be assessed correctly and effectively to determine whether the system meets its predefined goals.
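The four activities of the closed loop can be sketched as follows. This is a minimal Python illustration under assumed conditions: a hypothetical managed component exposes one adjustable attribute, `quality`, and a toy latency model (latency grows with quality and shrinks with bandwidth). The names, the 100 ms goal, and the step-by-one decision policy are illustrative assumptions rather than the authors' design.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ManagedSystem:
    """Hypothetical target software with one adjustable attribute."""
    quality: int = 3  # 1 (lowest) .. 5 (highest)

    def latency_ms(self, bandwidth: float) -> float:
        # Toy model (assumption): latency rises with quality, falls with bandwidth.
        return 100.0 * self.quality / bandwidth


def monitor(system: ManagedSystem, bandwidth: float) -> Dict[str, float]:
    """Monitoring: acquire raw change data from the running system."""
    return {"latency_ms": system.latency_ms(bandwidth)}


def discover(data: Dict[str, float], goal_ms: float) -> str:
    """Discovery: analyze the data against the adaptation goal."""
    latency = data["latency_ms"]
    if latency > goal_ms:
        return "too_slow"
    if latency < 0.5 * goal_ms:
        return "headroom"
    return "ok"


def decide(symptom: str, quality: int) -> int:
    """Decision-making: choose the new attribute value (one step at a time)."""
    if symptom == "too_slow":
        return max(1, quality - 1)
    if symptom == "headroom":
        return min(5, quality + 1)
    return quality


def implement(system: ManagedSystem, new_quality: int) -> None:
    """Implementation: apply the adjustment to the running system."""
    system.quality = new_quality


def adaptation_loop(system: ManagedSystem, bandwidth_trace: List[float],
                    goal_ms: float = 100.0) -> List[int]:
    """Run one monitor-discover-decide-implement cycle per bandwidth sample."""
    history = []
    for bandwidth in bandwidth_trace:
        data = monitor(system, bandwidth)
        symptom = discover(data, goal_ms)
        implement(system, decide(symptom, system.quality))
        history.append(system.quality)
    return history


print(adaptation_loop(ManagedSystem(), [10, 10, 2, 2, 2, 10]))
# [4, 5, 4, 3, 2, 3]
```

Keeping the four activities as separate functions mirrors the loop's structure: each stage can be replaced independently, for example swapping the threshold-based `decide` for a learned policy.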

Software adaptability can therefore be defined both from the perspective of improving functionality and performance and from the perspective of responding to environmental changes. Software adaptation is generally regarded not only as a capability attribute of a software system but also as a process with typical dynamic and purposeful characteristics: it is the capability of a software system to adjust itself and dynamically approach its adaptation goals despite disturbances caused by environmental changes. In the temporal dimension, the adaptation process is a continuous iterative cycle that coexists with the operation of the software system itself. Achieving software adaptation requires supporting software facilities: facilities for perceiving and recognizing changes, facilities for making adaptation decisions based on those changes, and execution facilities that apply the decisions to the target software system. It should be emphasized that, unlike traditional automation control software, which targets physical systems in the objective world, adaptive software systems focus on the software itself, adjusting and refining their own elements (such as software states, method calls, and architecture).

3 Limitations and Prospects of Adaptive Software Systems

Although control theory-based software adaptation has been researched extensively, most work has adopted linear or approximately linear methods, which exposes shortcomings when systems operate in dynamic, open, and variable environments with nonlinear characteristics. How to effectively evaluate, analyze, and control the nonlinear dynamics of software adaptation remains unresolved. The main problems are the difficulty of building precise models of software systems with nonlinear characteristics, an overemphasis on control-strategy design, insufficient consideration of the uncertainty of software adaptation, and a lack of research on, and refinement of, effective software methodologies.
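The limitation of linear designs can be illustrated with a discrete integral controller, a common control-theory pattern for adjusting a software knob (for example, an admission rate) so that a measured metric tracks a target. In this minimal sketch, assumed for illustration, the gain `K_I` is tuned on a linear model with static gain 0.01, which gives a loop gain of 0.5 and guarantees convergence for that model. The hypothetical `nonlinear_plant` matches the model below u = 60 but is five times steeper above it; the same controller then settles into a sustained oscillation instead of converging.

```python
from typing import Callable, List


def run_integral_controller(plant: Callable[[float], float], setpoint: float,
                            k_i: float, u0: float, steps: int) -> List[float]:
    """Discrete integral control u[k+1] = u[k] + k_i * (setpoint - y[k]).

    `plant` maps the software knob u to the measured metric y.
    Returns the tracking error observed at each step.
    """
    u, errors = u0, []
    for _ in range(steps):
        y = plant(u)          # measure the controlled metric
        e = setpoint - y      # tracking error
        errors.append(e)
        u = u + k_i * e       # integral action accumulates the error into the knob
    return errors


def linear_plant(u: float) -> float:
    """The identified linear model: y = 0.01 * u."""
    return 0.01 * u


def nonlinear_plant(u: float) -> float:
    """Hypothetical real behavior: matches the model below u = 60, five times steeper above."""
    return 0.01 * u if u <= 60.0 else 0.6 + 0.05 * (u - 60.0)


K_I = 50.0  # tuned on the linear model: loop gain 0.01 * 50 = 0.5, inside (0, 2)

linear_errors = run_integral_controller(linear_plant, 0.7, K_I, u0=10.0, steps=40)
nonlinear_errors = run_integral_controller(nonlinear_plant, 0.7, K_I, u0=10.0, steps=40)
print(abs(linear_errors[-1]) < 1e-6)                       # True: converges
print(max(abs(e) for e in nonlinear_errors[-10:]) > 0.1)   # True: keeps oscillating
```

With the linear model the error halves at every step. In the steeper region the effective loop gain is 0.05 × 50 = 2.5, outside the stable range, so the loop ends up alternating around the setpoint with an error of roughly ±0.11.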

From a different angle, we can explore how to study software system adaptability more deeply on the basis of a reasonable and effective integration of control engineering and software engineering. This raises three key issues: 1) systematic software methodology; 2) handling and mastering the complexity and uncertainty of the software adaptation process; 3) engineering. These can be further decomposed into six aspects: 1) models and software methods for control theory-based adaptive software systems; 2) analysis and evaluation of the dynamic process of software adaptation; 3) handling uncertainty and fuzziness in software adaptation; 4) multi-loop collaborative software adaptation; 5) human-computer collaborative software adaptation; 6) development support environments and tools for control theory-based adaptive software systems.

4 Adaptive Mechanisms and Online Adaptive Decision-Making in Software

As mentioned above, “autonomy” implies that the system can achieve autonomous control based on self-decision. Autonomous control is generally understood as the ability of an autonomous control system to organically combine perception, decision-making, collaboration, and action without human intervention, to make its own decisions in unstructured environments according to given control strategies, and to continuously execute a series of control functions to complete predetermined tasks. In engineering applications, the intelligence of the control system, namely adaptation-based “intelligent control,” is a significant technical advantage and defining feature of autonomous control systems. Two problems generally need to be addressed in engineering practice: 1) How to design a reliable adaptive software system that can adjust to unpredictable and diverse changes or disturbances, thereby enhancing its robustness and flexibility; this is evidently a significant challenge. 2) Adaptive software systems typically must run continuously, yet offline decision-making is usually employed today: adjustments are made according to change descriptions and adaptive logic predefined by developers. Once the adaptation requirements change (e.g., an unexpected environmental change requires an adaptive response), the system’s adaptive logic must be redefined and the system stopped and reloaded, which is unacceptable because it interrupts operation frequently.
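The second problem can be illustrated with a minimal sketch of offline adaptive logic, in which the developer enumerates at design time which adjustment answers which anticipated change. The event and action names below are hypothetical. Any change missing from the table has no response until the logic is redefined and the system reloaded.

```python
# Offline adaptive logic: every (change -> adjustment) pair is fixed at design time.
# Event and action names are hypothetical examples.
OFFLINE_RULES = {
    "low_battery": "reduce_sensor_rate",
    "link_lost": "switch_to_autonomous_mode",
}


class NeedsRedesign(Exception):
    """Raised when a change was not anticipated by the offline rules."""


def offline_decide(event: str) -> str:
    """Look up the predefined adjustment for an anticipated change."""
    try:
        return OFFLINE_RULES[event]
    except KeyError:
        # No rule exists: developers must redefine the logic and reload the system.
        raise NeedsRedesign(event) from None


print(offline_decide("link_lost"))  # switch_to_autonomous_mode
try:
    offline_decide("gps_jamming")   # an unanticipated change
except NeedsRedesign as exc:
    print("no adaptive response for:", exc)
```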

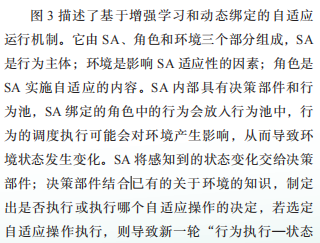

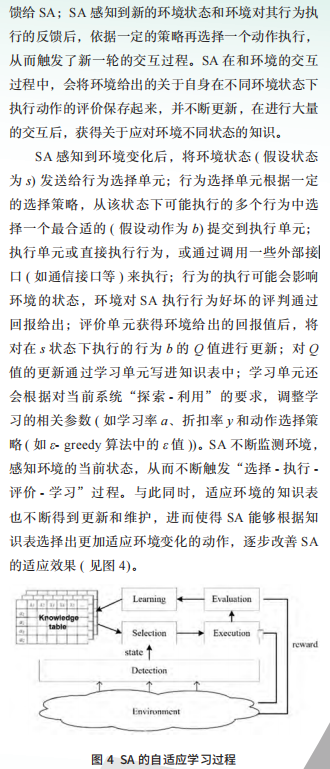

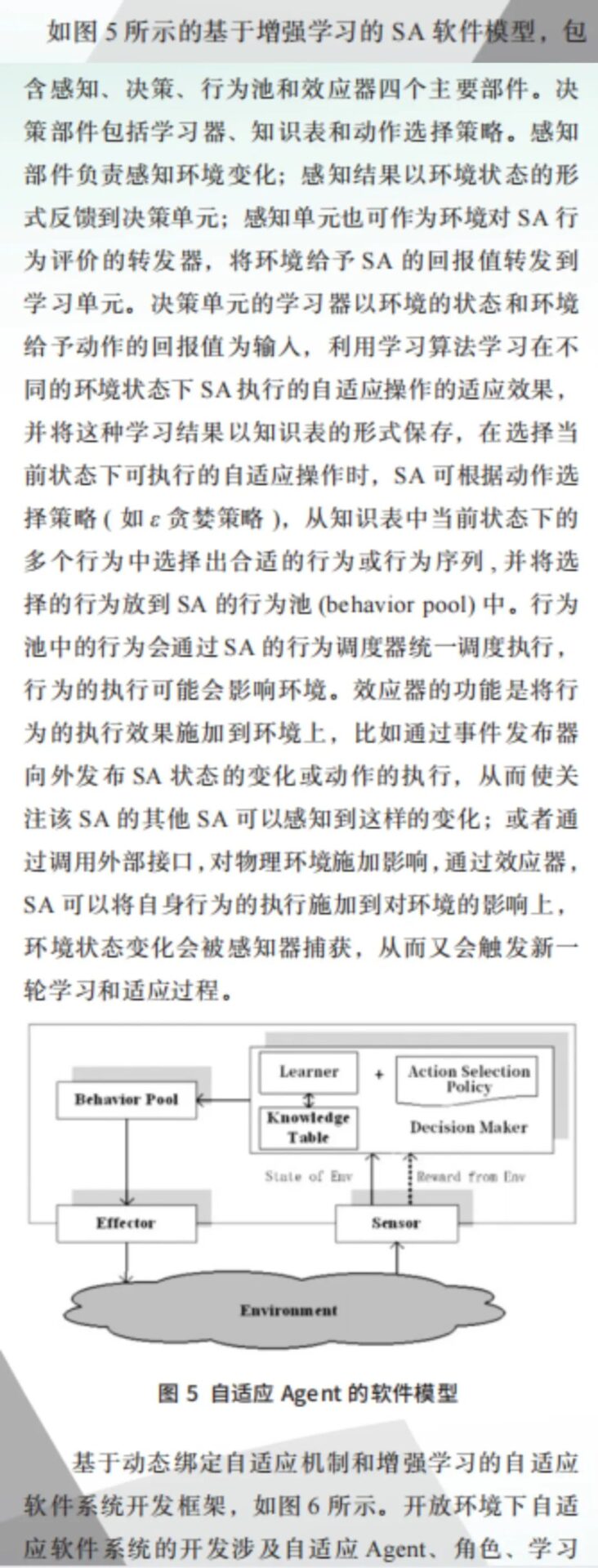

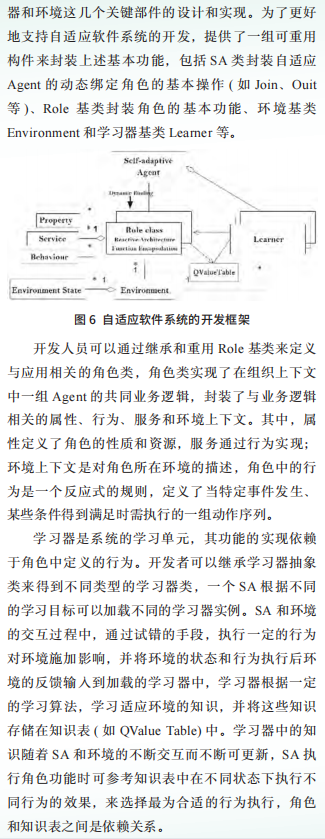

To address these issues and challenges, we attempt to move the adaptive logic, originally provided by developers in the design phase, into the operational phase, where the system itself completes it; adaptive decision-making likewise needs to move from offline to online. This means designing an adaptive operational mechanism based on an abstraction and description of adaptive software systems, and using reinforcement learning to respond to unforeseen changes (disturbances), thereby achieving adaptive decision-making while the system runs. The design roadmap is as follows: first, establish an abstract model of adaptive software systems; then, on this basis, design a dynamic binding adaptive mechanism and online adaptive decision-making methods (strategies) based on reinforcement learning and dynamic binding, so as to develop and put into practice an engineering development method for adaptive software systems.
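This section does not spell out the learning algorithm; as one hedged illustration of online adaptive decision-making with reinforcement learning, the sketch below uses standard tabular Q-learning with epsilon-greedy exploration. The two environment states, the two adaptive actions, the reward table, and the assumption that the environment changes state at random (independently of the chosen action) are all hypothetical. The point is that the system learns, while running, which adjustment to apply in each state, without developer-written adaptive rules.

```python
import random
from collections import defaultdict
from typing import DefaultDict, Tuple

STATES = ["normal", "congested"]
ACTIONS = ["high_quality", "low_quality"]
# Hypothetical reward for applying an adaptive action in an environment state.
REWARD = {
    ("normal", "high_quality"): 1.0,
    ("normal", "low_quality"): 0.3,
    ("congested", "high_quality"): -1.0,
    ("congested", "low_quality"): 0.5,
}

QTable = DefaultDict[Tuple[str, str], float]


def greedy(q: QTable, state: str) -> str:
    """Action with the highest estimated value in this state (first wins ties)."""
    return max(ACTIONS, key=lambda a: q[(state, a)])


def online_q_learning(steps: int = 5000, alpha: float = 0.1, gamma: float = 0.9,
                      epsilon: float = 0.2, seed: int = 0) -> QTable:
    """Learn adaptive decisions while 'running', one transition per step."""
    rng = random.Random(seed)
    q: QTable = defaultdict(float)  # Q(s, a), initialised to 0
    state = "normal"
    for _ in range(steps):
        # Epsilon-greedy: usually exploit, occasionally explore another adjustment.
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = greedy(q, state)
        reward = REWARD[(state, action)]
        next_state = rng.choice(STATES)  # assumed: environment changes at random
        # Q-learning update toward reward + discounted best next value.
        target = reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
        q[(state, action)] += alpha * (target - q[(state, action)])
        state = next_state
    return q


q = online_q_learning()
print({s: greedy(q, s) for s in STATES})
```

With these assumed rewards, the learned greedy policy keeps high quality in the normal state and degrades under congestion; in a real system the reward would have to be derived from the adaptation goals.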

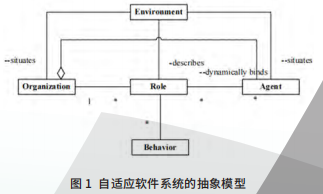

Because adaptive software systems explicitly exhibit the characteristics of social groups or team organizations, high-level abstractions and concepts (such as Role, Organization, and Rule) drawn from organizational and sociological perspectives can be used to study them. Figure 1 shows the adaptive system model based on agent and organization abstractions. An adaptive software system is viewed as a social organization whose individuals are abstracted as agents; both the organization and its agents reside in a specific environment and are therefore influenced by environmental changes. The organization has a set of roles that encapsulate its behaviors and resources, and individual agents exhibit different statuses and behaviors by playing different roles.

Organization refers to a collection of agents with common goals and interactions in a specific context, where the context defines the environment in which the organization resides. For systems with adaptive capabilities, environmental changes will impact the elements, structure, and behavior of the organization.

Agent refers to a behavioral entity that can autonomously and flexibly execute actions in a specific environment to meet design objectives. Agents become members of an organization by playing roles within it, thereby gaining status and resources. For certain agents, changes in their residing environment and internal conditions prompt them to adjust their own structures and behaviors; such agents are called self-adaptive agents (SA).

Role is an abstract representation of the environment, behavior, and resources an agent exhibits in the organizational context, and it reflects the agent’s status within the organization.

Environment abstractly represents the factors that influence the behavior or adjustments of the organization or agents. Environmental changes can be abstracted as the occurrence of relevant events in the environment. For open environments, changes are often dynamic, uncertain, and unpredictable in advance.
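The organization, agent, and environment abstractions above can be sketched as plain data structures. In this minimal Python sketch (an assumption, not the authors' implementation), an environmental change is represented as an `Event` that the `Environment` delivers to every agent of every organization residing in it; an ordinary `Agent` ignores it, whereas a `SelfAdaptiveAgent` reacts by adjusting itself (here only recorded in a log). Roles are kept as plain names for brevity, and the UAV-flavored names in the usage lines are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Event:
    """An environmental change, abstracted as the occurrence of an event."""
    kind: str
    data: Dict[str, float] = field(default_factory=dict)


@dataclass
class Agent:
    """A behavioral entity that belongs to an organization by playing roles."""
    name: str
    roles: List[str] = field(default_factory=list)  # role names only, for brevity

    def on_event(self, event: Event) -> None:
        """An ordinary agent does not adjust itself to environmental events."""


@dataclass
class SelfAdaptiveAgent(Agent):
    """An SA agent adjusts its own structure or behavior when its environment changes."""
    adaptations: List[str] = field(default_factory=list)

    def on_event(self, event: Event) -> None:
        # Placeholder adjustment: a real agent would change its roles or behavior here.
        self.adaptations.append(f"{self.name} adapts to {event.kind}")


@dataclass
class Organization:
    """A collection of agents with common goals, residing in one environment."""
    goal: str
    roles: List[str]
    members: List[Agent] = field(default_factory=list)


@dataclass
class Environment:
    """Delivers each event to every agent of every resident organization."""
    organizations: List[Organization] = field(default_factory=list)

    def emit(self, event: Event) -> None:
        for org in self.organizations:
            for agent in org.members:
                agent.on_event(event)


scout = SelfAdaptiveAgent("uav-1", roles=["scout"])
relay = Agent("uav-2", roles=["relay"])
survey = Organization(goal="area survey", roles=["scout", "relay"], members=[scout, relay])
Environment([survey]).emit(Event("wind_gust", {"speed_mps": 12.0}))
print(scout.adaptations)  # ['uav-1 adapts to wind_gust']
```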

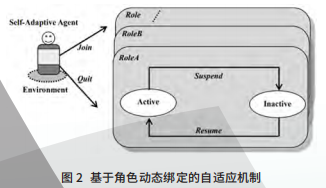

Agents can dynamically adjust the roles they play in the organization as their residing environment and internal conditions change, thereby “joining” or “quitting” an organization or changing their “position” within it, which reflects adaptability to changes in both their environment and themselves. Role adjustments are performed through four fundamental adaptive atomic operations: Join, Quit, Suspend, and Resume. Once an agent changes its role through these operations, its internal resources, attributes, and behaviors adjust accordingly. We call this adaptive mechanism the dynamic binding mechanism (see Figure 2).

Join. An agent can execute the adaptive action Join to join a role in the organization and acquire the structural and behavioral characteristics defined by that role, thereby changing its status within the organization, for example becoming a member of the organization or changing the role it plays. Once an agent joins a role, it is said to be bound to that role, and the structure and behavior defined by the role then constrain and influence the agent.

Quit. An agent can execute the adaptive action Quit to exit a role in the organization and lose the structural and behavioral characteristics defined by that role, thereby changing its status within the organization, for example no longer being a member of the organization or changing the role it plays.

A role bound to an agent is either Active or Inactive, and at any moment it is in exactly one of these two states.

Suspend. An agent can execute the adaptive action Suspend to change the state of a bound role from Active to Inactive. While a bound role is Inactive, it no longer constrains or guides the agent’s operation, but the agent can still access the structural information and internal attributes defined by the role.

Resume. An agent can execute the adaptive action Resume to change the state of a bound role from Inactive to Active. While a bound role is Active, it constrains the agent’s operation; for example, the agent chooses and executes actions based on the behaviors defined by the role.
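The four atomic operations and the two role states can be sketched as a small state machine. In this minimal Python sketch, assumed for illustration, a `Role` carries the behaviors it prescribes and the attributes it defines; only Active roles contribute actions the agent may choose, while an Inactive role's attributes remain readable, mirroring the Suspend and Resume descriptions. Two details are assumptions not stated in the text: a newly joined role starts Active, and an illegal transition (e.g., suspending a role that is not Active) raises an error.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Tuple


class RoleState(Enum):
    ACTIVE = "Active"
    INACTIVE = "Inactive"


@dataclass(frozen=True)
class Role:
    """A role: the behaviors it prescribes and the attributes it defines."""
    name: str
    behaviors: Tuple[str, ...]
    attributes: Tuple[str, ...] = ()


@dataclass
class Agent:
    name: str
    roles: Dict[str, Role] = field(default_factory=dict)        # bound roles
    states: Dict[str, RoleState] = field(default_factory=dict)  # state of each binding

    def join(self, role: Role) -> None:
        """Join: bind to the role (assumption: a new binding starts Active)."""
        self.roles[role.name] = role
        self.states[role.name] = RoleState.ACTIVE

    def quit(self, role_name: str) -> None:
        """Quit: unbind, losing the role's structure and behavior."""
        if role_name not in self.roles:
            raise ValueError(f"{self.name} is not bound to {role_name}")
        del self.roles[role_name]
        del self.states[role_name]

    def suspend(self, role_name: str) -> None:
        """Suspend: Active -> Inactive; the role stops constraining the agent."""
        self._transition(role_name, RoleState.ACTIVE, RoleState.INACTIVE)

    def resume(self, role_name: str) -> None:
        """Resume: Inactive -> Active; the role constrains the agent again."""
        self._transition(role_name, RoleState.INACTIVE, RoleState.ACTIVE)

    def _transition(self, role_name: str, expected: RoleState, new: RoleState) -> None:
        if self.states.get(role_name) is not expected:
            raise ValueError(f"{role_name} is not {expected.value}")
        self.states[role_name] = new

    def available_actions(self) -> List[str]:
        """Only Active roles guide which actions the agent may choose."""
        actions: List[str] = []
        for name, role in self.roles.items():
            if self.states[name] is RoleState.ACTIVE:
                actions.extend(role.behaviors)
        return actions

    def role_attributes(self, role_name: str) -> Tuple[str, ...]:
        """A bound role's attributes stay readable even while it is Inactive."""
        return self.roles[role_name].attributes


scout = Role("scout", behaviors=("patrol", "report"), attributes=("sensor_range",))
uav = Agent("uav-1")
uav.join(scout)
print(uav.available_actions())        # ['patrol', 'report']
uav.suspend("scout")
print(uav.available_actions())        # []
print(uav.role_attributes("scout"))   # ('sensor_range',)
uav.resume("scout")
uav.quit("scout")
print(uav.available_actions())        # []
```

Keeping the binding (`roles`) separate from its state (`states`) lets Suspend be undone by Resume without rebinding, whereas Quit removes both.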

5 Models and Development Framework of Adaptive Software Systems

6 Conclusion

In recent years, adaptive software systems, such as networked software in open, dynamic, and hard-to-control environments and software-intensive ultra-large-scale systems, have attracted significant attention from both academia and industry. Although research on adaptive software systems has a long history and has achieved numerous successes in many engineering fields, supporting their engineering development systematically and efficiently, across requirements, modeling, implementation, and verification, remains an important challenge, especially because the research is interdisciplinary, involving software engineering, control theory, decision theory, artificial intelligence, networking, and distributed computing. In software engineering, the engineering development of adaptive software systems is mostly supported by software architecture technology, component-based software engineering, aspect-oriented programming, middleware, and computational reflection.

(References omitted)

Selected from “Communications of the Chinese Association for Artificial Intelligence”

Volume 12, Issue 6, 2022

Special Issue on Intelligent System Design and Applications Empowered by Artificial Intelligence Technology