Introduction: When people talk about artificial intelligence, they usually think of machine vision; fingerprint, face, retina, iris, and palm-print recognition; automated planning; intelligent search; game theory; automated program design; intelligent control; and so on. At its core, however, much of this work is inseparable from embedded systems.

Not all AI processors are the same, though. In practice, many so-called AI engines are just traditional embedded processors with a vector processing unit (VPU) added. Several other capabilities are also crucial for processing at the front end of an AI system.

Optimizing Embedded System Workloads

In cloud-based processing, floating-point calculations are used for both training and inference to achieve maximum accuracy. Large server clusters still have to account for energy consumption and size, but compared with constrained edge devices their resources are almost unlimited.

On mobile devices, power, performance, and area (PPA) determine whether a design is feasible. More efficient fixed-point calculations are therefore preferred on embedded SoCs.

When a network is converted from floating point to fixed point, some precision is inevitably lost. With proper design, however, that loss can be minimized so that the results are nearly identical to those of the original trained network.
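To make the precision loss concrete, here is a minimal sketch of symmetric linear quantization, one common way to map floating-point weights onto fixed-width integers. It is an illustration only and not the conversion method of any particular tool; the function names and the sample weights are hypothetical.

```python
def quantize(values, bits):
    """Map floats to signed integers of the given width (symmetric, per-tensor scale)."""
    qmax = 2 ** (bits - 1) - 1                  # 127 for 8-bit, 32767 for 16-bit
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the representable range.
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the integer representation."""
    return [x * scale for x in q]


def max_abs_error(values, bits):
    """Largest absolute difference between the original and round-tripped values."""
    q, scale = quantize(values, bits)
    restored = dequantize(q, scale)
    return max(abs(a - b) for a, b in zip(values, restored))


# Hypothetical weights, chosen only for illustration.
weights = [0.82, -0.41, 0.003, -1.27, 0.56]
err8 = max_abs_error(weights, 8)
err16 = max_abs_error(weights, 16)
print(f"8-bit max error:  {err8:.6f}")
print(f"16-bit max error: {err16:.6f}")
```

In this scheme the rounding error per value is bounded by half of one quantization step, so the wider integer format gives a much smaller worst-case error at the cost of twice the storage and bandwidth.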

One method to control precision is to choose between 8-bit and 16-bit integer precision. While 8-bit precision can save bandwidth and computational resources, many commercial neural networks still require 16-bit precision to ensure accuracy.

Each layer of a neural network has different constraints and redundancies, so selecting the most suitable precision for each layer is crucial.

For developers and SoC designers, a tool that automatically outputs an optimized graph compiler and executable, such as the CEVA Network Generator, offers a significant time-to-market advantage.

It is also important to keep the flexibility to choose the most suitable precision (8-bit or 16-bit) for each layer. Each layer can then balance precision against performance, and an efficient, accurate embedded inference network can be generated with one click.
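The per-layer choice can be pictured with a small sketch: try 8-bit first and fall back to 16-bit only when the quantization error of a layer exceeds a budget. This is an assumed, simplified heuristic and not how the CEVA tooling decides; the layer names, weights, and the `tolerance` value are all hypothetical.

```python
def quant_error(values, bits):
    """Worst-case round-trip error of symmetric quantization at the given width."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    if max_abs == 0:
        return 0.0
    scale = max_abs / qmax
    return max(abs(v - round(v / scale) * scale) for v in values)


def choose_bits(values, tolerance):
    """Prefer 8-bit; use 16-bit only if the 8-bit error exceeds the tolerance."""
    return 8 if quant_error(values, 8) <= tolerance else 16


# Hypothetical layers: one with a narrow value range, one with a wide range
# that also contains small values, which suffer most from a coarse step size.
layers = {
    "conv1": [0.5, -0.25, 0.75, -1.0],
    "fc_out": [12.0, 0.03, -0.02, 3.3],
}

plan = {name: choose_bits(w, tolerance=0.005) for name, w in layers.items()}
for name, bits in plan.items():
    print(f"{name}: {bits}-bit")
```

A real flow would normally judge accuracy on validation data for the whole network rather than on raw weight error, but the structure of the decision is the same: spend 16 bits only on the layers that need them.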

Dedicated Hardware for True AI Algorithm Processing

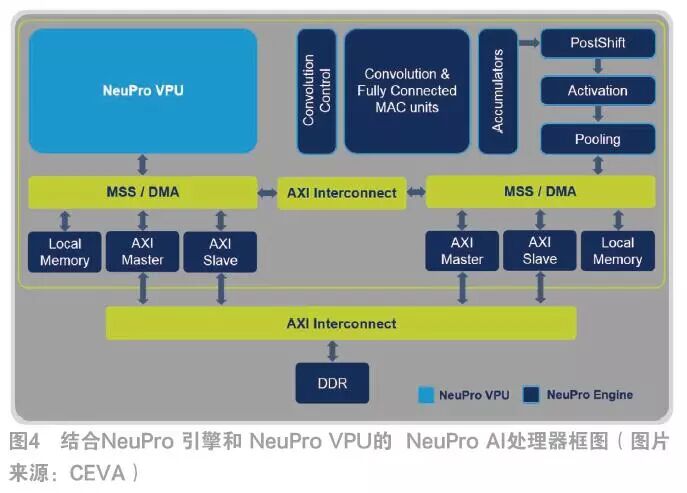

VPUs are flexible, but the large number of channels required by many common neural networks poses bandwidth challenges for standard processor instruction sets. Dedicated hardware is therefore needed to handle these complex computations.

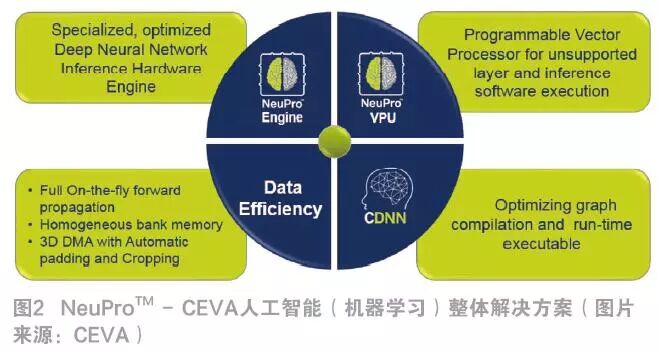

For example, the NeuPro AI processor includes dedicated engines for processing matrix multiplication, fully connected layers, activation layers, and pooling layers. This advanced dedicated AI engine, combined with the fully programmable NeuPro VPU, can support all other layer types and neural network topologies.

The direct connections between these modules allow data to pass seamlessly from one to the next, eliminating the need to write intermediate results to memory. In addition, optimized DDR bandwidth and advanced DMA controllers that use dynamic pipelining further increase speed while reducing power consumption.

Unknown AI Algorithms of Tomorrow

Artificial intelligence is still an emerging and rapidly developing field. The application scenarios for neural networks are multiplying quickly, including object recognition, voice and sound analysis, and 5G communication. Keeping the solution adaptable to future trends is the only way to ensure the success of a chip design.

Dedicated hardware that serves today's algorithms is therefore not enough; it must be paired with a fully programmable platform. Because algorithms keep improving, computer simulation is a key tool for making decisions based on actual results, and it shortens time to market.

The CDNN PC simulation package allows SoC designers to evaluate design trade-offs in a PC environment before developing real hardware.

Another valuable feature for meeting future demands is scalability. The NeuPro AI product family can be applied to a wide range of target markets, from lightweight IoT and wearable devices (2 TOPS) to high-performance industrial monitoring and autonomous driving applications (12.5 TOPS).
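As a rough guide to what such figures mean, peak TOPS (tera-operations per second) is conventionally estimated from the number of multiply-accumulate (MAC) units and the clock frequency, counting each MAC as two operations. The sketch below uses that convention; the MAC counts and the 1 GHz clock are hypothetical round numbers, not published specifications of any product.

```python
import math


def peak_tops(mac_units, clock_hz, ops_per_mac=2):
    """Theoretical peak throughput in TOPS; one MAC counts as multiply + add."""
    return mac_units * ops_per_mac * clock_hz / 1e12


def macs_needed(target_tops, clock_hz, ops_per_mac=2):
    """Smallest number of MAC units that reaches the target at the given clock."""
    return math.ceil(target_tops * 1e12 / (ops_per_mac * clock_hz))


# Hypothetical configuration: 1024 MAC units at 1 GHz.
print(peak_tops(1024, 1e9))

# MAC units needed at an assumed 1 GHz clock for the two ends of the range.
print(macs_needed(2, 1e9))
print(macs_needed(12.5, 1e9))
```

Peak numbers assume every MAC unit is busy on every cycle; sustained throughput on a real network depends on utilization and memory bandwidth.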

The race to put flagship AI processors into mobile devices has begun. Many vendors are quickly following the trend and using artificial intelligence as a selling point, but not all products offer the same level of intelligence.

If you want to create a smart device that remains “smart” in the ever-evolving field of artificial intelligence, you should ensure that you check all the features mentioned above when selecting an AI processor.

Disclaimer: This article is a network reprint, and the copyright belongs to the original author. If there are any copyright issues, please contact us, and we will confirm the copyright based on the materials you provide and pay for the manuscript or delete the content.