Edge computing is expanding worldwide, and demand for intelligent edge devices is surging in China under the “new infrastructure” initiative. Embedded processors are the core engine of this shift, and AMD and Intel are competing fiercely, with new products that integrate NPUs to target edge AI. This article compares the two vendors’ product portfolios, performance tiers, AI potential, and ecosystems to help you understand the contest for edge computing and choose the right platform.

Decisive Edge: A Deep Dive into the Embedded Processor Showdown between AMD and Intel

Prologue: Edge Computing, Reshaping the Frontier of Intelligence

A technological wave known as “edge computing” is pushing computational intelligence from distant clouds to every device and corner around us with unprecedented force. This is not just a migration of technology but a profound transformation concerning efficiency, real-time capabilities, and intelligence, redefining factory production lines, urban traffic networks, retail experiences, and even every aspect of our lives.

Especially in China, this wave resonates with the booming digital economy, leading 5G networks, and grand national strategies. The call for “new infrastructure” and “East Data West Computing” provides fertile soil and a broad stage for the implementation of edge computing. From bustling streets to high-speed production lines, from rapidly changing financial markets to life-critical telemedicine, the demand for local real-time decision-making, intelligent analysis, and data security is experiencing explosive growth.

Core Engine: The Mission of Embedded Processors in This Era

At the heart of this exciting transformation, embedded processors play an irreplaceable role—they are the “heart” and “brain” driving edge intelligence. As the two giants in the x86 world, AMD and Intel have long set their sights on this promising battlefield, carefully laying out their strategies and launching a full range of embedded processing solutions from ultra-low power to server-level, marking the beginning of a contest for the future dominance of edge computing.

A further challenge is that edge devices must not only handle traditional tasks but are increasingly expected to offer strong AI capabilities, including efficiently running optimized large AI models (such as language models and multimodal models). This tests not only raw computing power but also power consumption control, memory access, and acceleration efficiency for specific algorithms (such as neural networks). These are exactly the areas where both manufacturers have focused their recent product iterations, and where their technological paths and strategic priorities diverge.

This article will take you deep into the core of this showdown, analyzing layer by layer the main product lines of AMD and Intel in the embedded and edge computing fields, focusing on:

- Product Layout and Lifecycle Commitment: Understanding how both parties divide their product lines and the long-term supply guarantees that are highly valued in industries such as manufacturing and healthcare.

- Performance Tier Strength Comparison: Examining representative models from entry-level to high-end in terms of CPU, graphics, intelligent processing (including NPU), and video processing (focusing on H.264/H.265) performance.

- Intelligent Potential of Graphics Cores: Exploring the acceleration mechanisms and application prospects of integrated graphics in AI inference.

- Challenges and Opportunities of Deploying Large Models at the Edge: Evaluating the capability boundaries of different processors in carrying optimized large models.

- Application Layering of Computing Power Demand: Discussing the specific computing power requirements of different intelligent application scenarios and corresponding selection ideas.

- Developer Ecosystem: Comparing the software toolchains, libraries, and framework support of both parties to see who can better empower developers.

- Unique Perspective of the Chinese Market: Gaining insights into the market landscape by combining local application characteristics and policies such as “Xinchuang”.

We aim to offer a reliable guide through the complexity of edge computing technology selection, with a clear structure, detailed data, and objective analysis.

Cornerstone: Strategic Layout and Long-term Commitment of Embedded Product Lines

For embedded systems that require long-term stable operation, the sustainable supply of processors is the cornerstone of project success. Both AMD and Intel understand this well and provide significant lifecycle guarantees for their embedded product lines.

- AMD’s Focus Strategy: AMD brands its embedded products explicitly under the “Embedded” label, in two main series. The Ryzen™ Embedded APUs integrate high-performance Zen architecture CPUs with Radeon graphics cores and range from the low-power R series through the mid-range V series to the latest 8000 series with an integrated NPU. The EPYC™ Embedded processors are derived from the server product line and target high-performance edge servers. AMD typically provides a 7 to 10-year supply commitment for its designated embedded products; the R2000 and 8000 series, for example, both carry a 10-year commitment, which gives confidence to industry customers that need long-term deployment and maintenance.

- Intel’s Extensive Coverage: Intel’s embedded product line is very broad. Confirming that a part belongs to the IoT or Embedded category usually requires checking for specific SKU suffixes (such as E, UE, HE) or consulting the official product database (Intel ARK). The range spans the ultra-low-power Atom® series, embedded versions of mainstream Core™ processors, Xeon® D SoCs with integrated networking and acceleration features, and the Xeon® E/W-1x00E series with server features and ECC support. Intel provides industry-leading 7 to 15-year supply cycles for its clearly designated IoT/embedded SKUs. However, the lifecycle commitment of each specific model must be verified during selection to avoid confusion with consumer-grade products.

Core Extraction: Long-term supply is a consensus for both parties serving the embedded market. AMD’s product line is relatively focused, while Intel offers a broader selection space, but users need to be more meticulous in distinguishing.

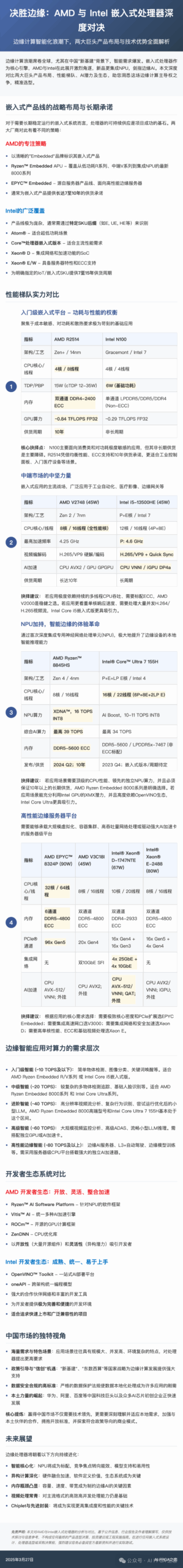

First Tier: Entry-level Embedded Platforms – Balancing Power Consumption and Performance

This tier focuses on cost-sensitive, power, and heat-sensitive basic applications, such as simple device control, information display, or network connectivity.

Parameter Specification Comparison (Typical Models: AMD R2514 vs. Intel N100)

| Indicator | AMD Ryzen™ Embedded R2514 | Intel® Processor N100 |
| --- | --- | --- |
| Architecture/Process | Zen+ / 14nm | Gracemont (Efficiency Core) / Intel 7 |
| CPU Cores / Threads | 4 Cores / 8 Threads | 4 Cores / 4 Threads |
| Frequency (Base/Boost) | 2.1 GHz / 3.5 GHz | 1.0 GHz / 3.4 GHz |
| Cache (L2+L3) | 2MB + 4MB | 2MB + 6MB |
| TDP/PBP | 15W (cTDP 12-35W) | 6W (Base Power) |
| Memory | Dual-channel DDR4-2400 ECC | Single-channel LPDDR5/DDR5/DDR4 (Non-ECC) |
| iGPU | Radeon™ Vega 6 (6 CU @ ~1.1GHz) | Intel® UHD Graphics (Xe-LP, 24 EU @ 0.75GHz) |
| Display Output | Up to 3x 4K | Up to 3x 4K |
| Video Codec | H.265/H.264/VP9 Hardware Decode/Encode | H.265/H.264/VP9 Hardware Decode/Encode |
| Intelligent Processing Acceleration | No NPU (CPU SIMD / GPU GPGPU) | No NPU (CPU VNNI / iGPU DP4a / GNA 3.0) |
| GPU Theoretical Performance | ~0.84 TFLOPS FP32 / ~6.7 TOPS INT8 | ~0.29 TFLOPS FP32 / ~1.2 TOPS INT8 (DP4a) |
| Release/Supply | 2022 Q2; 10 years[5] | 2023 Q1; Non-long cycle[9] |

Table 1: Entry-level embedded processor specification comparison.
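The GPU theoretical performance row in Table 1 can be approximated with a short calculation. The sketch below assumes 64 FP32 lanes per Radeon Vega compute unit, 8 FP32 lanes per Xe-LP execution unit, and 2 FLOPs per lane per clock (one fused multiply-add). The INT8 ratios (8x for Vega, 4x for DP4a) are inferred from the ratios in the table, not taken from vendor documentation, and the function names are illustrative.

```python
# Theoretical peak throughput: lanes x 2 FLOPs (one fused multiply-add) x clock.
FP32_LANES_PER_UNIT = {
    "gcn_cu": 64,  # Radeon Vega (GCN) compute unit: 64 FP32 lanes
    "xe_eu": 8,    # Intel Xe-LP execution unit: 8 FP32 lanes
}


def peak_fp32_tflops(units: int, unit_kind: str, clock_ghz: float) -> float:
    """Return theoretical FP32 TFLOPS for `units` compute units at `clock_ghz`."""
    lanes = units * FP32_LANES_PER_UNIT[unit_kind]
    return lanes * 2 * clock_ghz / 1000.0  # GFLOPS -> TFLOPS


def peak_int8_tops(fp32_tflops: float, int8_ratio: int) -> float:
    """Scale FP32 throughput by an assumed INT8-to-FP32 ratio."""
    return fp32_tflops * int8_ratio


vega6 = peak_fp32_tflops(6, "gcn_cu", 1.1)    # R2514 iGPU, clock from Table 1
uhd24 = peak_fp32_tflops(24, "xe_eu", 0.75)   # N100 iGPU, clock from Table 1

# Ratios below are assumptions inferred from the table, not vendor figures.
print(f"Vega 6:    {vega6:.2f} TFLOPS FP32, {peak_int8_tops(vega6, 8):.1f} TOPS INT8")
print(f"UHD 24 EU: {uhd24:.2f} TFLOPS FP32, {peak_int8_tops(uhd24, 4):.1f} TOPS INT8")
```

The results agree with the table to within rounding. These are peak figures at the listed clock; sustained throughput depends on memory bandwidth, thermals, and software.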

Core Competitiveness Analysis

In the entry-level market, power consumption and cost are often the primary considerations. The Intel N100, built on the Intel 7 process with a base power of only 6W, excels in energy efficiency, making it an ideal choice for compact, fanless designs. Its four Gracemont efficiency cores trail the competitor in single-core performance and multi-threading capability (4 threads versus 8), but they are sufficient for basic computing and control tasks.

In contrast, AMD R2514, based on the slightly older Zen+ architecture and 14nm process, has a TDP of 15W but offers stronger CPU multi-threading performance (4 cores and 8 threads) and significantly better GPU graphics and general computing capabilities (the FP32 performance of Radeon Vega 6 is nearly three times that of N100 UHD Graphics). This gives R2514 an advantage in scenarios requiring simultaneous handling of more tasks or better graphics rendering capabilities.

In terms of intelligent processing, neither is equipped with a dedicated NPU, resulting in very limited capabilities. N100 relies on CPU VNNI and GPU DP4a instructions for basic INT8 acceleration and has a GNA unit for specific low-power tasks. R2514 mainly depends on the general computing capabilities of the GPU.

For video processing (mainly looking at H.264/H.265), both have hardware encoding and decoding capabilities. N100, with its newer Xe-LP media engine, typically performs better in concurrent decoding efficiency and energy consumption, potentially supporting more simultaneous decodes of 1080p H.265 streams (such as 4-6 streams). R2514’s capabilities are relatively basic (possibly 2-4 streams).

In terms of platform features, R2514 supports dual-channel memory and ECC verification, making it more friendly for applications with high reliability requirements. N100 typically comes with single-channel non-ECC memory.

Key Decision Point: N100 primarily targets consumer-grade and extremely cost/power-sensitive lightweight applications, and its lack of long-term supply guarantee[9] is a major obstacle for its use in industrial-grade embedded systems. In contrast, R2514, with its balanced performance, ECC support, and most importantly, 10-year supply commitment[5], is more suitable for applications requiring long-term stable operation, such as industrial control panels, entry-level medical devices, and digital signage.
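The supply check behind this decision point can be written down as a small helper. This is a minimal sketch: it assumes the commitment is counted from the release quarter listed in Table 1, which may differ from how a vendor actually defines its availability window, and the project horizon `needed_until` is a hypothetical value.

```python
from typing import Optional, Tuple

Quarter = Tuple[int, int]  # (year, quarter), e.g. (2022, 2) for 2022 Q2


def supply_end(release: Quarter, supply_years: Optional[int]) -> Optional[Quarter]:
    """Last covered quarter, or None when no long-term commitment is published."""
    if supply_years is None:
        return None
    year, quarter = release
    return (year + supply_years, quarter)


def covers(release: Quarter, supply_years: Optional[int], needed_until: Quarter) -> bool:
    """True only if a published commitment reaches the quarter the project needs."""
    end = supply_end(release, supply_years)
    return end is not None and end >= needed_until


# Release quarters and commitments as listed in Table 1.
parts = {
    "AMD R2514": ((2022, 2), 10),
    "Intel N100": ((2023, 1), None),  # listed as non-long-cycle
}
needed_until = (2031, 4)  # hypothetical project requirement

for name, (release, years) in parts.items():
    print(name, supply_end(release, years), covers(release, years, needed_until))
```

A part without a published commitment is treated as not covering the requirement, which mirrors the conservative stance industrial projects usually take.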

Second Tier: The Backbone of the Mid-range Market (AMD V2000 vs. Intel Core 13th Gen Embedded)

This tier is the mainstream battlefield for embedded applications, widely used in industrial automation, medical imaging, edge gateways, digital signage, and mid-range video surveillance (NVR), requiring the best balance between performance, power consumption, functionality, and cost.

Parameter Specification Comparison (Typical Models: V2718 vs. i5-1345UE; V2748 vs. i5-13500HE)

| Indicator | AMD V2718 (15W) | Intel i5-1345UE (15W)[10] | AMD V2748 (45W) | Intel i5-13500HE (45W)[11] |
| --- | --- | --- | --- | --- |
| Architecture/Process | Zen 2 / 7nm | P+E Cores / Intel 7 | Zen 2 / 7nm | P+E Cores / Intel 7 |
| CPU Cores / Threads | 8 Cores / 16 Threads | 10 Cores / 12 Threads (2P+8E) | 8 Cores / 16 Threads | 12 Cores / 16 Threads (4P+8E) |
| Max Boost Frequency (GHz) | 4.15 | P: 4.6 / E: 3.4 | 4.25 | P: 4.6 / E: 3.5 |
| Cache (L3) | 8MB | 12MB | 8MB | 18MB |
| Memory | DDR4-3200 ECC | DDR5/LPDDR5x/DDR4 (Optional ECC)[10] | DDR4-3200 ECC | DDR5/LPDDR5x/DDR4 (Optional ECC)[11] |
| iGPU | Radeon™ Vega 7 (7 CU @ 1.6GHz) | Iris® Xe Graphics (80 EU @ 1.25GHz) | Radeon™ Vega 7 (7 CU @ 1.6GHz) | Iris® Xe Graphics (80 EU @ 1.3GHz) |
| Video Codec | H.265/VP9 Hardware Decode/Encode | H.265/VP9 Hardware Decode/Encode; Quick Sync | H.265/VP9 Hardware Decode/Encode | H.265/VP9 Hardware Decode/Encode; Quick Sync |
| Intelligent Processing Acceleration | No NPU (CPU AVX2 / GPU GPGPU) | No NPU (CPU VNNI / iGPU DP4a) | No NPU (CPU AVX2 / GPU GPGPU) | No NPU (CPU VNNI / iGPU DP4a) |
| GPU Theoretical Performance | ~1.43 TFLOPS FP32 / ~11.4 TOPS INT8 | ~1.6 TFLOPS FP32 / ~6.4 TOPS INT8 (DP4a) | ~1.43 TFLOPS FP32 / ~11.4 TOPS INT8 | ~1.66 TFLOPS FP32 / ~6.7 TOPS INT8 (DP4a) |
| TDP/PBP (W) | 15 (cTDP 10-25) | 15 (Max Turbo 55) | 45 (cTDP 35-54) | 45 (Max Turbo 95) |
| Supply Cycle | Up to 10 years[12] | Long Cycle[10] | Up to 10 years[12] | Long Cycle[11] |

Table 2: Mid-range embedded processor specification comparison.

Core Competitiveness Analysis

The competition in the mid-range market is more intense, with both sides sending out strong contenders. AMD V2000 series stands out with its up to 8 full-performance Zen 2 cores, excelling in applications requiring stable, high-intensity parallel processing capabilities, such as running multiple real-time control tasks or virtualization environments simultaneously.

Intel Core i5 Embedded Version (13th Gen UE/HE Series) adopts a hybrid architecture of performance cores and efficiency cores. The performance cores (P-core) provide leading single-thread performance, which is crucial for applications requiring quick responses to user inputs or executing complex instructions. The efficiency cores (E-core) optimize multi-tasking and power consumption under low loads, intelligently scheduled by Intel Thread Director technology.

In terms of intelligent processing, neither has integrated NPUs. The Intel platform has hardware acceleration advantages in INT8 inference due to CPU VNNI instructions and GPU DP4a instructions, and can easily achieve cross-device optimized deployment through the mature OpenVINO toolchain. Although the V2000’s theoretical INT8 performance is higher, it mainly relies on general computing units and requires deeper optimization through frameworks like ROCm to unleash its potential. For mid to low complexity AI tasks, both can perform adequately, but Intel may have an edge in usability.

Video processing capability (H.264/H.265) is a key differentiator between the two. Intel’s Quick Sync Video engine is known for handling high-concurrency H.264/H.265 workloads efficiently: it can typically decode or encode 16 or more 1080p streams simultaneously, significantly outperforming the VCN 2.0 engine in the AMD V2000 (which may handle 4-8 streams). This gives the Intel platform a natural advantage in applications such as multi-channel video surveillance (NVR), video analysis servers, and high-definition video conferencing terminals.

In terms of platform features, both provide long-term supply guarantees. The AMD V2000 comes standard with ECC support. The Intel Core i5 Embedded Version offers more flexible memory type support (DDR4/DDR5/LPDDR5x) and optional ECC[10, 11].

The Intel i5-1345UE and i5-13500HE are Intel’s mainstays for this market. They inherit the architecture and graphics core of Intel’s client platforms while adding the long-term supply, wide temperature range, and ECC features required for embedded applications, and they benefit from wide adoption through Intel’s vast ecosystem.

Decision Recommendation: If the application heavily relies on sustained multi-thread CPU throughput, requires standard ECC, and trusts AMD’s supply record, AMD V2000 is a robust choice (such as for industrial control, medical imaging). If the application values single-core response speed, needs to handle large numbers of concurrent H.264/H.265 video streams, or wishes to leverage the mature and user-friendly OpenVINO ecosystem for AI development, Intel Core i5 Embedded Version[10, 11] is more attractive (such as for high-performance IPC and multi-channel NVR).

Third Tier: NPU Empowerment, The Experience Revolution of Intelligent Edge (AMD R8000 vs. Intel Core Ultra)

This generation of processors marks the entry of edge computing into a new intelligent era. By integrating a dedicated neural processing unit (NPU) for the first time, they significantly enhance the local inference capabilities of edge devices and even make it possible to run small language models (SLMs) at the edge.

Parameter Specification Comparison (Typical Models: AMD 8845HS vs. Intel Core Ultra 7 155H)

| Indicator | AMD Ryzen™ Embedded 8845HS (Representative)[6] | Intel® Core™ Ultra 7 155H[13] |
| --- | --- | --- |
| Architecture/Process | Zen 4 / 4nm | P+E+LP E Cores / Intel 4 |
| CPU Cores / Threads | 8 Cores / 16 Threads | 16 Cores / 22 Threads (6P+8E+2LP E) |
| Frequency (Base/Boost, GHz) | 3.8 / 5.1 | P: 1.4/4.8, E: 0.9/3.8, LP E: 0.7/2.5 |
| Cache (L3) | 16MB | 24MB |
| TDP/PBP (W) | 45W (cTDP 35-54W) | 28W (Max Turbo 115W) |
| Memory | Dual-channel DDR5-5600 ECC | DDR5-5600 / LPDDR5x-7467 (Non-ECC Standard) |
| iGPU | Radeon™ 780M (RDNA 3, 12 CU @ 2.7GHz) | Intel® Arc™ Graphics (Xe-LPG, 8 Xe Cores @ 2.25GHz) |
| Video Codec | H.265/H.264/VP9/AV1 Hardware Decode/Encode (VCN 4.0) | H.265/H.264/VP9/AV1 Hardware Decode/Encode (Quick Sync) |
| Intelligent Processing (NPU) | XDNA™ (Ryzen™ AI), 16 TOPS INT8 | Intel® AI Boost, 10-11 TOPS INT8 |
| GPU Theoretical Performance | ~4.15 TFLOPS FP32 / INT8 TOPS (including Matrix Acceleration) | ~4.6 TFLOPS FP32 / ~18.4 TOPS INT8 (DP4a) / Up to ~73.6 TOPS INT8 (XMX Peak) |
| Comprehensive Intelligent Performance | Up to 39 TOPS (AMD Official) | Up to 34 TOPS (Intel Official) |
| IO | 20x PCIe® 4.0 | PCIe® 5.0 x8 + PCIe® 4.0 x12; Thunderbolt™ 4 |
| Release/Supply | 2024 Q2; 10 years[6] | 2023 Q4; Embedded Version/Supply Cycle TBD[13] |

Table 3: New Generation AI Edge Platform Specification Comparison.

Core Competitiveness Analysis

The composition of intelligent computing power is the core highlight of this generation of products. AMD Ryzen 8000 series features the XDNA NPU, providing leading 16 TOPS of independent computing power. Combined with its high-performance Zen 4 CPU and powerful RDNA 3 architecture GPU (including matrix acceleration units), AMD claims that the platform’s comprehensive AI computing power can reach up to 39 TOPS.

Intel Core Ultra adopts a different strategy. Its AI Boost NPU provides 10-11 TOPS of computing power. The CPU uses an innovative three-tier hybrid architecture for more refined power management. Its Arc GPU not only has strong graphics performance but also introduces the XMX matrix acceleration engine, with a theoretical peak INT8 performance that is extremely high. Although Intel officially announces a comprehensive AI computing power of about 34 TOPS (possibly based on contributions from NPU + CPU + GPU DP4a), the XMX potential of its GPU provides a higher ceiling for specific AI workloads. The mature OpenVINO toolchain is key to leveraging its heterogeneous computing capabilities.

For running small language models (SLMs) locally, both open up possibilities. Computing power in the tens of TOPS, combined with support for low-precision arithmetic, makes it feasible to run optimized models in the 3B-7B parameter range. However, this depends heavily on sufficient memory capacity (32GB or more is recommended) and bandwidth (DDR5/LPDDR5x), as well as on efficient model quantization and software stack optimization. These platforms are a starting point for exploring local SLMs, not the endpoint.
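To see why memory capacity and bandwidth matter so much here, a rough estimate helps. The sketch below computes the size of the weights alone for a given parameter count and quantization width, then derives a simple ceiling on token generation rate by assuming every generated token streams all weights from DRAM once. It assumes 64-bit (8-byte) channels as in dual-channel DDR5-5600, ignores the KV cache, activations, and compute limits, and uses illustrative function names; real throughput will be lower.

```python
def weight_footprint_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes)."""
    return params_billion * bits_per_weight / 8


def peak_bandwidth_gbs(channels: int, mega_transfers: int, bus_bytes: int = 8) -> float:
    """Theoretical DRAM bandwidth: channels x MT/s x bytes per transfer."""
    return channels * mega_transfers * bus_bytes / 1000


def decode_tokens_per_s_ceiling(bandwidth_gbs: float, footprint_gb: float) -> float:
    """Upper bound assuming every generated token reads all weights once."""
    return bandwidth_gbs / footprint_gb


bw = peak_bandwidth_gbs(channels=2, mega_transfers=5600)  # dual-channel DDR5-5600
print(f"Peak bandwidth: {bw:.1f} GB/s")

for params, bits in [(3, 4), (7, 4), (7, 8), (7, 16)]:
    size = weight_footprint_gb(params, bits)
    ceiling = decode_tokens_per_s_ceiling(bw, size)
    print(f"{params}B @ {bits}-bit: {size:.1f} GB weights, <= {ceiling:.0f} tokens/s")
```

Under these assumptions a 7B model at 4-bit needs about 3.5 GB for weights and is capped near 25 tokens per second, while the same model at 16-bit needs 14 GB and drops to single digits, which is why quantization is treated as a prerequisite rather than an optimization.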

In terms of video processing capability (H.264/H.265), both are equipped with extremely powerful latest hardware encoding and decoding engines, capable of easily handling ultra-high concurrency video streams (far exceeding 16 channels of 1080p H.265), providing ample data input capabilities for subsequent intelligent video analysis (IVA). At this point, the bottleneck shifts more towards the AI analysis computing power itself.

Intel Core Ultra 7 155H adopts advanced Foveros 3D packaging, integrating modules of different processes, reflecting its architectural innovation. However, the supply status and lifecycle commitment of its embedded version remain unknown, which is crucial for embedded projects requiring stable supply.

Decision Recommendation: If the application scenario requires top-tier CPU performance, leading independent NPU computing power, and a guaranteed 10-year supply commitment, the AMD Ryzen Embedded 8000 series is the clear choice (such as for high-end industrial vision and medical AI). If the application scenario can fully utilize the XMX potential of Intel’s GPU, values the refined power management of its CPU, and relies heavily on the OpenVINO ecosystem for rapid AI development and deployment, Intel Core Ultra (provided an embedded long-supply version is available) will be highly attractive (such as for smart retail and AI kiosks).

Fourth Tier: High-Performance Edge Server Platforms – The Ultimate Pursuit of Computing Power and Scalability

At the top of the edge computing pyramid, platforms capable of supporting large-scale virtualization, container clusters, high-throughput network processing, or driving powerful AI acceleration cards are required.

Parameter Specification Comparison (Typical Models)

| Indicator | AMD EPYC™ 8324P (Siena, 90W)[18] | AMD V3C18I (45W)[19] | Intel® Xeon® D-1747NTE (67W)[20] | Intel® Xeon® E-2488 (80W)[21] |
| --- | --- | --- | --- | --- |
| Architecture/Process | Zen 4c / 5nm | Zen 3 / 6nm | Ice Lake-D / 10nm ESF | Raptor Lake / Intel 7 |
| CPU Cores/Threads | 32 Cores / 64 Threads | 8 Cores / 16 Threads | 10 Cores / 20 Threads | 8 Cores / 16 Threads |
| Max Boost Frequency (GHz) | 3.0 | 3.8 | 3.0 | 5.6 |
| Cache (L3) | 64MB | 16MB | 15MB | 24MB |
| Memory | 6-channel DDR5-4800 ECC | Dual-channel DDR5-4800 ECC | Dual-channel DDR4-2933 ECC | Dual-channel DDR5-4800 ECC |
| iGPU | None | None | None | Intel® UHD Graphics 770 (32 EU) |
| Video Codec | No Hardware Acceleration | No Hardware Acceleration | No Hardware Acceleration | Quick Sync Video (H.265/VP9, etc.) |
| Intelligent Processing Acceleration | CPU AVX-512/VNNI; External | CPU AVX2; External | CPU AVX-512/VNNI; QAT; External | CPU AVX2/VNNI; iGPU; External |
| GPU Theoretical Performance | N/A | N/A | N/A | ~0.8 TFLOPS FP32 / ~3.2 TOPS INT8 (DP4a) |
| Integrated Networking | None | Dual 10GbE SFI | 4x 25GbE + 4x 10GbE | None |
| PCIe® Channels | 96x Gen5 | 20x Gen4 | 16x Gen4 + 16x Gen3 | 16x Gen5 + 4x Gen4 |
| TDP/PBP (W) | 90 (cTDP 70-100) | 45 (cTDP 35-54) | 67 | 80 |
| Supply Cycle | Long Cycle Embedded | Long Cycle Embedded | Long Cycle Embedded | Long Cycle Embedded |

Table 4: High-end embedded/edge server specification comparison.

Core Competitiveness Analysis

In this tier, the value of processors lies more in their platform capabilities rather than the integrated functions of a single chip.

- AMD EPYC Embedded 8000 (Siena)[18]: With its ultra-high core density (up to 64 cores Zen 4c), excellent energy efficiency, and design optimized for single-socket, it becomes an ideal platform for running large-scale virtualization/containerized environments, processing high-concurrency data streams (such as telecom DU/CU, CDN), or requiring strong CPU parallel computing capabilities (edge data analysis). Its 96 PCIe Gen5 channels provide unparalleled scalability for connecting numerous high-speed peripherals and AI acceleration cards.

- AMD Ryzen Embedded V3000[19]: With its unique integrated dual 10G network ports, it precisely targets the high-performance networking device market, such as enterprise-grade routers, firewalls, SD-WAN, and NAS. Its Zen 3 CPU performance is also powerful enough for these network-intensive applications.

- Intel Xeon D[20]: As a highly integrated network SoC, its core competitiveness lies in its built-in numerous high-speed network ports and strong QAT hardware acceleration engine (for encryption and compression), making it a powerful tool for building compact, high-performance network function virtualization (NFV) platforms, 5G edge devices, secure gateways, and storage controllers.

- Intel Xeon E[21]: More like a high-performance desktop CPU with server features (such as ECC), it has an extremely high single-core frequency, suitable for applications requiring quick responses. The integrated UHD Graphics and Quick Sync engine enable it to independently handle medium-scale video encoding/decoding tasks. It is a reliable choice for entry-level edge servers, industrial workstations, and medical imaging control.

In terms of intelligent processing, these processors mainly play the role of hosts. They provide a platform for external high-performance AI accelerators (GPU/ASIC/FPGA) through powerful CPU cores (supporting AVX-512, VNNI, etc.), large-capacity high-bandwidth memory, and rich PCIe channels. Running large LLMs or executing extremely complex AI inferences at the edge relies on this combination of “strong host CPU + independent AI acceleration card.” EPYC Embedded and Xeon Scalable Embedded provide the best scalability.
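The role of PCIe lanes in this host-plus-accelerator model can be made concrete with a rough budget. The sketch below uses the standard per-lane transfer rates for PCIe Gen3 to Gen5 (8, 16, and 32 GT/s with 128b/130b encoding) and the lane counts from Table 4. The x16 slot count is a simplification that ignores bifurcation rules and lanes reserved for storage or networking, and the function names are illustrative.

```python
GT_PER_S = {3: 8, 4: 16, 5: 32}  # raw transfer rate per lane by PCIe generation
ENCODING = 128 / 130             # 128b/130b line coding used by Gen3 and later


def lane_gbs(gen: int) -> float:
    """Usable one-direction bandwidth of a single lane in GB/s."""
    return GT_PER_S[gen] * ENCODING / 8


def platform_summary(lane_groups):
    """Return (aggregate GB/s per direction, number of full x16 links).

    lane_groups is a list of (generation, lane_count) pairs from the spec sheet.
    """
    total = sum(lane_gbs(gen) * lanes for gen, lanes in lane_groups)
    x16_links = sum(lanes // 16 for _, lanes in lane_groups)
    return total, x16_links


# Lane counts as listed in Table 4.
PLATFORMS = {
    "AMD EPYC 8324P": [(5, 96)],
    "AMD V3C18I": [(4, 20)],
    "Intel Xeon D-1747NTE": [(4, 16), (3, 16)],
    "Intel Xeon E-2488": [(5, 16), (4, 4)],
}

for name, groups in PLATFORMS.items():
    total, links = platform_summary(groups)
    print(f"{name}: {total:.1f} GB/s per direction, {links} x16 link(s)")
```

On these assumptions the EPYC platform can feed six full x16 Gen5 links, while the other three support one or two, which is the practical meaning of "scalability" when several accelerator cards are planned.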

For video processing (H.264/H.265), only Xeon E can rely on its built-in Quick Sync for hardware processing. The other three lack this capability and need to rely on CPU software decoding (which is inefficient) or external GPU/VPU cards.

Decision Recommendation: Choose based on the core needs of the application: for extreme core density and PCIe expansion, choose EPYC Embedded[18]; for integrated high-speed network ports, choose V3000[19]; for integrated networking and security acceleration, choose Xeon D[20]; for high single-core performance, ECC, and basic video processing, choose Xeon E[21].

Computing Power Ladder: The Hierarchical Demand for Computing Power in Edge Intelligent Applications and Selection Matching

Different edge intelligent applications have vastly different demands for AI computing power. Understanding this demand hierarchy helps us select processor platforms more accurately.

- Entry-level Intelligence (~10 TOPS and below): Suitable for simple object detection, image classification, keyword wake-up, basic sensor fusion, etc. Such tasks typically do not require dedicated NPUs. AMD Ryzen Embedded R/V series[5, 12] or Intel Core i5 Embedded Version[10, 11] can meet the needs relying on their GPU or CPU+GPU combination capabilities, with considerations for CPU performance, power consumption, and cost.

- Intermediate Intelligence (~20 TOPS): Can support more complex multi-object detection and tracking, basic facial recognition, medium-complexity industrial quality inspection, and intelligent analysis of a small number of high-definition video streams. Dedicated NPUs begin to show efficiency advantages. AMD Ryzen Embedded 8000 series[6] (utilizing its 16 TOPS NPU) and Intel Core Ultra series[13] (utilizing its 11 TOPS NPU + GPU/CPU) are ideal choices for this tier.

- Advanced Intelligence (~40 TOPS): Capable of handling higher resolution, more video stream analysis, more complex behavior recognition, entry-level robotic perception, or preliminary attempts to run optimized ultra-small LLMs. The mid to high-end models of AMD Ryzen Embedded 8000 series[6] (total computing power 39 TOPS) and Intel Core Ultra 7 155H[13] (total computing power 34 TOPS) are basically in this range, representing the current peak of single-chip embedded AI performance.

- High-level Intelligence (~60 TOPS): Can support large-scale video surveillance analysis, higher-level ADAS, smoother small LLM inference, etc. Currently, single embedded SoCs/APUs usually struggle to stably reach this level of computing power. Reaching it often requires using AMD Ryzen 8000 or Intel Core Ultra as the main controller, combined with a low to mid-range discrete GPU or AI acceleration card.

- High-performance Edge Intelligence (~80 TOPS and above): Aimed at high-performance edge AI servers, L3+ autonomous driving, edge model training, running medium to large LLMs, etc. This must use server-grade CPU platforms (such as AMD EPYC Embedded[18], Intel Xeon D/E/Scalable Embedded[20, 21]) as the host, equipped with one or more powerful independent AI accelerators (such as NVIDIA GPU, AMD Instinct, Intel Gaudi, etc.).
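The ladder above can be expressed as a simple lookup for first-pass platform screening. In this sketch the approximate tier values (10, 20, 40, 60 TOPS) are treated as hard ceilings, which is an assumption made for illustration; the article gives them only as rough magnitudes, and the platform descriptions are shortened from the text.

```python
from bisect import bisect_left

# Approximate tier values from the list above, treated here as upper bounds.
TIER_CEILINGS = [10, 20, 40, 60]

TIERS = [
    ("Entry-level", "CPU/iGPU only, e.g. Ryzen Embedded R/V or Core i5 embedded"),
    ("Intermediate", "SoC with NPU, e.g. Ryzen Embedded 8000 or Core Ultra"),
    ("Advanced", "upper NPU SoC models using NPU + GPU + CPU together"),
    ("High-level", "NPU SoC as host plus a low to mid-range discrete accelerator"),
    ("High-performance edge", "server-class host plus discrete AI accelerators"),
]


def match_tier(required_tops: float):
    """Return (tier name, platform class) for an estimated TOPS requirement."""
    if required_tops <= 0:
        raise ValueError("required_tops must be positive")
    return TIERS[bisect_left(TIER_CEILINGS, required_tops)]


for need in (8, 16, 39, 55, 120):
    name, platform = match_tier(need)
    print(f"{need:>4} TOPS -> {name}: {platform}")
```

A TOPS estimate alone is only a first filter; memory bandwidth, supply cycle, and toolchain fit still decide the final choice within a tier.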

Accurately matching computing power demands with processor capabilities is a key step in building successful edge intelligent solutions.

The Fuel of the Engine: The Importance of Developer Ecosystems

Powerful hardware requires easy-to-use, efficient software toolchains to drive it. The completeness of the developer ecosystem directly affects the development efficiency and final implementation of edge intelligent applications.

- AMD Developer Ecosystem (Open, Flexible, Integrated Acceleration): AMD is accelerating the integration of its CPU and GPU with the AI and FPGA capabilities brought by the acquisition of Xilinx, building an increasingly powerful heterogeneous computing platform. Its core software includes the Ryzen™ AI Software Platform for the NPU, the feature-rich Vitis™ AI platform, the open-source GPU computing framework ROCm™, and the CPU optimization library ZenDNN. The AMD ecosystem attracts developers with its openness (a large number of open-source components) and flexibility (heterogeneous potential of CPU+GPU+NPU+FPGA). Although the uniformity of the toolchain and certain aspects (such as ROCm support on APUs) are still being improved, its development speed and depth of technological combination are promising.

- Intel Developer Ecosystem (Mature, Unified, Easy to Use): Intel’s ecosystem is known for its high maturity, unity, and ease of use. The OpenVINO™ Toolkit serves as the core, providing a one-stop AI deployment process that can optimize and deploy models across Intel’s various hardware units (CPU, iGPU, NPU, GNA, FPGA), greatly simplifying development work. oneAPI aims to provide a unified programming model across architectures. Coupled with its vast partner network and rich development tools (such as VTune Profiler), Intel offers developers a complete and convenient development environment, especially suitable for projects pursuing rapid market entry and broad compatibility.

Core Extraction: Intel’s ecosystem is mature and unified, facilitating rapid development and deployment. AMD’s ecosystem is open and flexible, with great heterogeneous potential, suitable for deep customization. Which ecosystem to choose depends on the development team’s technology stack, project needs, and preference for the open-source community.

Rooted in China, Looking Globally: Unique Market Environment and Localization Strategies

In China, the world’s largest and fastest-growing edge computing market, factors beyond technology profoundly influence the competitive landscape.

- Massive Demand and Unique Scenarios: From large-scale video analysis in smart cities to precise control in the industrial internet, China’s application scenarios often feature large scale, high concurrency, and complex environments, placing higher demands on processor performance, stability, and customization capabilities.[1, 22]

- Policy Guidance and “Xinchuang” Opportunities: National strategies such as “new infrastructure” and “East Data West Computing” provide strong policy support for the development of edge computing. “Xinchuang” promotes the process of self-controllable key information infrastructure, which is both a challenge and an opportunity for international manufacturers to deepen local cooperation (joint ventures, licensing, etc.).

- High Standards for Data Security Compliance: Strict data protection regulations make data localization processing a necessity for many edge applications (especially those involving sensitive information), further promoting the development of edge intelligence and local model deployment.

- The Rise of Local Forces: Chinese tech giants such as Huawei, Alibaba, Baidu, and numerous AI chip startups are rapidly developing in the edge computing field, forming strong local competitiveness and increasingly diversified market choices.

Core Extraction: For AMD and Intel, winning the Chinese market requires not only technological leadership but also a deep understanding of and adaptation to local needs, strengthening cooperation with local partners, embracing open standards, and exploring business models that align with policy directions. The depth of localization and ecosystem building capabilities are crucial.

Final Chapter: Intelligence Without Boundaries, The Future Path of Edge Computing Processors

The competition between AMD and Intel in edge computing is undoubtedly an important engine of technological progress. This showdown is far from over, but it already reveals a clear picture of where edge processors are heading:

- 1. Intelligent Core: NPUs will become standard, with competition focusing on energy efficiency, model support, and ease of use.

- 2. Deepening Heterogeneous Computing: Hardware integration accelerates, software defines value, and ecosystems become key.

- 3. Memory Bottlenecks Highlighted: Capacity, speed, and bandwidth become key factors limiting edge AI (especially large models).

- 4. Video Processing Remains Evergreen: Efficient high-concurrency processing capabilities for mainstream formats such as H.264/H.265 remain fundamental.

- 5. Chinese Market Leading: China’s demand and policies profoundly influence global technology and market trends.

- 6. Precise Positioning and Long-term Commitment: Product lines become more segmented, and long-term supply commitments maintain trust in the industrial market.

Looking ahead, edge processors will continue to evolve towards higher energy efficiency, stronger heterogeneous integration (Chiplet/advanced packaging is key), smarter power management, faster interconnects (CXL, etc.), and more comprehensive built-in security. Software-defined and cloud-native technologies will further blur the boundaries between edge and cloud. In this journey towards intelligent everything, the innovative sparks of AMD, Intel, and all participants will jointly illuminate the brilliant future of edge computing.

Appendix: Explanation of Computing Power Calculation Methods

The processor computing power figures cited in this article, especially GPU and AI-related figures, are calculated and interpreted as follows:

- 1. GPU FP32 Theoretical Computing Power (TFLOPS): Typically calculated based on standard architecture formulas:

FP32 TFLOPS ≈ (Number of Compute Units, CUs/EUs) × (Number of Shaders/ALUs per Unit) × (GPU Frequency in GHz) × 2 ÷ 1000 (an FMA instruction counts as 2 operations; dividing by 1000 converts GFLOPS to TFLOPS)

This represents the theoretical peak performance of the GPU in 32-bit floating-point operations.

- 2. GPU INT8 Theoretical Computing Power (TOPS): INT8 (8-bit integer) is a commonly used precision for AI inference, and its computing power calculation depends on GPU architecture characteristics:

- • DP4a Instructions: Some architectures (such as Intel Xe-LP) support DP4a instructions, theoretically achieving 4 times the INT8 throughput compared to FP32 operations.

INT8 TOPS (DP4a) ≈ FP32 TFLOPS × 4

- • Matrix Acceleration Units: Newer architectures (such as Intel XMX, AMD WMMA) include dedicated matrix computation units that significantly raise INT8 (and even INT4) computing power, with theoretical peaks potentially reaching 16 times FP32 throughput or more.

INT8 TOPS (Matrix) ≈ FP32 TFLOPS × 16+

Actual performance gains depend on specific operations and optimizations.

- • General Computing Estimation: Older architectures that do not support dedicated INT8 instructions (such as AMD Vega) typically rely on general SIMD units for execution, which makes their INT8 computing power difficult to calculate accurately. This article may use FP32 TFLOPS × 8 as a rough estimate for reference.

- • The total computing power announced by manufacturers may be based on specific combinations and precisions, and the contribution of the GPU part may be based on DP4a paths or more conservative estimates, not necessarily reflecting the highest theoretical peak of matrix units.

- • Different manufacturers may have discrepancies in their calculation criteria.

- • This article prioritizes citing the latest total computing power data published by manufacturers and attempts to indicate its calculation scope.

When interpreting computing power data, it is essential to understand that there may be differences between theoretical peaks and actual application performance, which is also influenced by multiple factors such as memory bandwidth, software optimization, and model types.
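The estimation rules in this appendix can be sketched in a few lines of Python. This is only an illustrative sketch: the function names, the `INT8_MULTIPLIER` table, and the example GPU configuration (96 units, 8 ALUs per unit, 1.5 GHz) are assumptions made for demonstration and do not describe any specific AMD or Intel product. The product of units, ALUs, GHz, and 2 is in GFLOPS, so the sketch divides by 1000 to obtain TFLOPS, and it uses 16 as the lower bound of the "16+" matrix multiplier.

```python
"""Rough theoretical-peak estimates following the appendix formulas."""

# INT8-vs-FP32 multipliers taken from the appendix text.
# "matrix" uses 16, the lower end of the "16+" range; real hardware may differ.
INT8_MULTIPLIER = {
    "dp4a": 4,      # DP4a instruction path
    "matrix": 16,   # dedicated matrix units (lower-bound assumption)
    "generic": 8,   # rough estimate for architectures without INT8 instructions
}


def fp32_tflops(units: int, alus_per_unit: int, freq_ghz: float) -> float:
    """Theoretical FP32 peak in TFLOPS.

    units * alus_per_unit * freq_ghz * 2 yields GFLOPS (an FMA counts as
    two operations), so the result is divided by 1000.
    """
    gflops = units * alus_per_unit * freq_ghz * 2
    return gflops / 1000.0


def int8_tops(fp32: float, path: str) -> float:
    """Estimated INT8 peak in TOPS for a given execution path."""
    if path not in INT8_MULTIPLIER:
        raise ValueError(f"unknown INT8 path: {path!r}")
    return fp32 * INT8_MULTIPLIER[path]


if __name__ == "__main__":
    # Hypothetical GPU configuration, for illustration only.
    fp32 = fp32_tflops(units=96, alus_per_unit=8, freq_ghz=1.5)
    print(f"FP32 peak: {fp32:.3f} TFLOPS")
    for path in INT8_MULTIPLIER:
        print(f"INT8 ({path}): {int8_tops(fp32, path):.3f} TOPS")
```

For the hypothetical configuration, 96 × 8 × 1.5 × 2 gives 2304 GFLOPS, or about 2.3 TFLOPS of FP32. The INT8 figures then scale from that value by the chosen multiplier. As stressed above, these are theoretical peaks rather than measured application performance.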

Disclaimer:

The analysis and comparison of AMD and Intel embedded processors in this article are based on publicly available information, industry reports, and the author’s understanding, for technical discussion and informational reference only, and do not constitute any final product selection decisions, investment advice, or engineering implementation guidelines.

The specifications of the processors mentioned in the text (such as core/thread counts, frequencies, caches, TDP), performance indicators (such as GPU/NPU computing power estimates in TOPS), video encoding/decoding capabilities, memory support, interface types, and supply cycle commitments may change over time due to product iterations or manufacturer strategy adjustments. All computing power data (especially GPU INT8 TOPS and comprehensive AI TOPS) are theoretical peaks or estimates based on specific instructions/models, and actual application performance will be affected by load, software optimization, memory bandwidth, cooling, and other factors.

Before making any embedded system design, processor selection, or procurement decisions, you are strongly advised to:

- 1. Consult and verify the latest and most accurate product specifications (Datasheet), product databases (such as Intel ARK) and related technical documents published on the official websites of AMD and Intel.

- 2. Directly consult the chip manufacturers or their authorized agents (such as AMD agent Ketong) to confirm specific SKU models, supply status, lifecycle commitments, prices, and whether they meet the specific needs of the project (such as wide temperature, ECC support, etc.).

- 3. Conduct sufficient sample testing and performance validation in actual application scenarios.

For any direct or indirect consequences that may arise from reliance on, reference to, or improper use of the information in this article (including but not limited to selection errors, performance not meeting expectations, project delays, commercial losses, etc.), the author assumes no legal responsibility. Readers must make decisions based on independent judgment, prudent assessment, and thorough verification.

References:

- 1. IDC – IDC Forecasts Worldwide Edge Spending Will Reach $359 Billion in 2028, Press Release, Dec. 2024, https://www.idc.com/getdoc.jsp?containerId=prUS52587424, Summary: Forecasts global edge spending growth, reflecting the market’s immense potential.

- 2. China Academy of Information and Communications Technology (CAICT) – Relevant research reports and white papers, http://www.caict.ac.cn/kxyj/qwfb/bps/, Summary: Provides official data and analysis on the development of edge computing in China.

- 3. Gartner – Gartner Top 10 Strategic Technology Trends for 2024, Oct. 2023, https://www.gartner.com/en/articles/gartner-top-10-strategic-technology-trends-for-2024, Summary: Lists AI as a key technology trend, including edge AI and large model applications.

- 4. AMD – AMD Embedded Solutions, https://www.amd.com/en/products/embedded, Summary: Official overview of AMD’s embedded product line, mentioning long-term supply commitment framework.

- 5. AMD – AMD Ryzen™ Embedded R2000 Series Processors, Product Brief, https://www.amd.com/system/files/documents/ryzen-embedded-r2000-series-product-brief.pdf, Summary: R2000 series product manual, clearly stating a 10-year supply commitment.

- 6. AMD – AMD Ryzen™ Embedded 8000 Series, https://www.amd.com/en/products/embedded/ryzen-embedded-8000-series, Summary: Official page for the 8000 series, including NPU information and 10-year supply commitment.

- 7. Intel – Intel® Product Specifications, Intel ARK, https://ark.intel.com/, Summary: Intel’s official product database, where specific SKU specifications and supply information can be queried.

- 8. Intel – Intel® IoT Solutions, https://www.intel.cn/content/www/cn/zh/internet-of-things/overview.html, Summary: Overview of Intel IoT/embedded solutions, mentioning long lifecycle support.

- 9. Intel – Intel® Processor N100, Product Specifications, https://www.intel.cn/content/www/cn/zh/products/sku/231803/intel-processor-n100-6m-cache-up-to-3-40-ghz/specifications.html, Summary: N100 specification page, supply cycle information shows it is a non-embedded long cycle product.

- 10. Intel – Intel® Core™ i5-1345UE Processor, Product Specifications, https://www.intel.cn/content/www/cn/zh/products/sku/231994/intel-core-i51345ue-processor-12m-cache-up-to-4-60-ghz/specifications.html, Summary: i5-1345UE specifications, clearly marked as an embedded long cycle product, supporting optional ECC.

- 11. Intel – Intel® Core™ i5-13500HE Processor, Product Specifications, https://www.intel.cn/content/www/cn/zh/products/sku/231999/intel-core-i513500he-processor-18m-cache-up-to-4-60-ghz/specifications.html, Summary: i5-13500HE specifications, clearly marked as an embedded long cycle product, supporting optional ECC.

- 12. AMD – AMD Ryzen™ Embedded V2000 Series, https://www.amd.com/en/products/embedded/ryzen-embedded-v2000-series, Summary: Official page for the V2000 series, typically providing up to 10 years of lifecycle support.

- 13. Intel – Intel® Core™ Ultra 7 Processor 155H, Product Specifications, https://www.intel.cn/content/www/cn/zh/products/sku/236846/intel-core-ultra-7-processor-155h-24m-cache-up-to-4-80-ghz/specifications.html, Summary: Core Ultra 7 155H specification page, not clearly identified as an embedded version or long supply.

- 14. Microsoft – Introducing Phi-3: Redefining what’s possible with SLMs, Azure Blog, Apr. 2024, https://azure.microsoft.com/en-us/blog/introducing-phi-3-redefining-whats-possible-with-slms/, Summary: Introduces small language models (SLM) and their potential on edge devices.

- 15. Qualcomm – Getting personal (and productive) with on-device generative AI, OnQ Blog, Oct. 2023, https://www.qualcomm.com/news/onq/2023/10/getting-personal-with-on-device-ai, Summary: Discusses the applications and advantages of on-device generative AI.

- 16. Hugging Face – Optimum: Hardware acceleration for Transformers, Hugging Face Documentation, https://huggingface.co/docs/optimum/index, Summary: Provides tools for optimizing and accelerating Transformer models on edge hardware.

- 17. Intel – Intel® AI PC Acceleration Program, https://www.intel.cn/content/www/cn/zh/products/docs/processors/core-ultra/ai-pc-acceleration.html, Summary: Introduces Intel’s support for software and hardware ecosystem for on-device AI applications.

- 18. AMD – AMD EPYC™ Embedded 8324P, https://www.amd.com/en/products/embedded/epyc-series/amd-epyc-embedded-8324p, Summary: EPYC Embedded 8324P (Siena) specifications, emphasizing core density and PCIe.

- 19. AMD – AMD Ryzen™ Embedded V3C18I, https://www.amd.com/en/products/embedded/ryzen-series/amd-ryzen-embedded-v3c18i, Summary: V3C18I specifications, highlighting integrated dual 10G network ports.

- 20. Intel – Intel® Xeon® D-1747NTE Processor, Product Specifications, https://www.intel.cn/content/www/cn/zh/products/sku/217920/intel-xeon-d1747nte-processor-15m-cache-2-00-ghz/specifications.html, Summary: Xeon D-1747NTE specifications, emphasizing integrated networking and QAT.

- 21. Intel – Intel® Xeon® E-2488 Processor, Product Specifications, https://www.intel.cn/content/www/cn/zh/products/sku/236731/intel-xeon-e2488-processor-24m-cache-3-20-ghz/specifications.html, Summary: Xeon E-2488 specifications, emphasizing high frequency, ECC, and Quick Sync.

- 22. CCID Consulting – Relevant industry research reports, http://www.ccidconsulting.com/, Summary: Provides market analysis and demand insights for specific industries in China (such as industrial internet and smart cities).
