As large models develop, general-purpose AI keeps improving, and application patterns keep evolving with it: from simple prompt-based applications, to RAG (Retrieval-Augmented Generation), to AI Agents. AI Agents in particular attract a great deal of attention and are expected to become ubiquitous. Bill Gates has even claimed that the ultimate technology competition will revolve around developing the top AI agent. He said, “You will no longer search websites or Amazon…” The remark reflects his optimism about how much artificial intelligence will change human-computer interaction, and it acknowledges the important role AI Agents will play.

An AI Agent is a virtual assistant powered by artificial intelligence that helps achieve process automation, generate insights, and enhance efficiency. It can act as an employee or partner to help achieve goals set by humans.

A thermostat is a simple example of an agent: it adjusts the heating to reach a specific temperature at a specific time. It perceives the environment through a temperature sensor and a clock, and it acts through a switch, turning the heating on or off depending on the actual temperature or the time. With added AI capabilities, a thermostat can evolve into a more complex AI agent that learns from the habits of the people living in the house.

AI Agents can be categorized into different types according to how their actions affect their perceived intelligence and capabilities.

This article mainly introduces six different types of AI Agents, including:

- Simple reflex agents
- Model-based agents
- Goal-based agents
- Utility-based agents
- Learning agents
- Hierarchical agents

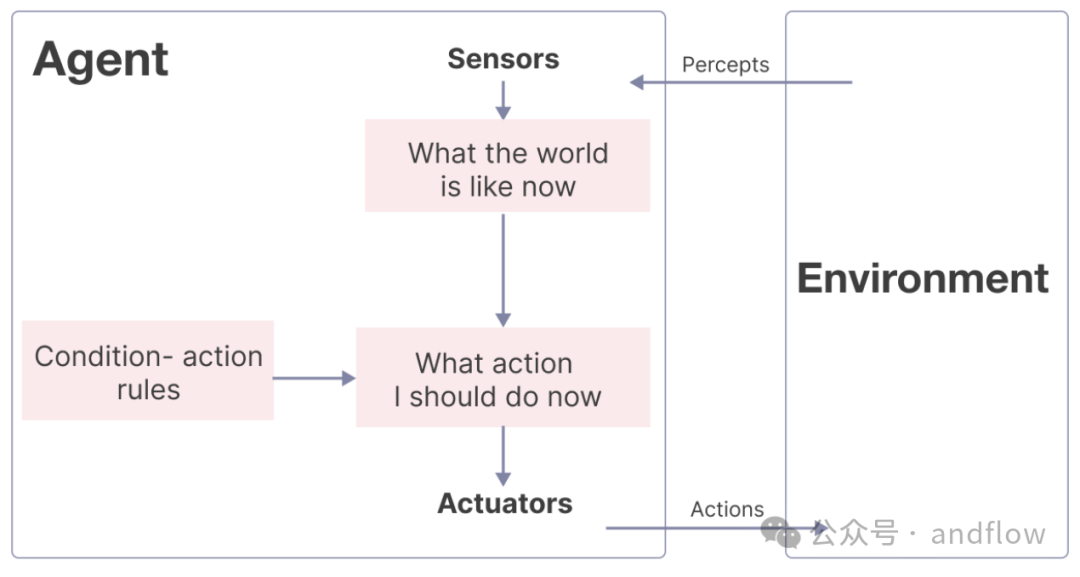

Simple Reflex Agents

Simple reflex agents are AI systems that can make decisions based on predefined rules. They only react to the current situation without considering past or future consequences.

Simple reflex agents are suitable for environments with stable rules and direct actions, as their behavior is purely reactive and can respond immediately to environmental changes.

Principle:

Simple reflex agents execute their functions by following condition-action rules, which specify the actions to be taken under specific conditions.

Example:

Consider a rule-based system for intelligent customer service. If a customer’s message contains the keyword “password reset,” the system automatically returns a predefined response containing instructions for resetting the password.
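The customer service example can be sketched as a small table of condition-action rules. This is a minimal illustration rather than a production design; the `RULES` table, the reply texts, and the `simple_reflex_agent` function are hypothetical names introduced here.

```python
# Hypothetical condition-action rules: keyword (condition) -> canned reply (action).
RULES = {
    "password reset": "To reset your password, open the login page and choose 'Forgot password'.",
    "refund": "To request a refund, reply with your order number.",
}
FALLBACK = "Sorry, I did not understand that. A human agent will follow up."


def simple_reflex_agent(message: str) -> str:
    """Return the reply of the first rule whose keyword appears in the message."""
    text = message.lower()
    for keyword, reply in RULES.items():
        if keyword in text:  # condition matched, so fire the action
            return reply
    return FALLBACK  # no rule matched: the agent cannot handle the input


print(simple_reflex_agent("I need a password reset, please"))
print(simple_reflex_agent("My parcel arrived damaged"))
```

The second call falls through to the fallback reply, which illustrates the weakness discussed below: the agent keeps no memory and handles only situations that were explicitly programmed.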

Advantages:

- Simple: Easy to design and implement, requiring few computational resources and no extensive training or complex hardware.
- Real-time: Capable of responding to environmental changes in real time.
- Reliable: Highly reliable when the input sensors are accurate and the rules are well designed.

Weaknesses:

- If the input sensors fail or the rules are poorly designed, errors are likely to occur.
- No memory or state, which limits their applicability.
- Cannot handle environmental changes that are not explicitly programmed.
- Limited to a specific set of actions, unable to adapt to new situations.

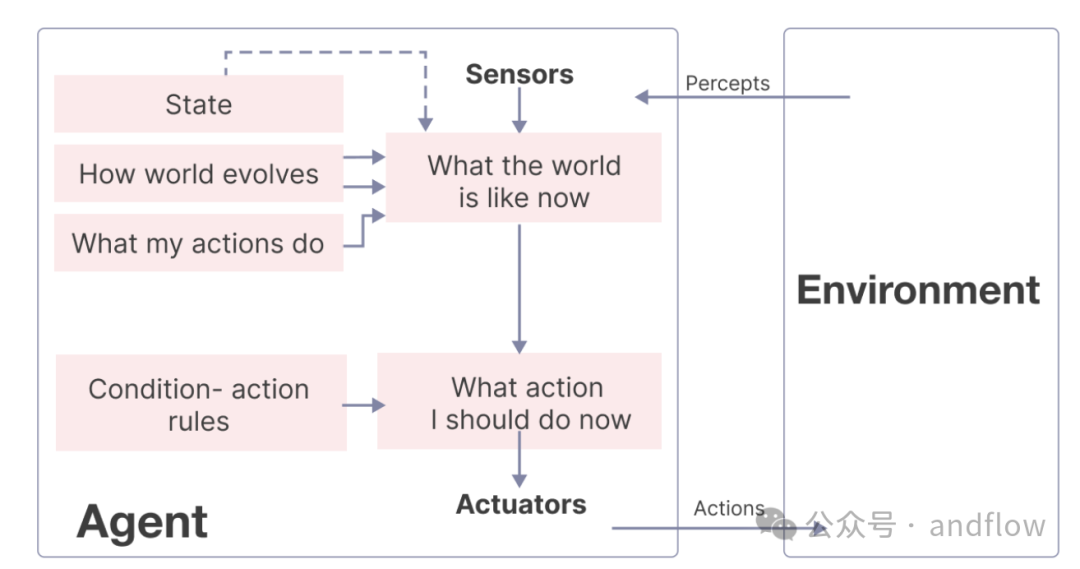

Model-based Agents

Model-based agents execute actions based on current perceptions and internal states representing unobservable aspects of the world. They update their internal states based on two factors:

- How the world evolves independently of the agent
- How the agent’s actions affect the world

Principle:

Model-based agents also follow condition-action rules, which specify the appropriate action to take under given circumstances. Unlike simple reflex agents, however, they use their internal state as well as the current perception when evaluating those conditions.

Model-based agents operate in four stages:

- Perception: They perceive the current state of the world through sensors.
- Model: They build an internal model of the world based on what they observe.
- Reasoning: They use their world model to decide how to act based on a predefined set of rules or guidelines.
- Action: The agent executes its chosen action.
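The four stages can be sketched with a thermostat whose sensor sometimes returns no reading. This is a minimal sketch under stated assumptions: the class name, the constants, and the simple linear room model are all hypothetical. When a reading is missing, the agent falls back on its internal model, which encodes both how the room evolves on its own and how the agent's last action affects it.

```python
from dataclasses import dataclass
from typing import Optional

COOLING_PER_STEP = 0.5  # assumed: how the room evolves independently of the agent
HEATING_PER_STEP = 1.5  # assumed: how the agent's heating action affects the room
TARGET_TEMP = 20.0


@dataclass
class ModelBasedThermostat:
    estimated_temp: float = 18.0  # internal state: belief about the room
    heater_on: bool = False       # internal state: the last action taken

    def update_model(self, reading: Optional[float]) -> None:
        """Perception + model: trust the sensor if present, otherwise predict."""
        if reading is not None:
            self.estimated_temp = reading
            return
        self.estimated_temp -= COOLING_PER_STEP
        if self.heater_on:
            self.estimated_temp += HEATING_PER_STEP

    def step(self, reading: Optional[float]) -> str:
        self.update_model(reading)
        # Reasoning: a condition-action rule evaluated on the internal state.
        self.heater_on = self.estimated_temp < TARGET_TEMP
        # Action: the command sent to the heating switch.
        return "heat_on" if self.heater_on else "heat_off"


agent = ModelBasedThermostat()
for reading in [18.0, None, None, None]:
    print(agent.step(reading), agent.estimated_temp)
```

A simple reflex thermostat could not act sensibly on the `None` readings; the internal state is what lets this agent keep deciding while the sensor is silent.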

Example:

One of the best examples of a model-based agent is Amazon Bedrock (https://aws.amazon.com/cn/bedrock/). Amazon Bedrock is a service that uses foundation models to simulate operations, gain insights, and make informed decisions for effective planning and optimized services.

Through various models, Bedrock can gain insights, predict outcomes, and make informed decisions. It continually improves its models using real data, allowing it to adapt and optimize its operations.

Then, Amazon Bedrock plans for different scenarios and selects the best strategy by simulating and adjusting model parameters.

Advantages:

- Make quick and effective decisions based on their understanding of the world.
- Make more accurate decisions by constructing an internal model of the world.
- Adapt to environmental changes by updating their internal models.
- Determine conditions using their internal states and rules.

Weaknesses:

- The computational cost of building and maintaining models can be high.
- These models may not capture the complexities of real-world environments well.
- Models cannot predict all potential situations that may arise.
- Models need to be frequently updated to remain current.
- Models may be difficult to understand and interpret.

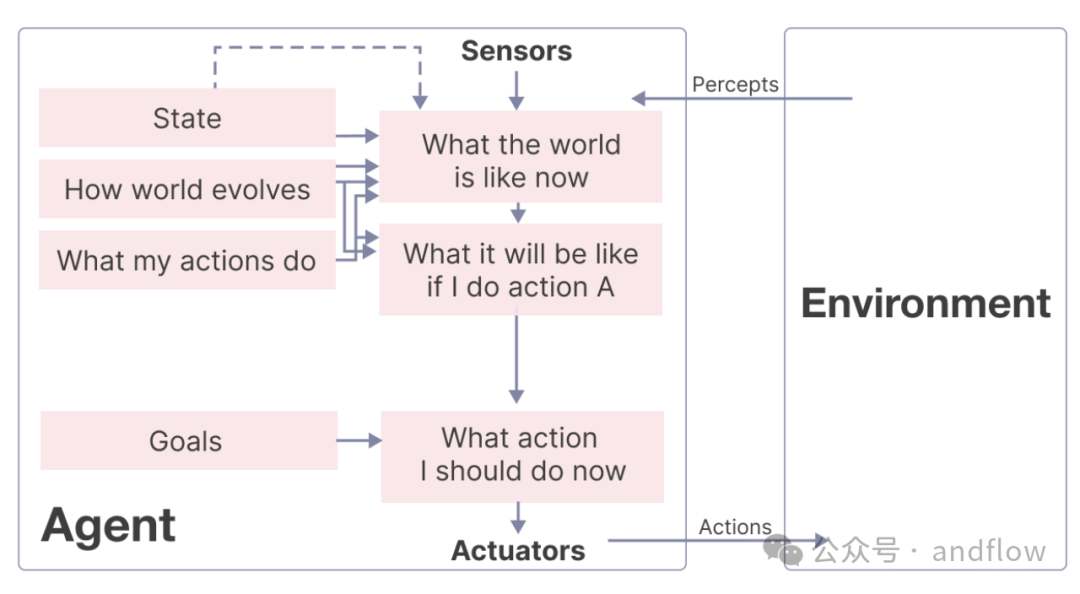

Goal-based Agents

Goal-based agents are AI agents that use information about their environment to achieve specific goals. They use search algorithms to find the most effective path to a goal in a given environment.

These agents are also known as rule-based agents because they follow predefined rules to achieve goals and take specific actions based on certain conditions.

Goal-based agents are easy to design and can handle complex tasks. They can be used in various applications such as robotics, computer vision, and natural language processing.

Unlike the simpler agent types above, goal-based agents can choose the best course of decisions and actions based on their desired outcomes or goals.

Principle:

Given a plan, goal-based agents will try to select the best strategy to achieve the goal and then use search algorithms to find effective paths to the goal.

The working mode of goal-based agents can be divided into five steps:

- Perception: The agent uses sensors or other input devices to perceive its environment and gather information about its surroundings.
- Reasoning: The agent analyzes the collected information and decides on the best course of action to achieve its goals.
- Action: The agent takes actions to achieve its goals, such as moving or manipulating objects in the environment.
- Evaluation: After taking action, the agent assesses its progress toward achieving its goals and adjusts its actions if necessary.
- Goal Completion: Once the agent achieves its goal, it either stops working or starts working on a new goal.
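The reasoning step is where the search happens. As a minimal sketch, assume the environment is a small grid in which `S` is the agent's start, `G` is the goal, and `#` is a wall. The grid, the `find_path` function, and the choice of breadth-first search are illustrative assumptions; the article does not prescribe a particular search algorithm.

```python
from collections import deque

# Hypothetical environment: S = start, G = goal, # = wall, . = free cell.
GRID = [
    "S.#",
    ".##",
    "..G",
]


def find_path(grid):
    """Breadth-first search: return the shortest list of cells from S to G, or None."""
    rows, cols = len(grid), len(grid[0])
    start = next((r, c) for r in range(rows) for c in range(cols) if grid[r][c] == "S")
    queue = deque([start])
    parent = {start: None}  # also serves as the visited set
    while queue:
        cell = queue.popleft()
        r, c = cell
        if grid[r][c] == "G":  # goal test
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (r + dr, c + dc)
            inside = 0 <= nxt[0] < rows and 0 <= nxt[1] < cols
            if inside and grid[nxt[0]][nxt[1]] != "#" and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None  # the goal cannot be reached


print(find_path(GRID))
```

In terms of the five steps, reading the grid is perception, `find_path` is reasoning, and following the returned cells one by one is action. A `None` result is the evaluation signal that the goal is unreachable and the plan must change.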

Example:

Google Bard (https://blog.google/technology/ai/bard-google-ai-search-updates/) is a learning agent, but in a sense it is also a goal-based agent. As a goal-based agent, its aim is to provide high-quality responses to user queries. It chooses actions that are likely to help users find the information they need and reach their goal of obtaining accurate and useful replies.

Advantages:

- Easy to understand and implement.
- Effective at achieving specific goals.
- Performance can be easily evaluated based on goal completion.
- Can be combined with other AI technologies to create more advanced agents.
- Well suited to clearly defined, structured environments.
- Can be used in various applications such as robotics, gaming, and autonomous vehicles.

Weaknesses:

- Limited to specific goals.
- Cannot adapt to changing environments.
- Ineffective for complex tasks with too many variables.
- Requires extensive domain knowledge to define goals.

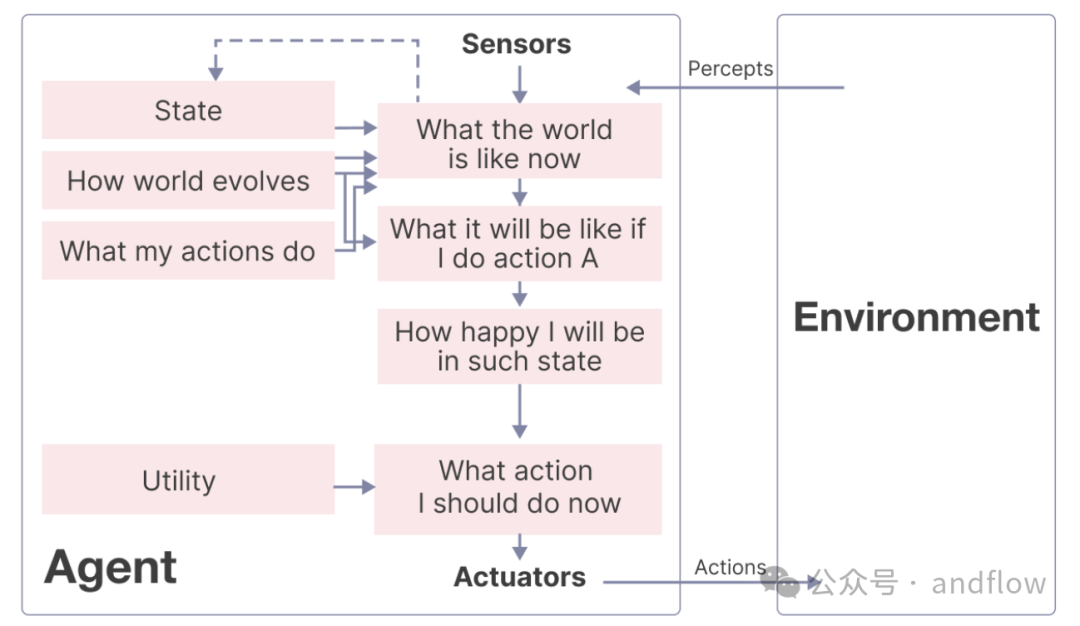

Utility-based Agents

Utility-based agents are AI agents that make decisions by maximizing a utility function. They choose the action with the highest expected utility, where utility measures how desirable the resulting outcome is. This makes them more flexible and better suited to tasks in complex situations.

Utility-based agents are typically used in scenarios where several options must be compared and one selected, such as resource allocation, task scheduling, and game playing.

Principle:

Utility-based agents aim to select behaviors that lead to high-utility states. To achieve this, they need to model their environment, which can be simple or complex.

Then, they evaluate the expected utility of each possible outcome based on probability distributions and utility functions.

Finally, they choose actions with the highest expected utility and repeat this process at each time step.
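The selection rule can be sketched for a single time step. In this hypothetical commuting scenario, every number and name (`OUTCOMES`, `UTILITY`, `COST`) is an assumption made for illustration. The expected utility of an action is the probability-weighted sum of its outcome utilities, minus the cost of taking the action.

```python
# Hypothetical model of the environment: action -> [(probability, outcome), ...]
OUTCOMES = {
    "drive": [(0.8, "on_time"), (0.2, "late")],
    "train": [(0.95, "on_time"), (0.05, "late")],
    "walk": [(1.0, "late")],
}
UTILITY = {"on_time": 10.0, "late": -5.0}            # utility function over outcomes
COST = {"drive": 3.0, "train": 2.0, "walk": 0.0}     # assumed cost of each action


def expected_utility(action: str) -> float:
    """Probability-weighted utility of the action's outcomes, minus its cost."""
    return sum(p * UTILITY[outcome] for p, outcome in OUTCOMES[action]) - COST[action]


def choose_action() -> str:
    """Pick the action with the highest expected utility."""
    return max(OUTCOMES, key=expected_utility)


for action in OUTCOMES:
    print(action, round(expected_utility(action), 2))
print("chosen:", choose_action())
```

Unlike a goal-based agent, which only asks whether the goal is reached, this agent trades off the likelihood of success against cost, which is why it needs numeric utilities rather than a yes/no goal test.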

Example:

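Anthropic’s Claude (https://www.anthropic.com/news/introducing-claude), a general-purpose AI assistant, can illustrate the idea. Imagine it deployed as a tool that helps cardholders maximize their rewards from card usage. In that role, the tool acts as a utility-based agent whose goal is to maximize the cardholder’s rewards.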

To achieve its goal, it employs a utility function that assigns numerical values representing success or happiness to different states (situations faced by cardholders, such as purchases, bill payments, reward redemptions, etc.). It then compares the outcomes of different actions in each state and makes trade-off decisions based on their utility values.

Additionally, it employs heuristics and AI techniques to simplify and improve decision-making.

Advantages:

- Can handle a wide range of decision-making problems.
- Can learn from experience and adjust their decision-making strategies.
- Provide a unified, objective framework for decision-making applications.

Weaknesses:

- Require an accurate model of the environment; otherwise, decisions may be wrong.
- Computationally expensive.
- Do not consider moral or ethical factors.
- Their decision processes are difficult for humans to understand and verify.

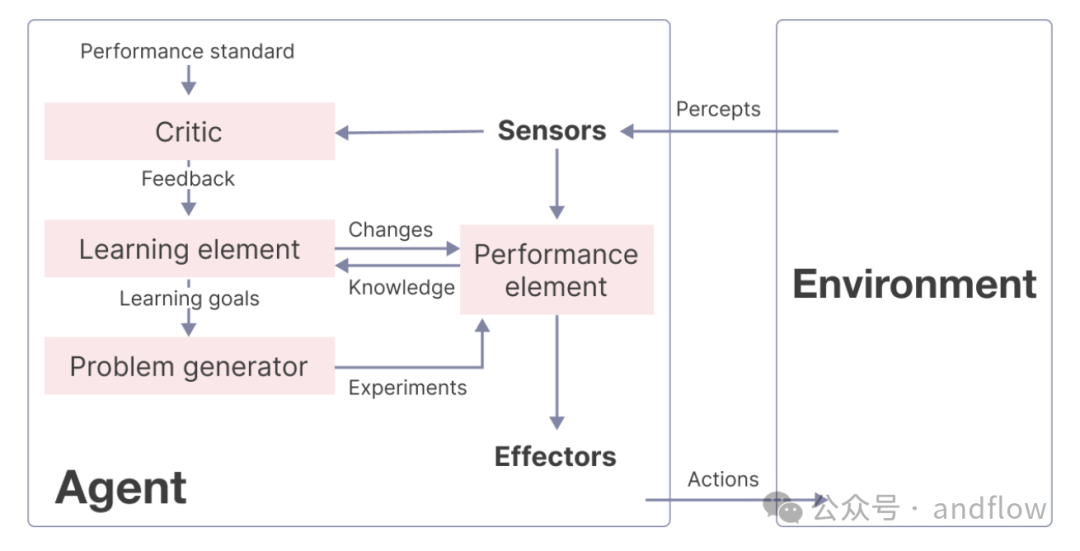

Learning Agents

Learning agents can learn from past experience and improve their own performance. A learning agent starts with basic knowledge and then adapts automatically through machine learning.

Learning agents consist of four main components:

- Learning Element: Responsible for learning and improving based on experiences gained from the environment.
- Critic: Provides feedback to the learning element based on the agent’s performance against predefined standards.
- Performance Element: Selects and executes external actions based on information from the learning element and the critic.
- Problem Generator: Suggests actions to create new informational experiences for the learning element to enhance its performance.

AI learning agents follow a feedback-based loop of observation, learning, and action. They interact with the environment, learn from feedback, and adjust their behavior for future interactions.

The working process of this loop is as follows:

- Observation: The learning agent observes its environment through sensors or other inputs.
- Learning: The agent analyzes data using algorithms and statistical models, learning from feedback on its behavior and performance.
- Action: Based on what it has learned, the agent decides how to act and takes that action in its environment.
- Feedback: The agent receives feedback about its behavior and performance through rewards, penalties, or environmental cues.
- Adaptation: Using feedback, the agent changes its behavior and decision-making process, updating its knowledge and adapting to its environment.

This loop process repeats over time, enabling the agent to continuously improve its performance and adapt to changing environments.
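The loop can be sketched with a very small agent that learns which of two actions pays more. This is an illustrative epsilon-greedy sketch, not a description of any particular product; the `REWARDS` environment, the `LearningAgent` class, and the parameter values are assumptions. The comments map each part to the four components listed earlier.

```python
import random

# Hypothetical environment: each action always yields the same reward.
REWARDS = {"A": 0.2, "B": 0.8}


class LearningAgent:
    def __init__(self, actions, epsilon=0.2, seed=0):
        self.values = {a: 0.0 for a in actions}  # learned value estimates
        self.counts = {a: 0 for a in actions}
        self.epsilon = epsilon                    # how often to explore
        self.rng = random.Random(seed)

    def best_action(self):
        return max(self.values, key=self.values.get)

    def act(self):
        # Problem generator: occasionally try a random action to gain new experience.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))
        # Performance element: otherwise exploit what has been learned so far.
        return self.best_action()

    def learn(self, action, reward):
        # Learning element: move the estimate toward the observed reward (running mean).
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


agent = LearningAgent(REWARDS)
for _ in range(200):
    action = agent.act()         # action
    reward = REWARDS[action]     # feedback, playing the role of the critic's signal
    agent.learn(action, reward)  # learning and adaptation

print(agent.best_action(), agent.values)
```

The agent begins by preferring `A` only because all estimates start equal. Exploration lets it discover that `B` pays more, after which its behavior changes. That shift is the adaptation step of the loop.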

Example:

AutoGPT (https://dataconomy.com/2023/04/13/what-is-autogpt-and-how-to-use-ai-agents/) is a good example of a learning agent. Suppose you want to buy a smartphone, so you give AutoGPT a prompt asking it to research the top ten smartphones on the market and report their pros and cons.

To complete the task, AutoGPT explores various websites and sources to analyze the pros and cons of the top ten smartphones. It uses sub-agents to evaluate the authenticity of the websites. Finally, it generates a detailed report summarizing its findings and listing the pros and cons of each of the ten smartphones.

Advantages:

- Can translate ideas into actions based on AI decisions.
- Can follow basic commands, such as verbal instructions, and execute tasks.
- Unlike classical agents that execute predefined operations, learning agents can evolve over time.
- Consider utility measurements, making them more realistic.

Weaknesses:

- May produce biased or incorrect decisions.
- High development and maintenance costs.
- Require significant computational resources.
- Depend on large amounts of data.
- Lack human intuition and creativity.

Hierarchical Agents

Hierarchical agents are organized in levels, with high-level agents supervising low-level agents. The number of levels varies with the complexity of the system.

Hierarchical agents are applied in areas such as robotics, manufacturing, and transportation. They excel at coordinating and handling multiple tasks and subtasks.

Principle:

Hierarchical agents operate like the organizational structure of a company. They arrange tasks in a hierarchy of levels, where higher-level agents supervise the work and break goals down into smaller tasks.

Subsequently, lower-level agents execute these tasks and provide progress reports.

In complex systems, there may be intermediate agents coordinating the activities of lower-level agents with higher-level agents.
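The delegation pattern can be sketched with one high-level agent and three low-level agents. The order-fulfilment scenario, the worker functions, and the fixed three-step plan are hypothetical choices made for illustration; a real system would typically plan the decomposition dynamically.

```python
from typing import Callable, Dict, List


# Hypothetical low-level agents: each executes one kind of subtask and reports back.
def pick(item: str) -> str:
    return f"picked {item}"


def pack(item: str) -> str:
    return f"packed {item}"


def ship(item: str) -> str:
    return f"shipped {item}"


WORKERS: Dict[str, Callable[[str], str]] = {"pick": pick, "pack": pack, "ship": ship}


def manager(item: str) -> List[str]:
    """High-level agent: break the goal into subtasks, delegate them, collect reports."""
    plan = ["pick", "pack", "ship"]  # assumed decomposition of "fulfil the order"
    reports = []
    for subtask in plan:
        worker = WORKERS[subtask]     # assign the subtask to the suitable low-level agent
        reports.append(worker(item))  # the worker executes and returns a progress report
    return reports


print(manager("book"))
```

Because the manager runs the subtasks strictly in order, the sketch also shows the top-down control flow that can become a bottleneck, one of the weaknesses listed below.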

Example:

Google’s UniPi (https://research.google/blog/unipi-learning-universal-policies-via-text-guided-video-generation/) is an innovative hierarchical AI agent that uses text and video as a universal interface, enabling it to learn various tasks in different environments.

UniPi includes a high-level policy that generates instructions and demonstrations and a low-level policy that executes tasks. The high-level policy adapts to various environments and tasks, while the low-level policy learns through imitation and reinforcement learning.

This hierarchical structure allows UniPi to effectively combine high-level reasoning with low-level execution.

Advantages:

- Hierarchical agents use resources efficiently by assigning tasks to the most suitable agents and avoiding redundant work.
- The hierarchical structure strengthens communication by establishing clear authority and direction.
- Hierarchical reinforcement learning (HRL) improves agent decision-making by reducing action complexity and enhancing exploration. It employs high-level operations to simplify problems and facilitate agent learning.
- Hierarchical decomposition reduces computational complexity by giving a more concise and reusable representation of the entire problem.

Weaknesses:

- Solving problems with a hierarchical structure can introduce complexity of its own.
- Fixed hierarchical structures limit adaptability in changing or uncertain environments, hindering the agent’s ability to adjust or find alternatives.
- Hierarchical agents follow a top-down control flow, which can lead to bottlenecks and delays, even when lower-level tasks are ready.
- Hierarchical structures may lack reusability across problem domains; each domain can require a new hierarchy, which is time-consuming to build and depends on expertise.
- Training hierarchical agents is challenging because it requires labeled training data and careful algorithm design. The added complexity also makes it harder to apply standard machine learning techniques to improve performance.

Conclusion

With the rapid iteration of large language models in recent years, AI agents are no longer a novelty. When multiple agents work together, the capabilities of the team can far exceed those of a single agent. From simple reflex agents that maintain household temperature to more advanced agents that drive cars, AI agents will be everywhere. In the future, everyone will be able to create their own agents and agent teams more easily, making it possible to finish in a few minutes tasks that might otherwise take hours or days.