In the past year, the rapid development of general-purpose large language models (LLMs) has attracted global attention. Tech giants like Baidu have launched their own large models, continuously pushing the performance limits of language models. However, the industry’s ambitions for LLMs are no longer limited to basic Q&A; it now seeks to use large models for more complex and diverse tasks. This is the background against which the concept of Agents emerged.

An Agent can be understood as a system that autonomously plans, makes decisions, and integrates various tools to complete complex tasks. In this system, the large language model acts as the “core scheduler”: it interprets the user’s natural language input, plans a series of executable actions, and relies on memory modules, other components, and external tools to complete the task step by step.

In 2024, the focus of the artificial intelligence industry has shifted from general large models to AI-native applications, a shift in which AI Agents are deeply involved. The core value of AI Agents lies in their adaptability to changing environments and needs and in their ability to make effective decisions and act reliably, which suggests that we are moving toward the era of AGI (Artificial General Intelligence). As Bill Gates predicted: “In the next five years, everything will change dramatically. You won’t need to switch applications for different tasks; you can simply communicate with your devices in everyday language, and the software will provide personalized feedback based on the information you share, as it gains a deeper understanding of your life.”

▎ERNIE SDK

Recently, ERNIE SDK added a major feature: Agent development. Built on the Wenxin (ERNIE) large model and its Function Calling capability, it offers a new approach to LLM application development. The framework addresses core challenges in LLM application development and shows strong results with Wenxin large model 4.0. ERNIE SDK offers solutions to several key issues:

■ 1. Token Input Limit:

When traditional large models analyze and summarize large documents, they are limited by the token input limit. ERNIE SDK provides a local knowledge base retrieval method, making it easier to handle large document Q&A tasks.

■ 2. Integration of Business API Tools:

ERNIE SDK enables the integration of existing business API tools, broadening the functionality and adaptability of LLM applications.

■ 3. Data Source Connection:

ERNIE SDK can query SQL databases through custom tools, connecting various data sources to provide more information for the large model.

As a development framework, ERNIE SDK also improves developers’ efficiency. Developers can use the pre-made components of the PaddlePaddle Star River community (AI Studio) directly or customize them for specific business needs, so the framework supports the entire development lifecycle of an LLM application.
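The SQL data-source connection described in item 3 can be sketched as a custom tool. This is a minimal illustration, not ERNIE SDK's own tool interface: the `Tool` dataclass, `make_sql_query_tool`, and the `orders` table are hypothetical names introduced here, and an in-memory SQLite database stands in for a real business database.

```python
import sqlite3
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Tool:
    """Hypothetical tool description: a name, a text description, a callable."""
    name: str
    description: str
    func: Callable[..., Any]


def make_sql_query_tool(conn: sqlite3.Connection) -> Tool:
    """Wrap a read-only SQL query against `conn` as a tool."""

    def run_query(sql: str) -> List[Dict[str, Any]]:
        # Naive guard: accept only SELECT so the model cannot modify data.
        if not sql.lstrip().lower().startswith("select"):
            raise ValueError("only SELECT statements are allowed")
        cursor = conn.execute(sql)
        columns = [col[0] for col in cursor.description]
        return [dict(zip(columns, row)) for row in cursor.fetchall()]

    return Tool(
        name="sql_query",
        description="Run a read-only SELECT query and return rows as dictionaries.",
        func=run_query,
    )


# Demo with an in-memory database and made-up data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "alice", 30.0), (2, "bob", 12.5), (3, "alice", 7.5)],
)
sql_tool = make_sql_query_tool(conn)
rows = sql_tool.func(
    "SELECT customer, SUM(amount) AS total FROM orders "
    "GROUP BY customer ORDER BY customer"
)
print(rows)
```

The description string is what a function-calling model would read when deciding whether to call the tool, so in practice it is worth stating the table names and columns there.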

01

Analysis of Agent Architecture Based on ERNIE SDK

▎Agent

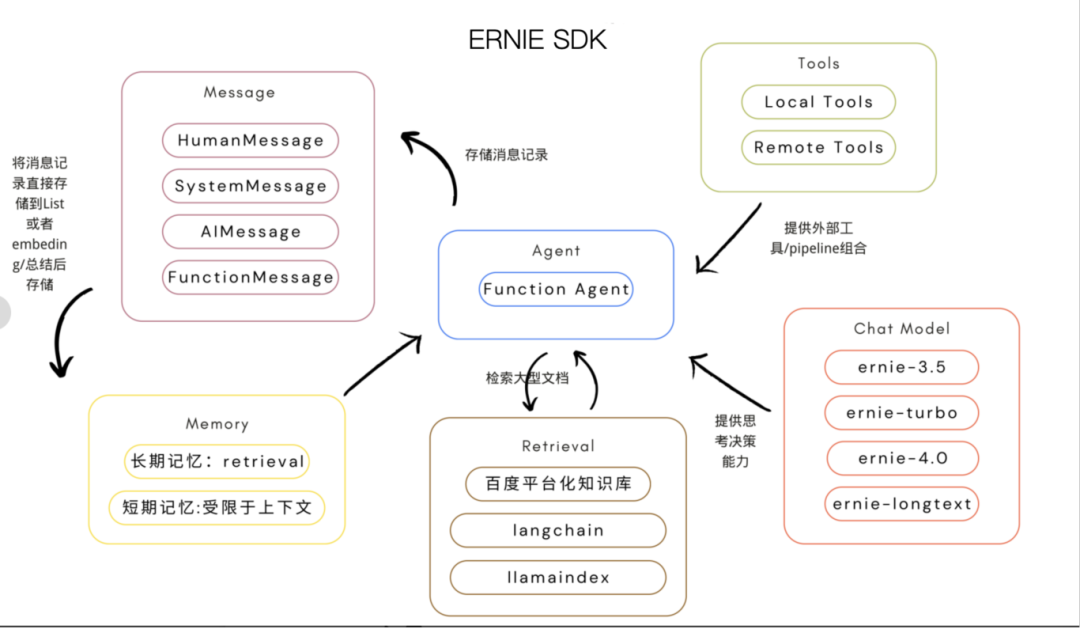

In some complex scenarios, we need to flexibly call LLMs and a series of required tools based on user input, and Agents provide the possibility for such applications. ERNIE SDK offers Agent development driven by the Function Calling capability based on the Wenxin large model. Developers can directly use preset Agents instantiated through Chat Model, Tool, and Memory, or customize their own Agent by inheriting from the erniebot_agent.agents.Agent base class.
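How a Chat Model, Tools, and Memory fit together in one Agent can be sketched with plain Python. This is an illustration under stated assumptions rather than the `erniebot_agent` implementation: `KeywordChatModel` is a stand-in that picks a tool by keyword instead of calling a real model, and `SimpleAgent` and `wordcount` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


class KeywordChatModel:
    """Stand-in for an LLM with function calling: picks a tool by keyword."""

    def decide(self, text: str, tool_names: List[str]) -> Optional[Tuple[str, str]]:
        # A real model would return a structured function call; here we
        # only look for a tool name mentioned in the user input.
        for name in tool_names:
            if name in text.lower():
                return name, text
        return None

    def reply(self, text: str, observation: Optional[str]) -> str:
        if observation is None:
            return f"(no tool needed) {text}"
        return f"Tool result: {observation}"


@dataclass
class SimpleAgent:
    model: KeywordChatModel
    tools: Dict[str, Callable[[str], str]]
    memory: List[Tuple[str, str]] = field(default_factory=list)

    def run(self, user_input: str) -> str:
        self.memory.append(("user", user_input))
        call = self.model.decide(user_input, list(self.tools))
        observation = None
        if call is not None:
            tool_name, argument = call
            # Execute the chosen tool and remember what it returned.
            observation = self.tools[tool_name](argument)
            self.memory.append(("function", observation))
        answer = self.model.reply(user_input, observation)
        self.memory.append(("assistant", answer))
        return answer


def wordcount(text: str) -> str:
    """Toy tool: number of whitespace-separated words in the input."""
    return str(len(text.split()))


agent = SimpleAgent(model=KeywordChatModel(), tools={"wordcount": wordcount})
print(agent.run("please run wordcount on this sentence"))
print(agent.run("hello there"))
```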

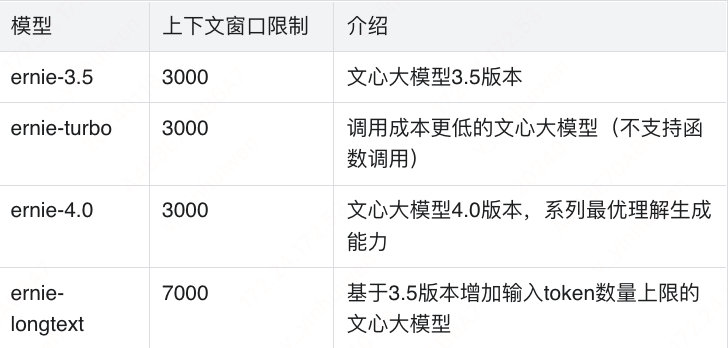

▎Chat Model (The Brain of the Agent)

The Chat Model module is the core scheduler that makes the Agent’s decisions. In ERNIE SDK it is backed by the Wenxin large model, the knowledge-enhanced large language model developed by Baidu.

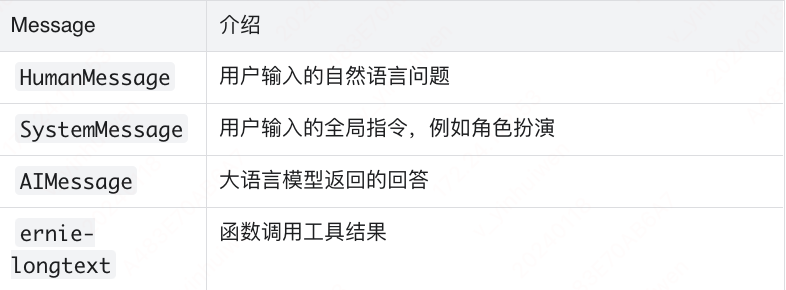

▎Message (Encapsulation of Agent Input and Output Information)

Developers interact with the Chat Model through encapsulated Message objects, which tell the large language model where each piece of input came from.

This module standardizes user inputs and the responses from the Wenxin large model, making them easier to store in the Memory module later.
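The idea of a standardized message can be sketched as a small data class that records the source (role) of each piece of text. The `Message` class and the set of role names below are assumptions made for illustration; they are not the SDK's own message types.

```python
from dataclasses import asdict, dataclass
from typing import Dict, List

# Assumed role names; the real SDK may use different ones.
ALLOWED_ROLES = {"system", "user", "assistant", "function"}


@dataclass(frozen=True)
class Message:
    role: str
    content: str

    def __post_init__(self) -> None:
        # Reject unknown sources early so stored history stays consistent.
        if self.role not in ALLOWED_ROLES:
            raise ValueError(f"unknown role: {self.role!r}")

    def to_dict(self) -> Dict[str, str]:
        return asdict(self)


history: List[Message] = [
    Message("user", "Does this manuscript comply with the rules?"),
    Message("assistant", "I will check it with the review tool."),
]
# A uniform shape makes the history easy to store or send to a model.
payload = [m.to_dict() for m in history]
print(payload)
```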

▎Memory (The Agent’s Memory)

The large language model itself has no memory, so an important part of building large model applications is giving the Agent memory. ERNIE SDK provides a ready-to-use memory capability: the messages from a multi-turn conversation are stored in a List and then passed into the context window of the Chat Model. However, this memory mode is still bounded by the input token limit of the Wenxin large model. ERNIE SDK also lets developers build more complex memory modules; reference approaches include:

1. Vector store-backed memory: each Message from a conversation round is embedded and stored in a vector database, so that the most relevant memory fragments can be found by approximate semantic vector search over the user’s natural language input. This enables long-term memory that is no longer limited by the context window of the Wenxin large model.

2. Conversation summary memory: after each round of conversation, the Chat Model summarizes the exchange and only the short summary is stored, which reduces the amount of content that must be kept.

3. LangChain/LlamaIndex-based custom memory: ERNIE SDK lets developers freely integrate frameworks such as LlamaIndex to build more complex memory modules, using LlamaIndex’s document retrieval capabilities for longer-term memory.
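The list-based memory and the conversation summary approach can be combined in a short sketch: recent messages are kept in a list, and once they exceed a token budget the oldest ones are folded into a running summary. Everything here is hypothetical (`SummarizingMemory`, the word-count token estimate, and the stub summarizer that keeps the first three words of each message); in practice the summarizer would be a call to the Chat Model.

```python
from typing import Callable, List, Tuple

Message = Tuple[str, str]  # (role, content)


def count_tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words (an assumption)."""
    return len(text.split())


class SummarizingMemory:
    def __init__(self, max_tokens: int, summarize: Callable[[List[Message]], str]):
        self.max_tokens = max_tokens
        self.summarize = summarize
        self.summary = ""
        self.messages: List[Message] = []

    def add(self, role: str, content: str) -> None:
        self.messages.append((role, content))
        # Fold the oldest messages into the summary until the recent
        # messages fit the budget again (the newest one is always kept).
        while self._recent_tokens() > self.max_tokens and len(self.messages) > 1:
            oldest = self.messages.pop(0)
            prior = [("system", self.summary)] if self.summary else []
            self.summary = self.summarize(prior + [oldest])

    def _recent_tokens(self) -> int:
        return sum(count_tokens(content) for _, content in self.messages)

    def context(self) -> List[Message]:
        """Messages to place in the model's context window."""
        head = [("system", f"Summary so far: {self.summary}")] if self.summary else []
        return head + self.messages


def first_words_summarizer(messages: List[Message]) -> str:
    """Stub summarizer: keeps the first three words of each message."""
    return " | ".join(" ".join(content.split()[:3]) for _, content in messages)


memory = SummarizingMemory(max_tokens=8, summarize=first_words_summarizer)
memory.add("user", "my name is Li and I write articles")
memory.add("assistant", "nice to meet you Li")
memory.add("user", "please review my draft")
for role, content in memory.context():
    print(role, ":", content)
```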

▎Tools (The Agent’s Tools)

Allowing the Agent to autonomously combine and use complex external tools to solve more intricate problems is key to the widespread adoption of future AI applications. ERNIE SDK enables developers to use over 30 tools already launched by the PaddlePaddle Star River community to quickly build complex applications, and also allows for the customization of local tools based on their business needs.

▎Retrieval (The Agent’s Knowledge Base)

Although general large models absorb extensive knowledge during training, they have limited understanding of specific fields or of a user’s proprietary business knowledge. Fine-tuning a large model on domain-specific data is costly, which is why RAG (Retrieval Augmented Generation) was introduced: it quickly connects an external knowledge base to the large model so that the model can draw on specialized knowledge in a specific field. The key functions of the Retrieval module include:

- Loading data sources, covering various data types: structured data like SQL and Excel; unstructured data like PDF and PPT documents; semi-structured data like Notion documents.

- Splitting the data into chunks.

- Embedding the chunks as vectors.

- Storing the processed data in a vector database.

- Quickly locating relevant information through approximate vector retrieval.

The ERNIE SDK’s Retrieval module not only supports Baidu’s Wenxin Baizhong search but is also compatible with the Retrieval components of LangChain and LlamaIndex, significantly enhancing data processing efficiency and accuracy.
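The chunk, embed, store, and retrieve steps listed above can be sketched end to end in a few lines. This is a toy under stated assumptions: the "embedding" is a normalized bag-of-words vector rather than a real embedding model, the store is an in-memory list searched exhaustively rather than a vector database with an approximate index, and all names (`chunk_text`, `embed`, `InMemoryVectorStore`) are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def chunk_text(text: str, chunk_size: int = 12) -> List[str]:
    """Split text into chunks of at most `chunk_size` words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def embed(text: str) -> Dict[str, float]:
    """Toy embedding: L2-normalized bag-of-words counts (not a real model)."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {word: c / norm for word, c in counts.items()}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    # Vectors are already normalized, so the dot product is the cosine.
    return sum(weight * b.get(word, 0.0) for word, weight in a.items())


class InMemoryVectorStore:
    def __init__(self) -> None:
        self.items: List[Tuple[Dict[str, float], str]] = []

    def add(self, chunks: List[str]) -> None:
        self.items.extend((embed(chunk), chunk) for chunk in chunks)

    def search(self, query: str, top_k: int = 1) -> List[str]:
        # Exact brute-force search; a real vector database would use an
        # approximate nearest-neighbour index instead.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [chunk for _, chunk in ranked[:top_k]]


document = (
    "Refunds are accepted within thirty days of purchase. "
    "Shipping takes five business days inside the country. "
    "Support is available by email on weekdays."
)
store = InMemoryVectorStore()
store.add(chunk_text(document, chunk_size=8))
print(store.search("how long does shipping take"))
```

The retrieved chunk would then be placed in the prompt alongside the user's question, which is how a large document can be used without exceeding the token input limit.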

02

Quick Development Experience of Agent Based on ERNIE SDK

Now, let’s walk through how to develop an Agent: a manuscript review assistant. Its main function is to check whether manuscripts published on various platforms comply with regulations.

Step one, log into the PaddlePaddle Star River community and create a new personal project. The free computing configuration provided by the community is sufficient.

Step two, after logging into the PaddlePaddle Star River community, click on your avatar to obtain your access token in the console. PaddlePaddle provides a free quota of 1 million tokens for each newly registered user.

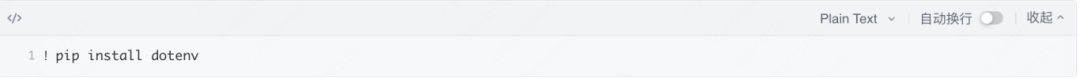

To manage your sensitive token securely, we recommend using Dotenv (the python-dotenv package). First, install it, then save your token in a newly created .env file. Note that this file is hidden by default in the file directory; you need to change the display settings to view it.

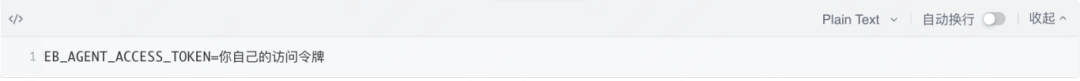

Example .env file content:

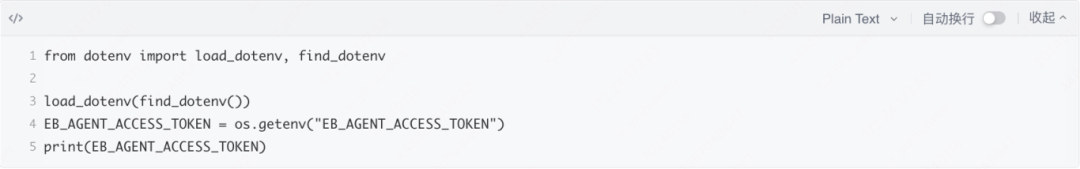

Step three, verify whether your access token can be used normally:
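A minimal sketch of such a check follows. It assumes the token is stored under the name `EB_AGENT_ACCESS_TOKEN` (an assumption; use whatever name your .env file defines) and uses a small standard-library parser as a stand-in for python-dotenv, so `read_env_file` and `load_access_token` are hypothetical helpers. With python-dotenv installed, `load_dotenv()` followed by `os.getenv(...)` plays the same role.

```python
import os
import tempfile
from pathlib import Path
from typing import Dict


def read_env_file(path: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines; blank lines and # comments are skipped."""
    values: Dict[str, str] = {}
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def load_access_token(path: str = ".env", name: str = "EB_AGENT_ACCESS_TOKEN") -> str:
    """Copy the token from the .env file into os.environ and return it."""
    token = read_env_file(path).get(name, "")
    if not token:
        raise RuntimeError(f"{name} is missing or empty in {path}")
    os.environ[name] = token
    return token


# Demo against a throwaway file with a placeholder value, so the sketch
# runs without a real token. Point `path` at your own .env in practice.
with tempfile.TemporaryDirectory() as tmp:
    env_path = os.path.join(tmp, ".env")
    Path(env_path).write_text(
        'EB_AGENT_ACCESS_TOKEN="placeholder-token"\n', encoding="utf-8"
    )
    print(load_access_token(env_path))
```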

If everything is normal, it will print your access token.

Next, create a text file, manuscript.txt, containing the text you want to review for compliance.

Step four, build a basic Agent (using the pre-made tools provided by the PaddlePaddle Star River community tool center).
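A hedged sketch of what such an Agent might look like is given below. The imports and calls (`FunctionAgent`, `ERNIEBot`, `RemoteToolkit.from_aistudio`, `get_tools`, `agent.run`, `response.text`) follow the usage shown in the ERNIE SDK README and are assumptions here; the model name `ernie-3.5` and the tool ID `text-moderation` are also assumed. Check all of them against the version of `erniebot-agent` you have installed. `build_review_prompt` and `review_manuscript` are hypothetical helper names.

```python
import asyncio
from pathlib import Path


def build_review_prompt(manuscript: str) -> str:
    """Wrap the manuscript text in a review instruction for the Agent."""
    return (
        "Please review the following manuscript for compliance and "
        "report any problems you find:\n\n" + manuscript.strip()
    )


async def review_manuscript(path: str = "manuscript.txt") -> str:
    # Imported here so the file still loads when erniebot-agent is absent.
    # All names below are assumptions based on the ERNIE SDK README.
    from erniebot_agent.agents import FunctionAgent
    from erniebot_agent.chat_models import ERNIEBot
    from erniebot_agent.tools import RemoteToolkit

    llm = ERNIEBot(model="ernie-3.5")  # assumed model name
    toolkit = RemoteToolkit.from_aistudio("text-moderation")  # assumed tool ID
    agent = FunctionAgent(llm=llm, tools=toolkit.get_tools())

    manuscript = Path(path).read_text(encoding="utf-8")
    response = await agent.run(build_review_prompt(manuscript))
    return response.text


def main() -> None:
    """Entry point; needs network access and a valid access token."""
    print(asyncio.run(review_manuscript()))
```

Calling `main()` sends the contents of manuscript.txt to the Agent, which decides on its own whether to invoke the moderation tool.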

Run this code, and you will see the Agent use the [text-moderation/v1.2/text_moderation] tool to review the manuscript content and output the review results. With that, a simple manuscript review assistant Agent is complete, and we have seen how quick and practical Agent development with ERNIE SDK can be.

03

Multi-Tool Intelligent Orchestration

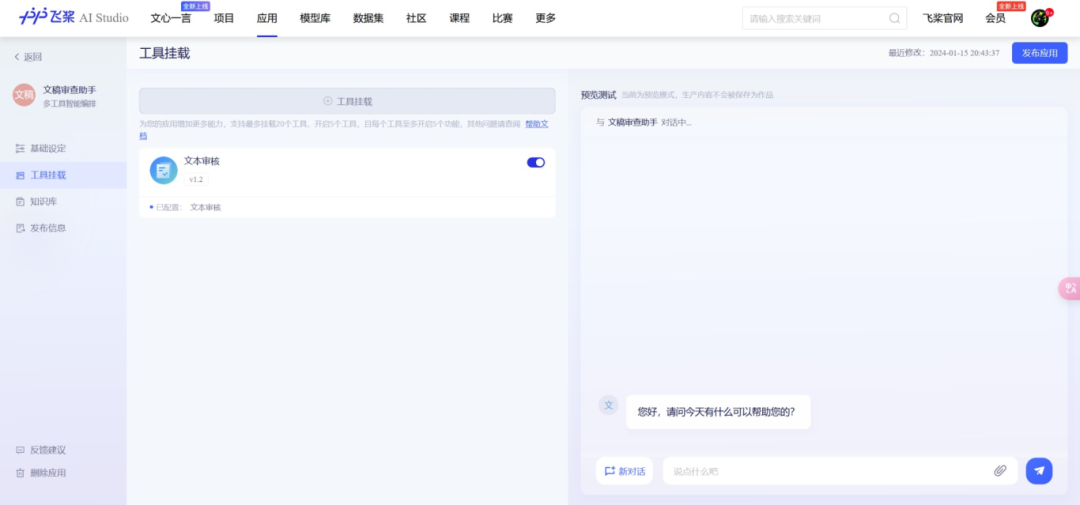

After deeply exploring ERNIE SDK, let’s look at the multi-tool intelligent orchestration feature of the PaddlePaddle Star River community. The PaddlePaddle Star River community not only provides fine-grained SDKs to support the detailed needs of technical developers but also introduces the multi-tool intelligent orchestration feature. This means developers can easily integrate various external tools based on the powerful Wenxin large model to create personalized AI applications. Compared to simply using ERNIE SDK, this method is faster and more convenient, greatly simplifying the development process. We will use multi-tool intelligent orchestration to recreate the manuscript review assistant.

First, create an application using low-code development and select intelligent orchestration.

Next, mount the “Text Review Tool” from the tool mounting panel in the sidebar. This is one of the more than 30 pre-made tools provided by the PaddlePaddle Star River community tool center, and you can also create your own tools.

Then, set the role identity for the manuscript assistant in the basic settings. After that, click to apply all settings, and you can experience it in the sidebar.

It is worth mentioning that the multi-tool intelligent orchestration of the PaddlePaddle Star River community is very friendly to team members without a technical background. Even without in-depth programming knowledge, team members can quickly get started and easily build their own AI applications. For example, creating the above manuscript assistant only takes a few minutes, which not only accelerates the product iteration speed but also promotes collaboration and innovation within the team.

Currently, Baidu PaddlePaddle is accepting applications for testing this feature. Visit the PaddlePaddle Star River community to learn more and apply.

With the development of general large language models and the rise of intelligent Agent technology, we are entering a new era of AI application development. From the in-depth look at ERNIE SDK to multi-tool intelligent orchestration in the PaddlePaddle Star River community, we have seen how frameworks like Baidu PaddlePaddle’s ERNIE SDK can break traditional boundaries and offer developers considerable convenience and development potential. Both developers with a strong technical background and non-technical personnel can find their place in this new era and together promote the advancement of AI technology and the popularization of AI applications. The future of AI is full of potential, and the vast world of AI applications awaits us to explore and create.

▎Related Links

ERNIE-SDK:

https://github.com/PaddlePaddle/ERNIE-SDK

Multi-tool intelligent orchestration testing application:

https://aistudio.baidu.com/activitydetail/1503017298
