
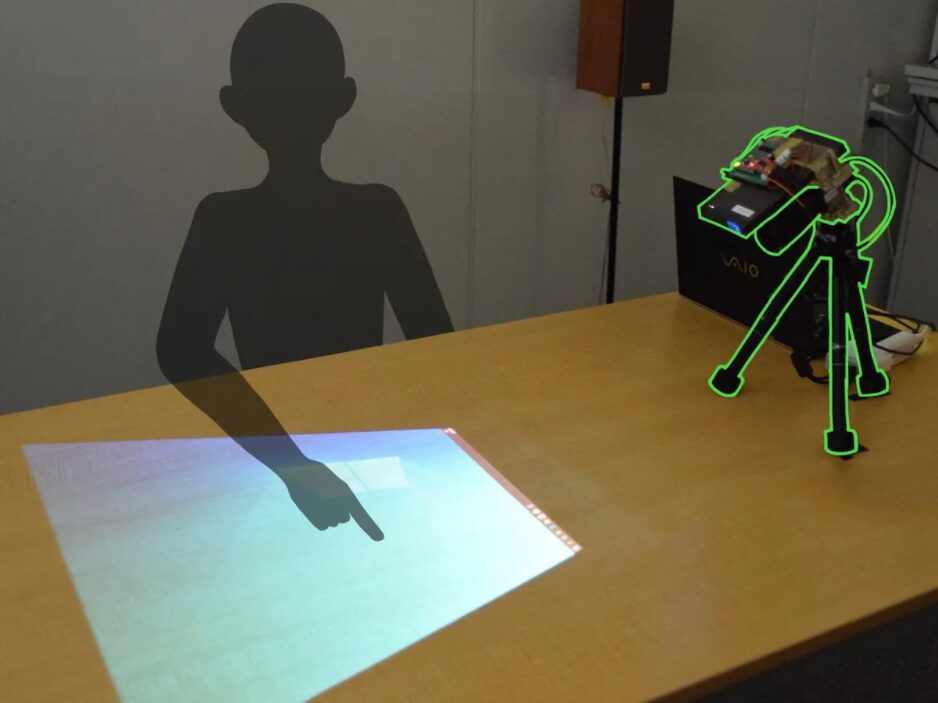

The projector-camera system can display a touchscreen on any surface. NARA INSTITUTE OF SCIENCE AND TECHNOLOGY

This article is part of our exclusive IEEE Journal Watch series in partnership with IEEE Xplore.

Since the advent of smartphones, interacting with digital content via touch has become ubiquitous. However, touchscreens have largely been confined to relatively compact devices. Now, researchers have proposed a low-cost method to turn any surface into a touchscreen, opening up many new possibilities for interacting with the digital world.

Previous attempts to project images for touch interaction relied on special input devices, multiple sensors, or image processing algorithms that struggle with cluttered or visually busy content. The new system reportedly requires only a single camera mounted beneath the projector, and its developers claim it works regardless of what is being projected.

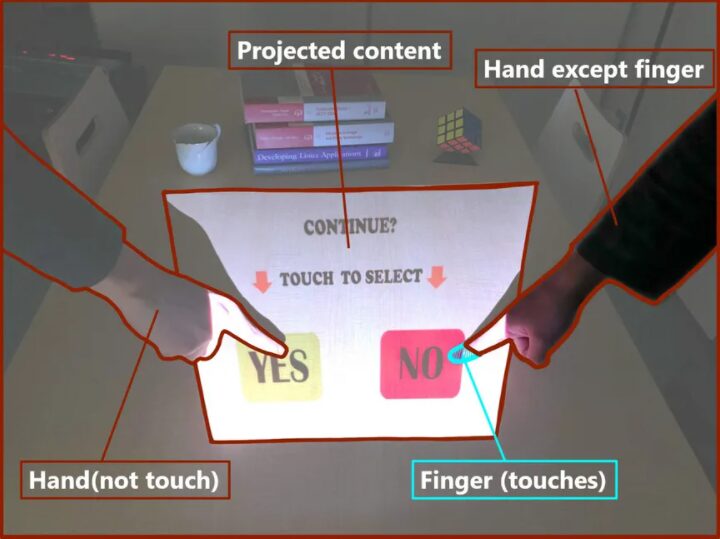

The key to this method is a clever optical trick that ensures only movement just above the projected surface is detected. This lets the system register a user’s finger as it presses a button while ignoring everything else in the camera’s view. Its designers hope the technology can be used to create large interactive displays almost anywhere.

The camera only picks up movements just above the surface of the projection. NARA INSTITUTE OF SCIENCE AND TECHNOLOGY

Suren Jayasuriya, an assistant professor in Arizona State University’s School of Arts, Media and Engineering and School of Electrical, Computer and Energy Engineering, said, “You can project anything you want, and the system interacts for games or different types of user experiences.” He helped design the system along with colleagues from the Nara Institute of Science and Technology (NAIST) in Japan.

Jayasuriya stated, “The infrastructure required for the device is very simple, just a projector and a camera. Other systems require multiple cameras or additional depth sensors to achieve this level of interaction.”

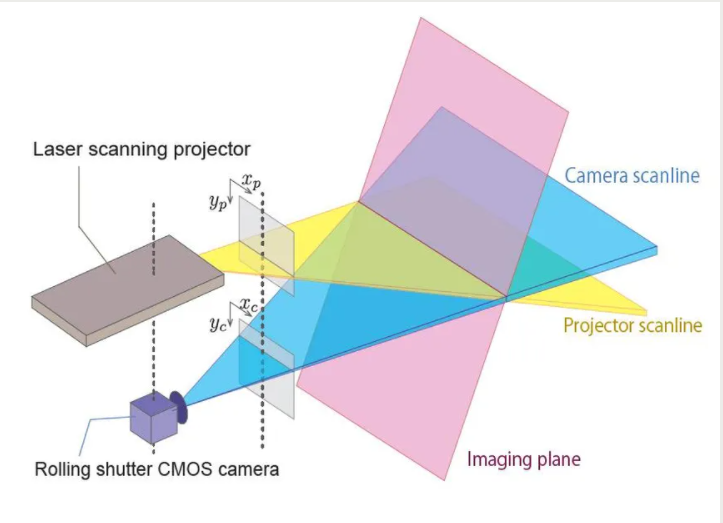

This method relies on a laser scanning projector, which rapidly scans up and down to draw the projected image line by line. It is paired with a rolling shutter camera, which exposes one row of sensor pixels at a time and so captures the scene line by line, just as the projector draws it. When the two devices are synchronized, the horizontal plane of light emitted by the projector intersects the horizontal plane of light received by the camera. Because the two devices are slightly offset, triangulation can be used to calculate the depth of the points where those planes overlap.
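The triangulation step can be illustrated with a minimal sketch. It assumes an idealized, rectified pinhole model in which depth follows from the row offset (disparity) between projector and camera; the function name and all numbers are hypothetical and are not taken from the researchers’ paper.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres of a point observed with the given row disparity.

    Assumes a rectified pinhole model: depth = focal length * baseline / disparity.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical values: 1000 px focal length, 5 cm projector-camera offset.
    for disparity in (100.0, 50.0, 25.0):
        depth = depth_from_disparity(1000.0, 0.05, disparity)
        print(f"disparity {disparity:5.1f} px -> depth {depth:.2f} m")
```

In this simplified model a larger offset between the projected line and the observed line means the point is closer, which is why a fixed offset corresponds to a fixed depth.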

This makes it possible to calibrate the system so that the camera picks up light only from a specific distance from the projector, and that distance can be set to hover just above the projected image. As a result, when a user presses on an area of the projected image, the camera picks up their finger while ignoring the rest of the visual scene.
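As a rough illustration of this depth gating, the sketch below picks the row offset that places the sensing plane at a chosen depth and then checks which scene points would fall inside it. It again assumes a rectified pinhole model; the function names, the `tolerance_px` band, and every number are hypothetical. In the real system the selection happens optically through synchronization, not in software.

```python
def row_offset_for_plane(focal_px: float, baseline_m: float, plane_depth_m: float) -> float:
    """Row offset (pixels) at which the two scans meet at the chosen depth."""
    return focal_px * baseline_m / plane_depth_m


def is_captured(point_depth_m: float, focal_px: float, baseline_m: float,
                sync_offset_px: float, tolerance_px: float = 0.25) -> bool:
    """True if a point at this depth lies within the band the camera exposes."""
    point_disparity = focal_px * baseline_m / point_depth_m
    return abs(point_disparity - sync_offset_px) <= tolerance_px


if __name__ == "__main__":
    focal_px, baseline_m = 1000.0, 0.05          # hypothetical calibration
    surface_m, hover_m = 1.00, 0.99              # sensing plane 1 cm above the surface
    offset = row_offset_for_plane(focal_px, baseline_m, hover_m)
    for label, depth in (("fingertip", 0.99), ("surface", surface_m), ("raised hand", 0.90)):
        print(label, is_captured(depth, focal_px, baseline_m, offset))
```

With these made-up numbers only the fingertip at the hover plane is kept; the projected surface and anything well above it fall outside the band.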

In a paper published in IEEE Access, the researchers describe how they paired this setup with a simple image processing algorithm to track the user’s finger position relative to the projected image. This tracking information can then be used as input for any touch-based application.
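The article does not describe the tracking algorithm in detail. One simple possibility, shown here purely as an assumption and not as the method in the paper, is to threshold the gated camera frame and take the centroid of the bright pixels as the finger position. The function name, the threshold, and the toy frame are all hypothetical.

```python
from typing import List, Optional, Tuple


def track_fingertip(frame: List[List[int]], threshold: int = 128) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of pixels brighter than threshold.

    Coordinates are normalised to the 0..1 range so they can be mapped onto
    the projected image. Returns None when no pixel exceeds the threshold.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value > threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    height, width = len(frame), len(frame[0])
    # Add 0.5 so the coordinate refers to the centre of a pixel.
    return (sum_x / count + 0.5) / width, (sum_y / count + 0.5) / height


if __name__ == "__main__":
    # Toy 4x4 gated frame: only the finger in the sensing plane is bright.
    frame = [
        [0, 0, 0, 0],
        [0, 0, 255, 255],
        [0, 0, 0, 0],
        [0, 0, 0, 0],
    ]
    print(track_fingertip(frame))  # (0.75, 0.375)
```

Because the optical gating has already removed the projected content and background, even a basic centroid like this has little clutter to contend with; a single centroid also matches the one-finger-at-a-time behavior the article describes.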

Jayasuriya noted that the system’s setup is already quite simple, but the team is now exploring an automatic calibration scheme to make things even easier. He added that its cost is also relatively low. The initial prototype cost about $500 to build, but if commercialized, the cost could be significantly reduced.

Touchscreens on phones, tablets, and smart devices are at the core of digital life today. But why can’t *any* surface be made into a touchscreen? With a projector and a single camera, US and Japanese researchers did just that. NARA INSTITUTE OF SCIENCE AND TECHNOLOGY

Chris Harrison, Associate Professor at Carnegie Mellon University’s Human-Computer Interaction Institute, said, “The idea of combining a scanning laser with a scanning camera to create a virtual sensing plane is very clever.”

He mentioned that the accuracy of this method is unlikely to match that of physical touchscreens. However, the ability to project them onto any surface could enable various novel functionalities, such as watching interactive sports games on a coffee table or projecting TV controls onto nearby surfaces, eliminating the need for a remote control. Harrison stated, “It is visually similar to augmented reality in many ways, but it does not require people to wear headset devices.”

The researchers believe this technology is particularly useful in situations where fingers get sticky, such as when dealing with young children, or when trying to use a touchscreen while cooking. NAIST Associate Professor Takuya Funatomi said, “With this device, we can search for recipes or find instructions on how to make dishes without having to wash our hands first.”

Currently, the device can only register one finger at a time, but achieving multi-touch would only require the researchers to replace the image processing algorithm with a smarter one. The team also hopes to implement more complex gesture recognition in future iterations of the device.

WeChat ID|IEEE Electrical and Electronics Engineers

Sina Weibo|IEEE China

· IEEE Electrical and Electronics Engineers Society ·

Previous Recommendations

Recommended Reading

Boston Dynamics Atlas Robot Shows Impressive Parkour Skills

9-01 EVAN ACKERMAN

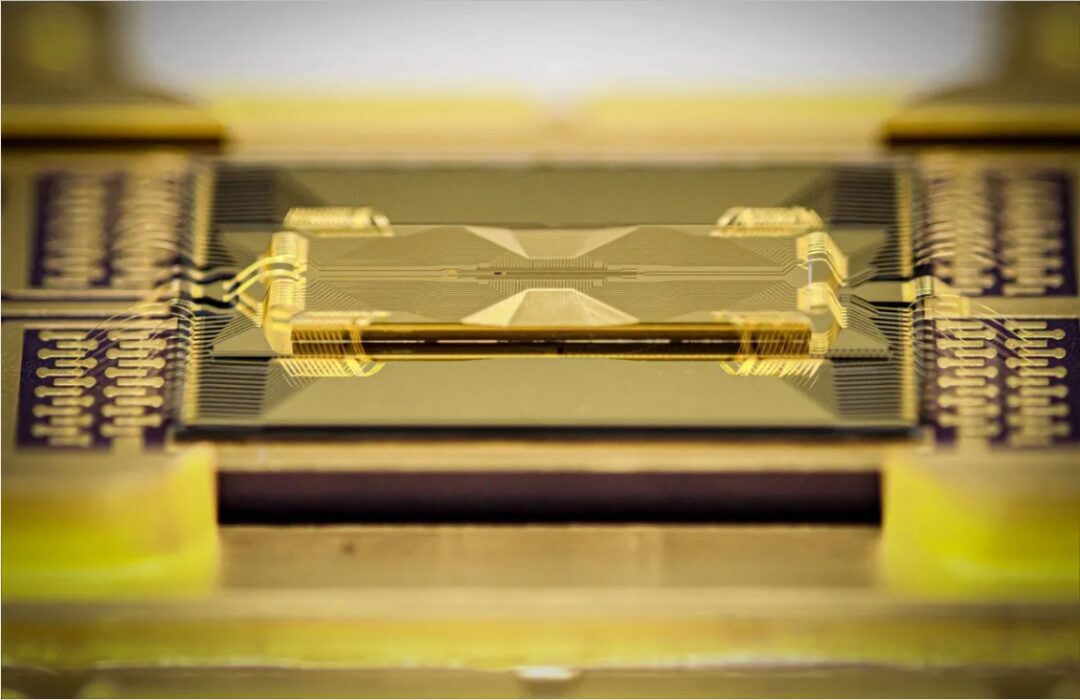

Glass Chips Become Key to New Quantum Architectures

9-13 SAMUEL K. MOORE

Top Programming Languages Ranking of 2021

9-08 STEPHEN CASS

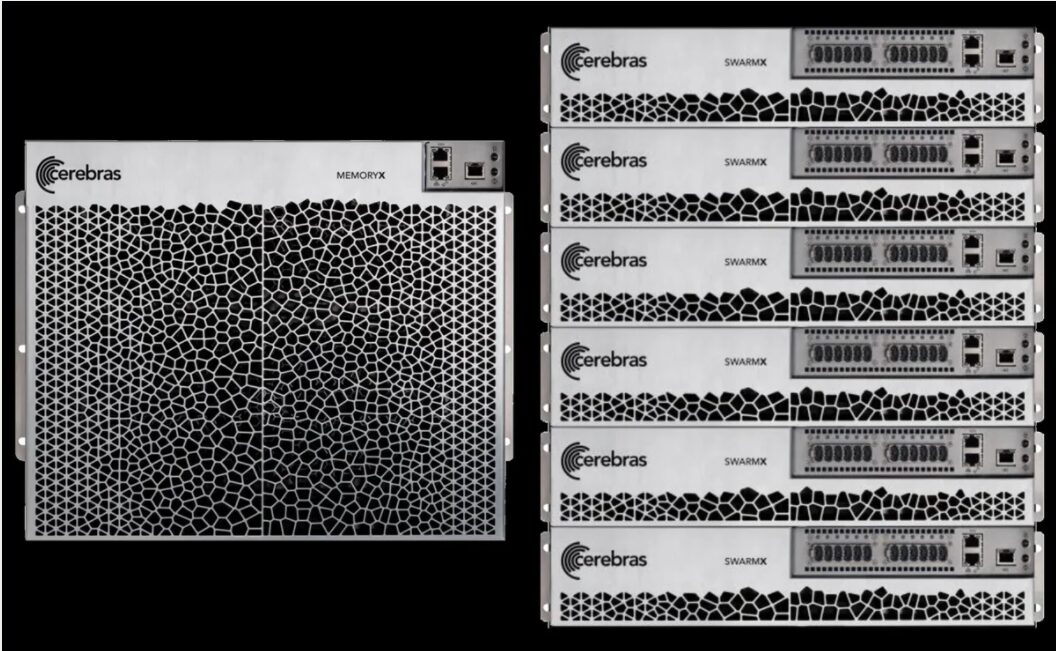

Cerebras Unveils World’s First AI Solution at Human Brain Scale

9-06 SAMUEL K. MOORE