From: Juejin, Author: Ruheng

Link: https://juejin.im/post/6844903490595061767

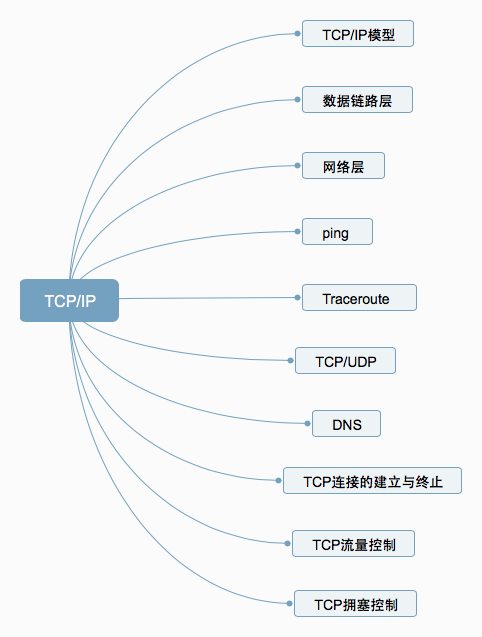

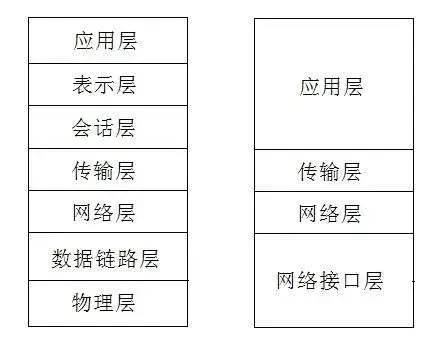

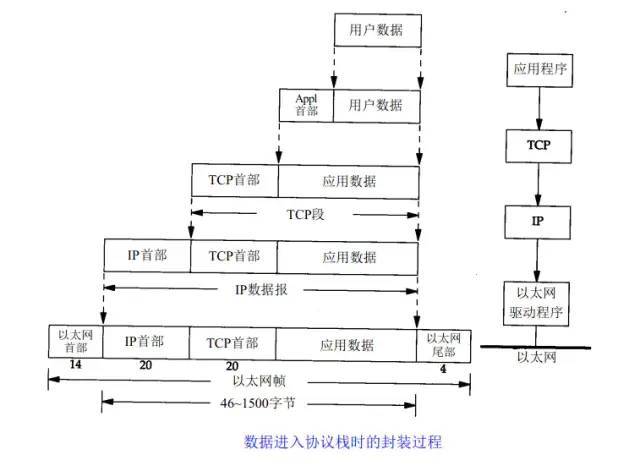

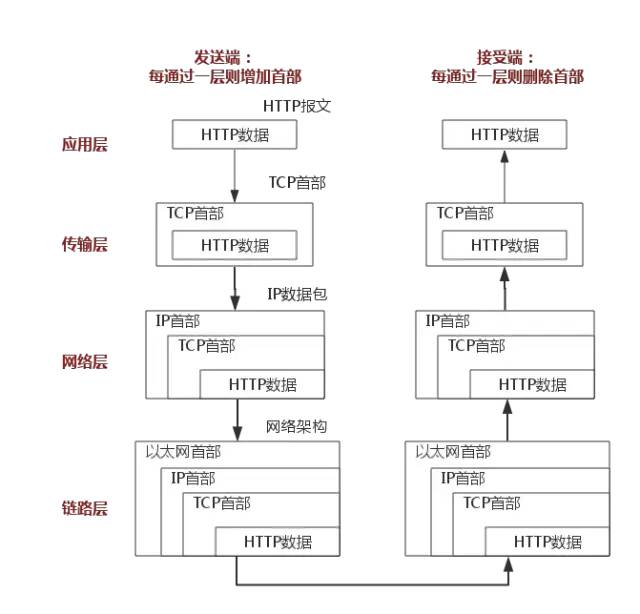

1. TCP/IP Model

The figure above uses the HTTP protocol as an example to illustrate the model in detail.

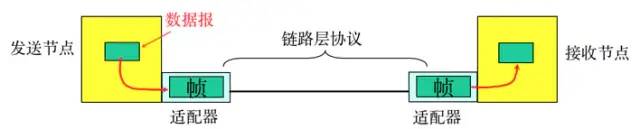

2. Data Link Layer

- Encapsulation into Frames: Add a header and a trailer to the Network Layer datagram to encapsulate it into a frame; the frame header includes the source and destination MAC addresses.
- Transparent Transmission: Zero-bit stuffing (for bit-oriented framing) and byte stuffing with escape characters (for byte-oriented framing), so that data bytes are never mistaken for frame delimiters.
- Reliable Transmission: Rarely provided on links with low bit-error rates, but wireless links such as WLANs do provide reliable transmission at the link layer.
- Error Detection (CRC): The receiver checks each frame for errors; if an error is found, the frame is discarded.
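Two of these mechanisms can be sketched in a few lines. This is a minimal illustration, assuming HDLC/PPP-style zero-bit stuffing (insert a 0 after every five consecutive 1s so the payload can never contain the flag 01111110) and using Python's `zlib.crc32` as a stand-in for the link layer's frame check sequence; real links differ in CRC polynomial, bit order, and trailer layout. The helper names `bit_stuff`, `bit_unstuff`, `make_frame`, and `check_frame` are hypothetical.

```python
import zlib

def bit_stuff(bits: str) -> str:
    """Zero-bit stuffing: insert a '0' after every run of five consecutive '1's."""
    out, ones = [], 0
    for b in bits:
        out.append(b)
        if b == "1":
            ones += 1
            if ones == 5:
                out.append("0")  # stuffed bit, removed again by the receiver
                ones = 0
        else:
            ones = 0
    return "".join(out)

def bit_unstuff(bits: str) -> str:
    """Receiver side: drop the '0' that follows every run of five '1's."""
    out, ones, skip = [], 0, False
    for b in bits:
        if skip:
            skip, ones = False, 0  # this is the stuffed '0'; discard it
            continue
        out.append(b)
        if b == "1":
            ones += 1
            if ones == 5:
                skip, ones = True, 0
        else:
            ones = 0
    return "".join(out)

def make_frame(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 trailer (big-endian) to the payload."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")

def check_frame(frame: bytes):
    """Return the payload if the CRC matches; otherwise None (frame discarded)."""
    payload, trailer = frame[:-4], frame[-4:]
    if zlib.crc32(payload).to_bytes(4, "big") != trailer:
        return None
    return payload
```

For example, `bit_stuff("0111111")` yields `"01111101"`, and flipping any single bit of a frame built by `make_frame` makes `check_frame` return `None`, since CRC-32 detects all single-bit errors.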

3. Network Layer

1. IP Protocol

The IP protocol is the core of the TCP/IP protocol suite: all TCP, UDP, ICMP, and IGMP data is carried in IP datagrams. Note that IP is not a reliable protocol; it provides no mechanism for handling data that fails to be delivered. That job is left to the upper layers: TCP provides reliable delivery, while UDP does not and leaves it to the application.

1.1 IP Address

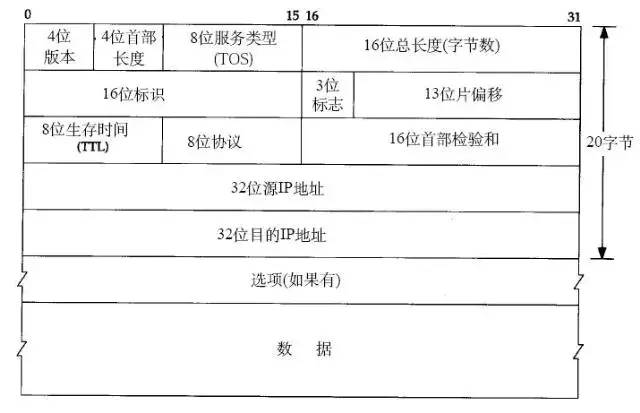

1.2 IP Protocol Header

2. ARP and RARP Protocols

3. ICMP Protocol

The IP protocol is not reliable; it does not guarantee that data is delivered. Naturally, then, detecting and reporting delivery problems has to be done by other modules, and one important module is ICMP (Internet Control Message Protocol). ICMP is not a higher-layer protocol; it operates at the IP layer, and its messages are carried inside IP datagrams.

When errors occur in transmitting IP data packets, such as unreachable hosts or unreachable routes, the ICMP protocol will package the error information and send it back to the host, giving the host a chance to handle the error. This is why it is said that protocols built on top of the IP layer can potentially achieve reliability.

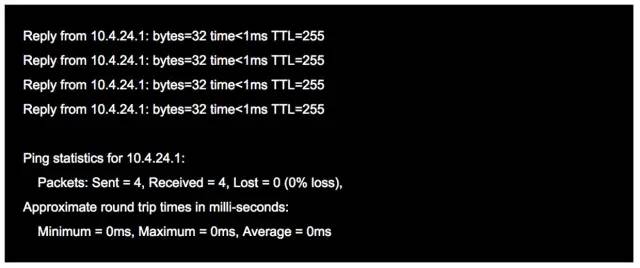

4. Ping

Ping is probably the best-known application of ICMP and is part of the TCP/IP protocol suite. The “ping” command checks whether a host is reachable over the network and is very helpful for analyzing and diagnosing network failures.

For example, when we cannot access a website, we usually ping it first. Ping prints some useful information, typically like the following:

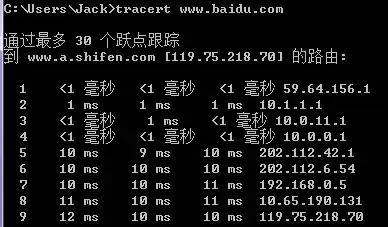
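Ping works by sending ICMP Echo Request messages (type 8) and waiting for Echo Reply messages (type 0). The sketch below only builds the bytes of an Echo Request, including the RFC 1071 Internet checksum; actually sending it needs a raw socket, which usually requires administrator privileges. The function names, identifier, and payload are hypothetical choices for illustration.

```python
import struct

def internet_checksum(data: bytes) -> int:
    """RFC 1071 Internet checksum: one's-complement sum of 16-bit words."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length data with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back into 16 bits
    return ~total & 0xFFFF

def build_echo_request(ident: int, seq: int, payload: bytes = b"ping") -> bytes:
    """ICMP Echo Request: type 8, code 0, checksum, identifier, sequence number, data."""
    header = struct.pack("!BBHHH", 8, 0, 0, ident, seq)  # checksum field zero for now
    csum = internet_checksum(header + payload)
    return struct.pack("!BBHHH", 8, 0, csum, ident, seq) + payload

pkt = build_echo_request(ident=0x1234, seq=1)
```

A receiver verifies the message by running the same checksum over the whole message, checksum field included; a correct message yields 0.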

5. Traceroute

Traceroute is an important tool for discovering the route between a host and a destination host, and it is also one of the most convenient.

The principle behind Traceroute is quite interesting. Given the destination host's IP address, it first sends a UDP packet with TTL=1 to the destination. The first router that receives this packet decrements the TTL by 1; since the TTL is now 0, the router discards the packet and sends an ICMP Time Exceeded message back to the source host. The host then sends a UDP packet with TTL=2, which causes the second router to send back an ICMP message, and so on. When a packet finally reaches the destination host itself, it is addressed to a UDP port that is unlikely to be in use, so the destination replies with an ICMP Destination Unreachable (port unreachable) message, which tells Traceroute that the destination has been reached. In this way, Traceroute obtains the IP addresses of all the routers along the path.

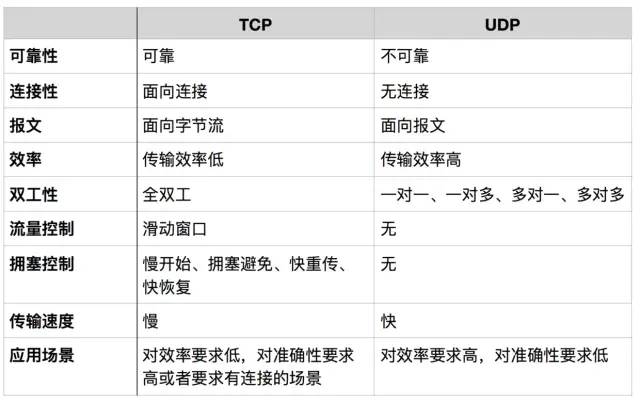

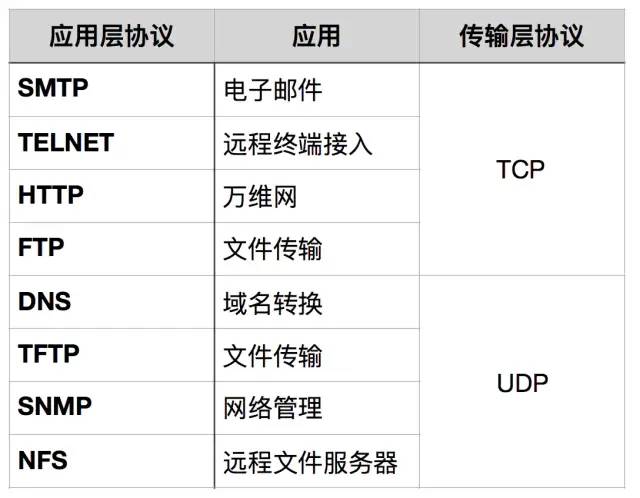
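The TTL trick can be simulated without any network access. This is a toy model, assuming a fixed path and that every router answers; the addresses come from the documentation ranges and, like the function names, are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Reply:
    sender: str
    kind: str  # "time-exceeded" (from a router) or "port-unreachable" (from the destination)

def forward(path, dest, ttl):
    """Simulate one UDP probe with the given TTL travelling through `path` towards `dest`."""
    for router in path:
        ttl -= 1  # each router decrements the TTL
        if ttl == 0:
            # the router discards the probe and reports ICMP Time Exceeded
            return Reply(router, "time-exceeded")
    # the probe reached the destination; the unused UDP port triggers Port Unreachable
    return Reply(dest, "port-unreachable")

def traceroute(path, dest, max_hops=30):
    """Send probes with TTL = 1, 2, 3, ... and collect the address of each responder."""
    hops = []
    for ttl in range(1, max_hops + 1):
        reply = forward(path, dest, ttl)
        hops.append(reply.sender)
        if reply.kind == "port-unreachable":
            break  # the destination itself answered
    return hops

hops = traceroute(["192.0.2.1", "198.51.100.1", "203.0.113.1"], "203.0.113.99")
```

Here `hops` lists the three routers in order followed by the destination. Real traceroute implementations also send several probes per TTL and time out on hops that do not answer.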

6. TCP/UDP

Message-Oriented

With message-oriented transmission, the application layer decides the length of each message it hands to UDP, and UDP sends it as is, one message at a time. The application must therefore choose a suitable message size. If the message is too long, the IP layer has to fragment it, which reduces efficiency; if it is too short, the IP datagram is small and the headers take up a relatively large share of it.
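The trade-off can be made concrete with a little arithmetic. This sketch assumes IPv4 without options (20-byte header), the 8-byte UDP header, and a typical Ethernet MTU of 1500 bytes; fragment payloads other than the last must be multiples of 8 bytes. The function names are hypothetical.

```python
import math

IP_HEADER = 20   # bytes, IPv4 header without options
UDP_HEADER = 8   # bytes

def ip_fragments(message_len: int, mtu: int = 1500) -> int:
    """Number of IPv4 fragments needed to carry one UDP message."""
    datagram = message_len + UDP_HEADER          # what UDP hands down to IP
    per_fragment = (mtu - IP_HEADER) // 8 * 8    # payload per fragment, a multiple of 8
    return math.ceil(datagram / per_fragment)

def header_overhead(message_len: int) -> float:
    """Share of an unfragmented IP datagram taken up by the IP and UDP headers."""
    return (IP_HEADER + UDP_HEADER) / (message_len + IP_HEADER + UDP_HEADER)
```

With these assumptions, a 1472-byte message fits in one datagram, 1473 bytes already needs two fragments, and a 4-byte message spends 87.5% of the datagram on headers.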

Byte Stream-Oriented

When Should TCP Be Used?

When Should UDP Be Used?

7. DNS

8. Establishment and Termination of TCP Connections

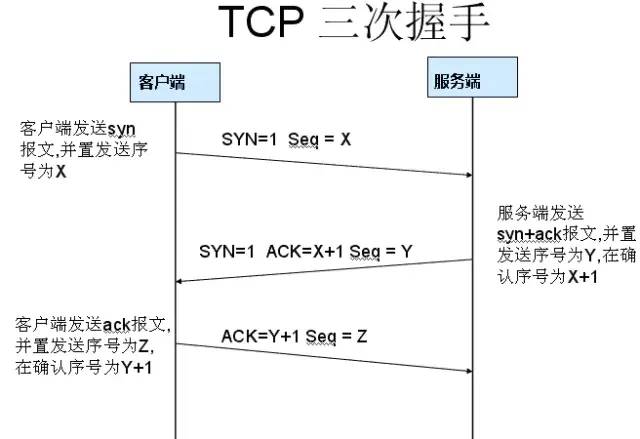

1. Three-Way Handshake

Why Three-Way Handshake?

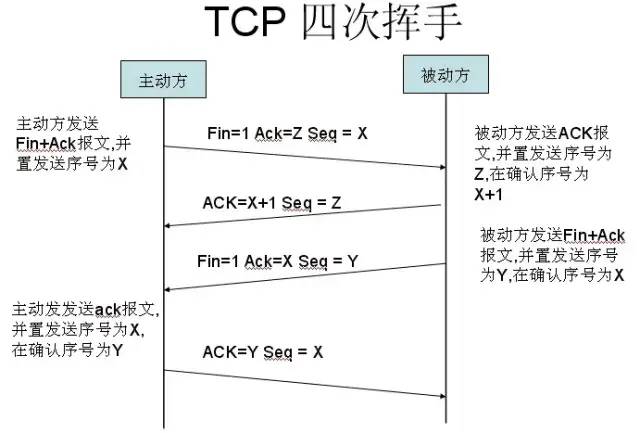

2. Four-Way Termination

After the client and server establish a TCP connection through the three-way handshake, when the data transmission is complete, the TCP connection must be terminated. This is known as the mysterious “four-way termination”.

First Termination: Host 1 (which can be either the client or the server) sets its Sequence Number and sends a FIN segment to Host 2, then enters the FIN_WAIT_1 state. This indicates that Host 1 has no more data to send to Host 2.

Second Termination: Host 2 receives the FIN segment and replies with an ACK segment whose Acknowledgment Number is the received Sequence Number plus 1, then enters the CLOSE_WAIT state; when Host 1 receives this ACK, it enters the FIN_WAIT_2 state. Host 2 is telling Host 1 that it “agrees” to the shutdown request, although Host 2 may still have data of its own to send.

Third Termination: Host 2 sends a FIN segment to Host 1, requesting to close its side of the connection, and enters the LAST_ACK state.

Fourth Termination: Host 1 receives Host 2's FIN segment, sends an ACK segment back, and enters the TIME_WAIT state. Host 2 closes the connection as soon as it receives this ACK. Host 1 waits for 2MSL (twice the Maximum Segment Lifetime); if nothing more arrives from Host 2 in that time, it concludes that Host 2 has closed normally and closes its own side as well.
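The four steps can be written as a small state-transition table and replayed for both hosts. This is a simplified sketch: the table covers only the normal close sequence described above (no simultaneous close, no combined FIN+ACK), and the event names such as `app_close` are hypothetical.

```python
# (current state, event) -> (next state, segment sent in response, or None)
TRANSITIONS = {
    ("ESTABLISHED", "app_close"): ("FIN_WAIT_1", "FIN"),   # active closer
    ("FIN_WAIT_1", "recv_ACK"): ("FIN_WAIT_2", None),
    ("FIN_WAIT_2", "recv_FIN"): ("TIME_WAIT", "ACK"),
    ("TIME_WAIT", "timeout_2MSL"): ("CLOSED", None),
    ("ESTABLISHED", "recv_FIN"): ("CLOSE_WAIT", "ACK"),     # passive closer
    ("CLOSE_WAIT", "app_close"): ("LAST_ACK", "FIN"),
    ("LAST_ACK", "recv_ACK"): ("CLOSED", None),
}

def step(state, event):
    return TRANSITIONS[(state, event)]

def simulate_close():
    """Replay the four segments and record (host1, host2) states after each step."""
    h1 = h2 = "ESTABLISHED"
    log = []
    h1, seg = step(h1, "app_close")       # 1: host 1 sends FIN
    log.append((h1, h2))
    h2, seg = step(h2, "recv_" + seg)     # 2: host 2 ACKs the FIN
    h1, _ = step(h1, "recv_" + seg)
    log.append((h1, h2))
    h2, seg = step(h2, "app_close")       # 3: host 2 sends its own FIN
    log.append((h1, h2))
    h1, seg = step(h1, "recv_" + seg)     # 4: host 1 ACKs it and enters TIME_WAIT
    h2, _ = step(h2, "recv_" + seg)
    log.append((h1, h2))
    h1, _ = step(h1, "timeout_2MSL")      # host 1 closes after waiting 2MSL
    log.append((h1, h2))
    return log
```

Calling `simulate_close()` shows Host 2 reaching CLOSED one step before Host 1, which is still waiting out TIME_WAIT.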

Why Four-Way Termination?

Why Wait for 2MSL?

MSL: Maximum Segment Lifetime, which is the longest time any segment can remain in the network before being discarded. There are two reasons:

- To ensure that the TCP protocol's full-duplex connection can be reliably closed.
- To ensure that any duplicate segments from this connection disappear from the network.

First point: If Host 1 went straight to CLOSED, then because IP is unreliable (or for other network reasons) Host 2 might never receive Host 1's final ACK. Host 2 would then time out and retransmit its FIN, but since Host 1 is already CLOSED, it would find no matching connection for that FIN and would answer with an RST instead of an ACK, so Host 2 would see an error rather than a clean close. By staying in TIME_WAIT instead, Host 1 can resend the ACK if the FIN arrives again, and the connection is closed properly on both sides.

Second point: If Host 1 went straight to CLOSED and then opened a new connection to Host 2, nothing guarantees that the new connection would use different port numbers from the old one; it may reuse exactly the same ones. Usually this causes no trouble, but consider the case where the new connection reuses the old connection's ports and a delayed segment from the old connection reaches Host 2 after the new connection is established: TCP would treat that delayed data as belonging to the new connection and mix it up with the new connection's real data. Therefore, a TCP connection must stay in TIME_WAIT for 2MSL, so that every segment from it has disappeared from the network.
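Both reasons show up in how a host handles segments that arrive while it is in TIME_WAIT. The class below is a hypothetical model, not any real stack's code: a retransmitted FIN is acknowledged again and the 2MSL timer is restarted (the behavior RFC 793 specifies for TIME-WAIT), while any other late segment from the old connection is simply dropped. The MSL of 30 seconds is an arbitrary value; RFC 793 suggests 2 minutes, and implementations choose their own.

```python
class TimeWait:
    """Hypothetical model of the active closer's connection in TIME_WAIT."""

    def __init__(self, now: float, msl: float):
        self.msl = msl
        self.expires = now + 2 * msl   # leave TIME_WAIT after 2MSL
        self.closed = False

    def on_segment(self, now: float, flags: str):
        """Handle a segment arriving at time `now`; return the reply to send, if any."""
        if self.closed or now >= self.expires:
            self.closed = True         # the 2MSL wait is over; the connection is gone
            return None
        if "FIN" in flags:
            # Reason 1: our last ACK was lost and the peer retransmitted its FIN.
            # Acknowledge it again and restart the 2MSL timer.
            self.expires = now + 2 * self.msl
            return "ACK"
        # Reason 2: any other late segment from the old connection is discarded,
        # so it cannot be mistaken for data of a new connection on the same ports.
        return None

tw = TimeWait(now=0.0, msl=30.0)
```

For example, a FIN arriving at t=10 s is answered with `"ACK"` and pushes the expiry from 60 s to 70 s.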

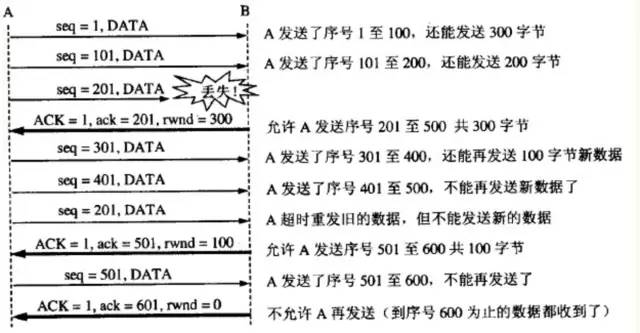

9. TCP Flow Control

If the sender transmits data too quickly, the receiver may not be able to keep up, leading to data loss. Flow control keeps the sender's transmission rate low enough for the receiver to keep up.

Using the sliding window mechanism makes it easy to implement flow control on a TCP connection.

Suppose A sends data to B. During connection setup, B tells A: “My receive window is rwnd = 400” (rwnd denotes the receiver window). The sender's sending window therefore cannot exceed the value given by the receiver. Note that the TCP window is measured in bytes, not segments. Assume each segment carries 100 bytes of data and the initial sequence number is 1. Uppercase ACK denotes the acknowledgment bit in the header, while lowercase ack denotes the value of the acknowledgment field.

From the diagram, we can see that B performed three flow control actions. The first reduced the window to rwnd = 300, the second to rwnd = 100, and finally to rwnd = 0, meaning the sender is no longer allowed to send data. This state of pausing the sender will last until Host B issues a new window value.

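TCP maintains a persistence timer for each connection. Without it, if the receiver's later window update were lost, the two sides could deadlock: the receiver waiting for data, the sender waiting for a nonzero window. Whenever one side of a TCP connection receives a zero-window notification from the other side, it starts the persistence timer. When the timer expires, it sends a zero-window probe segment (carrying only 1 byte of data), and the other side answers with an acknowledgment that reports its current window. If the window is still zero, the side receiving that acknowledgment restarts its persistence timer; if the window is nonzero, transmission can resume.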

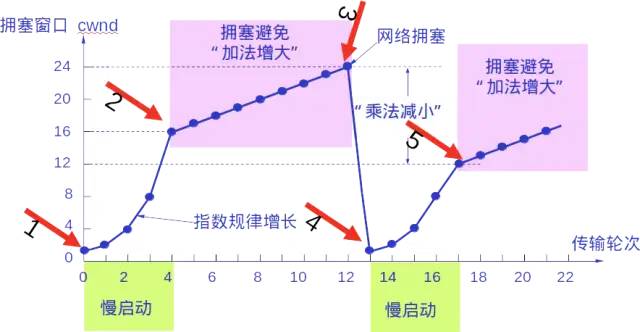
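A sender limited only by rwnd can be sketched as follows, using the numbers from the example (100-byte segments, initial sequence number 1, rwnd = 400, then 300, 100, and 0). The class and method names are hypothetical, and the ack values in the usage lines are made up for illustration; they are not read off the figure.

```python
SEGMENT = 100  # bytes of data per segment, as in the example

class Sender:
    """Sliding-window sender limited only by the receiver's advertised window (rwnd)."""

    def __init__(self, isn: int = 1, rwnd: int = 400):
        self.base = isn        # first unacknowledged byte (left edge of the send window)
        self.next_seq = isn    # first byte not yet sent
        self.rwnd = rwnd       # window advertised by the receiver, in bytes

    def usable(self) -> int:
        """Bytes that may still be sent without exceeding the window."""
        return self.base + self.rwnd - self.next_seq

    def send_allowed(self):
        """Send as many full segments as the window allows; return their byte ranges."""
        sent = []
        while self.usable() >= SEGMENT:
            sent.append((self.next_seq, self.next_seq + SEGMENT - 1))
            self.next_seq += SEGMENT
        return sent

    def on_ack(self, ack: int, rwnd: int):
        """Process ACK=1 with ack = next byte expected and a (possibly new) window."""
        self.base = max(self.base, ack)
        self.rwnd = rwnd

    def needs_persist_timer(self) -> bool:
        """A zero window means: stop sending and start the persistence timer."""
        return self.rwnd == 0

s = Sender(isn=1, rwnd=400)
first = s.send_allowed()   # bytes 1-400 in four segments
s.on_ack(201, 300)         # window shrinks to 300: only bytes 401-500 may be sent
second = s.send_allowed()
```

After a later `on_ack(601, 0)`, `send_allowed()` returns nothing and `needs_persist_timer()` becomes true, which is the point where the zero-window probing described above takes over.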

10. TCP Congestion Control

The sender maintains a state variable called the congestion window (cwnd), whose size depends on the level of network congestion and changes dynamically. The sender sets its sending window equal to the congestion window (assuming the receiver's window is large enough; in general, the sending window is the smaller of rwnd and cwnd).

The principle behind controlling the congestion window is: as long as there is no congestion in the network, the congestion window increases to allow more packets to be sent. However, if network congestion occurs, the congestion window decreases to reduce the number of packets injected into the network.

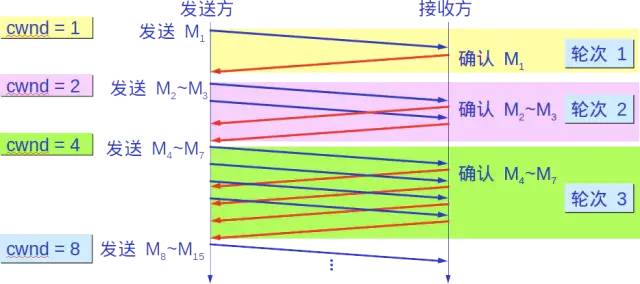

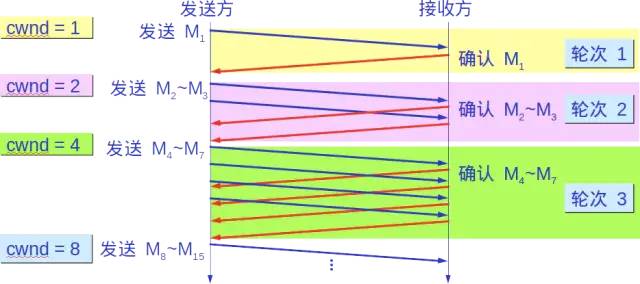

The slow start algorithm:

When a host begins to send data, injecting a large amount of data into the network at once may cause congestion, because the network's current load is not yet known. A better approach is to probe the network gradually, growing the sending window from small to large, that is, gradually increasing the congestion window.

Typically, when starting to send segments, the congestion window (cwnd) is set to a value of one maximum segment size (MSS). For every new segment acknowledgment received, the congestion window is increased by at most one MSS. This gradual increase of the sender’s congestion window (cwnd) allows for a more reasonable rate of packet injection into the network.

After each transmission round, the congestion window (cwnd) doubles. A transmission round lasts essentially one round-trip time (RTT). The term “transmission round” emphasizes that all the segments the congestion window allows are sent back to back, and the round ends when the acknowledgment for the last byte sent arrives.

Additionally, the “slow” in slow start does not refer to a slow growth rate of cwnd but rather that during the initial sending of segments, cwnd is set to 1, allowing the sender to send only one segment at first (to probe the network congestion) before gradually increasing cwnd.

To prevent the congestion window (cwnd) from growing too large and causing network congestion, a slow start threshold (ssthresh) state variable is set. The usage of the slow start threshold (ssthresh) is as follows:

- When cwnd < ssthresh, use the slow start algorithm.
- When cwnd > ssthresh, stop using the slow start algorithm and switch to the congestion avoidance algorithm.
- When cwnd = ssthresh, either the slow start algorithm or the congestion avoidance algorithm can be used.

Congestion Avoidance

Congestion avoidance increases the congestion window (cwnd) gradually: for every round-trip time (RTT) that passes, the sender's cwnd grows by 1 instead of doubling. cwnd therefore grows linearly, much more slowly than under the slow start algorithm.

Whether in the slow start phase or the congestion avoidance phase, as soon as the sender determines that network congestion has occurred (signalled by an acknowledgment not arriving in time, i.e., a retransmission timeout), it sets the slow start threshold (ssthresh) to half of the sending window at the moment congestion occurred (but not less than 2). It then resets the congestion window (cwnd) to 1 and runs the slow start algorithm again.

The purpose of this is to quickly reduce the number of packets sent into the network, giving congested routers enough time to process the packets queued in their buffers.
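The rules above can be traced round by round. This is a simplified model, assuming cwnd is counted in segments, one update per transmission round, and a timeout in a round chosen by the caller; the initial ssthresh of 16 and the timeout in round 12 are arbitrary values, not taken from the figure. Two modeling choices are labeled in the comments: slow start is capped at ssthresh, and cwnd = ssthresh uses congestion avoidance (the text allows either).

```python
def simulate(rounds: int, ssthresh: int, timeout_at: set):
    """Return cwnd (in segments) at the start of each transmission round."""
    cwnd = 1
    history = []
    for rnd in range(rounds):
        history.append(cwnd)
        if rnd in timeout_at:
            ssthresh = max(cwnd // 2, 2)    # half the window at congestion, at least 2
            cwnd = 1                        # restart with slow start
        elif cwnd < ssthresh:
            cwnd = min(cwnd * 2, ssthresh)  # slow start: double per RTT (capped at ssthresh)
        else:
            cwnd += 1                       # congestion avoidance: +1 per RTT
    return history

trace = simulate(rounds=18, ssthresh=16, timeout_at={12})
```

The trace doubles 1, 2, 4, 8, 16, climbs linearly to 24, drops back to 1 after the timeout, and then switches to linear growth at the new threshold of 12.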

The following diagram illustrates this congestion control process with specific values. Here the sending window is taken to be equal to the congestion window.

2. Fast Retransmit and Fast Recovery

Fast Retransmit

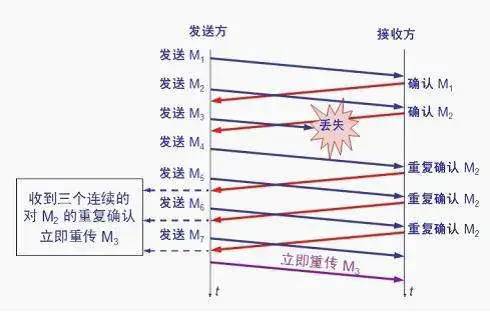

The fast retransmit algorithm requires the receiver to immediately send duplicate acknowledgments for each out-of-order segment received (to allow the sender to know as soon as possible that a segment has not reached the receiver) rather than waiting until it sends its own data to include the acknowledgment.

Assuming the receiver has received segments M1 and M2 and has sent acknowledgments for both. Now, suppose the receiver did not receive M3 but subsequently received M4.

Clearly, the receiver cannot acknowledge M4: it arrived out of order, and acknowledgments are cumulative, covering only data received in sequence. Under the usual reliable-transmission rules, the receiver could either do nothing or send an acknowledgment for M2 at a suitable time.

Under the fast retransmit algorithm, however, the receiver should promptly send a duplicate acknowledgment for M2, so that the sender learns as early as possible that M3 has not arrived. The sender goes on to send M5 and M6; the receiver receives them and must again send a duplicate acknowledgment for M2 each time. The sender has thus received four acknowledgments for M2 from the receiver, three of which are duplicates.

The fast retransmit algorithm also stipulates that as soon as the sender receives three duplicate acknowledgments, it should immediately retransmit the unacknowledged segment M3 without waiting for the retransmission timer for M3 to expire.
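The M1 to M6 scenario can be replayed in code. This sketch assumes acknowledgments count segments rather than bytes: an ACK carries the number of the next segment the receiver expects, so "an acknowledgment for M2" appears as ACK 3. Real TCP acknowledges byte numbers; the function names are hypothetical.

```python
def receiver_acks(arrivals):
    """Cumulative ACK (next expected segment number) sent for each arriving segment."""
    received, expected, acks = set(), 1, []
    for seg in arrivals:
        received.add(seg)
        while expected in received:
            expected += 1
        acks.append(expected)  # an out-of-order arrival repeats the previous ACK
    return acks

def fast_retransmit(acks, dup_threshold=3):
    """Return the segment to retransmit once `dup_threshold` duplicate ACKs arrive, else None."""
    last, dups = None, 0
    for ack in acks:
        if ack == last:
            dups += 1
            if dups == dup_threshold:
                return ack  # the segment the receiver is still waiting for
        else:
            last, dups = ack, 0
    return None

acks = receiver_acks([1, 2, 4, 5, 6])   # M3 is lost
```

Here `acks` is `[2, 3, 3, 3, 3]`: one ordinary acknowledgment for M2 followed by three duplicates, so `fast_retransmit(acks)` returns 3, i.e. M3 is resent without waiting for its timer.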

By retransmitting unacknowledged segments early, the overall throughput of the network can be improved by approximately 20%.

Fast Recovery

- When the sender receives three consecutive duplicate acknowledgments, it executes the “multiplicative decrease” algorithm, setting the slow start threshold (ssthresh) to half of the current congestion window.
- Unlike slow start, the congestion window (cwnd) is not reset to 1; instead, it is set to the new (halved) ssthresh value, and the congestion avoidance algorithm (“additive increase”) is executed, so the congestion window grows slowly and linearly.
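The difference between reacting to a timeout and reacting to three duplicate ACKs fits in one function. This is a sketch of the behavior as described here, in segments, with the same "at least 2" floor as the timeout case; the function name and signal strings are hypothetical. Note that RFC 5681 refines fast recovery further: it temporarily sets cwnd to ssthresh plus 3 segments while recovering and deflates it to ssthresh once new data is acknowledged.

```python
def react_to_loss(cwnd: int, signal: str):
    """Return (new_ssthresh, new_cwnd) after a loss signal, counted in segments."""
    ssthresh = max(cwnd // 2, 2)   # multiplicative decrease in both cases
    if signal == "timeout":
        return ssthresh, 1         # back to slow start from a single segment
    if signal == "3dupack":
        return ssthresh, ssthresh  # fast recovery: continue with additive increase
    raise ValueError(f"unknown loss signal: {signal}")
```

For example, with cwnd = 24 a timeout gives (12, 1), whereas three duplicate ACKs give (12, 12) and cwnd then grows by 1 per RTT from 12.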