Introduction

In recent years, as voice interaction technology has developed rapidly and matured, the voice industry has entered a phase of explosive growth. According to Analysys, China's voice market reached 20 billion in 2017 (Figure 1). As voice technology is deployed at scale in smart speakers, smart homes, in-car control, translation devices, and story machines, the market is expected to keep growing rapidly.

Figure 1 Scale of China’s Voice Industry (Source: Analysys)

Faced with rapidly growing market demand, mainstream voice technology companies are turning to intelligent voice chips to cut development cost and time, reach customers faster, and protect their algorithm intellectual property. Many have launched, or are about to launch, artificial intelligence chips with built-in voice algorithms to respond to changing market needs and challenges.

1. Concept of Intelligent Voice Chips

Artificial intelligence: According to the definition from the 1956 Dartmouth Conference, artificial intelligence means "making machines behave in ways that resemble human intelligent behavior, including autonomous understanding, judgment, and execution of language, images, and actions." Its development is divided into three stages: weak AI, strong AI, and super AI. We are currently in the weak AI stage, in which systems complete specific tasks such as speech recognition, image recognition, and translation, and excel only within a single domain. These systems exist mainly to solve specific problems and rely largely on statistical data and deep learning; they cannot yet simulate human thinking, so weak AI still belongs to the category of "tools."

Broadly speaking, any chip that meets the needs of voice-based artificial intelligence applications can be referred to as an intelligent voice chip.

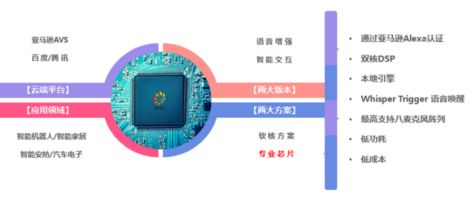

Figure 2 Industry Drivers of Intelligent Voice Chips

2. Current Status and Major Players of Intelligent Voice Chips

Intelligent voice chips are still at an early stage of development. Only a few manufacturers have released mass-produced chips; most companies' chips are still in the planning or prototype stage, far from mass production and large-scale adoption.

The players in the intelligent voice chip market can be divided into four categories:

2.1 Companies Engaged in Voice or Main Control Chip Research and Production

Representative companies include Knowles, MicroSemi, MediaTek, Allwinner, Junzheng, and Rockchip.

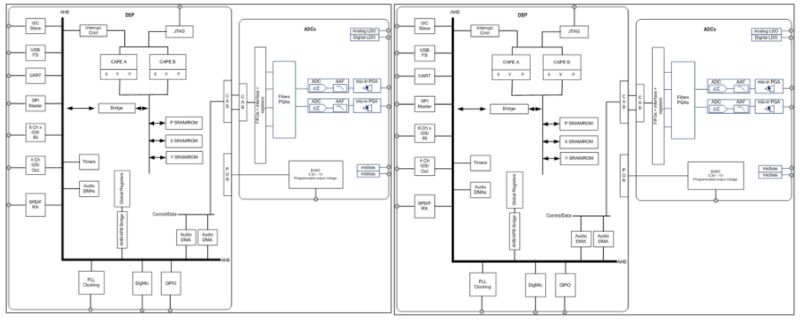

(1) Knowles: Launched the AudioSmart voice solution, including the CX20924 four-microphone and CX20921 dual-microphone voice input processing chips, with main functions including voice enhancement (four-microphone echo cancellation, noise suppression, dereverberation), 360-degree sound source localization, and voice wake-up.

Figure 3 Knowles 20924/20921 Chip Block Diagram
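The 360-degree sound source localization mentioned above is commonly built on time difference of arrival (TDOA): the same sound reaches two microphones at slightly different times, and that delay, together with the microphone spacing, gives the direction. The sketch below is a generic textbook version, not any vendor's implementation. It assumes a far-field source, a single microphone pair with a hypothetical 10 cm spacing, a 16 kHz sample rate, and a speed of sound of 343 m/s, and it finds the delay with a plain cross-correlation search (production systems often use more robust variants such as GCC-PHAT).

```python
import math
import random

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C (assumed value)


def estimate_lag(x, y, max_lag):
    """Return the integer lag L (samples) that maximizes sum_n x[n] * y[n + L].

    If y is x delayed by D samples (y[n] = x[n - D]), the peak is at L = D,
    so a positive lag means the sound reached microphone x first.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(x[n] * y[n + lag] for n in range(len(x)) if 0 <= n + lag < len(y))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def lag_to_angle(lag, sample_rate, mic_spacing):
    """Convert a lag into a direction of arrival in degrees from broadside."""
    tau = lag / sample_rate                 # time difference of arrival, seconds
    s = SPEED_OF_SOUND * tau / mic_spacing  # sin(theta) for a far-field source
    s = max(-1.0, min(1.0, s))              # clamp overshoot caused by noise/rounding
    return math.degrees(math.asin(s))


# Demo with synthetic data: mic y hears the same white noise 3 samples later.
rng = random.Random(0)
x = [rng.gauss(0.0, 1.0) for _ in range(512)]
y = [0.0] * 3 + x[:-3]
# Largest physically possible lag is 0.1 m * 16000 Hz / 343 m/s, about 4.7 samples.
lag = estimate_lag(x, y, max_lag=5)
angle = lag_to_angle(lag, sample_rate=16000, mic_spacing=0.1)
print(lag, round(angle, 1))  # prints: 3 40.0
```

A single pair only resolves directions within ±90 degrees of broadside; full 360-degree coverage combines several pairs, for example from a circular four-microphone array.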

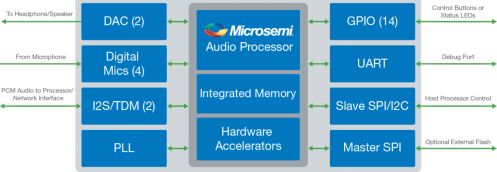

(2) MicroSemi: Released the ZL38051 chip solution, whose main functions include voice enhancement (echo cancellation, beamforming, noise suppression, and sound source localization). It also supports full-duplex calls, audio playback, and codec functions.

Figure 4 MicroSemi ZL38051 Chip Block Diagram
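Echo cancellation, which appears in nearly every feature list in this section, removes the loudspeaker's own sound from the microphone signal so the device can still hear the user while it plays audio. A classic approach is an adaptive filter that learns the echo path from the loudspeaker (far-end) signal to the microphone and subtracts the predicted echo. The sketch below uses the normalized least-mean-squares (NLMS) algorithm as an illustrative assumption; it is not the ZL38051's actual algorithm, and the filter length, step size, and 3-tap echo path in the demo are made-up values.

```python
import random


def nlms_echo_canceller(far_end, mic, taps=8, mu=0.5, eps=1e-6):
    """Cancel loudspeaker echo from the mic signal with an NLMS adaptive filter.

    far_end: samples sent to the loudspeaker (the echo reference).
    mic:     microphone samples = echo of far_end (+ near-end speech/noise).
    Returns (residual, weights): residual is the echo-cancelled output and
    weights approximate the echo path's impulse response.
    """
    w = [0.0] * taps    # adaptive estimate of the echo path
    buf = [0.0] * taps  # buf[k] = far_end[n - k], newest sample first
    residual = []
    for x_n, d_n in zip(far_end, mic):
        buf = [x_n] + buf[:-1]
        echo_hat = sum(wk * bk for wk, bk in zip(w, buf))  # predicted echo
        e = d_n - echo_hat                                  # what remains after cancellation
        norm = eps + sum(bk * bk for bk in buf)             # reference energy (normalization)
        w = [wk + mu * e * bk / norm for wk, bk in zip(w, buf)]
        residual.append(e)
    return residual, w


# Demo: hypothetical 3-tap echo path, white-noise far-end signal, no near-end talker.
rng = random.Random(1)
echo_path = [0.5, 0.3, -0.2]
far = [rng.gauss(0.0, 1.0) for _ in range(2000)]
mic = [sum(h * far[n - k] for k, h in enumerate(echo_path) if n - k >= 0)
       for n in range(len(far))]
residual, w = nlms_echo_canceller(far, mic)
# After adaptation, w[:3] should be very close to echo_path and the residual near zero.
print([round(v, 3) for v in w[:3]])
```

Real room echo paths are hundreds to thousands of taps long, and a practical canceller must also handle double talk (user and loudspeaker active at once), which this sketch ignores.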

(3) MediaTek: Currently promotes the MT8516 voice-specific chip, which supports up to an 8-channel TDM microphone-array interface and a 2-channel PDM digital microphone interface, making it well suited to far-field voice control and smart speakers. Alibaba's Tmall Genie, Baidu's DuerOS-based speakers, and Sony's Google Assistant speakers all use MediaTek solutions.

(4) Junzheng: Launched the X1800 chip in close collaboration with Micro-Nano Perception Computing. It supports four-microphone arrays with software noise reduction and echo cancellation, and is already used in products such as the Little Pepper S1 and Baidu devices.

Figure 5 Little Pepper S1 Speaker Equipped with X1800

(5) Allwinner: The R16 is a cost-effective quad-core ARM Cortex-A7 processor with strong computing performance and rich interfaces. It runs Tina, an open-source Linux-based system developed by Allwinner, and is used in products such as the Dingdong speaker and the Xiaomi mini. The R311, launched at the same time, has a complete built-in noise reduction algorithm and supports screen-equipped speaker designs, reducing development difficulty for product companies.

Figure 6 Allwinner R16/R311 Chip

(6) Rockchip: The RK3229 is a quad-core Cortex-A7 chip that supports 4 to 8 microphones. On the algorithm side, it supports sound source localization, sound source enhancement, echo cancellation, and noise suppression. The RK3229 is also the first chip solution to support direct connection of 8-channel I2S digital silicon microphones, which significantly reduces cost and keeps it compatible with a variety of microphone-array algorithms and platforms.

Figure 7 Rockchip RK3229 Chip
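Multi-microphone sound source enhancement, like the beamforming listed for the ZL38051, can in its simplest form be done with a delay-and-sum beamformer: each channel is shifted by the delay at which the target sound reaches it, and the aligned channels are averaged, so the target adds coherently while uncorrelated noise partly cancels. The sketch below assumes integer-sample steering delays that are already known (for example, from a TDOA estimate) and a hypothetical 4-microphone array with synthetic noise; it does not describe how the RK3229 actually implements enhancement.

```python
import random


def delay_and_sum(channels, delays):
    """Delay-and-sum beamformer with known integer steering delays (samples).

    Assumes channels[i][n] ~= s[n - delays[i]] + noise_i[n] with delays[i] >= 0,
    so channels[i][n + delays[i]] lines every channel up with s[n]; averaging
    keeps the source and reduces noise that is uncorrelated between mics.
    """
    length = min(len(ch) - d for ch, d in zip(channels, delays))
    return [sum(ch[n + d] for ch, d in zip(channels, delays)) / len(channels)
            for n in range(length)]


def mse(a, b):
    """Mean squared error between two equal-length signals."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(b)


# Demo: hypothetical 4-mic array, target reaches mic i i samples late,
# each mic adds its own independent noise (standard deviation 0.5).
rng = random.Random(2)
source = [rng.gauss(0.0, 1.0) for _ in range(4000)]
delays = [0, 1, 2, 3]
channels = [[0.0] * d + [s + rng.gauss(0.0, 0.5) for s in source] for d in delays]
enhanced = delay_and_sum(channels, delays)

single_mic_error = mse(channels[0], source)  # noise power on one mic, about 0.25
array_error = mse(enhanced, source)          # roughly a quarter of that with 4 mics
```

With four microphones and independent noise of equal power, averaging cuts the noise power to about a quarter (roughly 6 dB of array gain), which is what the two error values in the demo compare.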

2.2 Voice Algorithm Technology Companies

Representative companies include iFlytek and Unisound, which have long provided voice processing algorithms and speech recognition engine services.

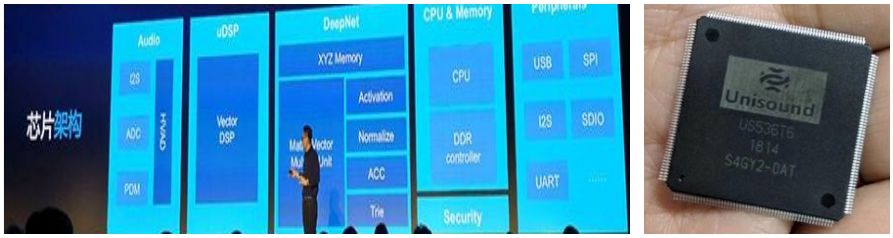

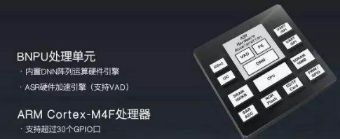

Unisound Unione: Its built-in neural network algorithms and accelerators implement sound source localization, echo cancellation, voice wake-up, offline recognition, and voiceprint recognition. Its mass-production date has not yet been determined.

Figure 8 Unisound Unione

2.3 Artificial Intelligence Startups

Representative companies include Micro-Nano Perception, Qiying Talon, Rokid, and Voice Intelligence Technology.

(1) Micro-Nano Perception: Provides one-stop acoustic solutions spanning soft-core algorithms, chip modules, and acoustic design. It partnered with Junzheng to launch the X1800 smart speaker solution and jointly released the CK805 lightweight AIoT processor with Hangzhou Zhongtian Micro. It has completed the design of its own intelligent voice chip, with wafer production expected to be completed in the fourth quarter. The chip integrates AD (analog-to-digital conversion), DSP, and neural network acceleration modules, enabling far-field pickup, wake-up with interruption (barge-in), noise reduction, and echo cancellation with 2- to 8-microphone arrays.

Figure 9 Overview of Micro-Nano Perception Intelligent Voice Chip

(2) Qiying Talon: A company focused on designing artificial intelligence chips and developing the supporting intelligent algorithm engines. In September 2016, it launched the CI1006, a dedicated deep-neural-network speech recognition chip, which has entered mass production and is shipping.

Figure 10 CI1006 Chip Architecture

3. Application Scenarios of Intelligent Voice Chips

(1) In-Car

Because the driver's hands and eyes are occupied and the use cases are clearly defined, voice has become the most suitable interaction method in the car. In-car voice applications focus mainly on navigation, supplemented by queries and simple command control. Therefore, once intelligent voice chips mature, smart rearview mirrors, smart center consoles, and smart navigation products built on them will quickly emerge.

As for the industry's much-discussed idea of "using voice as an entry point to connect various services and build a connected-vehicle ecosystem," it currently seems far from reality. The core difficulty is that the industry has yet to identify must-have, high-frequency user needs in in-car scenarios. A comprehensive in-car ecosystem may only become possible once autonomous vehicles are widespread and free up people's hands, eyes, and attention.

Figure 11 In-Car Voice Interaction System

(2) Smart Home/Smart Speakers

In home scenarios, intelligent voice applications mainly revolve around smart TVs, speakers, and home robots, addressing needs such as searching for movies, listening to music, reminders, simple interactions, and application retrieval.

The nature of the home environment makes voice the most suitable interaction method there. Platforms such as smart speakers will attract more and more applications, steadily enriching their functions and rounding out the smart home ecosystem. As user habits form, these "smart home control centers" and "traffic entry points" are expected to gain popularity quickly and become bestsellers. However, because voice is an entirely new interaction method, applications will face new challenges beyond the necessary technical improvements: during development and design, they must rethink both user experience and the value they deliver.

In addition to smart appliances such as speakers and table lamps, home robots are also attracting entrepreneurs and investors. Which form of smart product ultimately succeeds depends on consumers' willingness to pay, but it is already clear that voice interaction will become the mainstream technology in smart homes. Intelligent voice chips with built-in voice interaction technology will undoubtedly become the best choice for manufacturers that want to develop products quickly.

Figure 12 Amazon Echo Series Smart Speaker Products

(3) Security Monitoring

Currently, security cameras focus mainly on capturing high-definition images, and demand for voice capture, separation, and recognition is still in its infancy. However, as fields such as anti-terrorism and public safety face new challenges, high-definition audio capture and noise and reverberation suppression supported by multi-microphone arrays will gradually be added to the requirements for security products. Dedicated intelligent voice chips with these functions will therefore gradually be integrated into security cameras.

4. Future Trends of Intelligent Voice Chips

(1) Achieving Edge Computing

Currently, apart from voice wake-up and front-end audio processing, complex tasks such as speech recognition, voice interaction, and real-time translation must connect to cloud servers to complete. In the future, once chips have sufficient computing power, the entire voice processing pipeline can run on-chip without relying on the cloud or a network connection, enabling more mobile applications while protecting user data privacy.

(2) Compatibility with Multiple Neural Network Frameworks

Currently, some intelligent voice chips rely on traditional digital signal processing algorithms, while others use RNNs, CNNs, and other neural network algorithms. In the future, more types of neural network algorithms may emerge for different voice interaction scenarios. A single intelligent chip could support all of them and invoke different networks in different situations to meet customer needs.

(3) Low Power Consumption and Small Size

Currently, voice chips are limited by power consumption and size, which prevents large-scale use in wearables, toys, drones, and other devices that lack a wired power supply and have extremely limited hardware space. If these issues can be resolved, voice interaction will be able to reach more industries and enable more products.
