Using edge AI technology, robotics developer Kabilan KB, an undergraduate at Kalyani Government Engineering College, is working to improve mobility for people with disabilities.

With the help of artificial intelligence, robots, tractors, and baby strollers (and even skate parks) are becoming autonomous. Developer Kabilan KB is bringing autonomous navigation to wheelchairs, helping people with disabilities get around more easily.

This undergraduate from Kalyani Government Engineering College in India is advancing his autonomous wheelchair project using the NVIDIA Jetson edge AI and robotics technology platform.

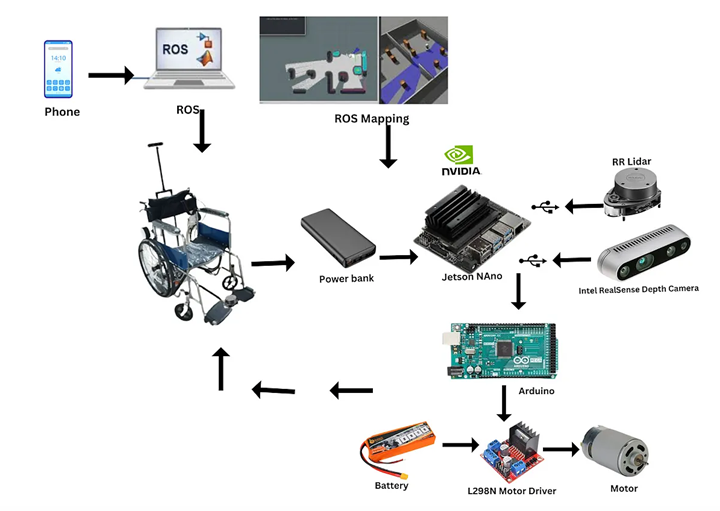

This autonomous electric wheelchair is equipped with depth and LiDAR sensors, along with a USB camera, which allow it to perceive its surroundings and plan an obstacle-free path to the user’s chosen destination.

KB stated: “Users of this electric wheelchair only need to provide the location they wish to go to. These locations are programmed into the autonomous navigation system and assigned numerical values, for example ‘1’ for the kitchen and ‘2’ for the bedroom. The user just needs to press the corresponding button, and the autonomous wheelchair will take them to the specified location.”
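
The button-to-destination scheme KB describes can be pictured as a simple lookup table. The following is only a minimal sketch: the names `DESTINATIONS` and `resolve_destination` and the coordinates are hypothetical, since the article does not say how the actual system stores its locations.

```python
from typing import Optional, Tuple

# Hypothetical mapping from a button number to (room name, goal position in meters).
# The coordinates are made-up values for illustration only.
DESTINATIONS = {
    1: ("kitchen", (4.0, 1.5)),
    2: ("bedroom", (0.5, 6.0)),
}


def resolve_destination(button: int) -> Optional[Tuple[str, Tuple[float, float]]]:
    """Return the (name, goal) pair assigned to a button, or None if unassigned."""
    return DESTINATIONS.get(button)
```

In a setup like this, pressing ‘1’ would yield the kitchen's goal position, which a navigation system could then plan a path toward; an unassigned button returns `None` so the wheelchair does not move.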

The NVIDIA Jetson Nano developer kit processes data from the cameras and sensors in real time, using deep learning-based computer vision models to identify obstacles in the environment.

This developer kit acts as the “brain” of the electric wheelchair’s autonomous system. It generates a 2D map of the surrounding environment to plan a collision-free path to the destination, and it sends updated signals to the wheelchair to help keep the user safe along the way.
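
To illustrate what planning a collision-free path on a 2D map can look like, here is a minimal sketch that uses breadth-first search over a small occupancy grid. It is not KB's implementation: the article does not name the planner he uses, and the function `plan_path` and the grid layout are assumptions made for this example.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column)


def plan_path(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Breadth-first search over free cells (0); cells marked 1 are obstacles.

    Returns the shortest 4-connected path from start to goal, or None if
    no collision-free path exists.
    """
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] == 1 or grid[goal[0]][goal[1]] == 1:
        return None
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the predecessors to rebuild the path.
            path = []
            current: Optional[Cell] = cell
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None
```

One plausible way to keep the route safe as the surroundings change would be to rerun a planner like this whenever the map is updated, although the article does not describe how the real system handles replanning.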

About the Maker

With a background in mechanical engineering, KB has become fascinated with AI and robotics in recent years, spending his free time searching for relevant course videos on video-sharing platforms.

He is currently pursuing a bachelor’s degree in Robotics and Automation at Kalyani Government Engineering College, with aspirations to start his own robotics company one day.

KB prides himself on being self-taught, having earned multiple certifications from the NVIDIA Deep Learning Institute, including “Building Edge Video AI Applications on Jetson Nano” and “Developing, Customizing, and Publishing with Extensions in Omniverse.”

After grasping the fundamentals of robotics, KB began running simulation experiments in NVIDIA Omniverse, a platform for building and operating 3D tools and applications based on the OpenUSD framework.

He said, “Using Omniverse for simulation, I don’t need to spend a fortune building prototype models of my robots, because I can generate synthetic data instead. It’s truly the software of the future.”

Source of Inspiration

With this latest NVIDIA Jetson project, KB hopes to create a device that can help his relatives with mobility impairments, as well as others who may be unable to operate manual or electric wheelchairs.

KB stated: “Sometimes people cannot afford fully electric wheelchairs. In India, only a portion of the population can afford such wheelchairs, so I am determined to use the most basic electric wheelchair available on the market and connect it to Jetson for autonomous operation.”

KB’s personal project has received sponsorship from Boston Children’s Hospital and Harvard Medical School’s Program in Global Surgery and Social Change.

KB’s Jetson Project

After purchasing a basic electric wheelchair, KB connected the wheelchair’s motor hub to NVIDIA Jetson Nano, LiDAR, and depth cameras.

He trained the AI algorithms for the autonomous wheelchair using YOLO object detection on the Jetson Nano, along with the Robot Operating System (ROS), a popular software framework for building robotics applications.

These algorithms enable the autonomous electric wheelchair to perceive and map its environment and plan a collision-free path.
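
As a rough picture of how LiDAR readings can become a map, the sketch below marks the cell struck by each beam in a small square grid centered on the sensor. It is a simplified illustration, not the mapping method used in KB's project: the function `scan_to_grid` and all of its parameters are hypothetical, and real systems also track the robot's pose and the free space along each beam.

```python
import math
from typing import List, Sequence


def scan_to_grid(
    ranges: Sequence[float],
    angle_step_deg: float,
    size: int,
    resolution: float,
    max_range: float,
) -> List[List[int]]:
    """Mark the cell hit by each beam as occupied (1) in a size x size grid.

    The sensor sits at the grid center, beam i points at i * angle_step_deg
    degrees, and resolution is the cell width in meters.
    """
    grid = [[0] * size for _ in range(size)]
    center = size // 2
    for i, dist in enumerate(ranges):
        if not (0.0 < dist < max_range):
            continue  # skip beams with no valid return
        angle = math.radians(i * angle_step_deg)
        col = center + int(round(dist * math.cos(angle) / resolution))
        row = center + int(round(dist * math.sin(angle) / resolution))
        if 0 <= row < size and 0 <= col < size:
            grid[row][col] = 1
    return grid
```

A grid produced this way uses 1 for occupied cells and 0 for everything else, which is the kind of 2D representation a path planner can search for a collision-free route.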

“The real-time processing speed of NVIDIA Jetson Nano prevents delays or lags, so the user experience remains unaffected,” KB noted. He has been developing the prototype for this project since June. He has detailed the technical components of the autonomous wheelchair on his blog and uploaded demonstration videos to the Kalyani Innovation and Design Studio’s video account.

Looking ahead, KB hopes to expand the project so that users can control the wheelchair with brain signals, captured by an electroencephalogram (EEG) and interpreted by machine learning algorithms.

KB stated: “I want to create a product that allows individuals with complete mobility impairment to simply think of their desired destination, and the wheelchair will smoothly take them there.”

To learn more about the NVIDIA Jetson platform, visit:

https://www.nvidia.cn/autonomous-machines/embedded-systems/