Overview

This is a high-performance thread pool built on the C++11 standard, with the following characteristics:

Supports any callable as a task: regular functions, member functions (through std::bind or a lambda), lambda expressions, etc.

Supports return values: task results are retrieved asynchronously through std::future

Type-safe: argument and return types are checked at compile time through C++11 template deduction

Efficient synchronization: idle worker threads sleep on a condition variable guarded by a mutex instead of busy-waiting

Flexible control: supports starting, stopping, and waiting for the thread pool

Video explanation and source code access:

https://www.bilibili.com/video/BV1ywG5zhE8X/

Core Design Philosophy

1. Producer-Consumer Model

User threads (producers) → Task queue → Worker threads (consumers)

2. Task Abstraction

All executable tasks are wrapped as std::function<void()> so the queue can store and manage them uniformly.

3. Asynchronous Result Retrieval

Achieve asynchronous result retrieval through std::packaged_task and std::future.
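Design points 2 and 3 can be seen in isolation with a minimal sketch that uses only the standard library. The names `Task`, `submitToQueue`, and `drain` are hypothetical: a `std::packaged_task` is wrapped into a `std::function<void()>` so every queue entry has the same type, and the task's result comes back through a `std::future`.

```cpp
#include <cassert>
#include <functional>
#include <future>
#include <memory>
#include <queue>

// Every queued item has the same type, whatever the original task returns.
using Task = std::function<void()>;

// Hypothetical helper: wrap an int-returning callable so it can be queued
// as a void() task, and hand back a future for its result.
std::future<int> submitToQueue(std::queue<Task>& q, std::function<int()> fn) {
    // packaged_task is move-only, so share it to keep std::function copyable
    auto task = std::make_shared<std::packaged_task<int()>>(std::move(fn));
    std::future<int> result = task->get_future();
    q.push([task]() { (*task)(); });  // type-erased to void()
    return result;
}

// Consumer side: run everything in the queue on the calling thread.
void drain(std::queue<Task>& q) {
    while (!q.empty()) {
        Task t = std::move(q.front());
        q.pop();
        t();  // stores the return value in the matching future
    }
}
```

Because the queue only ever sees `void()` callables, the consumer never needs to know any task's return type.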

Key C++11 Features Explained

1. Variadic Templates

```cpp
template <class F, class... Args>
auto exec(F&& f, Args&&... args) -> std::future<decltype(f(args...))>
```

Core Concepts:

- class... Args: a template parameter pack that accepts zero or more template arguments
- Args&&... args: a function parameter pack of forwarding references; expanding it as std::forward<Args>(args)... gives perfect forwarding

Usage Example:

```cpp
threadpool.exec(func0);               // 0 parameters
threadpool.exec(func1, 10);           // 1 parameter
threadpool.exec(func2, 20, "darren"); // 2 parameters
```

2. Perfect Forwarding

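```cpp
std::bind(std::forward<F>(f), std::forward<Args>(args)...)
```

Purpose:

Preserves the value category (lvalue/rvalue) of each argument when it is stored in the bind object

Moves rvalue arguments into the bind object instead of copying them

Note that std::bind stores its own copies and passes them to the target function as lvalues, so the target never sees the caller's original objects; wrap an argument in std::ref when the function must modify the caller's variable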
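The copy-versus-reference behavior of std::bind is easy to check directly. This sketch mirrors the binding step of exec(); `increment` and `bindAndRun` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <functional>
#include <utility>

// Target function that modifies its argument in place.
void increment(int& counter) { ++counter; }

// Hypothetical helper mirroring the pool's binding step: forward the
// callable and its arguments into a std::bind object, then invoke it.
template <class F, class... Args>
void bindAndRun(F&& f, Args&&... args) {
    auto bound = std::bind(std::forward<F>(f), std::forward<Args>(args)...);
    bound();  // stored arguments are passed to f as lvalues
}
```

Passing `counter` directly leaves the caller's variable untouched because the bind object increments its own copy; passing `std::ref(counter)` stores a reference_wrapper, which std::bind unwraps to `int&` at call time.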

3. Automatic Type Deduction

```cpp
using RetType = decltype(f(args...));  // Deduce the return type
auto task = std::make_shared<std::packaged_task<RetType()>>(...);
```

Characteristics:

- decltype: compile-time deduction of an expression's type
- auto: automatic type deduction, which shortens declarations
- using: type aliasing; unlike typedef, it also supports alias templates
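A small sketch of the same C++11 return-type deduction that exec() relies on. `invokeNow`, `ResultOf`, `add`, and `greet` are hypothetical names; `ResultOf` is an alias template, something a plain typedef cannot express.

```cpp
#include <cassert>
#include <string>
#include <type_traits>
#include <utility>

// Trailing return type: whatever f(args...) returns, as in exec().
template <class F, class... Args>
auto invokeNow(F&& f, Args&&... args) -> decltype(f(args...)) {
    return std::forward<F>(f)(std::forward<Args>(args)...);
}

// Alias template built on decltype and std::declval.
template <class F, class... Args>
using ResultOf = decltype(std::declval<F>()(std::declval<Args>()...));

int add(int a, int b) { return a + b; }
std::string greet(const std::string& name) { return "hi " + name; }

// The deduced types are verified at compile time.
static_assert(std::is_same<decltype(invokeNow(add, 1, 2)), int>::value, "int expected");
static_assert(std::is_same<ResultOf<int (*)(int, int), int, int>, int>::value, "int expected");
static_assert(std::is_same<decltype(invokeNow(greet, std::string("x"))), std::string>::value,
              "std::string expected");
```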

4. Move Semantics

```cpp
task = std::move(_tasks.front());  // Transfer ownership instead of copying
```

Advantages:

- Reduces unnecessary copies (for a shared_ptr, moving skips the atomic reference-count increment and decrement)
- Improves efficiency when passing large objects
- Supports move-only types (e.g., unique_ptr)
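The effect of the move in get() can be observed through the reference count. This sketch reuses the TaskFunc and TaskFuncPtr definitions from the article; `popFront` is a hypothetical helper.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <queue>
#include <utility>

struct TaskFunc { std::function<void()> _func; };
typedef std::shared_ptr<TaskFunc> TaskFuncPtr;

// Pop the front task by moving it out: ownership is transferred, so the
// reference count is never incremented, and the queue slot is left empty
// before pop() destroys it.
TaskFuncPtr popFront(std::queue<TaskFuncPtr>& tasks) {
    TaskFuncPtr task = std::move(tasks.front());
    tasks.pop();
    return task;
}
```

A moved-from shared_ptr is guaranteed to be empty, which is why the queue's slot can be popped without affecting the task.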

5. Lambda Expressions

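```cpp
fPtr->_func = [task]() {  // Capture task, no parameters
    printf("do task-----------\n");
    (*task)();
};
```

Syntax:

- [task]: capture list; copies the task shared_ptr into the lambda, which keeps the packaged_task alive until the lambda is destroyed
- (): parameter list (empty here)
- { }: function body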
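Capturing the shared_ptr by value is what lets the wrapper outlive the scope that created the packaged_task. A minimal sketch; `makeWrapper` is a hypothetical name and the task simply returns 25.

```cpp
#include <cassert>
#include <functional>
#include <future>
#include <memory>

// Build a void() wrapper the way exec() does. The local shared_ptr goes out
// of scope when this function returns, but the copy captured by the lambda
// keeps the packaged_task alive.
std::function<void()> makeWrapper(std::future<int>& out) {
    auto task = std::make_shared<std::packaged_task<int()>>([] { return 25; });
    out = task->get_future();
    return [task]() { (*task)(); };
}
```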

6. Smart Pointers

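```cpp
typedef std::shared_ptr<TaskFunc> TaskFuncPtr;
auto task = std::make_shared<std::packaged_task<RetType()>>(...);
```

Advantages:

- Automatic memory management
- Thread-safe reference counting (the count itself is atomic; access to the pointed-to object still needs its own synchronization)
- Avoids memory leaks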

7. Standard Thread Library

std::thread, std::mutex, std::condition_variable, std::atomic

Characteristics:

- Cross-platform thread support
- Standardized synchronization primitives
- Efficient inter-thread communication
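A short standalone sketch of these primitives working together, using only the standard library; `runWorkers` is a hypothetical name. The atomic counter is updated without a lock, while the shared vector is protected by a mutex.

```cpp
#include <atomic>
#include <cassert>
#include <mutex>
#include <thread>
#include <vector>

// Start several std::threads, let each bump an atomic counter and append its
// id to a mutex-protected vector, then join them all.
int runWorkers(int numThreads, int incrementsPerThread, std::vector<int>& log) {
    std::atomic<int> counter(0);
    std::mutex logMutex;
    std::vector<std::thread> threads;
    for (int id = 0; id < numThreads; ++id) {
        threads.emplace_back([&, id] {
            for (int i = 0; i < incrementsPerThread; ++i) ++counter;  // lock-free
            std::lock_guard<std::mutex> lock(logMutex);              // protects log
            log.push_back(id);
        });
    }
    for (auto& t : threads) t.join();
    return counter.load();
}
```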

Architecture Design Analysis

Class Diagram Structure

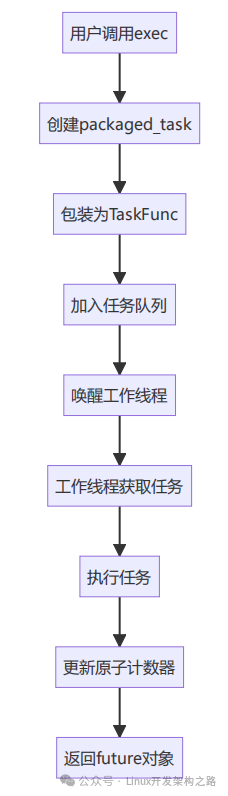

```text
ZERO_ThreadPool
├── _threads: vector<thread*>        // Worker thread pool
├── _tasks: queue<TaskFuncPtr>       // Task queue
├── _mutex: mutex                    // Mutex
├── _condition: condition_variable   // Condition variable
├── _threadNum: size_t               // Number of threads
├── _bTerminate: bool                // Termination flag
└── _atomic: atomic<int>             // Atomic counter
```

Execution Flow Diagram

Core Component Details

1. Task Encapsulation Mechanism

```cpp
struct TaskFunc {
    std::function<void()> _func;
};
```

Design Advantages:

- Unified task interface
- Supports any callable object
- Facilitates queue management

2. Task Submission Process (exec function)

```cpp
template <class F, class... Args>
auto exec(F&& f, Args&&... args) -> std::future<decltype(f(args...))> {
    // 1. Type deduction
    using RetType = decltype(f(args...));
    // 2. Create packaged_task
    auto task = std::make_shared<std::packaged_task<RetType()>>(
        std::bind(std::forward<F>(f), std::forward<Args>(args)...));
    // 3. Wrap as a unified task type
    TaskFuncPtr fPtr = std::make_shared<TaskFunc>();
    fPtr->_func = [task]() { (*task)(); };
    // 4. Obtain the future before publishing the task, so get_future() is
    //    never called while a worker may already be running it
    std::future<RetType> result = task->get_future();
    // 5. Add to queue, then notify outside the critical section
    {
        std::unique_lock<std::mutex> lock(_mutex);
        _tasks.push(fPtr);
    }
    _condition.notify_one();
    // 6. Return future
    return result;
}
```

Key Steps Analysis:

1. Type Deduction: decltype(f(args...)) deduces the function return type

2. Task Wrapping: packaged_task wraps the function as a storable task

3. Parameter Binding: std::bind binds parameters to the function

4. Unified Interface: lambda expressions unify different types of tasks as void()

5. Asynchronous Retrieval: Asynchronously obtain results through future

3. Worker Thread Execution Logic

```cpp
void run() {
    for (;;) {
        TaskFuncPtr task;
        // get() returns false only after stop() sets _bTerminate, so the
        // flag is never read without holding the mutex
        if (!get(task)) break;
        try {
            task->_func();  // Execute task
        } catch (...) {
            // Exception handling; packaged_task already stores the task's
            // own exceptions in its future, so this rarely triggers
        }
        std::unique_lock<std::mutex> lock(_mutex);
        --_atomic;  // Decrement execution count (incremented in get())
        // Check if all tasks are completed
        if (_atomic == 0 && _tasks.empty()) {
            _condition.notify_all();  // Notify waitForAllDone
        }
    }
}
```

4. Task Retrieval Mechanism

```cpp
bool get(TaskFuncPtr& task) {
    std::unique_lock<std::mutex> lock(_mutex);
    // Wait for a task or the termination signal
    _condition.wait(lock, [this] { return _bTerminate || !_tasks.empty(); });
    if (_bTerminate) return false;
    task = std::move(_tasks.front());  // Move semantics
    _tasks.pop();
    // Count the task as running while still holding the lock, so the
    // _atomic == 0 && _tasks.empty() check in run() cannot fire while
    // another worker holds a popped task it has not started yet
    ++_atomic;
    return true;
}
```

Design Highlights:

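- Uses condition variables to avoid busy waiting
- The predicate passed to wait() is re-checked after every wakeup, so a spurious wakeup cannot hand back an empty queue
- Move semantics transfer the shared_ptr out of the queue without touching its reference count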
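The predicate form of wait() used in get() can be shown with a single producer and consumer. A minimal sketch; `blockingPop` and `handOff` are hypothetical names. Even if the producer notifies before the consumer starts waiting, the predicate sees the non-empty queue and the wakeup is not lost.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>

// Blocking pop in the style of get(): the predicate is checked before
// sleeping and again after every wakeup.
int blockingPop(std::queue<int>& q, std::mutex& m, std::condition_variable& cv) {
    std::unique_lock<std::mutex> lock(m);
    cv.wait(lock, [&q] { return !q.empty(); });
    int value = q.front();
    q.pop();
    return value;
}

// One producer thread hands a value to the calling (consumer) thread.
int handOff(int value) {
    std::queue<int> q;
    std::mutex m;
    std::condition_variable cv;
    std::thread producer([&] {
        {
            std::lock_guard<std::mutex> lock(m);
            q.push(value);
        }
        cv.notify_one();
    });
    int received = blockingPop(q, m, cv);
    producer.join();
    return received;
}
```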

5. Graceful Shutdown Mechanism

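```cpp
void stop() {
    {
        std::unique_lock<std::mutex> lock(_mutex);
        _bTerminate = true;       // Set termination flag
        _condition.notify_all();  // Wake all waiting threads
    }
    // Wait for all threads to finish
    for (auto& thread : _threads) {
        if (thread->joinable()) {
            thread->join();
        }
        delete thread;
    }
    _threads.clear();
}
```

Note that stop() does not drain the queue: once _bTerminate is set, get() returns false, so tasks still waiting in _tasks never run, and their futures report std::future_errc::broken_promise once the queue is destroyed. Call waitForAllDone() first when every submitted task must run, as in the basic usage flow below.

Usage Methods and Examples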
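The components described above can be condensed into one self-contained sketch. It is an illustration of the design, not the original source: the class name MiniPool is hypothetical, threads start in the constructor instead of through init()/start(), std::function<void()> stands in for TaskFunc, and, unlike the single _condition in the class diagram, it uses two condition variables so that the notify_one() in exec() can never wake the waitForAllDone() caller instead of a worker.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <functional>
#include <future>
#include <memory>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical condensed pool following the structure described above.
class MiniPool {
public:
    explicit MiniPool(std::size_t threadNum) {
        for (std::size_t i = 0; i < threadNum; ++i)
            _threads.emplace_back([this] { run(); });
    }
    ~MiniPool() { stop(); }

    template <class F, class... Args>
    auto exec(F&& f, Args&&... args) -> std::future<decltype(f(args...))> {
        using RetType = decltype(f(args...));
        auto task = std::make_shared<std::packaged_task<RetType()>>(
            std::bind(std::forward<F>(f), std::forward<Args>(args)...));
        std::future<RetType> result = task->get_future();
        {
            std::lock_guard<std::mutex> lock(_mutex);
            _tasks.push([task]() { (*task)(); });
        }
        _taskReady.notify_one();  // only workers wait on this variable
        return result;
    }

    // Block until the queue is empty and no worker is running a task.
    void waitForAllDone() {
        std::unique_lock<std::mutex> lock(_mutex);
        _allDone.wait(lock, [this] { return _tasks.empty() && _running == 0; });
    }

    // Stop the workers; tasks still queued are discarded.
    void stop() {
        {
            std::lock_guard<std::mutex> lock(_mutex);
            _bTerminate = true;
        }
        _taskReady.notify_all();
        for (auto& t : _threads)
            if (t.joinable()) t.join();
        _threads.clear();
    }

private:
    void run() {
        for (;;) {
            std::function<void()> task;
            {
                std::unique_lock<std::mutex> lock(_mutex);
                _taskReady.wait(lock, [this] { return _bTerminate || !_tasks.empty(); });
                if (_bTerminate) return;
                task = std::move(_tasks.front());
                _tasks.pop();
                ++_running;  // counted before the lock is released
            }
            task();  // packaged_task stores any exception in the future
            std::lock_guard<std::mutex> lock(_mutex);
            if (--_running == 0 && _tasks.empty()) _allDone.notify_all();
        }
    }

    std::vector<std::thread> _threads;
    std::queue<std::function<void()>> _tasks;
    std::mutex _mutex;
    std::condition_variable _taskReady;  // work arrived, or stop() was called
    std::condition_variable _allDone;    // the pool went idle
    bool _bTerminate = false;            // guarded by _mutex
    int _running = 0;                    // guarded by _mutex
};
```

As with ZERO_ThreadPool, call waitForAllDone() before the pool is destroyed if every submitted task must run, because the destructor's stop() discards anything still queued.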

1. Basic Usage Flow

```cpp
ZERO_ThreadPool threadpool;
threadpool.init(4);   // Initialize 4 worker threads
threadpool.start();   // Start thread pool

// Submit tasks
threadpool.exec(func0);
threadpool.exec(func1, 10);
threadpool.exec(func2, 20, "hello");

threadpool.waitForAllDone();  // Wait for all tasks to complete
threadpool.stop();            // Stop thread pool
```

2. Obtaining Return Values

```cpp
// Function with return value
int compute(int a, int b) { return a + b; }

// Submit task and get result
auto future = threadpool.exec(compute, 10, 20);
int result = future.get();  // Block until result
cout << "Result: " << result << endl;  // Output: Result: 30
```

3. Calling Class Member Functions

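```cpp
class Calculator {
public:
    int multiply(int a, int b) { return a * b; }
};

Calculator calc;
auto future = threadpool.exec(
    std::bind(&Calculator::multiply, &calc, std::placeholders::_1, std::placeholders::_2),
    5, 6);
cout << "Result: " << future.get() << endl;  // Output: Result: 30
```

The member function is bound first because exec() deduces its return type with decltype(f(args...)), and that expression is ill-formed when f is a pointer to member.

4. Lambda Expression Tasks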

```cpp
auto future = threadpool.exec([](int x) -> int {
    cout << "Processing: " << x << endl;
    return x * x;
}, 5);
cout << "Square: " << future.get() << endl;  // Output: Square: 25
```

Performance Optimization Points

1. Memory Management Optimization

- Use smart pointers for automatic memory management
- Move semantics reduce copy overhead
- Object pools can further optimize memory allocation

2. Lock Contention Optimization

- Use condition variables to reduce busy waiting
- Minimize critical section size
- Consider lock-free queues for further optimization

3. Cache Friendliness

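- Task queues use block-based storage: std::queue is backed by std::deque by default, which stores elements in fixed-size contiguous blocks rather than in one contiguous array
- Atomic operations let a counter be read or updated without a mutex, although a heavily contended atomic still bounces its cache line between cores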

4. Thread Affinity

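```cpp
// Expandable: pin the calling worker thread to one CPU core (Linux/glibc only).
// Needs <pthread.h> and <sched.h>; g++ defines _GNU_SOURCE by default, which exposes these calls.
#ifdef __linux__
cpu_set_t cpuset;
CPU_ZERO(&cpuset);
CPU_SET(cpu_id, &cpuset);  // cpu_id: index of the target core
int rc = pthread_setaffinity_np(pthread_self(), sizeof(cpu_set_t), &cpuset);  // 0 on success
#endif
```

Notes and Best Practices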

1. Setting the Number of Threads

```cpp
// CPU-intensive tasks
size_t cpu_threads = std::thread::hardware_concurrency();
if (cpu_threads == 0) cpu_threads = 1;  // hardware_concurrency() returns 0 when unknown
// IO-intensive tasks
size_t io_threads = cpu_threads * 2;  // Empirical value
threadpool.init(cpu_threads);
```

2. Exception Safety
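future.get() can throw because std::packaged_task catches any exception escaping the callable and stores it in the shared state; get() then rethrows it on the calling thread. A minimal standalone sketch of that mechanism, where `riskyFunction` and `runAndCatch` are hypothetical names:

```cpp
#include <cassert>
#include <future>
#include <stdexcept>
#include <string>

// Hypothetical task that always fails.
int riskyFunction() { throw std::runtime_error("task failed"); }

std::string runAndCatch() {
    std::packaged_task<int()> task(riskyFunction);
    std::future<int> result = task.get_future();
    task();  // does not throw: the exception is stored in the future
    try {
        result.get();  // rethrows the stored exception here
    } catch (const std::runtime_error& e) {
        return e.what();
    }
    return "no exception";
}
```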

```cpp
try {
    auto future = threadpool.exec(risky_function);
    auto result = future.get();  // Rethrows any exception thrown by the task
} catch (const std::exception& e) {
    // Handle exceptions during task execution
}
```

3. Resource Management

```cpp
// RAII pattern for managing thread pool
class ThreadPoolManager {
    ZERO_ThreadPool pool;
public:
    explicit ThreadPoolManager(size_t threads) {
        pool.init(threads);
        pool.start();
    }
    ~ThreadPoolManager() {
        pool.stop();  // Automatic cleanup
    }
    // C++11 needs the trailing return type; a bare auto return type is C++14
    template <typename F, typename... Args>
    auto submit(F&& f, Args&&... args)
        -> decltype(pool.exec(std::forward<F>(f), std::forward<Args>(args)...)) {
        return pool.exec(std::forward<F>(f), std::forward<Args>(args)...);
    }
};
```

4. Performance Monitoring
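Statistics counters are only meaningful if something updates them each time a task runs, so one option is to time each callable where it executes. A minimal sketch under that assumption: the TaskStats class and its timed() method are hypothetical, and they measure microseconds rather than milliseconds.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <cstdint>
#include <utility>

// Hypothetical statistics collector: wrap each task body with timed().
class TaskStats {
public:
    template <class F>
    void timed(F&& f) {
        auto start = std::chrono::steady_clock::now();
        std::forward<F>(f)();
        auto elapsed = std::chrono::duration_cast<std::chrono::microseconds>(
            std::chrono::steady_clock::now() - start);
        _totalMicros += static_cast<std::uint64_t>(elapsed.count());
        ++_completed;
    }
    std::uint64_t completed() const { return _completed.load(); }
    // Average in microseconds, 0 before any task completes. The two loads
    // are not one atomic snapshot, which is acceptable for statistics.
    std::uint64_t averageMicros() const {
        std::uint64_t n = _completed.load();
        return n == 0 ? 0 : _totalMicros.load() / n;
    }
private:
    std::atomic<std::uint64_t> _completed{0};
    std::atomic<std::uint64_t> _totalMicros{0};
};
```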

```cpp
// Add performance statistics
class EnhancedThreadPool : public ZERO_ThreadPool {
    // These counters must be updated wherever tasks run (not shown)
    std::atomic<uint64_t> tasks_completed{0};
    std::atomic<uint64_t> total_execution_time{0};  // in milliseconds
public:
    void printStats() {
        uint64_t done = tasks_completed;
        cout << "Tasks completed: " << done << endl;
        if (done > 0) {  // Avoid division by zero before any task finishes
            cout << "Average execution time: " << (total_execution_time / done) << "ms" << endl;
        }
    }
};
```

5. Expansion Suggestions

1. Priority Queue: Support task priority
2. Dynamic Adjustment: Dynamically adjust the number of threads based on load
3. Task Timeout: Support task execution timeout
4. Statistical Information: Add performance monitoring and statistics
5. Exception Handling: More comprehensive exception handling mechanisms
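For the first suggestion, a minimal sketch of priority ordering with std::priority_queue, assuming each task carries an integer priority and a submission sequence number. The PrioritizedTask struct, its fields, and the LowerPriorityFirst comparator are hypothetical; the sequence number keeps FIFO order among tasks of equal priority.

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <vector>

// Hypothetical prioritized task entry.
struct PrioritizedTask {
    int priority;          // higher value runs first
    unsigned long seq;     // submission order, used to break ties
    std::function<void()> func;
};

// priority_queue keeps the "largest" element on top, so "a < b" here means
// a should run after b.
struct LowerPriorityFirst {
    bool operator()(const PrioritizedTask& a, const PrioritizedTask& b) const {
        if (a.priority != b.priority) return a.priority < b.priority;
        return a.seq > b.seq;  // earlier submissions win ties
    }
};

using TaskQueue =
    std::priority_queue<PrioritizedTask, std::vector<PrioritizedTask>, LowerPriorityFirst>;
```

In a pool, this queue would replace std::queue<TaskFuncPtr>, with top() and pop() used in place of front() and pop().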

Conclusion

This C++11 thread pool implementation showcases the powerful features of modern C++:

Technical Highlights:

- ✅ Utilizes variadic templates to achieve type-safe generic interfaces
- ✅ Avoids unnecessary copies through perfect forwarding
- ✅ Implements asynchronous result retrieval using std::packaged_task and std::future
- ✅ Achieves efficient thread synchronization using condition variables
- ✅ Manages task lifetimes automatically with smart pointers

Application Value:

- 📈 Keeps multiple CPU cores busy by spreading tasks across worker threads
- 🔧 Simplifies concurrent programming behind a submit-and-wait interface
- ⚡ Supports various types of asynchronous tasks
- 🛡 Checks task signatures at compile time and propagates task exceptions to the caller through future::get()