Having worked on NPU validation for several years, I realized I had never properly organized or summarized what I know, which makes it easy to forget. So I will take some time to organize and share some basic concepts.

1) Basic Definition of AI

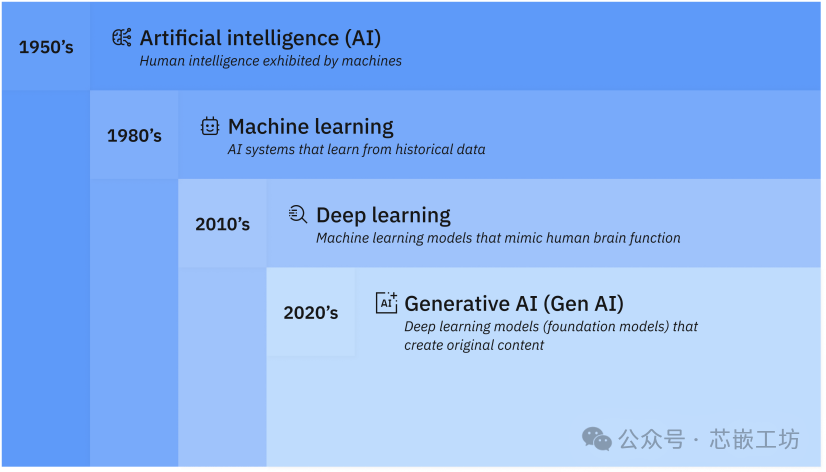

Figure 1 – Artificial Intelligence (AI), Machine Learning (ML), Deep Learning (DL), and Generative AI are interrelated

Artificial Intelligence (AI)

Artificial intelligence is a technology that enables computers and machines to simulate human learning, understanding, problem-solving, decision-making, creativity, and autonomy. Applications and devices equipped with AI can recognize objects, understand and respond to human language, learn from new information and experience, make detailed recommendations to users and experts, and act independently, replacing the need for human intelligence or intervention (autonomous vehicles are a typical example).

Machine Learning

At the core of artificial intelligence is machine learning, which creates models by training algorithms on data so that they can make predictions or decisions. Machine learning allows computers to learn from data and make inferences without being explicitly programmed for each specific task.
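To make "learning from data without explicit programming" concrete, here is a minimal sketch. The program is never told the rule y = 2x + 1; it only sees example pairs and adjusts two parameters by gradient descent on mean squared error until its predictions fit. The data, learning rate, and epoch count are illustrative assumptions.

```python
# Toy samples produced by a rule the program is never told: y = 2x + 1
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]

def train_linear(samples, lr=0.02, epochs=2000):
    """Fit y ≈ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        # Step against the gradient to reduce the error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

w, b = train_linear(data)
print(f"learned w={w:.3f}, b={b:.3f}")
print("prediction for x=10:", round(w * 10 + b, 2))
```

The learned parameters end up very close to 2 and 1, so the model can "infer" outputs for inputs it never saw, such as x = 10.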

Deep Learning

Deep learning is a subset of machine learning that uses multi-layer neural networks (known as deep neural networks) to more closely simulate the complex decision-making abilities of the human brain.
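Why do extra layers matter? A classic illustration is XOR, which no single-layer linear classifier can represent but a network with one hidden layer can. The sketch below uses hand-set weights (a well-known textbook solution) instead of learned ones, purely to show what the hidden layer contributes; in practice, training would find such weights.

```python
def relu(z):
    """Rectified linear unit: a common hidden-layer activation."""
    return max(0.0, z)

def two_layer_xor(x1, x2):
    """Two-layer network with hand-set (not learned) weights that computes XOR.
    Hidden layer: h1 = ReLU(x1 + x2), h2 = ReLU(x1 + x2 - 1)
    Output layer: y = h1 - 2 * h2
    """
    h1 = relu(x1 + x2)
    h2 = relu(x1 + x2 - 1)
    return h1 - 2 * h2

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", two_layer_xor(a, b))
```

The hidden units re-map the inputs into a space where the output layer only needs a simple weighted sum, which is the basic idea that deep networks stack many times over.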

Generative AI

Generative artificial intelligence (sometimes called “gen AI”) refers to deep learning models that can create complex original content, such as long-form text, high-quality images, realistic video, or audio, in response to a user’s prompt or request.

At a high level, generative models encode a simplified representation of their training data and then draw on that representation to create new work that is similar to, but not identical to, the original data.
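The "encode a simplified representation, then sample from it" idea can be shown at toy scale. In this sketch the whole representation is just a mean and a standard deviation fitted to some made-up height values, and "generation" means drawing new values from a Gaussian with those parameters. Real generative models learn vastly richer representations; the data and the Gaussian choice here are assumptions for illustration.

```python
import random
import statistics

# Hypothetical training data: heights in cm
training_data = [162.0, 170.5, 158.3, 175.2, 168.9, 181.0, 165.4, 172.7]

# "Encode" the data as a simplified representation: only a mean and a standard deviation
mu = statistics.mean(training_data)
sigma = statistics.stdev(training_data)

def generate(n, seed=0):
    """Sample n new values from the learned representation (seeded for reproducibility)."""
    rng = random.Random(seed)
    return [round(rng.gauss(mu, sigma), 1) for _ in range(n)]

samples = generate(5)
print(f"learned representation: mean={mu:.1f}, std={sigma:.1f}")
print("new samples:", samples)
```

The new values follow the same overall distribution as the training data without simply copying it back.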

Transformers (also known as transformer models) are trained on sequential data to generate extended sequences of content, such as words in a sentence, shapes in an image, frames in a video, or commands in software code. Transformers are at the core of many of today’s most popular generative AI tools, including ChatGPT and GPT-4, Copilot, and Bard. BERT is also a transformer model, although it is designed mainly for understanding text rather than generating it.
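The core operation inside a transformer is attention: each position in a sequence computes a weighted mix of the other positions, with weights given by softmax(QKᵀ/√d). Below is a minimal pure-Python sketch of scaled dot-product attention with a causal mask, so each position only looks at itself and earlier positions (the property that lets decoder-style models generate one token at a time). As a simplifying assumption, the input vectors are used directly as queries, keys, and values; real transformers use learned projection matrices, multiple heads, and feed-forward layers.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(q, k, v):
    """Scaled dot-product attention with a causal mask.
    q, k: lists of d-dimensional vectors, one per position; v: value vectors.
    Position i attends only to positions 0..i.
    """
    d = len(q[0])
    outputs = []
    for i, q_i in enumerate(q):
        # Similarity of this query with the keys of current and earlier positions
        scores = [sum(a * b for a, b in zip(q_i, k[j])) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        # Output = attention-weighted sum of the value vectors
        outputs.append([sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(len(v[0]))])
    return outputs

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
out = causal_self_attention(x, x, x)
for row in out:
    print([round(val, 3) for val in row])
```

The first position can only attend to itself, so its output equals its own value vector; later positions blend information from everything before them.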

2) History of AI

The concept of a “thinking machine” can be traced back to ancient Greece. Since the advent of electronic computing, significant events and milestones in the development of artificial intelligence include:

In 1950, Alan Turing published “Computing Machinery and Intelligence.” In this paper, Turing, known for his work breaking the German Enigma code during World War II and often called the “father of computer science,” posed the question: “Can machines think?”

Building on this question, he proposed a test now famously known as the “Turing Test,” in which a human interrogator tries to distinguish between the text responses of a computer and those of a human. Although the test has faced considerable scrutiny since its publication, it remains an important part of AI history and an ongoing topic in philosophy because of its reliance on language.

In 1956, John McCarthy coined the term “artificial intelligence” at the first AI conference held at Dartmouth College. (McCarthy later invented the Lisp programming language.) Later that year, Allen Newell, J.C. Shaw, and Herbert Simon created the Logic Theorist, the first working AI computer program.

In the late 1950s, Frank Rosenblatt developed the Mark I Perceptron, the first computer based on a neural network, which learned through trial and error. In 1969, Marvin Minsky and Seymour Papert published a book titled “Perceptrons,” which became a landmark work on neural networks but, for a time, was also seen as an argument against further neural network research.
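"Learning through trial and error" refers to the perceptron learning rule: make a prediction, and nudge the weights only when the prediction is wrong. The sketch below is a modern software re-creation of that rule (not Rosenblatt’s hardware), trained on the logical AND function. The rule is guaranteed to converge for linearly separable data like AND, but no single-layer perceptron can represent XOR, which is the kind of limitation “Perceptrons” highlighted.

```python
def predict(w, b, x):
    """Step activation: output 1 if the weighted sum exceeds 0, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

def train_perceptron(samples, lr=1.0, max_epochs=20):
    """Perceptron learning rule: adjust weights only on mistakes."""
    n_inputs = len(samples[0][0])
    w, b = [0.0] * n_inputs, 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in samples:
            err = target - predict(w, b, x)  # -1, 0, or +1
            if err:
                mistakes += 1
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
        if mistakes == 0:  # every sample classified correctly, so stop
            break
    return w, b

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND)
print("weights:", w, "bias:", b)
print([predict(w, b, x) for x, _ in AND])
```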

In the 1980s, neural networks trained with the backpropagation algorithm became widely used in AI applications.

In 1995, Stuart Russell and Peter Norvig published “Artificial Intelligence: A Modern Approach,” which became one of the leading textbooks in AI research. In it, they explore four possible goals or definitions of AI, which classify computer systems along two axes: thinking versus acting, and humanlike versus rational behavior.

In 1997, IBM’s “Deep Blue” defeated reigning world chess champion Garry Kasparov in a rematch, after Kasparov had won their first match in 1996.

In 2004, John McCarthy published a paper titled “What is Artificial Intelligence?” and proposed a frequently cited definition of AI. By this time, the era of big data and cloud computing had arrived, allowing businesses to manage increasingly large data assets that would be used to train AI models in the future.

In 2011, IBM Watson® defeated champions Ken Jennings and Brad Rutter on the game show “Jeopardy!” At the same time, data science began to emerge as a popular discipline.

In 2015, Baidu’s Minwa supercomputer used a special type of deep neural network, called a convolutional neural network (CNN), to identify and classify images with higher accuracy than the average human.

In 2016, DeepMind’s AlphaGo program, powered by deep neural networks, defeated world Go champion Lee Sedol in a five-game match. Given the enormous number of possible moves in Go (on a 19 × 19 board there are 361 × 360 × 359 × 358, or roughly 16.7 billion, possible sequences for just the first four moves), this victory was significant. Google had acquired DeepMind in 2014 for a reported $400 million.

In 2022, the rise of large language models (LLMs), such as OpenAI’s ChatGPT, brought major changes to AI performance and to its potential to drive business value. With these new generative AI practices, deep learning models can be pre-trained on vast amounts of data.

In 2024, the latest trends indicate that AI will continue to thrive. Multimodal models that accept several types of data as input are providing richer and more robust experiences; these models combine image recognition from computer vision with speech recognition and natural language processing (NLP). At the same time, as ever-larger parameter counts yield diminishing returns, smaller models are also making steady progress.

3) Basic Principles

Taking generative AI as an example, the process can be divided into three stages:

1) Training, to build a foundation model.

2) Tuning, to adapt the model to a specific application.

3) Generation, evaluation, and further tuning, to improve accuracy.

3.1 Training

Generative AI begins with a “foundation model”: a deep learning model that serves as the basis for many different types of generative AI applications.

The most common foundation models today are large language models (LLMs), created for text generation applications. There are also foundation models for generating images, video, sound, or music, as well as multimodal foundation models that support several kinds of content.

To create a foundation model, practitioners train a deep learning algorithm on huge volumes of relevant raw, unstructured, unlabeled data (for example, terabytes or petabytes of text, image, or video data from the internet). Training produces a neural network with billions of parameters, which encode representations of the entities, patterns, and relationships in the data and allow the model to generate content autonomously in response to prompts. This network is the foundation model.
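LLM pre-training works on "unlabeled" data because the text supplies its own labels: the target for each position is simply the next token. The sketch below applies that idea at toy scale, with a character-level bigram model whose V × V logit table is trained by gradient descent on next-character cross-entropy, then used for greedy generation. This is an assumption-laden miniature: a real foundation model is a deep transformer with billions of parameters, not a lookup table, but the training signal (predict the next token, adjust parameters to reduce the error) is the same in spirit.

```python
import math

def train_bigram_lm(text, lr=1.0, epochs=200):
    """Train a character-level bigram model. logits[prev][next] are the
    parameters, learned by gradient descent on next-character cross-entropy."""
    vocab = sorted(set(text))
    idx = {ch: i for i, ch in enumerate(vocab)}
    V = len(vocab)
    logits = [[0.0] * V for _ in range(V)]
    # Self-supervised "labels": each character's target is the one that follows it
    pairs = [(idx[a], idx[b]) for a, b in zip(text, text[1:])]
    for _ in range(epochs):
        grads = [[0.0] * V for _ in range(V)]
        for p, n in pairs:
            row = logits[p]
            m = max(row)
            exps = [math.exp(z - m) for z in row]
            s = sum(exps)
            for j in range(V):
                # d(cross-entropy)/d(logit_j) = softmax_j - one_hot_j
                grads[p][j] += exps[j] / s - (1.0 if j == n else 0.0)
        for i in range(V):
            for j in range(V):
                logits[i][j] -= lr * grads[i][j] / len(pairs)
    return vocab, logits

def generate(vocab, logits, start, length):
    """Greedy generation: repeatedly append the most likely next character."""
    out = [start]
    for _ in range(length):
        row = logits[vocab.index(out[-1])]
        out.append(vocab[row.index(max(row))])
    return "".join(out)

corpus = "abcabcabcabc"  # hypothetical, tiny "training corpus"
vocab, logits = train_bigram_lm(corpus)
print(generate(vocab, logits, "a", 8))
```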

This training process is compute-intensive, time-consuming, and expensive: it requires clusters of thousands of graphics processing units (GPUs) and weeks of processing, typically costing millions of dollars. Open-source foundation model projects such as Meta’s Llama 2 let generative AI developers skip this step and its costs.
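A back-of-the-envelope estimate shows where the "thousands of GPUs for weeks" figure comes from. A commonly used rule of thumb for dense transformers is roughly 6 floating-point operations per parameter per training token (forward plus backward pass). The model size, token count, per-accelerator peak throughput, and 40% utilization below are all hypothetical inputs chosen for illustration, not figures for any specific model or chip.

```python
def training_flops(n_params, n_tokens):
    """Rule-of-thumb estimate: ~6 FLOPs per parameter per training token."""
    return 6 * n_params * n_tokens

def gpu_hours(total_flops, peak_flops_per_gpu, utilization):
    """Convert total FLOPs to accelerator-hours at an assumed sustained utilization."""
    return total_flops / (peak_flops_per_gpu * utilization) / 3600

# Hypothetical run: 7e9 parameters, 2e12 training tokens,
# accelerators with an assumed 312e12 FLOP/s peak, sustained at 40% of peak
flops = training_flops(7e9, 2e12)
hours = gpu_hours(flops, 312e12, 0.40)
print(f"total compute: {flops:.2e} FLOPs")
print(f"about {hours:,.0f} GPU-hours, or about {hours / 24 / 1000:.1f} days on 1,000 GPUs")
```

Even this comparatively small hypothetical model needs on the order of 190,000 GPU-hours; scaling parameters and tokens up by 10× each multiplies the cost by roughly 100×. For anyone validating NPUs, the utilization term is where hardware and software efficiency show up directly.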

3.2 Tuning

Next, the model must be tuned for specific content generation tasks. This can be achieved in various ways, including:

· Fine-tuning: providing the model with application-specific labeled data, such as questions or prompts the application is likely to receive, together with the corresponding correct answers in the desired format.

· Reinforcement learning from human feedback (RLHF): human users rate the accuracy or relevance of the model’s outputs, and the model is updated to improve accordingly. This can be as simple as letting users type or speak corrections back to a chatbot or virtual assistant.
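In many RLHF pipelines, human feedback is collected as pairwise preferences ("response A is better than response B") and used to train a reward model, which then guides a separate reinforcement-learning step (not shown here). The sketch below illustrates only the reward-model step, using the pairwise loss −log σ(r_chosen − r_rejected). The linear reward model and the two features (a correctness flag and a politeness flag) are hypothetical simplifications; real reward models are usually large neural networks that score full text.

```python
import math

def reward(weights, features):
    """Hypothetical linear reward model: score = weights · features."""
    return sum(w * f for w, f in zip(weights, features))

def preference_loss(weights, chosen, rejected):
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)); small when the preferred response scores higher."""
    margin = reward(weights, chosen) - reward(weights, rejected)
    return math.log1p(math.exp(-margin))

def train_reward_model(pairs, dim, lr=0.5, epochs=100):
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # d(loss)/d(margin) = -sigmoid(-margin) = -1 / (1 + e^margin)
            grad_margin = -1.0 / (1.0 + math.exp(margin))
            # d(margin)/d(w) = chosen - rejected
            w = [wi - lr * grad_margin * (c - r) for wi, c, r in zip(w, chosen, rejected)]
    return w

# Hypothetical preference pairs: (features of preferred, features of rejected)
# feature vector = [answer_is_correct, tone_is_polite]
pairs = [
    ([1.0, 1.0], [0.0, 1.0]),
    ([1.0, 1.0], [1.0, 0.0]),
    ([1.0, 0.0], [0.0, 0.0]),
]
w_trained = train_reward_model(pairs, dim=2)
print("learned reward weights:", [round(x, 3) for x in w_trained])
```

After training, both features receive positive weight, so the reward model ranks correct, polite answers higher, which is the signal the later reinforcement-learning step would optimize against.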

3.3 Generating, Evaluating, and Further Tuning

Developers and users regularly evaluate the outputs of their generative AI applications and further tune the model, sometimes as often as once a week, to improve accuracy or relevance. In contrast, the foundation model itself is updated much less often, perhaps once a year or every 18 months.
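Regular evaluation usually means re-running a fixed set of test prompts and scoring the outputs. A minimal sketch of one simple metric, normalized exact-match accuracy, is shown below; the reference answers and the two "weekly" output sets are made up for illustration, and real evaluations often combine several metrics with human review.

```python
import string

def normalize(text):
    """Lowercase, drop punctuation, and collapse whitespace before comparing."""
    text = text.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())

def exact_match_accuracy(predictions, references):
    """Fraction of outputs that match their reference answer after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)

# Hypothetical evaluation set and model outputs from two tuning iterations
refs = ["Paris", "4", "Blue whale"]
week1 = ["paris.", "5", "The blue whale"]
week2 = ["Paris", "4", "blue whale!"]
print(exact_match_accuracy(week1, refs))
print(exact_match_accuracy(week2, refs))
```

Tracking a score like this across tuning rounds shows whether each round actually helped; note that exact match is strict ("The blue whale" counts as a miss), which is one reason teams rarely rely on it alone.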

Another option for improving the performance of a generative AI application is retrieval-augmented generation (RAG), a technique that extends the foundation model with relevant sources outside its training data, retrieved at query time and supplied to the model as context, to produce more accurate or relevant answers.
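The retrieval half of RAG can be sketched in a few lines: score each document in a knowledge base against the user’s question, take the best matches, and prepend them to the prompt. In this sketch, bag-of-words cosine similarity stands in for the embedding model and vector database that production systems typically use, the documents are hypothetical, and the actual call to a language model is left out. The model’s parameters are not touched at any point.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    return sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]

def build_prompt(query, documents, k=1):
    """Augment the user's question with retrieved context before sending it to the model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using the context below.\nContext:\n{context}\nQuestion: {query}"

# Hypothetical knowledge base that the model was never trained on
docs = [
    "Refunds are accepted within 30 days of purchase.",
    "The office is closed on public holidays.",
    "Support tickets are answered within two business days.",
]
query = "Within how many days are refunds accepted?"
prompt = build_prompt(query, docs)
print(prompt)
```

Because the knowledge lives in the document store rather than in the weights, updating the application’s facts is as simple as editing the documents.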