——–· Abstract ·——–

Emotional Support Conversation (ESC) is an emerging and challenging task aimed at alleviating emotional distress. Previous studies often struggle to maintain smooth transitions between utterances because they fail to capture the fine-grained transition information in each turn of the conversation. To address this issue, this paper proposes TransESC, which models turn-level state transitions in ESC from three perspectives:

- Semantic Transition,
- Strategy Transition,
- Emotion Transition,

to drive the conversation in a more natural and smooth direction. Specifically, we adopt a two-step approach called “transit-then-interact” to construct a state transition graph that captures these three types of turn-level transition information. Finally, this information is injected into the transition-aware decoder to generate more engaging and supportive responses.

Automatic and human evaluations on the benchmark dataset indicate that TransESC generates smoother and more effective emotional support responses than the baselines. The source code is available at: 👉 https://github.com/circle-hit/TransESC

——· Introduction ·——

Emotional Support Conversation (ESC) is a goal-oriented task aimed at alleviating individuals’ emotional distress and improving their mental state. This is an ideal and critical capability that chatbots with good interaction abilities are expected to possess, with potential applications in various fields such as mental health support and customer service platforms.

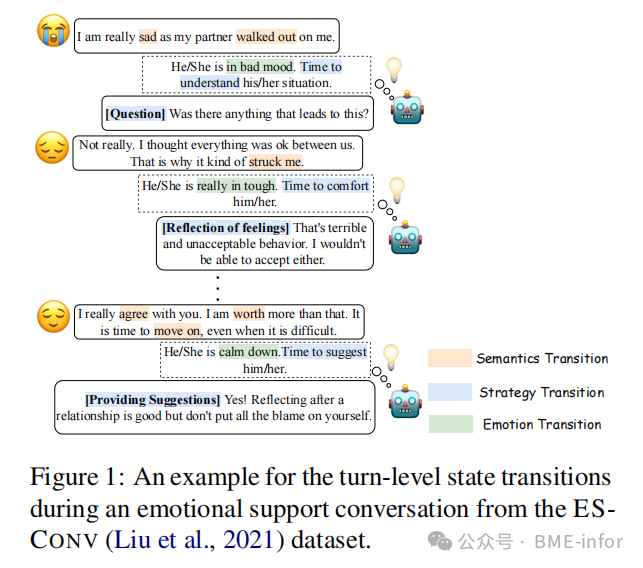

Unlike emotional dialogues and empathetic dialogues, ESC typically involves multi-turn conversations, requiring skilled dialogue processes and support strategies to achieve its goals. For example, in the case shown in Figure 1, the supporter should first explore the seeker’s situation, identify the problems they face, then attempt to soothe their emotions, and finally provide helpful suggestions to help them out of their predicament.

Explanation of the figure:


💬 Seeker Turn 1:

I am really sad as my partner walked out on me.

🤖 Supporter System (Internal Reasoning):

He/She is in a bad mood. Time to understand his/her situation.

Supporter Strategy (Question): Was there anything that leads to this?

💬 Seeker Turn 2:

Not really. I thought everything was ok between us. That is why it kind of struck me.

🤖 Supporter System (Internal Reasoning):

He/She is really in tough. Time to comfort him/her.

Supporter Strategy (Reflection of Feelings): That’s terrible and unacceptable behavior. I wouldn’t be able to accept either.

💬 Seeker Turn 3:

I really agree with you. I am worth more than that. It is time to move on, even when it is difficult.

🤖 Supporter System (Internal Reasoning):

He/She is calm down. Time to suggest him/her.

Supporter Strategy (Providing Suggestions): Yes! Reflecting after a relationship is good but don’t put all the blame on yourself.

This dialogue illustrates the semantic changes, strategy evolution, and emotion changes that TransESC aims to recognize and use in order to steer the conversation towards its supportive goal.

Explanation of the Three Types of State Transitions:

- Semantic Transition (Light Orange):
  - This indicates a change in the content of the conversation at the semantic level, such as shifting from “confusion” to “relief”, or from “past” to “future”.
  - Example: The seeker moves from “I am really sad as my partner walked out on me” to “I am worth more than that” and “It is time to move on”.
- Strategy Transition (Light Blue):
  - The supporter adopts different support strategies in different turns, including:
    - Question
    - Reflection of Feelings
    - Providing Suggestions
  - Each strategy aims to drive the conversation in a more supportive direction.
- Emotion Transition (Light Green):
  - This refers to the evolution of the seeker’s emotional state, such as from sadness 😭 → calm 😔 → positive 😊.
  - The system tracks these emotional changes to adjust its response strategy, making the conversation more empathetic and supportive.

Intuitively, such a complex and challenging task raises the question: how can a system maintain smooth transitions between utterances at different stages and drive the conversation forward naturally? Previous studies have struggled to address this issue because they treat the dialogue history as one long sequence, ignoring the fine-grained transition information in each turn of the conversation.

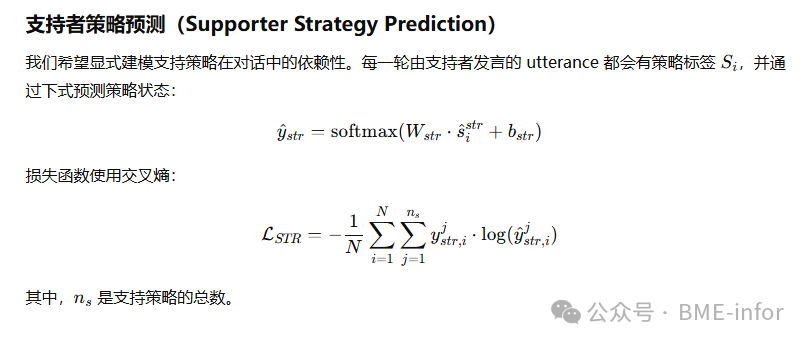

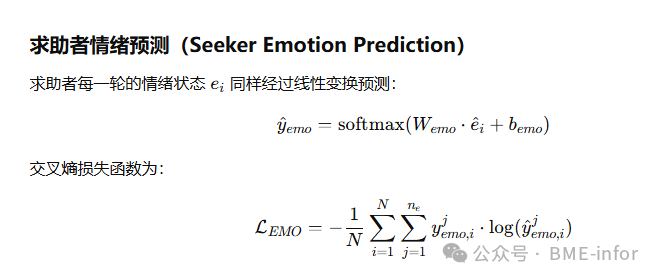

We believe that considering this turn-level state transition information is crucial for achieving high-quality emotional support dialogues, as it allows the conversation to progress naturally towards its goal while alleviating the seeker’s distress. To this end, this paper models the transition information in ESC from three angles, referring to each dimension as a “state”:

- Semantic Transition: During the conversation, even when focusing on the same topic, the seeker may express different aspects or meanings in different turns. For example, in Figure 1, the seeker initially feels sad about the breakup and is unclear about the reasons (e.g., “sad”, “walked out on me”, “struck”), but after the supporter’s warm comfort, they become relieved and gain the motivation to move on (e.g., “agree”, “worth”, “move on”). Therefore, to truly understand the content of the conversation and achieve effective emotional support, it is essential to capture these fine-grained semantic changes.
- Strategy Transition: The timing of adopting appropriate support strategies is another key aspect of achieving effective emotional support. For instance, in Figure 1, the supporter first understands the seeker’s problem through questioning, then soothes the seeker’s emotions through the “Reflection of Feelings” strategy, and finally adopts the “Providing Suggestions” strategy to help them out of the predicament. The flexible combination of, and dependencies between, different strategies constitute the strategy transition in ESC, allowing the conversation to develop towards problem-solving in a more natural and smooth manner.
- Emotion Transition: Tracking the changes in the seeker’s emotional state during the conversation is also very important. In Figure 1, the seeker initially feels down due to the partner’s departure, but as the conversation progresses, they gradually calm down and prepare to move on with life. Capturing such emotional transitions gives the supporter clear signals for adjusting strategies in time and for assessing the effectiveness of the emotional support.

To achieve the above goals, this paper proposes a new model TransESC, which smooths the dialogue and naturally drives the conversation process by modeling the turn-level state transitions (semantics, strategy, emotion) in ESC. We construct a state transition graph in the emotional support process, where each node represents three states (semantic state, strategy state, emotion state) in a turn of dialogue, and design seven types of edges for information flow transmission.

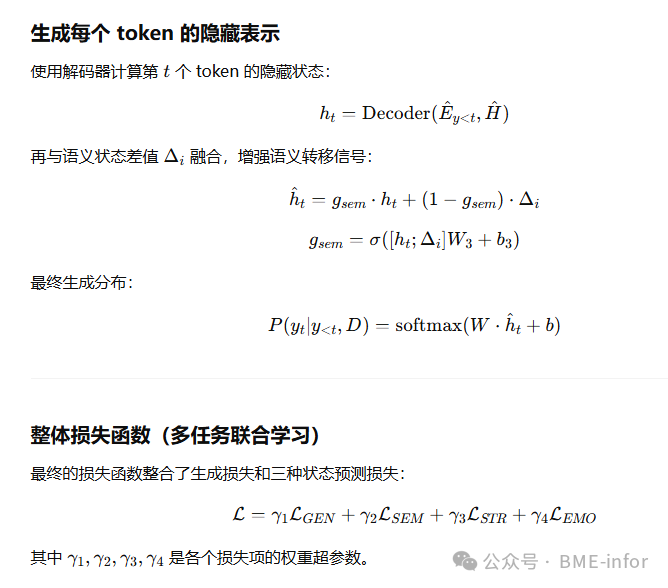

We further propose a two-step method, “transit-then-interact”, to explicitly execute state transitions and update the representation of each node. During this process, the model receives turn-level supervision by predicting the keywords in each utterance, the strategies adopted by the supporter, and the seeker’s immediate emotional state.

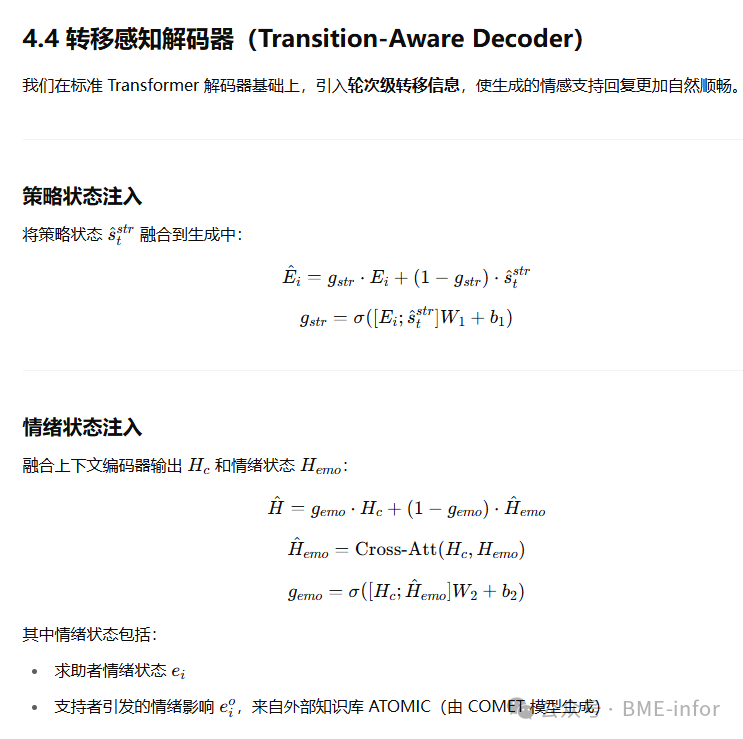

Ultimately, these three types of transition information are injected into the decoder to generate more engaging and supportive responses.

——· Related Work ·——

Emotional Support Conversation (ESC)

Liu et al. (2021) first proposed the Emotional Support Conversation (ESC) task and released the benchmark dataset ESConv. They attached the support strategy as a special token at the beginning of each supportive response and conditioned generation on this predicted strategy token. Peng et al. (2022) proposed a hierarchical graph neural network framework that models global emotional causes and local user intentions. Tu et al. (2022) introduced commonsense knowledge and a mixed-strategy mechanism to enhance the emotional support capabilities of dialogue systems.

Further, Cheng et al. (2022) proposed a forward-looking strategy planning method to select strategies that may yield the best long-term support effects; Peng et al. (2023) attempted to utilize the seeker’s feedback to select appropriate support strategies.

However, all of the above methods treat the dialogue history as a linear sequence, ignoring the turn-level state transition information. It is this information that plays a key role in guiding emotional support dialogues towards a more natural and smooth direction.

Emotional & Empathetic Conversation

In recent years, endowing dialogue systems with emotional and empathetic capabilities has gradually become a research hotspot. To achieve the goal of emotional generation, researchers have proposed both generative and retrieval-based methods to incorporate emotions into dialogue generation.

However, these methods often only meet the basic quality requirements of dialogue systems. In terms of empathetic generation, existing work has attempted to introduce emotional factors (Alam et al., 2018; Rashkin et al., 2019; Lin et al., 2019; Majumder et al., 2020; Li et al., 2020, 2022), cognitive mechanisms (Sabour et al., 2022; Zhao et al., 2022), or persona (Zhong et al., 2020) to enhance empathetic expression.

However, intuitively, merely expressing empathy is just a necessary step towards achieving effective emotional support. In contrast, emotional support conversation represents a more advanced capability that dialogue systems should truly possess.

——· Related Knowledge ·——

ESConv Dataset

This study is based on the emotional support conversation dataset ESConv. In each dialogue, a seeker experiencing emotional distress seeks help to get through difficulties, while the supporter needs to identify the problems faced by the seeker, provide comfort, and offer suggestions to help resolve the issues.

The dataset annotates the support strategy adopted by the supporter in each turn, using eight strategy types (e.g., Question, Reflection of Feelings, Providing Suggestions). However, ESConv includes neither a keyword set for each utterance nor emotion labels for the seeker’s turns, so we used external tools for automatic annotation. More detailed annotation methods can be found in Appendix A.

Note: We used six emotional categories to label the seeker’s turns: joy, anger, sadness, fear, disgust, and neutral.

Task Definition

——· Method ·——


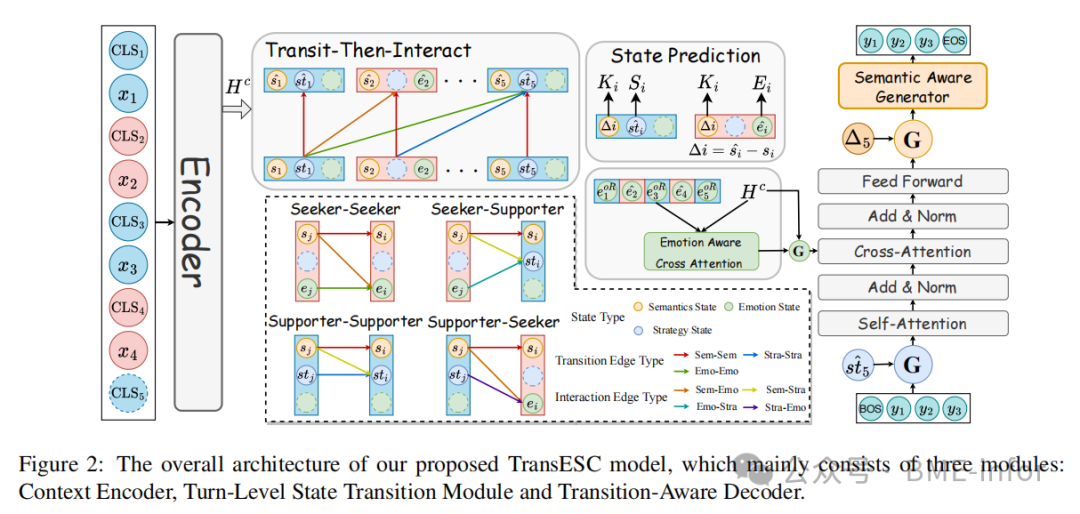

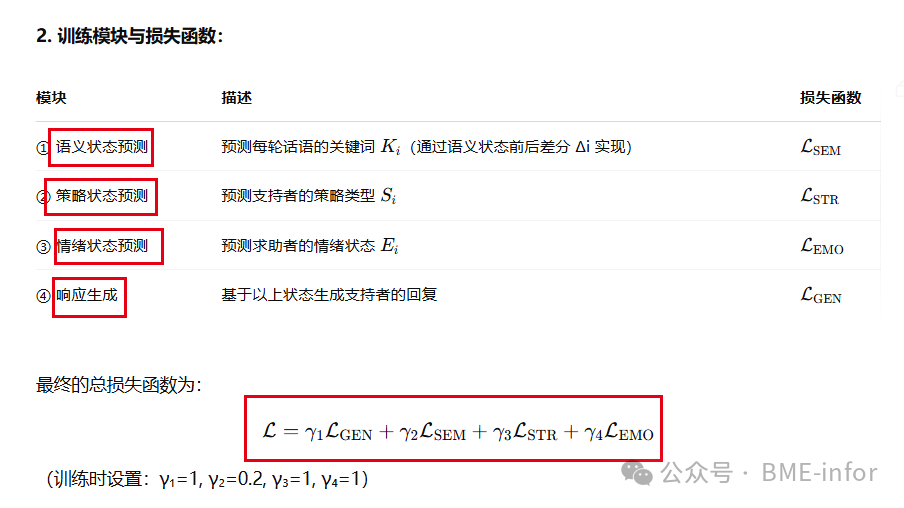

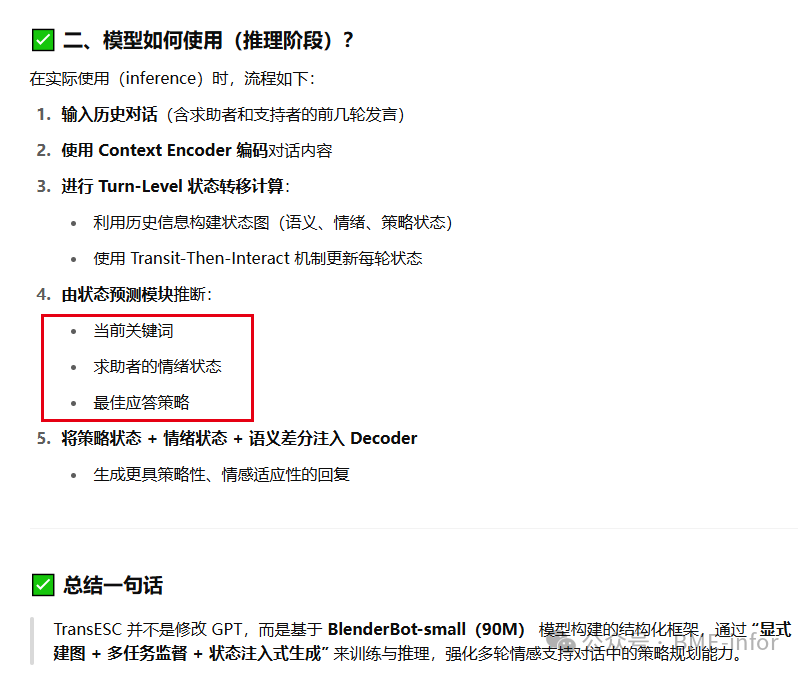

The overall architecture of our proposed TransESC is shown in Figure 2. First, dialogue representations are obtained through a context encoder, and then fine-grained state transition information, including semantic transition, strategy transition, and emotion transition, is extracted and propagated in the turn-level state transition module. Finally, to generate more natural and smooth emotional support responses, this state transition information is explicitly injected into the Transition-Aware Decoder.

Figure 2 illustrates the overall architecture of the TransESC model, which mainly consists of three modules:

① Context Encoder ② Turn-Level State Transition Module ③ Transition-Aware Decoder

🌐 Overall Process Interpretation

✅ Module ①: Context Encoder

- Input dialogue history (blue represents seeker utterances, red represents supporter utterances).
- Add [CLS] as an identifier before each utterance.
- Use a Transformer encoder (e.g., BERT) to encode all text into context vectors $H_c$.
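The [CLS]-per-utterance input format described above can be sketched as follows. This is a minimal illustration, not the authors' preprocessing code: the function name `flatten_dialogue` is hypothetical, and whitespace splitting stands in for whatever subword tokenizer the real encoder uses.

```python
from typing import List, Tuple

CLS = "[CLS]"  # marker placed before every utterance


def flatten_dialogue(
    history: List[Tuple[str, str]]
) -> Tuple[List[str], List[int], List[str]]:
    """Flatten (speaker, utterance) pairs into a single token sequence.

    Returns the tokens, the position of each utterance's [CLS] marker,
    and the speaker of each utterance.
    """
    tokens: List[str] = []
    cls_positions: List[int] = []
    speakers: List[str] = []
    for speaker, utterance in history:
        cls_positions.append(len(tokens))  # where this utterance starts
        tokens.append(CLS)
        # Assumption: whitespace split instead of a real subword tokenizer.
        tokens.extend(utterance.split())
        speakers.append(speaker)
    return tokens, cls_positions, speakers


history = [
    ("seeker", "I am really sad as my partner walked out on me."),
    ("supporter", "Was there anything that leads to this?"),
]
tokens, cls_positions, speakers = flatten_dialogue(history)
```

After encoding, the hidden vector at each position in `cls_positions` could serve as the utterance-level representation from which the per-turn states are derived.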

✅ Module ②: Turn-Level State Transition Module

🔸 1. Node Modeling: Each utterance constructs three state nodes

- Yellow Circle: Semantic State
- Green Circle: Emotion State (only for seeker)
- Blue Circle: Strategy State (only for supporter)

🔸 2. State Connection Graph (small figure below)

- Shows four types of connection methods:
  - Seeker → Seeker
  - Seeker → Supporter
  - Supporter → Supporter
  - Supporter → Seeker

🔸 3. Types of Edges

- Transition Edges: e.g., Sem→Sem, Stra→Stra, Emo→Emo, used to capture the changes of the same type of state over time.
- Interaction Edges: e.g., Strategy→Emotion, Emotion→Strategy, used to model cross-state dependencies.

🔸 4. Transit-Then-Interact (Two-Step Mechanism)

- First, propagate state information through transition edges (Transit)
- Then, integrate other state information through interaction edges (Interact)
- Use R-MHA (Relation-enhanced Multi-Head Attention) to perform each step
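To make the graph structure concrete, here is a small sketch of how state nodes and the two families of edges might be laid out. It only covers graph construction: R-MHA is not implemented, and the paper's seven concrete edge types are not reproduced. The function names, the forward-in-time edge direction, the `window` semantics (counted in utterances), and the choice to connect different states of the same utterance are all assumptions made for illustration.

```python
from typing import List, Tuple

Node = Tuple[int, str]         # (utterance index, state type)
Edge = Tuple[Node, Node, str]  # (source, target, edge kind)


def build_state_graph(
    speakers: List[str], window: int = 2
) -> Tuple[List[Node], List[Edge]]:
    """Build state nodes and typed edges for a dialogue (illustrative only)."""
    nodes: List[Node] = []
    for i, speaker in enumerate(speakers):
        nodes.append((i, "sem"))  # every utterance has a semantic state
        # Emotion state only for the seeker, strategy state only for the supporter.
        nodes.append((i, "emo" if speaker == "seeker" else "stra"))

    edges: List[Edge] = []
    for src in nodes:
        for dst in nodes:
            gap = dst[0] - src[0]
            if src == dst or gap < 0 or gap > window:
                continue  # no self-loops, no backward edges, nothing beyond the window
            # Same state type -> transition edge; different types -> interaction edge.
            kind = "transition" if src[1] == dst[1] else "interaction"
            edges.append((src, dst, kind))
    return nodes, edges


def edges_of_kind(edges: List[Edge], kind: str) -> List[Edge]:
    """Select the edges used in one of the two steps."""
    return [e for e in edges if e[2] == kind]


speakers = ["seeker", "supporter", "seeker"]
nodes, edges = build_state_graph(speakers, window=2)
transit_edges = edges_of_kind(edges, "transition")    # step 1: transit
interact_edges = edges_of_kind(edges, "interaction")  # step 2: interact
```

In a full model, node representations would first be updated by attending over `transit_edges` and then over `interact_edges`, which mirrors the transit-then-interact ordering.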

✅ Module ③: Transition-Aware Decoder

🔹 Input:

- The initial conditions of the decoder are composed of the states calculated in the previous step (e.g., strategy state $\hat{s}_t$, $\Delta_t = \hat{s}_t - s_t$)
- Incorporate Emotion-Aware Cross Attention to merge emotional state representations

🔹 Decoder Structure:

- Self-Attention Layer
- Cross-Attention Layer
- Add & Norm
- Feed Forward Layer

🔹 Output:

- Next response sequence generated by the semantic-aware generator: $y_1, y_2, y_3, \ldots$

📌 Summary of Key Points

| Module | Function |

|---|---|

| Context Encoder | Encodes dialogue history |

| State Transition Module | Extracts three states (semantics, emotion, strategy) and models transition/interact dependencies |

| Transition-Aware Decoder | Utilizes state update information to generate coherent and strategically strong supportive responses |

The core highlight of this architecture is that it integrates state modeling across the three dimensions of semantics, strategy, and emotion into the dialogue generation process, allowing the model to engage in supportive communication more naturally and avoid mechanical, stiff responses.

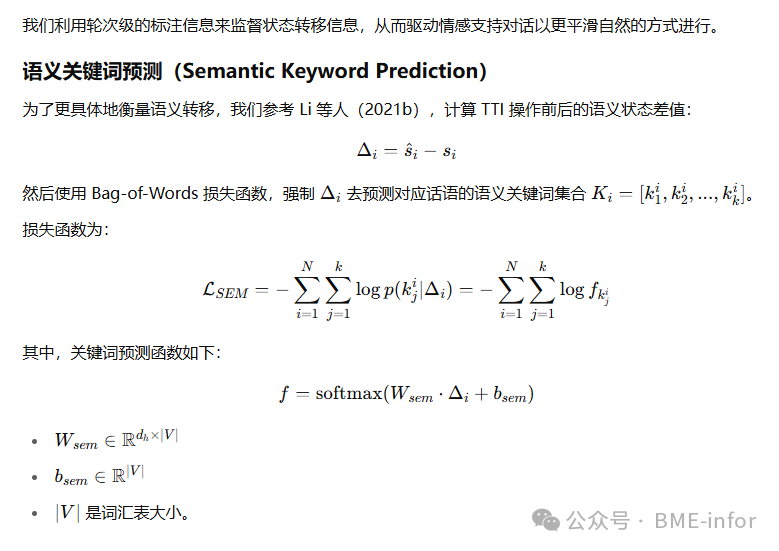

State Prediction

——· Experiments ·——


Baseline Models

We compared the proposed TransESC with the following competitive baseline models:

- Four empathetic response generators:
  - Transformer (Vaswani et al., 2017)
  - Multi-Task Transformer (Multi-TRS) (Rashkin et al., 2019)
  - MoEL (Lin et al., 2019)
  - MIME (Majumder et al., 2020)
- Three advanced models on the Emotional Support Conversation (ESC) task:
  - BlenderBot-Joint (Liu et al., 2021)
  - GLHG (Peng et al., 2022)
  - MISC (Tu et al., 2022)

——–· Results ·——–


Overall Results

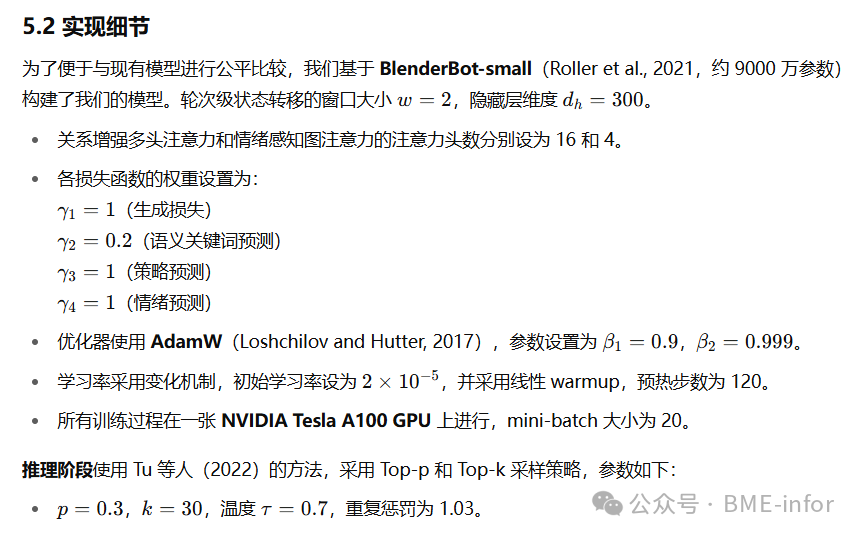

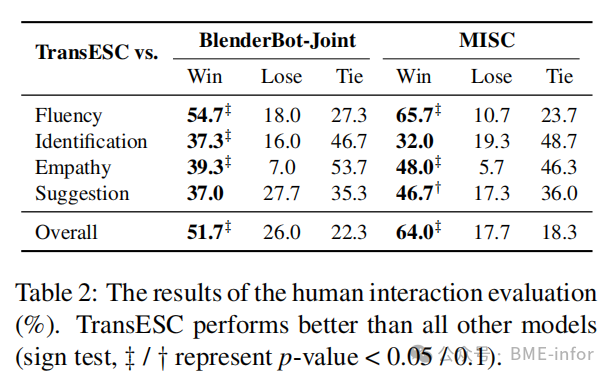

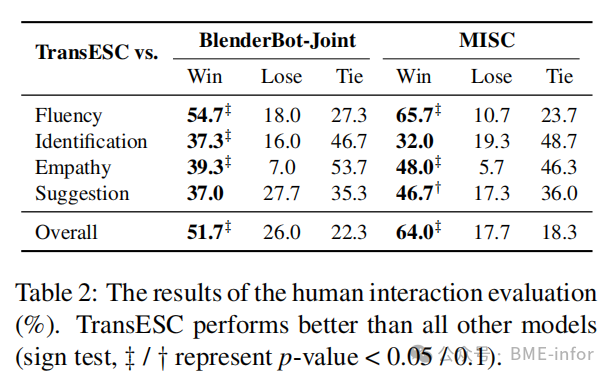

Automatic Evaluation

As shown in Table 2, TransESC achieved a new SOTA (state-of-the-art) level on automatic evaluation metrics. Thanks to the effective grasp of the three types of transition information in emotional support conversation (ESC), TransESC is able to generate more natural and smoother emotional support responses, outperforming baseline models on almost all metrics.

Compared to other empathy response generation models, the significant performance improvement of TransESC indicates that evoking empathy is just one of the key steps in emotional support conversation, while identifying the problems faced by the seeker and providing helpful suggestions are also important components of ESC.

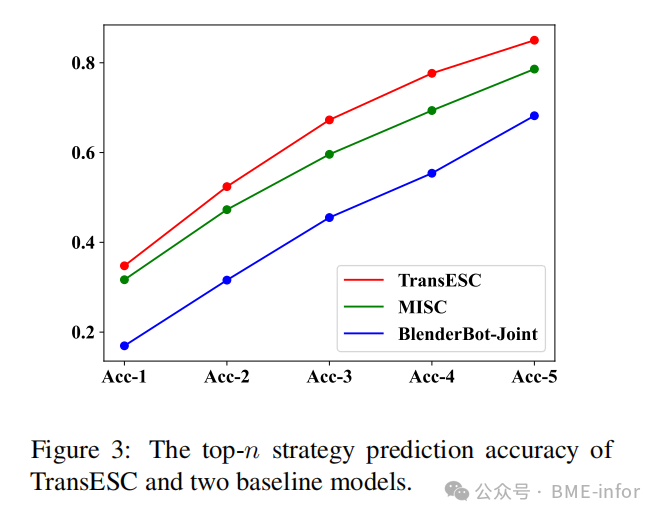

Moreover, although BlenderBot-Joint and MISC also consider the strategy prediction process, TransESC’s significant advantage in strategy selection can be attributed to its explicitly modeled turn-level strategy transition mechanism, which can fully capture the dependencies between different strategies adopted by the supporter in each turn.

As shown in Figure 3, TransESC also outperforms the comparison models on all Top-n accuracy metrics.
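For readers unfamiliar with the metric, Top-n accuracy counts a strategy prediction as correct when the gold strategy appears among the n highest-scoring candidates. The sketch below uses made-up scores over three strategies purely for illustration; they are not results from the paper, and `top_n_accuracy` is a hypothetical helper name.

```python
from typing import Sequence


def top_n_accuracy(
    scores: Sequence[Sequence[float]], gold: Sequence[int], n: int
) -> float:
    """Fraction of examples whose gold label is among the n best-scored classes."""
    hits = 0
    for row, label in zip(scores, gold):
        # Rank class indices from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda k: row[k], reverse=True)
        if label in ranked[:n]:
            hits += 1
    return hits / len(gold)


# Toy scores over three strategies for four supporter turns (illustrative only).
scores = [
    [0.6, 0.3, 0.1],
    [0.2, 0.5, 0.3],
    [0.1, 0.2, 0.7],
    [0.4, 0.35, 0.25],
]
gold = [0, 2, 2, 1]

top1 = top_n_accuracy(scores, gold, n=1)
top2 = top_n_accuracy(scores, gold, n=2)
```

On this toy input, two of the four gold strategies are ranked first and all four fall within the top two, so `top1` is 0.5 and `top2` is 1.0.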

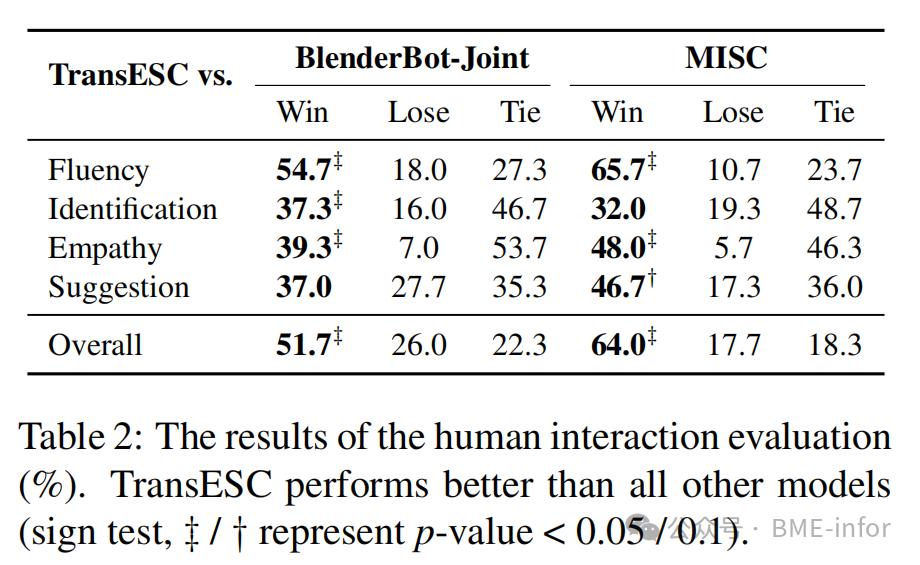

Human Evaluation

It should be noted that MISC uses the “seeking situation” before the dialogue as input, which is unreasonable in actual conversations, as supporters usually can only gradually understand the seeker’s situation during the dialogue. Therefore, for a fair comparison, we did not input the “situation” in all three models.

As shown in Table 2, TransESC outperforms the comparison models on all evaluation dimensions. Specifically, it performs better in the “Fluency” score, indicating that it can better maintain natural transitions between turns in the dialogue; additionally, although the three models may perform similarly in identifying the seeker’s problems, TransESC is able to evoke stronger empathetic responses to comfort the seeker and ultimately provide more helpful suggestions.

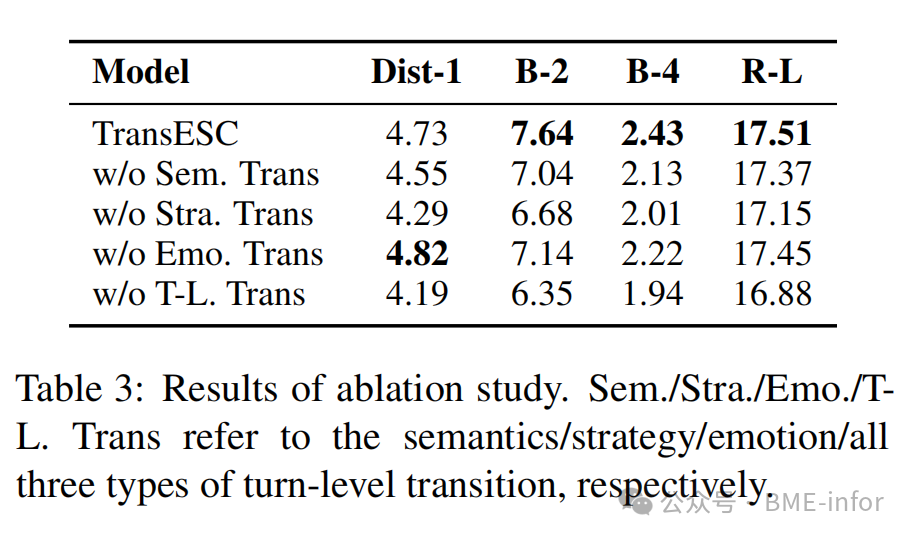

Ablation Study

To investigate the impact of the three types of state transition information, we removed the corresponding state representations and edges in the transition graph, turn-level label predictions, and the process of injecting into the decoder. Meanwhile, to verify the effectiveness of the turn-level transition process, we also tested a setting where the three states were predicted using the entire dialogue history without turn-level modeling.

As shown in Table 3, removing any type of transition information leads to a decline in automatic evaluation results, indicating that modeling each type of state information is effective. More specifically, removing strategy transition information (w/o Stra.Trans) results in the most significant performance drop, indicating that choosing the appropriate strategy to support the seeker is the most critical step in ESC.

In contrast, the impact of emotion transition is relatively small (w/o Emo.Trans), possibly due to noise in the annotated emotional labels and some uncertainty in the emotional knowledge generated from external knowledge bases.

Furthermore, when we completely remove the turn-level state transition process, performance significantly declines, further validating our core contribution: grasping fine-grained turn-level transition information can indeed guide ESC to generate smoother and more natural dialogues.

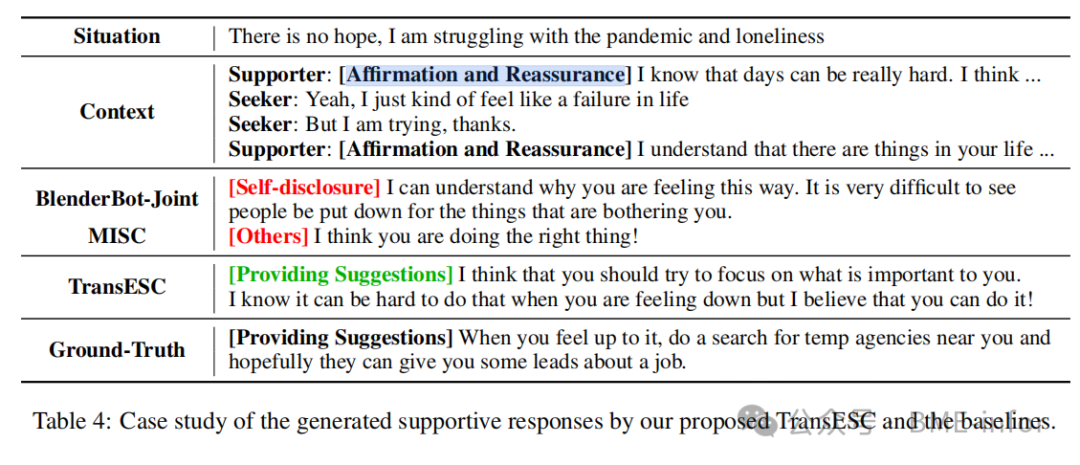

Case Study

In Table 4, we present a comparison case of responses generated by TransESC and two baseline models. With the help of emotion transition and strategy transition mechanisms, TransESC perceives that the seeker’s emotional state has shifted to “joy” after several rounds of comfort, and appropriately predicts that it should start providing helpful suggestions.

Additionally, through semantic transition, the model further grasped the seeker’s tendency to “make a decision”, thus suggesting that they try and encouraging them to face failure.

In contrast, MISC and BlenderBot-Joint guide the conversation improperly, resulting in generated responses lacking effectiveness and empathy.

Transition Window Length Analysis

We adjusted different transition window lengths to analyze the impact of modeling transition information more deeply. The results are shown in Table 5, where the model with a window length of 2 achieved the best performance.

- On one hand, shorter windows struggle to capture the dependencies between utterances in the dialogue history adequately, leading to insufficient modeling.
- On the other hand, longer windows may introduce a lot of redundant information, interfering with the model’s judgment and thus weakening overall performance.

Therefore, a moderate window length (e.g., 2) strikes a good balance between information capture and modeling efficiency, most beneficial for improving model performance.

——–· Appendix ·——–

A ESConv Dataset

A.1 Keyword and Emotion Annotation

Since the original ESConv dataset includes neither a keyword set for each utterance nor emotion labels for the seeker’s utterances, we annotated them using external tools:

- Keyword Extraction: We used TF-IDF to extract keywords for each utterance. The vocabulary and inverse document frequencies (IDF) are learned on the ESConv training set, and the top k words by TF-IDF score in each utterance form its keyword set.
- Emotion Annotation: We fine-tuned a BERT model (Devlin et al., 2019) on the fine-grained emotion classification dataset GoEmotions (Demszky et al., 2020). This model achieved an accuracy of 71% on the test set, indicating that it is reasonably reliable for emotion classification. We then used this model to annotate an emotion label for each seeker utterance.
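The keyword-extraction step can be sketched in plain Python as follows. This is an illustration under stated assumptions rather than the authors' pipeline: the tokenizer, the smoothed IDF formula, the tie-breaking rule, and the function names `fit_idf` and `top_k_keywords` are all choices made here, and the three training sentences are toy data.

```python
import math
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    """Lowercase whitespace tokenizer that strips edge punctuation (assumption)."""
    words = (w.strip(".,!?").lower() for w in text.split())
    return [w for w in words if w]


def fit_idf(train_sentences: List[str]) -> Dict[str, float]:
    """Learn vocabulary and IDF weights from the training sentences."""
    n = len(train_sentences)
    doc_freq: Counter = Counter()
    for sentence in train_sentences:
        doc_freq.update(set(tokenize(sentence)))
    # Smoothed IDF (one common variant; the paper does not specify the formula).
    return {w: math.log((1 + n) / (1 + df)) + 1.0 for w, df in doc_freq.items()}


def top_k_keywords(sentence: str, idf: Dict[str, float], k: int = 3) -> List[str]:
    """Return the k in-vocabulary words with the highest TF-IDF score."""
    tf = Counter(w for w in tokenize(sentence) if w in idf)
    scored = {w: count * idf[w] for w, count in tf.items()}
    # Sort by descending score, breaking ties alphabetically for determinism.
    return sorted(scored, key=lambda w: (-scored[w], w))[:k]


train = ["I am really sad", "I am worth more", "I am calm now"]
idf = fit_idf(train)
keywords = top_k_keywords("I am really sad.", idf, k=2)
```

Because "i" and "am" occur in every training sentence, they receive the lowest IDF, so the rarer words "really" and "sad" are selected as keywords here.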

A.2 Dataset Statistics

We conducted experiments on the ESConv dataset (Liu et al., 2021). In the preprocessing stage, following Tu et al. (2022), we truncated each dialogue every 10 turns and randomly divided the data into training, validation, and test sets in an 8:1:1 ratio. Specific data statistics can be found in Table 6.
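A minimal sketch of this preprocessing is given below. It assumes that truncating "every 10 turns" means cutting a dialogue into consecutive chunks of at most 10 turns, and that the split is a seeded random shuffle; both are interpretations, and the function names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def truncate_dialogue(turns: Sequence[T], max_turns: int = 10) -> List[List[T]]:
    """Cut one dialogue into consecutive chunks of at most max_turns turns."""
    return [list(turns[i:i + max_turns]) for i in range(0, len(turns), max_turns)]


def split_8_1_1(samples: Sequence[T], seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle the samples and split them into train/validation/test at 8:1:1."""
    items = list(samples)
    random.Random(seed).shuffle(items)  # fixed seed keeps the split reproducible
    n_train = len(items) * 8 // 10      # integer arithmetic avoids float rounding
    n_valid = len(items) // 10
    train = items[:n_train]
    valid = items[n_train:n_train + n_valid]
    test = items[n_train + n_valid:]
    return train, valid, test


chunks = truncate_dialogue(list(range(23)))  # a 23-turn dialogue
train, valid, test = split_8_1_1(range(50))  # 50 toy samples
```

With 23 turns the chunks have lengths 10, 10, and 3, and 50 samples are divided into 40, 5, and 5.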

A.3 Definition of Support Strategies

A total of 8 support strategies are annotated in the ESConv dataset, defined as follows:

- Question: Asking informative questions related to the problem to help the seeker express the issues they face more clearly.
- Restatement or Paraphrasing: Concisely restating the seeker’s utterance to help them view their situation more clearly.
- Reflection of Feelings: Describing the seeker’s emotions to indicate understanding and empathy towards their situation.
- Self-disclosure: The supporter shares similar situations or emotions they have experienced to express empathy.
- Affirmation and Reassurance: Affirming the seeker’s views, motivations, and strengths, providing encouragement and comfort.
- Providing Suggestions: Offering suggestions on how to overcome difficulties and change the current situation.
- Information: Providing useful information, such as data, facts, opinions, resources, or answers to questions.
- Others: Other support strategies that do not fall into the above categories.

B Common Sense Knowledge Acquisition

B.1 Introduction to ATOMIC Relationship Definitions

ATOMIC (Sap et al., 2019) is a knowledge graph containing everyday common sense reasoning, describing various causal reasoning knowledge in natural language. ATOMIC defines 9 types of if-then relationships to distinguish between causality, actors and recipients, voluntary and involuntary actions, and actions and mental states. Brief definitions are as follows:

- xIntent: Why does PersonX cause this event?
- xNeed: What does PersonX need to do before the event occurs?
- xAttr: How would PersonX’s character or traits be described?
- xEffect: What impact does this event have on PersonX?
- xWant: What might PersonX want to do after the event occurs?
- xReact: How does PersonX emotionally react after the event occurs?
- oReact: How do others emotionally react after the event occurs?
- oWant: What might others want to do after the event occurs?
- oEffect: What impact does this event have on others?

B.2 Implementation Details of COMET

We adopted the generative common sense reasoning model COMET (Bosselut et al., 2019) to acquire common sense knowledge in emotional support conversations. In each turn of dialogue, we selected the xReact relationship type to reflect the emotional feelings of the seeker.

Specifically, we used a COMET variant based on the BART architecture (Lewis et al., 2020), which was trained on the ATOMIC-2020 dataset (Hwang et al., 2021).

For each utterance belonging to the seeker

C Baseline Models

We compared with the following strong baseline methods:

- Transformer (Vaswani et al., 2017): A classic Transformer encoder-decoder generative model.
- Multi-Task Transformer (Multi-TRS) (Rashkin et al., 2019): A standard Transformer with an auxiliary task added to perceive the user’s emotions.
- MoEL (Lin et al., 2019): A Transformer-based model that captures the emotions of the other speaker as an emotion distribution. It uses multiple decoders, each optimized for a specific emotion, and generates the empathetic response through a soft combination of their outputs.
- MIME (Majumder et al., 2020): Also a Transformer-based model, which introduces the mechanism of mimicking the emotions of others. It divides emotions into two groups and generates more diverse empathetic responses by introducing randomness.
- BlenderBot-Joint (Liu et al., 2021): A strong baseline on the ESConv dataset, whose core approach is to add the strategy type as a special token at the beginning of the response and condition generation on it.
- GLHG (Peng et al., 2022): A hierarchical graph neural network model that captures the relationship between the user’s global emotional causes and local intentions in order to generate better emotional support responses.
- MISC (Tu et al., 2022): An encoder-decoder model that introduces external commonsense knowledge to infer the seeker’s fine-grained emotional state and generates more skillful supportive responses based on a mixture of strategies.

——–· Editor’s Interpretation ·——–

——–· Summary and Comments ·——–

This engineering exploration is worth learning from!
