Compiled by | Nuclear Cola, Tina

“Mojo may be the biggest advancement in programming languages in decades.” A new programming language called Mojo was recently launched by Modular AI, a company founded by Chris Lattner, creator of the LLVM compiler infrastructure and co-creator of the Swift programming language.

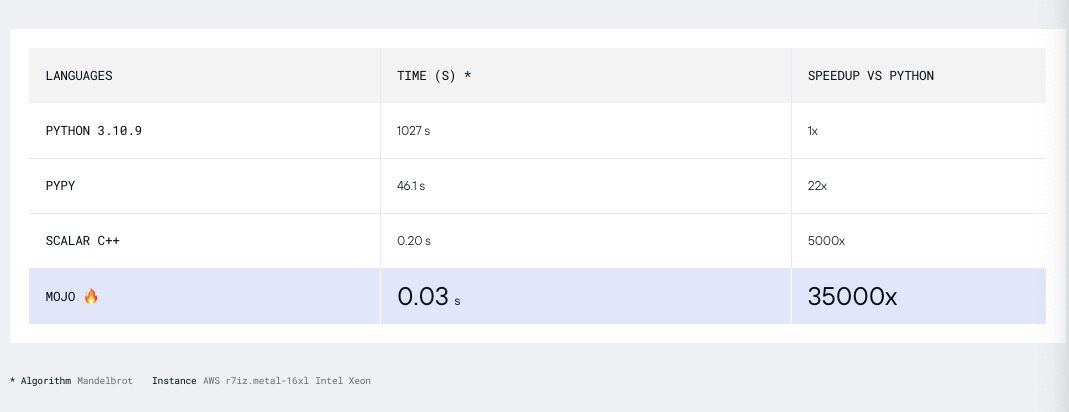

Mojo combines the beloved features of Python with the systems programming capabilities of C, C++, and CUDA, and sets itself apart from other Python speed-up solutions through what it calls “extreme acceleration.” With hardware acceleration, it reportedly runs numeric algorithms such as Mandelbrot 35,000 times faster than standard Python.

More importantly, Chris Lattner’s track record as the creator of LLVM gives Mojo a solid foundation for success. Jeremy Howard, data scientist and founding researcher of fast.ai, remarked that “Mojo may be the biggest advancement in programming languages in decades.”

1 Why Mojo?

Developers around the world are likely familiar with the name Chris Lattner.

Chris Lattner has worked at companies including Apple, Google, and Tesla, and has contributed to many projects we rely on today: he developed the LLVM compiler toolchain, co-created the MLIR compiler infrastructure, and led the development of the Swift programming language.

Initially, he began developing LLVM as part of his doctoral thesis. LLVM fundamentally changed the way compilers are created and forms the basis of many widely used language ecosystems today. He then went on to launch Clang, a C and C++ compiler built on LLVM.

Chris found that C and C++ did not fully leverage the capabilities of LLVM, so during his time at Apple he designed a new language called “Swift,” which he described as “syntactic sugar for LLVM.” Swift has become one of the most widely used programming languages in the world, especially as the primary means of building applications for iPhone, iPad, macOS, and Apple TV.

Unfortunately, Apple’s control over Swift meant it never truly shone outside of Apple’s ecosystem. Chris worked at Google for a while, trying to take Swift beyond Apple’s comfort zone in the hope that it would replace Python in AI model development. However, he did not receive the support he needed from either Apple or Google, and the effort ultimately did not succeed.

That said, during his time at Google, Chris also developed another hugely successful project: MLIR. MLIR is an alternative to LLVM IR for modern multi-core computing and AI workloads. This is crucial for fully utilizing the powerful capabilities of hardware such as GPUs, TPUs, and vector units increasingly added to server-grade CPUs.

In January 2022, Chris Lattner officially announced his startup, co-founding “Modular AI” with Tim Davis, aiming to rebuild the global ML infrastructure from the ground up. Tim Davis previously led Google’s machine learning projects, managing Google’s machine learning APIs, compilers, and runtime infrastructure.

While building their platform to unify the world’s ML/AI infrastructure, they realized that programming across the entire stack was too complex. “These systems are severely fragmented, with a wide variety of hardware, each with custom tools.”

“What we want is an innovative, scalable programming model that can target accelerators and the heterogeneous systems common in machine learning. This means a programming language with powerful compile-time metaprogramming, integrating adaptive compilation techniques, caching throughout the compilation process, and other features not supported by existing languages.”

In this context, the startup announced two related projects: Mojo, a programming language built on Python that claims speed comparable to C; and a portable, high-performance Modular Inference Engine for running AI models in production at lower cost (inference being the use of trained models in real-world scenarios).

The company stated, “Mojo combines the Python features loved by researchers with the system programming capabilities of C, C++, and CUDA.”

“Mojo is built on next-generation compiler technology. When you add types to your program, this technology can significantly improve performance, helping you define zero-cost abstractions, benefit from Rust-level memory safety features, and support unique auto-tuning and compile-time metaprogramming capabilities.”

“Mojo has learned a lot from Rust and Swift and has taken it a step further.”

2 Mojo: A Programming Language 35,000 Times Faster than Python

Mojo is a member of the Python family with a grand ambition: full compatibility with the Python ecosystem, so that developers can keep using the tools they already know. Mojo aims to gradually become a superset of Python by retaining Python’s dynamic features while adding new primitives for systems programming.

These new system programming primitives will allow Mojo developers to build high-performance libraries that currently require C, C++, Rust, CUDA, and other accelerator systems.

Because it is built on MLIR, Mojo code can access a range of AI-tuned hardware features, such as TensorCores and AMX extensions. As a result, for certain classes of algorithms it is far faster than standard Python: running the Mandelbrot algorithm on an AWS r7iz.metal-16xl instance takes only 0.03 seconds in Mojo, while Python 3.10.9 takes 1027 seconds (about 17 minutes).
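To make the comparison concrete, here is a minimal sketch of the kind of pure-Python escape-time Mandelbrot kernel that such benchmarks use as a baseline. It is not Modular's benchmark code; the function names, grid bounds, and iteration limit are assumptions chosen for illustration. The last lines simply divide the two timings quoted above, which gives a ratio of roughly 34,000, the figure the headline rounds to 35,000.

```python
def mandelbrot_escape(c: complex, max_iter: int = 200) -> int:
    """Return the iteration at which |z| first exceeds 2, or max_iter if it never does."""
    z = 0j
    for i in range(max_iter):
        z = z * z + c
        if abs(z) > 2.0:
            return i
    return max_iter


def render(width: int, height: int, max_iter: int = 200) -> list[list[int]]:
    """Evaluate the kernel on a width x height grid over [-2, 1) x [-1, 1)."""
    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            # Map the pixel (x, y) to a point in the complex plane.
            c = complex(-2.0 + 3.0 * x / width, -1.0 + 2.0 * y / height)
            row.append(mandelbrot_escape(c, max_iter))
        rows.append(row)
    return rows


# Timings quoted in the article (seconds).
python_seconds = 1027.0
mojo_seconds = 0.03
speedup = python_seconds / mojo_seconds
print(f"Reported speedup: about {speedup:,.0f}x")
```

Every pixel runs an interpreted inner loop over boxed complex numbers, which is the sort of workload where a compiled, vectorized, and parallel implementation has the most room to pull ahead.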

Chris Lattner stated on Hacker News: “Our goal is not to make dynamic Python magically fast. While we are much faster on dynamic code (because we have a compiler instead of an interpreter), it does not rely on a ‘smart enough’ compiler to eliminate dynamicity.”

He explained that the reason Mojo is much faster than Python is that it allows programmers to control static behavior and adopt it gradually in meaningful places. The key payoff of this approach is that the compilation process is very simple, requiring no JIT, and provides predictable and controllable performance.

Mojo is still under development, but it is currently available for trial in Jupyter notebooks. Once fully completed, it is expected to become a superset of Python—a Python ecosystem with a system programming toolkit. By that time, it should be able to run all Python programs. However, currently, Mojo only supports Python’s core features, including async/await, error handling, and variable arguments, and there is still a long way to go for full compatibility.

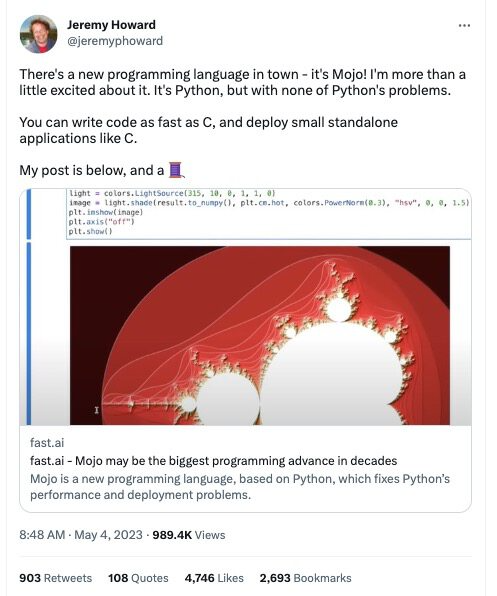

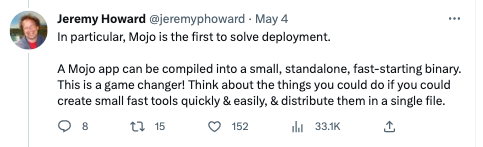

In an article published on Thursday, fast.ai co-founder and data scientist Jeremy Howard wrote, “Mojo may be the biggest advancement in programming languages in decades.”

“A new programming language has emerged in town: Mojo! I am very excited about it. It feels like Python but without any of Python’s issues. You can write code as fast as C and deploy small standalone applications like C.”

“It feels like programming has been disrupted.” In Jeremy Howard’s memory, the last time he felt this way was when he first used Visual Basic v1.0.

Jeremy Howard is a heavy user of Python, a language that can do, and is used for, almost everything. It has one drawback, however: performance. Python can be thousands of times slower than languages like C++. In practice, developers avoid implementing performance-critical parts in Python itself and instead use Python wrappers around code written in C, Fortran, Rust, and similar languages.

Thanks to its rich ecosystem, Python does hold an advantage in AI model development, but because of its limited performance, Python programmers often end up delegating to faster modules written in other languages (such as C/C++ and Rust). This “two-language” reality makes profiling, debugging, learning, and deploying machine learning applications increasingly difficult. Howard explained that Mojo aims to address this fragmentation within AI.

“One of Mojo’s highlights is that developers can choose a faster ‘mode’ at any time, using ‘fn’ instead of ‘def’ to create their own functions. In this mode, developers must accurately declare the type of each variable, allowing Mojo to create optimized machine code for the required function.”

“Additionally, if you use ‘struct’ instead of ‘class’, the attributes will be tightly packed in memory and can even be used directly within data structures without chasing pointers everywhere. These features give it performance comparable to C, allowing Python programmers to unlock this performance by learning just a little new syntax.”
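The difference between a tightly packed struct and an ordinary class instance can be illustrated in plain Python with the standard-library `ctypes` module. This is only an analogy for the memory layout described above, not Mojo code; the names `PointStruct` and `PointClass` are hypothetical.

```python
import ctypes


class PointStruct(ctypes.Structure):
    # Two C doubles stored back to back: a fixed, packed layout.
    _fields_ = [("x", ctypes.c_double), ("y", ctypes.c_double)]


class PointClass:
    # An ordinary Python object: each attribute is a reference to a
    # separately allocated float object.
    def __init__(self, x: float, y: float) -> None:
        self.x = x
        self.y = y


# One contiguous buffer holding 1000 structs inline.
packed_points = (PointStruct * 1000)()
packed_points[3].x = 1.5  # writes directly into the shared buffer

# A list of 1000 references, each pointing to its own heap object.
boxed_points = [PointClass(0.0, 0.0) for _ in range(1000)]

print(ctypes.sizeof(PointStruct))    # bytes per struct
print(ctypes.sizeof(packed_points))  # bytes for the whole array
```

With the packed layout, element `i` lives at a fixed offset from the start of the buffer, so a compiler can generate direct loads and stores. With the list of objects, every access first follows a reference to the object and then another to the attribute value.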

Another benefit of Mojo is its ability to compile code into standalone, fast-starting binaries, which makes it easy to deploy while taking advantage of available cores and accelerator hardware.

As a compiled language, Mojo’s deployment process is similar to that of C. For example, a sample program containing a matmul implementation written from scratch is about 100 KB in size.

“This completely disrupts the traditional game rules.”

Of course, Mojo currently still has gaps, such as package management and build systems, which are issues the Python community itself has long been working to address. In addition, the Mojo language has not yet specified an open-source license, though this is believed to be only a matter of time.

Howard summarized in a tweet, “Mojo is not yet finalized—but the current results are already exciting, especially since it has been built by a very small team in a short time. Lattner, with years of experience in Clang, LLVM, and Swift development, has laid another carefully constructed language foundation for us.”

Reference Links:

https://www.modular.com/blog/the-future-of-ai-depends-on-modularity

https://news.ycombinator.com/item?id=35811380

https://twitter.com/jeremyphoward/status/1653924474536984577

https://docs.modular.com/mojo/programming-manual.html#argument-passing-control-and-memory-ownership

https://www.theregister.com/2023/05/05/modular_struts_its_mojo_a/

This article is a translation and compilation by InfoQ, and reproduction without permission is prohibited.
