On March 12, Reuters reported, citing two people familiar with the matter, that Meta is testing its first in-house RISC-V chip designed for training AI systems. The custom chip is intended to serve Meta’s own computing needs and reduce its reliance on major AI chip suppliers such as NVIDIA.

It is understood that Meta began testing the chip after completing its first tape-out, the step in which a finalized design is sent to the foundry for manufacturing. A single tape-out can cost tens of millions of dollars and take roughly three to six months, with no guarantee that the resulting chip will pass testing. If the test fails, Meta will have to diagnose the design problem and repeat the tape-out.

Meta previously scrapped an in-house AI inference chip after it performed poorly in a small-scale test deployment, and instead placed orders for billions of dollars’ worth of NVIDIA GPUs in 2022. Since then, Meta has been one of NVIDIA’s major customers, amassing GPUs to train its models, including its content recommendation and advertising systems and its Llama large language models, and to run inference for the more than 3 billion people who use its social media platforms every day. That GPU spending is substantial, which is why Meta hopes in-house AI chips will bring its costs down.

One source said that, unlike Meta’s earlier MTIA inference chips, the new chip is designed specifically for AI training and is expected to integrate HBM3 or HBM3E memory. Because it is custom-designed, its die size, power consumption, and performance can be tuned to Meta’s needs, potentially making it more efficient than GPUs running the same AI workloads. Its performance per watt is expected to be competitive with NVIDIA’s latest GPUs (such as the H200, the B200, and even the next-generation B300).

Another source indicated that Meta’s self-developed AI chip will be manufactured by TSMC.

Both Meta and TSMC declined to comment on these rumors.

It is noteworthy that in-house chips are part of Meta’s long-term plan to lower the cost of its AI infrastructure. Meta has forecast total expenses of $114 billion to $119 billion for 2025, including up to $65 billion in capital expenditures, driven largely by spending on AI infrastructure, which includes its in-house AI chips.

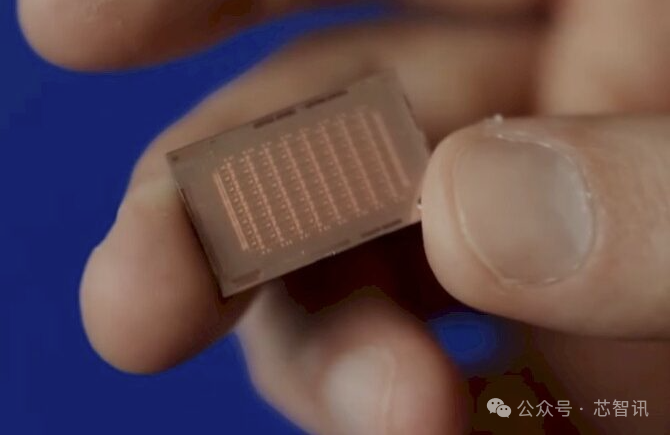

In 2023, Meta launched its first in-house AI chip, MTIA (MTIA v1), manufactured on TSMC’s 7nm process. The second-generation MTIA, launched in 2024, is also manufactured by TSMC but moves to a 5nm process and adds more processing cores. Its on-chip memory doubles to 256MB (MTIA v1 had 128MB), its off-chip LPDDR5 doubles to 128GB (MTIA v1 had 64GB), its clock frequency rises from 800MHz to 1.35GHz, and its power consumption increases to 90W (MTIA v1 drew 25W). Both generations of MTIA are used primarily for inference.

△MTIA v2
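To put the generational figures quoted above side by side, the short Python sketch below computes the v2-to-v1 ratio for each specification. The numbers are the ones cited in this article; the dictionary layout and the names `MTIA_SPECS` and `generational_ratios` are illustrative assumptions, not anything published by Meta.

```python
# Figures as quoted in the article: (MTIA v1, MTIA v2).
# The structure and names here are hypothetical, chosen only for illustration.
MTIA_SPECS = {
    "on_chip_memory_mb": (128, 256),
    "off_chip_lpddr5_gb": (64, 128),
    "clock_mhz": (800, 1350),
    "power_w": (25, 90),
}


def generational_ratios(specs):
    """Return the v2 / v1 ratio for each quoted specification."""
    return {name: v2 / v1 for name, (v1, v2) in specs.items()}


ratios = generational_ratios(MTIA_SPECS)
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x")
```

On these figures, both memory capacities double, the clock rises by roughly 1.7x, and power consumption rises 3.6x. The article gives no throughput numbers, so these ratios alone say nothing about performance per watt.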

Meta previously revealed that the second-generation MTIA has been deployed in 16 data center regions, mainly for the content recommendation systems that decide what appears in the Facebook and Instagram feeds. Meta also acknowledged that the second-generation MTIA will not replace the GPUs it currently uses to train models but will supplement them as additional computing capacity.

Meta’s Chief Product Officer Chris Cox said at the Morgan Stanley Technology, Media, and Telecom Conference last week, “We are exploring how to train recommendation systems, ultimately considering how to train and infer generative AI.” He described Meta’s chip development efforts as currently being in the stage of “from walking to crawling to running,” and said the company internally regards the first-generation inference chip for content recommendation as a significant success. Meta hopes to begin using in-house chips for AI training by 2026.

This also suggests that, if the newly revealed in-house training chip proves successful, it could enter large-scale deployment in 2026.

Editor: Chip Intelligence – Wandering Sword