
As an open hardware instruction set architecture (ISA) based on Reduced Instruction Set Computing (RISC), the design philosophy of RISC-V emphasizes “simplicity is the ultimate sophistication.” Although RISC-V still needs continuous efforts and improvements in ecosystem building, software support, and security issues, it has already shown strong vitality and broad development prospects in fields such as embedded systems, cloud computing, big data, artificial intelligence (AI) and machine learning (ML), and the Internet of Things (IoT) and edge computing due to its simple, efficient, and flexible characteristics.

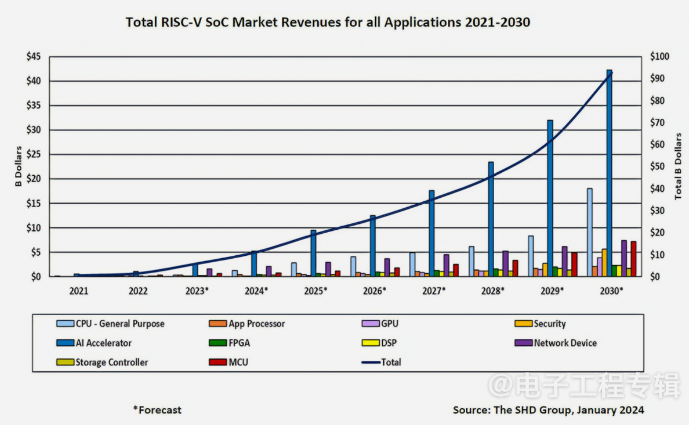

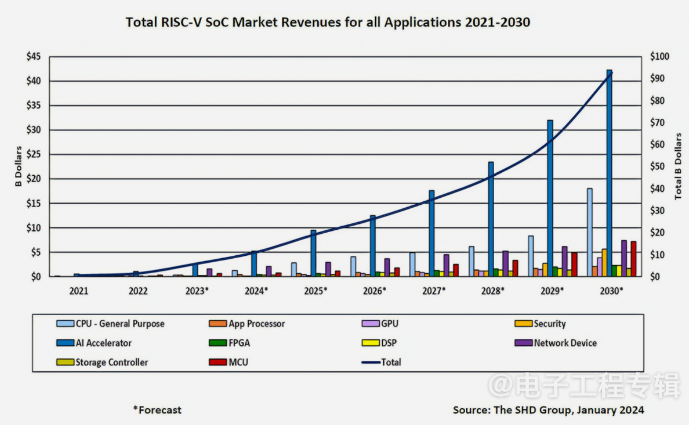

According to a research report by SHD Group, the global market revenue for RISC-V related SoC chip products reached $6.1 billion in 2023, an increase of 276.8% compared to 2022 (Figure 1). It is expected that by 2030, the global market size of RISC-V will reach $92.7 billion, with an average annual growth rate of 47.4% over the next few years. Notably, it is expected that by 2030, the shipment of RISC-V SoCs for AI accelerators will reach 4.1 billion units, generating revenue of $42.2 billion.

Figure 1: Total revenue of RISC-V SoCs for all applications from 2021 to 2030.
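The growth figures quoted from the SHD Group report can be cross-checked with simple compound-growth arithmetic. The sketch below is only illustrative: it assumes the 47.4% average annual growth rate compounds over the seven years from 2023 to 2030, which the summary above does not state explicitly, and the function names are invented for this example.

```python
def implied_previous(value: float, pct_increase: float) -> float:
    """Value one period earlier, given the current value and the percentage increase."""
    return value / (1 + pct_increase / 100)


def compound(value: float, annual_rate_pct: float, years: int) -> float:
    """Value after compounding at a fixed annual rate for the given number of years."""
    return value * (1 + annual_rate_pct / 100) ** years


def implied_cagr_pct(start: float, end: float, years: int) -> float:
    """Compound annual growth rate (in percent) that takes start to end."""
    return ((end / start) ** (1 / years) - 1) * 100


# Figures quoted in the article (billions of US dollars, billions of units).
REVENUE_2023_BN = 6.1
REVENUE_2030_BN = 92.7
YEARS = 2030 - 2023  # assumption: the growth rate applies to 2023-2030

revenue_2022_bn = implied_previous(REVENUE_2023_BN, 276.8)
projected_2030_bn = compound(REVENUE_2023_BN, 47.4, YEARS)
cagr_pct = implied_cagr_pct(REVENUE_2023_BN, REVENUE_2030_BN, YEARS)
ai_revenue_per_unit_usd = 42.2 / 4.1  # AI accelerator SoC revenue / units

print(f"Implied 2022 revenue: ${revenue_2022_bn:.2f}B")
print(f"2030 revenue at 47.4% per year: ${projected_2030_bn:.1f}B")
print(f"CAGR implied by $6.1B -> $92.7B: {cagr_pct:.1f}%")
print(f"Implied revenue per AI accelerator SoC: ${ai_revenue_per_unit_usd:.2f}")
```

Under that assumption, the implied 2022 revenue is roughly $1.6 billion, and compounding the 2023 figure lands close to the reported 2030 projection, so the quoted numbers are broadly consistent with one another.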

From “Open Source” to “Open”

At the RISC-V European Summit in June this year, we noticed that RISC-V’s positioning as an “open standard” has been further strengthened. Dr. Bao Yungang, Deputy Director and researcher at the Institute of Computing Technology, Chinese Academy of Sciences, pointed out on social media that in recent years, people have often confused “open” with “open source” when referring to RISC-V, with some saying it is an open instruction set and others saying it is an open-source instruction set, leading to some conceptual confusion. At this summit, the industry has basically reached a consensus to emphasize in future promotional statements that “RISC-V is an open standard, not an open-source implementation.” He believes that clarifying this concept is important and could even help avoid some geopolitical misjudgments.

So, why has there been such a change? How should we correctly understand the differences and similarities between “open” and “open source”?

Dr. He Ning, Senior Vice President and Chief Technology Officer of SiFive, interpreted this from two aspects. On one hand, the complex international geopolitical situation has put pressure on RISC-V management organizations and their partners. On the other hand, “open source” implies no secrets, no proprietary lock-in, and no need to pay high licensing and royalty fees, which does not align with commercial realities; hence the shift in emphasis from “open source” to “open.” In other words, the RISC-V instruction set can be used freely without paying patent fees, but the chips built on it can be either open-source designs or closed proprietary designs. That is the difference between “open source” and “open.”

Figure 2: Dr. He Ning, Senior Vice President and Chief Technology Officer of SiFive.

Dr. Meng Jianyi, CEO of Zhihe Computing, responded that “this change stems from a previous confusion between standards (instruction set specifications) and implementations (processor implementations).” He stated that RISC-V is a completely free and publicly open specification standard, not specific code and chip design, and anyone can implement their own processor design based on this specification standard. In contrast, the instruction set specifications of x86 and Arm are controlled by single enterprises, thus RISC-V is referred to as an “open standard.”

Figure 3: Dr. Meng Jianyi, CEO of Zhihe Computing.

Processor implementation refers to the process of developing a chip product based on the instruction set specification, along with processor microarchitecture design, resulting in the final chip. The intellectual property formed during this process is independent of the intellectual property corresponding to the aforementioned specification standard. Depending on whether the intellectual property generated by processor implementation is open source, two concepts arise: “commercial implementation” and “open-source implementation.”

In summary, RISC-V is an instruction set specification that is completely publicly open, while processor implementation is the work done by companies developing chips based on RISC-V. Among them, “commercial implementation” is more significant for the ecosystem; only by achieving “commercial implementation” can more companies be attracted to participate in the construction of the RISC-V ecosystem, rather than letting RISC-V remain a “toy” in academia. This is why the industry now emphasizes “rather than open-source implementation.”

“The notion of RISC-V being open source is an inaccurate statement; the RISC-V International Association began correcting this statement two years ago,” said Xu Tao, Chairman and CEO of SiFive Technology. “Open source” is a concept that arose from the industry’s practice of sharing software code, while RISC-V is an open standard that does not inherently involve code sharing. However, the industry can acquire the right to use the RISC-V standard at almost zero cost, which has similarities to “open source.”

Figure 4: Xu Tao, Chairman and CEO of SiFive Technology.

Matthew Bubis, Director of Product Management at Imagination, expressed a similar view. He said there is a common misconception that the RISC-V open standard means RISC-V CPUs and products are open source. In fact, while the open standard lets developers freely use the framework to create CPUs, the resulting products may be proprietary intellectual property, or developers may choose to open-source them. An open standard does not require products to follow either model.

Therefore, as various restrictions are introduced around RISC-V, the distinction between using an open standard and using closed IP becomes crucial for avoiding unintended outcomes. Confusing the two could lead to divergence of the standard and threaten the continued openness that RISC-V relies on. Clear messaging is needed so that people both inside and outside the industry fully understand this distinction.

Figure 5: Matthew Bubis, Director of Product Management at Imagination.

Finding Suitable Business Models

This is another interesting and serious topic. Dr. Bao Yungang discussed in his article that the global market size for various Arm chips has reached over $100 billion, but Arm itself has only about $3 billion in revenue and $300 million in profit. How to provide Arm-level IP to RISC-V SoC chip companies and achieve sustainable profitability is actually a challenging task.

If the same business model as Arm is adopted, the revenue and profit of RISC-V IP companies will definitely be lower than Arm’s. In fact, RISC-V IP companies worldwide are facing difficulties today, with revenues between $10 million and $30 million and results that basically break even. If this gap is not filled, the foundation of the entire RISC-V ecosystem will not be solid enough.

Semico Research predicts that shipments of RISC-V processor cores will reach 80 billion units by 2025. If shipments on that scale remain hard for the outside world to perceive because systematic, large-scale application scenarios are lacking, or if each company fights alone, expanding its own market and application ecosystem with its own products and failing to form synergy, the result would be regrettable and would leave profitability in doubt.

“Driven by the dual effects of rapid market growth and widespread competition, both sustainable and unsustainable business models will naturally emerge,” said Bubis. However, in Bubis’s view, as the market direction becomes clearer, many companies will need to adjust their models and turn towards the fastest-growing sub-markets to discover the greatest demand in the RISC-V market and ultimately provide the highest quality products and services for these fields, which is a prerequisite for success.

Currently, RISC-V has already achieved good application results in low-power embedded scenarios, including the Internet of Things, but these markets cannot support further development of RISC-V in the future. Therefore, for the entire RISC-V ecosystem to achieve commercial profitability and further development, it must find the next track that can support the development of the entire ecosystem.

On the other hand, successful RISC-V companies today often adopt RISC-V technology in their existing products. For those companies that rely purely on selling RISC-V IP or products, they may need to understand the system further, find system partners, and jointly explore “killer applications,” just as IBM once made Intel successful, and Nokia and TI made Arm successful.

Therefore, companies should make good use of the flexibility offered by the RISC-V open standard to explore more forms of computing power based on the RISC-V architecture, and so seek opportunities in markets with greater potential. Currently, AI computing may be the segment most worth investing in.

To this end, Wang Dongsheng, Chairman of SiFive, first proposed the industrial concept of RISC-V Digital Infrastructure (RDI) at the “RDI Ecosystem – Wuhan Innovation Forum 2024” in July this year, calling on upstream and downstream players in the industrial chain to jointly build the RDI ecosystem and promote the industrialization of RISC-V. In his view, RDI is an opportunity for RISC-V to move from invisibility to visibility and from embedded systems to larger markets, and it is also key to growing the RISC-V market pie and helping the industry develop efficiently.

Specifically, RDI takes limited-ecosystem scenarios in vertical industries as its entry point. It aims to meet the needs of industry transformation and independent innovation, and it actively promotes the systematic, large-scale deployment of RISC-V products in those scenarios, expanding RISC-V’s influence and creating new market opportunities for RISC-V companies. Gradually accumulating an application ecosystem across different industry scenarios, and only then pursuing breakthroughs in strong-ecosystem scenarios, also helps avoid the “first-mover disadvantage” of excessive one-time investment. This promotes construction of the RISC-V ecosystem while keeping enterprises on a sustainable footing.

China’s Market Enthusiasm Remains High

“China Chip” has always been a hot topic, and the government, industry, investment funds, and markets are all keen to understand what impact the RISC-V instruction set will have on their products and business models. In particular, the RISC-V instruction set, which has been open since its inception, is the best alternative to closed, proprietary instruction sets.

For Chinese companies, using the RISC-V instruction set allows them to align with international standards without reinventing the wheel and without the restrictions of “Me Too,” so from both technical and commercial perspectives, RISC-V has the potential to succeed in China, which is of great significance for related industries in China. Market data also confirms this – in 2022, 50% of the 10 billion processors adopting the RISC-V architecture globally came from China, marking that China’s RISC-V development has begun to take shape.

More encouraging than the sustained enthusiasm is the role played by Chinese companies in the industrial chain. Dr. Meng Jianyi pointed out that from the inception of RISC-V, Chinese companies have made significant technological contributions, so the first role played by China is as a “major contributor.” Secondly, due to the open nature of RISC-V, it has become one of the main choices for China to achieve original computing power products. From the numerous innovative projects recently emerging that utilize RISC-V to develop various computing architectures, it can be seen that China also plays the role of a “major (potential) market.”

Bubis’s view may represent the perspective and understanding of international peers towards Chinese companies. “China’s rapid development in the RISC-V field proves the credibility of RISC-V in various applications,” he said. China is in a leading position in the RISC-V field and is closely watched by other countries in the world. The proof of RISC-V’s success is also demonstrated through tape-out mass production and user satisfaction, indicating that RISC-V is an important architecture that can replace closed ISAs. This growth brings more opportunities, making the software and security ecosystems more mature, competition more intense, and ultimately providing better products.

Thus, through its domestic IP providers and ecosystem-related companies, China has played an important role in pushing the global RISC-V industry competition forward, while also supporting the development of the industry internationally by having a huge market ready to adopt RISC-V products.

RISC-V + AI Has Become a Prairie Fire

Professor Xie Tao from Peking University, Chairman of the RISC-V International Foundation’s Artificial Intelligence and Machine Learning Committee, analyzed in his book “RISC-V + AI Computing System Software Stack Construction” that “large models generate computing power demand, and the heterogeneous computing paradigm is highly compatible with RISC-V’s technological advantages,” which is one of the main reasons for RISC-V architecture gaining favor in the AI field. From a global development trend perspective, both giant companies and startups conform to this judgment.

For example, Google uses SiFive’s X280 as a coprocessor in its AI chips and plans to continue using SiFive designs in its next generation of AI systems. Meta’s MTIA AI chip uses two Andes Technology AX25V100 processor cores, a sign of recognition for Andes’s RISC-V IP, and the second-generation MTIA chip will continue to use these cores in greater numbers. The core of Tesla’s Project Dojo chip contains an integer unit that uses some RISC-V instructions.

In the startup sector, Tenstorrent will develop its next generation of AI chips based on RISC-V architecture technology and the SF4X process; Untether AI’s Boqueria AI accelerator has 1,458 RISC-V cores and can scale from low-power to high-power devices; and Rivos’s AI chip combines a high-performance RISC-V CPU with a GPGPU optimized for LLMs and data analysis.

In response to the questions “Is RISC-V the most suitable instruction set architecture for AI?” and “In the AI environment, which CPU has an advantage, x86, Arm, or RISC-V?” Xu Tao answered in favor of RISC-V. He explained that AI is a rapidly developing application field whose software and hardware ecosystems are still evolving quickly. RISC-V, x86, and Arm are on the same starting line in the AI ecosystem, but RISC-V is an open architecture, and its modular design can be more flexible than x86 and Arm, allowing for faster innovation and better adaptation to the needs of AI development.

Dr. Meng Jianyi emphasized that currently, the core computing power in AI scenarios still relies on GPGPU, which is monopolized by a single vendor’s closed GPGPU architecture. Therefore, whether x86, Arm, or RISC-V architectures can achieve breakthroughs in AI computing requires innovation based on the unique demands of AI computing, deviating from the traditional general-purpose computing architecture.

Under such demands, there is no doubt that the open RISC-V, with its greater flexibility and stronger ecological attributes, will have advantages over x86 and Arm architectures.

Bubis offered his interpretation from the perspective of software and ecosystem: although all major ISAs will play a role in the future of artificial intelligence, the AI field carries far less legacy software than other application areas. Building an AI software ecosystem and its frameworks on RISC-V is therefore much easier than doing so for other software ecosystems.

This allows for innovation in artificial intelligence to occur on RISC-V without the need to overcome the challenges posed by the continuity and compatibility issues of traditional ecosystems. Therefore, companies can leverage the advantages of open standards without worrying about software compatibility issues.

“We are seeing more and more RISC-V IP entering various subfields of artificial intelligence, such as data centers, edge, industrial IoT, and consumer devices. With the current growth rate, the prospect of RISC-V keeping pace with other ISAs is bright,” said Bubis.

Overall, the potential and momentum of RISC-V in the AI field are very clear. It is green, open, vibrant, and continuously evolving, so it can better adapt to the optimization needs of software and algorithm models in the AI era and serve as a native architecture supporting new applications and new scenarios.

Currently, the advantages of RISC-V in AI applications mainly include the following four aspects:

Low Power Consumption: With the arrival of generative AI, AI accelerators represented by GPUs are challenging the energy consumption and operation of global data centers, highlighting the importance of low-power architectures. According to Boston Consulting Group (BCG), by 2030, data centers in the U.S. will account for 7.5% of the total electricity consumption, three times that of 2022, nearly one-third of U.S. household electricity consumption, mainly due to large model training and increasing AI queries. RISC-V instruction design is more streamlined, without the burden of historical compatibility, and can be customized and optimized as needed to improve the efficiency of certain specific computations, further reducing power consumption compared to existing architectures.

Flexibility: The RISC-V architecture has followed the principles of modular design and customizable extension from the beginning, allowing flexible designs that meet computing power demands across cloud, edge, and endpoint scenarios, from the high computing power required for training and inference in the cloud to the optimized deployment of large models at the edge and endpoint.

Compatibility: RISC-V allows hardware manufacturers to customize processor cores according to their needs, ensuring software compatibility as long as they adhere to the RISC-V specification.

Openness and Evolution Capability: As an open architecture, RISC-V attracts global companies, institutions, and developers to co-build the ecosystem, rapidly improving the resource pool of software, hardware, algorithms, and operators, promoting the rapid upgrading and iteration of the RISC-V ecosystem in the field of artificial intelligence. With the gradual unification of the RISC-V + AI technology path, RISC-V is expected to form an open and strong ecosystem, becoming the native architecture for AI and providing new driving force for the development of technology in the AGI era.
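The flexibility and compatibility points above can be made concrete with RISC-V’s ISA naming strings, in which a base such as `rv64i` is followed by letters for standard extensions. The following is a simplified sketch rather than a full parser: it assumes single-letter extensions come first and multi-letter ones are separated by underscores, it ignores version suffixes, it treats a different register width as incompatible, and `xvendorai` is a hypothetical vendor extension invented for illustration.

```python
import re

# "g" is shorthand for the general-purpose combination IMAFD plus Zicsr and Zifencei.
G_EXPANSION = set("imafd") | {"zicsr", "zifencei"}


def parse_isa(isa: str) -> tuple[int, set[str]]:
    """Split a simplified ISA string into (register width, set of extension names)."""
    head, *multi_letter = isa.lower().split("_")
    match = re.fullmatch(r"rv(32|64|128)([a-z]+)", head)
    if match is None:
        raise ValueError(f"unrecognized ISA string: {isa!r}")
    xlen = int(match.group(1))
    extensions = set(match.group(2))  # one entry per single-letter extension
    if "g" in extensions:
        extensions.discard("g")
        extensions |= G_EXPANSION
    extensions |= {name for name in multi_letter if name}
    return xlen, extensions


def can_run(core_isa: str, binary_isa: str) -> bool:
    """True if the core implements everything the binary was compiled to use."""
    core_xlen, core_ext = parse_isa(core_isa)
    bin_xlen, bin_ext = parse_isa(binary_isa)
    return core_xlen == bin_xlen and bin_ext <= core_ext


# A customized core still runs software built for the standard subset it implements...
print(can_run("rv64gc_xvendorai", "rv64gc"))
# ...but software that depends on the custom extension is not portable to a plain core.
print(can_run("rv64gc", "rv64gc_xvendorai"))
```

The subset check captures the idea in the text: customization adds to the standard base, so software written against the standard part of the specification remains usable on the customized core.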

The Trend of Coexistence of Multiple Architectures Remains Unchanged

On the other hand, AI development is still in its early stages, and its applications and infrastructure have not yet solidified. For a considerable period to come, there will be hybrid combinations of different architectures, fixed circuits, and various programmable accelerators, serving different application needs with varying PPA (power, performance, and area), pricing, and usage.

In other words, from CPUs to GPUs to dedicated accelerators, existing AI inference hardware offers different combinations of flexibility, performance, efficiency, and adaptability. No single solution is best for every application scenario. As long as AI models and software stacks continue to innovate, combinations of architectures are likely to persist. The pursuit of flexibility is unlikely to disappear: artificial intelligence and machine learning frameworks are evolving rapidly, so it is necessary to retain architectures that can adapt to this development.

As for the question of whether ASICs targeted at specific AI models can replace more general-purpose GPGPUs, Dr. Meng Jianyi’s judgment is, “It cannot be asserted at this time,” because the performance and efficiency advantages of ASICs in specific models are evident, but the specific optimizations for single algorithms also expose the products to significant challenges posed by algorithm iterations.

Therefore, for some time to come, ASICs and GPGPUs are more likely to coexist. In certain application scenarios where the market scale is sufficient and the algorithm demands are clear, ASICs will gain part of the market due to their performance, efficiency, and cost advantages, but the majority of the market will still belong to the more general-purpose GPGPUs.

He suggests that RISC-V also deserves attention in this trend. RISC-V is not limited to traditional general-purpose CPUs; its openness allows different architectures such as ASICs, GPGPUs, and DSAs to be implemented on top of it. At the same time, because these architectures share a unified RISC-V ecosystem, they can more easily be integrated for heterogeneous computing and used to try new approaches to hardware-software co-design in real deployments.

Therefore, RISC-V may become the “final answer” for future AI inference chips.

Dr. He Ning’s view is that “there will be possibilities for the coexistence of multiple architectures, such as CPUs, GPUs, DPUs, and ASIC units aimed at specific calculations.” After all, moving from homogeneous to heterogeneous and then further to heterogeneous integration is an inevitable evolutionary trend in the development of computing architectures from simple to complex. The goal of heterogeneous integration computing is to fully leverage the advantages of different hardware units to achieve higher performance, efficiency, and more functional expansions, usually involving the collaboration of different types of processors and accelerators to meet increasingly complex computing demands.

However, in the specific implementation of various computing units, it is also possible to base them on the same underlying infrastructure, such as CPUs, GPUs, and DPUs all using RISC-V basic instructions, but achieving different computational purposes through different extensions and coprocessor combinations, such as logical operations, graphical computations, and network processing. This unified base is more conducive to achieving collaboration among different computing units, thereby realizing resource pooling and the integration of software tools and other platforms.

Of course, in this process, some companies may try to find a balance between the “customization advantages” of domain-specific architectures (DSAs) and the “broad adaptability” of general-purpose CPUs and GPUs. How to define the “specific domain” is therefore crucial. According to Xu Tao, “If the definition is too narrow, it will fall into a trap.”

To arrive at a suitable definition, on one hand, companies need an in-depth understanding of the relevant fields so that they can connect the entire technical and business logic from applications to architecture. On the other hand, the so-called “balance point” is essentially a trade-off between pursuing the best power consumption, performance, and area and preserving the general adaptability of the hardware. Manufacturers that are confident in the long-term stability of their workloads can choose more specialized domain-specific architectures, while those seeking longer product lifecycles that can adapt to changing workloads may require a more general approach.

At the same time, given the massive investments in artificial intelligence and machine learning, there are almost no signs that innovation will slow down. Many workload development directions may still carry uncertainty, so suppliers should cautiously weigh whether to incorporate only DSA into their chips or retain powerful computing solutions based on combinations of CPU, GPU, and dedicated accelerators.

There are many directions to try in finding a balance between “customization advantages” and “broad applicability.” One is to strengthen a single direction until its advantages are substantial enough to replace the other; another is to obtain the advantages of both through heterogeneous computing integration. Such attempts are more likely to succeed when they can run in parallel under an open architecture and a unified ecosystem. RISC-V’s openness and unified ecosystem make it possible for the industry to attempt multiple directions, and many companies are indeed doing so.

Dr. He Ning pointed out that, from a technical perspective, a DSA provides dedicated optimizations for a specific domain: customized computing units or IP modules are designed for specific needs so that computing resources precisely match application demands and energy efficiency is maximized. Examples include dedicated image processing units for multimedia and neural network acceleration units with high data reuse and high compute-unit reuse for intelligent computing. These customized computing solutions significantly enhance the performance and energy efficiency of specific tasks in dedicated scenarios, facilitating the deep integration and innovative application of new technologies in practice.

“The advantage of DSA is domain customization. Its disadvantage is also domain customization, which can lead to limited applicability, high development and maintenance costs, and poor compatibility.” He suggested that the final choice between a DSA and a “broad adaptability” architecture mainly depends on the scenario and demand: when power consumption and efficiency are the main constraints and market demand is sufficient to cover the development costs of a DSA, adopting a DSA is clearly more conducive to achieving the goals. Otherwise, general-purpose solutions are favored.

The advantage of RISC-V lies in its modular, scalable, and easily customizable design philosophy, effectively balancing the needs of both general and specialized domains. Through basic instruction sets and commonly used instruction extensions, it can meet the needs of conventional general-purpose computing, while DSA-specific instruction extensions can address the efficiency demands of domain-specific computing, with instruction extensions built on the RISC-V foundational framework, significantly improving design speed and convenience compared to traditional ASIC implementations of DSA. Thus, RISC-V is the best way to balance the demands of general computing and DSA.
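One way DSA-specific instruction extensions can sit on top of the base ISA is through the major opcodes that the RISC-V specification reserves for custom use, such as custom-0 and custom-1. As a rough sketch, the code below packs an R-type instruction word using the standard R-type field layout and places it in the custom-0 opcode space. The `dsa.mac` mnemonic and its funct values are hypothetical, invented purely for illustration, and do not correspond to any real extension.

```python
# Major opcodes reserved by the RISC-V base specification for custom extensions.
CUSTOM_0 = 0b0001011
CUSTOM_1 = 0b0101011


def encode_r_type(opcode: int, rd: int, funct3: int, rs1: int, rs2: int, funct7: int) -> int:
    """Pack the six R-type fields into a 32-bit instruction word."""
    fields = [
        ("opcode", opcode, 7),
        ("rd", rd, 5),
        ("funct3", funct3, 3),
        ("rs1", rs1, 5),
        ("rs2", rs2, 5),
        ("funct7", funct7, 7),
    ]
    for name, value, width in fields:
        if not 0 <= value < (1 << width):
            raise ValueError(f"{name}={value} does not fit in {width} bits")
    # R-type layout: funct7[31:25] rs2[24:20] rs1[19:15] funct3[14:12] rd[11:7] opcode[6:0]
    return (funct7 << 25) | (rs2 << 20) | (rs1 << 15) | (funct3 << 12) | (rd << 7) | opcode


# Hypothetical "dsa.mac a0, a1, a2": registers x10, x11, x12, funct3 = funct7 = 0.
word = encode_r_type(CUSTOM_0, rd=10, funct3=0, rs1=11, rs2=12, funct7=0)
print(f"dsa.mac a0, a1, a2 -> 0x{word:08x}")
```

Because the custom instruction reuses the base register file and instruction format, a core that adds it can keep the standard toolchain and software for everything else, which is the design-speed advantage over a standalone ASIC that the paragraph describes.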

When Will the CUDA Ecosystem Be “Shaken”?

Professor Xie Tao made a vivid analogy, comparing the integration of RISC-V and AI to the combination of a “big house” and a “small house,” with both closely connected to accomplish complex tasks. “‘AI + RISC-V’ is equivalent to a CPU plus a coprocessor. The ‘coprocessor’ may not necessarily be a small house; it could also be a big house, and there could be many of them, forming a cluster concept.”

“We need to promote the establishment of standards; in the past, some standards were academic-oriented, and enterprises did not participate deeply,” said Xie Tao. “RISC-V can unite other companies to jointly build an open-source ecosystem, complementing rather than opposing the existing CUDA ecosystem.” He further added that promoting standards is not the goal but a means; only by seeing sufficiently large dividends can everyone set aside their small selfish interests and gather more companies to form a follow-up effect.

Zhang Xiaodong, Chairman of the Wuzhen Think Tank, observed that standards that are “well done” often start from clear demands and products and are then established on the basis of the resulting ecosystem; such standards are more likely to succeed. Conversely, if a standard is created out of thin air and product developers are then expected to comply, the challenge is greater than developing products without standards.

Openness and flexibility, high scalability, advantages in power consumption and efficiency, and ecosystem and community support are the main advantages of building AI computing power on RISC-V. At the same time, the challenges facing the RISC-V + AI ecosystem stem from ecosystem fragmentation, insufficient resource investment, lack of organizational coordination, and weak collaboration between industry, academia, and research.

AI computing scenarios represented by servers are currently one of the primary application target scenarios for RISC-V. In this scenario, the RISC-V industry is not yet fully prepared; the most critical point is that some benchmark products are still needed to lead the development of the ecosystem.

Dr. Meng Jianyi pointed out that in AI computing scenarios, RISC-V benchmark products need to possess the following characteristics:

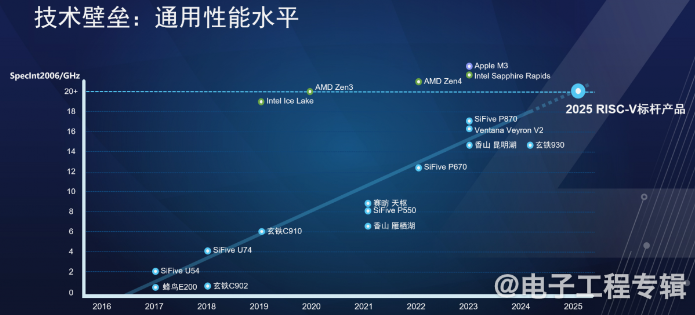

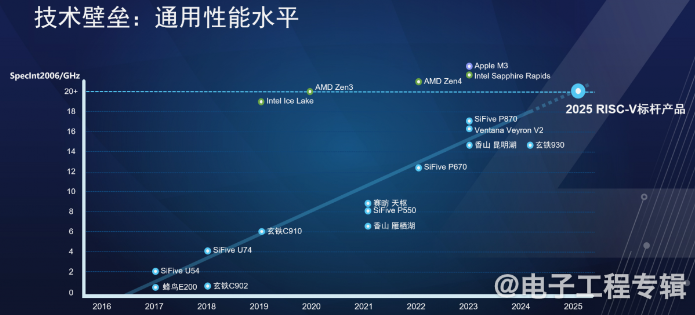

1. Sufficient general computing performance: The benchmark products must achieve computing performance levels comparable to high-end x86 and Arm products (Figure 6).

2. AI computing capabilities tailored to actual application needs: In addition to general computing capabilities, benchmark products must also possess strong AI computing power and achieve better balance in the three key indicators of AI computing scenarios (computing power, memory, and interconnect).

3. Better software ecosystem: Utilize the significant advantages of RISC-V’s unified hardware and software ecosystem, as well as experience in building mature ecosystems for general computing, to construct a more complete software ecosystem for AI computing (Figure 7).

Figure 6: RISC-V chip’s general performance level is continuously improving.

Figure 7: Although the RISC-V general computing ecosystem has gradually matured, continuous investment is still needed.

In terms of application scenarios, in addition to continuously increasing its market share in the embedded processor field, RISC-V’s applications in AI acceleration, edge computing, and smart terminals are also beginning to emerge, and through vector, matrix, and other dedicated acceleration instruction extensions, it continues to enhance its support for AI computing, promising to become the native architecture for AI.

Although RISC-V has broad prospects, to enter high-end application scenarios, its software and hardware ecosystem still needs further improvement. For example, in terms of hardware, the richness of RISC-V products is still insufficient, and their maturity and competitiveness need to be proven over time; in terms of software, RISC-V needs to continuously improve and optimize compatibility in development tools, operating systems, middleware, and application software. Additionally, due to RISC-V’s open characteristics, companies may face challenges related to copyright protection and business models when using and releasing RISC-V products.

Therefore, for RISC-V to become a mainstream architecture in high-end application scenarios, it still requires time for refinement and accumulation, and upstream and downstream companies in the industrial chain need to strengthen their confidence and investment, especially in software ecosystem construction, to promote the early landing of RISC-V in high-end application scenarios.

The automotive industry is another sector that RISC-V aims to capture. The longer product lifecycle of automotive products, coupled with higher safety and quality requirements, will inevitably lead to the gradual adoption of RISC-V products. Correspondingly, the automotive industry has been seeking solutions that can reduce costs and achieve differentiation. As new IP providers enter the market with improved PPA and more innovative safety solutions, RISC-V can achieve this goal by promoting more intense competition.

However, it must be acknowledged that the software ecosystem, the PPA of core application IP, and the overall maturity needed to support these industry applications still have shortcomings. More participation from system manufacturers, and the experience they can pass on, is also needed to accelerate the maturity of IP and enhance product competitiveness.

Faster and Steadier Commercialization

The pace of RISC-V development and commercialization varies across application fields. In the server field, there is still a lot of work to be done in building RISC-V IP platforms and improving performance and robustness. For example, RISC-V International is defining a server platform that, in addition to different types of cores, also requires compatible buses and peripheral devices, so that it can provide a one-stop, end-to-end solution for servers.

“We are now entering a stage where the ecosystem is already very mature in terms of compilers, libraries, debugging and analysis tools, security, kernels, distributions, and language runtimes. The biggest challenge is how to make more audiences aware of this increasingly mature ecosystem, thereby establishing confidence in the readiness of RISC-V for broader applications,” said Bubis.

Therefore, the most critical task is to build a shared understanding of RISC-V’s current maturity by getting customers and users involved with RISC-V products. At the same time, acting quickly on feedback about shortcomings in the ecosystem, and coordinating efforts to address them, will help the ecosystem mature faster and increase confidence in RISC-V’s current state. This will not only accelerate the adoption of RISC-V but also give developers higher-quality feedback, so they can keep focusing on the parts the ecosystem most urgently needs.

In this process, Dr. He Ning shared the following three insights from SiFive:

1. The ability to customize and optimize cores must be strong. When core development and commercialization happen within the same company, the cycle and path are very short, and the core team can adjust immediately to different needs. This adaptability gives products a strong competitive edge and significantly shortens time to market. Competitive products, in turn, drive the adoption of the RISC-V architecture and enhance user confidence in RISC-V.

2. Create influential products. An industry-leading product has a far stronger demonstration effect than a product entering an already fiercely competitive “red ocean” market, so every project should at least have the potential to become an industry-leading product.

3. To promote the ecosystem at scale, the number of application products must be substantial. SiFive’s principle is to adopt the RISC-V architecture wherever possible and to keep commercializing multiple chips in parallel. The higher the proportion of chips adopting the RISC-V architecture, the stronger the scale effect, and the higher the user awareness and acceptance of RISC-V. As mentioned earlier, to speed up the industrialization of RISC-V, SiFive has been promoting the concept of RISC-V Digital Infrastructure (RDI) and emphasizes using vertical industry applications as the entry point for accelerating RDI’s industrialization.

Because the amount of software in a vertical field is relatively manageable and ecosystem compatibility is less difficult to achieve, RISC-V solutions can be deployed there early. The software ecosystem accumulates along the way and can eventually break into scenarios dominated by strong incumbent ecosystems. At the same time, integrating RDI solutions across different vertical fields and systematically showcasing RISC-V’s value and capabilities is essential to turning RISC-V from an “invisible” presence into a “visible” one, which would significantly enhance the influence of RDI and increase confidence in and acceptance of RISC-V across society.

Dr. Meng Jianyi emphasized what he sees as the key to commercializing RISC-V: “Applications Define Products.” He explained that in new application scenarios such as AI, the demands that applications place on software and hardware change frequently and significantly. Therefore, for products to be commercialized successfully in these scenarios, it is crucial to change the traditional relationship of “product-driven applications” into a new relationship of “applications define products.”

This requires companies to leverage the flexibility of the open RISC-V standard and make good use of its ecosystem advantages. By developing software and hardware collaboratively with the entire industrial chain, they can put “applications define products” into practice and quickly deliver and iterate on products that genuinely meet application needs. Only then can breakthroughs be achieved in the industrialization of RISC-V.

This article is copyrighted by “Electronic Engineering Magazine” and may not be reproduced.