Sebastian Raschka says that, among the many effective LLM fine-tuning methods, LoRA remains his top choice.

LoRA (Low-Rank Adaptation) is a popular technique for fine-tuning large language models (LLMs), first proposed by Microsoft researchers in the paper “LoRA: Low-Rank Adaptation of Large Language Models”. Unlike full fine-tuning, LoRA does not update all of the network's parameters. Instead, it keeps the pretrained weights frozen and trains only a small pair of low-rank matrices for each adapted layer, which significantly reduces the computational resources needed to train the model.
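
In equation form, a LoRA-adapted linear layer can be sketched as follows. This writes inputs as row vectors $x$ and uses a plain scaling factor $\alpha$; the original paper instead scales the update by $\alpha/r$. The pretrained weight $W_0$ stays frozen, and only $A$ and $B$ are trained:

$$
h = x W_0 + \alpha \, x A B, \qquad W_0 \in \mathbb{R}^{d_\text{in} \times d_\text{out}}, \quad A \in \mathbb{R}^{d_\text{in} \times r}, \quad B \in \mathbb{R}^{r \times d_\text{out}}, \quad r \ll \min(d_\text{in}, d_\text{out})
$$

A full update of $W_0$ has $d_\text{in} d_\text{out}$ entries. By contrast, $A$ and $B$ together have only $r(d_\text{in} + d_\text{out})$, and that difference is where the savings come from.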

Because LoRA's fine-tuning quality is often comparable to that of full fine-tuning, many people call it a fine-tuning "magic tool". Since its release, many have wanted to implement it from scratch to better understand the research, but suitable tutorials were scarce. Now one has arrived.

The tutorial's author is the well-known machine learning and AI researcher Sebastian Raschka, who says that LoRA is still his top choice among the many effective LLM fine-tuning methods. He wrote a blog post titled “Code LoRA From Scratch” that builds LoRA from the ground up, which he considers a great way to learn.

In simple terms, this article introduces Low-Rank Adaptation (LoRA) by writing code from scratch. In the experiment, Sebastian fine-tuned the DistilBERT model for a classification task.

In the comparison with traditional fine-tuning, the best LoRA configuration reached a test accuracy of 92.39%. That clearly beats fine-tuning only the last two layers of the model, which reached 86.22% test accuracy.

How did Sebastian achieve this? Let’s read on.

Writing LoRA From Scratch

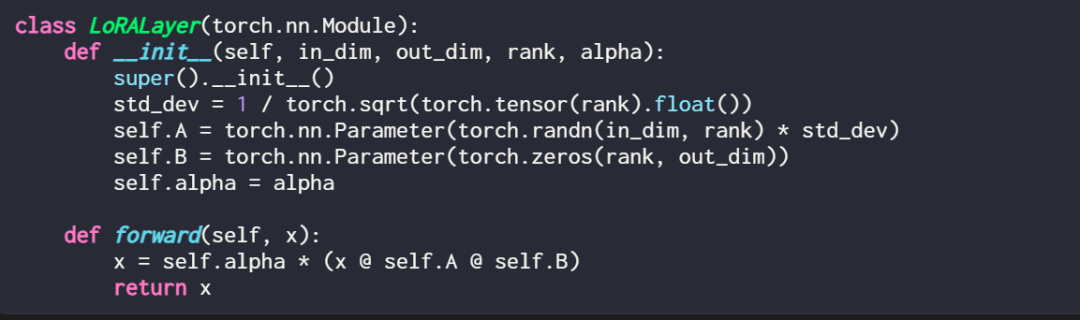

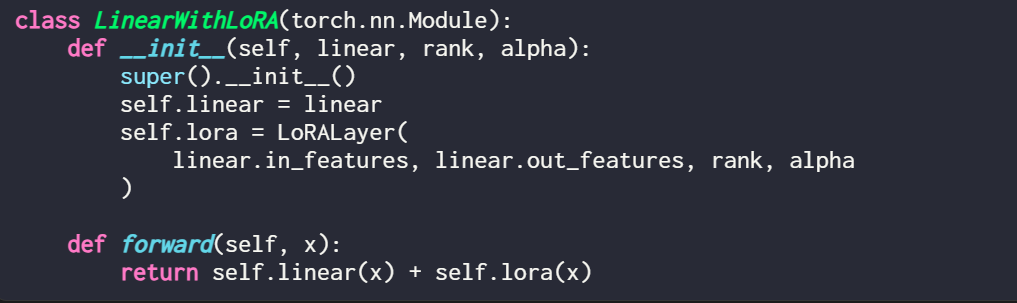

Expressing a LoRA layer in code is as follows:

Here, in_dim is the input dimension of the layer you want to modify with LoRA, and out_dim is that layer's output dimension. The LoRA rank sets the inner dimension of the two matrices (A is in_dim × rank and B is rank × out_dim), so a smaller rank means fewer trainable parameters. The code also adds a hyperparameter, the scaling factor alpha. A higher alpha means a larger adjustment to the model's behavior, and a lower alpha means a smaller one. Finally, this article initializes matrix A with small random values and matrix B with zeros. As a result, the LoRA update is zero at the start of training, and the model initially behaves exactly like the pretrained one.
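
The LoRA layer code is not reproduced in this text. The sketch below follows the description above: A is random, B is zero, and the output is scaled by alpha. It is written in PyTorch. The 1/sqrt(rank) scaling of A's initial values and the example dimensions are assumptions, not necessarily the article's exact choices:

```python
import torch
import torch.nn as nn


class LoRALayer(nn.Module):
    """Low-rank update alpha * (x @ A @ B); a sketch, not the article's exact code."""

    def __init__(self, in_dim: int, out_dim: int, rank: int, alpha: float):
        super().__init__()
        # A: small random values (the 1/sqrt(rank) scale is an assumed choice)
        std_dev = 1.0 / rank ** 0.5
        self.A = nn.Parameter(torch.randn(in_dim, rank) * std_dev)
        # B: zeros, so the update is exactly zero before training
        self.B = nn.Parameter(torch.zeros(rank, out_dim))
        self.alpha = alpha

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Project down to `rank` dims, back up to out_dim, then scale by alpha
        return self.alpha * (x @ self.A @ self.B)


# Usage: a batch of 4 inputs with 16 features, mapped to 32 output features
lora = LoRALayer(in_dim=16, out_dim=32, rank=4, alpha=8.0)
out = lora(torch.randn(4, 16))
print(out.shape)               # torch.Size([4, 32])
print(out.abs().sum().item())  # 0.0, because B starts at zero
```

Because B starts at zero, the layer's output stays all zeros until training updates B.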

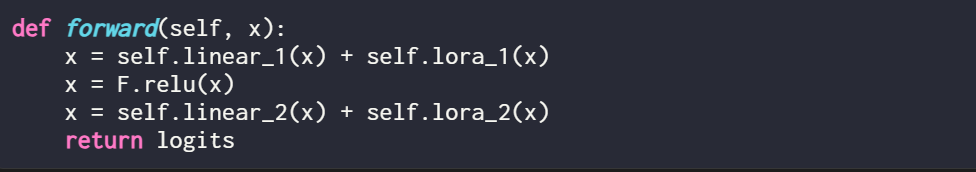

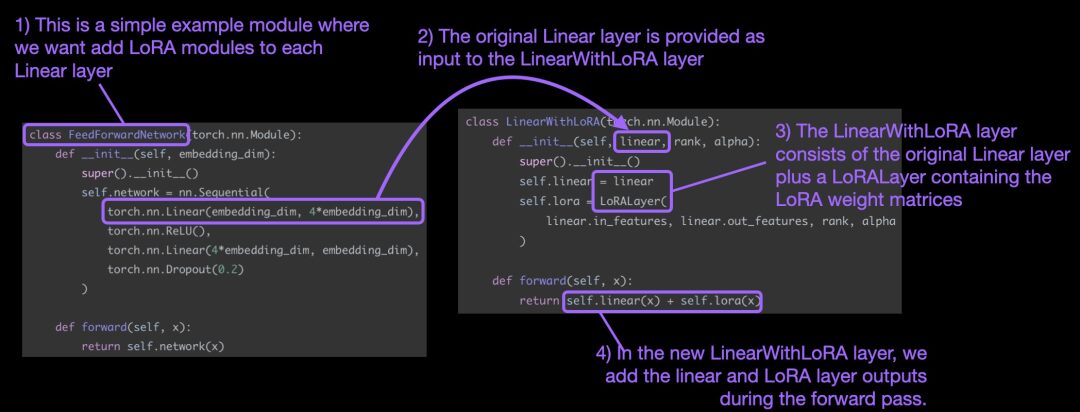

It is worth noting that LoRA is typically applied to the linear (feedforward) layers of a neural network. Consider a simple PyTorch model or module with two linear layers, such as the feedforward module of a Transformer block. Its forward method can be written as:
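
The module's code is not shown in this text. Here is a minimal sketch using a hypothetical FeedForward module with a ReLU between the two linear layers. The activation and dimensions are assumptions:

```python
import torch
import torch.nn as nn


class FeedForward(nn.Module):
    """Hypothetical module with two linear layers (e.g. a Transformer feedforward block)."""

    def __init__(self, d_model: int, d_hidden: int):
        super().__init__()
        self.linear_1 = nn.Linear(d_model, d_hidden)
        self.linear_2 = nn.Linear(d_hidden, d_model)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.linear_1(x)
        x = torch.relu(x)  # the choice of activation is an assumption
        x = self.linear_2(x)
        return x


ff = FeedForward(d_model=16, d_hidden=64)
y = ff(torch.randn(2, 16))
print(y.shape)  # torch.Size([2, 16])
```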

When using LoRA, LoRA updates are typically added to the outputs of these linear layers, resulting in the following code:
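
Below is a sketch of the LoRA-augmented forward pass for a hypothetical two-layer module. So that it runs on its own, it re-declares a compact LoRALayer: random A, zero B, and output scaled by alpha, as described earlier. The names and dimensions are illustrative assumptions. Because B is initialized to zero, the output at initialization matches the plain forward pass:

```python
import torch
import torch.nn as nn


class LoRALayer(nn.Module):
    # Compact LoRA update: alpha * (x @ A @ B), with A random and B zero
    def __init__(self, in_dim, out_dim, rank, alpha):
        super().__init__()
        self.A = nn.Parameter(torch.randn(in_dim, rank) / rank ** 0.5)
        self.B = nn.Parameter(torch.zeros(rank, out_dim))
        self.alpha = alpha

    def forward(self, x):
        return self.alpha * (x @ self.A @ self.B)


class FeedForwardWithLoRA(nn.Module):
    """Hypothetical two-layer module whose linear outputs get LoRA updates added."""

    def __init__(self, d_model, d_hidden, rank=4, alpha=8.0):
        super().__init__()
        self.linear_1 = nn.Linear(d_model, d_hidden)
        self.lora_1 = LoRALayer(d_model, d_hidden, rank, alpha)
        self.linear_2 = nn.Linear(d_hidden, d_model)
        self.lora_2 = LoRALayer(d_hidden, d_model, rank, alpha)

    def forward(self, x):
        x = self.linear_1(x) + self.lora_1(x)  # original output + low-rank update
        x = torch.relu(x)
        x = self.linear_2(x) + self.lora_2(x)
        return x


torch.manual_seed(0)
model = FeedForwardWithLoRA(d_model=16, d_hidden=64)
x = torch.randn(2, 16)
plain = model.linear_2(torch.relu(model.linear_1(x)))
print(torch.allclose(model(x), plain))  # True at initialization, since B = 0
```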

If you want to implement LoRA by modifying an existing PyTorch model, a simple method is to replace each linear layer with the LinearWithLoRA layer:
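
Here is a minimal sketch of such a wrapper. It assumes a LinearWithLoRA that reuses the original nn.Linear and adds a LoRA update to its output. A loop then swaps every nn.Linear in a toy nn.Sequential model. The toy model and dimensions are illustrative assumptions, not the article's setup:

```python
import torch
import torch.nn as nn


class LoRALayer(nn.Module):
    # Low-rank update alpha * (x @ A @ B), with A random and B zero
    def __init__(self, in_dim, out_dim, rank, alpha):
        super().__init__()
        self.A = nn.Parameter(torch.randn(in_dim, rank) / rank ** 0.5)
        self.B = nn.Parameter(torch.zeros(rank, out_dim))
        self.alpha = alpha

    def forward(self, x):
        return self.alpha * (x @ self.A @ self.B)


class LinearWithLoRA(nn.Module):
    """Wraps an existing nn.Linear and adds a LoRA update to its output."""

    def __init__(self, linear: nn.Linear, rank: int, alpha: float):
        super().__init__()
        self.linear = linear  # original (pretrained) layer, reused as-is
        self.lora = LoRALayer(linear.in_features, linear.out_features, rank, alpha)

    def forward(self, x):
        return self.linear(x) + self.lora(x)


# Replace every nn.Linear inside a toy nn.Sequential model
model = nn.Sequential(nn.Linear(16, 64), nn.ReLU(), nn.Linear(64, 2))
for i in range(len(model)):
    if isinstance(model[i], nn.Linear):
        model[i] = LinearWithLoRA(model[i], rank=4, alpha=8.0)
print(model)
```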

These concepts are summarized in the following diagram:

To apply LoRA, this article replaces the existing linear layers in the neural network with the LinearWithLoRA layer that combines the original linear layer and LoRALayer.

How to Get Started with LoRA Fine-Tuning

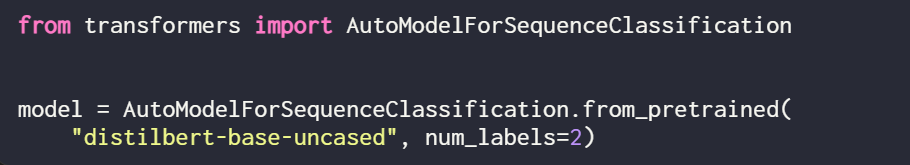

LoRA can be applied to many kinds of models, such as GPT-style language models or image-generation models. To keep the illustration simple, this article uses a small BERT model (DistilBERT) for text classification.

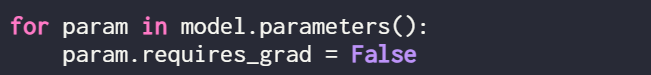

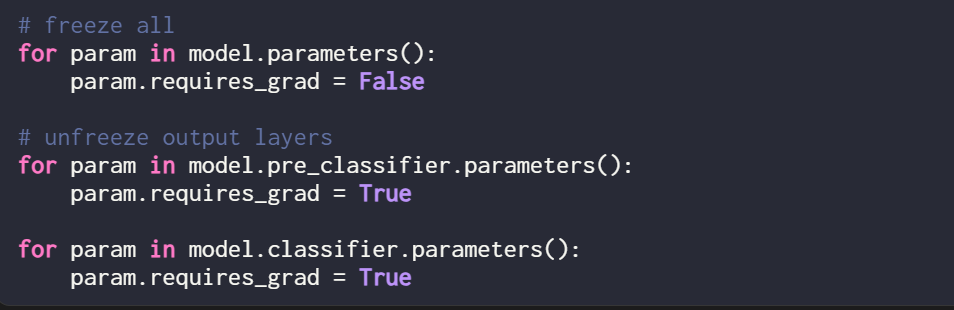

Since this article trains only the new LoRA weights, all existing model parameters must be frozen by setting requires_grad to False. This happens before the LoRA layers are added, so the LoRA parameters created afterwards remain trainable:
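
In PyTorch, freezing amounts to a loop over model.parameters(). To stay self-contained, the sketch below uses a small stand-in model instead of DistilBERT (an assumption). It then confirms that no parameters remain trainable:

```python
import torch.nn as nn

# Stand-in for the pretrained model (the article uses DistilBERT)
model = nn.Sequential(nn.Linear(16, 64), nn.ReLU(), nn.Linear(64, 2))

# Freeze every existing parameter so only LoRA weights added later are trained
for param in model.parameters():
    param.requires_grad = False

num_trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
print(num_trainable)  # 0
```

Parameters created afterwards, such as new LoRA matrices wrapped in nn.Parameter, default to requires_grad=True. That is why freezing comes first.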

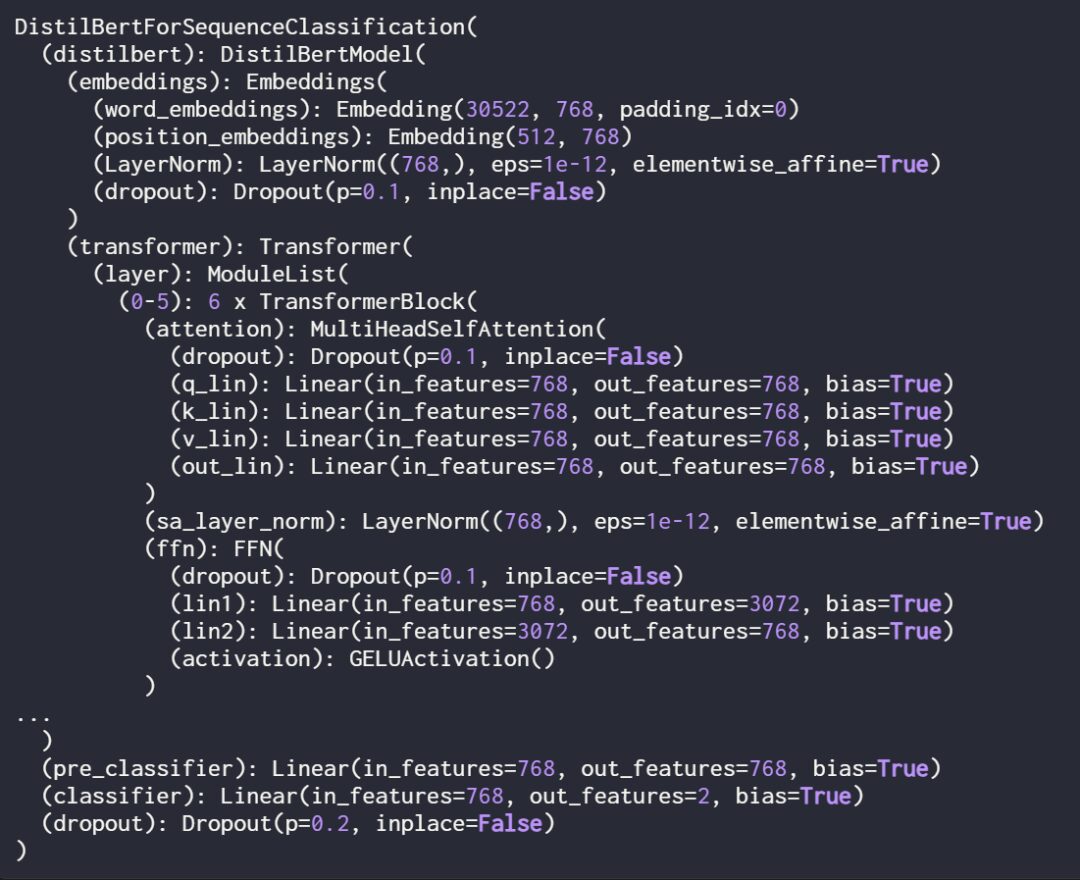

Next, check the model’s structure using print(model):

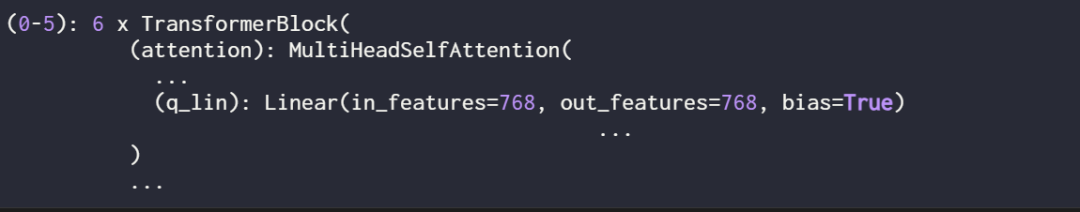

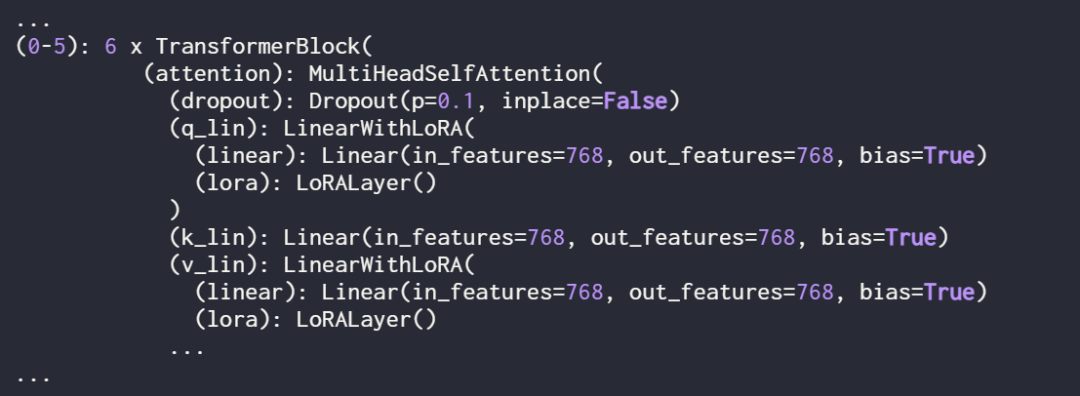

The output shows that the model consists of 6 transformer blocks, each containing linear layers:

Additionally, the model has two linear output layers:

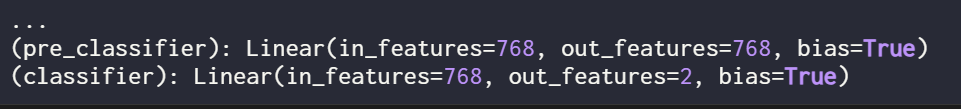

By defining the following assignment function and loop, LoRA can be selectively enabled for these linear layers:
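
The assignment function and loop are not reproduced here. One way to write them is sketched below. It assumes functools.partial to fix the rank and alpha, hypothetical on/off switches (lora_query, lora_key, lora_value), and a toy block whose attribute names only loosely mirror DistilBERT's attention projections. All values are illustrative:

```python
from functools import partial

import torch
import torch.nn as nn


class LoRALayer(nn.Module):
    # Low-rank update alpha * (x @ A @ B), with A random and B zero
    def __init__(self, in_dim, out_dim, rank, alpha):
        super().__init__()
        self.A = nn.Parameter(torch.randn(in_dim, rank) / rank ** 0.5)
        self.B = nn.Parameter(torch.zeros(rank, out_dim))
        self.alpha = alpha

    def forward(self, x):
        return self.alpha * (x @ self.A @ self.B)


class LinearWithLoRA(nn.Module):
    # Original linear output plus the LoRA update
    def __init__(self, linear, rank, alpha):
        super().__init__()
        self.linear = linear
        self.lora = LoRALayer(linear.in_features, linear.out_features, rank, alpha)

    def forward(self, x):
        return self.linear(x) + self.lora(x)


class ToyBlock(nn.Module):
    # Hypothetical block; attribute names loosely mirror attention projections
    def __init__(self, d=16):
        super().__init__()
        self.q_lin = nn.Linear(d, d)
        self.k_lin = nn.Linear(d, d)
        self.v_lin = nn.Linear(d, d)


blocks = nn.ModuleList([ToyBlock() for _ in range(6)])

# Hypothetical switches choosing which linear layers receive LoRA
lora_query, lora_key, lora_value = True, False, True
assign_lora = partial(LinearWithLoRA, rank=8, alpha=16)

for block in blocks:
    if lora_query:
        block.q_lin = assign_lora(block.q_lin)
    if lora_key:
        block.k_lin = assign_lora(block.k_lin)
    if lora_value:
        block.v_lin = assign_lora(block.v_lin)
```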

Calling print(model) again shows the updated structure:

As seen above, the linear layers have been successfully replaced by the LinearWithLoRA layers.

Training the model with the default hyperparameters yields the following performance on the IMDb movie review classification dataset:

- Training Accuracy: 92.15%
- Validation Accuracy: 89.98%
- Testing Accuracy: 89.44%

In the next section, this article will compare these LoRA fine-tuning results with traditional fine-tuning results.

Comparison with Traditional Fine-Tuning Methods

In the previous section, LoRA achieved a testing accuracy of 89.44% under default settings. How does this compare to traditional fine-tuning methods?

For comparison, this article ran another experiment in which the DistilBERT model was trained while updating only its last two layers. This was done by freezing all model weights and then unfreezing the two linear output layers:
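
Here is a sketch of this freeze-then-unfreeze pattern. It uses a hypothetical ToyClassifier in place of DistilBERT, and its two output layers are named pre_classifier and classifier here for illustration. The layer sizes are assumptions:

```python
import torch.nn as nn


class ToyClassifier(nn.Module):
    """Hypothetical stand-in: a 'body' plus two linear output layers."""

    def __init__(self):
        super().__init__()
        self.body = nn.Sequential(nn.Linear(16, 16), nn.ReLU(), nn.Linear(16, 16))
        self.pre_classifier = nn.Linear(16, 16)
        self.classifier = nn.Linear(16, 2)


model = ToyClassifier()

# 1) Freeze all weights
for param in model.parameters():
    param.requires_grad = False

# 2) Unfreeze only the two linear output layers
for layer in (model.pre_classifier, model.classifier):
    for param in layer.parameters():
        param.requires_grad = True

trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
print(trainable)  # 306 = (16*16 + 16) + (16*2 + 2)
```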

The classification performance obtained by only training the last two layers is as follows:

- Training Accuracy: 86.68%
- Validation Accuracy: 87.26%
- Testing Accuracy: 86.22%

The results show that LoRA outperforms the traditional approach of training only the last two layers while training about 4 times fewer parameters. Fine-tuning all layers requires updating about 450 times more parameters than the LoRA setting, yet it improves test accuracy by only about 2%.
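
To see why the parameter savings are so large, consider the count for a single linear layer. The sketch below compares a full weight matrix with the two LoRA matrices. The 768 × 768 shape (DistilBERT's hidden size) and rank 8 are illustrative choices. The article's 4× and 450× figures depend on which layers receive LoRA, so this one-layer example does not reproduce them:

```python
def full_params(in_dim: int, out_dim: int) -> int:
    # Entries in a linear layer's weight matrix (bias ignored)
    return in_dim * out_dim


def lora_params(in_dim: int, out_dim: int, rank: int) -> int:
    # A is in_dim x rank, B is rank x out_dim
    return rank * (in_dim + out_dim)


print(full_params(768, 768))                               # 589824
print(lora_params(768, 768, 8))                            # 12288
print(full_params(768, 768) // lora_params(768, 768, 8))   # 48
```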

Optimizing LoRA Configuration

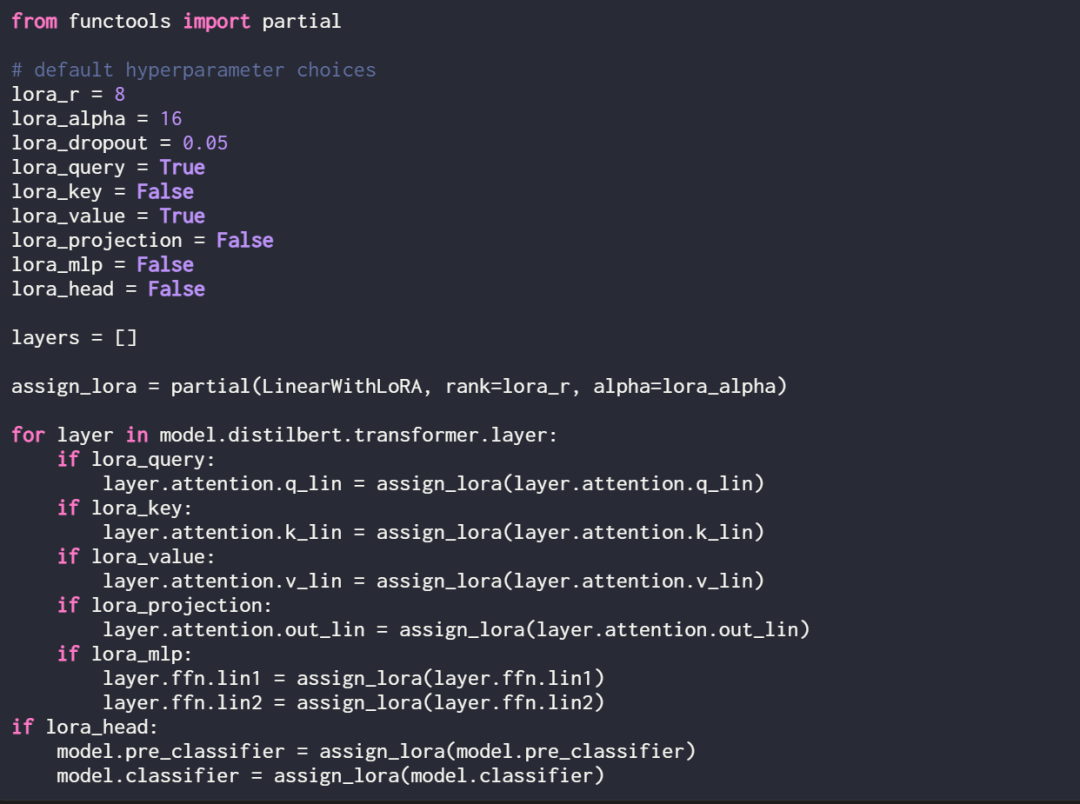

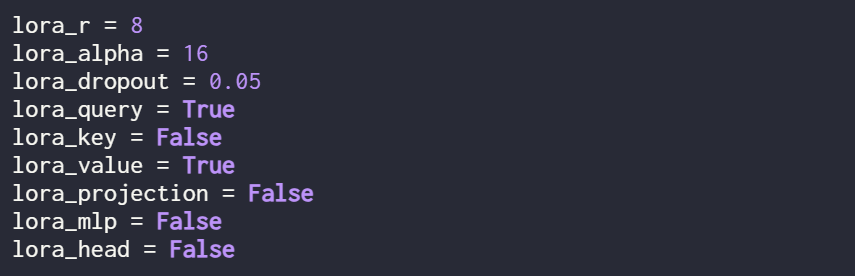

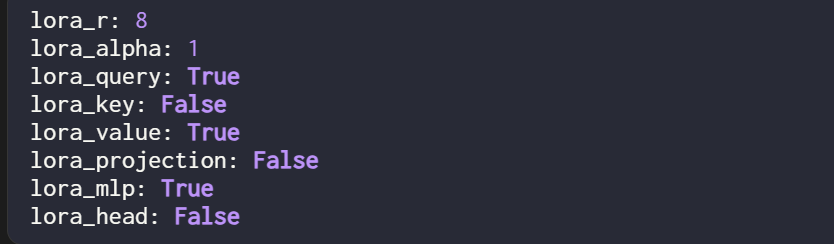

The results above were all obtained with LoRA's default settings, using the following hyperparameters:

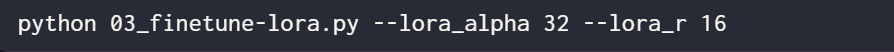

To try different hyperparameter configurations, you can use the following command:
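
The command itself is not reproduced in this text. As a hypothetical sketch, the snippet below shows one way to organize a sweep in Python by enumerating configurations with itertools.product. The key names and candidate values are assumptions, and each resulting dict would be passed to a training run (not shown):

```python
from itertools import product

# Hypothetical search space; values are illustrative, not the article's grid
search_space = {
    "lora_r": [1, 4, 8, 16],
    "lora_alpha": [1, 4, 8, 16],
    "lora_query": [True],
    "lora_value": [True],
    "lora_mlp": [False, True],
}

# One dict per combination, keyed by hyperparameter name
configs = [dict(zip(search_space, values)) for values in product(*search_space.values())]
print(len(configs))  # 32
print(configs[0])
```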

The best-performing hyperparameter configuration was as follows:

Under this configuration, the results are as follows:

- Validation Accuracy: 92.96%
- Testing Accuracy: 92.39%

Notably, the LoRA setting trains only a small fraction of the parameters (500k vs. 66M), yet its accuracy is still slightly higher than that of full fine-tuning.

Original link: https://lightning.ai/lightning-ai/studios/code-lora-from-scratch?continueFlag=f5fc72b1f6eeeaf74b648b2aa8aaf8b6
