©PaperWeekly Original · Author | Su Jianlin

Affiliation | Moonshot AI

Research Interests | NLP, Neural Networks

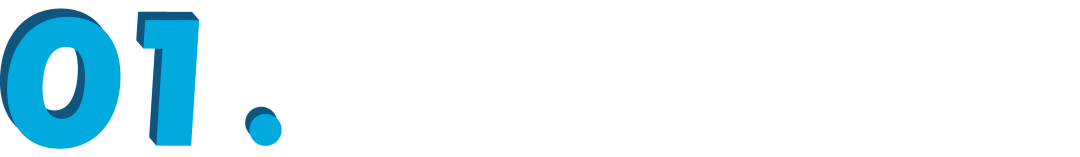

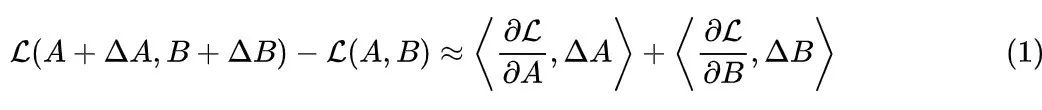

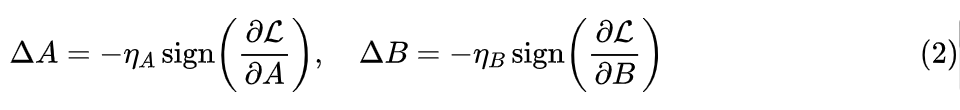

Assigning different learning rates to the two matrices of LoRA can further enhance its performance.

This conclusion comes from the recent paper “LoRA+: Efficient Low-Rank Adaptation of Large Models” [1] (hereafter “LoRA+”). At first glance it may not seem particularly special: assigning different learning rates amounts to introducing a new hyperparameter, and introducing and tuning an extra hyperparameter generally brings some improvement.

What is special about “LoRA+” is that it justifies this necessity theoretically and asserts that, at the optimum, the learning rate of the right matrix must be greater than that of the left matrix. In short, “LoRA+” is a classic example of theory guiding training and then proving effective in practice, and it is worth studying carefully.

Conclusion Analysis

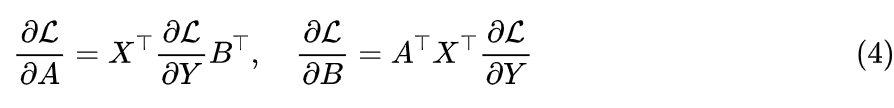

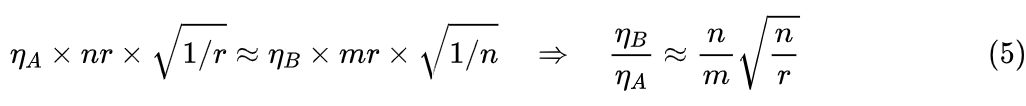

The conclusion of “LoRA+” is: to bring LoRA’s performance as close to optimal as possible, the learning rate of weight B (the right matrix) should be greater than that of weight A (the left matrix).
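In practice, this conclusion comes down to placing A and B in separate optimizer parameter groups. Below is a minimal sketch, not the paper's implementation. It assumes that parameter names contain the substrings `lora_A` and `lora_B` (a hypothetical naming convention, adjust to your own model), and the helper `build_lora_param_groups` and its `lr_ratio` argument are introduced here purely for illustration. The returned list has the same shape as the per-parameter option groups that PyTorch optimizers accept.

```python
from typing import Any, Dict, Iterable, List, Tuple


def build_lora_param_groups(
    named_params: Iterable[Tuple[str, Any]],
    base_lr: float,
    lr_ratio: float,
) -> List[Dict[str, Any]]:
    """Split LoRA parameters into two groups so that B gets the larger learning rate.

    Assumption: names containing "lora_A" / "lora_B" identify the two matrices.
    """
    if lr_ratio <= 1.0:
        # The conclusion above requires lr_B > lr_A.
        raise ValueError("lr_ratio must exceed 1 so that lr_B > lr_A")

    group_a: List[Any] = []
    group_b: List[Any] = []
    for name, param in named_params:
        if "lora_A" in name:
            group_a.append(param)
        elif "lora_B" in name:
            group_b.append(param)
        # Everything else (e.g. frozen pretrained weights) is left out.

    return [
        {"params": group_a, "lr": base_lr},             # matrix A: base learning rate
        {"params": group_b, "lr": base_lr * lr_ratio},  # matrix B: larger learning rate
    ]


# Toy usage with placeholder strings standing in for real parameter tensors.
named = [
    ("layer0.lora_A.weight", "A0"),
    ("layer0.lora_B.weight", "B0"),
    ("layer0.weight", "W0"),
]
groups = build_lora_param_groups(named, base_lr=1e-4, lr_ratio=4.0)
```

With a real PyTorch model, one would pass `model.named_parameters()` as `named_params` and hand the result to an optimizer, for example `torch.optim.AdamW(groups)`. The ratio of 4 used here is an arbitrary illustrative value, not a recommendation taken from the text.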

Numerical Stability

Equivalent Contribution

Quick Derivation

Article Summary

References
