Source: Machine Heart


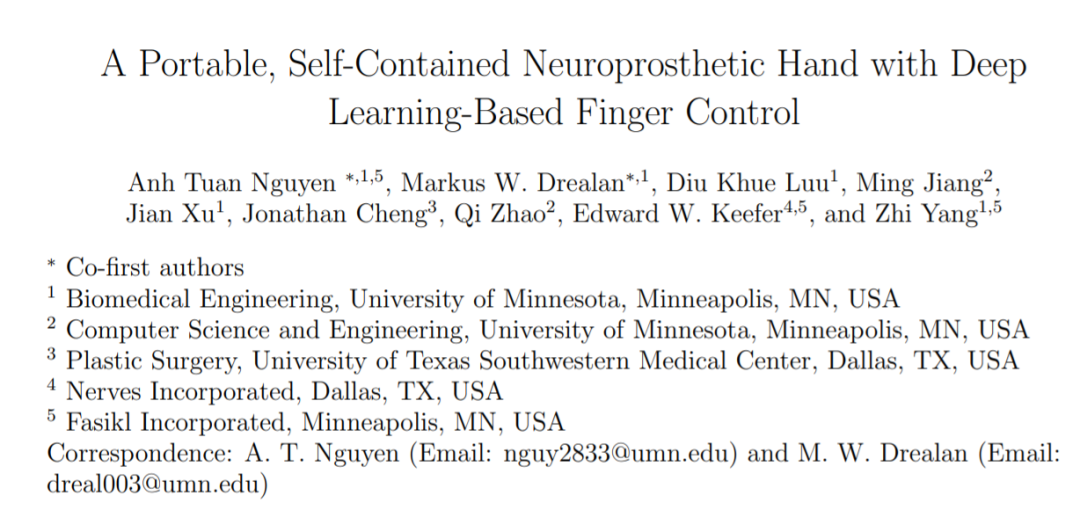

This article introduces an experimental neural prosthetic controlled by embedded deep learning, developed by researchers from the University of Minnesota and other institutions.

How else can Jetson Nano be used?

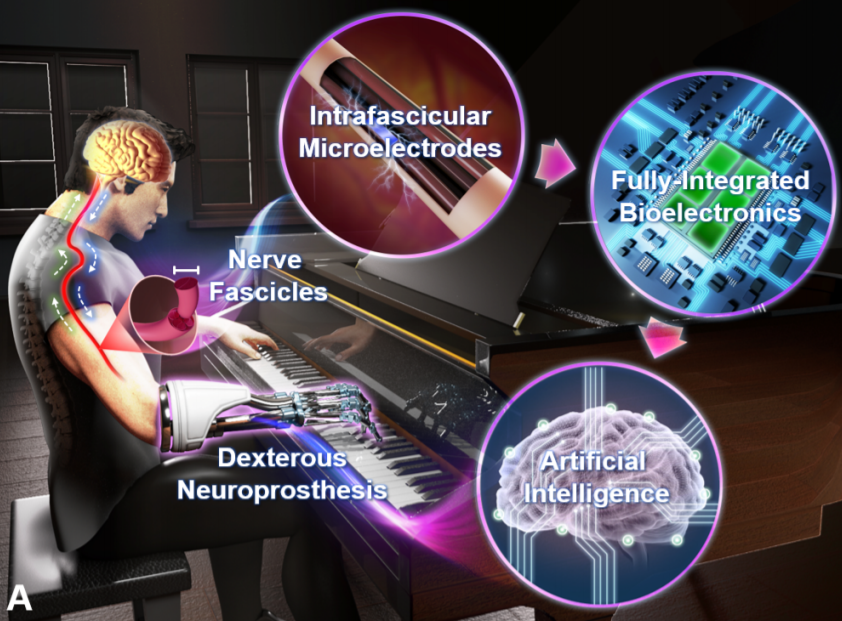

In recent years, deep learning-based neural decoders have become the leading approach to dexterous, intuitive control of neural prosthetics, and researchers have envisioned a wide range of medical applications for such devices.

However, because deep learning is computationally demanding, few studies have brought it into clinical use. The development of edge computing devices offers a potential solution to this problem.

In a new paper, researchers from the University of Minnesota and other institutions report an experimental neural prosthetic controlled by embedded deep learning. The study's neural decoder is based on a recurrent neural network (RNN) architecture and is deployed on an NVIDIA Jetson Nano. The Jetson Nano offers substantial AI computing power in a very compact form factor, allowing the prosthetic user to control each finger in real time while moving about freely.
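Real-time decoding on an edge device generally requires buffering the incoming multichannel nerve signal and handing the decoder fixed-length, overlapping windows at a steady rate. The minimal sketch below illustrates that buffering step in plain Python. The `SlidingWindow` class, the channel count, and the window and hop sizes are hypothetical choices for illustration, not details taken from the paper.

```python
from collections import deque
from typing import Deque, List, Optional, Sequence


class SlidingWindow:
    """Hypothetical helper: buffers multichannel samples and emits
    overlapping windows of `window` samples every `hop` samples."""

    def __init__(self, n_channels: int, window: int, hop: int) -> None:
        if not 0 < hop <= window:
            raise ValueError("require 0 < hop <= window")
        self.n_channels = n_channels
        self.window = window
        self.hop = hop
        # A deque with maxlen discards the oldest sample automatically.
        self._buf: Deque[List[float]] = deque(maxlen=window)
        self._since_emit = 0  # samples received since the last emitted window

    def push(self, sample: Sequence[float]) -> Optional[List[List[float]]]:
        """Add one sample (one value per channel); return a window when one is due."""
        if len(sample) != self.n_channels:
            raise ValueError("sample has the wrong number of channels")
        self._buf.append(list(sample))
        self._since_emit += 1
        if len(self._buf) == self.window and self._since_emit >= self.hop:
            self._since_emit = 0
            return list(self._buf)  # snapshot: window x channels
        return None


# Usage: 8 channels, 100-sample windows, 50-sample hop (all assumed values).
sw = SlidingWindow(n_channels=8, window=100, hop=50)
windows = []
for t in range(300):
    w = sw.push([float(t)] * 8)
    if w is not None:
        windows.append(w)  # in a real loop, hand w to the decoder here

print(len(windows))      # 5 (emitted after samples 99, 149, 199, 249, 299)
print(windows[1][0][0])  # 50.0 (the second window starts at sample 50)
```

The hop size sets how often the decoder produces a new prediction, so together with the decoder's own inference time it bounds the end-to-end control latency.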

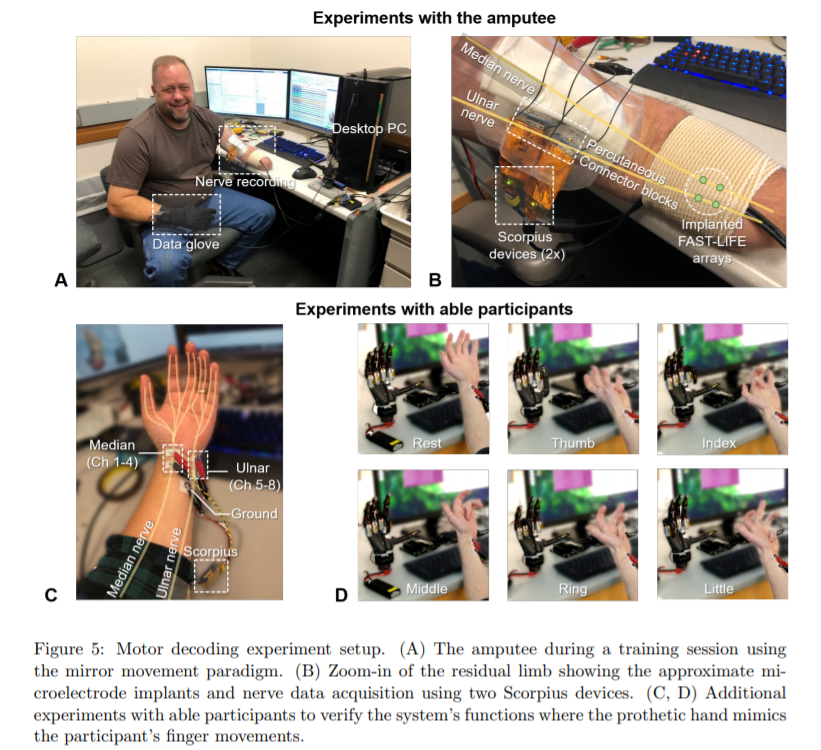

The researchers evaluated the system on a volunteer with a transradial amputation, using peripheral nerve signals. The results showed that the system provides robust, accurate (95-99%), and low-latency (50-120 ms) control of individual fingers, both in the laboratory and in real-world environments.
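The summary figures leave the metric definitions implicit. A minimal sketch, assuming accuracy is the fraction of decoding windows whose predicted finger matches the labelled finger and latency is the delay from the onset of an intended movement to the decoder's response, could compute them as follows. The function names and the toy numbers are hypothetical and are not data from the study.

```python
from statistics import median
from typing import Sequence, Tuple


def accuracy(predicted: Sequence[int], actual: Sequence[int]) -> float:
    """Fraction of decoding windows whose predicted finger class matches the label."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need equal-length, non-empty sequences")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


def latency_stats(intent_ms: Sequence[float],
                  response_ms: Sequence[float]) -> Tuple[float, float, float]:
    """(min, median, max) delay between movement intent and the decoder's response."""
    delays = [r - i for i, r in zip(intent_ms, response_ms)]
    return min(delays), median(delays), max(delays)


# Made-up toy data for illustration only (finger classes 0-4, times in ms).
pred = [0, 1, 2, 3, 4, 0, 1, 2, 3, 3]
true = [0, 1, 2, 3, 4, 0, 1, 2, 3, 4]
print(accuracy(pred, true))  # 0.9

intent = [0.0, 1000.0, 2000.0]
response = [60.0, 1110.0, 2085.0]
print(latency_stats(intent, response))  # (60.0, 85.0, 110.0)
```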

The researchers state that, to their knowledge, this is the first portable platform to effectively implement a deep learning neural decoder in a clinical neural prosthetic. With its embedded artificial intelligence, the new prosthetic system could serve as a foundation for new wearable biomedical devices and help accelerate the deployment of deep neural networks in clinical applications.

Paper link: https://arxiv.org/pdf/2103.13452.pdf

This work builds on earlier advances, including the nerve-fascicle microelectrode array proposed by Overstreet et al. in 2019 for interfacing nerve fibers with bioelectronic systems; the Neuronix neural interface microchip designed by Nguyen et al. in 2020 for simultaneous neural recording and stimulation; the optimization of the deep learning motor-decoding paradigm proposed by Luu et al. in 2021 to reduce decoder computational complexity; and the software and hardware implementations of state-of-the-art edge computing platforms.

System Overview

The following figure shows the general structure of the neural decoder for the proposed neural prosthetic:

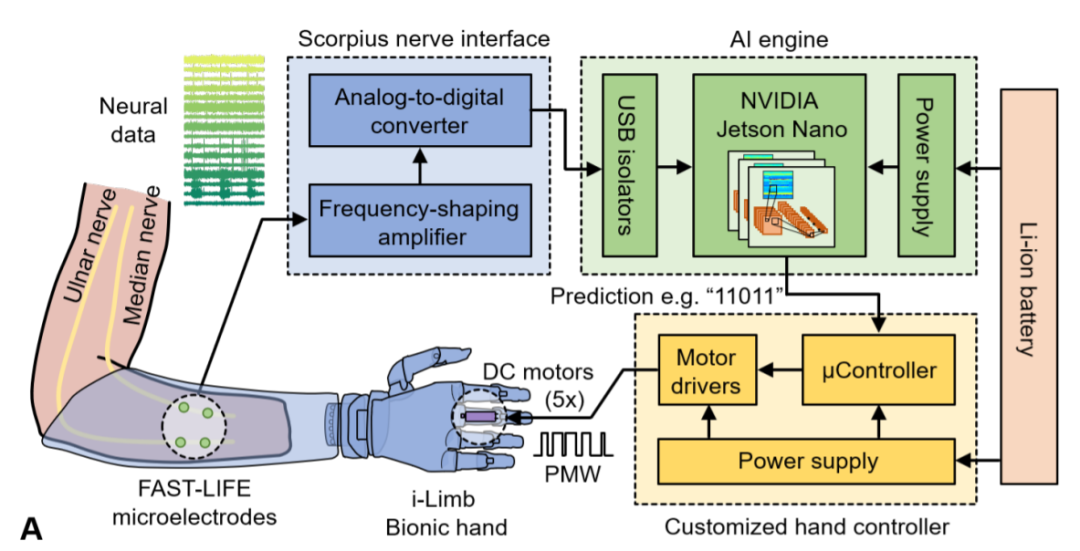
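As a rough, hypothetical illustration of what an RNN-based decoder of this general kind computes, the sketch below runs a plain (Elman) recurrent cell over a window of feature vectors and maps the final hidden state to one score per finger. The class name, the layer sizes, the random placeholder weights, and the per-finger sigmoid read-out are all assumptions; the authors' actual architecture (which may use gated layers such as GRU or LSTM) and trained weights are not reproduced here.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Matrix-vector product for row-major nested lists."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rand_matrix(rows: int, cols: int, rng: random.Random, scale: float = 0.1) -> Matrix:
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class TinyRNNDecoder:
    """Hypothetical decoder: Elman RNN over a window, linear read-out per finger."""

    def __init__(self, n_features: int, n_hidden: int, n_fingers: int = 5, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Random placeholders; a real decoder would load trained weights.
        self.w_in = rand_matrix(n_hidden, n_features, rng)  # input -> hidden
        self.w_rec = rand_matrix(n_hidden, n_hidden, rng)   # hidden -> hidden
        self.b_h = [0.0] * n_hidden
        self.w_out = rand_matrix(n_fingers, n_hidden, rng)  # hidden -> finger score
        self.b_out = [0.0] * n_fingers
        self.n_hidden = n_hidden

    def forward(self, window: Sequence[Sequence[float]]) -> Vector:
        """Step through the window; return one activation score in (0, 1) per finger."""
        h = [0.0] * self.n_hidden
        for x in window:  # one time step per feature vector
            a = matvec(self.w_in, x)
            r = matvec(self.w_rec, h)
            h = [math.tanh(ai + ri + b) for ai, ri, b in zip(a, r, self.b_h)]
        logits = matvec(self.w_out, h)
        return [1.0 / (1.0 + math.exp(-(z + b))) for z, b in zip(logits, self.b_out)]


# Usage: 16 input features per time step and 20 time steps (arbitrary sizes).
decoder = TinyRNNDecoder(n_features=16, n_hidden=32)
window = [[0.01 * (t + c) for c in range(16)] for t in range(20)]
scores = decoder.forward(window)
print([round(s, 3) for s in scores])
```

Pure Python keeps the sketch dependency-free; on a Jetson-class device a trained model would more likely run through a GPU-accelerated framework.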


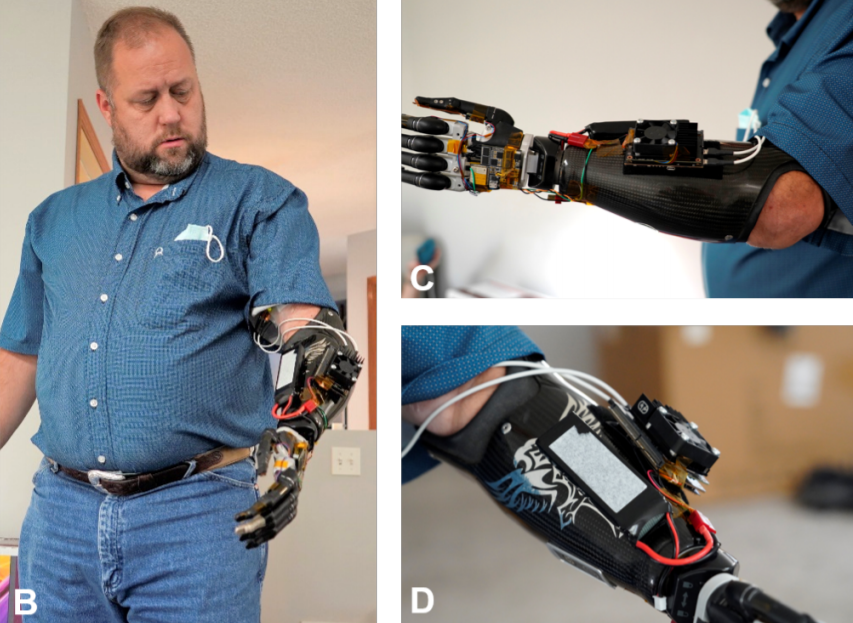

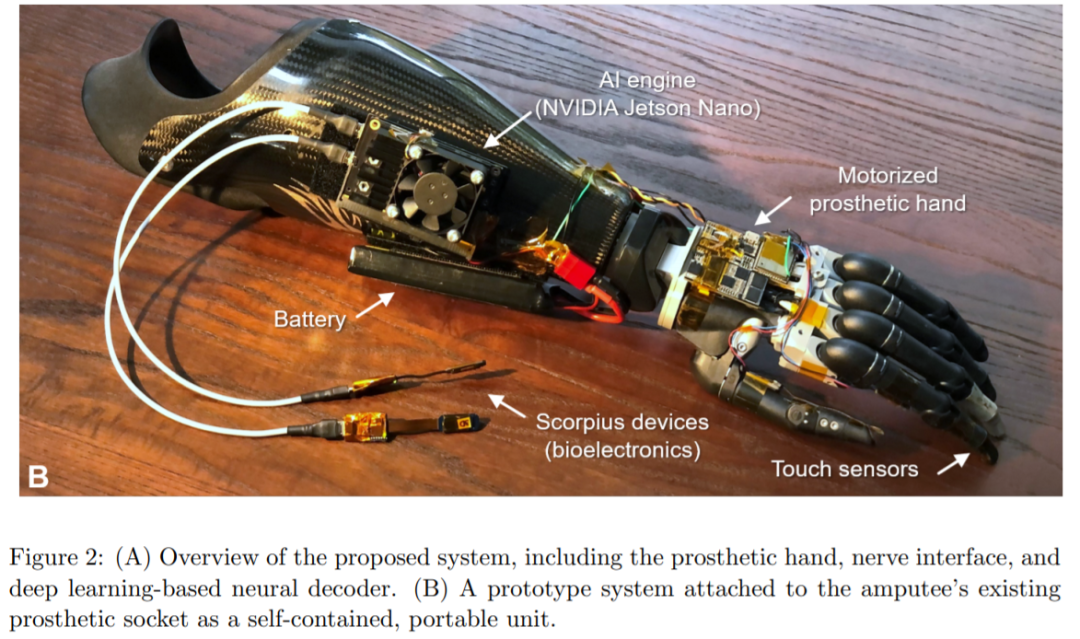

The system includes the Scorpius neural interface, a Jetson Nano with a custom carrier board, a custom arm controller, and a rechargeable lithium battery.

The following image shows a prototype of the system. The AI engine weighs 90 g, and the lithium battery (7.4 V, 2200 mAh) weighs 120 g. In practice, the entire system could be integrated into the prosthetic, replacing its existing EMG (myoelectric) sensors and electronics, so it would have minimal impact on the weight and appearance of the arm.

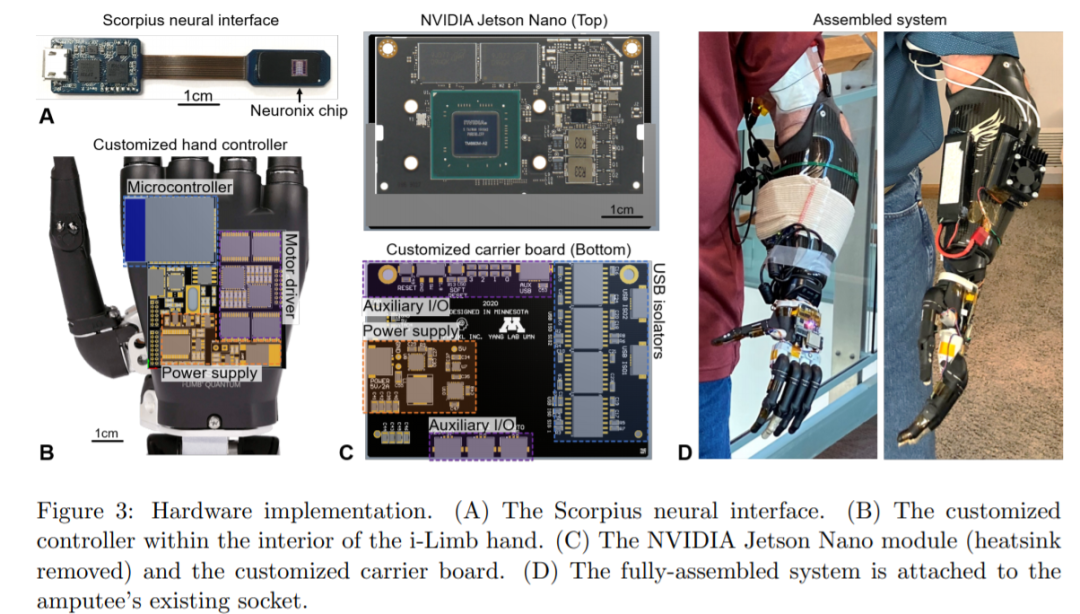
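As a back-of-the-envelope check on the battery figures, the nominal energy of a 7.4 V, 2200 mAh pack and the average power draw implied by the roughly 2-hour and 4-hour runtimes the authors report for the Nano's two power modes work out as follows. This assumes the full nominal capacity is usable, which real packs do not quite deliver, so the implied draws are rough upper estimates.

$$
E = V \times Q = 7.4\ \mathrm{V} \times 2.2\ \mathrm{Ah} = 16.28\ \mathrm{Wh}
$$

$$
\bar{P}_{\text{10 W mode}} \approx \frac{16.28\ \mathrm{Wh}}{2\ \mathrm{h}} \approx 8.1\ \mathrm{W},
\qquad
\bar{P}_{\text{5 W mode}} \approx \frac{16.28\ \mathrm{Wh}}{4\ \mathrm{h}} \approx 4.1\ \mathrm{W}
$$

Both implied averages sit below the corresponding mode's nominal power, which is consistent with the mode names describing a power budget rather than a constant draw.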

Neural data are acquired through the Scorpius neural interface the team proposed previously. Each Scorpius device has 8 recording channels, equipped with frequency-shaping (FS) amplifiers and high-precision analog-to-digital converters (ADCs), and multiple devices can be combined to provide the required number of channels. The FS neural recorder has been shown to acquire ultra-low-noise neural signals while suppressing artifacts. The raw neural data are transmitted directly to the Jetson Nano for further processing.

The core of the system is the AI engine built on the Jetson Nano platform. The researchers designed a custom carrier board that provides power management and I/O connections for the Nano module. The module can run in a 10 W mode (4-core CPU at 1900 MHz, GPU at 1000 MHz) or a 5 W mode (2-core CPU at 918 MHz, GPU at 640 MHz), giving roughly 2 hours and 4 hours of continuous use, respectively, with the current battery.

In this system, the trained deep learning model is deployed on the AI engine, where it translates neural signals in real time into the user's intended movements of individual fingers. The final predictions are sent to the hand controller, which drives the prosthetic hand.

The prosthetic hand is based on the i-Limb prosthetic platform from the UK company Touch Bionics and has five individually actuated fingers. The researchers replaced the i-Limb's default driver with a custom hand controller that directly operates the DC motor housed in each finger according to the deep learning model's predictions. The controller is built around an ESP32 module, a low-power microcontroller.

The following image provides a more detailed view of the hardware implementation of each component:
