This article presents a globally consistent semantic SLAM system (GCSLAM) and a semantic fusion localization subsystem (SF-Loc), which together achieve accurate semantic map construction and robust localization in complex parking environments. The system takes front-facing and surround-view cameras, an IMU, and wheel speed encoders as input. The first part of this work is GCSLAM, which introduces a novel factor graph to optimize poses and the semantic map, combining new error terms based on multi-sensor data and bird’s-eye view (BEV) semantic information. GCSLAM also integrates a global parking space management module that stores and manages parking space observations. The second part, SF-Loc, uses the semantic map constructed by GCSLAM for map-based localization, fusing matching results and odometric poses in a novel factor graph. On two real-world datasets, the system outperforms existing SLAM systems in both robust global localization and precise semantic map construction.

SLAM technology faces several challenges when applied to indoor parking lots, including the absence of GNSS signals and performance issues of low-cost sensors in complex lighting and repetitive texture environments. Existing SLAM systems often perform poorly in handling the complexities of indoor parking lots due to insufficient optimization constraints, especially in large and complex parking areas, leading to localization errors and inaccurate map construction.

To address these issues, a globally consistent semantic SLAM system named GCSLAM is proposed, which optimizes poses and semantic maps through a novel factor graph and a global parking space management module. GCSLAM uses multi-sensor data and BEV semantic information, and introduces new parking space association methods and error terms that reduce the impact of false detections and noise and accurately determine the relationships between parking spaces.

Furthermore, GCSLAM introduces a global vertical error term to constrain the direction of parking spaces and designs a global parking space management module to store and update parking space observations, addressing potential false detections and noise issues caused by the BEV perception module.

After constructing the global map, a semantic fusion localization subsystem SF-Loc is proposed to improve localization accuracy and speed. SF-Loc combines semantic ICP matching and odometry to achieve robust and accurate localization, maintaining good performance even in visually sparse areas. Thus, the combination of GCSLAM and SF-Loc enhances SLAM performance in indoor parking environments, improving the system’s robustness and accuracy.

The experiments were conducted in a complex parking lot with high-density parking spaces. The global map construction and localization results validate the robustness and effectiveness of GCSLAM. Additionally, the localization subsystem SF-Loc was tested on the global map established by GCSLAM, achieving decimeter-level global accuracy. The main contributions of this paper are as follows:

● A globally consistent semantic SLAM system GCSLAM is proposed, based on factor graph optimization, featuring innovative parking space representations and novel geometric-semantic composite error terms for constraints.

● A parking space management module is introduced, which stores parking space observations and updates the global parking space while effectively handling noise and false detections.

● A map-based localization subsystem SF-Loc is proposed, which utilizes factor graph optimization to fuse semantic ICP results and odometric constraints.

● The system is validated in complex real indoor parking lots, demonstrating real-time, high-precision localization and semantic map construction performance.

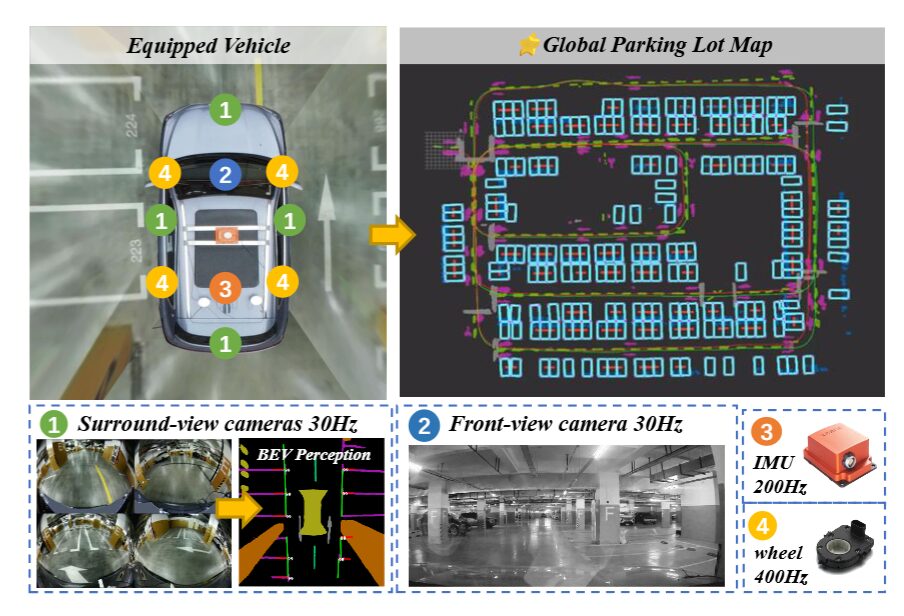

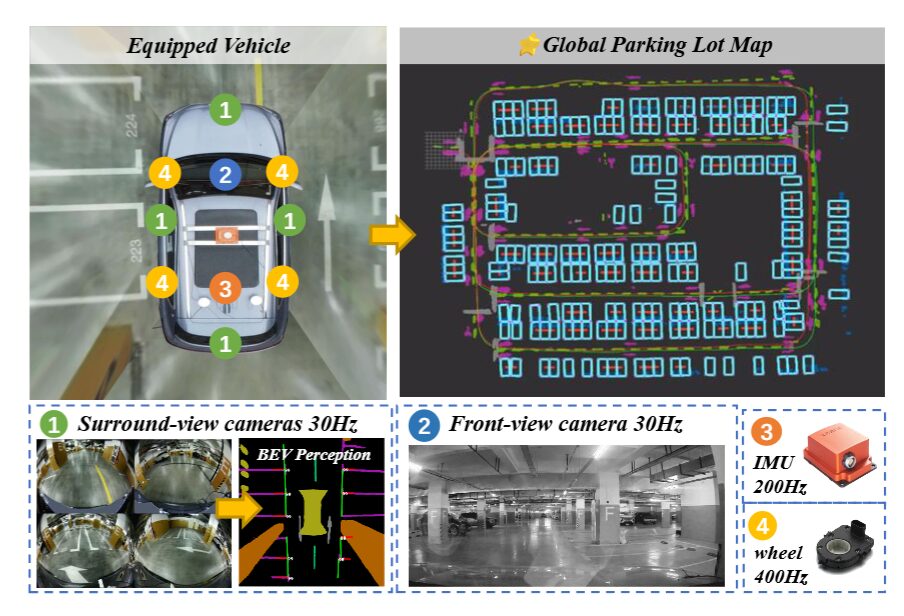

▲Figure 1|Sensor configuration of the proposed system ©️【Deep Blue AI】


Early visual SLAM technology was primarily based on filtering methods, but later SLAM systems utilizing bundle adjustment optimization emerged, providing higher accuracy and global consistency. However, monocular camera SLAM faces issues with scale recovery and susceptibility to visual blur. To enhance system robustness and accuracy, various sensor fusion methods, such as MSCKF and VINS-Mono, have been developed, combining visual data with other sensor data.

Although these multi-sensor fusion methods have made progress, they still struggle with automated valet parking (AVP) in indoor parking environments, which offer few salient features and complex lighting conditions. To address this, some studies use bird’s-eye view images as input because they provide rich ground features. These methods include AVP-SLAM, the work by Zhao et al., VISSLAM, and MOFISSLAM, each of which uses different techniques to improve localization accuracy and map quality.

Although these methods have achieved certain results in improving SLAM performance in indoor parking lots, they are sensitive to noise, and their performance in complex parking environments still needs improvement. Therefore, a new factor graph method is proposed to enhance the robustness and accuracy of SLAM in indoor parking lots.

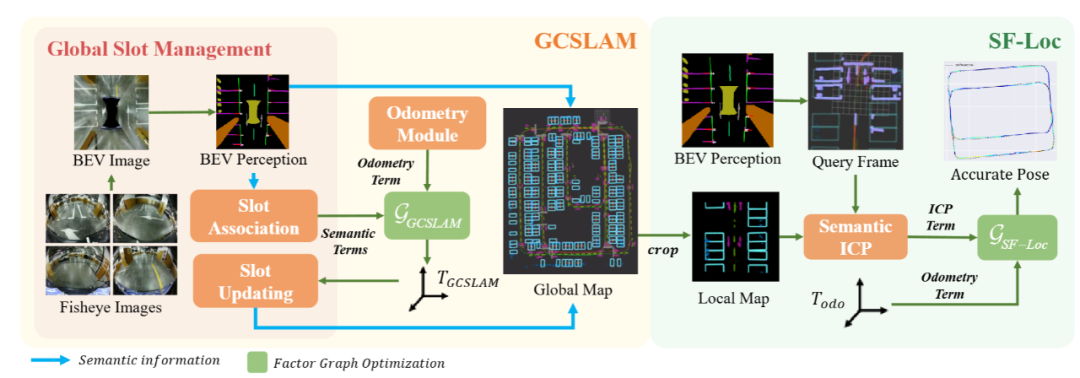

The system takes as input a front-facing camera, an IMU, wheel speed encoders, and four surround cameras. The overall framework is shown in Figure 2. The first part is the SLAM system GCSLAM, which integrates three modules: a global parking space management module, odometry, and factor graph optimization. The odometry module is loosely coupled with the other modules, so it can be replaced, which makes the system more flexible and usable; this work uses VIW as the odometry module. The global parking space management module contains a BEV perception module and a parking space association step that matches detection results to global parking spaces. Using the odometric poses, semantic information, and parking space association results, factor graph optimization produces precise pose estimates and a global semantic map. Once the global semantic map is built, the second part, the localization subsystem SF-Loc, fuses odometric poses with semantic matching results for map-based localization.

▲Figure 2|Schematic diagram of the proposed system ©️【Deep Blue AI】

■4.1 Factor Graph with Semantic Parking Space Nodes

▲Factor graph structure of the SLAM system ©️【Deep Blue AI】

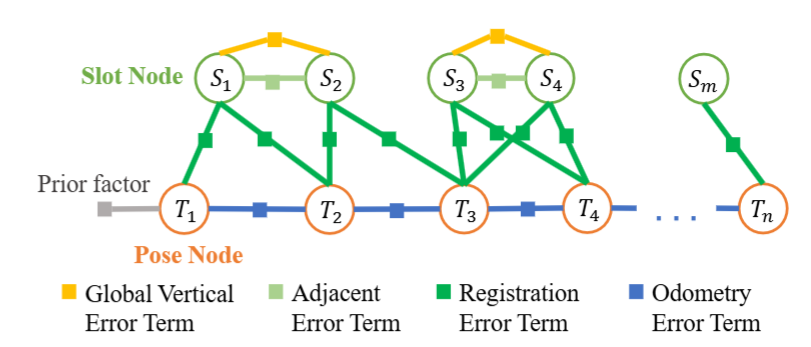

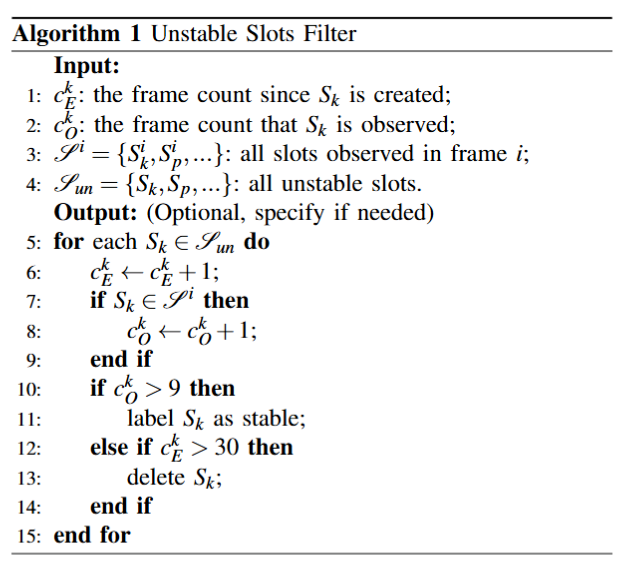

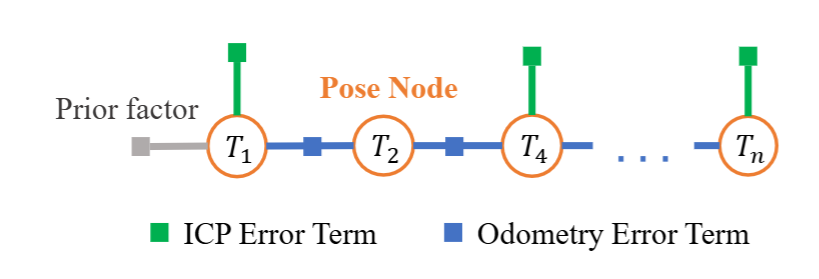

This paper treats SLAM as a factor graph optimization problem whose goal is to estimate accurate keyframe poses. Keyframes are selected based on the inter-frame distances provided by odometry. The factor graph consists of nodes, which represent the variables to be optimized, and edges, which are error terms that constrain these nodes. As shown in Figure 3, GCSLAM constructs a factor graph with two types of nodes and four types of edges, defined as follows:

●Pose Node: Because the SLAM system assumes that the parking lot is flat, each pose node stores the 3-degree-of-freedom (DoF) vehicle pose (planar position and heading) in the world coordinate system for one keyframe. The initial values of pose nodes are provided by the odometry module, which runs in a separate thread.

●Parking Space Node: When the BEV perception module detects a parking space, it outputs the endpoint coordinates and the direction of the space's entry edge in pixel coordinates. The midpoint of the entry edge is first transformed into world coordinates, and parking space association then determines the global ID of the observed parking space. The observation recorded in the current frame gives the coordinates of the entry-edge midpoint in that frame's vehicle coordinates, while the parking space node holds the midpoint of the entry edge in world coordinates.

●Odometry Error Term: This error term is built from the output of the odometry module and constrains consecutive pose nodes with the relative motion that odometry reports between them.

●Matching Error Term: This error constrains the relationship between a pose node and the global parking space node it observes. The observation is transformed into world coordinates using the pose and compared with the parking space node; their difference is the matching error.

●Adjacent Error Term (AET): When a new keyframe is added, all global parking spaces are traversed. If the distance between two parking spaces is less than a specified threshold (2.5 meters), they are considered adjacent, and an adjacent error term is established between them. This term keeps the directions of adjacent parking spaces consistent and ensures that there are no gaps between them.

The term is formulated using the entry edge of each parking space, shown in Figure 4(d), and the first two dimensions of the parking space node, which denote the coordinates of the parking space.

●Global Vertical Error Term (GVET): In most parking lots, parking spaces are either perpendicular or parallel to each other. To exploit this, a global parking space direction is introduced. It is defined as an average over the first five observed parking spaces, because SLAM is relatively accurate at the start, before drift has accumulated. The global vertical error is applied only to adjacent parking spaces, because some parking lots contain inclined spaces that are neither parallel nor perpendicular to the others. Such inclined spaces usually stand in isolation, so applying the global vertical constraint to them would be wrong. The error penalizes a parking space whose direction deviates from being parallel or perpendicular to the global direction.
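The explicit equations for these four edges are not given in this text, so the following is only an illustrative Python sketch of residuals with the same intent, under stated assumptions: poses are `(x, y, yaw)` tuples, parking space nodes are 2D entry-edge midpoints, and the concrete AET form (midpoints one entry-edge length apart along the edge, with parallel edges) and GVET form (`sin(2φ)`, which vanishes at 0°, 90°, and 180°) are hypothetical choices. Each residual is zero in the ideal configuration the corresponding bullet describes.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]
Pose2D = Tuple[float, float, float]  # (x, y, yaw) in the world frame


def wrap(a: float) -> float:
    """Wrap an angle to [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def transform(pose: Pose2D, p: Vec2) -> Vec2:
    """Map a point from the vehicle frame of `pose` into the world frame."""
    x, y, th = pose
    c, s = math.cos(th), math.sin(th)
    return (x + c * p[0] - s * p[1], y + s * p[0] + c * p[1])


def relative(a: Pose2D, b: Pose2D) -> Pose2D:
    """Pose of b expressed in the frame of a, i.e. a^-1 * b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    c, s = math.cos(a[2]), math.sin(a[2])
    return (c * dx + s * dy, -s * dx + c * dy, wrap(b[2] - a[2]))


def odometry_residual(ti: Pose2D, tj: Pose2D, z_ij: Pose2D) -> Pose2D:
    """Odometry term: z_ij^-1 * (ti^-1 * tj), the identity when both motions agree."""
    return relative(z_ij, relative(ti, tj))


def matching_residual(pose: Pose2D, obs: Vec2, space: Vec2) -> Vec2:
    """Matching term: world-frame gap between a transformed observation and its space node."""
    wx, wy = transform(pose, obs)
    return (space[0] - wx, space[1] - wy)


def adjacent_residual(pa: Vec2, ea: Vec2, pb: Vec2, eb: Vec2) -> Tuple[float, float, float]:
    """Hypothetical AET: midpoints one entry edge apart (no gap) and parallel edges."""
    d = (pb[0] - pa[0], pb[1] - pa[1])
    sign = 1.0 if d[0] * ea[0] + d[1] * ea[1] >= 0.0 else -1.0  # which side b lies on
    cross = (ea[0] * eb[1] - ea[1] * eb[0]) / (math.hypot(*ea) * math.hypot(*eb))
    return (d[0] - sign * ea[0], d[1] - sign * ea[1], cross)  # cross = sin(edge angle)


def global_vertical_residual(edge: Vec2, global_dir: float) -> float:
    """Hypothetical GVET: zero when the edge is parallel or perpendicular to global_dir."""
    phi = math.atan2(edge[1], edge[0]) - global_dir
    return math.sin(2.0 * phi)
```

In a full system, residuals like these would be handed to a nonlinear least-squares solver together with their information matrices; only the residual shapes are shown here.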

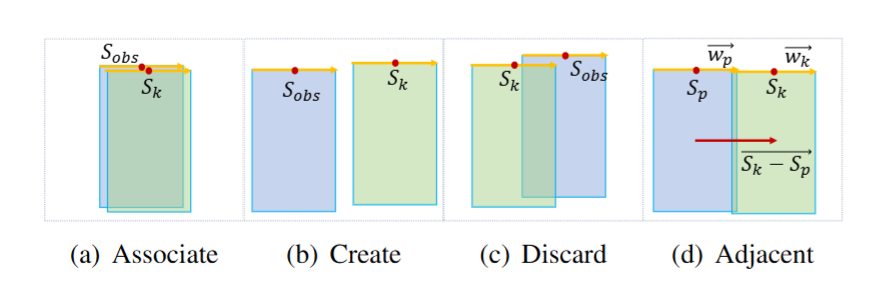

▲Three possible situations when making new observations ©️【Deep Blue AI】


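In the global parking space management module, each new observation is assigned an overall weight. This weight is then used to update both the position of the associated global parking space and the weight accumulated by that space.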
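The paper's exact weight and update formulas are not reproduced in this text, so the Python sketch below assumes a common scheme: a weighted running mean of the entry-edge midpoint, with the accumulated weight as the sum of observation weights. The association radius (`radius`) and the observation-count threshold of the filter (`min_count`) are hypothetical parameters, and the filter only loosely mirrors the "unstable parking space filter" discussed in the ablation study.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class GlobalSpace:
    x: float         # entry-edge midpoint in world coordinates
    y: float
    weight: float    # accumulated weight of all fused observations
    count: int = 1   # number of fused observations


def fuse(space: GlobalSpace, obs: Point, w: float) -> None:
    """Weighted-mean update of the position and accumulated weight (assumed scheme)."""
    total = space.weight + w
    space.x = (space.weight * space.x + w * obs[0]) / total
    space.y = (space.weight * space.y + w * obs[1]) / total
    space.weight = total
    space.count += 1


def associate(spaces: List[GlobalSpace], obs: Point, w: float, radius: float = 1.0) -> int:
    """Fuse obs into the nearest global space within radius, or create a new one.

    Returns the global ID (list index) of the space the observation was assigned to.
    """
    best, best_d = -1, radius
    for i, s in enumerate(spaces):
        d = math.hypot(s.x - obs[0], s.y - obs[1])
        if d < best_d:
            best, best_d = i, d
    if best < 0:
        spaces.append(GlobalSpace(obs[0], obs[1], w))
        return len(spaces) - 1
    fuse(spaces[best], obs, w)
    return best


def stable_spaces(spaces: List[GlobalSpace], min_count: int = 3) -> List[GlobalSpace]:
    """Hypothetical unstable-space filter: drop spaces observed fewer than min_count times."""
    return [s for s in spaces if s.count >= min_count]


spaces: List[GlobalSpace] = []
print(associate(spaces, (0.0, 0.0), 1.0))       # 0: new space
print(associate(spaces, (0.2, 0.0), 1.0))       # 0: fused, x becomes 0.1
print(associate(spaces, (5.0, 0.0), 1.0))       # 1: too far away, new space
print(len(stable_spaces(spaces, min_count=2)))  # 1
```

A weighted mean lets well-observed spaces resist single noisy detections, while a space that is seen only once or twice can be discarded as a likely false detection.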

▲Factor graph structure of SF-Loc ©️【Deep Blue AI】

SF-Loc uses only one type of node, the pose node, which is identical to the pose node introduced earlier. The odometry error term (OET) was also introduced earlier. In addition, some pose nodes are constrained by semantic ICP matching error terms derived from semantic correspondences.

The semantic ICP algorithm matches the current point cloud against a local map. The local map is a 30 m × 30 m region extracted from the global map around the previous pose, and the current point cloud is derived from BEV semantics. During semantic ICP, a kd-tree is used to find, for each point, the nearest neighbor with the same semantic label. The transformation between the current point cloud and the local map is computed from these semantic point pairs, and the process iterates until convergence to yield a refined pose.
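The matching loop described above can be illustrated with a minimal 2D sketch in Python. It assumes that semantic points are stored as a dictionary from label to `(x, y)` points, and it swaps the per-label kd-tree for a brute-force nearest-neighbor search so that the example needs only the standard library; `crop_local_map`, `fit_rigid_2d`, and `semantic_icp` are hypothetical names. Each iteration pairs every scan point only with map points that share its label, then solves the closed-form least-squares 2D rigid alignment.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Pose2D = Tuple[float, float, float]   # (x, y, yaw) of the vehicle in the map frame
Cloud = Dict[str, List[Point]]        # semantic label -> 2D points


def crop_local_map(global_map: Cloud, center: Point, size: float = 30.0) -> Cloud:
    """Keep map points inside a size x size window centred on the previous pose."""
    h = size / 2.0
    return {label: [p for p in pts
                    if abs(p[0] - center[0]) <= h and abs(p[1] - center[1]) <= h]
            for label, pts in global_map.items()}


def apply(pose: Pose2D, p: Point) -> Point:
    """Transform a vehicle-frame point into the map frame."""
    x, y, th = pose
    c, s = math.cos(th), math.sin(th)
    return (x + c * p[0] - s * p[1], y + s * p[0] + c * p[1])


def fit_rigid_2d(src: List[Point], dst: List[Point]) -> Pose2D:
    """Closed-form least-squares rotation and translation mapping src onto dst."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    num = den = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        num += ax * by - ay * bx   # sum of cross products
        den += ax * bx + ay * by   # sum of dot products
    th = math.atan2(num, den)
    c, s = math.cos(th), math.sin(th)
    return (dx - (c * sx - s * sy), dy - (s * sx + c * sy), th)


def semantic_icp(scan: Cloud, local_map: Cloud, init: Pose2D,
                 iters: int = 30, tol: float = 1e-6) -> Pose2D:
    """Align a semantic BEV scan to the local map, pairing points only within a label."""
    pose = init
    for _ in range(iters):
        src: List[Point] = []
        dst: List[Point] = []
        for label, pts in scan.items():
            targets = local_map.get(label, [])
            if not targets:
                continue
            for p in pts:
                q = apply(pose, p)
                # Brute-force nearest neighbour with the same label; a per-label
                # kd-tree would replace this linear scan in a real system.
                nn = min(targets, key=lambda t: (t[0] - q[0]) ** 2 + (t[1] - q[1]) ** 2)
                src.append(p)
                dst.append(nn)
        if len(src) < 2:
            break  # too few correspondences to estimate a pose
        new_pose = fit_rigid_2d(src, dst)
        step = sum(abs(a - b) for a, b in zip(new_pose, pose))
        pose = new_pose
        if step < tol:
            break  # converged
    return pose
```

Restricting correspondences to points with the same label is what distinguishes this from plain ICP: a parking-line point can never be pulled toward, for example, an arrow marking that happens to be closer.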

The semantic ICP error term is a unary edge: it constrains a single pose node with the absolute pose obtained from matching.

Because unary edges impose strong constraints and semantic segmentation is noisy, ICP unary edges are added sparingly: one ICP error term every 10 frames, with jump detection performed before each addition. Jump detection computes the distance between the ICP matching results of the current frame and the previous frame; if this distance exceeds a threshold of 2 meters, the current result is considered inaccurate and no ICP error term is added for that frame. The semantic ICP error term corrects the accumulated drift of odometry, while the odometry error term suppresses unstable jumps in ICP, so together they make SF-Loc both more accurate and more robust.
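The gating rule above can be sketched in a few lines of Python. The interval (10 frames) and jump threshold (2 m) come from the text. The remaining details are assumptions: ICP is taken to run on every frame so that a previous-frame result always exists, and the very first result is accepted because there is nothing to compare it against. `IcpEdgeGate` is a hypothetical name.

```python
import math
from typing import Optional, Tuple

Pose2D = Tuple[float, float, float]  # (x, y, yaw) from semantic ICP


class IcpEdgeGate:
    """Decides whether to add a semantic ICP unary edge for the current frame."""

    def __init__(self, interval: int = 10, max_jump: float = 2.0):
        self.interval = interval        # add at most one ICP edge every `interval` frames
        self.max_jump = max_jump        # meters; larger jumps mark the result as inaccurate
        self.prev: Optional[Pose2D] = None

    def should_add(self, frame_idx: int, icp_pose: Pose2D) -> bool:
        prev, self.prev = self.prev, icp_pose  # remember this frame's ICP result
        if frame_idx % self.interval != 0:
            return False
        if prev is None:
            return True  # assumption: first result is accepted without a jump check
        jump = math.hypot(icp_pose[0] - prev[0], icp_pose[1] - prev[1])
        return jump <= self.max_jump


gate = IcpEdgeGate()
added = []
for k in range(21):
    x = 0.1 * k + (3.0 if k == 10 else 0.0)  # frame 10 simulates a 3 m ICP jump
    if gate.should_add(k, (x, 0.0, 0.0)):
        added.append(k)
print(added)  # [0, 20]
```

Frame 10 is due for an edge but is rejected by the jump check, so the odometry error term alone carries the trajectory until the next accepted ICP result at frame 20.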

▲Experimental platform and environment ©️【Deep Blue AI】

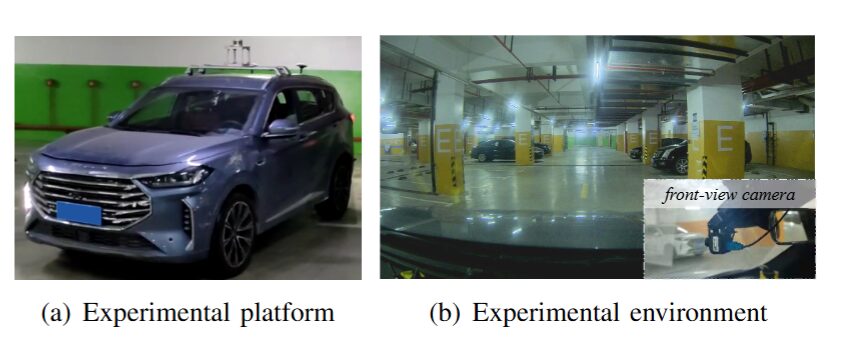

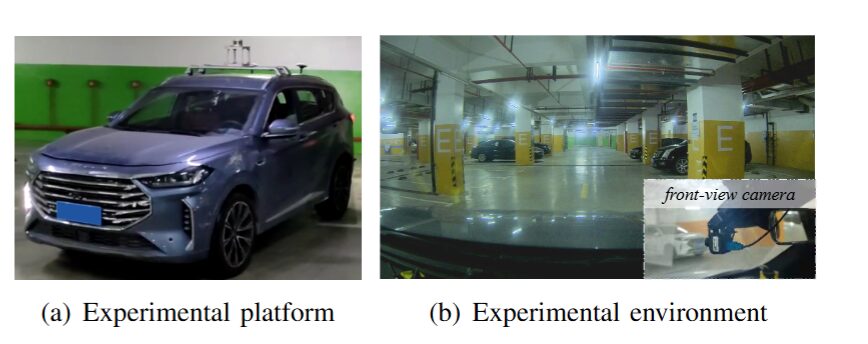

This paper tests the proposed system in two underground parking lots, shown in Figure 6(b); each is approximately 100 meters × 80 meters. The test vehicle, shown in Figure 6(a), is equipped with four surround-view fisheye cameras, one front-facing camera, an inertial measurement unit (IMU), wheel speed encoders, and a LiDAR. The LiDAR is used only to obtain ground-truth poses for evaluation. All sensors are calibrated offline. The IMU runs at 200 Hz, the wheel speed encoders at 400 Hz, the front-facing camera at 30 Hz with a resolution of 1920×1080 pixels, and each fisheye camera at 30 Hz with a resolution of 960×540 pixels. Experiments are run on an NVIDIA Jetson AGX Xavier.

The experiments were conducted on two representative datasets collected by the test vehicle:

●In Dataset 1, the vehicle drove a square trajectory and returned to the starting point, covering a total distance of 379 meters.

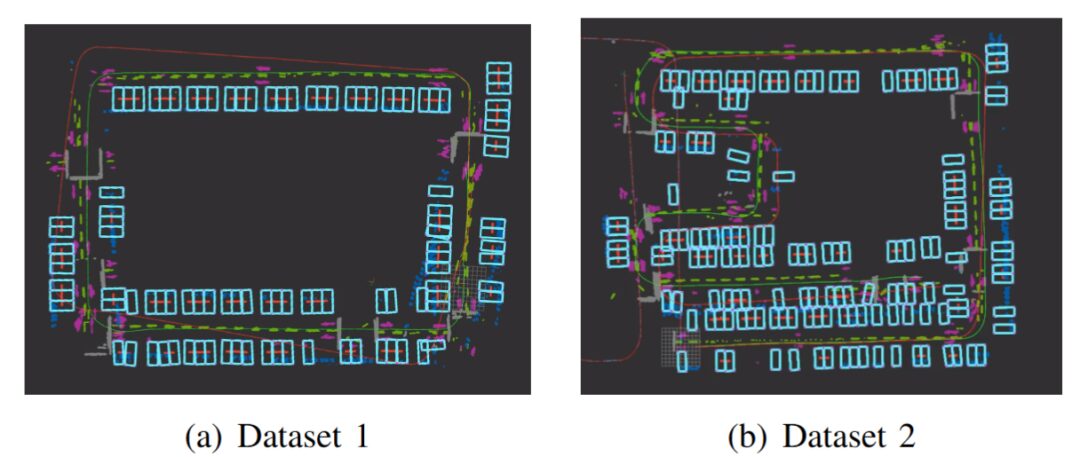

●In Dataset 2, the vehicle drove freely within the parking lot without returning to the starting point, covering 438 meters. The global maps constructed by GCSLAM on these datasets are shown in Figure 7.

▲Table 1|Absolute trajectory error in SLAM ©️【Deep Blue AI】

▲Figure 7|Global mapping results of Data 1 and Data 2 ©️【Deep Blue AI】


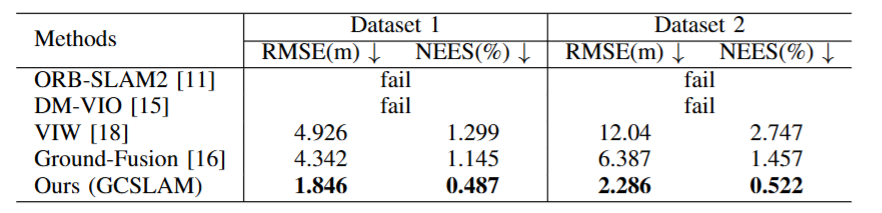

1)Tracking: Because existing indoor parking SLAM algorithms are not open-source, and the AGX lacks the computational resources to run learning-based SLAM, GCSLAM is compared with several open-source traditional visual SLAM systems: ORB-SLAM2, VIW, DM-VIO, and Ground-Fusion. ORB-SLAM2 and DM-VIO, which do not use wheel speed encoders, failed. In indoor parking lots, complex lighting keeps cameras from providing reliable data, whereas wheel speed encoders remain accurate because the ground is flat and tire slip is negligible.

Although most indoor parking SLAM systems are not open-source and therefore cannot be evaluated on the same dataset, the NEES metric still gives some insight into relative accuracy. Thanks to the new error terms and modules, GCSLAM achieves a NEES of 0.487%, significantly lower than the 1.33% of AVP-SLAM.
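Table 1 reports absolute trajectory error (ATE). As a minimal Python sketch, the root-mean-square ATE over planar positions can be computed as below, assuming the estimated and reference trajectories are already time-associated and expressed in the same frame. Standard ATE tooling usually first aligns the two trajectories (for example with Umeyama alignment), a step omitted here; `ate_rmse` is a hypothetical name.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def ate_rmse(est: Sequence[Point], ref: Sequence[Point]) -> float:
    """RMS position error between time-associated, already aligned trajectories."""
    if len(est) != len(ref) or not est:
        raise ValueError("trajectories must be non-empty and of equal length")
    sq = [(e[0] - r[0]) ** 2 + (e[1] - r[1]) ** 2 for e, r in zip(est, ref)]
    return math.sqrt(sum(sq) / len(sq))


print(ate_rmse([(0.0, 0.0), (1.0, 1.0)], [(0.0, 0.0), (1.0, 0.0)]))  # about 0.707
```

The same computation applies when SF-Loc is evaluated against GCSLAM trajectories used as the reference.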

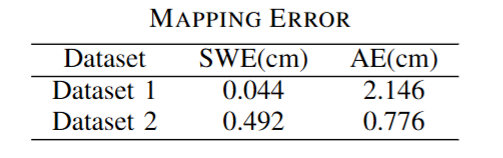

2)Map Construction: Map construction is evaluated with two proposed metrics: parking space width error (SWE) and adjacent error (AE). SWE is the difference between the average parking space width in the global map and the actual parking space width, indicating how far the global map deviates from the real world. AE is the distance between adjacent parking spaces, which should ideally be zero. Figure 7 shows the global map, and Table 2 reports the accuracy of GCSLAM’s map construction, demonstrating the precision of the proposed algorithm.
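The two map metrics can be sketched in Python as follows, under stated assumptions: a parking space's width is taken to be the length of its entry edge, and the gap between two adjacent spaces is taken to be the smallest distance between an endpoint of one entry edge and an endpoint of the other. Both interpretations, and the names `swe` and `adjacent_error`, are illustrative rather than the paper's definitions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Edge = Tuple[Point, Point]  # the two endpoints of one parking space's entry edge


def swe(edges: List[Edge], true_width: float) -> float:
    """Width error: |mean mapped entry-edge length - actual parking space width|."""
    widths = [math.dist(a, b) for a, b in edges]
    return abs(sum(widths) / len(widths) - true_width)


def adjacent_error(pairs: List[Tuple[Edge, Edge]]) -> float:
    """Mean gap between adjacent spaces: closest endpoint-to-endpoint distance (assumed)."""
    gaps = [min(math.dist(p, q) for p in ea for q in eb) for ea, eb in pairs]
    return sum(gaps) / len(gaps)


left: Edge = ((0.0, 0.0), (2.4, 0.0))
right: Edge = ((2.5, 0.0), (5.0, 0.0))
print(swe([left, right], true_width=2.5))  # about 0.05
print(adjacent_error([(left, right)]))     # about 0.1
```

For a perfectly built map, adjacent spaces share a dividing line, so the closest endpoints coincide and AE goes to zero, matching the text's statement that AE should ideally be zero.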

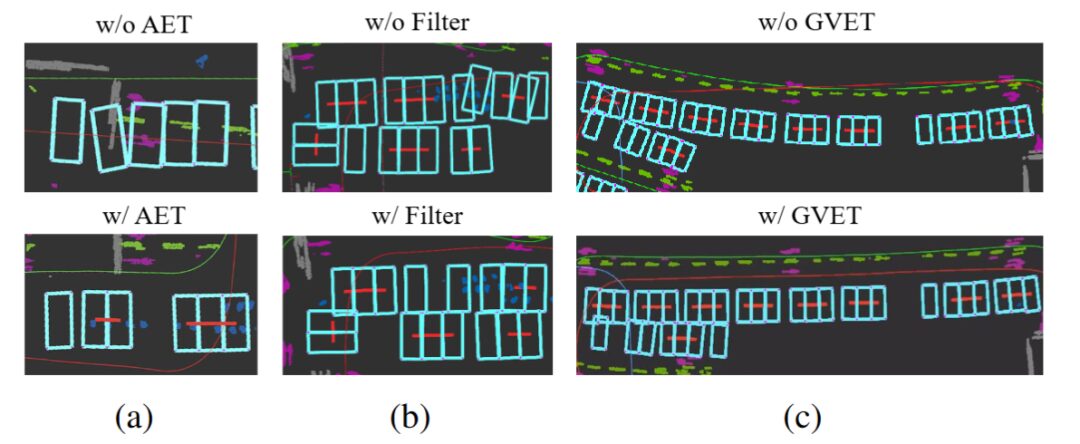

3)Ablation Study: This paper also evaluates how individual modules contribute to map construction, as shown in Figure 8. In Figure 8, the top images show the results without each module, and the bottom images show the results with it. Removing the AET (Adjacent Error Term) leads to irregularities between parking spaces. The GVET (Global Vertical Error Term) significantly reduces tilt during long straight drives. Without the unstable parking space filter, the global map contains many erroneous parking spaces.

▲Ablation study on (a) AET (Adjacent Error Term), (b) unstable parking space filter, and (c) GVET (Global Vertical Error Term) ©️【Deep Blue AI】

■5.2 Evaluation of SF-Loc

▲Table 3|Localization Error ©️【Deep Blue AI】

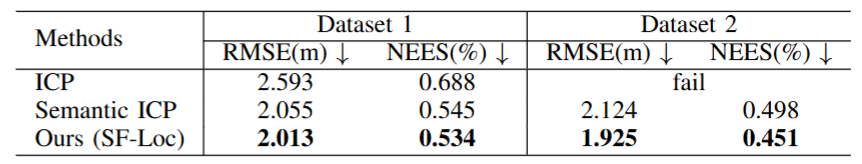

Since SF-Loc performs localization within a known map, this paper focuses on its accuracy relative to the global map, so GCSLAM trajectories are used as ground truth. The global map used is shown in Figure 7, and semantic ICP is the matching algorithm introduced above. As shown in Table 3, semantic ICP is significantly more accurate than standard ICP. Furthermore, because it adds the semantic fusion factor graph, SF-Loc outperforms semantic ICP alone.


This paper presents GCSLAM, a novel system for tracking and map construction in indoor parking lots. GCSLAM integrates an innovative factor graph and new error terms, enabling robust and high-precision map construction in complex parking environments. Additionally, this paper develops a map-based localization subsystem, SF-Loc. SF-Loc effectively improves localization accuracy by fusing matching results and odometric poses based on a novel factor graph. The algorithm is validated through real-world datasets, demonstrating the system’s effectiveness and robustness.
