With the emergence of ChatGPT, large language models have achieved significant breakthroughs in generating coherent text and following instructions. However, they still struggle with reasoning and complex problem solving, where they can produce inaccurate or unsafe content.

Researchers from Google DeepMind and the University of Southern California have proposed “SELF-DISCOVER.” This is a general framework that lets large language models autonomously discover the reasoning structure inherent to a task, in order to tackle complex reasoning problems that are difficult for typical prompting methods.

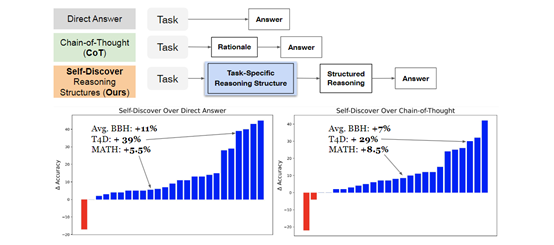

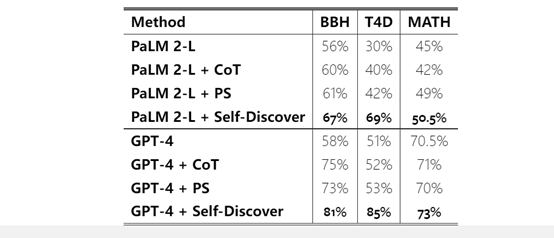

To test performance, the researchers evaluated SELF-DISCOVER on several challenging reasoning benchmarks, including BIG-Bench Hard (BBH), Thinking for Doing (T4D), and MATH.

Compared to methods that use only Chain of Thought (CoT), SELF-DISCOVER improved performance on 21 tasks, by up to 42%. In social agent reasoning tasks, it raised the accuracy of GPT-4 to 85%, a 33% improvement over the previous best method.

Paper link: https://arxiv.org/abs/2402.03620

Traditional prompting methods have clear limitations when dealing with complex reasoning problems. For example, Chain of Thought implicitly assumes a single reasoning process for every task, so it cannot draw on the strengths of different reasoning modules.

Moreover, while Chain of Thought is well-suited for linear and stepwise problems, it may not be helpful for complex issues that require nonlinear thinking or consideration of multiple intersecting factors.

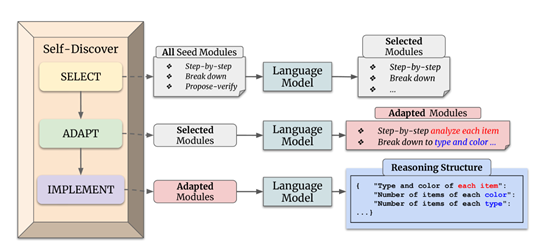

SELF-DISCOVER guides large language models to autonomously select, adapt, and combine a given set of atomic reasoning modules into a reasoning structure for solving a specific task.

This structure not only combines the advantages of multiple reasoning modules but is also uniquely customized for each task, greatly enhancing the model’s reasoning and problem-solving capabilities.

Task Level: Self-Discovering the Reasoning Structure

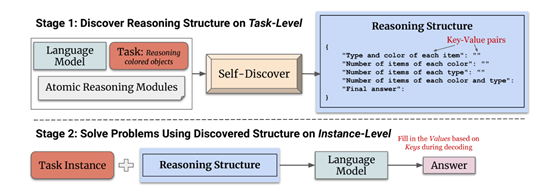

This is the first phase of SELF-DISCOVER. It discovers the reasoning structure once for the task as a whole, rather than per instance, using three sub-modules applied in sequence.

SELECT Module: Selects the key modules for solving the given task from a set of atomic reasoning modules. These atomic reasoning modules contain various high-level problem-solving heuristics, such as “step-by-step thinking” and “decomposing into sub-tasks.” The SELECT module determines which modules are crucial for solving the task based on several task examples.

ADAPT Module: Refines each reasoning module chosen by the SELECT module so that it fits the given task more closely. For example, “decomposing into sub-tasks” might be refined to “first calculate each arithmetic operation.”

IMPLEMENT Module: Turns the refined reasoning modules from the ADAPT module into a structured, actionable plan. The natural language descriptions are converted into a JSON structure of key-value pairs that clearly defines what content must be generated at each step.
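The three sub-modules can be read as three chained prompts. The following is a minimal sketch under stated assumptions, not the authors' implementation: the `LLM` callable interface, the `discover_structure` and `fake_llm` names, the shortened `SEED_MODULES` list, and the prompt wording are all hypothetical. A canned `fake_llm` stands in for a real model so the example runs without any API.

```python
import json
from typing import Callable, List

# Assumed interface: a function that takes a prompt and returns a completion.
LLM = Callable[[str], str]

# Hypothetical, shortened list of atomic reasoning modules.
SEED_MODULES = [
    "Let's think step by step.",
    "How can I break down this problem into smaller sub-tasks?",
    "Use critical thinking to question assumptions.",
]


def discover_structure(llm: LLM, task_examples: List[str]) -> dict:
    """Run SELECT, ADAPT, and IMPLEMENT once for a task (three LLM calls)."""
    examples = "\n".join(f"- {e}" for e in task_examples)
    modules = "\n".join(f"{i + 1}. {m}" for i, m in enumerate(SEED_MODULES))

    # SELECT: pick the modules that matter for this task.
    selected = llm(
        "Select the reasoning modules that are crucial for solving tasks "
        f"like these.\n\nModules:\n{modules}\n\nTask examples:\n{examples}"
    )
    # ADAPT: rephrase the selected modules so they are task-specific.
    adapted = llm(
        "Rephrase each selected module so it is specific to the task.\n\n"
        f"Selected modules:\n{selected}\n\nTask examples:\n{examples}"
    )
    # IMPLEMENT: turn the adapted modules into a key-value JSON plan.
    plan = llm(
        "Turn the adapted modules into a step-by-step reasoning plan, written "
        "as a JSON object whose keys are steps and whose values are empty.\n\n"
        f"Adapted modules:\n{adapted}\n\nTask examples:\n{examples}"
    )
    return json.loads(plan)


def fake_llm(prompt: str) -> str:
    """Canned stand-in for a model, keyed on the hypothetical prompts above."""
    if prompt.startswith("Select"):
        return "2. How can I break down this problem into smaller sub-tasks?"
    if prompt.startswith("Rephrase"):
        return "First calculate each arithmetic operation."
    return '{"Calculate each arithmetic operation": "", "Final answer": ""}'


structure = discover_structure(fake_llm, ["(3 + 4) * 2 = ?"])
print(json.dumps(structure, indent=2))
```

With a real model, the JSON returned by the last call would need validation before use, since nothing guarantees the completion parses cleanly.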

Using the Discovered Structure to Solve Tasks

After generating a reasoning structure closely related to the task in the first phase, the second phase uses this structure to solve all instances of the task.

SELF-DISCOVER appends the structure to each instance and prompts the language model to fill in each value step by step, following the structure, until it arrives at the answer.
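The second phase can be sketched as follows. This is an illustrative sketch only: the `build_solve_prompt` and `solve` helpers, the `"Final answer"` key, the prompt wording, and the canned `fake_llm` are hypothetical names chosen for this example, not taken from the paper.

```python
import json
from typing import Callable

# Assumed interface: a function that takes a prompt and returns a completion.
LLM = Callable[[str], str]


def build_solve_prompt(structure: dict, instance: str) -> str:
    """Append the discovered reasoning structure to one task instance."""
    return (
        f"Task:\n{instance}\n\n"
        "Follow the reasoning structure below step by step. Fill in every "
        "value and return the completed JSON.\n\n"
        f"{json.dumps(structure, indent=2)}"
    )


def solve(llm: LLM, structure: dict, instance: str,
          answer_key: str = "Final answer") -> str:
    """One LLM call per instance: the model fills in the structure's values."""
    filled = json.loads(llm(build_solve_prompt(structure, instance)))
    return filled[answer_key]


def fake_llm(prompt: str) -> str:
    """Canned stand-in that returns an already filled-in structure."""
    return json.dumps({
        "Calculate each arithmetic operation": "3 + 4 = 7; 7 * 2 = 14",
        "Final answer": "14",
    })


# The same structure is reused for every instance of the task.
structure = {"Calculate each arithmetic operation": "", "Final answer": ""}
for instance in ["(3 + 4) * 2 = ?"]:
    print(instance, "->", solve(fake_llm, structure, instance))
```

Because the structure is fixed per task, the per-instance cost stays at a single model call regardless of how many reasoning modules went into the structure.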

Compared to other methods, SELF-DISCOVER has three major advantages: 1) the discovered reasoning structure integrates the advantages of multiple reasoning modules; 2) it is highly efficient, requiring only 3 additional reasoning steps at the task level; 3) the discovered structure reflects the inherent characteristics of the task, making it more interpretable than optimized prompts.

The research also found that SELF-DISCOVER performs best on tasks requiring world knowledge. This is because the comprehensive use of multiple reasoning modules allows the model to understand problems from different perspectives, whereas using only Chain of Thought may overlook some important information.

Additionally, compared to methods that rely on many repeated queries, SELF-DISCOVER is highly efficient. It needs only one query per instance, while ensemble methods that achieve similar performance need 40 times as many queries, so it saves substantial computational resources.
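The query arithmetic can be made concrete with a small calculation. This is a rough sketch: the function names and the instance count of 250 are hypothetical, and it assumes the 3 task-level reasoning steps mentioned earlier are paid once and shared by all instances of the task.

```python
def self_discover_calls(num_instances: int, task_level_calls: int = 3) -> int:
    """One call per instance, plus the one-off task-level calls."""
    return task_level_calls + num_instances


def ensemble_calls(num_instances: int, samples_per_instance: int = 40) -> int:
    """An ensemble baseline that queries the model 40 times per instance."""
    return samples_per_instance * num_instances


n = 250  # hypothetical number of task instances
sd = self_discover_calls(n)
en = ensemble_calls(n)
print(f"SELF-DISCOVER: {sd} calls, ensemble: {en} calls, ratio: {en / sd:.1f}x")
```

Under these assumptions the one-off task-level cost becomes negligible as the number of instances grows, and the ratio approaches 40.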

This article is based on the SELF-DISCOVER paper.