Follow our public account, click the top right corner of the public account homepage ” ··· “, set a star mark, and keep up with the latest news on smart automotive electronics and software.

The software-defined vehicle is inseparable from the future central computing architecture; the two complement each other.

The above image shows the central computing architecture envisioned by Texas Instruments. The author’s own vehicle central computing architecture uses two NVIDIA Orin chips as the vehicle computing center: one dedicated to ADAS, named Orin A, and the other dedicated to the cockpit, named Orin B.

The above image shows Renesas’ central computing architecture. It details only the MCUs and the central computing center (servers), which comprises the AD/FAS smart driving server and the IVI cockpit server. Each Zonal module contains sensors, actuators, and a gateway, where the gateway is essentially a switch plus an MCU. Zones that mainly use CAN do not need a switch, since a CAN network is relatively simple; zones that use Ethernet do require an Ethernet switch.

The Ethernet switch selected is Marvell’s latest third-generation automotive Ethernet switch, the 88Q5112, a 9-port device with 6 integrated PHYs: three 100/10BASE-T1(S), two 1000/100BASE-T1, and one 100BASE-T1/TX. These can serve 5 Zonal ECUs plus one OBD diagnostic port, with the OBD port using 1000BASE-T1. Apart from smart driving and the cockpit, no domain currently needs more than 100 Mbps; Zonal still mainly means the body and comfort networks, and CAN, CAN-FD, or FlexRay is sufficient for the chassis and powertrain.
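
The port count can be sanity-checked with a small best-fit assignment. This is a minimal sketch, not a Marvell tool: the port names p1 to p6 and the per-endpoint demands (1000 Mbps for the OBD port, 100 Mbps for each Zonal ECU) are hypothetical values taken from the reasoning above.

```python
# Hypothetical port map for a switch with the PHY mix described above.
# Values are each port's maximum rate in Mbps; names p1..p6 are illustrative.
PORTS = {
    "p1": 100, "p2": 100, "p3": 100,  # 100/10BASE-T1(S)
    "p4": 1000, "p5": 1000,           # 1000/100BASE-T1
    "p6": 100,                        # 100BASE-T1/TX
}


def assign_ports(demands, ports=PORTS):
    """Map each endpoint to a free port fast enough for its demand (Mbps).

    Endpoints are served most demanding first, and each takes the slowest
    free port that still meets its need, keeping fast ports for later.
    """
    free = dict(ports)
    plan = {}
    for name, mbps in sorted(demands.items(), key=lambda kv: -kv[1]):
        candidates = [p for p, speed in free.items() if speed >= mbps]
        if not candidates:
            raise ValueError(f"no free port fast enough for {name}")
        best = min(candidates, key=lambda p: free[p])
        plan[name] = best
        del free[best]
    return plan


demands = {"OBD": 1000, **{f"zone{i}": 100 for i in range(1, 6)}}
print(assign_ports(demands))
```

Adding a seventh endpoint makes `assign_ports` raise `ValueError`, which matches counting the six PHYs by hand.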

The above image is the internal block diagram of the 88Q5112. It supports a rich set of TSN features, including 802.1AS-2020 and 802.1Qat/Qav/Qbu/Qbv/Qci/Qcr, as well as 802.1CB frame replication and elimination for reliability.
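
To illustrate what 802.1Qbv (time-aware shaping) does, here is a minimal sketch of how a cyclic gate control list decides which traffic classes may transmit at a given instant. The `GateEntry` type, the 1 ms cycle, and the use of class 7 for protected traffic are assumptions for illustration, not the 88Q5112’s configuration interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GateEntry:
    open_mask: int    # bit i set means traffic class i may transmit
    duration_ns: int  # how long this entry stays in effect


def open_classes(gcl, t_ns, base_time_ns=0):
    """Traffic classes (0-7) whose gates are open at time t_ns.

    The list repeats every cycle, where the cycle time is the sum of the
    entry durations, starting at base_time_ns.
    """
    cycle_ns = sum(e.duration_ns for e in gcl)
    offset = (t_ns - base_time_ns) % cycle_ns
    for entry in gcl:
        if offset < entry.duration_ns:
            return {tc for tc in range(8) if (entry.open_mask >> tc) & 1}
        offset -= entry.duration_ns
    raise AssertionError("unreachable: offset always falls inside the cycle")


# Hypothetical 1 ms cycle: a 200 us window reserved for class 7,
# then 800 us shared by classes 0-6.
GCL = [GateEntry(0x80, 200_000), GateEntry(0x7F, 800_000)]
print(open_classes(GCL, 100_000))    # inside the protected window
print(open_classes(GCL, 1_500_000))  # second cycle, shared window
```

Because the protected window closes every other gate, a class-7 frame never queues behind best-effort traffic; real switches add a guard band or 802.1Qbu/802.3br preemption so a long frame cannot overrun into that window.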

The above image shows the camera inputs of the two Orins. Each Orin accepts at most 16 lanes of MIPI CSI-2, supports both C-PHY and D-PHY, and supports virtual channels. An 8-megapixel camera needs 4 lanes, a camera below 2 megapixels needs only 1 lane, and a camera above 2 megapixels needs 2 lanes; to be safe, 2-megapixel cameras are also given 2 lanes. All sensor models are for reference only. For smart driving, the front main camera can be three 2.5-megapixel cameras covering short, medium, and long range, a single 8-megapixel camera, or two 2.5-megapixel cameras for stereo vision. The remaining 4-6 cameras can be 2.5-megapixel or 2-megapixel units. Currently, the only deserializer for cameras above 2 megapixels is ADI’s MAX9296, paired with the MAX9295 serializer, so ADI effectively monopolizes this market. The MAX9296 link rate is selectable among 1.5, 3, and 6 Gbps, and it can handle camera inputs of up to 16 megapixels.
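
The lane rule of thumb above can be turned into a quick budget check against Orin’s 16 CSI-2 lanes. This is a sketch of the article’s heuristic only, with the added assumption that anything from 2 up to 8 megapixels gets 2 lanes; real lane counts depend on the sensor, frame rate, and PHY speed.

```python
ORIN_CSI2_LANES = 16  # per-Orin maximum stated above


def csi2_lanes(megapixels):
    """Lane count per the rule of thumb above (not a MIPI requirement)."""
    if megapixels >= 8:
        return 4
    if megapixels >= 2:  # 2 MP is given 2 lanes for headroom
        return 2
    return 1


def lane_budget(cameras_mp, limit=ORIN_CSI2_LANES):
    """Return (lanes used, fits) for a list of camera resolutions in MP."""
    used = sum(csi2_lanes(mp) for mp in cameras_mp)
    return used, used <= limit


# One 8 MP front camera plus six 2.5 MP cameras.
print(lane_budget([8] + [2.5] * 6))
# Three 2.5 MP front cameras plus six 2 MP cameras.
print(lane_budget([2.5] * 3 + [2] * 6))
```

The first layout fills exactly 16 lanes; the second needs 18, so it would have to drop to four remaining cameras (14 lanes) or move some cameras onto an Ethernet bridge.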

The electronic rearview mirror, also known as a Camera Monitor System (CMS) or E-mirror, replaces the rearview mirrors with cameras and screens and requires two video inputs. Because the displays are small, 1 megapixel is sufficient. ADAS functions can be added to these displays, so they fall under the ADAS system.

In the cockpit domain, the main video inputs are the 360-degree surround view, AR navigation, and driver monitoring (DMS). The surround view uses relatively advanced 2-megapixel cameras feeding a MAX9286 deserializer. AR navigation does not need high resolution; 1.3 megapixels is sufficient. DMS is moving toward higher resolution, with ToF cameras starting to be introduced; it is tentatively set at 2 megapixels.

Camera data can also be transmitted over Ethernet. A 2-megapixel camera at 30 fps produces about 0.6 Gbps in RAW10 format and about 1.44 Gbps in RGB888 format; an 8-megapixel camera in RGB888 produces about 5.8 Gbps. Ethernet PHY chips for 10 Gbps already exist, so transmitting 8-megapixel video is entirely feasible. High-resolution cameras generally output RAW8 or RAW10, while low-resolution ones generally output YUV422. Ethernet has several advantages. It can use a single unshielded twisted pair, whereas the SerDes links carrying MIPI CSI-2 typically require coaxial cable, and Ethernet connectors cost significantly less than those coaxial connectors. Ethernet also enables data shaping and traffic control through TSN, and switched Ethernet offers more flexible topologies than point-to-point MIPI CSI-2. Ethernet PHY chips cost less than deserializers, though more than serializers. The downside is that Ethernet debugging is exceptionally complex, the debugging equipment is very expensive, and most engineers are unfamiliar with it.
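
The bitrate figures above follow from pixels × bits per pixel × frame rate. A minimal sketch, assuming 1 MP = 10^6 pixels, 30 fps, and uncompressed data without blanking or protocol overhead; the format table lists common bit depths (YUV422 averages 16 bits per pixel).

```python
BITS_PER_PIXEL = {"RAW8": 8, "RAW10": 10, "YUV422": 16, "RGB888": 24}


def video_gbps(megapixels, pixel_format, fps=30):
    """Uncompressed active-pixel bitrate in Gbps.

    Blanking and Ethernet/IP headers are ignored, so a real link needs
    some headroom beyond this figure.
    """
    bits_per_frame = megapixels * 1e6 * BITS_PER_PIXEL[pixel_format]
    return bits_per_frame * fps / 1e9


for mp, fmt in [(2, "RAW10"), (2, "RGB888"), (8, "RGB888")]:
    rate = video_gbps(mp, fmt)
    print(f"{mp} MP {fmt}: {rate:.2f} Gbps, fits 10 Gbps link: {rate < 10}")
```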

Ethernet can also bridge cameras, such as Marvell’s 88QB5224.

If all of the SoC’s MIPI CSI-2 interfaces are used up but more cameras must still be connected, Ethernet bridging is an option, as shown in the above image. The 88QB5224 supports IEEE 802.3ch at up to 10 Gbps, so connecting four 2-megapixel or 2.5-megapixel cameras is not a problem.
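
Whether four cameras fit on one 10 Gbps bridge comes down to summing their rates. A sketch assuming 30 fps, 1 MP = 10^6 pixels, and no blanking or protocol overhead; the 88QB5224’s actual per-port limits and overheads are not modeled.

```python
BITS_PER_PIXEL = {"RAW10": 10, "RGB888": 24}
LINK_GBPS = 10.0  # IEEE 802.3ch 10GBASE-T1


def aggregate_gbps(cameras, fps=30):
    """Total uncompressed rate of (megapixels, format) cameras in Gbps."""
    total_bits = sum(mp * 1e6 * BITS_PER_PIXEL[fmt] for mp, fmt in cameras)
    return total_bits * fps / 1e9


raw = aggregate_gbps([(2.5, "RAW10")] * 4)
rgb = aggregate_gbps([(2.5, "RGB888")] * 4)
print(f"4 x 2.5 MP RAW10:  {raw:.1f} Gbps ({raw / LINK_GBPS:.0%} of link)")
print(f"4 x 2.5 MP RGB888: {rgb:.1f} Gbps ({rgb / LINK_GBPS:.0%} of link)")
```

Even in RGB888 the four cameras use about 72% of the link, which leaves room for protocol overhead.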

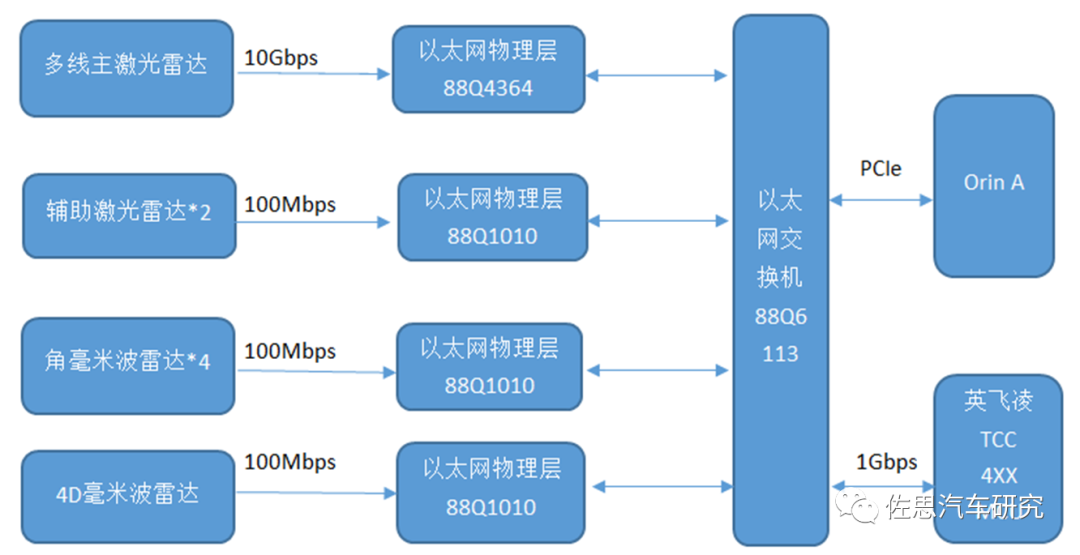

The above image shows the input cluster of LiDAR and millimeter-wave radar sensors.

One to three LiDARs may be needed, one of them being the main LiDAR. Given how fast LiDAR technology is progressing, ample bandwidth headroom is reserved for it: up to 10 Gbps, comparable to a 12-megapixel camera, although LiDAR data is 3D. Today, 1 Gbps is enough for any type of LiDAR. Auxiliary LiDARs usually handle blind-spot detection at very low resolution, so 100 Mbps is sufficient. Corner radars are generally millimeter-wave radars, which mostly output over CAN-FD today; since connecting CAN-FD to Orin is somewhat troublesome, Ethernet is used instead, and corner radars generally support Ethernet output at about 10 Mbps. 4D millimeter-wave radar outputs much more data, but 100 Mbps is still sufficient. Finally, the MCU controlling the chassis is from Infineon’s latest AURIX TC4xx series, for which 1 Gbps is sufficient.
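
The per-sensor figures above amount to choosing the slowest standard automotive Ethernet rate that covers each demand. A minimal sketch; the demand values are the ones stated above (with the main LiDAR given its full 10 Gbps reservation), and the sensor names are placeholders.

```python
# Standard automotive Ethernet rates in Mbps: 10BASE-T1S, 100BASE-T1,
# 1000BASE-T1, and the IEEE 802.3ch 2.5G/5G/10GBASE-T1 rates.
AUTOMOTIVE_RATES_MBPS = (10, 100, 1000, 2500, 5000, 10000)


def pick_link(required_mbps):
    """Slowest standard rate that meets the required bandwidth."""
    for rate in AUTOMOTIVE_RATES_MBPS:
        if rate >= required_mbps:
            return rate
    raise ValueError(f"{required_mbps} Mbps exceeds 10GBASE-T1")


demands_mbps = {
    "main_lidar": 10000,  # headroom reserved for future LiDARs
    "aux_lidar": 100,
    "corner_radar": 10,
    "radar_4d": 100,
    "chassis_mcu": 1000,
}
for sensor, need in demands_mbps.items():
    print(f"{sensor}: {pick_link(need)} Mbps link")
```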

Marvell’s 88Q4364 and Broadcom’s BCM8989X are currently the only two physical layer chips supporting 10G automotive Ethernet.

The 88Q6113 is Marvell’s latest third-generation 11-port Ethernet switch.

The above image is the internal block diagram of the 88Q6113. For TSN, it supports 802.1Qav/Qbv, 802.1Qat, and 802.1AS.
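
For the 802.1Qav credit-based shaper supported here, the per-class parameters follow from the reserved bandwidth using the formulas in IEEE 802.1Q Annex L; they are the same quantities Linux’s tc-cbs qdisc accepts as idleslope, sendslope, hicredit, and locredit. A sketch, assuming a 1 Gbps port, a 1542-byte maximum frame (1522-byte tagged frame plus preamble and inter-frame gap), and that the largest interfering frame is also 1542 bytes.

```python
def cbs_params(idle_slope_kbps, port_rate_kbps,
               max_frame_bytes, max_interference_bytes):
    """Credit-based shaper parameters for the highest-priority SR class.

    idle_slope: bandwidth reserved for the class (credit gain while waiting)
    send_slope: credit change rate while transmitting (negative)
    hi_credit / lo_credit: upper and lower credit bounds in bytes
    """
    send_slope = idle_slope_kbps - port_rate_kbps
    hi_credit = max_interference_bytes * idle_slope_kbps / port_rate_kbps
    lo_credit = max_frame_bytes * send_slope / port_rate_kbps
    return send_slope, hi_credit, lo_credit


# Reserve about 98.7 Mbps of a 1 Gbps port for one stream class.
send, hi, lo = cbs_params(98_688, 1_000_000, 1542, 1542)
print(send, round(hi, 1), round(lo, 1))
```

lo_credit bounds how far credit falls after sending one maximum-size frame, and hi_credit bounds how much credit builds up while an interfering frame occupies the port.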

The above image shows the video output cluster of Orin B. Orin has only one display output, a DisplayPort (DP) 1.4a port that can also be configured as HDMI or eDP, but it has up to 7 USB ports: 3 USB 3.2 and 4 USB 2.0. USB and DisplayPort can be converted into each other fairly easily, and DisplayPort can in turn be converted to other formats. High-resolution displays today mainly use DP or eDP inputs, but in automotive LVDS still dominates; the trend is toward eDP input, as in current 2K or 4K automotive OLED screens, which saves a lot of format conversion. DP can also be converted to LVDS, for example with Parade’s PS8625, which has a maximum bandwidth of 2.7 Gbps and a maximum resolution of 1920×1200. Incidentally, this is the chip Tesla uses. USB 2.0 has relatively low bandwidth and suits a small rear-seat screen of about 1280×720, which is also sufficient for an AR-HUD. USB 3.2 is sufficient for 2K resolution. With Orin’s powerful GPU, driving five 2K screens should be relatively easy. Orin A also needs 3 video outputs: one goes to the central information display (CID), whose signal source can be switched manually, using Orin A’s DP output; the other two drive the electronic rearview mirrors through Orin’s USB 3.2 outputs, which can be converted to LVDS.
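
Whether a link can carry a screen uncompressed comes down to width × height × refresh rate × bits per pixel. A minimal sketch, assuming 24-bit color at 60 Hz, ignoring blanking intervals, taking "2K" as 2560×1440, and assuming Orin’s USB 3.2 ports run at 10 Gbps (Gen 2); link figures are nominal signalling rates.

```python
def display_gbps(width, height, refresh_hz=60, bits_per_pixel=24):
    """Uncompressed active-pixel rate in Gbps (blanking ignored)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


LINK_GBPS = {"USB 2.0": 0.48, "USB 3.2 Gen 2": 10.0}

screens = {"2K screen": (2560, 1440), "rear screen / AR-HUD": (1280, 720)}
for name, (w, h) in screens.items():
    need = display_gbps(w, h)
    fits = {link: need <= cap for link, cap in LINK_GBPS.items()}
    print(f"{name}: {need:.2f} Gbps uncompressed, fits: {fits}")
```

A 2K screen at about 5.3 Gbps fits within a 10 Gbps USB 3.2 Gen 2 port. A 1280×720 stream needs about 1.33 Gbps uncompressed, more than USB 2.0’s 480 Mbps, so a USB 2.0 display path presumably carries compressed video.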

For cockpit audio, ADI’s design can serve as a reference. It is top-tier cockpit audio that also includes in-cabin active noise cancellation and road noise cancellation; low in-cabin noise is a key prerequisite for good sound quality. The head unit here is Orin B. The outdated MOST bus requires optical fiber, is very cumbersome, and has been left behind. The diagram also lacks a branch to the T-Box for E-Call.

The above image shows the cockpit peripherals. Normally the SoC’s PCIe port connects to the PCIe interface of the Bluetooth/WiFi wireless module, but the highest-grade PCIe of both Orins is already taken. In addition, board-to-board connections sometimes use PCIe, so Microchip’s LAN7431 PCIe-to-Ethernet bridge is included in the design. The Ethernet switch is the 7-port 88EA6321. Orin’s USB interfaces are heavily used, so a Microchip USB4914 USB hub is added. The cockpit MCU is still Renesas’ S4 or the traditional RH850. USS refers to the ultrasonic sensors, which generally connect over the DSI3 bus with 1 master and 11 slaves. The ultrasonic sensor system can connect to Orin via UART+SPI, which is relatively cumbersome.

The network topology of NVIDIA DRIVE 6.0 in Safety mode

Finally, for reference, here is the network topology of NVIDIA’s latest autonomous driving platform, DRIVE 6.0. Its network topology has two modes, Base and Safety; the above image shows Safety mode. In Safety mode, the Ethernet switch’s CPU is bypassed, so OTA is not supported, and the maximum MTU is reduced from 16k to 1500 bytes. Here, Tegra refers to Orin and Aurix refers to the Infineon TC397T. Ethernet switch 1 is Marvell’s 88Q5072, Ethernet switch 2 is the 88Q6113, and the LAN7431 is Microchip’s PCIe-to-Ethernet chip. Because Safety mode sacrifices some switch performance for safety, a higher-grade 88Q6113 switch is added, whereas otherwise a single 88Q5072 would be sufficient.
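
The MTU reduction mainly multiplies the number of frames, and per-frame headers, needed for bulk data. A rough sketch, assuming "16k" means 16,000 bytes, 28 bytes of IPv4 and UDP headers inside each MTU, and one 2-megapixel RAW10 image (2.5 MB) as the payload; these figures are illustrative, not taken from NVIDIA’s documentation.

```python
import math

IP_UDP_HEADER_BYTES = 28  # IPv4 (20) + UDP (8), assumed per frame


def frames_needed(payload_bytes, mtu_bytes, header_bytes=IP_UDP_HEADER_BYTES):
    """Number of Ethernet frames needed to carry payload_bytes."""
    per_frame = mtu_bytes - header_bytes
    return math.ceil(payload_bytes / per_frame)


image_bytes = 2_000_000 * 10 // 8  # one 2 MP RAW10 frame = 2.5 MB
for mtu in (16_000, 1500):
    print(f"MTU {mtu}: {frames_needed(image_bytes, mtu)} frames per image")
```

Under these assumptions the 1500-byte MTU needs 1699 frames per image versus 157, roughly eleven times as many headers to process.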

The network topology of NVIDIA DRIVE 6.0 in Base mode

This is the Base mode network topology of DRIVE 6.0, with an 88Q5072 Ethernet switch. Orin has two 10 Gbps links: one to the Ethernet switch and the other to the central gateway, which forms the in-vehicle backbone Ethernet.

NVIDIA also specifically lists the in-vehicle Ethernet TSN standard APIs: time synchronization via 802.1AS (gPTP); scheduling and traffic shaping via 802.1Qav, 802.1Qbv, and 802.1Qbu/802.3br; reliability via 802.1CB and 802.1Qci; and stream reservation and configuration via 802.1Qat and 802.1Qcc.
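
For 802.1AS (gPTP) time synchronization, each link’s propagation delay is measured with a peer-delay exchange of four timestamps, and a device then corrects its clock from Sync messages. A minimal sketch of the two calculations, assuming a neighbor rate ratio of 1 (both clocks running at the same rate) unless given; the function names and example timestamps are hypothetical.

```python
def mean_link_delay_ns(t1, t2, t3, t4, neighbor_rate_ratio=1.0):
    """Peer delay from one Pdelay_Req / Pdelay_Resp exchange.

    t1: initiator sends Pdelay_Req      (initiator clock)
    t2: responder receives Pdelay_Req   (responder clock)
    t3: responder sends Pdelay_Resp     (responder clock)
    t4: initiator receives Pdelay_Resp  (initiator clock)
    The responder's turnaround time (t3 - t2) is subtracted, so the clock
    offset between the two devices cancels out.
    """
    return (neighbor_rate_ratio * (t4 - t1) - (t3 - t2)) / 2


def offset_from_master_ns(sync_rx_local, origin_ts, correction, link_delay):
    """Local clock minus grandmaster time at the moment Sync arrived."""
    return sync_rx_local - (origin_ts + correction + link_delay)


# Hypothetical exchange: 500 ns wire delay, responder clock 1000 ns ahead.
delay = mean_link_delay_ns(t1=0, t2=1_500, t3=2_500, t4=2_000)
print(delay)
print(offset_from_master_ns(10_700, 10_000, 0, delay))
```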

The future central computing architecture somewhat resembles a PC, where all data processing relies on a single CPU. The AUTOSAR middleware and operating systems such as Linux, QNX, and Android running on this hardware form a layer similar to Windows, which is essentially transparent to most programmers; they only design application software. This is the true software-defined vehicle.