This article introduces how the Linux kernel uses memory, describing the regions into which memory is divided and what each one does.

1. Physical Memory Allocation

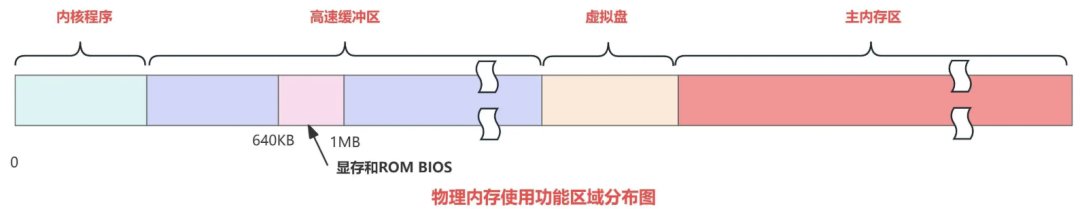

1. Physical Memory Allocation Diagram

General Overview:

The entire physical memory is divided into four blocks.

Block 1: Linux Kernel Program

This is the Linux kernel that our series focuses on.

Block 2: High-Speed Buffer

This block is a high-speed buffer for block devices such as hard drives and floppy disks. When a process needs to read data from a block device, the system will first read the data into the high-speed buffer; when data needs to be written to the block device, the system also first places the data into the high-speed buffer, which is then written to the device by the block device driver.
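To make "the buffer sits between processes and the device" concrete, here is a minimal Python sketch of a read/write buffer cache. It is not the Linux implementation. `FakeDevice`, `BufferCache`, `read_block`, `write_block`, and `sync` are hypothetical names, and the cache never evicts blocks, which a real buffer cache must do.

```python
class FakeDevice:
    """Hypothetical block device: fixed-size blocks plus counters for real I/O."""

    def __init__(self, nblocks, block_size=1024):
        self.blocks = [bytes(block_size) for _ in range(nblocks)]
        self.reads = 0
        self.writes = 0

    def read(self, blockno):
        self.reads += 1
        return self.blocks[blockno]

    def write(self, blockno, data):
        self.writes += 1
        self.blocks[blockno] = data


class BufferCache:
    """All block reads and writes go through this buffer, never straight to the device."""

    def __init__(self, device):
        self.device = device
        self.cache = {}     # block number -> block contents held in memory
        self.dirty = set()  # blocks changed in memory but not yet written to the device

    def read_block(self, blockno):
        if blockno not in self.cache:                        # miss: fetch from the device once
            self.cache[blockno] = self.device.read(blockno)
        return self.cache[blockno]                           # hit: served from memory

    def write_block(self, blockno, data):
        self.cache[blockno] = data                           # the write lands in the buffer first
        self.dirty.add(blockno)

    def sync(self):
        """Write dirty buffers out, as the block device driver eventually would."""
        for blockno in sorted(self.dirty):
            self.device.write(blockno, self.cache[blockno])
        self.dirty.clear()
```

Reading the same block twice costs one device read, and a write reaches the device only when `sync()` runs.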

In other words, every read from and write to a block device goes through this buffer!

Block 3: Optional Virtual Disk Area

On systems configured with a RAM disk, part of main memory is also set aside to hold the RAM disk's data.

Block 4: Main Memory Area Available for All Programs

This area is available to all programs. Even the kernel must first request memory from the kernel's memory management module before it can use this area.

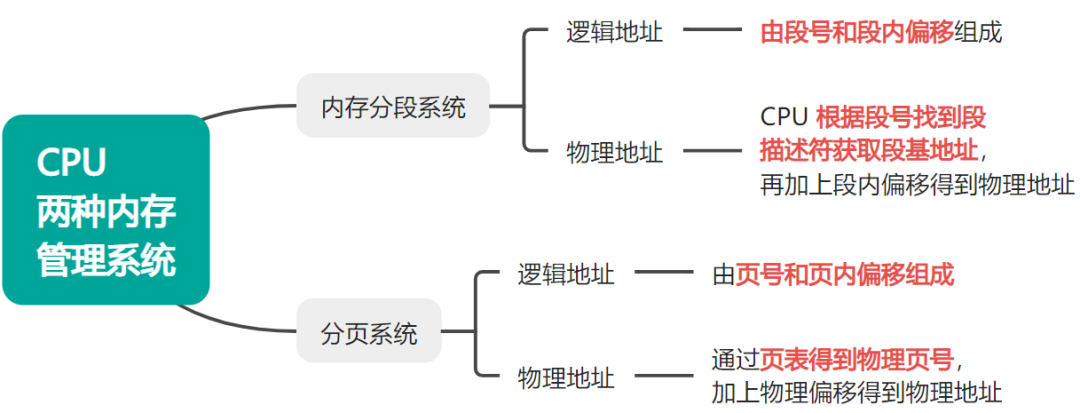

2. Two Memory Management Mechanisms

A Picture is Worth a Thousand Words

1. Memory Segmentation System

Concept

Segmentation system: divides the program's logical space into several segments, such as the code segment, data segment, and stack segment. Each segment has its own attributes and length, and a logical address consists of a segment number and an offset within the segment. The CPU uses the segment number to locate the segment descriptor (stored in a descriptor table such as the Global Descriptor Table, GDT, or the Local Descriptor Table, LDT), reads the segment's base address from it, and adds the offset to obtain the linear address, which is also the physical address when paging is disabled.

Characteristics

Segment sizes are not fixed; they follow the program's logical structure. For example, the code segment holds program instructions and the data segment holds global variables, and each segment is only as long as its contents require.
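The segment-number-plus-offset lookup fits in a few lines of Python. This is a simplified model, assuming a dictionary stands in for the GDT/LDT and each descriptor holds only a base and a length; real x86 descriptors also carry access rights and granularity bits. `SegmentDescriptor`, `DESCRIPTOR_TABLE`, `segment_translate`, and `GeneralProtectionFault` are hypothetical names, the last standing in for the CPU's protection exception.

```python
from dataclasses import dataclass


class GeneralProtectionFault(Exception):
    """Stand-in for the CPU exception raised when an offset exceeds the segment length."""


@dataclass
class SegmentDescriptor:
    base: int    # address where the segment starts
    length: int  # segment size in bytes (segments may differ in size)


# Hypothetical descriptor table indexed by segment number (plays the role of the GDT/LDT).
DESCRIPTOR_TABLE = {
    0: SegmentDescriptor(base=0x0000_0000, length=0x1000),  # e.g. a small code segment
    1: SegmentDescriptor(base=0x0010_0000, length=0x4000),  # e.g. a larger data segment
}


def segment_translate(segment, offset):
    """Logical address (segment, offset) -> base + offset, after a length check."""
    desc = DESCRIPTOR_TABLE[segment]
    if not 0 <= offset < desc.length:
        raise GeneralProtectionFault(f"offset {offset:#x} outside segment {segment}")
    return desc.base + offset
```

For instance, offset `0x20` in segment 1 lands at `0x100020`, while offset `0x1000` in segment 0 is rejected because that segment is only `0x1000` bytes long.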

2. Paging System

Concept

Paging system: divides physical memory and each process's virtual address space into fixed-size pages (commonly 4KB). A virtual address is split into a page number and an offset within the page. The page table (with multi-level paging, a page directory plus page tables) maps the virtual page number to a physical page number, which is combined with the offset to form the physical address.

Characteristics

Page size is fixed by the hardware, usually 4KB. Because the process address space is divided into equal-size pages, it is easy to manage and map.
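As a sketch of how a virtual address is split under paging, the Python below assumes the classic 32-bit x86 two-level layout with 4KB pages: the top 10 bits index the page directory, the next 10 bits index a page table, and the low 12 bits are the offset. The nested dictionaries and the `split_two_level` and `translate` helpers are illustrative stand-ins, not kernel data structures.

```python
PAGE_SHIFT = 12                 # 4KB pages: 2**12 bytes
PAGE_SIZE = 1 << PAGE_SHIFT


def split_two_level(va):
    """Split a 32-bit virtual address into (directory index, table index, offset)."""
    dir_index = (va >> 22) & 0x3FF             # top 10 bits
    table_index = (va >> PAGE_SHIFT) & 0x3FF   # middle 10 bits
    offset = va & (PAGE_SIZE - 1)              # low 12 bits
    return dir_index, table_index, offset


def translate(va, page_directory):
    """Walk directory -> page table to find the physical page, then re-attach the offset."""
    dir_index, table_index, offset = split_two_level(va)
    page_table = page_directory[dir_index]     # a missing entry here would mean a page fault
    ppn = page_table[table_index]              # physical page number
    return (ppn << PAGE_SHIFT) | offset
```

For example, `0x00403ABC` splits into directory index 1, table index 3, and offset `0xABC`; if that entry maps to physical page `0x1F`, the physical address is `0x1FABC`.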

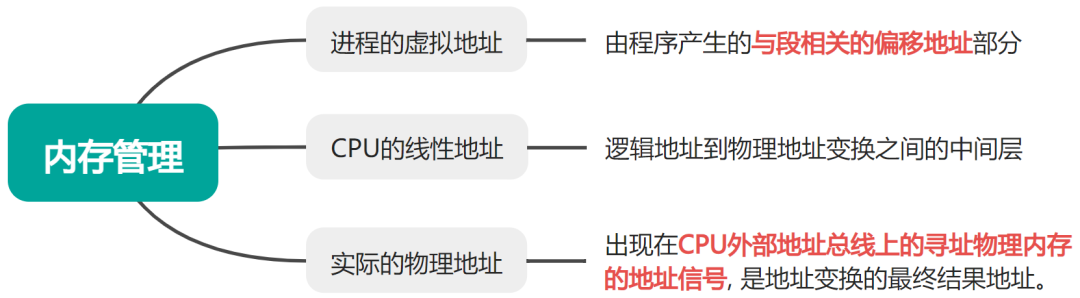

3. Three Types of Addresses

1. Virtual Address (Logical Address)

Refers to the segment-relative offset generated by the program; this article also calls it the logical address.

In Intel protected mode, it is an offset within the limit of the code segment (assuming the code segment and data segment are identical). Application programmers only deal with logical addresses; the segmentation and paging mechanisms are completely transparent to them and concern only system programmers.

2. Linear Address

The linear address is the intermediate layer in the translation from logical address to physical address. Program code generates a logical address (an offset within a segment), and adding the segment's base address yields the linear address.

If paging is enabled, the linear address is translated once more to produce the physical address. If paging is disabled, the linear address is the physical address. The Intel 80386's linear address space is 4GB.
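The three address types can be chained in one small function. This is a simplified Python model, assuming a single-level page table held in a dictionary and a segment base passed in directly rather than read from a descriptor table; `to_physical` is a hypothetical helper. The linear address is masked to 32 bits to reflect the 80386's 4GB linear address space.

```python
PAGE_SIZE = 4096


def to_physical(segment_base, offset, paging_enabled, page_table=None):
    """Logical (segment base + offset) -> linear -> physical."""
    linear = (segment_base + offset) & 0xFFFF_FFFF     # 32-bit linear address space (4GB)
    if not paging_enabled:
        return linear                                  # no paging: linear address is the physical address
    vpn, page_offset = divmod(linear, PAGE_SIZE)       # paging: split into page number and offset
    return page_table[vpn] * PAGE_SIZE + page_offset   # look up the physical page, keep the offset
```

With segment base `0x1000` and offset `0x234`, the linear address is `0x1234`; without paging that is also the physical address, and with a page table mapping page 1 to physical page 7 it becomes `0x7234`.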

3. Physical Address

Refers to the address signal for addressing physical memory that appears on the CPU’s external address bus, which is the final result of the address transformation.

With this groundwork in place, we can now explain virtual memory properly! Are you ready?

4. Virtual Memory

1. Definition:

From a physical perspective:

Virtual memory is an array of N contiguous byte-sized cells stored on disk, whose contents are cached in main memory.

From a logical perspective:

Virtual memory is the product of close cooperation among hardware exceptions, hardware address translation, main memory, disk files, and kernel software, and it decouples each process from its physical memory resources. It provides each process with a large, consistent, and private address space.

2. The Past and Present of VM

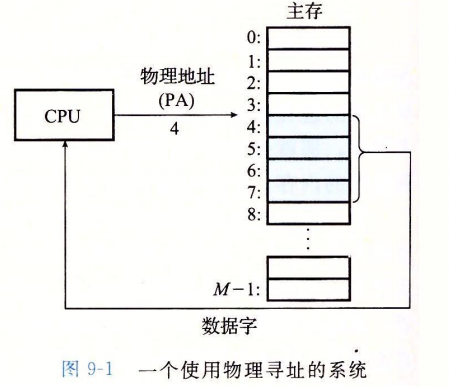

2.1 Physical Memory and Physical Addressing

The main memory of a computer system is also known as physical memory, which is an array composed of M contiguous byte-sized units. Each byte has a unique physical address (PA). The address of the first byte is 0, the next byte’s address is 1, and so on. Given this simple structure, the most natural way for the CPU to access memory is to use physical addresses. We call this method physical addressing.

Example Demonstration

The context of this physical addressing example is a load instruction that reads the 4-byte word starting at physical address 4. When the CPU executes this load instruction, it generates an effective physical address and passes it to main memory over the memory bus. Main memory fetches the 4-byte word starting at physical address 4 and returns it to the CPU, which stores it in a register.
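The load in this example can be simulated in Python by treating main memory as a `bytearray` of M bytes indexed directly by physical address. The little-endian byte order is an assumption (it matches x86), and `load_word` is a hypothetical helper.

```python
def load_word(memory, pa):
    """Read the 4-byte word starting at physical address `pa` (little-endian)."""
    if pa < 0 or pa + 4 > len(memory):
        raise IndexError(f"physical address {pa:#x} out of range")
    return int.from_bytes(memory[pa:pa + 4], "little")


# M = 16 bytes of "main memory"; the byte at physical address i holds the value i.
memory = bytearray(range(16))
register = load_word(memory, 4)   # bytes 4, 5, 6, 7 -> 0x07060504
```

No translation happens anywhere: the address the program uses is exactly the address sent to memory, which is why the limitations below follow.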

2.2 Limitations

① Limited Physical Memory

Physical addressing directly accesses physical memory, and the memory space available to programs is limited by the actual installed physical memory size. If the memory required by a program exceeds the physical memory capacity, the program cannot run properly.

② Difficult Management by the Operating System

The operating system must allocate and manage physical memory for each program, keeping track of the memory areas each program occupies and their states, which makes management considerably harder and more complex. It also has no effective way to deal with memory fragmentation.

Memory fragmentation occurs due to frequent memory allocation and release operations, resulting in both internal fragmentation and external fragmentation. Internal fragmentation refers to allocated memory blocks being larger than the program’s actual needs, with the excess portion being unusable; external fragmentation refers to the presence of many small free blocks in memory that cannot be merged into larger blocks to meet the needs of larger programs.
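Both kinds of fragmentation are easy to quantify. The Python sketch below assumes memory is handed out in 4KB units when measuring internal fragmentation, and models free memory as a list of `(start, length)` holes when checking external fragmentation; `internal_waste` and `fits_contiguously` are hypothetical helpers.

```python
def internal_waste(request, unit=4096):
    """Bytes lost when a request is rounded up to whole allocation units."""
    allocated = (request + unit - 1) // unit * unit
    return allocated - request


def fits_contiguously(free_holes, request):
    """free_holes: list of (start, length). The request needs ONE hole that is large enough."""
    return any(length >= request for _start, length in free_holes)


holes = [(0, 3000), (5000, 3000)]                  # two separate free regions
total_free = sum(length for _start, length in holes)
```

A 5000-byte request rounded up to two 4KB units wastes 3192 bytes internally, and the two 3000-byte holes hold 6000 free bytes in total yet cannot satisfy a single 4000-byte request.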

③ Interference Between Processes

Under physical addressing, programs directly access physical memory, and there is no effective isolation of memory spaces between different processes. One program can arbitrarily access and modify the memory areas of other programs or even the operating system kernel, leading to interference between programs, and in severe cases, causing system crashes.

Faced with so many problems, how can we solve them? The answer is virtual memory! Let’s look at the following explanation:

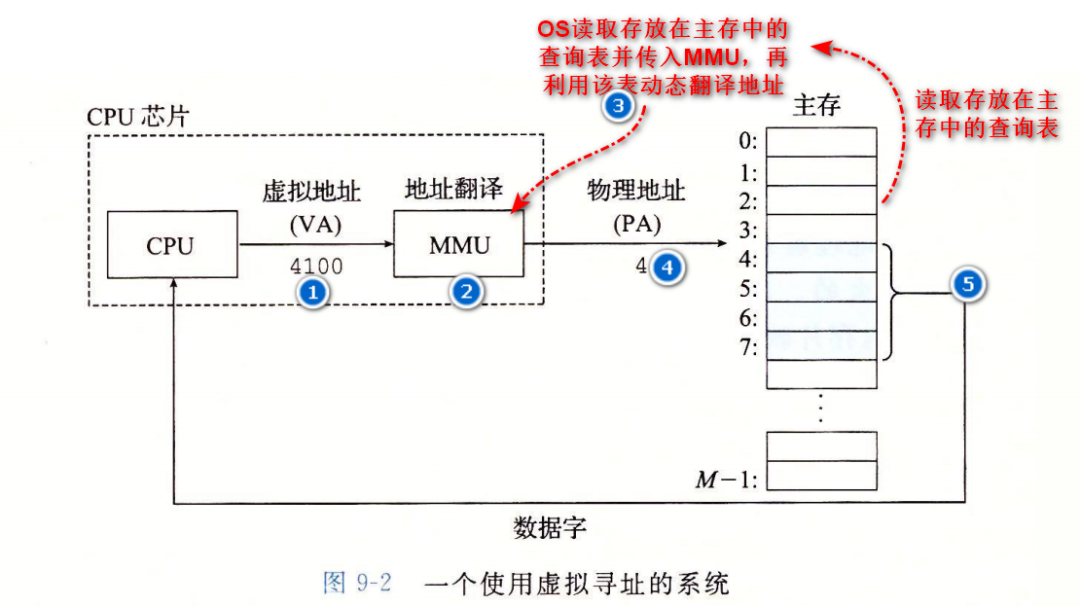

2.3 Virtual Memory and Virtual Addressing

With virtual addressing, the CPU accesses main memory by generating a virtual address (VA), which is converted to the appropriate physical address before being sent to memory. Converting a virtual address to a physical address is called address translation. Like exception handling, address translation requires close cooperation between the CPU hardware and the operating system: dedicated hardware on the CPU chip, the Memory Management Unit (MMU), translates virtual addresses on the fly using a lookup table stored in main memory (the page table), whose contents are managed by the operating system.
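The division of labor described here (the OS writes the page table, the MMU only reads it) can be modeled in Python. This is a sketch assuming a single-level page table stored in a dictionary that maps virtual page numbers to physical page numbers; `MMU`, `translate`, and `PageFault` are hypothetical names.

```python
PAGE_SIZE = 4096


class PageFault(Exception):
    """Raised by the 'hardware' when no mapping exists; the OS would then handle it."""


class MMU:
    def __init__(self, page_table):
        # The page table lives in main memory and is written by the OS; the MMU only reads it.
        self.page_table = page_table

    def translate(self, va):
        vpn, offset = divmod(va, PAGE_SIZE)
        ppn = self.page_table.get(vpn)
        if ppn is None:
            raise PageFault(vpn)
        return ppn * PAGE_SIZE + offset


page_table = {}        # owned and updated by the OS
mmu = MMU(page_table)
page_table[2] = 5      # the OS installs the mapping VP2 -> PP5; the MMU sees it immediately
```

Because the MMU holds a reference to the same table, any mapping the OS installs takes effect on the next translation without touching the MMU object.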

2.4 How VM Solves the Problems of Physical Addressing

① Breaking Through Memory Space Limitations:

Virtual memory utilizes external storage devices such as hard disks as an extension of memory, providing programs with a much larger virtual address space than physical memory. The memory available for programs is no longer limited to the actual physical memory capacity. When a program requires more memory than the physical memory can provide, some temporarily unused data or code will be swapped out to the hard disk’s virtual memory area (commonly referred to as swap space or page file), and when these data or code are needed again, they will be swapped back into physical memory. This way, even with limited physical memory, larger programs can run, greatly expanding the memory space available for programs.

② Reducing Memory Management Complexity

VM is the unified memory management interface that the operating system provides to every process. Therefore, by managing VM, the operating system manages the memory of all processes!

The operating system uses the virtual memory management system to uniformly manage physical memory and virtual memory. The virtual memory management system is responsible for maintaining the page table, handling page swapping in and out, etc., so that programs do not need to worry about the specific allocation and management details of physical memory. Programs only need to perform memory operations within their own virtual address space, and the operating system will automatically convert virtual addresses to physical addresses and handle situations of insufficient memory.

③ Avoiding Interference Between Processes

Each process has its own independent virtual address space, and the operating system manages the mapping of each program’s virtual addresses to physical addresses through the page table. The virtual address spaces of different programs are isolated from each other, so one program cannot directly access another program’s virtual memory area, let alone arbitrarily modify the memory data of other programs or the operating system kernel. Even if a program encounters an error and accesses an illegal virtual address, it will only affect that program itself and will not cause serious impacts on other programs and the operating system. This isolation mechanism greatly enhances the security and stability of the system.
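Isolation follows from giving each process its own page table. In the Python sketch below, which assumes single-level, dictionary-based page tables and uses the hypothetical names `Process` and `SegmentationFault`, two processes use the same virtual address yet reach different physical pages, and an unmapped address faults only in the process that used it.

```python
PAGE_SIZE = 4096


class SegmentationFault(Exception):
    """Illegal access: the address is not mapped in this process's page table."""


class Process:
    def __init__(self, name):
        self.name = name
        self.page_table = {}   # private to this process: virtual page -> physical page

    def translate(self, va):
        vpn, offset = divmod(va, PAGE_SIZE)
        if vpn not in self.page_table:
            raise SegmentationFault(f"{self.name}: no mapping for {va:#x}")
        return self.page_table[vpn] * PAGE_SIZE + offset


a, b = Process("A"), Process("B")
a.page_table[0x10] = 3   # A's virtual page 0x10 lives in physical page 3
b.page_table[0x10] = 8   # B's virtual page 0x10 lives in physical page 8
```

Process A has no entry that points at physical page 8, so there is simply no virtual address it can use to reach B's data.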

3. The Role of Virtual Memory

In fact, this has already been covered: the role of virtual memory is to solve the three major problems of physical addressing. However, there is an even simpler way to put it:

Because virtual memory provides each process with a large, consistent, and private memory space, the role of virtual memory can be summarized as: large, consistent, and private. Once these three adjectives are clarified, the role of VM is also clarified.

5. The Role of Virtual Memory

1. Large – As a Caching Tool

Virtual memory treats main memory as a cache for the virtual address space stored on disk, greatly increasing the memory space actually available to processes. The term large reflects the role of VM as a caching tool.

The specific process analysis is as follows:

1. Page – Data Transmission Unit

1.1 Overview of Pages

When physical memory runs short, code and data in main memory that are not currently in use are swapped out to disk, and when they are needed again, they are swapped back in. So what is the unit of data exchanged between memory and disk, and what is its format?

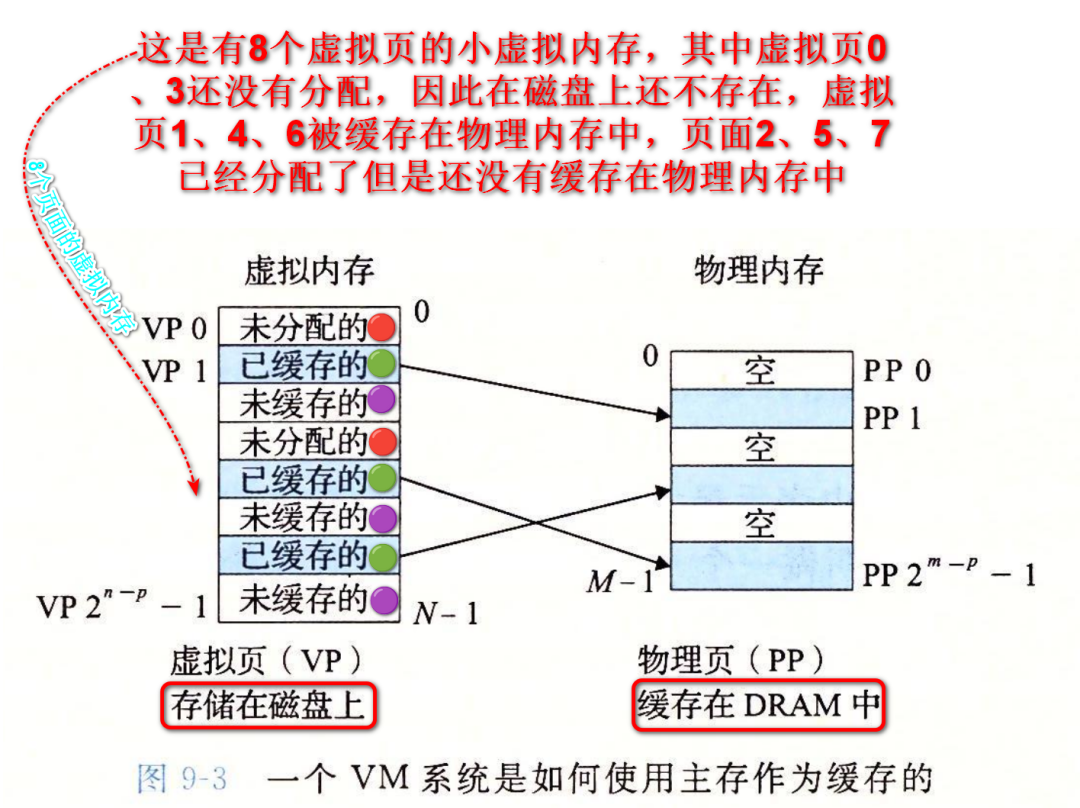

In the virtual memory system, the virtual memory on the disk is divided into fixed-size data blocks called virtual pages (VP). Physical memory is also divided into pages of the same size as virtual pages, referred to as physical pages (PP), also known as page frames. By using pages as the transmission unit for data transfer, the data transfer format between memory and disk is unified.

1.2 Collection of Transmission Units – Three Situations

Depending on how they are being used, at any given time virtual pages fall into the following three categories, also referred to as collections of transmission units:

① Unallocated

Pages that have not yet been allocated (or created) by the VM system. Unallocated pages have no data associated with them and therefore do not occupy any disk space.

② Allocated but Not Cached

Allocated pages that are not currently cached in physical memory (the page's data already exists on disk but has not been loaded into physical memory).

③ Allocated and Cached

Allocated pages that are currently cached in physical memory.

The PTE, covered next, is how the system tells which of these situations a page is in.

1.3 Diagram of Transmission Unit Collection

2. Page Table – Mapping Structure Table from VM to PM

We know that the final data is stored in physical memory, while the process (programmer) faces virtual memory. How is the conversion from VM to PM achieved? The answer is through the page table.

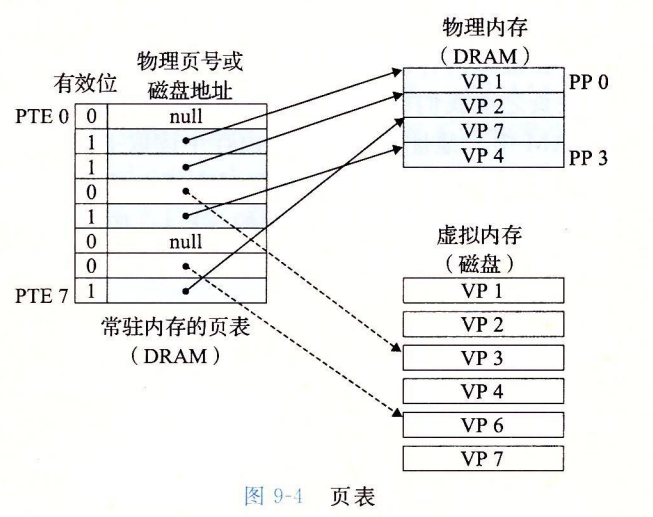

The page table is an important data structure in the operating system kernel, essentially a K-V array, recording the conversion relationship from virtual addresses to physical addresses.

1. Essence of the Page Table – PTE Array

The page table is essentially an array of Page Table Entries (PTEs), with one entry per virtual page recording where that page lives. Therefore, given the page table, the system uses the virtual page number as an index to find the corresponding entry, completing the conversion from VM to PM.

2. PTE Structure

So what does the specific K-V structure look like? Here is a brief formula:

PTE = Valid Bit + Address Field

Valid Bit

The valid bit indicates whether the virtual page is currently cached in DRAM.

Here, a valid bit of 0 means the page is not cached (accessing it causes a page fault), while 1 means it is cached (a page hit).

Address Field

The address field is used to establish the mapping relationship between virtual pages and physical page frames. The address field can have two situations:

- 1. Empty.

This indicates that the virtual page has not yet been cached in physical main memory.

- 2. Not empty.

If the valid bit is 1, the field holds a physical address, meaning the page is cached; if the valid bit is 0, it holds the starting position of the virtual page on disk, meaning the page is allocated but not yet cached.
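One way to see the "valid bit + address field" formula is to pack both into a single integer. The layout below is an assumption loosely modeled on 32-bit x86, where bit 0 is the Present bit and the upper bits hold a page frame number; how a non-present entry records a disk location is OS-specific. The sketch also assumes disk location 0 is never used, so an all-zero entry can mean "empty". `make_pte` and `decode_pte` are hypothetical helpers.

```python
VALID_BIT = 0x1    # bit 0: 1 = page is cached in DRAM (x86 calls this bit "Present")
ADDR_SHIFT = 12    # the address field occupies the upper bits


def make_pte(valid, address):
    """Pack the valid bit and address field into one integer entry."""
    return (address << ADDR_SHIFT) | (VALID_BIT if valid else 0)


def decode_pte(pte):
    """Return (situation, address) following the rules for the valid bit and address field."""
    valid = pte & VALID_BIT
    address = pte >> ADDR_SHIFT
    if valid:
        return ("cached", address)                 # address = physical page number
    if address == 0:
        return ("unallocated", None)               # empty field (assumes disk location 0 is unused)
    return ("allocated, not cached", address)      # address = starting location on disk
```

Because the valid bit is checked first, physical page 0 remains usable for cached pages; only the not-cached case reserves 0 to mean "empty".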

In summary, the function of the PTE is to check whether the virtual page is cached in main memory and find the corresponding PM data page for the VM.

3. Actual Demonstration

In a system with 8 virtual pages and 4 physical pages, the page table shows that four virtual pages (VP1, VP2, VP4, and VP7) are currently cached in DRAM. Two pages (VP0 and VP5) have not yet been allocated, while the remaining pages (VP3 and VP6) have been allocated but are currently not cached.
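The page table in this demonstration can be written out directly. In the Python sketch below, each entry is a `(valid, address)` pair; which physical page each cached virtual page occupies, and the disk block numbers, are made up for illustration, and `state` is a hypothetical helper that applies the PTE rules described above.

```python
# One (valid, address) pair per virtual page VP0..VP7.
# address: physical page number if valid == 1, disk block if valid == 0, None if unallocated.
# The specific PP and disk block numbers are made up for illustration.
page_table = [
    (0, None),  # VP0: unallocated
    (1, 0),     # VP1 -> PP0
    (1, 1),     # VP2 -> PP1
    (0, 100),   # VP3: on disk, not cached
    (1, 3),     # VP4 -> PP3
    (0, None),  # VP5: unallocated
    (0, 101),   # VP6: on disk, not cached
    (1, 2),     # VP7 -> PP2
]
NUM_PHYSICAL_PAGES = 4


def state(entry):
    valid, address = entry
    if address is None:
        return "unallocated"
    return "cached" if valid else "allocated, not cached"


states = {f"VP{i}": state(entry) for i, entry in enumerate(page_table)}
```

Grouping `states` by value reproduces the three sets listed in the demonstration, and the four cached pages use each of the four physical pages exactly once.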

3. Actual VM to PM Conversion Process

1. Generate the VA, Update the Page Table

Virtual Address Format = Virtual Page Number + Address Offset

When we call the malloc function, we get back a virtual address, which is divided into a virtual page number and an address offset. The operating system then updates the page table data structure in memory, establishing the relationship between this virtual page and physical memory. (On Linux, this mapping is typically filled in lazily, on the first access to the page.)

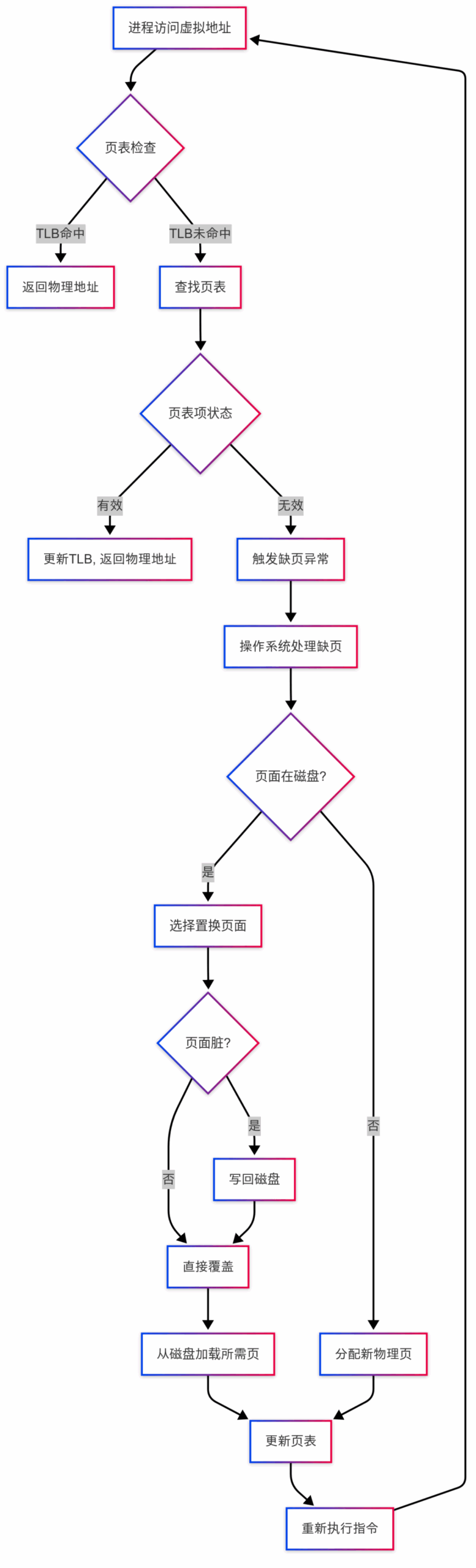

2. MMU Translates to PM

Next, the CPU passes the virtual address to the MMU (Memory Management Unit), dedicated hardware inside the CPU chip, for address translation. The MMU first checks the TLB (Translation Lookaside Buffer) for a cached translation; if none is found, it fetches the PTE from the page table in main memory, using the first part of the virtual address, the virtual page number, as the index. It then examines the PTE's address field and valid bit. If the address field is empty (NULL), the page is unallocated. If the address field is not empty, the valid bit decides: 1 means the field holds a physical address; 0 means it points to the starting address of the virtual page on disk.

TLB: When the processor needs to access memory, it generates a virtual address, and the MMU first queries the TLB for a translation entry for that virtual address (virtual page number, corresponding physical page frame number, and so on). On a TLB hit, the MMU uses the physical page frame number from the TLB directly, converting the virtual address to a physical address quickly and greatly speeding up address translation. On a TLB miss, the MMU must consult the page table in memory to complete the translation, which is considerably slower.
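The hit/miss behavior can be modeled as a small cache placed in front of the page table. This Python sketch assumes a fully associative TLB with LRU replacement and a dictionary page table; the default capacity of 4 entries and the `TLB` class are illustrative assumptions, since real TLB sizes and replacement policies vary by processor.

```python
from collections import OrderedDict

PAGE_SIZE = 4096


class TLB:
    """Tiny fully associative TLB with LRU replacement (capacity must be at least 1)."""

    def __init__(self, page_table, capacity=4):
        self.page_table = page_table    # vpn -> ppn, stands in for the page table in memory
        self.capacity = capacity
        self.entries = OrderedDict()    # cached vpn -> ppn translations, oldest first
        self.hits = 0
        self.misses = 0

    def translate(self, va):
        vpn, offset = divmod(va, PAGE_SIZE)
        if vpn in self.entries:                       # TLB hit: no page-table access needed
            self.hits += 1
            self.entries.move_to_end(vpn)             # mark as most recently used
        else:                                         # TLB miss: consult the page table (slower)
            self.misses += 1
            self.entries[vpn] = self.page_table[vpn]  # a KeyError here would be a page fault
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)      # evict the least recently used entry
        return self.entries[vpn] * PAGE_SIZE + offset
```

Two accesses to the same page cost one page-table lookup; every later access to that page is a hit until the entry is evicted.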

3. If the Valid Bit is 1, Find Physical Memory via Page Table

4. If the Valid Bit is 0, Reload the Virtual Page into Memory

When the valid bit is 0, it indicates that the virtual page is not cached in physical memory, which is a page fault. At this point, the operating system will determine the cause of the page fault. Is it due to not being loaded into memory or a permission issue? If it is determined that the page has not been loaded into memory, the operating system will find an available physical page frame in physical memory (if none is found, it will use page replacement algorithms to swap a page out to disk to free up space). Then the operating system will swap the missing virtual page from external devices such as the disk into physical memory, update the page table entry, set the valid bit to 1, and fill in the correct physical page frame.

Then the CPU retries the instruction that caused the page fault. This time the page is already in physical memory, so the translation succeeds!
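The fault-handling steps above can be sketched as a small simulation. This Python model assumes FIFO page replacement (real kernels use more elaborate approximations of LRU), always writes a victim page back to disk instead of tracking dirty pages, and treats an access to an unallocated page as an error; `PagingSystem`, `load`, and `_handle_fault` are hypothetical names.

```python
from collections import deque

PAGE_SIZE = 4096


class PagingSystem:
    """Demand paging with FIFO replacement over a fixed number of physical frames."""

    def __init__(self, num_frames, disk):
        self.disk = disk                        # vpn -> page contents (the swap area)
        self.memory = [None] * num_frames       # physical page frames
        self.page_table = {}                    # vpn -> [valid bit, physical frame]
        self.free_frames = list(range(num_frames))
        self.fifo = deque()                     # resident vpns in the order they were loaded
        self.faults = 0

    def _handle_fault(self, vpn):
        self.faults += 1
        if vpn not in self.disk:
            raise MemoryError(f"VP{vpn} is unallocated")   # not a missing page: illegal access
        if self.free_frames:
            ppn = self.free_frames.pop()                   # a free frame is available
        else:
            victim = self.fifo.popleft()                   # no free frame: pick a victim page
            ppn = self.page_table[victim][1]
            self.disk[victim] = self.memory[ppn]           # swap the victim out to disk
            self.page_table[victim] = [0, None]
        self.memory[ppn] = self.disk[vpn]                  # swap the missing page in
        self.page_table[vpn] = [1, ppn]                    # valid bit = 1, record the frame
        self.fifo.append(vpn)

    def load(self, va):
        vpn, offset = divmod(va, PAGE_SIZE)
        entry = self.page_table.get(vpn)
        if entry is None or entry[0] == 0:                 # valid bit 0: page fault
            self._handle_fault(vpn)
            entry = self.page_table[vpn]                   # retry now that the page is resident
        return self.memory[entry[1]][offset]
```

With two frames and three pages, touching VP0, VP1, and VP2 forces VP0 out; touching VP0 again faults once more and evicts VP1.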

Complete Workflow of VM

This is the complete workflow diagram:

About Xiaokang

😉 Hehe, I am Xiaokang, a programmer who loves Linux and low-level development. You are welcome to learn and exchange ideas with me!
