Published in 1968, “Do Androids Dream of Electric Sheep?” boldly imagined androids with human traits, able to think, sleep, and dream, and prompted reflection on the relationship between cold machines and life.[1] Today, that cyberpunk world seems less distant. Chip architectures increasingly mimic biological brains and are beginning to acquire sensing capabilities analogous to the five senses, along with other human-like characteristics. This is the neuromorphic chip, which for certain workloads can be more than a thousand times as energy efficient as existing CPUs or GPUs.[2] Neuromorphic computing is still largely in the research phase, but ongoing industrialization efforts suggest that the field is about to produce its first generation of pioneers.[3]

From Biological Brain to Chip

Neuromorphic computing, also known as brain-inspired computing, refers to architectures modeled on the structure of biological neurons and the way they process information. It is an advanced form of computing that breaks away from the traditional von Neumann architecture, and chips designed on this basis are called neuromorphic chips.

In simple terms, the idea is to build a brain-like structure into a chip. Although the term may sound obscure, artificial intelligence (AI) technologies that also draw inspiration from the human brain are already used in countless households.[4] Neuromorphic chips go further: their architecture itself is much closer to that of the human brain.

A Type of Brain-Inspired Chip

Neuromorphic chips are one type of brain-inspired chip; the broader field is known as brain-inspired computing. Academia and industry have not yet agreed on a precise definition or scope for brain-inspired chips. Broadly, they fall into two groups:

- Neuromorphic chips are based on spiking neural networks (SNNs). They approach the biological brain at the structural level, designing chip architectures around models of human neurons and their organization.
- Deep learning processors are based on artificial or deep neural networks (ANNs/DNNs). They do not follow neuronal organization and are instead built around mature cognitive computing algorithms.[5]

Put simply, deep learning processors perform dimensionality reduction, converting multi-dimensional problems into one-dimensional information flows. Neuromorphic chips perform dimensionality expansion: they use multi-dimensional spatiotemporal transformations to get closer to the way the human brain thinks, and so achieve better energy efficiency and computing performance.[6]
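The difference between the two neuron models can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions:

- The ANN neuron uses a ReLU nonlinearity.
- The spiking neuron is a discrete-time leaky integrate-and-fire (LIF) model. This is one common, simplified SNN neuron, not the model of any specific chip.
- The parameters `tau` and `v_th` are illustrative.

The ANN neuron produces one number per input. The LIF neuron carries state over time and emits a binary spike only when its membrane potential crosses a threshold.

```python
import math


def ann_neuron(inputs, weights, bias=0.0):
    """ANN/DNN-style neuron: one weighted sum followed by a static ReLU."""
    s = sum(x * w for x, w in zip(inputs, weights)) + bias
    return max(0.0, s)


def lif_neuron(input_current, tau=20.0, dt=1.0, v_th=1.0, v_reset=0.0):
    """Discrete-time leaky integrate-and-fire neuron.

    input_current: sequence of input values, one per time step.
    Returns a list of 0/1 spikes, one per time step.
    """
    decay = math.exp(-dt / tau)   # fraction of membrane potential kept each step (leak)
    v = v_reset
    spikes = []
    for i_t in input_current:
        v = v * decay + i_t        # leak, then integrate this step's input
        if v >= v_th:              # threshold crossed: emit a spike...
            spikes.append(1)
            v = v_reset            # ...and reset the membrane potential
        else:
            spikes.append(0)
    return spikes
```

With almost no leak (`tau=1e9`), a constant input of 0.3 reaches threshold every fourth step. With a strong leak (`tau=1.0`), the same input never fires, because the potential settles below threshold. This shows how leak lets a spiking neuron ignore weak, sustained inputs.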

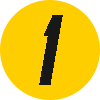

Comparison of von Neumann architecture and neuromorphic architecture, image source: Nature Computational Science[7]

Deep learning processors form a separate sub-industry. As early as 2012, the Institute of Computing Technology of the Chinese Academy of Sciences developed the world’s first chip architecture supporting deep neural network processing, known as Cambricon.[8] Current players include Mythic, Graphcore, Gyrfalcon Technology, Groq, HAILO, Greenwaves, Google, Horizon, Cambricon, and others.

The two directions are neither independent nor mutually exclusive; they intersect and are converging. For tasks at which deep learning excels, such as simulating human vision or natural language interaction, deep learning networks will continue to be used. For tasks less suited to deep learning, such as olfaction, robotic control, multimodal processing, and even cross-modal storage, neuromorphic chips with new architectures will be adopted.

Research and industry are gradually blurring the boundary between the two. In practice, though, many papers, reports, and articles that mention brain-inspired chips are specifically referring to neuromorphic chips. This article uses the more precise term: neuromorphic chips.
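One concrete point where the two directions meet is ANN-to-SNN conversion. In this widely studied technique, a trained ANN is run as an SNN, and each ReLU activation is represented by a firing rate. The sketch below shows the core observation under simple assumptions: a non-leaky integrate-and-fire neuron, constant input, reset by subtraction, and a threshold of 1.0. Under these assumptions, the spike rate approximates ReLU of the input for inputs between 0 and the threshold. It illustrates the principle only; it is not the conversion method of any particular chip or toolchain.

```python
def if_spike_count(activation, steps=100, v_th=1.0):
    """Spike count of a non-leaky integrate-and-fire neuron driven by a constant input.

    Uses 'reset by subtraction' so charge above the threshold is carried over.
    """
    v = 0.0
    count = 0
    for _ in range(steps):
        v += activation          # integrate the (constant) input
        if v >= v_th:
            count += 1           # emit at most one spike per step
            v -= v_th            # keep the residual charge
    return count


def rate_coded(activation, steps=100, v_th=1.0):
    """Firing rate in spikes per step; approximates ReLU(activation) for 0 <= activation <= v_th."""
    return if_spike_count(activation, steps, v_th) / steps
```

Longer simulation windows (`steps`) give finer rate resolution at the cost of latency. Inputs above the threshold saturate at one spike per step. Both are standard trade-offs of rate coding.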

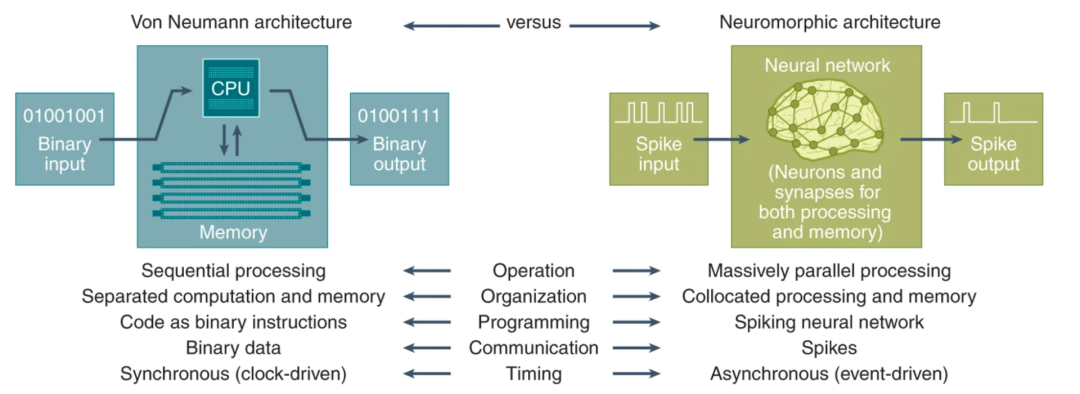

Characteristics of Two Major Platforms in Brain-Inspired Computing[9]

Characteristics of Biological Brains

Biological brains support functions such as perception, movement, thinking, and intelligence. Because of their complexity, however, our understanding of the brain remains very limited. This creates challenges for neuromorphic chips in cognitive principles, hardware implementation, intelligent algorithms, and brain integration.[10]

Current research shows that in biological brains, neurons transmit and modulate signals through structures such as dendrites and synapses, and they communicate with one another through pulse (spike) signals. The structure and function of a single neuron are not complex. Large-scale networks of neurons interconnected by synapses, however, can achieve a wide range of complex learning and cognitive functions.[11] Biological brains also differ fundamentally from mainstream chip architectures:

- The information processing structure of neurons and synapses is not only more efficient but also massively parallel;[12]

- The von Neumann architecture of traditional computing systems separates computation from storage. Biological brains integrate storage and processing and have no separate memory. Nor do they have dynamic random-access memory, cache hierarchies, or shared memory;[13] (see Guokr Hard Technology’s article “Storage-Compute Integrated Chips, Potential Stocks in the AI Era”)

- Biological memory is not static. It includes long-term memory, formed through frequent repetition, and short-term memory, which is quickly forgotten. The transition between the two is reflected in synaptic changes, that is, in the shift between short-term and long-term plasticity;[14]

- Computers primarily process digital signals, whereas biological brains use mixed signals: digital-like spikes for rapid internal communication, and more effective analog chemical processes for processing within neurons and synapses.[15]
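The third point above describes short-term changes that either fade or are consolidated into long-term ones. This can be illustrated with a deliberately simple toy model. It is a hypothetical sketch, not a standard biophysical plasticity model. The class name `ToySynapse` and all of its parameters (`stp_tau`, `consolidation_threshold`, `consolidation_rate`, `w_max`) are assumptions chosen for illustration. A single input spike produces a brief boost that decays away. Repeated spikes push the short-term component above a threshold, and part of it is transferred into a persistent long-term weight.

```python
import math


class ToySynapse:
    """Hypothetical two-component synapse: a fast-decaying short-term part plus a persistent long-term part."""

    def __init__(self, w_long=0.1, stp_increment=0.2, stp_tau=5.0,
                 consolidation_threshold=0.5, consolidation_rate=0.1, w_max=1.0):
        self.w_long = w_long                    # long-term weight ("memory" that persists)
        self.w_short = 0.0                      # short-term facilitation, decays every step
        self.stp_increment = stp_increment
        self.decay = math.exp(-1.0 / stp_tau)   # per-step decay factor of the short-term part
        self.threshold = consolidation_threshold
        self.rate = consolidation_rate
        self.w_max = w_max                      # cap so the long-term weight stays bounded

    def step(self, pre_spike):
        """Advance one time step; pre_spike is True if the presynaptic neuron fired."""
        self.w_short *= self.decay              # the short-term trace fades over time
        if pre_spike:
            self.w_short += self.stp_increment  # each spike gives a brief boost
        if self.w_short > self.threshold:       # sustained repetition...
            # ...moves part of the short-term trace into the long-term weight
            self.w_long = min(self.w_max, self.w_long + self.rate * self.w_short)
        return self.w_long + self.w_short       # effective synaptic weight
```

After one spike followed by silence, the long-term weight is unchanged and the short-term boost vanishes. After twenty consecutive spikes, the long-term weight grows and remains after the input stops.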

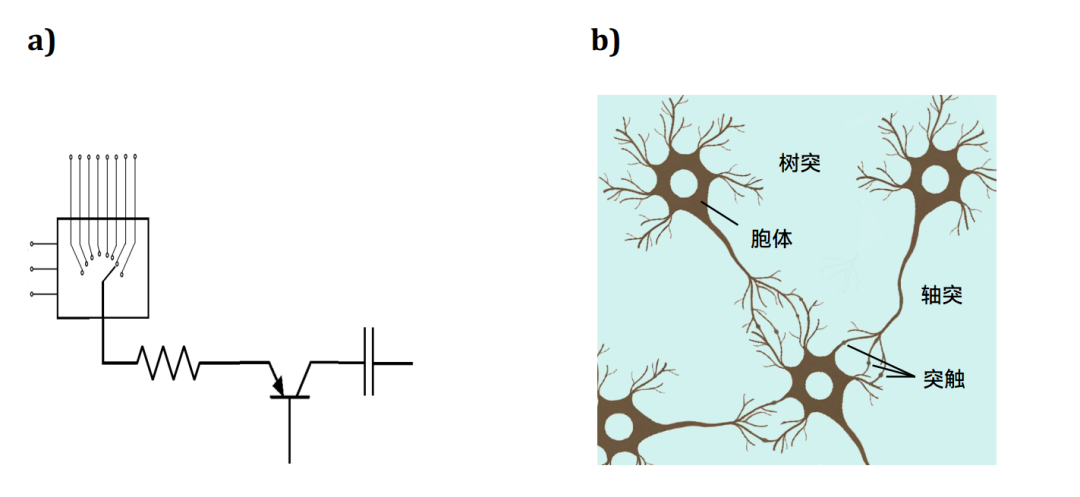

Comparison of Traditional Circuit Structure (left) and Brain Structure (right)[16]

This is not to say that artificial devices have no advantages. If CMOS were used to build devices on the same scale as a biological brain, each would excel in different areas. Neuromorphic chips will ultimately be designed and manufactured to combine the strengths of both biological brains and artificial devices.

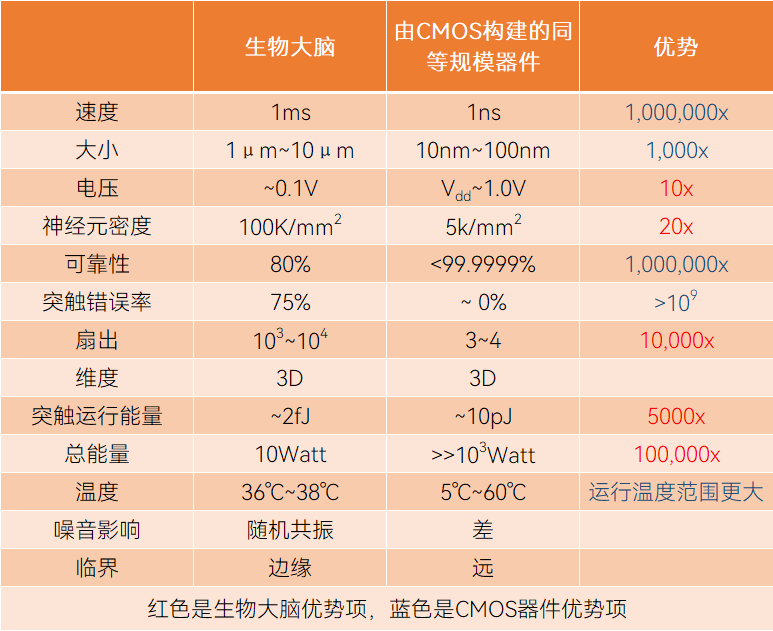

Biological Brain Compared to Equivalent Scale CMOS Devices[16]

Three Main Implementation Forms

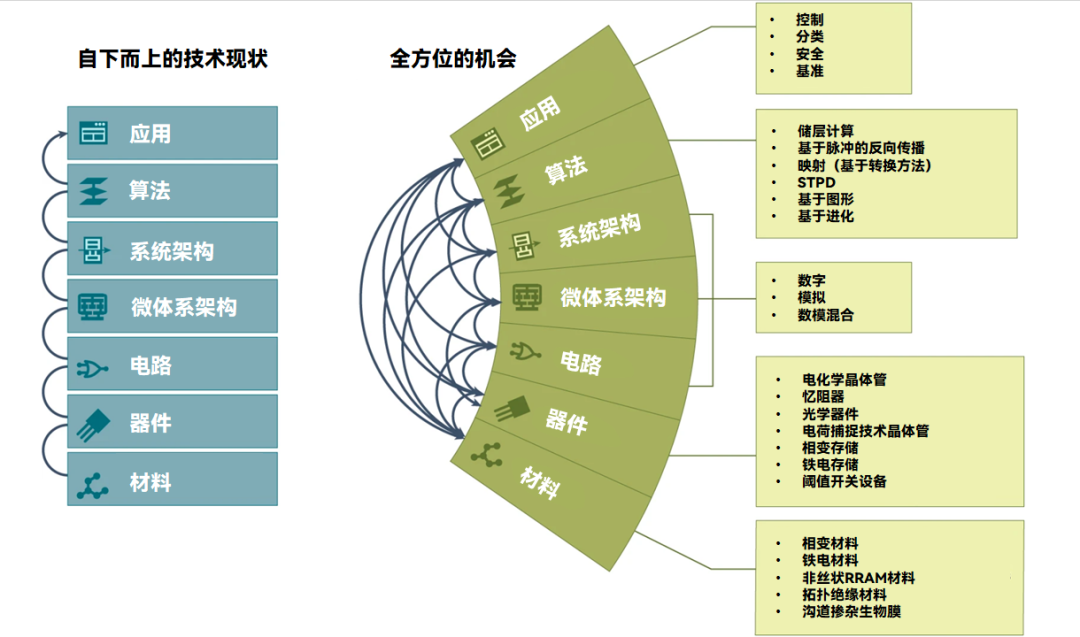

Research on modeling the nervous system as an equivalent electrical circuit began as early as 1952.[17] In the 1980s, Carver Mead of Caltech, a pioneer of very-large-scale integration (VLSI), drew on this work and coined the term “neuromorphic” to describe devices and systems that mimic certain functions of biological nervous systems.[18] Scientists including Mead have spent more than 40 years on this technology. Their ultimate goal is to emulate human sensory and processing mechanisms such as touch, vision, hearing, and thought. Today, the neuromorphic chip industry has begun to take shape.

An ideal neuromorphic chip draws on the convergence of multiple disciplines:

- biomimetic materials;
- neurons and synapses built at the device level;
- neural network connectivity at the circuit level;
- brain-like thinking at the algorithm level.[19]

Neuromorphic chips span a wide range of materials, devices, and processes. Advances in materials, devices, circuits, and architectures will drive algorithms and applications from the bottom up.[7]

Fields and Opportunities Involved in Neuromorphic Chips, image source: Nature Computational Science, modified[7]
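For reference, the 1952 equivalent-circuit model cited above [17] is the Hodgkin-Huxley model. It treats the neuron membrane as a capacitor in parallel with three conductances: a voltage-dependent sodium conductance, a voltage-dependent potassium conductance, and a leak conductance. In its standard form:

$$
C_m \frac{dV}{dt} = I_{\mathrm{ext}} - \bar{g}_{\mathrm{Na}}\, m^3 h \,(V - E_{\mathrm{Na}}) - \bar{g}_{\mathrm{K}}\, n^4 \,(V - E_{\mathrm{K}}) - g_L \,(V - E_L)
$$

$$
\frac{dx}{dt} = \alpha_x(V)\,(1 - x) - \beta_x(V)\,x, \qquad x \in \{m, h, n\}
$$

The symbols are as follows:

- $V$ is the membrane potential and $C_m$ the membrane capacitance.
- $\bar{g}$ are the maximal conductances and $E$ the reversal potentials.
- $m$, $h$, $n$ are gating variables with voltage-dependent rates $\alpha_x$ and $\beta_x$.

Many neuromorphic chips implement much simpler models. The leaky integrate-and-fire neuron, for instance, keeps only the capacitor and leak terms (with $\tau_m = C_m / g_L$ and $R = 1/g_L$). It replaces detailed spike generation with a threshold and a reset:

$$
\tau_m \frac{dV}{dt} = -(V - E_L) + R\, I_{\mathrm{ext}}, \qquad V \ge V_{\mathrm{th}} \;\Rightarrow\; V \leftarrow V_{\mathrm{reset}}
$$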

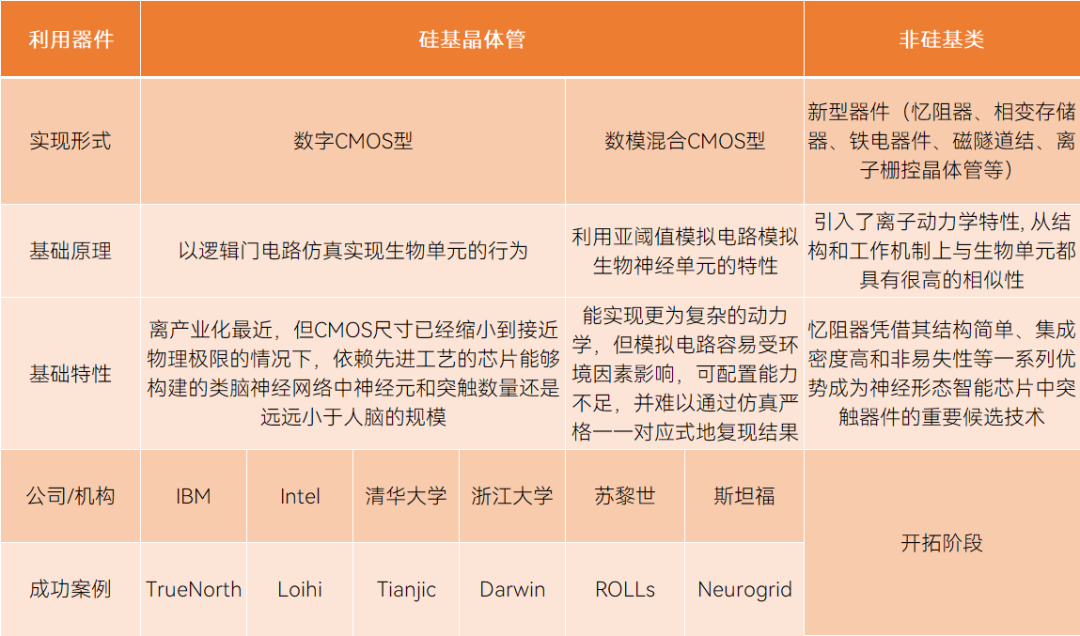

So far, neuromorphic chips share a broadly similar construction with three parts: neuron computation, synaptic weight storage, and routing communication. They also adopt the spiking neural network (SNN) model.[9] Depending on their materials, devices, and circuits, however, they fall into three schools:

- analog-dominated neuromorphic systems (mixed-signal CMOS);
- fully digital neural systems (digital CMOS);
- mixed-signal neuromorphic systems based on new devices (memristors are a candidate technology).

The two CMOS-based approaches can keep using existing manufacturing technologies to build artificial neurons and their connections. However, emulating a single neuron or synapse requires a circuit module made of multiple CMOS devices, which limits integration density, power consumption, and the accuracy of functional emulation. New devices take a biomimetic approach and emulate neurons and synapses directly at the device level. They show significant advantages in power consumption and learning performance, but they are still at the exploratory stage.[12]

Of the three, digital CMOS is currently the easiest to industrialize. Its technology and manufacturing are mature, and it avoids some of the concerns and limitations of analog circuits.

Three Implementation Forms of Neuromorphic Chips, table compiled by Guokr Hard Technology
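The three building blocks named above are neuron computation, synaptic weight storage, and routing communication. They can be illustrated with a toy multi-core simulator. This is a hypothetical sketch, not any chip’s design:

- `Core` and `run_tick` are illustrative names.
- The neurons are simple non-leaky integrate-and-fire units.
- Spikes travel between cores as address events, that is, `(core_id, neuron_id)` tuples looked up in a routing table. This is loosely in the spirit of the address-event representation (AER) commonly used for spike communication.

Only spike addresses cross core boundaries; weights and membrane potentials stay local to each core.

```python
from collections import defaultdict


class Core:
    """Toy core holding neuron state and local synaptic weights."""

    def __init__(self, core_id, n_neurons, v_th=1.0):
        self.core_id = core_id
        self.v = [0.0] * n_neurons          # neuron computation: membrane potentials
        self.weights = defaultdict(dict)    # synaptic storage: source address -> {target neuron: weight}
        self.v_th = v_th

    def connect(self, src_address, target, weight):
        self.weights[src_address][target] = weight

    def receive(self, src_address):
        # integrate one incoming address event into every local neuron it connects to
        for target, w in self.weights.get(src_address, {}).items():
            self.v[target] += w

    def fire(self):
        # emit an address event for every neuron at or above threshold, then reset it
        events = []
        for i, v in enumerate(self.v):
            if v >= self.v_th:
                events.append((self.core_id, i))
                self.v[i] = 0.0
        return events


def run_tick(cores, routing_table, events):
    """Deliver events to destination cores via the routing table, then collect new spikes."""
    for event in events:
        for dst in routing_table.get(event, []):   # routing communication
            cores[dst].receive(event)
    new_events = []
    for core in cores.values():
        new_events.extend(core.fire())
    return new_events
```

For example, an external input routed to core 0 can make neuron 0 there fire. That spike is then routed to core 1 and can trigger a neuron there on the next tick.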

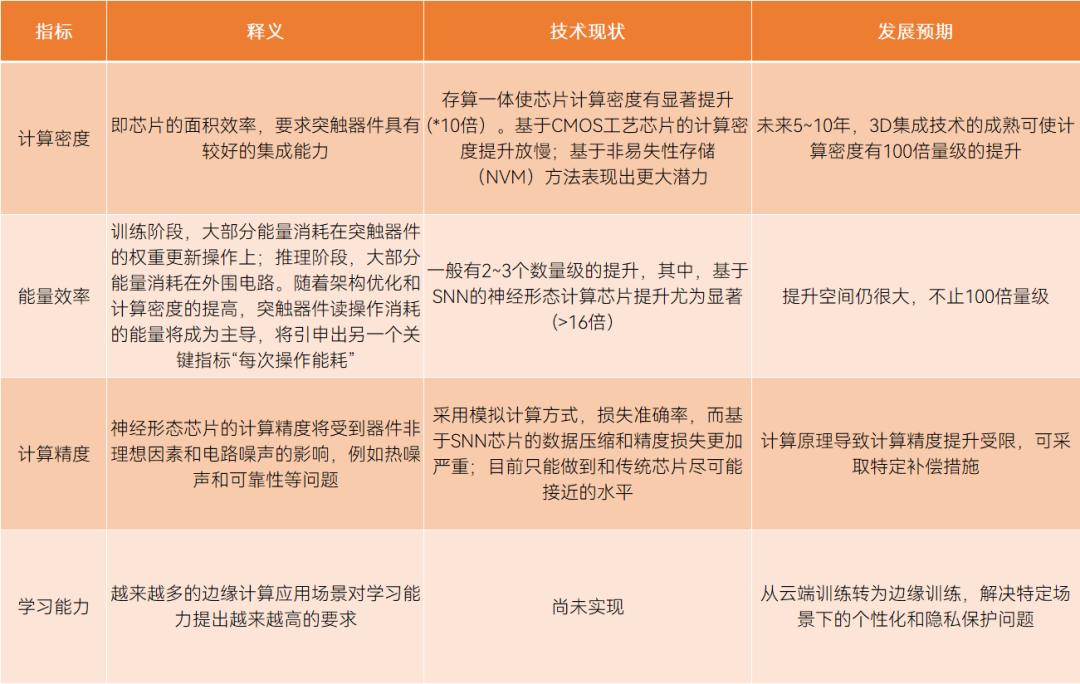

How is the quality of a neuromorphic chip measured? Its competitiveness is evaluated mainly on four indicators: computing density, energy efficiency, computing accuracy, and learning ability.[20]

Four Key Indicators and Current Status of Neuromorphic Chips, table compiled by Guokr Hard Technology

Source: Nature Electronics[20], “New Economic Guide”[21]
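Two of these four indicators, energy efficiency and computing density, are usually reported as simple ratios. The sketch below shows the arithmetic under common conventions:

- synaptic operations per second (SOPS) per watt;
- energy per synaptic operation, in picojoules;
- throughput per square millimeter.

Exact definitions vary between papers, for example in what counts as one synaptic operation. The example inputs are purely illustrative, not measurements of any chip. Computing accuracy and learning ability depend on the task and do not reduce to a formula like this.

```python
def energy_efficiency_sops_per_w(throughput_sops, power_w):
    """Synaptic operations per second delivered per watt (higher is better)."""
    return throughput_sops / power_w


def energy_per_sop_pj(throughput_sops, power_w):
    """Average energy per synaptic operation in picojoules (lower is better)."""
    return power_w / throughput_sops * 1e12


def computing_density_sops_per_mm2(throughput_sops, area_mm2):
    """Throughput per unit of silicon area (higher is better)."""
    return throughput_sops / area_mm2


# Purely illustrative inputs, not data for a real chip
throughput = 2e11   # 200 billion synaptic operations per second
power = 0.5         # watts
area = 100.0        # square millimeters

print(energy_efficiency_sops_per_w(throughput, power))    # 4e11 SOPS/W
print(energy_per_sop_pj(throughput, power))               # about 2.5 pJ per operation
print(computing_density_sops_per_mm2(throughput, area))   # 2e9 SOPS/mm^2
```

Energy per operation is simply the reciprocal of SOPS per watt, scaled to picojoules. Papers may quote either form.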

Addressing Urgent Industry Needs

Why develop neuromorphic chips? Their commercial value lies in their ability to keep learning on their own with low power consumption and minimal training data. Ideally, for the same AI task, a neuromorphic chip consumes over a thousand times less energy than a traditional CPU or GPU.

In the digital age, computers have grown rapidly faster, even defeating world champions at chess, which is why they are nicknamed “electronic brains” in Chinese. Yet their energy efficiency and intelligence still fall far short of biological brains.[22]

- AlphaZero is a behemoth built on 5,000 Google TPUs, each consuming as much as 200 W.[23]
- IBM once simulated a cat-scale cortical model (about one percent of the human brain) on a Blue Gene/P supercomputer. The simulation required nearly 150,000 CPUs and 144 TB of main memory and consumed 1.4 MW.[24]
- By contrast, the human brain has about 85 billion neurons connected by roughly 10¹⁵ synapses. It can perform on the order of a quintillion operations per second, yet consumes only about 20 W for everyday tasks.[25]

Meanwhile, a two-year-old child can effortlessly recognize familiar people across many angles, distances, and lighting conditions. That is an intelligence far beyond any existing computing system.[22]

By mimicking the structure of biological brains, neuromorphic chips inherit much of this energy efficiency. Their event-triggered computation mechanism performs no computation when no new dynamic information arrives.
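The event-triggered mechanism can be illustrated by counting operations. In the hypothetical sketch below, a frame-based (clock-driven) pipeline multiplies every input into every output on every frame. An event-driven pipeline does work only for inputs whose value changed since the previous frame, similar in spirit to how event cameras report changes. The function names and the change-based event definition are assumptions for illustration. The sketch counts operations only; it does not model energy.

```python
def clock_driven_ops(frames, n_outputs):
    """Dense processing: every input contributes to every output on every frame."""
    ops = 0
    for frame in frames:
        ops += len(frame) * n_outputs
    return ops


def event_driven_ops(frames, n_outputs, threshold=0.0):
    """Event-driven processing: only inputs that changed since the last frame trigger work."""
    ops = 0
    prev = None
    for frame in frames:
        if prev is None:
            # first frame: treat every nonzero input as an event
            events = sum(1 for x in frame if abs(x) > threshold)
        else:
            # later frames: an event is an input whose value changed
            events = sum(1 for a, b in zip(frame, prev) if abs(a - b) > threshold)
        ops += events * n_outputs
        prev = frame
    return ops
```

For a static scene, the event-driven count stays at zero however many frames arrive. The clock-driven count grows with every frame.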
They also excel at complex spatiotemporal sequence analysis. Each neuron operates at a low rate, but brain-like mechanisms allow large-scale parallel computation, so these chips can respond much faster than existing solutions.

Neuromorphic chips could therefore help address three major problems facing the industry:

- the sheer scale of data;
- increasingly diverse forms of digital data, much of which can no longer be edited or processed manually and requires intelligent handling;
- growing demand for low latency, where traditional single-architecture computing runs into performance and power bottlenecks.

Neuromorphic chips also fit the concept of green computing. Computing power has become an economic indicator alongside electricity, and energy-hungry computing cannot keep scaling, so energy-optimized approaches offer the best way forward. Data centers are estimated to consume about 200 terawatt-hours (TWh) of electricity a year, comparable to the annual consumption of some countries.[26]

Path to Large-Scale Commercialization

Despite their many advantages, neuromorphic chips excel only in specific fields and will not replace traditional computing platforms. Traditional digital chips such as CPUs and GPUs excel at precise calculation. Neuromorphic chips excel at:

- unstructured data;
- image recognition;
- classification of noisy and uncertain datasets;
- new learning systems and reasoning systems.

The same logic applies to quantum computing, which can disrupt specific areas of computation but cannot operate independently of existing computing systems. Future advanced computing systems will inevitably require traditional digital chips, neuromorphic chips, and quantum computers to work together.[27]

Currently, neuromorphic chips are difficult to design and manufacture, and a large-scale market has not yet formed. The industry also broadly agrees that investment in neuromorphic chips lags far behind investment in artificial intelligence or quantum technology.[27] A single small chip draws on semiconductor manufacturing, brain science, computational neuroscience, cognitive science, and statistical physics.[28] Building one involves physicists, chemists, engineers, computer scientists, biologists, and neuroscientists. Getting so many roles to work on the same task and speak the same language is undoubtedly challenging.

Even so, the technology’s disruptive potential has accelerated commercialization worldwide. According to MarketsandMarkets, the neuromorphic chip market is expected to grow from $22.743 million in 2021 to $550 million by 2026, a compound annual growth rate of 89.1%.[29] If fundamental technical issues are resolved in the coming years, the global neuromorphic chip market could reach $22 billion by 2035, or 18% of the overall artificial intelligence market.[30] So what issues must be addressed to enable large-scale commercialization?

- First, design: the brain processes complex information in real time while consuming very little energy. Understanding this efficient mechanism and applying it to chips is challenging. Take the digital CMOS type, which has only recently been commercialized. When multiple fully digital, asynchronous chips are interconnected, several things become significant hurdles: the effectiveness and timeliness of the chip-to-chip links, software-level interconnect computation, distributed computing, and flexible partitioning;

- Second, manufacturing: silicon-based transistor routes can reuse existing manufacturing technologies. Non-silicon routes must solve problems with underlying processes, manufacturing yield, and support for large-scale production. Even once these are solved and experimental chips are produced, a stable product-level supply must still be ensured;

- Third, software and ecosystem: neuromorphic chips differ entirely from existing architectures. Many developers in the community build their spiking neural network algorithms from the ground up and load their software onto the hardware for experiments through low-level libraries. This clearly does not scale, so software toolchains are crucial for large-scale commercialization;

- Fourth, the lack of killer applications: whether in robotics, autonomous driving, or large-scale industrial optimization, technology development should be application-driven, with ecosystems built up around those applications. The prevailing view is that neuromorphic technology will first find applications in consumer electronics, mobile devices, and the industrial IoT.
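Part of what a neuromorphic toolchain must do, as the design and software points above suggest, is partition a network and map it onto fixed-size cores. The sketch below is a hypothetical, deliberately naive greedy mapper. It splits each layer into chunks that fit one core and never mixes layers within a core. The core capacity of 256 neurons and the layer sizes are illustrative assumptions. Real toolchains must also respect synapse memory, fan-in and fan-out limits, and routing bandwidth, all of which this sketch ignores.

```python
def map_layers_to_cores(layer_sizes, neurons_per_core):
    """Greedily split each layer into core-sized chunks.

    Returns one (layer_index, first_neuron, end_neuron_exclusive) tuple per core.
    Layers are never mixed inside a core: simple, but it can leave cores partly empty.
    """
    placement = []
    for layer, size in enumerate(layer_sizes):
        for start in range(0, size, neurons_per_core):
            placement.append((layer, start, min(start + neurons_per_core, size)))
    return placement


def utilization(layer_sizes, placement, neurons_per_core):
    """Fraction of allocated neuron slots that are actually used."""
    return sum(layer_sizes) / (len(placement) * neurons_per_core)


layers = [300, 100, 10]          # illustrative network: neurons per layer
plan = map_layers_to_cores(layers, neurons_per_core=256)
for core_id, (layer, lo, hi) in enumerate(plan):
    print(f"core {core_id}: layer {layer}, neurons {lo}..{hi - 1}")
print(f"utilization: {utilization(layers, plan, 256):.1%}")
```

With these inputs, the plan uses four cores at 40.0% utilization. This is a small example of why smarter partitioning and packing matter for real toolchains.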

Players in Neuromorphic Chips

Globally, institutions developing neuromorphic computing chips fall mainly into three categories: tech giants such as Intel, IBM, and Qualcomm; universities and research institutions, represented by Stanford and Tsinghua; and startups.[31]

Statistics of Neuromorphic Computing Players, image source: Zhiyuan[32]

International Development Status

Internationally, research on neuromorphic chips is extensive, with notable institutions including MIT, Stanford University, Boston University, the University of Manchester, and Heidelberg University. Tech giants such as Intel, IBM, Qualcomm, and Samsung are leading the way. Startups include BrainChip, aiCTX, Numenta, General Vision, Applied Brain Research, and Brain Corporation.[32] In terms of research and implementation, Intel’s and IBM’s experimental chips are the most representative.

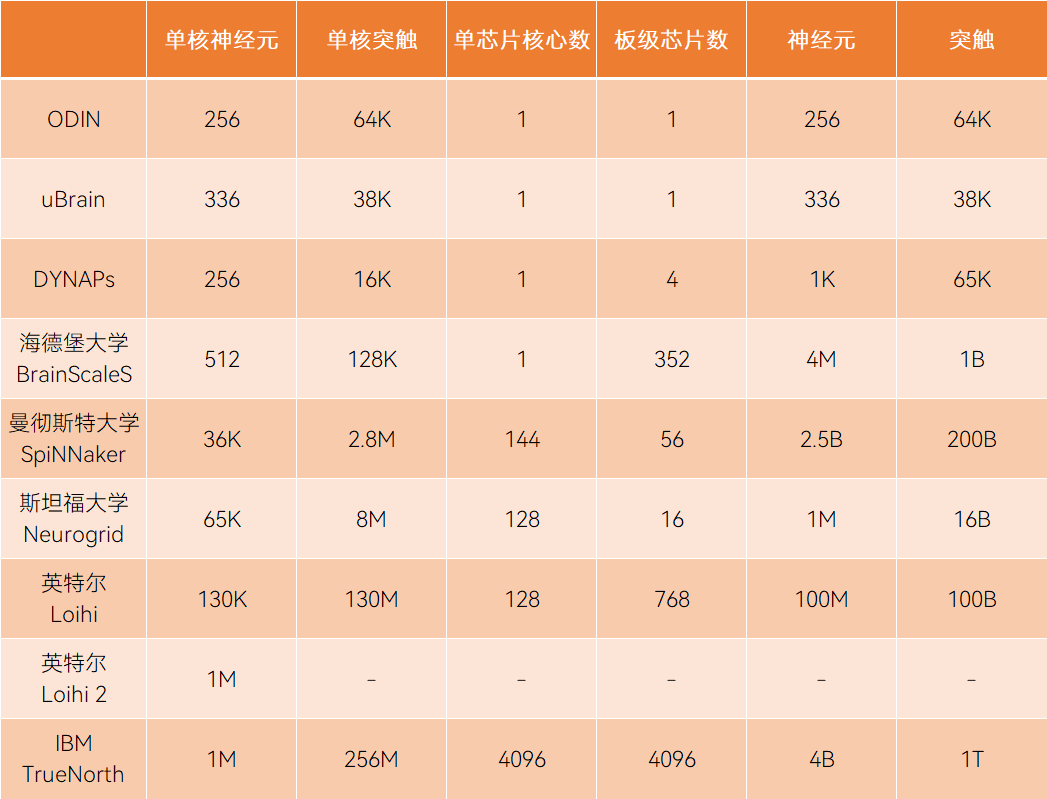

Detailed parameter comparison of currently known neuromorphic chips, table compiled by Guokr Hard Technology

Reference: IEEE[33]

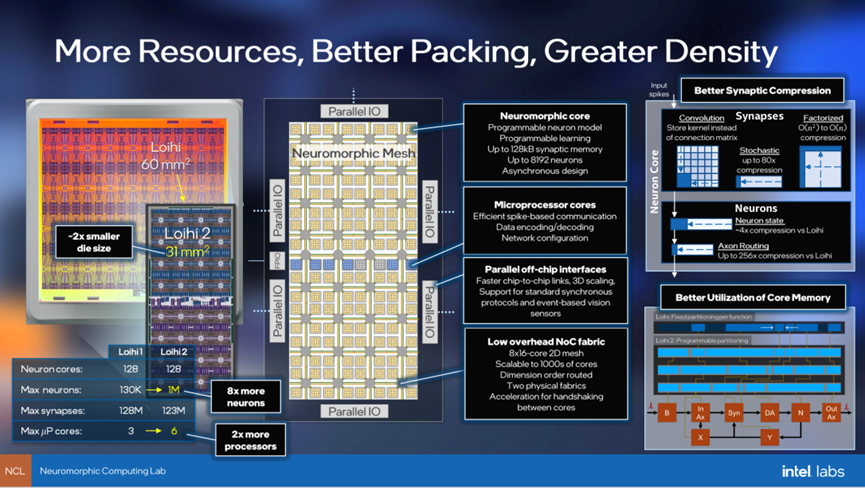

Intel’s Loihi is a fully digital neuromorphic chip. Intel developed the first Loihi in 2017. In 2021 it launched the second-generation Loihi 2, built on the Intel 4 process, with up to 1 million neurons per chip.

Intel’s neuromorphic chips have made significant progress in perception, including gesture recognition, visual reasoning, and odor recognition. In odor recognition, learning reportedly required up to 3,000 times less training data than a deep learning approach. Intel has also built a data center rack system called Pohoiki Springs, which packs 768 Loihi chips into a chassis the size of five standard servers.

To make neuromorphic chips easier to use, Intel has also released Lava, an open-source software framework. Lava lets developers build applications without specialized hardware and run them across heterogeneous architectures that combine conventional and neuromorphic processors. Researchers and application developers can therefore build on one another’s results.[34]

Intel is in no hurry to commercialize neuromorphic chips. Unlike small companies that focus on specific applications, it views neuromorphic computing as a general-purpose technology and will pursue only commercial opportunities worth more than one billion dollars.

Overview of Intel’s Loihi and Loihi 2

TrueNorth is IBM’s experimental chip, developed over nearly a decade with funding from a US DARPA program since 2008. IBM launched the first-generation TrueNorth in 2011. The second generation followed in 2014:

- the neuron count rose from 256 to 1 million;
- the number of programmable synapses rose from 262,144 to 256 million;
- the chip performs 46 billion synaptic operations per second per watt;
- total power consumption is 70 mW, a power density of 20 mW per square centimeter;
- its overall volume is only one-fifteenth that of the first-generation chip.[5]

Notably, in 2019 IBM also launched a neuromorphic supercomputer called Blue Raven. It can process 64 million neurons and 16 billion synapses while consuming only 40 W, about as much as a household light bulb.[35]
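The second-generation numbers above follow from TrueNorth’s core organization as publicly described. There are 4,096 neurosynaptic cores, each with 256 neurons and a 256 × 256 binary crossbar. That gives 4,096 × 256 = 1,048,576 neurons and 4,096 × 65,536 = 268,435,456 synapses, the “1 million” and “256 million” quoted in binary units.

The sketch below is a simplified, hypothetical model of one crossbar tick that loosely follows those descriptions:

- A binary crossbar records which axon reaches which neuron.
- Each axon has a small type index.
- Each neuron holds one signed weight per axon type.

Stochastic modes, configurable leak and reset behavior, and other details of the real neuron model are omitted. The function name and parameters are illustrative.

```python
def crossbar_core_tick(axon_spikes, crossbar, axon_types, type_weights, v, v_th, leak=0.0):
    """Advance a simplified binary-crossbar core by one tick.

    axon_spikes  -- list of 0/1 input spikes, one per axon
    crossbar     -- crossbar[a][n] == 1 if axon a connects to neuron n
    axon_types   -- axon_types[a] is the type index of axon a
    type_weights -- type_weights[n][t] is neuron n's signed weight for axon type t
    v            -- membrane potentials, updated in place
    Returns a list of 0/1 output spikes, one per neuron.
    """
    for a, spike in enumerate(axon_spikes):
        if not spike:
            continue                              # silent axons cost no work
        t = axon_types[a]
        for n, connected in enumerate(crossbar[a]):
            if connected:
                v[n] += type_weights[n][t]        # integrate the neuron's weight for this axon type
    out = []
    for n in range(len(v)):
        v[n] = max(0.0, v[n] - leak)              # constant leak, floored at zero (simplification)
        if v[n] >= v_th:
            out.append(1)
            v[n] = 0.0                            # reset after a spike
        else:
            out.append(0)
    return out
```

Storing one bit per synapse plus a few weights per neuron, rather than a full-precision weight per synapse, is one way such designs keep synaptic memory small.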

Domestic Development Status

Domestic research includes top institutions such as Tsinghua University, Zhejiang University, Fudan University, and the Chinese Academy of Sciences. Numerous startups have also emerged in recent years, such as Lingxi Technology, Shishi Technology, and Zhongke Neuromorphic. Among them, Tsinghua University’s Tianji (Tianjic) chip and Zhejiang University’s Darwin chip are the most representative.

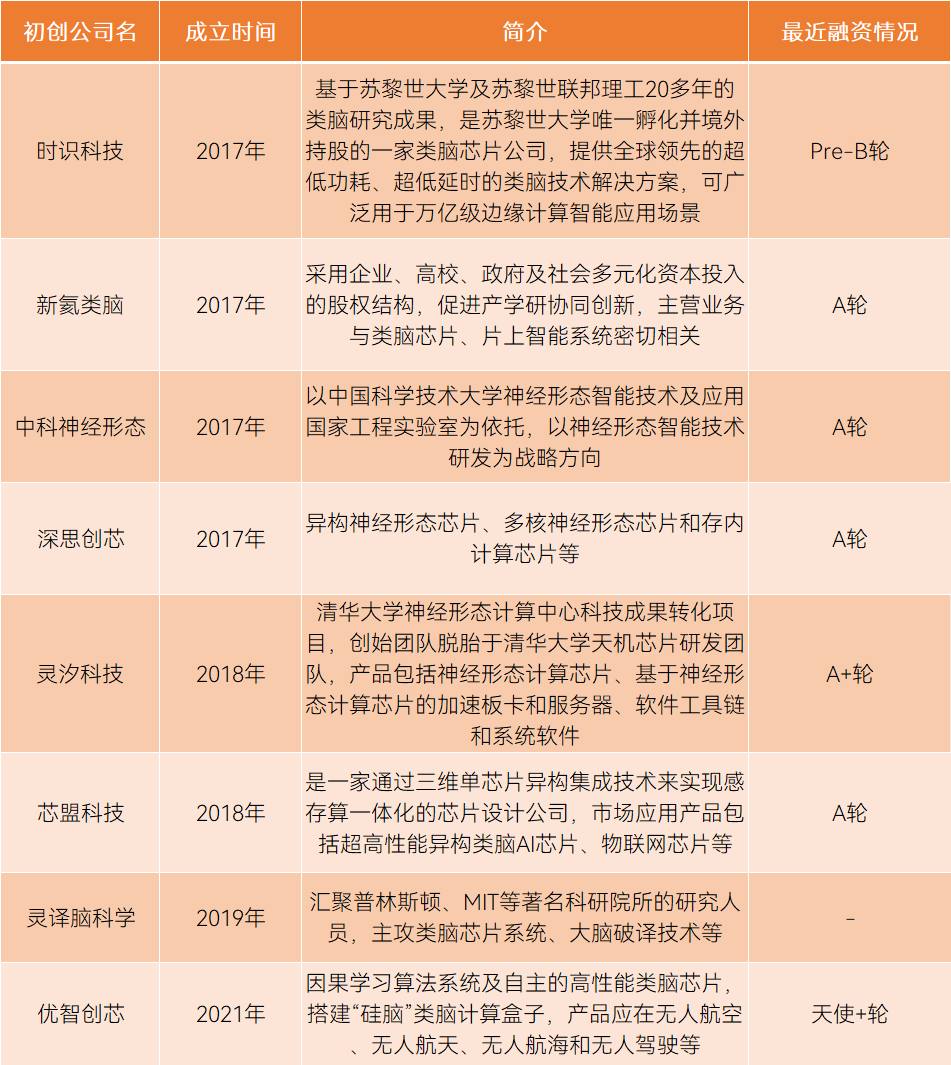

Statistics of Domestic Neuromorphic Chip Startups, table compiled by Guokr Hard Technology

Source: Company websites, “New Economic Guide”[21], Quantum Bit[36]

Tsinghua University’s neuromorphic chip is the most representative experimental chip in China. The first-generation Tianji chip, developed in 2015 on a 110 nm process, was only a small-scale prototype. The second-generation Tianji chip of 2017 delivered more advanced results. Built on a 28 nm process, it consists of 156 functional cores (FCores) containing about 40,000 neurons and 10 million synapses. Compared with the first generation, density increased by 20%, speed improved by at least 10 times, and bandwidth increased by at least 100 times.[37]

To make the chip more practical, Tsinghua University also developed its own software toolchain, which supports automatic mapping and compilation from deep learning frameworks to the Tianji chip. According to Tsinghua University’s plans, the next-generation Tianji chip will use a 14 nm or more advanced process and offer even more powerful functions.[38]

Another representative effort is the brain-like computer jointly developed by Zhejiang University and Zhejiang Lab, whose neuron count is comparable to that of a mouse brain. It contains 792 second-generation Darwin chips and supports 120 million spiking neurons and 7.2 billion synapses. Typical operating power consumption is only 350 W to 500 W.[39]

In fact, China’s technological strength in neuromorphic chips is already among the best in the world, and players worldwide are still near the starting line in this field. It is a technology worth investing in, but also a tough nut to crack. It is a vast field in which materials, devices, processes, architectures, and algorithms are all indispensable, and in which researchers must feel their way in the dark. It will also inevitably face questions about application scenarios and cost-effectiveness. But that is how markets work: those who dare to take on uncharted territory can be the first to reap the rewards.

References:

[1] Philip K. Dick. Do Androids Dream of Electric Sheep?[M]. Doubleday, 1968. [First Edition].

[2] Davies M, Srinivasa N, Lin T H, et al. Loihi: A neuromorphic manycore processor with on-chip learning[J]. IEEE Micro, 2018, 38(1): 82-99. https://doi.org/10.1109/mm.2018.112130359

[3] Hello Zhangjiang: Shishi Technology’s Qiao Ning: Renaming Brain-like Intelligence, Becoming the “Pioneer”. 2021.11.9. https://mp.weixin.qq.com/s/IIxy8E9tfqt6XHk_bfFidg

[4] Arrow: Neuromorphic Computing and Artificial Intelligence: Neuromorphic Computing Chips and AI Hardware. 2020.4.16. https://www.arrow.com/zh-cn/research-and-events/articles/neuromorphic-computing-chips-and-ai-hardware

[5] Tao Jianhua, Chen Yunjie. Development Status and Reflections on Brain-like Computing Chips and Brain-like Intelligent Robots[J]. Bulletin of the Chinese Academy of Sciences, 2016, 31(07): 803-811.

[6] Xinhua News Agency: China’s Brain-like Computing Pioneer Shi Leping: Exploring the “No-Man’s Land” of AI. 2021.9.26. https://www.tsinghua.edu.cn/info/1662/87321.htm

[7] Schuman C D, Kulkarni S R, Parsa M, et al. Opportunities for neuromorphic computing algorithms and applications[J]. Nature Computational Science, 2022, 2(1): 10-19. https://doi.org/10.1038/s43588-021-00184-y

[8] Chen Y, Luo T, Liu S, et al. DaDianNao: A machine-learning supercomputer[C]//2014 47th Annual IEEE/ACM International Symposium on Microarchitecture. IEEE, 2014: 609-622. https://doi.org/10.1109/micro.2014.58

[9] Deng Lei. Research on the Computing Model and Key Technologies of Heterogeneous Fusion Brain-like Computing Platforms[D]. Tsinghua University, 2017.

[10] Li F, He Y, Xue Q. Progress, challenges and countermeasures of adaptive learning[J]. Educational Technology & Society, 2021, 24(3): 238-255. https://www.jstor.org/stable/27032868

[11] Deng Yabin, Wang Zhiwei, Zhao Chenhui, Li Lin, He Shan, Li Qiuhong, Shuai Jianwei, Guo Donghui. Overview of Brain-like Neural Networks and Neuromorphic Devices and Their Circuits[J]. Computer Applications Research, 2021, 38(08): 2241-2250.

[12] Wang Zongwei, Yang Yuchao, Cai Yimao, Zhu Tao, Cong Yang, Wang Zhiheng, Huang Ru. Intelligent Chips and Device Technologies for Neuromorphic Computing[J]. China Science Foundation, 2019, 33(06): 656-662.

[13] Wan C, Cai P, Wang M, et al. Artificial sensory memory[J]. Advanced Materials, 2020, 32(15): 1902434. https://doi.org/10.1002/adma.201902434

[14] Wu Y, Wang X, Lu W. Dynamic resistive switching devices for neuromorphic computing[J]. Semiconductor Science and Technology, 2021. https://doi.org/10.1088/1361-6641/ac41e4

[15] Sterling P, Laughlin S. Principles of neural design[M]. MIT Press, 2015.

[16] Schuller I K, Stevens R, Pino R, et al. Neuromorphic computing: from materials research to systems architecture roundtable[R]. USDOE Office of Science (SC)(United States), 2015. https://doi.org/10.2172/1283147

[17] Hodgkin A L, Huxley A F. A quantitative description of membrane current and its application to conduction and excitation in nerve[J]. The Journal of Physiology, 1952, 117(4): 500. https://doi.org/10.1113/jphysiol.1952.sp004764

[18] Mead C. Adaptive retina[M]//Analog VLSI implementation of neural systems. Springer, Boston, MA, 1989: 239-246. https://doi.org/10.1007/978-1-4613-1639-8_10

[19] Wu Chaohui. Brain-like Computing: Building an “Artificial Super Brain”[N]. People’s Daily, 2022-1-11. http://ent.people.com.cn/n1/2022/0111/c1012-32328291.html

[20] Zhang W, Gao B, Tang J, et al. Neuro-inspired computing chips[J]. Nature Electronics, 2020, 3(7): 371-382. https://doi.org/10.1038/s41928-020-0435-7

[21] Liu Xing, Li Xingyu. Analysis of the Industrialization Development Prospects of Neuromorphic Computing Chips[J]. New Economic Guide, 2021, (03): 31-34.

[22] Shi Hanmin. The Wisdom and Wonder of Science: Numerical Mysticism and Facing Complexity[M]. Higher Education Press, 2010: 103-105.

[23] Furber S. Large-scale neuromorphic computing systems[J]. Journal of Neural Engineering, 2016, 13(5): 051001. https://doi.org/10.1088/1741-2560/13/5/051001

[24] Ananthanarayanan R, Esser S K, Simon H D, et al. The cat is out of the bag: cortical simulations with 10⁹ neurons, 10¹³ synapses[C]//Proceedings of the Conference on High Performance Computing Networking, Storage and Analysis. 2009: 1-12. https://doi.org/10.1145/1654059.1654124

[25] Wu K J. Google’s new AI is a master of games, but how does it compare to the human mind[J]. Smithsonian, 2018, 10.

[26] Jones N. How to stop data centres from gobbling up the world’s electricity[J]. Nature, 2018, 561(7722): 163-167. https://doi.org/10.1038/d41586-018-06610-y

[27] Mehonic A, Kenyon A J. Brain-inspired computing needs a master plan[J]. Nature, 2022, 604(7905): 255-260. https://doi.org/10.1038/s41586-021-04362-w

[28] Zhiyuan Community: A Summary of the Past and Present of Brain-like Computing | Researcher Li Guoqi from the Institute of Automation, Chinese Academy of Sciences. 2022.5.7. https://mp.weixin.qq.com/s/9-FW5ZxJt4fthNhQI10qHA

[29] MarketsandMarkets: Neuromorphic Computing Market by Offering, Deployment, Application (Image Recognition, Signal Recognition, Data Mining), Vertical (Aerospace, Military, & Defense, Automotive, Medical) and Geography (2021-2026). https://www.marketsandmarkets.com/Market-Reports/neuromorphic-chip-market-227703024.html

[30] Yole: Neuromorphic Computing and Sensing. 2021.5. https://s3.i-micronews.com/uploads/2021/05/Yole-D%C3%A9veloppement-Neuromorphic-Computing-and-Sensing-2021-Sample.pdf

[31] Shi Leping, Pei Jing, Zhao Rong. Neuromorphic Computing Aimed at Artificial General Intelligence[J]. Artificial Intelligence, 2020, 4(01): 6-15.

[32] Zhiyuan: Deep Dive into Global Biomimetic Chip Plans! 15+ Companies Have Joined, The Path to Artificial Brain Future Computing. 2020.4.26. https://mp.weixin.qq.com/s/VW21Q7IZOiSX9lCmw2jEFA

[33] Paul A, Song S, Das A. Design technology co-optimization for neuromorphic computing[C]//2021 12th International Green and Sustainable Computing Conference (IGSC). IEEE, 2021: 1-6. https://doi.org/10.1109/igsc54211.2021.9651556

[34] ZhiIN: Intel Launches Loihi 2 and New Lava Software Framework, Collaborating with More Partners to Promote Further Development of Neuromorphic Computing. 2021.10.1. https://mp.weixin.qq.com/s/gDdTwxqmhj7wudEgqCib-g

[35] AFRL: World’s largest Neuromorphic digital synaptic supercomputer. https://afresearchlab.com/technology/artificial-intelligence/worlds-largest-neuromorphic-digital-synaptic-super-computer/

[36] Quantum Bit: Startups Raising Hundreds of Millions, Why Is This “Artificial Super Brain” Track Not a Gimmick? 2022.8.18. https://mp.weixin.qq.com/s/sXNK2piMFa3QI17DijNcJg

[37] Tsinghua University: Tsinghua University’s Brain-like Computing Center Team Led by Professor Shi Leping Published a Cover Article in “Nature”. 2019.8.1. https://www.tsinghua.edu.cn/info/1181/34641.htm

[38] Tsinghua University: Tsinghua University’s General Artificial Intelligence Chip “Tianji Chip” Featured on the Cover of “Nature”: Seeking “Tianji” with “Brain-like”. 2019.8.12. https://www.tsinghua.edu.cn/info/1182/50148.htm

[39] Xinhua News: The Largest Neuron-Scale Brain-like Computer Is Born. 2020.9.11. http://www.xinhuanet.com/science/2020-09/11/c_139360379.htm
