Author | kkknei

Source | Lei Technology Digital 3C Group

For reprints, please contact the original source

In 2010, Apple turned in its first result on “in-house development” in semiconductors: the Apple A4, the SoC that powered the iPhone 4 with strong performance.

Apple has been on the path of “in-house development” ever since.

Mark Gurman, a technology reporter at Bloomberg, revealed in the latest issue of the “Power On” newsletter that Apple is planning to develop its own camera sensors to increase its control over imaging capabilities. The in-house developed camera sensors are expected to be used in iPhones, virtual reality devices, and autonomous vehicles.

It is well known that almost every generation of iPhone since the first has used a “custom” camera sensor, which helped it lead the smartphone market during its first decade. In recent years, however, the iPhone’s camera hardware has lagged far behind the Android camp.

“Exclusive customization” has given Apple more say over the iPhone’s imaging system, but this “obsession” has also left consumers disappointed with real-world image quality. Can Apple’s plan to develop its own camera sensors really bring the iPhone back to the pinnacle of smartphone imaging?

Apple is Forced to Develop Its Own Camera Sensors

Whenever Apple starts exploring an in-house development project, the motive basically comes down to “money”.

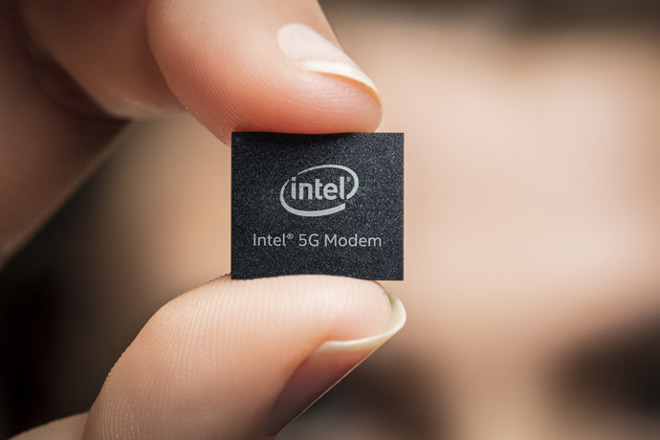

For example, during its patent dispute with Qualcomm, Apple sided with Intel. Starting with the iPhone 8 series, iPhones shipped with Intel-developed 4G modems, the most capable being the XMM 7660 in the iPhone 11 series. Even so, real-world performance was less than satisfactory.

The dispute taught Apple a lesson: to avoid being at a supplier’s mercy, it must “develop in-house”. But in-house development takes more than talk. Apple absorbed Intel’s mobile business team several years ago, yet the in-house modem project has made no progress.

Similarly, Apple’s in-house development of display panels has faced challenges.

Since the full-screen era began, Apple’s panel suppliers have mainly been Samsung and LG, and Samsung in particular has taken over 90% of the orders for the “Pro” series iPhones. That level of dependence is unacceptable to Apple in terms of both strategy and pricing. The reality, however, is that Samsung has been working on OLED for decades, so Apple’s only option is to invest in next-generation panel technology and try to leapfrog it, to “overtake on the curve”.

Unfortunately, Apple’s in-house development of MicroLED panels has not gone smoothly either, with reports indicating that Apple has paused the development of medium-sized panels pending technological breakthroughs.

In imaging, the collaboration between Sony and Apple began with the iPhone 4S, and almost every subsequent generation of iPhone has used custom camera sensors from Sony. This year, Apple faces its biggest imaging demand yet: virtual reality.

The Apple Vision Pro, Apple’s first virtual reality device, is equipped with 12 cameras, 6 microphones, and 5 sensors. Among them, Sony supplies 4 IMX416 sensors for imaging and 1 IMX418 sensor for body behavior recognition. This shows that even in virtual reality, Apple still relies heavily on Sony for imaging hardware.

Of course, relying too heavily on a single hardware vendor undermines Apple’s “price control”, which has pushed the overall price of the Apple Vision Pro to 25,000 RMB. Such a steep price makes it hard for the product to reach ordinary households, which in turn slows Apple’s push into new fields. By developing its own camera sensors, Apple would, on the one hand, gain more say and be able to define its own hardware requirements; on the other hand, it could produce at lower cost and control prices more precisely.

For “Apple fans”, whether Apple can gain control or manage prices is not important; what matters is whether the self-developed camera sensors can enhance the imaging capabilities of the iPhone.

Can Decoupling from Sony Save iPhone Photography?

Since the iPhone 4S, Apple has worked with Sony on exclusively customized camera sensors for every generation of iPhone, but in the era of computational photography, Apple no longer pursues hardware as aggressively as it once did.

In 2013, the iPhone 5S debuted Sony’s first stacked sensor, a derivative of the Sony IMX145. Although the resolution stayed at 8 megapixels, the stacked construction, which places the pixel section and the circuit section on separate chips, significantly improved image quality over the previous generation. The following year, Sony added phase-detection autofocus to this sensor, improving its focusing speed and success rate across a range of scenes.

Apart from steadily adding new technologies, the iPhone rarely gets large-scale upgrades in sensor hardware. For example, Apple used the Sony IMX145 and its derivatives for three generations, covering three models. It was not until last year, after a span of 8 years, that the iPhone finally moved forward in pixel count and sensor size.

Apple seems to care less about iterating on specifications than about meeting specific technical needs. The iPhone 14 Pro and Pro Max were the first to use the Sony IMX803 sensor, which has 48 megapixels and a 1/1.3-inch format. With this generation, the iPhone finally adopted a Quad Bayer pixel arrangement with four-in-one output, giving a combined unit pixel size of 2.44 µm.
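The four-in-one arithmetic can be sketched in a few lines of Python. This is a minimal illustration, assuming plain 2×2 binning in which four same-color pixels merge into one; the function name `quad_bayer_binning` and its parameters are hypothetical, and the native pixel pitch is derived from the 2.44 µm figure quoted here rather than taken from a Sony datasheet.

```python
def quad_bayer_binning(native_megapixels, binned_pitch_um, factor=2):
    """Return (binned megapixels, native pixel pitch in µm).

    Assumes factor x factor binning: each output pixel merges
    factor**2 native pixels, so its side length is factor times
    the native pitch.
    """
    binned_megapixels = native_megapixels / (factor * factor)
    native_pitch_um = binned_pitch_um / factor
    return binned_megapixels, native_pitch_um


# Figures from the article: 48 MP sensor, 2.44 µm after four-in-one output.
binned_mp, native_pitch = quad_bayer_binning(48, 2.44)
print(f"{binned_mp:.0f} MP binned output, native pixel pitch {native_pitch:.2f} µm")
# prints "12 MP binned output, native pixel pitch 1.22 µm"
```

Under these assumptions, the 48-megapixel sensor produces 12-megapixel images by default, and the 2.44 µm figure describes the merged pixel rather than each individual photosite.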

Looking at the Android camp, Samsung has already entered the 200-megapixel era, while manufacturers like Xiaomi and Vivo have adopted 1-inch sensors comparable to those in compact cameras. In terms of hardware alone, the iPhone’s “exclusive customization” offers no advantage.

Currently, the biggest issues with iPhone imaging remain limited tonal range, poor image quality, and insufficient zoom capability. In addition, because computational photography is involved, the iPhone also produces various odd artifacts during pixel synthesis. The strangest issue is the ghosting caused by the sapphire glass, though that problem is not entirely Apple’s fault.

On the iPhone 15 Pro Max, Apple showed off its camera module design skills. The new 5x optical zoom folds the light path through four reflections to form an image in limited space, and relies solely on sensor-shift stabilization to counter shake. This upgrades zoom capability without making the module protrude further or altering the design language. However, because it was made to avoid changing the iPhone’s overall camera-bump design language, this compromise has not brought any breakthrough in imaging.

Ultimately, Apple cannot yet design sensors itself, so however much it revises the lens group design, it cannot achieve the results it wants. Once Apple develops its own camera sensors, the range of things it controls will grow significantly, giving designers more room to work. Although it is uncertain whether the iPhone’s imaging will improve once Apple develops its own sensors, at least future iPhones will not have to make such “awkward” compromises.

Betting on In-House Development, Apple Takes a Different Path

Looking back at Apple’s history, it is easy to see that every “in-house developed” product it launches has a huge impact on the market.

After the A4 chip in the iPhone 4 won unanimous praise from the market, Apple launched the more heavily customized Apple A5 chip in the iPhone 4S in 2011. Thanks to the reputation built by the previous generation and the appeal of this in-house chip, iPhone 4S sales rose substantially. Statistics show that cumulative sales of the iPhone 4S reached 72 million units, while the previous-generation iPhone 4 sold 39 million units.

In 2020, after Apple released the Apple M1, the first desktop-class Apple Silicon chip, the Mac product line also saw significant sales growth. In 2021, MacBook market share reached a striking 10%, up 26% year over year.

An IBM executive said in an interview that Apple has saved at least $2.5 billion a year in procurement costs since moving from Intel to Apple Silicon. For example, the Apple M1 costs between $40 and $50 to manufacture, while an Intel Core i5 processor is priced at $200.

For Apple, developing key components in-house brings three benefits at once: it generates marketing buzz, saves a significant amount in procurement costs, and lets Apple design the components to its own requirements. More importantly, imaging has become the most competitive area in smartphones and the main battleground for manufacturers. In-house camera sensors would help Apple deliver stronger imaging performance in products like the iPhone and Apple Vision Pro.

Of course, apart from the A-series and M-series chips, in-house development of other core components has been quite slow. The in-house modem has made little progress after several years, let alone the camera sensors, which are still in the planning stage. Still, in-house development is clearly Apple’s direction for the future; how soon it arrives and how the first-generation products perform remain to be seen.