The DSP (digital signal processing) resources in FPGAs can be used for AI acceleration. Their core advantages are hardware-level parallelism and low latency, but exploiting them requires algorithm optimization and upgrades to the hardware architecture.

FPGA Technology

The global FPGA market was valued at $6.2 billion in 2021 and is expected to grow at a compound annual growth rate (CAGR) of 7.6%, increasing from $6.7 billion in 2022 to $13.9 billion by 2032.
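
The projection can be sanity-checked with the compound-growth formula, end value = start value × (1 + CAGR)^years, over the ten years from 2022 to 2032. The short Python sketch below does the arithmetic. The helper names are illustrative only.

```python
def project(value: float, rate: float, years: int) -> float:
    """Compound `value` at an annual growth `rate` for `years` years."""
    return value * (1 + rate) ** years


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by growing from `start` to `end`."""
    return (end / start) ** (1 / years) - 1


# 2022 -> 2032 is ten compounding periods; values in billions of US dollars.
print(f"{project(6.7, 0.076, 10):.1f}")  # -> 13.9
print(f"{cagr(6.7, 13.9, 10):.2%}")      # -> 7.57%
```

Growing $6.7 billion at 7.6% for ten years gives about $13.9 billion, and the rate implied by the two endpoints is about 7.57%, so the quoted figures are consistent with each other.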

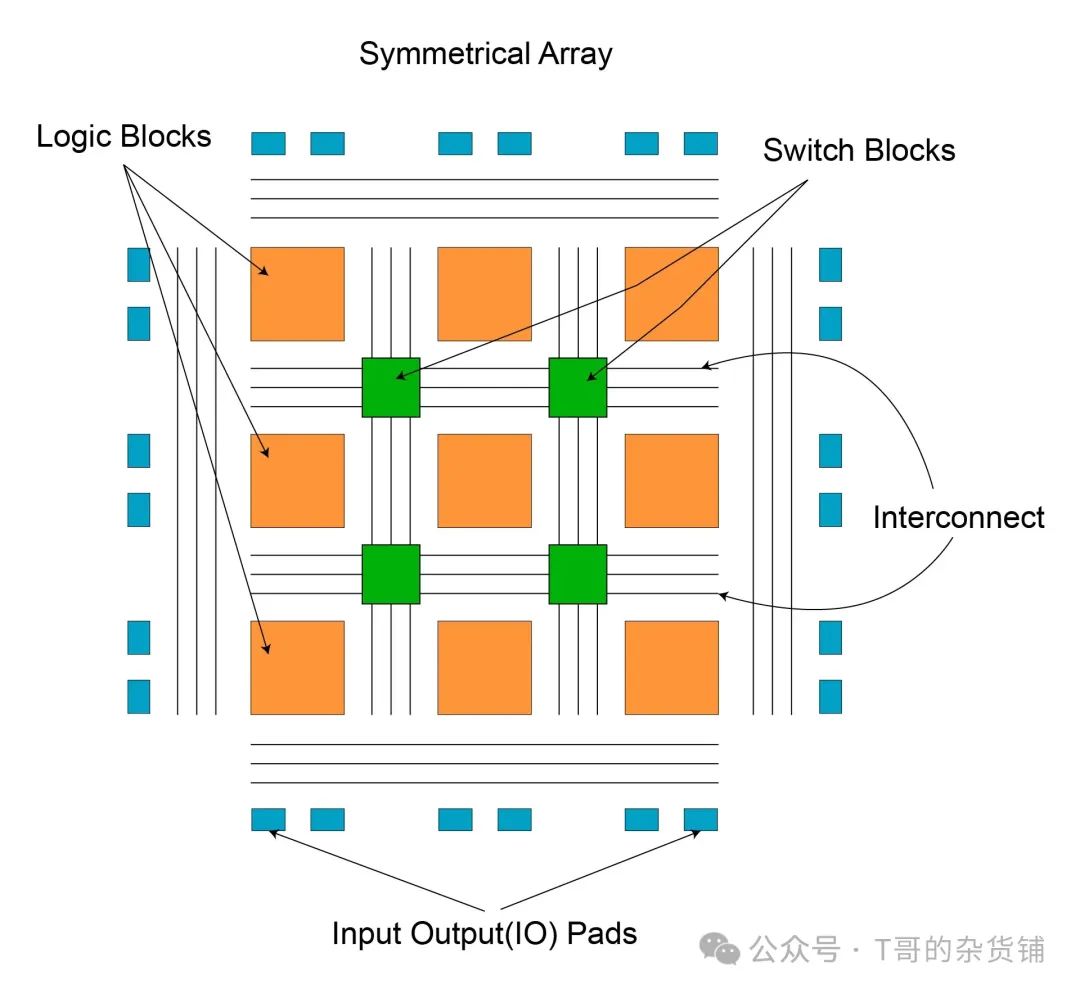

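FPGAs (Field Programmable Gate Arrays) have been around for decades. These devices consist of an array of configurable logic blocks and a programmable interconnect that determines how those blocks are wired together. Modern FPGAs also include hardened DSP blocks, which perform the multiply-accumulate operations at the heart of digital signal processing.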
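
In most SRAM-based FPGAs, the basic logic element is a look-up table (LUT). A k-input LUT stores a 2^k-entry truth table, so it can implement any Boolean function of its k inputs, and "programming" it simply means loading those bits. The Python model below illustrates the idea. The `LUT` class and its methods are hypothetical and are not part of any vendor toolchain.

```python
from typing import Callable, Sequence


class LUT:
    """Software model of a k-input look-up table, the basic FPGA logic element."""

    def __init__(self, k: int) -> None:
        self.k = k
        self.bits = [0] * (2 ** k)  # configuration memory: one output bit per input combination

    def program(self, func: Callable[..., int]) -> None:
        """'Configure' the LUT by storing the truth table of func."""
        for index in range(2 ** self.k):
            inputs = [(index >> i) & 1 for i in range(self.k)]
            self.bits[index] = func(*inputs) & 1

    def evaluate(self, inputs: Sequence[int]) -> int:
        """Use the input bits as an address into the stored truth table."""
        index = sum((bit & 1) << i for i, bit in enumerate(inputs))
        return self.bits[index]


# The same 4-input LUT can be reprogrammed to implement different functions.
lut = LUT(4)
lut.program(lambda a, b, c, d: a ^ b ^ c ^ d)        # 4-input parity
print(lut.evaluate([1, 0, 1, 1]))                    # -> 1
lut.program(lambda a, b, c, d: (a & b) | (c & d))    # AND-OR function
print(lut.evaluate([1, 0, 0, 1]))                    # -> 0
```

No gates are rewired when the function changes; only the stored bits differ, which is what makes the fabric reprogrammable.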

FPGAs are general-purpose programmable chips that can be customized for different applications. Unlike fixed-function chips, FPGAs can be reconfigured many times for different uses. Developers describe the functionality of an FPGA in hardware description languages (HDLs) such as Verilog and VHDL.

When correctly programmed, modern FPGAs can function much like application-specific integrated circuits (ASICs) to meet the needs of specific applications. For some data-processing workloads, FPGAs can also outperform graphics processing units (GPUs).

Features, Functions, and Applications of FPGAs

FPGA devices feature configurable logic and on-chip memory. A general-purpose FPGA can be configured as needed to support a wide range of applications.

Because FPGA design is modular, end users can build a variety of hardware designs tailored to the specific systems the FPGA must work with.

End users or programmers can implement hardware designs using hardware description languages such as Verilog HDL, VHDL, and SystemC.

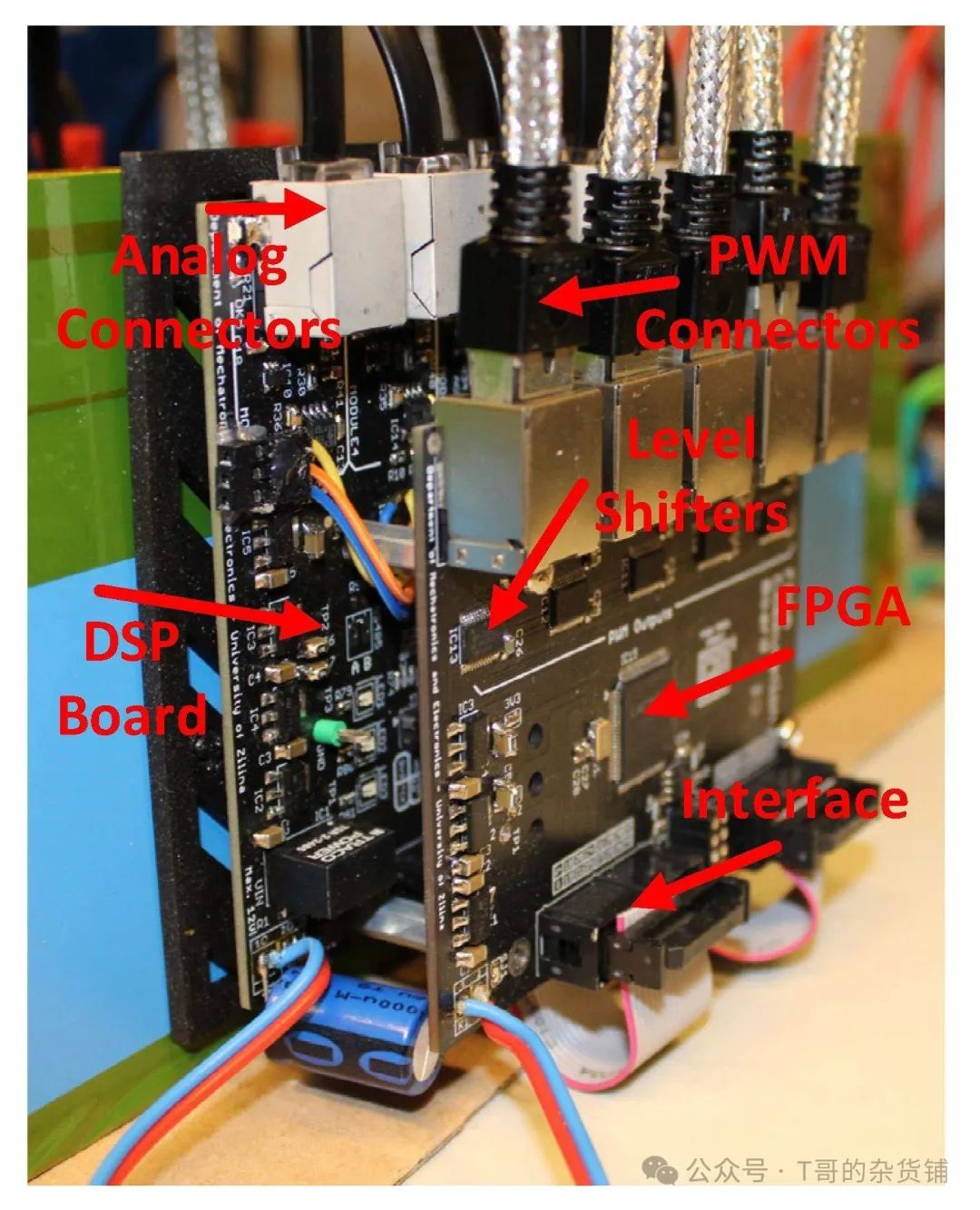

Additionally, FPGA-based SoCs integrate embedded memory, ARM processor cores (for example, Cortex-A or Cortex-M), and DSP blocks, forming standalone systems designed for specific purposes.

For example, this embedded memory can reduce, or even eliminate, the need for external storage devices in FPGA designs that process massive datasets.

Thanks to this flexibility, designers can choose to implement each system function in software on an embedded processor, as a custom hardware component, or in the FPGA's available programmable logic.

The cost of an FPGA varies with its capabilities, and the most complex parts are priced at around $1,000 or more.

Thanks to their cost-effectiveness and additional features (embedded processors, memory, and hardware flexibility and configurability), FPGAs can be more effective signal processing units than traditional DSPs.

Overview of Digital Signal Processing (DSP)

| According to surveys, the global digital signal processor market is expected to reach $18.5 billion by 2027, with a compound annual growth rate of 7.5% from 2022 to 2027. (Future Market Insights) |
|---|

Digital signal processing (DSP) is the analysis and modification of signals to improve their quality or make them more useful.

It applies mathematical and computational techniques to digitized signals, often to produce a signal of higher quality than the original.

Common uses include detecting errors in, filtering, and compressing transmitted signals. The processing is carried out by digital signal processors or by other devices capable of running DSP algorithms.

DSP typically converts analog signals into digital signals before applying signal processing techniques and algorithms. For example, when applied to audio signals, DSP can help minimize noise and distortion.
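
A minimal sketch of that flow might look like this in Python. It assumes an ideal 8-bit analog-to-digital converter and uses a centered moving-average filter, one of the simplest FIR low-pass filters, to suppress noise. The 5 Hz test tone, noise level, sample rate, and function names are all illustrative assumptions.

```python
import math
import random
from typing import Callable


def sample(signal: Callable[[float], float], rate_hz: float, n: int) -> list[float]:
    """Sample a continuous-time signal (modeled as a function of time t) at rate_hz."""
    return [signal(k / rate_hz) for k in range(n)]


def quantize(samples: list[float], bits: int = 8, full_scale: float = 2.0) -> list[int]:
    """Ideal ADC: map [-full_scale, +full_scale] to signed integer codes, clipping outside it."""
    levels = 2 ** (bits - 1) - 1
    return [round(max(-1.0, min(1.0, x / full_scale)) * levels) for x in samples]


def moving_average(x: list[float], taps: int) -> list[float]:
    """Centered moving-average FIR low-pass filter; windows shrink at the edges."""
    half = taps // 2
    out = []
    for i in range(len(x)):
        window = x[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out


def mse(a: list[float], b: list[float]) -> float:
    """Mean squared error between two equal-length sequences."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def clean(t: float) -> float:
    return math.sin(2 * math.pi * 5 * t)        # 5 Hz test tone


def noisy(t: float) -> float:
    return clean(t) + random.uniform(-0.3, 0.3)  # tone plus additive noise


random.seed(0)
rate_hz, n = 1000.0, 1000
codes = quantize(sample(noisy, rate_hz, n))      # analog -> digital (8-bit codes)
digital = [c * 2.0 / 127 for c in codes]         # codes back to signal units
filtered = moving_average(digital, taps=9)       # digital filtering step
reference = sample(clean, rate_hz, n)
print(f"MSE before filtering: {mse(digital, reference):.4f}")
print(f"MSE after filtering:  {mse(filtered, reference):.4f}")
```

A longer averaging window removes more noise but also attenuates more of the signal's higher-frequency content, which is the basic trade-off in low-pass filter design.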

Examples of DSP applications include audio signal processing, digital image processing, speech recognition, biomedical applications, and others.

Using FPGAs for Digital Signal Processing

The ongoing digital transformation across industries is pushing organizations to replace traditional methods with interconnected, digital approaches to data management.

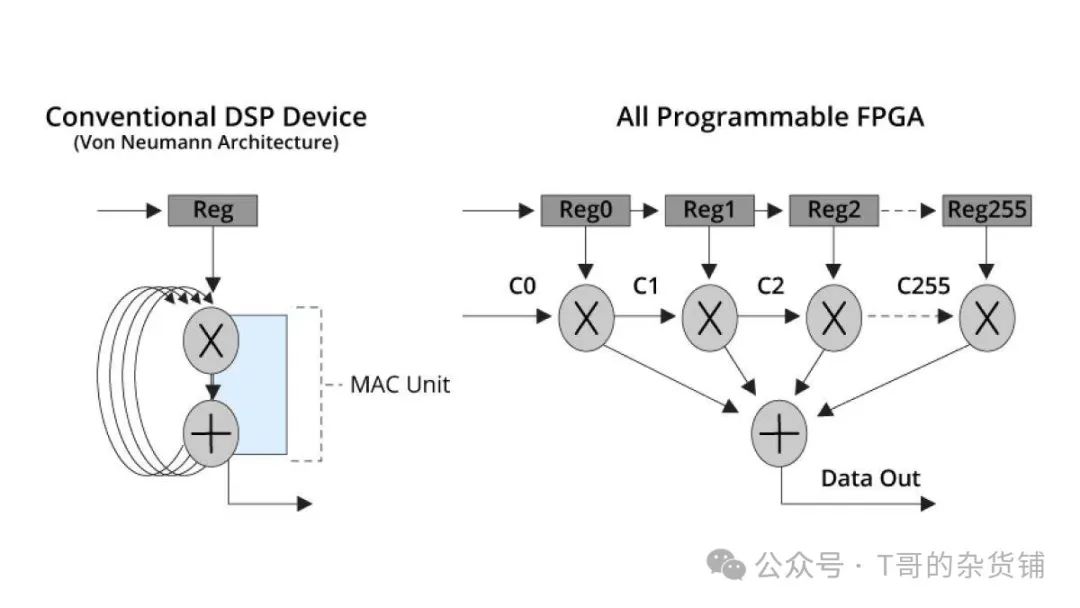

Traditional digital signal processors (DSPs) are still used for signal analysis and data aggregation, but their hardware and technology limitations mean that signal processing must become more flexible to perform well in today's facilities and systems.

As a result, more flexible and capable signal processing solutions are needed, and field-programmable gate arrays (FPGAs) fall into this category.

To see why adaptable signal processing technologies are needed, it helps to look more closely at the limitations of standard DSPs. The time-consuming process of programming DSP software is sometimes seen as a barrier.

However, the main issue with traditional DSPs is their rigid, fixed hardware architecture.

This is where field-programmable gate arrays become relevant to artificial intelligence: in latency, power, and flexibility, they can outperform GPUs or ASICs for digital signal processing.

Latency:

Using field-programmable gate arrays for digital signal processing (FPGA DSP) can reduce latency compared with GPUs or CPUs. Because FPGAs and ASICs implement the processing directly in hardware and run bare-metal, without an operating system or instruction-scheduling overhead, they can process data as it streams in and respond faster than GPUs and CPUs.

Power:

Another advantage of field-programmable gate arrays (FPGAs) for digital signal processing is power efficiency: in power-constrained applications, a bare-metal FPGA implementation typically consumes less power than a GPU (or CPU) doing the same work.

Flexibility: FPGAs vs. ASICs

The production cycle for AI ASICs typically ranges from 12 to 18 months, and changing an ASIC design takes much longer because the chip must be re-fabricated. FPGA design changes, by contrast, require only reprogramming, which can take anywhere from hours to weeks.

Although field-programmable gate arrays (FPGAs) are known to be difficult to program, they offer advantages such as reconfigurability and faster design cycles.

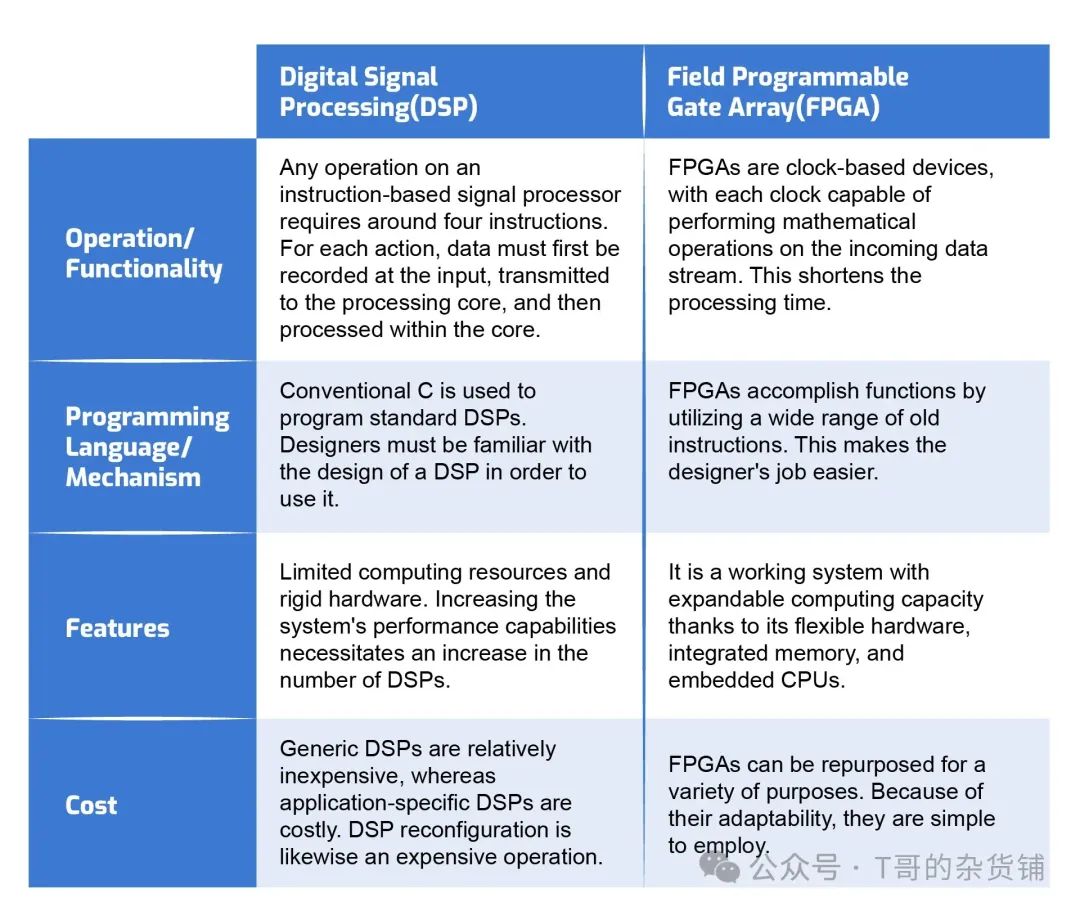

Differences Between DSP and FPGA

AI Accelerator

| The global AI market was valued at $93.5 billion in 2021 and is expected to expand at a compound annual growth rate (CAGR) of 38.1% from 2022 to 2030. |
|---|

An AI accelerator is computer hardware designed specifically to meet the demands of AI. It accelerates workloads such as artificial neural networks (ANNs), machine learning (ML), and machine vision.

AI accelerators are high-performance parallel processing machines optimized for efficiently handling AI workloads such as neural networks.
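
Most of the arithmetic in a neural network reduces to multiply-accumulate (MAC) operations, which is the operation an FPGA's DSP blocks implement in hardware. The plain-Python sketch below writes a fully connected layer with a ReLU activation as explicit MACs and counts them. It illustrates the arithmetic only, and the function name and values are made up for the example.

```python
def dense_layer(x: list[float], weights: list[list[float]],
                bias: list[float]) -> tuple[list[float], int]:
    """Fully connected layer y = ReLU(W x + b) computed as explicit multiply-accumulates.

    Returns the outputs and the number of MAC operations performed.
    """
    outputs = []
    macs = 0
    for row, b in zip(weights, bias):
        acc = b                        # accumulator, initialized with the bias
        for w, xi in zip(row, x):
            acc += w * xi              # one MAC, the operation a DSP block performs
            macs += 1
        outputs.append(max(0.0, acc))  # ReLU activation
    return outputs, macs


x = [1.0, 2.0, 3.0]
W = [[0.5, -1.0, 0.25],
     [1.0, 1.0, 1.0]]
b = [0.0, -1.0]
y, macs = dense_layer(x, W, b)
print(y, macs)  # -> [0.0, 5.0] 6
```

A layer with N inputs and M outputs needs N × M MACs, and the M output sums are independent of one another, so hardware can compute them in parallel. This is where an FPGA's many DSP blocks pay off.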

Traditionally, computer scientists focused on inventing algorithmic approaches for specific problems and implementing them using high-level procedural languages.

FPGA-Based Acceleration Services

FPGA-based systems can analyze data and perform complex tasks faster than virtualized systems.

Not everyone has the expertise to reprogram FPGAs for specific purposes, but cloud services bring FPGA-based data processing within reach of more users.

Some cloud service providers have even launched a new service model called Acceleration as a Service (AaaS), which lets users leverage FPGA accelerators.

When using AaaS, you can leverage FPGAs to accelerate various workloads, including:

- Training machine learning models

- Processing big data

- Analyzing video streams

- Running financial calculations

- Accelerating databases

Some FPGA manufacturers are developing cloud-based FPGAs for AI workload acceleration and other high-performance computing applications.

The Intel Xeon CPU and FPGA acceleration stack offered to Alibaba Cloud customers supports two popular development flows: RTL and OpenCL.

Microsoft is another large company joining the race to develop effective AI platforms.

Its Project Brainwave provides FPGA technology for accelerating deep neural network inference and, like Alibaba Cloud, uses Intel’s Stratix 10 FPGA.

While Intel leads the FPGA industry in AI application acceleration, another well-known FPGA manufacturer, Xilinx, also plans to join the competition.

Xilinx has released the SDAccel integrated development environment to help FPGA developers target various cloud platforms.

Latest Developments in FPGA-Based AI

Historically, implementing digital signal processing on field-programmable gate arrays has involved a steeper learning curve than traditional software programming.

Porting algorithms to FPGAs has been a major bottleneck. Leading FPGA manufacturers are now closing the gap between standard AI algorithms and FPGA or FPGA-SoC implementations with dedicated AI accelerator hardware platforms and software development kits.

Xilinx ML Suite

The Xilinx ML Suite lets programmers deploy and optimize fast machine learning inference. It supports popular machine learning frameworks such as Caffe, MXNet, and TensorFlow, and provides Python and RESTful APIs.

Another Xilinx highlight is the xDNN general-purpose inference processor. Running on Xilinx Alveo data center accelerator cards, this high-speed, energy-efficient xDNN processing engine currently surpasses many popular CPU and GPU platforms in raw performance and energy efficiency for real-time inference workloads.

The xDNN inference processor is a CNN engine that supports a wide range of common CNN architectures.

Through the Xilinx xfDNN software stack, the xDNN engine can be integrated into well-known ML frameworks such as Caffe, MXNet, and TensorFlow.

Vitis™ AI is a complete development platform for AI inference on Xilinx devices, boards, and Alveo™ data center accelerator cards. It includes a comprehensive set of AI models, optimized deep learning processing unit (DPU) cores, tools, libraries, and example designs for edge and data center applications. Its efficiency and ease of use let developers fully exploit the AI acceleration potential of Xilinx FPGAs and adaptive SoCs.

Intel AI Toolkit

Intel AI hardware, including its FPGAs, now supports efficient execution of neural network models trained in various deep learning frameworks.

With Intel’s freely available Open Visual Inference & Neural Network Optimization (OpenVINO™) toolkit, models from TensorFlow™, MXNet, or Caffe can be optimized and converted into an intermediate representation that runs on common Intel hardware targets and accelerators.

Because OpenVINO™ handles the conversion, programmers can run the same DNN model on various Intel target platforms and accelerators (such as CPUs, CPUs with integrated graphics, Movidius VPUs, and FPGAs) and compare them to find the best price-performance match on actual hardware.

AI Acceleration with CPUs, GPUs, and DSPs

AI Acceleration with Central Processing Units (CPUs)

With their general-purpose programmability, Intel® Xeon® Scalable processors have become one of the most widely used AI server platforms, from cloud to edge.

CPUs are heavily used in the data engineering and inference stages, while training more often pairs CPUs with GPUs and other AI accelerators.

Additionally, CPUs can now handle packed low-precision data types, and their SIMD units are gradually becoming wider, driven by video and gaming workloads.
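
Low-precision arithmetic is attractive because many inference workloads tolerate 8-bit integers: values are scaled into int8, multiplied, accumulated in a wider integer, and rescaled at the end. The sketch below shows symmetric per-tensor int8 quantization in plain Python. It is a simplified illustration of the general technique, not the exact scheme used by any particular CPU instruction set or framework.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map [-max|v|, +max|v|] onto [-127, 127]."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid dividing by zero for all-zero input
    scale = max_abs / 127
    return [round(v / scale) for v in values], scale


def int8_dot(a: list[float], b: list[float]) -> float:
    """Dot product using int8 operands and an integer accumulator, rescaled at the end."""
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    acc = 0                    # a 32-bit accumulator in typical hardware
    for x, y in zip(qa, qb):
        acc += x * y           # int8 x int8 products fit easily in 32 bits
    return acc * sa * sb       # convert back to real units


a = [0.9, -0.4, 0.25, 0.7]
b = [0.1, 0.8, -0.6, 0.3]
exact = sum(x * y for x, y in zip(a, b))
approx = int8_dot(a, b)
print(f"float: {exact:.4f}  int8: {approx:.4f}")
```

The int8 result differs from the floating-point one by a small quantization error, while each multiply now works on 8-bit operands, which is why wider SIMD units can pack many of them into a single register.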

Thanks to these performance improvements, CPUs are now also used to execute AI tasks. For sparse DNNs, DNNs with small to medium parallelism, and low-batch applications, CPUs are often the preferred choice.

Accelerating AI with Graphics Processing Units (GPUs)

GPUs are specialized processors built for fast, highly parallel computation, and they excel particularly at computer graphics and image processing.

The NVIDIA Jetson device series shows how GPU technology brings accelerated AI performance to the edge in a compact form factor.

For instance, the NVIDIA JetPack SDK can be used to run neural networks on the NVIDIA Jetson Nano development board.

The board pairs a 128-core GPU with a quad-core ARM CPU, and with optimized Keras and TensorFlow libraries it can run most neural network backends and frameworks while keeping setup simple.

With the launch of Xe GPUs, Intel has now become a significant player in the discrete graphics card market.

Intel’s Xe GPUs emphasize efficiency and target AI workloads and machine learning operations.

As a result, the various Intel Xe-series models aim to deliver cutting-edge performance while consuming less power.

Accelerating AI through Digital Signal Processing (DSP)

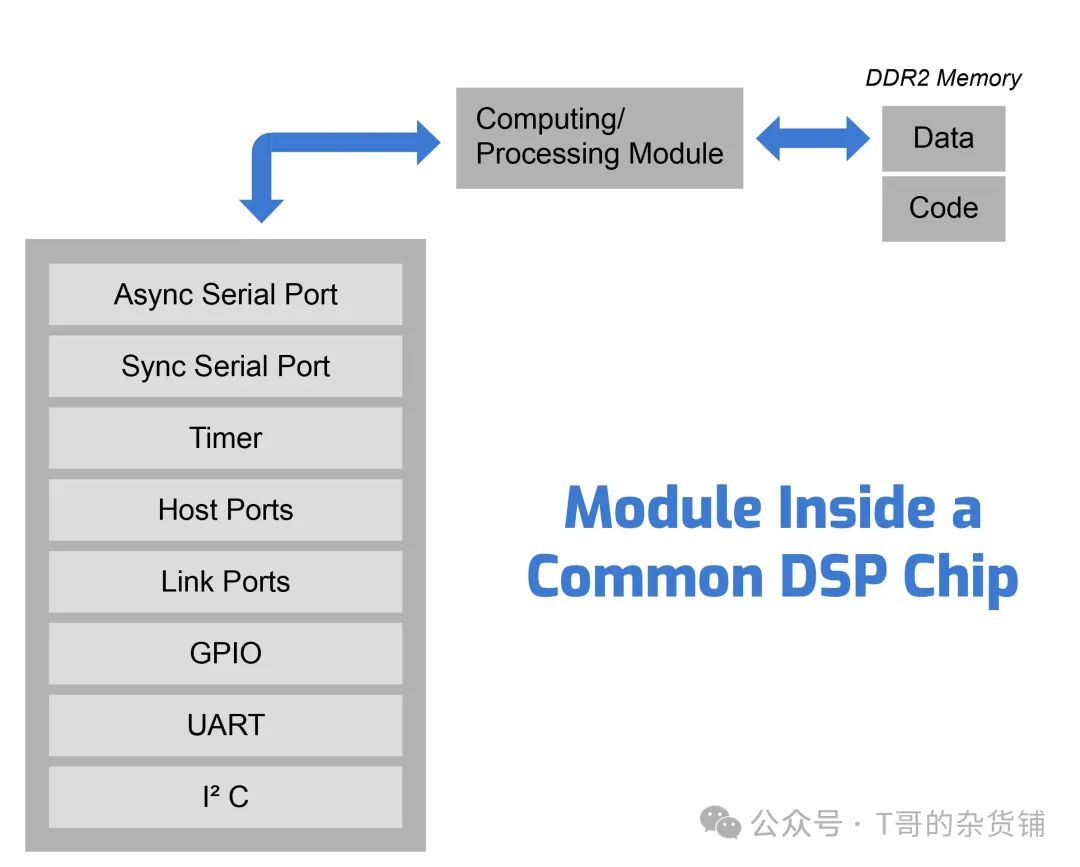

DSPs (Digital Signal Processors) provide the enormous processing power that makes today’s mobile networks and ultra-high-speed internet possible.

At first glance, AI resembles digital signal processing more than it resembles video game graphics. Both DSP and AI receive data, process it through a series of mathematical operations, and then output results.
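
The resemblance is concrete: an FIR filter and the 1-D convolution layer of a CNN compute the same sliding multiply-accumulate. The minimal sketch below uses one function for both. The coefficients are illustrative, and, as in most deep learning frameworks, the "convolution" is implemented as cross-correlation (the kernel is not flipped).

```python
def sliding_mac(x: list[float], kernel: list[float]) -> list[float]:
    """'Valid' sliding multiply-accumulate (cross-correlation) of x with kernel."""
    k = len(kernel)
    return [sum(kernel[j] * x[i + j] for j in range(k)) for i in range(len(x) - k + 1)]


signal = [0.0, 1.0, 4.0, 9.0, 16.0, 25.0]

# DSP view: a 3-tap FIR smoothing filter with fixed, hand-designed coefficients.
smoothed = sliding_mac(signal, [0.25, 0.5, 0.25])

# AI view: a 1-D convolution "neuron" whose weights would normally be learned
# (these values are made up), followed by a ReLU nonlinearity.
learned = [-1.0, 0.0, 1.0]
features = [max(0.0, v) for v in sliding_mac(signal, learned)]

print(smoothed)  # -> [1.5, 4.5, 9.5, 16.5]
print(features)  # -> [4.0, 8.0, 12.0, 16.0]
```

The only real difference is where the coefficients come from: a filter designer chooses them for a DSP, while a CNN learns them from data. The hardware that evaluates them can be the same.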

Although DSP workloads reach extremely high performance levels, they typically run more independently of the CPU than video game graphics do.

As communication standards grow more complex, devices use more and more DSPs, each optimized for a particular part of the standard, and the interfaces between these DSPs are managed mainly by the DSPs themselves rather than by the CPU.

To maximize energy efficiency, these systems are often designed to operate almost entirely independently of the CPU.

DSPs are designed mainly to run as a pipeline: each one keeps its working data in on-chip memory and passes results to the DSP handling the next stage of the communication stack, which maximizes performance while minimizing power consumption.
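
Pipelining is what lets such a chain sustain high throughput: once the pipeline fills, one result emerges every cycle even though each item passes through every stage. The toy cycle-by-cycle simulation below illustrates this. The stage functions are hypothetical stand-ins for real processing steps, and each stage is assumed to take exactly one cycle.

```python
from typing import Callable, Optional


def run_pipeline(stages: list[Callable[[int], int]], inputs: list[int]) -> tuple[list[int], int]:
    """Simulate a pipeline in which every stage takes one clock cycle.

    Returns the outputs and the number of cycles until the last output appears.
    """
    regs: list[Optional[int]] = [None] * len(stages)  # pipeline register after each stage
    pending = list(inputs)
    outputs: list[int] = []
    cycles = 0
    while len(outputs) < len(inputs):
        cycles += 1
        # Update from the last stage backward so each value advances one stage per cycle.
        for s in range(len(stages) - 1, 0, -1):
            regs[s] = stages[s](regs[s - 1]) if regs[s - 1] is not None else None
        regs[0] = stages[0](pending.pop(0)) if pending else None
        if regs[-1] is not None:
            outputs.append(regs[-1])
    return outputs, cycles


stages = [lambda v: v * 2, lambda v: v + 1, lambda v: v * v]  # hypothetical stages
out, cycles = run_pipeline(stages, list(range(8)))
print(out)     # -> [1, 9, 25, 49, 81, 121, 169, 225]
print(cycles)  # -> 10
```

With S stages and N items the pipeline finishes in N + S - 1 cycles (10 here), whereas processing each item through all three stages before starting the next would take N × S = 24 cycles.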

Conclusion

Technologies such as artificial intelligence and machine learning are evolving rapidly and demand ever more acceleration. Looking ahead, with FPGA toolchains from Xilinx and Intel now supporting AI (ML/DL) acceleration, field-programmable gate arrays used for digital signal processing are well placed to become an ideal choice for building AI applications.

Applications benefiting from the rapid deployment capabilities of FPGA-based AI systems include machine vision, autonomous driving, driver assistance, and data centers.

THE END

The content represents the author’s personal views, and unauthorized reproduction is prohibited.

The opinions and data in the article are for reference only, and any commercial use is at your own risk.

If there are violations or infringements, please send a private message for deletion.
