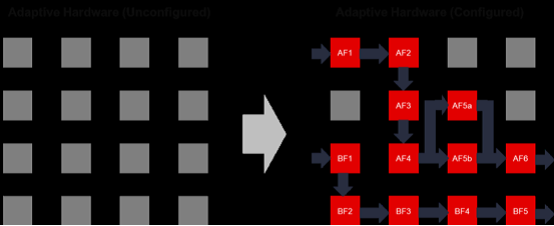

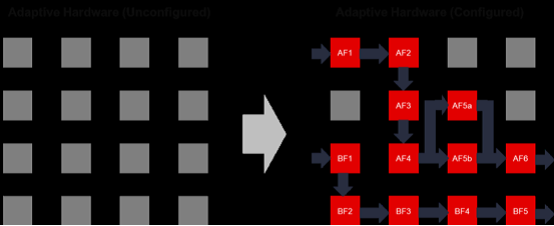

Advantages of Adaptive Platforms

Successful Use Cases of Adaptive Computing

Conclusion