29

Tuesday

June 2021

Verification Room

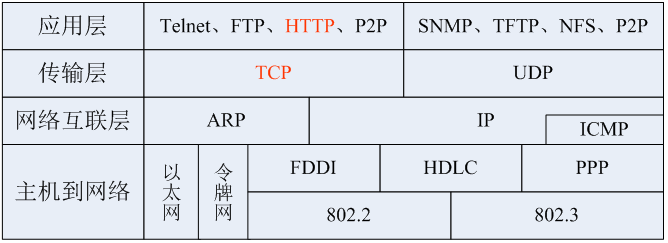

The TCP/IP protocol suite is the foundation of the Internet and the most widely used networking model today. Within it, the Hypertext Transfer Protocol (HTTP) operates at the application layer. HTTP is an application protocol for distributed, collaborative, hypermedia information systems and is the basis of data communication on the World Wide Web. It follows a browser/server (B/S) architecture: server-side implementations include Apache httpd and Nginx, while the main client-side implementations are web browsers. With the growth of the Internet and the advent of Web 2.0, HTTP has come to be widely used in web applications, mobile apps, H5 pages, mini-programs, and other hypermedia systems. The hierarchical structure of the TCP/IP four-layer reference model is shown in Figure 1.

Figure 1: The Hierarchical Structure of the TCP/IP Four-Layer Reference Model

1

What is HTTP/3?

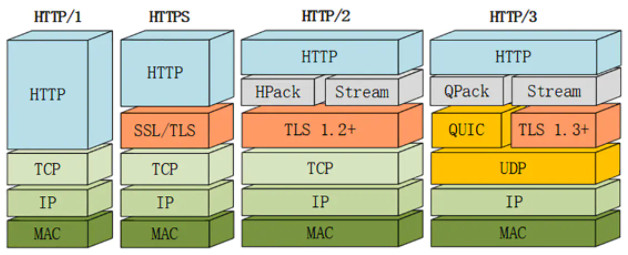

HTTP/3 is the third major version of the HTTP protocol standard. It runs over QUIC, a UDP-based protocol, to make web browsing faster and more secure. Although HTTP/3 is still in draft status, browsers such as Google Chrome, Microsoft Edge, and Firefox have supported it experimentally since April 2020. QUIC is a low-latency Internet transport-layer protocol built on UDP. It was originally developed by Google and first released in 2013, and it was brought to the IETF (the organization responsible for maintaining Internet protocol standards) in 2015 to begin standardization; the work on HTTP/3 is still in progress. The hierarchical structure of the TCP/IP reference model for different versions of HTTP is shown in Figure 2.

Figure 2: The Hierarchical Structure of the TCP/IP Reference Model for Different Versions of HTTP

2

Why Do We Need HTTP/3?

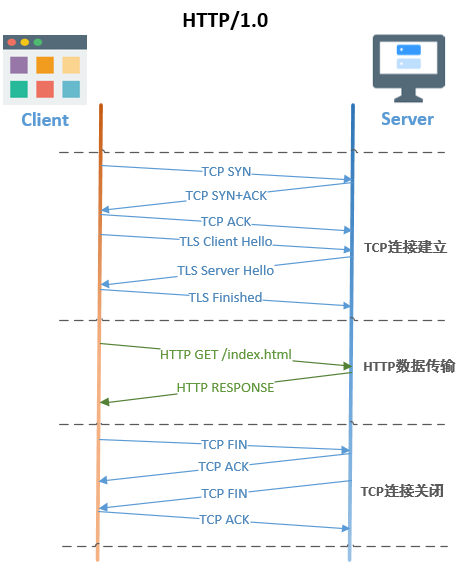

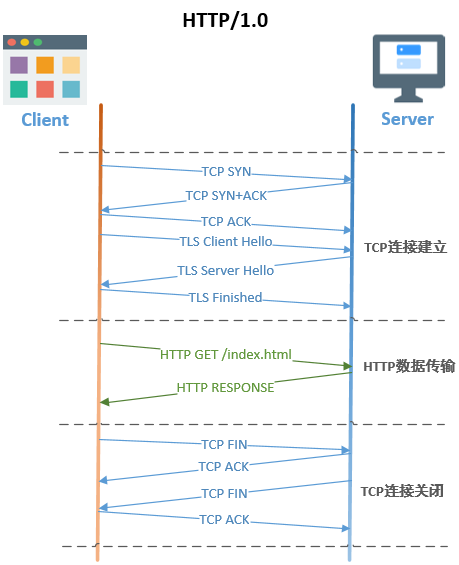

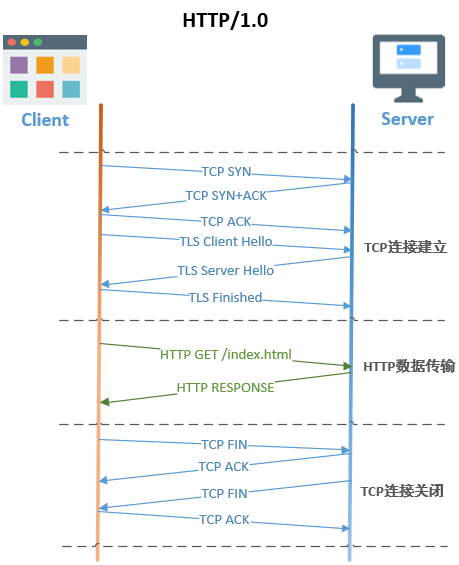

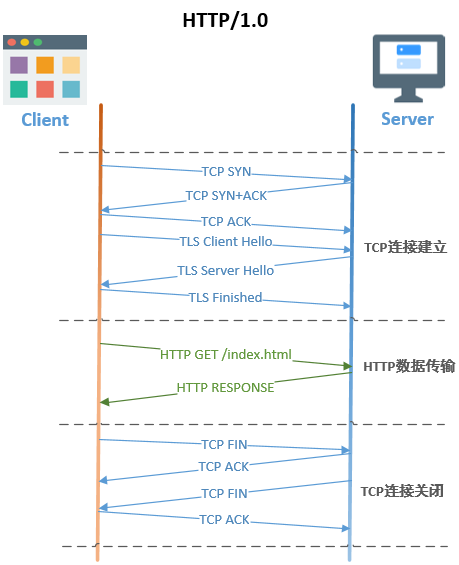

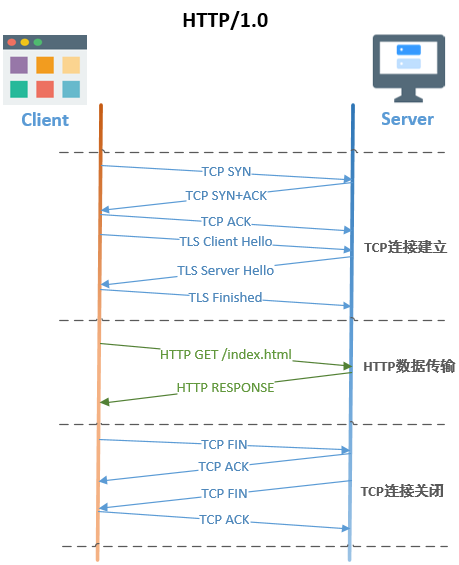

In HTTP/1.0, every request between client and server opens a new TCP connection. Each request must wait for the TCP three-way handshake and then the TLS handshake to complete before it can be sent, which adds latency to every request. In addition, a newly established TCP connection goes through a “slow start” warm-up phase, in which the TCP congestion control algorithm ramps up the sending rate gradually so that the network is not flooded with more packets than it can handle. During slow start, however, the available network bandwidth is underutilized. The request/response steps based on TCP+TLS in HTTP/1.0 are shown in Figure 3.

Figure 3: Request/Response Steps Based on TCP+TLS in HTTP/1.0
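To give a rough feel for the cost of slow start, the sketch below counts how many round trips a fresh connection needs to send a given number of segments. It is a simplified model, not a TCP implementation: the initial window of 10 segments and the doubling on every round trip are assumptions, and loss and the slow-start threshold are ignored.

```python
def slow_start_round_trips(total_segments: int, initial_cwnd: int = 10) -> int:
    """Count round trips needed to send total_segments when the congestion
    window starts at initial_cwnd (an assumed value) and doubles every
    round trip, with no packet loss."""
    if total_segments <= 0:
        return 0
    cwnd = initial_cwnd
    sent = 0
    round_trips = 0
    while sent < total_segments:
        sent += cwnd      # one window's worth of segments per round trip
        cwnd *= 2         # exponential growth during slow start
        round_trips += 1
    return round_trips


for segments in (10, 100, 1000):
    print(segments, "segments ->", slow_start_round_trips(segments), "round trips")
```

Under these assumptions a short response fits in one round trip, while a larger one spends several round trips ramping up before the link is used fully, and HTTP/1.0 pays that cost again on every new connection.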

In HTTP/1.1, persistent connections (keep-alive) are enabled by default, so a client can reuse a TCP connection and send multiple HTTP requests and responses over it, which effectively reduces the overhead of establishing TCP connections. However, requests are still serialized: a single TCP connection can carry only one HTTP request/response exchange at a time. If the current request is blocked, the connection cannot be reused until that request completes, so a single connection is used inefficiently and concurrent requests are processed slowly. As websites request more and more resources, request concurrency grows, and the benefit of connection reuse for page display speed diminishes. The transmission of reused TCP connections in HTTP/1.1 is shown in Figure 4.

Figure 4: Transmission of Reused TCP Connections in HTTP/1.1

HTTP/2 introduces the concept of “HTTP streams”, which allows different request/response exchanges to be multiplexed over the same TCP connection. Multiple HTTP requests can therefore be processed concurrently over a single TCP connection, which improves both request processing speed and the efficiency with which the connection is used. HTTP/2 also compresses header data with the HPACK algorithm, reducing the amount of data sent and shortening transmission time. The request/response steps in HTTP/2 are shown in Figure 5. However, TCP's job is to deliver a complete byte stream from one endpoint to the other in the correct order. When TCP packets carrying some of those bytes are lost on the network path, gaps appear in the byte stream, and TCP fills them by retransmitting the lost packets. Even if the lost data belongs to only one request, TCP cannot hand the bytes that arrived successfully after the gap to the application until the gap is filled. Other requests are delayed unnecessarily, a phenomenon commonly referred to as head-of-line blocking.

Figure 5: Request/Response Steps in HTTP/2
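The head-of-line blocking described above can be illustrated with a toy model of in-order delivery. This is only a sketch: the packet numbers, the stream labels A, B, and C, and the function name are hypothetical, and real TCP tracks byte offsets rather than whole packets.

```python
from typing import Dict, List, Set


def tcp_deliverable(received: Set[int], total: int) -> List[int]:
    """Return the packet numbers an in-order transport can hand to the
    application: only the contiguous prefix starting at packet 0."""
    delivered = []
    for seq in range(total):
        if seq not in received:
            break  # gap found: everything after it stays in the buffer
        delivered.append(seq)
    return delivered


# Six packets carry three interleaved HTTP/2 streams; packet 1 is lost.
packet_stream: Dict[int, str] = {0: "A", 1: "B", 2: "C", 3: "A", 4: "B", 5: "C"}
received = {0, 2, 3, 4, 5}

delivered = tcp_deliverable(received, total=6)
blocked = sorted(received - set(delivered))

print("delivered:", delivered)
print("buffered behind the gap:", [(seq, packet_stream[seq]) for seq in blocked])
```

In this model only packet 0 reaches the application. The packets for streams A and C have arrived, yet they wait behind the missing packet that belongs to stream B.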

HTTP/3 uses QUIC for transport, which extends the multiplexing introduced in HTTP/2. Multiple QUIC streams share one QUIC connection, and each stream is delivered independently, so the loss of a single packet does not force the whole connection to stop and wait or affect data on other streams. This effectively avoids head-of-line blocking. QUIC also merges the equivalent of TCP's three-way handshake with the TLS 1.3 handshake, providing encryption and authentication by default when a connection is established, so connections are set up faster and more securely. Even when the client and server must establish a brand-new QUIC connection, the wait before data can be sent is much shorter than with TLS over TCP. Furthermore, QUIC identifies a connection by a connection ID rather than by the IP address and port used by TCP, which makes connection migration possible. For example, when a mobile device switches from a 4G/5G network to WiFi, both the underlying IP address and port change. Because the QUIC connection ID stays the same, the connection remains intact and data transmission continues. The request/response steps based on QUIC in HTTP/3 are shown in Figure 6. The round-trip time comparison of HTTPS connections based on different transport protocols is shown in Figure 7.

Figure 6: Request/Response Steps Based on QUIC in HTTP/3
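Independent stream delivery can be sketched with a small model in which ordering is tracked per stream instead of per connection. The stream labels, chunk offsets, and function name are hypothetical, and actual QUIC streams use byte offsets and flow control that this sketch leaves out.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Each received packet is modelled as (stream_id, chunk_offset_within_stream).
Packet = Tuple[str, int]


def quic_deliverable(received: List[Packet]) -> Dict[str, List[int]]:
    """For each stream, return the contiguous chunk offsets (starting at 0)
    that can be handed to the application right away."""
    by_stream = defaultdict(set)
    for stream_id, offset in received:
        by_stream[stream_id].add(offset)

    result = {}
    for stream_id, offsets in by_stream.items():
        ready = []
        next_offset = 0
        while next_offset in offsets:  # stop at the first gap in THIS stream only
            ready.append(next_offset)
            next_offset += 1
        result[stream_id] = ready
    return result


# Three streams with two chunks each; the packet carrying ("B", 0) was lost.
received = [("A", 0), ("C", 0), ("A", 1), ("B", 1), ("C", 1)]
print(quic_deliverable(received))
```

Here streams A and C are delivered in full, and only stream B waits for its missing first chunk to be retransmitted.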

Figure 7: Comparison of Round-Trip Times for HTTPS Connections Based on Different Transport Protocols
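As a back-of-the-envelope companion to this comparison, the sketch below estimates the time until the first response arrives. The round-trip counts are commonly cited figures for full handshakes and are assumptions here rather than values read from Figure 7; the 100 ms round-trip time is likewise just an example.

```python
# Round trips spent on handshakes before the first HTTP request can be sent.
# These counts are commonly cited figures, used here as assumptions.
HANDSHAKE_ROUND_TRIPS = {
    "TCP + TLS 1.2": 1 + 2,         # TCP handshake + two TLS round trips
    "TCP + TLS 1.3": 1 + 1,         # TCP handshake + one TLS round trip
    "QUIC (first connection)": 1,   # transport and TLS 1.3 handshakes combined
    "QUIC (0-RTT resumption)": 0,   # request sent with the first flight
}


def time_to_first_response_ms(handshake_rtts: int, rtt_ms: float) -> float:
    """Handshake round trips plus one round trip for the request/response."""
    return (handshake_rtts + 1) * rtt_ms


for name, rtts in HANDSHAKE_ROUND_TRIPS.items():
    print(f"{name}: {time_to_first_response_ms(rtts, rtt_ms=100):.0f} ms")
```

The saving grows with the round-trip time, which is why the difference matters most on high-latency mobile links.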

3

How to Use HTTP/3

On the server side, many cloud vendors have begun to support HTTP/3, including Tencent Cloud’s load balancer, Alibaba Cloud’s CDN services, and Cloudflare. In 2019, Cloudflare open-sourced quiche, its QUIC implementation library, and provided ways to add HTTP/3 support to clients such as Android, iOS, and curl, as well as to the Nginx web server. Note that a server supporting HTTP/3 must send the Alt-Svc response header. An HTTP/3 client first opens a TCP connection and checks whether the server’s response includes Alt-Svc; if it does, the client then attempts to connect over UDP port 443. Alt-Svc (Alternative Service) lists alternative ways to reach the current site and is generally used to advertise support for emerging protocols such as QUIC while maintaining backward compatibility.
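The discovery step can be sketched as a small parser that checks whether an Alt-Svc value advertises HTTP/3. This is a simplified illustration, not a complete parser: the function names and the example header value are hypothetical, parameters such as `ma` are ignored, and commas inside quoted strings are not handled.

```python
from typing import List, Optional, Tuple


def parse_alt_svc(header_value: str) -> List[Tuple[str, str]]:
    """Return (protocol_id, authority) pairs from an Alt-Svc header value.
    Simplified: drops parameters and assumes no commas inside quotes."""
    value = header_value.strip()
    if value.lower() == "clear":
        return []
    services = []
    for entry in value.split(","):
        first = entry.split(";", 1)[0].strip()  # keep only protocol=authority
        if "=" not in first:
            continue
        protocol, authority = first.split("=", 1)
        services.append((protocol.strip(), authority.strip().strip('"')))
    return services


def http3_endpoint(header_value: str) -> Optional[str]:
    """Return the authority advertised for HTTP/3 (final or draft token), if any."""
    for protocol, authority in parse_alt_svc(header_value):
        if protocol == "h3" or protocol.startswith("h3-"):
            return authority
    return None


# Illustrative header value; a real server's value may differ.
example = 'h3-29=":443"; ma=86400, h2=":443"; ma=86400'
print(parse_alt_svc(example))
print(http3_endpoint(example))
```

A client that finds an HTTP/3 entry would then try a QUIC connection to the advertised port over UDP and fall back to the existing TCP connection if that attempt fails.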

On the browser side, Google Chrome, Microsoft Edge, and Firefox have supported HTTP/3 experimentally since April 2020. In Chrome, for example, set the "Experimental QUIC protocol" flag in chrome://flags to Enabled and restart the browser; HTTP/3 can then be used.

4

The Application Value of HTTP/3

With the rapid rise of mobile applications, more than 50% of Internet traffic is now transmitted wirelessly. However, in areas with insufficient wireless coverage, the TCP head-of-line blocking issue severely affects the web browsing experience, while HTTP/3 can significantly improve the online experience in areas where high-speed wireless networks are unavailable.

Preliminary experiments by Google have shown that using QUIC as the underlying transport protocol for some popular services greatly improves network transmission speed and user experience. For instance, with QUIC as the transport, the buffering rate of YouTube video streams fell by 30%, Google search latency fell by more than 2%, and throughput rose by more than 3% for PC clients and more than 7% for mobile devices.

HTTP/3 is a brand-new web protocol built on QUIC, with the next-generation secure transport protocol TLS 1.3 integrated for encryption. It reduces the handshake delay of establishing connections, eliminates TCP head-of-line blocking, and supports connection migration, which makes it a strong foundation for the future of the World Wide Web.

In the financial sector, HTTP/3 also helps improve the overall network experience, particularly on weak networks. In addition, as new business scenarios such as short videos and live streaming emerge, the financial industry's demand for stable high bandwidth and low latency is becoming increasingly prominent. Because HTTP/3 allows congestion control algorithms and parameters to be tuned per connection, it can adapt flexibly to the network requirements of different business scenarios (such as RPC, short videos, and live streaming) within the same app, making the most of the transmission capacity that infrastructure such as 4G/5G provides.
