A Deep Dive into Multi-Head Attention: The Versatile Core of GPT

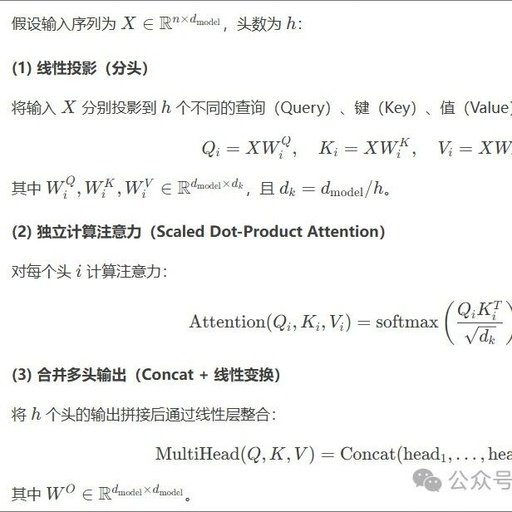

In the realm of deep learning, the attention mechanism is akin to a master of its craft. Originally introduced for machine translation, attention quickly became a powerful tool for handling long-range dependencies in sequences, enabling models to focus on the information that matters most. This is similar to how, in a noisy gathering, your brain automatically filters …