As the generative AI wave triggered by ChatGPT sweeps across the globe, artificial intelligence is undergoing a paradigm revolution from “tool rationality” to “general intelligence”.

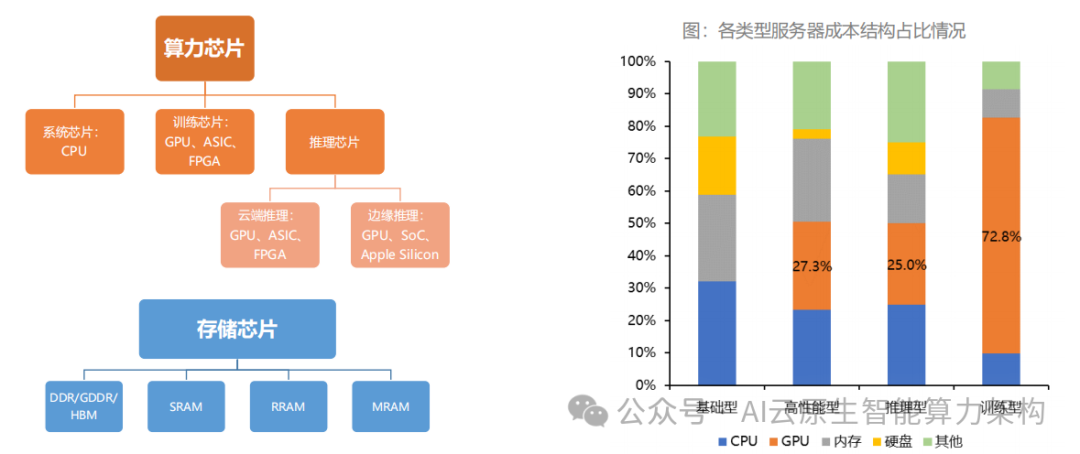

This revolution rests on continuous breakthroughs in computing chips: in architectural innovation, in performance limits, and in ecosystem building.

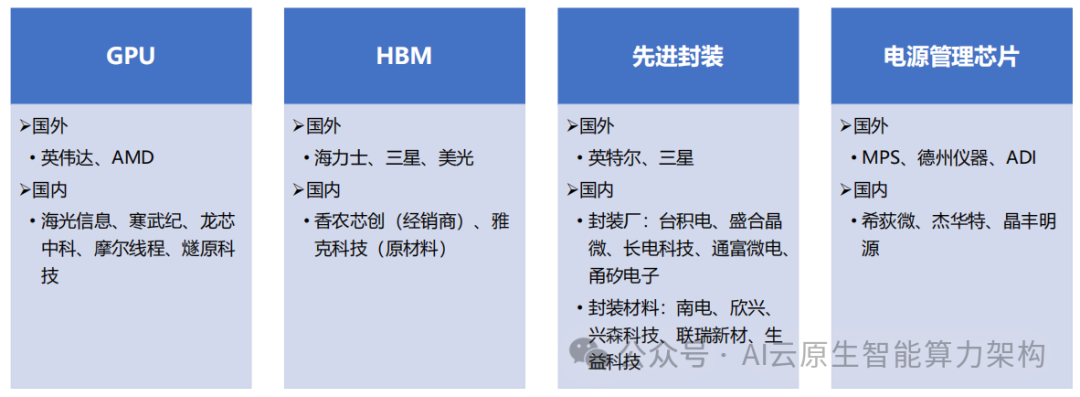

From gains in single-chip GPU performance to the co-innovation of HBM and advanced packaging, and from overseas technology monopolies to breakthroughs in domestic computing ecosystems, the evolution of computing chips is profoundly reshaping the competitive landscape of the global technology industry.

1. Technological Evolution of Computing Power Chips: From General-Purpose Computing to a Heterogeneous Integration Paradigm

(1) Deep Architectural Optimization of GPUs and Ecosystem Barriers

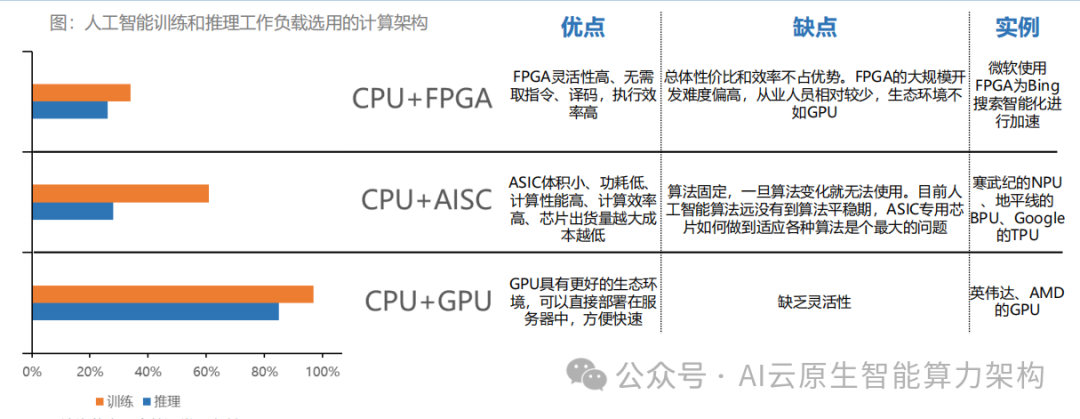

GPUs have become the core carriers of AI computing power because their architectures are deeply optimized for matrix operations. Take NVIDIA’s H100: its Transformer Engine, designed for Transformer models and their self-attention layers, dynamically switches between FP8 and FP16/BF16 precision layer by layer, improving training speed by about 30% while maintaining model accuracy.
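The FP16/BF16 formats mentioned above trade precision for speed and memory. As a rough illustration (a pure-Python sketch, not how the Transformer Engine is implemented), BF16 keeps FP32’s 8-bit exponent but only 7 explicit mantissa bits, so converting a value amounts to rounding away the low 16 bits of its FP32 bit pattern. The helper names are our own, and NaN/overflow handling is deliberately omitted.

```python
import struct

def fp32_bits(x: float) -> int:
    """Return the IEEE 754 single-precision bit pattern of x as an int."""
    return struct.unpack("<I", struct.pack("<f", x))[0]

def bits_to_fp32(b: int) -> float:
    """Interpret a 32-bit integer as an IEEE 754 single-precision float."""
    return struct.unpack("<f", struct.pack("<I", b))[0]

def to_bf16(x: float) -> float:
    """Round x to the nearest BF16 value using round-half-to-even.

    BF16 is the top 16 bits of an FP32 word: 1 sign bit, 8 exponent bits,
    7 mantissa bits. NaN and overflow handling are omitted in this sketch.
    """
    b = fp32_bits(x)
    lower = b & 0xFFFF   # the 16 low bits that BF16 discards
    upper = b >> 16      # the 16 bits that BF16 keeps
    # Round up if the discarded part is above half, or exactly half and upper is odd.
    if lower > 0x8000 or (lower == 0x8000 and (upper & 1)):
        upper += 1
    return bits_to_fp32((upper << 16) & 0xFFFFFFFF)

if __name__ == "__main__":
    for v in [1.0, 3.14159265, 1000.5, 1e-20]:
        r = to_bf16(v)
        print(f"{v!r:>12} -> {r!r:<24} rel. error {abs(r - v) / v:.2e}")
```

With only 8 significant bits, BF16’s relative rounding error is at most 2⁻⁸ (about 0.4%); for example, 1000.5 becomes 1000.0. This is why mixed-precision schemes typically keep sensitive quantities such as accumulations in higher precision.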

This deep hardware-algorithm synergy has created a formidable barrier. After nearly 20 years of accumulation, the CUDA ecosystem has grown into a complete system with more than 500 libraries and tools and 3 million developers, and 95% of operators in mainstream frameworks such as PyTorch and TensorFlow rely on CUDA acceleration, forming a “hardware + software + developer” moat.

However, the performance ceiling of GPUs is becoming apparent. As transistors shrink toward atomic scales, leakage power at process nodes below 12nm brings diminishing returns in computing power, pushing the industry toward heterogeneous architectures. AMD’s MI300A, for example, combines 24 Zen 4 CPU cores with 228 CDNA 3 GPU compute units in a single package, and AMD has cited up to 8 times the AI performance of its predecessor, the MI250X, illustrating the potential of heterogeneous CPU+GPU integration.

(2) HBM Technology: The Key to Breaking the “Memory Wall”

The explosive growth in AI model size (GPT-4 is reported, though not officially confirmed, to have about 1.7 trillion parameters) has made memory bandwidth a new bottleneck. Traditional GDDR6 delivers only about 600 GB/s per card, which cannot keep up with the real-time parameter access that trillion-parameter models require. HBM uses 3D stacking with through-silicon vias (TSVs) to stack 4 to 12 DRAM dies vertically, pushing the aggregate bandwidth available to a single GPU above 3 TB/s with HBM3 (3.35 TB/s on the H100 SXM), more than five times that of GDDR6.
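Peak memory bandwidth is simply interface width times per-pin data rate. The sketch below assumes one HBM3 stack at the JEDEC HBM3 maximum of 6.4 Gb/s per pin over a 1024-bit interface, and a hypothetical 256-bit GDDR6 board at 18 Gb/s; these are illustrative configurations, not the specifications of any particular product.

```python
def peak_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s = interface width (bits) x per-pin rate (Gb/s) / 8."""
    return bus_width_bits * gbps_per_pin / 8

# Assumed, illustrative configurations (not specific products):
HBM3_STACK = peak_bandwidth_gbs(1024, 6.4)   # one HBM3 stack at 6.4 Gb/s per pin
GDDR6_CARD = peak_bandwidth_gbs(256, 18.0)   # a 256-bit GDDR6 board at 18 Gb/s

if __name__ == "__main__":
    stacks = 4
    hbm_total = stacks * HBM3_STACK
    print(f"HBM3, one stack  : {HBM3_STACK:7.1f} GB/s")
    print(f"HBM3, {stacks} stacks : {hbm_total:7.1f} GB/s")
    print(f"GDDR6, 256-bit   : {GDDR6_CARD:7.1f} GB/s")
    print(f"ratio            : {hbm_total / GDDR6_CARD:.1f}x")
```

The contrast is the design point: HBM goes wide and comparatively slow (1,024 data pins per stack), while GDDR6 goes narrow and fast. Routing thousands of such connections is only practical with TSVs and a silicon interposer.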

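This innovation brings not only performance gains but also a shift in computing paradigms. NVIDIA’s H100 carries 80 GB of HBM3 in the same package as the GPU, enough to keep the weights of a model with tens of billions of parameters (about 40 billion at 2 bytes per parameter) right next to the compute units; models with hundreds of billions of parameters still have to be split across several GPUs. Keeping data this close to compute cuts data-movement energy consumption by up to 90%.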
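A quick capacity check shows what 80 GB of HBM can and cannot hold. This sketch counts only the weights (1 GB taken as 10⁹ bytes, 2 bytes per parameter in BF16) and ignores activations, KV cache, optimizer state, and framework overhead, so real deployments need more; the model sizes are arbitrary examples.

```python
import math

BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}

def weight_gb(params: float, dtype: str) -> float:
    """Memory needed just for the model weights, in GB (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[dtype] / 1e9

def min_gpus(params: float, dtype: str, hbm_gb: float = 80.0) -> int:
    """Lower bound on GPUs needed to hold the weights alone
    (ignores KV cache, activations, optimizer state and framework overhead)."""
    return math.ceil(weight_gb(params, dtype) / hbm_gb)

if __name__ == "__main__":
    for n in (7e9, 70e9, 175e9):
        print(f"{n / 1e9:>5.0f}B params: {weight_gb(n, 'bf16'):6.0f} GB in BF16 "
              f"-> at least {min_gpus(n, 'bf16')} x 80 GB GPU(s)")
```

A 175-billion-parameter model already needs 350 GB for BF16 weights, so at least five 80 GB GPUs before any runtime memory is counted.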

Industry data show that global HBM shipments were projected to reach 490 million GB in 2024, up 58% year on year, with SK Hynix, Samsung, and Micron holding 99% of the market.

Supply-chain risks are significant, however: by Q4 2023, SK Hynix’s HBM3 orders were already booked through 2025, and the shortage drove prices up to about $15 per GB, 10 times that of ordinary DRAM.

(3) Advanced Packaging: The “Digital Bridge” Connecting Heterogeneous Computing Power

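As Moore’s Law slows, advanced packaging has become key to extending the “silicon myth.” TSMC’s CoWoS (Chip-on-Wafer-on-Substrate) provides 2.5D integration by placing HBM stacks and the GPU/CPU die side by side on a shared silicon interposer, whose dense wiring raises inter-chip bandwidth roughly 10-fold over traditional packaging. On the H100, the interposer carries the GPU-to-HBM3 link at 3.35 TB/s; the 900 GB/s figure often associated with the H100 is the bandwidth of its NVLink connection between GPUs.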

CoWoS must overcome three major challenges: interposer fabrication (line widths ≤ 5 µm), micro-bump bonding (pitch ≤ 50 µm), and thermal design (single-chip power consumption above 700 W).

The global advanced packaging market is an oligopoly: TSMC’s CoWoS accounts for 60% of capacity and packages 80% of NVIDIA’s high-end GPUs, while the remainder is shared by players such as ASE, Samsung (X-Cube), and Intel (EMIB). The global 2.5D/3D packaging market reached $15 billion in 2023 and is expected to grow to $40 billion by 2028, a CAGR of about 22%.
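The growth figures above can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) − 1. Only the $15 billion and $40 billion endpoints from the text are used.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1

if __name__ == "__main__":
    rate = cagr(15, 40, 2028 - 2023)
    print(f"2.5D/3D packaging market CAGR 2023-2028: {rate:.1%}")
```

This prints about 21.7%, consistent with the cited figure of roughly 22%.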

2. The Path to Breakthrough for Domestic Computing Power Chips: From Technological Following to Ecological Breakthrough

(1) Architecture Innovation: Establishing Differentiated Advantages in Niche Areas

Facing NVIDIA’s first-mover advantage, domestic vendors have adopted a strategy of “scenario focus + architecture innovation.” Cambricon’s MLU370 uses a mixed-precision computing architecture and reaches an energy efficiency of 20 TOPS/W in image-recognition inference, three times that of GPUs; it has entered the supply chains of companies such as SenseTime and Megvii.
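TOPS/W is tera-operations per joule, so it converts directly into energy per inference. The sketch assumes a hypothetical workload of 8 billion operations per image and takes the GPU baseline as one third of 20 TOPS/W, as the comparison above implies; peak efficiency figures are best cases, and real utilization is usually lower.

```python
def joules_per_inference(ops_per_inference: float, tops_per_watt: float) -> float:
    """Energy for one inference. TOPS/W equals tera-operations per joule,
    so energy (J) = operations / (TOPS/W * 1e12)."""
    return ops_per_inference / (tops_per_watt * 1e12)

if __name__ == "__main__":
    ops = 8e9  # hypothetical workload: 8 billion operations per image
    for name, eff in [("accelerator @ 20 TOPS/W", 20.0),
                      ("GPU @ ~6.7 TOPS/W (one third)", 20.0 / 3)]:
        e = joules_per_inference(ops, eff)
        print(f"{name}: {e * 1e3:.2f} mJ/image, {1 / e:,.0f} images per joule")
```

Under these assumptions the accelerator spends 0.4 mJ per image versus 1.2 mJ for the GPU baseline.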

Haiguang Information, whose CPUs are based on the x86 instruction set, builds its Deep Computing No. 2 DCU on a “CUDA-like” ecosystem (compatible with the ROCm platform) that achieves 95% operator compatibility with PyTorch; the chip has captured more than 10% of the market for model fine-tuning in the internet industry.

More aggressive innovation comes from dedicated chips. Huawei’s Ascend 910D uses the Da Vinci architecture, whose 3D Cube compute units are optimized for matrix operations, and delivers 280 TFLOPS of FP16 compute. It supports the training of trillion-parameter models such as Pengcheng and Pangu and has become the only domestic training chip regarded as comparable to the A100.

(2) Ecosystem Construction: Breaking the Dilemma of “Easy to Create Hardware, Hard to Build Ecosystem”

The core of ecosystem construction is breaking “developer lock-in.” NVIDIA’s CUDA offers more than 500 math libraries and over 200 industry solutions, which makes migration extremely costly for developers. Domestic vendors therefore follow a “dual-track” strategy. In the short term, they lower the barrier through CUDA compatibility (e.g., the Moore Threads MTT S3000 supports the CUDA 11.6 API). In the long term, they build independent ecosystems: Huawei’s MindSpore forms a closed loop of “framework – toolchain – hardware,” supports training for more than 50 large models, and has partially replaced TensorFlow in scientific computing.

Governments and enterprises are working together to accelerate adoption. Cities such as Shenzhen and Beijing have set up “Xinchuang” (IT application innovation) computing power centers and require government AI systems to use domestic chips, driving a 40% annual increase in orders for Cambricon and Haiguang Information.

By 2024, the penetration of domestic AI chips in the financial and energy sectors was expected to rise from 18% to 38%, creating a positive cycle of “policy guidance – scenario implementation – ecosystem feedback.”

(3) Industry Chain Collaboration: Overcoming the “Strong Design, Weak Manufacturing” Shortfall

The biggest pain point for domestic computing chips is manufacturing. SMIC’s 14nm process reaches 95% yield, but nodes below 7nm are constrained by the lack of access to EUV lithography machines, leaving a gap of two to three generations behind TSMC.

The breakthrough path lies in “Chiplet + advanced packaging”: Chipone Microelectronics’ chiplet design platform interconnects four GPU dies, reaching equivalent computing power of 70% of an A100.
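Why chiplets help when leading-edge processes are out of reach can be illustrated with the classic Poisson yield model, Y = exp(−A·D₀), where A is die area and D₀ is defect density. The defect density (0.1 per cm²) and die sizes below are hypothetical, and the model ignores packaging yield and die-to-die interconnect overhead, both of which eat into the chiplet advantage in practice.

```python
import math

def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of good dies under the simple Poisson model Y = exp(-A * D0)."""
    area_cm2 = die_area_mm2 / 100.0
    return math.exp(-area_cm2 * defects_per_cm2)

def silicon_per_good_unit(die_area_mm2: float, dies_per_unit: int,
                          defects_per_cm2: float) -> float:
    """Wafer area (mm^2) consumed per good product, assuming each die is tested
    before assembly so that only known-good dies get packaged."""
    y = poisson_yield(die_area_mm2, defects_per_cm2)
    return dies_per_unit * die_area_mm2 / y

if __name__ == "__main__":
    d0 = 0.1  # hypothetical defect density, defects per cm^2
    mono = silicon_per_good_unit(800, 1, d0)
    chiplet = silicon_per_good_unit(200, 4, d0)
    print(f"monolithic 800 mm^2  : yield {poisson_yield(800, d0):.1%}, "
          f"{mono:.0f} mm^2 of wafer per good part")
    print(f"4 x 200 mm^2 chiplets: yield {poisson_yield(200, d0):.1%}, "
          f"{chiplet:.0f} mm^2 of wafer per good part")
```

Under these assumptions a single 800 mm² die yields about 45%, while each 200 mm² chiplet yields about 82%, so the chiplet design consumes roughly half as much wafer area per working product.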

Longji Technology’s 2.5D packaging capacity was expected to exceed 100,000 units per month in 2024, supporting the co-packaging of HBM with domestic GPUs.

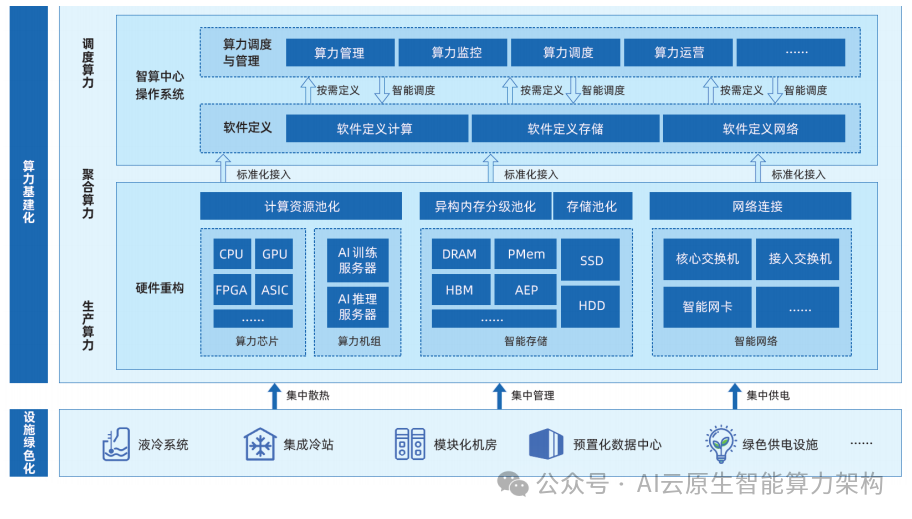

Image: Computing Power Infrastructure of Intelligent Computing Centers

3. Future Competition Focus: From Performance Comparison to System-Level Competition

(1) Computing Power Network: Restructuring Global Computing Power Resource Layout

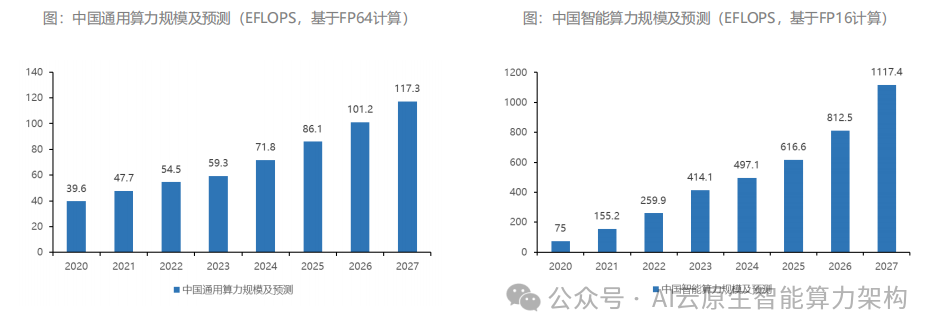

With the advancement of the “East Data West Computing” project, China’s computing power infrastructure is shifting from “dispersed construction” to “centralized scheduling.”

Shenzhen plans to reach 18,000 PFLOPS (FP32) of computing power by 2025 and to raise GPU utilization from 30% to 75% through a computing power scheduling platform.

This shift places new requirements on computing chips: they need to support high-speed cross-node communication (e.g., RDMA) and dynamic load balancing (e.g., through SmartNIC integration). Huawei’s Ascend Atlas 900 has achieved fault recovery within seconds on clusters of tens of thousands of cards.
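Dynamic load balancing can be as simple as always sending the next job to the least-loaded node. The sketch below uses a min-heap with the largest-job-first (LPT) heuristic; it is a generic textbook approach, not the scheduler used by Huawei or any particular platform, and the node names and job sizes are hypothetical.

```python
import heapq
from typing import Dict, List, Tuple

def least_loaded_assign(jobs: List[float], nodes: List[str]) -> Dict[str, float]:
    """Greedily place each job (in GPU-hours) on the node with the least work so far.

    A min-heap keyed on current load makes each placement O(log n).
    Jobs are placed largest-first (the LPT heuristic), which tends to
    even out the final loads.
    """
    heap: List[Tuple[float, str]] = [(0.0, n) for n in nodes]
    heapq.heapify(heap)
    for job in sorted(jobs, reverse=True):
        load, node = heapq.heappop(heap)          # least-loaded node
        heapq.heappush(heap, (load + job, node))  # give it the job
    return {node: load for load, node in heap}

if __name__ == "__main__":
    jobs = [8, 7, 6, 5, 4, 3, 2, 1]  # hypothetical job sizes
    loads = least_loaded_assign(jobs, ["east-1", "west-1", "west-2"])
    busiest = max(loads.values())
    # Utilization = useful work / (nodes x time until the last node finishes)
    util = sum(jobs) / (len(loads) * busiest)
    print(loads, f"utilization {util:.0%}")
```

With these inputs the loads end at 13, 12, and 11 GPU-hours, about 92% utilization. Production schedulers also weigh network locality, data placement, and node failures.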

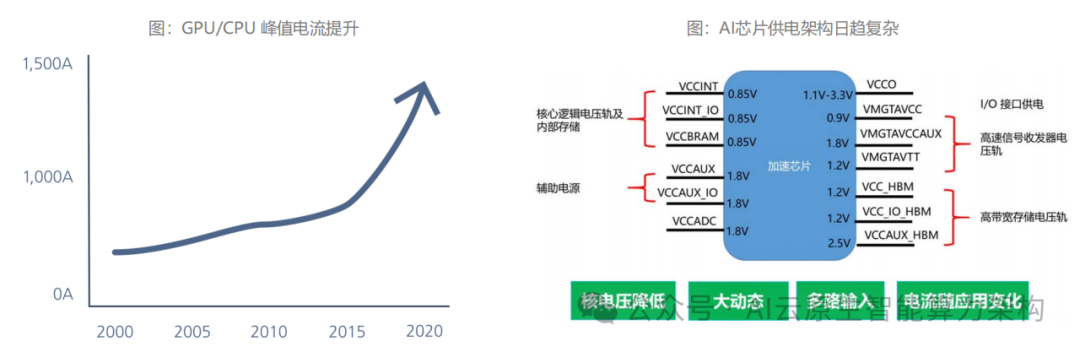

(2) Green Computing Power: Backside Power Delivery Initiates an Energy Efficiency Revolution

High computing power comes with high power consumption: a single AI server can draw more than 5 kW, making data center PUE (Power Usage Effectiveness, total facility power divided by IT equipment power) a key metric.
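Because PUE is the ratio of total facility power to IT equipment power, 1.0 is the ideal and every watt of cooling or distribution overhead pushes it higher. A minimal sketch with a hypothetical 100-server cluster at 5 kW per server and assumed cooling and distribution overheads:

```python
def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float = 0.0) -> float:
    """Power Usage Effectiveness = total facility power / IT equipment power.
    1.0 is the ideal (no overhead); larger is worse."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw

if __name__ == "__main__":
    servers, kw_per_server = 100, 5.0   # hypothetical AI cluster
    it_load = servers * kw_per_server   # 500 kW of IT equipment
    for cooling in (250.0, 100.0):      # two hypothetical cooling designs
        print(f"cooling {cooling:.0f} kW -> PUE {pue(it_load, cooling, 25.0):.2f}")
```

Cutting cooling from 250 kW to 100 kW (with 25 kW of other overhead) moves PUE from 1.55 to 1.25.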

Backside power delivery (BSPDN) moves the power-delivery network to the back of the chip, so power no longer has to be routed through the front-side metal interconnect stack of more than 20 layers. This lowers IR drop (the voltage lost across the network’s resistance, V = I·R) from 50 mV to 20 mV, increasing compute density by 44% while reducing energy consumption by 15%.

Intel built its PowerVia backside power technology into its 20A and 18A processes, TSMC plans to introduce backside power delivery at the A16 node that follows its 2nm process, and domestically, SMIC is collaborating with Xidi Micro on a 48 V high-voltage power supply architecture expected to be commercialized in 2024.
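The case for higher-voltage distribution follows from Ohm’s law: for a fixed power P, current is I = P / V and resistive loss is I²R, so quadrupling the bus voltage from 12 V to 48 V cuts conduction loss in the same conductors by a factor of 16. The 5 kW load and 2 mΩ path resistance are hypothetical, and the sketch ignores conversion losses; it says nothing about the specific SMIC/Xidi Micro design.

```python
def conduction_loss_w(power_w: float, bus_voltage_v: float, resistance_ohm: float) -> float:
    """Resistive (I^2 * R) loss in a distribution path carrying power_w at bus_voltage_v."""
    current_a = power_w / bus_voltage_v
    return current_a ** 2 * resistance_ohm

if __name__ == "__main__":
    load_w, path_r = 5000.0, 0.002  # hypothetical 5 kW server, 2 milliohm path
    for v in (12.0, 48.0):
        print(f"{v:>4.0f} V bus: {load_w / v:6.1f} A, "
              f"{conduction_loss_w(load_w, v, path_r):6.1f} W lost in wiring")
```

Under these assumptions the 12 V bus carries about 417 A and loses about 347 W, while the 48 V bus carries about 104 A and loses about 22 W.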

(3) Computing Power Security: Building a Self-Controlled Industrial Defense Line

U.S. export controls on the A100 and H100 continue to tighten; in October 2023 the restrictions were extended to the A800 and H800, forcing China to build a domestic “computing power backup” system.

From a technical perspective, three major security boundaries need to be overcome: chip design (the localization rate of EDA tools needs to increase to 30%), manufacturing processes (the localization rate of equipment for processes below 12nm needs to reach 20%), and ecological security (the market share of domestic AI frameworks needs to exceed 25%).

In 2023, the National Fund Phase II invested a total of 5 billion yuan in Cambricon and Haiguang Information, accelerating the construction of a self-sufficient computing power supply chain.

Redefining the Intelligent Computing Era Amid Restructuring

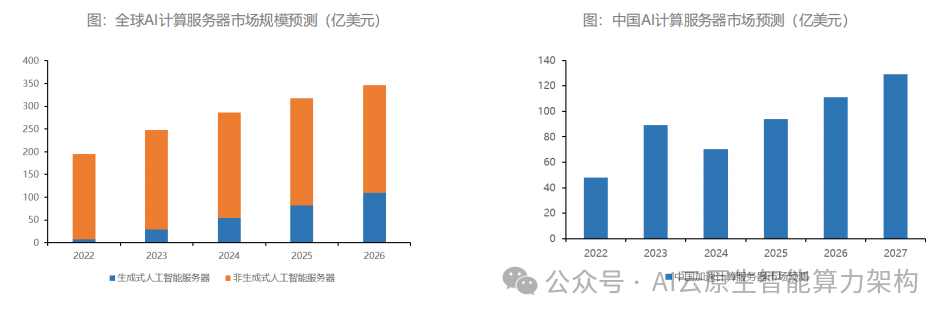

Competition in the AI intelligent computing era is essentially a systemic contest of “technical architecture + industrial ecosystem + resource scheduling.” As computing chips evolve from standalone hardware into “computing power network nodes,” their significance goes beyond technology itself: they are both a strategic bargaining chip in great-power competition (about 70% of global AI computing power is concentrated in the U.S. and China) and the core engine of industrial transformation (the global computing chip market is expected to reach $300 billion by 2030).

For China, the breakthrough path lies in “dual-chain integration.” On the technology chain, this means leapfrogging (“overtaking on the curve”) through next-generation technologies such as HBM, chiplets, and backside power delivery.

On the ecosystem chain, it means building a self-reliant innovation system through government guidance, scenario implementation, and developer cultivation.

As computing chips become the “universal language” of the digital economy, only those who master their core technologies can hold their course in the intelligent computing era and lead the paradigm shift of the next technological revolution.
