The application range of AI IP in chips is gradually expanding.

Looking back over the past decade, AI has brought many conveniences to our daily lives, from safe and reliable automated traffic systems to personalized home services and immersive entertainment experiences. All of these tangible scenarios depend on underlying chip technology.

Today, more and more smart applications rely on deep neural networks. The heavy demands of deep learning and machine learning call for compute-intensive methods backed by powerful chip designs. The underlying chips must run sophisticated algorithms fast enough to support real-time applications such as object recognition, speech processing, super-resolution imaging, and facial recognition.

Recent innovations in neural network processing and deep learning algorithms have created new hardware demands for AI SoCs in processing, storage, and connectivity, sparking a new round of investment in the semiconductor market. As companies undergo digital transformation and position themselves for the metaverse, demand for proven, dedicated AI IP will continue to grow, because such IP reduces integration risk and accelerates time to market.

The three elements of AI applications: perception, decision-making, and response

In the mid-1950s, AI was first established as an academic discipline. By 2015, advances in processor technology and AI algorithms had brought AI to a level in certain areas that could rival or even surpass humans. This triggered explosive growth in AI investment and moved AI from mainframes into embedded applications.

Generally speaking, AI is divided into “weak AI” (also called narrow AI, the ability to solve specific tasks) and “strong AI” (also called general AI, the ability of a machine to find solutions to unfamiliar tasks). Existing AI systems are primarily weak AI, while strong AI systems are expected to be deployed in the coming years.

Perception, decision-making, and response are the three basic components of most AI applications. For example, a smart speaker perceives your voice, processes the request across cloud and local processors, and responds with real-time audio. More broadly, AI can be defined as the ability to perceive the environment and take actions that maximize the chance of achieving a goal.
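To make the three components concrete, here is a minimal sketch of a perceive, decide, respond loop for a smart-speaker-style request. It is illustrative only. The `Percept` type, the `UTILITIES` table, and the action names are hypothetical. Speech recognition and audio synthesis are replaced by plain text handling. "Maximizing goal achievement" is modeled as picking the action with the highest utility score.

```python
from dataclasses import dataclass
from typing import Callable, Dict

@dataclass
class Percept:
    """Structured result of the perception stage (hypothetical)."""
    transcript: str

def perceive(raw_text: str) -> Percept:
    # Perception: a real speaker would run speech recognition on audio;
    # this stand-in only normalizes already-transcribed text.
    return Percept(transcript=raw_text.strip().lower())

# Hypothetical utility functions: each scores how well an action serves the goal.
UTILITIES: Dict[str, Callable[[Percept], float]] = {
    "play_music": lambda p: 1.0 if "music" in p.transcript else 0.0,
    "report_weather": lambda p: 1.0 if "weather" in p.transcript else 0.0,
    "ask_to_repeat": lambda p: 0.1,  # low-scoring fallback action
}

def decide(percept: Percept, utilities: Dict[str, Callable[[Percept], float]]) -> str:
    # Decision-making: choose the action with the highest utility score.
    return max(utilities, key=lambda action: utilities[action](percept))

def respond(action: str) -> str:
    # Response: a real device would synthesize audio; here we return text.
    return f"executing: {action}"

def handle(raw_text: str) -> str:
    # One pass through the perceive -> decide -> respond loop.
    return respond(decide(perceive(raw_text), UTILITIES))

print(handle("  What's the WEATHER today? "))  # executing: report_weather
```

In a real product, `perceive` and parts of `decide` could run either on the local processor or in the cloud. The loop structure stays the same either way.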

In recent years, AI has continued to develop rapidly, with machine learning algorithms reaching ever-higher recognition accuracy and in some cases surpassing humans. NASA, for example, used neural network technology to analyze data from the Kepler space telescope in search of new planets, which greatly sped up its engineering team’s data analysis. With this technique, NASA discovered an eighth planet orbiting the star Kepler-90.

Although the AI market is still in its infancy, many non-traditional semiconductor companies have begun investing heavily to stake a claim in it. System companies such as Google, Facebook, Amazon, and Alibaba are developing their own application-specific integrated circuits (ASICs) to support their AI software needs and business models, a development that was unimaginable ten years ago.

Segmented markets for AI application growth

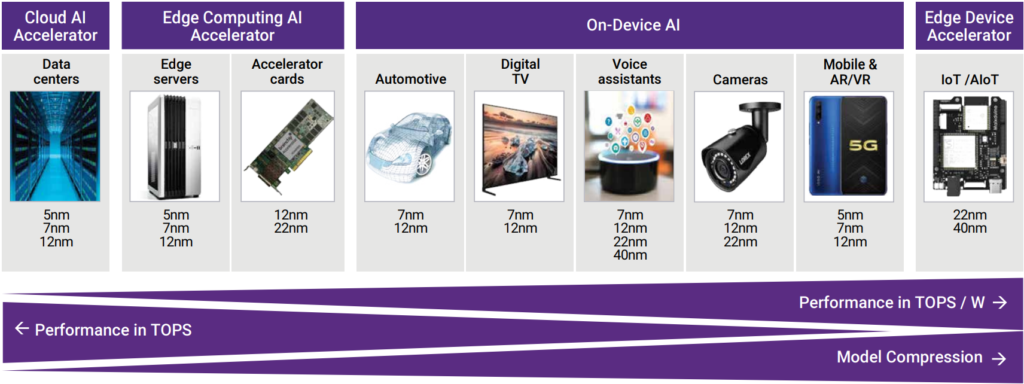

AI is gradually shifting from academia to embedded applications, a transition made possible by advances in process technology, microprocessors, and AI algorithms. In every market, including automotive, mobile devices, digital homes, data centers, and the Internet of Things (IoT), chip functionality is becoming increasingly diverse, with features such as facial recognition, improved human-computer interaction, and natural language understanding.

As shown in the image above, cloud AI accelerators (on the far left) have the highest performance. Developers of cloud AI accelerator chips maximize performance so that complex AI algorithms and training methods can tackle large-scale problems. They also lower overall cost by reducing training time and energy consumption. Hardware innovation has saved developers years of development time and helped them reach key breakthroughs faster, such as new vaccines and drugs.

However, not all problems can be solved in the cloud, and many AI accelerator architectures are being modified to support edge computing and on-device AI. We expect these areas to be the largest growth points for hardware. On-device AI is already widespread, with applications in advanced driver-assistance systems (ADAS), digital TVs, speech and language recognition, AR/VR headsets, and more. In this segment, mobile devices will continue to drive innovation, especially at the latest process nodes.

Last year, the AI processing capability of mobile processors more than doubled, from 10 trillion operations per second (TOPS) to over 20 TOPS. There is still considerable room to improve both performance and performance per watt.
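TOPS figures are usually peak numbers derived from the accelerator's hardware rather than measured throughput. The sketch below assumes the common convention that a multiply-accumulate (MAC) counts as two operations. It shows how a peak TOPS number, a TOPS-per-watt figure, and a growth factor can be computed. The MAC count, clock rate, and power draw are hypothetical values chosen for illustration, not specifications of any real mobile processor.

```python
def peak_tops(num_mac_units: int, clock_hz: float) -> float:
    """Peak throughput in TOPS, counting each multiply-accumulate (MAC) as two operations."""
    return 2 * num_mac_units * clock_hz / 1e12

def tops_per_watt(tops: float, watts: float) -> float:
    """Efficiency metric: peak TOPS divided by power draw."""
    return tops / watts

def growth_factor(old_tops: float, new_tops: float) -> float:
    """How many times larger the new figure is than the old one."""
    return new_tops / old_tops

# Hypothetical NPU: 10,240 MAC units at 1 GHz, drawing 4 W.
tops = peak_tops(10_240, 1.0e9)
print(tops)                      # 20.48
print(tops_per_watt(tops, 4.0))  # 5.12
print(growth_factor(10, 20))     # 2.0
```

The last line shows that going from 10 to 20 TOPS is a factor of two. Measured throughput on real networks is typically lower than these peak numbers.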

The core DNA of an AI SoC

Each AI market segment has different goals and challenges. Over time, adding AI capabilities to chips has exposed fundamental architectural weaknesses in these chips. Modifying chip architectures to incorporate deep learning capabilities will affect not only highly customized solutions but also general-purpose AI chip designs.

This means that the selection and integration of IP determine the foundational efficiency of AI chips, constituting the core “DNA” of AI chips. For instance, introducing custom processors or processor arrays can accelerate the large-scale matrix multiplication required in AI applications.
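A processor array speeds up large matrix multiplication by splitting it into independent pieces of work. The sketch below shows the idea in plain Python. It uses a tiled matrix multiply in which each output tile depends on no other output tile, so each one could in principle be assigned to its own processing element (PE). The PE numbering in `owner` is a hypothetical mapping for illustration. Real accelerators (for example, systolic arrays) schedule data movement very differently.

```python
from typing import Dict, List, Tuple

Matrix = List[List[float]]

def matmul_naive(a: Matrix, b: Matrix) -> Matrix:
    """Reference result: C[i][j] = sum over p of A[i][p] * B[p][j]."""
    n, k, m = len(a), len(b), len(b[0])
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]

def matmul_tiled(a: Matrix, b: Matrix, tile: int) -> Tuple[Matrix, Dict[Tuple[int, int], int]]:
    """Compute C = A x B tile by tile and record which (hypothetical) PE owns each output tile."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    col_tiles = (m + tile - 1) // tile
    owner: Dict[Tuple[int, int], int] = {}
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            # Output tiles do not depend on each other, so each can map to its own PE.
            owner[(i0, j0)] = (i0 // tile) * col_tiles + (j0 // tile)
            for p0 in range(0, k, tile):
                # Accumulate one block of partial products along the shared dimension.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        for p in range(p0, min(p0 + tile, k)):
                            c[i][j] += a[i][p] * b[p][j]
    return c, owner

a = [[float(4 * i + j) for j in range(4)] for i in range(4)]
b = [[1.0 if i == j else 0.5 for j in range(4)] for i in range(4)]
c, owner = matmul_tiled(a, b, tile=2)
print(c == matmul_naive(a, b))  # True
print(sorted(owner.values()))   # [0, 1, 2, 3]
```

Here the loops run sequentially. The point is the decomposition: the four output tiles could be computed concurrently by four PEs without changing the result.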

- Specialized processing: Specialized processing is needed to handle the constantly changing computational intensity of machine learning and deep learning tasks. SoCs with neural network capabilities must support heterogeneous, massively parallel matrix multiplication.

- Memory performance: Memory performance has become a key design consideration for supporting new, complex AI models. AI models occupy a large amount of memory, so efficient memory access is required for the data deep learning depends on, such as model parameters (weights) and intermediate results (activations).

- Data connectivity: Real-time data connectivity is a critical component, for example the link between CMOS image sensors and deep learning accelerators. Once AI models are trained and compressed, they can be deployed on systems built with many different interface IP solutions. This requires reliable, proven real-time interfaces for chip-to-chip, chip-to-cloud, sensor-data, and accelerator-to-host connections.

- Security: Security is an integral part of AI and is crucial for protecting the AI systems, data, and communications tied to user safety, privacy, and commercial investment. AI introduces new security vulnerabilities, drawing increased attention to how data can be protected from hackers.
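The memory and connectivity requirements above can be estimated with simple arithmetic. The sketch below makes two rough estimates. The first is a model's memory footprint: weights plus the activations that are live at peak, at a given numeric precision. The second is the raw data rate an image sensor pushes toward an accelerator. All model sizes and sensor parameters are hypothetical. The footprint formula is a simplification that ignores padding, buffering, compression, and runtime overhead.

```python
def model_memory_bytes(num_weights: int, peak_activations: int, bytes_per_value: int) -> int:
    """Rough footprint: weights plus the largest set of live activations, at one precision.
    Ignores padding, buffering, compression, and framework overhead."""
    return (num_weights + peak_activations) * bytes_per_value

def sensor_data_rate_bps(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Raw (uncompressed) data rate from an image sensor, in bits per second."""
    return width * height * bits_per_pixel * fps

# Hypothetical model: 25 million weights, 2 million activations live at peak.
print(model_memory_bytes(25_000_000, 2_000_000, 4))  # 108000000 (FP32)
print(model_memory_bytes(25_000_000, 2_000_000, 1))  # 27000000 (INT8)

# Hypothetical 1080p sensor with 12-bit raw pixels at 30 frames per second.
print(sensor_data_rate_bps(1920, 1080, 12, 30))  # 746496000 (~746.5 Mbit/s)
```

The comparison shows why compressing a model (here, moving from 32-bit to 8-bit values) cuts its memory footprint by four times. It also shows why a single camera stream already needs an interface with several hundred megabits per second of sustained bandwidth.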

AI IP Fuels the Future of AI

Conclusion