Making software run across different embedded hardware platforms and operating systems is one of the core challenges in embedded system development today, especially in fields such as the Internet of Things (IoT), robotics, and industrial automation. Compatibility issues between hardware architectures (such as ARM, x86, and RISC-V) and operating systems (such as Linux and RTOSes like Zephyr) urgently need to be addressed. Below is a summary of key technologies and solutions:

1. Hardware Abstraction Layer (HAL) and Standardized Interfaces

- Layered Architecture Design: The system is split into layers (hardware driver layer, hardware abstraction layer, device driver layer). The HAL hides differences in the underlying hardware, so upper-layer application code can run on different hardware without modification.

- Universal Interface Layer: A unified API across heterogeneous multi-core processors (such as Intel Xeon and ARM Cortex) simplifies cross-platform development.

- Real-time Performance Adjustment: The system monitors hardware operating state (such as CPU and memory usage) and dynamically adjusts the operating system configuration to maintain stability and efficiency.
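To make the layered HAL idea concrete, here is a minimal Python sketch (on real devices this layer is usually written in C). Everything in it is hypothetical: `GpioHal`, the simulated backends, `toggle`, and pin 17 are illustrative names, not part of any vendor SDK. The point is that a hardware difference, here active-high versus active-low pin wiring, is absorbed inside the backend, so the application function stays the same on every board.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class GpioHal(ABC):
    """Hypothetical HAL interface: the only GPIO API application code may use."""

    @abstractmethod
    def write(self, pin: int, value: bool) -> None:
        """Set a pin's logical state (True = on)."""

    @abstractmethod
    def read(self, pin: int) -> bool:
        """Return a pin's logical state."""


class SimulatedGpio(GpioHal):
    """Simulated backend that stores physical pin levels in a dict."""

    INVERTED = False  # True for boards whose pins are wired active-low

    def __init__(self) -> None:
        self.levels: dict[int, bool] = {}  # simulated physical level per pin

    def write(self, pin: int, value: bool) -> None:
        # XOR with INVERTED translates the logical state to the board's physical level.
        self.levels[pin] = value != self.INVERTED

    def read(self, pin: int) -> bool:
        # A never-written pin sits at the board's idle level (logical off).
        physical = self.levels.get(pin, self.INVERTED)
        return physical != self.INVERTED


class ActiveHighGpio(SimulatedGpio):
    INVERTED = False


class ActiveLowGpio(SimulatedGpio):
    INVERTED = True


def toggle(gpio: GpioHal, pin: int, times: int) -> list[bool]:
    """Application logic written only against GpioHal, never a concrete backend."""
    states = []
    for _ in range(times):
        gpio.write(pin, not gpio.read(pin))
        states.append(gpio.read(pin))
    return states


if __name__ == "__main__":
    for board in (ActiveHighGpio(), ActiveLowGpio()):
        print(type(board).__name__, toggle(board, pin=17, times=3), board.levels)
```

Both backends return the same logical sequence `[True, False, True]` from `toggle`, while the simulated physical level of pin 17 ends up `True` on the active-high board and `False` on the active-low one. Supporting a new board means adding a backend, not editing application code.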

2. Containerization and Lightweight Virtualization

- Cross-architecture Container Migration: ARM virtual machines run on x86 hosts to compile algorithms, which are then migrated to the embedded hardware, with ROS providing cross-platform communication (for example, when deploying SLAM algorithms).

3. Model Conversion and AI Inference Optimization

- Cross-platform AI Deployment: Models are converted through the ONNX intermediate format (e.g., PyTorch → ONNX → Ascend .om format) to target different AI accelerators.

- Edge Computing Optimization: Improving the inference efficiency of CNNs on embedded devices.
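The section does not name specific optimizations. One widely used technique is INT8 quantization, which is also why AI throughput for both chips in the comparison below is quoted in INT8 TOPS. The following minimal Python sketch shows symmetric per-tensor quantization (scale = max|x| / 127); the function names are illustrative, and production toolchains typically add calibration, per-channel scales, and integer kernels on top of this idea.

```python
from __future__ import annotations


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT8 quantization.

    scale maps the largest magnitude to 127; q = clamp(round(x / scale), -127, 127).
    Python's round() rounds halves to even, which is acceptable for this sketch.
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero tensors
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats; each error is at most scale / 2 (up to float error)."""
    return [x * scale for x in q]


if __name__ == "__main__":
    weights = [0.3, -0.7, 1.9, -2.2]
    q, scale = quantize_int8(weights)
    print(q, scale)
    print(dequantize_int8(q, scale))
```

Each value is stored in one byte instead of four, and the rounding error per value is bounded by half the scale, which is the trade-off NPUs and Tensor Cores exploit when running INT8 inference.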

4. Standardized Toolchains and Open-source Ecosystem

- Cross-compilation Support: Build tools and frameworks such as Bazel and Qt simplify cross-platform development, for example when porting TensorFlow Lite to ARM.

CMake & AIBOX Firmware

Building applications that run across different operating systems, hardware platforms, and compilers is key to improving code reuse and reaching a broader user base. In practice, however, each computing environment has its own system characteristics, APIs, library dependencies, and compiler behavior. So while we strive to write highly portable source code, in many cases, especially for low-level interactions, performance optimizations, or integration of specific third-party libraries, we must confront and manage the differences these platforms and toolchains introduce.

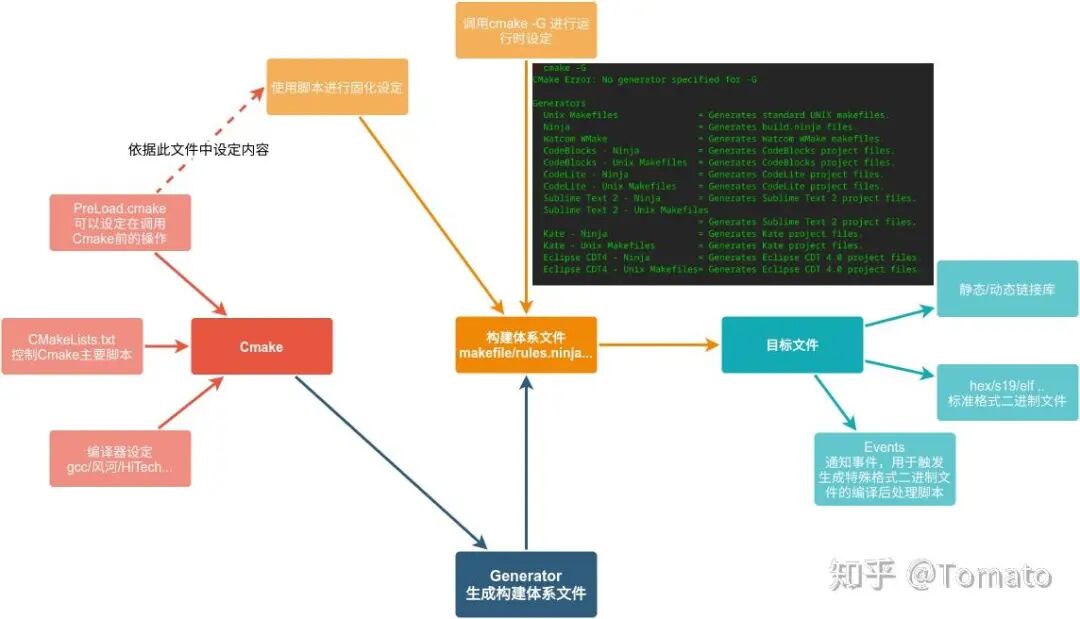

CMake, a powerful and widely used cross-platform build tool, provides a standardized way to describe a project’s build process and can generate native build systems (such as Visual Studio project files, Makefiles, and Ninja files) on various operating systems (such as Windows, Linux, and macOS). One of CMake’s core capabilities is detecting both the host environment it runs in and the target platform it builds for.

Although CMake provides powerful tools for handling platform differences, best practice is still to minimize platform dependencies in the code. Isolating platform-specific logic in separate modules or files behind an abstraction layer significantly improves maintainability and portability.

AIBOX, built with CMake, can be ported easily to different embedded hardware and operating systems. AIBOX firmware currently runs on Rockchip’s RK3588/RK3576 and NVIDIA’s Jetson Orin embedded hardware platforms. The embedded hardware platform used by DJI’s MiaoSuan 3 is the Jetson Orin Nano.
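As an illustration of keeping platform-specific logic in one place, the Python sketch below normalizes the architecture strings that different systems report and maps a target to a platform-specific module. CMake exposes the same information at configure time through `CMAKE_SYSTEM_NAME` and `CMAKE_SYSTEM_PROCESSOR`. The alias table, the `platform/<os>_<arch>` naming scheme, and the function names are assumptions for illustration and are not taken from AIBOX.

```python
import platform

# Hypothetical alias table: the same architecture is reported differently
# across systems (e.g. Windows "AMD64" vs Linux "x86_64"; macOS "arm64" vs Linux "aarch64").
_ARCH_ALIASES = {
    "x86_64": "x86_64",
    "amd64": "x86_64",
    "aarch64": "arm64",
    "arm64": "arm64",
    "armv7l": "arm",
    "riscv64": "riscv64",
}


def normalize_arch(machine: str) -> str:
    """Map a raw machine string to one canonical architecture name."""
    return _ARCH_ALIASES.get(machine.lower(), "unknown")


def platform_module(system: str, machine: str) -> str:
    """Return the (hypothetical) platform-specific module for a target.

    All platform knowledge lives here; the rest of the code base only uses
    the module name this function returns.
    """
    arch = normalize_arch(machine)
    if arch == "unknown":
        raise ValueError(f"unsupported architecture: {machine!r}")
    return f"platform/{system.lower()}_{arch}"


if __name__ == "__main__":
    # Detects the host; a cross build would pass the target's values instead.
    print(platform_module(platform.system(), platform.machine()))
```

Adding a new board then means adding an alias entry and a platform module, while every other file stays untouched.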

- CAPS (Cross-Platform AI Powered Solutions), “cross-platform AI software services”: breaking through AI computing boundaries and adapting flexibly across platforms. The core technology of CAPS is virtualization and a middleware framework, which let AI models adapt to different hardware architectures without cumbersome adjustments. As a result, for both server-side and edge-side AI solutions, it can significantly reduce development costs for enterprises and improve AI deployment efficiency.

MicroIP has successfully integrated the MediaTek Genio 700, enabling its AI module to run 10 different AI applications simultaneously and further demonstrating CAPS’s strengths in AI hardware and software integration. MicroIP showcased CAPS “cross-platform AI software services” at EW2025, covering multiple AI edge computing platforms such as MediaTek, Kneron, Xilinx, and Rockchip, and demonstrating the flexibility and performance of running AI across platforms, from AIoT devices to FPGA DPUs.

RK3576 vs. NVIDIA Jetson Orin Nano

Below is a detailed comparison between Rockchip’s RK3576 and NVIDIA’s Jetson Orin Nano, covering CPU, GPU/NPU, AI computing power, video codecs, memory and storage, interfaces, power consumption, and applicable scenarios:

1. CPU Performance Comparison

| Model | CPU Architecture | Core Count | Clock Speed | Process Technology |
|---|---|---|---|---|
| RK3576 | 4×Cortex-A72 + 4×Cortex-A53 | 8 cores | A72 up to 2.2GHz, A53 up to 1.8GHz | 8nm |
| Orin Nano | 6×Arm Cortex-A78AE | 6 cores | Up to 1.5GHz | 8nm |

Conclusion:

- RK3576 uses a traditional big.LITTLE architecture suited to multitasking, but its single-core performance is weaker.

- Orin Nano uses the more advanced Cortex-A78AE core, with stronger single-core performance suited to real-time computing tasks.

2. GPU and AI Computing Power

| Model | GPU/NPU | AI Computing Power | Architecture |
|---|---|---|---|
| RK3576 | Mali-G52 MC3 + 6 TOPS NPU | 6 TOPS (INT8) | ARM Mali |
| Orin Nano | 1024-core Ampere GPU + 32 Tensor Cores | 40 TOPS (sparse INT8) | NVIDIA Ampere |

Conclusion:

- RK3576’s NPU is suited to lightweight AI inference and supports models from various deep learning frameworks (TensorFlow/PyTorch).

- Orin Nano’s Ampere GPU and Tensor Cores give it a significant advantage in AI computing, especially for CUDA/TensorRT-optimized applications.

3. Video Codec Capabilities

| Model | Decoding Capability | Encoding Capability |
|---|---|---|
| RK3576 | 8K@30fps (H.265/AV1) | 4K@60fps (H.265) |
| Orin Nano | 4K@60fps (H.265) | Only 1080P@30fps (CPU software encoding) |

Conclusion:

- RK3576 is stronger in video encoding and decoding, suitable for 8K playback and multi-camera input.

- Orin Nano has weaker encoding capabilities, but its decoding capabilities are sufficient for robotic vision.

4. Memory and Storage

| Model | Memory | Storage | Expandability |
|---|---|---|---|
| RK3576 | 16GB LPDDR4x | eMMC 5.1 / UFS 2.1 | PCIe 2.0, SATA |
| Jetson Orin Nano | 8GB LPDDR5 (68GB/s) | Supports NVMe M.2 | PCIe Gen3 |

Conclusion:

- RK3576 has a larger memory capacity, suitable for multitasking AIoT devices.

- Orin Nano has higher bandwidth with its LPDDR5, suitable for low-latency AI computing.
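The bandwidth figure follows from a simple formula: peak bandwidth = (bus width in bits ÷ 8) × data rate. The sketch below assumes, from NVIDIA’s module specifications rather than this article, that the 8GB Orin Nano uses a 128-bit LPDDR5 bus at 4266 MT/s and the 4GB version a 64-bit bus at the same rate; with those inputs it reproduces the roughly 68GB/s listed in the table. These are theoretical peaks, and sustained bandwidth in real workloads is lower.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_mt_s: float) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (1 GB = 10**9 bytes).

    bytes per transfer = bus width / 8; MT/s * bytes / 1000 = GB/s.
    """
    return bus_width_bits / 8 * data_rate_mt_s / 1000


if __name__ == "__main__":
    print(peak_bandwidth_gb_s(128, 4266))  # 8GB module (assumed 128-bit bus)
    print(peak_bandwidth_gb_s(64, 4266))   # 4GB module (assumed 64-bit bus)
```

Run as a script, this prints `68.256` and `34.128`, which shows why halving the bus width on the smaller module also halves its bandwidth.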

Jetson Orin Nano and RK3576 differ significantly in peripheral design flexibility, mainly in core board solutions, memory expansion, and interface customization. Below are the limitations of the Orin Nano compared with the RK3576:

1: Core Board Solution Limitations

- Orin Nano: You must use NVIDIA’s official module (such as the Jetson Orin Nano 8GB/4GB core board). Users cannot design the SoC portion themselves and can only develop carrier boards around NVIDIA’s standard modules. The pin definitions are fixed; although some interfaces (such as PCIe, USB, and MIPI CSI) can be expanded, the overall architecture is constrained by NVIDIA’s hardware design.

- RK3576: Boards can be fully self-designed, with free customization of PCB layout, power management, and peripheral circuits, suiting specific industry needs (such as industrial control and commercial displays). Memory configuration is also more flexible (LPDDR4x up to 16GB, whereas Orin Nano is fixed at a maximum of 8GB).

Conclusion: Orin Nano’s core board solution limits deep hardware customization, while RK3576 allows complete self-design.

2: Memory Expandability

- Orin Nano: Memory capacity is fixed (4GB or 8GB LPDDR5) and cannot be upgraded or replaced with larger memory. Memory bandwidth is also limited (about 68GB/s on the 8GB version), which may become a bottleneck for AI inference.

- RK3576: Supports LPDDR4x up to 16GB, so users can choose the capacity they need, and it can be paired with higher-bandwidth memory (such as LPDDR5, which requires a dedicated design), suiting high-throughput applications.

Conclusion: Orin Nano’s memory is not upgradeable, while RK3576 can flexibly adjust its memory configuration.

3: Storage Interfaces

- Orin Nano: There is no eMMC; storage relies on an NVMe SSD or SD card, which may limit industrial applications. PCIe Gen3 lanes are also limited (7 lanes), which can constrain expansion of storage or peripherals.

- RK3576: Supports eMMC 5.1 / UFS 2.1, which better suits embedded devices, plus PCIe 2.0 and SATA 3.0 for flexibly matching various storage solutions.

Conclusion: Orin Nano’s storage options are relatively limited, while RK3576 adapts better to diverse storage needs.

4: Peripheral and Interface Flexibility

- Orin Nano: The MIPI CSI-2 camera interface is fixed (up to 8 virtual channels) and physical interfaces cannot be added; there are only 3 USB 3.2 Gen2 ports, which may be insufficient when expanding multiple peripherals.

- RK3576: The number of camera interfaces can be customized (e.g., 3×4-lane MIPI CSI-2), and it supports dual Gigabit Ethernet and WiFi 6/5G modules, giving stronger network expandability.

Conclusion: Orin Nano has a fixed number of interfaces, while RK3576 can optimize its peripheral configuration for specific scenarios.

5: Power Consumption and Thermal Design

- Orin Nano: TDP is 7-15W but can reach 25W in Super Mode under high load. It relies on NVIDIA’s recommended cooling solutions, fanless designs are limited, and sustained high load may cause frequency throttling.

- RK3576: Lower power consumption (1.2W-6W) suits fanless embedded devices, and the cooling solution can be customized (such as passive cooling or a small fan).

Conclusion: Orin Nano’s cooling solution is relatively fixed, while RK3576 can optimize power consumption to adapt to harsh environments.

Limitations of Orin Nano vs. Advantages of RK3576

| Feature | Jetson Orin Nano | RK3576 |
|---|---|---|
| Core Board Solution | Must use NVIDIA module | Fully self-designed |
| Memory Expandability | Fixed 4GB/8GB LPDDR5 | Customizable 16GB LPDDR4x |
| Storage Interfaces | Only NVMe/SD card | Supports eMMC/UFS/SATA |
| Peripheral Interfaces | Fixed MIPI/USB/PCIe | Customizable camera/network |
| Thermal Design | Relies on official recommended solutions | Can optimize fanless solutions |

Development Kit Cost

| Model | Development Board Price | Expandability |
|---|---|---|
| RK3576 | About 100-200 USD | Open hardware design, can be freely modified |
| Jetson Orin Nano | 249 USD (official development kit) | Must use NVIDIA design |

Conclusion:

RK3576 development boards are cheaper and support customization (such as changing memory or storage).

The Orin Nano development kit has a fixed price but includes the JetPack SDK and CUDA ecosystem support.

Applicable Scenarios and Expandability

| Feature | RK3576 | Jetson Orin Nano |
|---|---|---|
| Core Board Design | Supports integrated design | Must use NVIDIA official module |
| Cooling Solutions | Fanless or small heatsink | Recommended active cooling (under high load) |
| Industrial Applicability | Wide temperature support (-30°C to 80°C) | Operating temperature 0°C to 80°C |
| Expansion Interfaces | Can freely add dual Ethernet, WiFi 6, etc. | Fixed interfaces (PCIe Gen3, MIPI CSI-2) |

Summary:

If you need an ultra-small, lightweight, self-designed solution, choose RK3576 (for example, for industrial control, portable devices, robotics, and drones).

Size and Weight Comparison

| Model | Development Board/System Weight | Cooling | Remarks |
|---|---|---|---|
| RK3576 | About 100g | Fanless design, metal casing optional | Suitable for lightweight embedded devices |
| Orin Nano | About 200-300g (including heatsink) | Officially recommended active cooling solution | May require additional cooling modules under high load |

Conclusion:

RK3576 is lighter (core board only 20-30g), suitable for fanless, low-power designs.

Physical Size Comparison

| Model | Size (Length × Width) | Core Board/Development Board Form | Remarks |
|---|---|---|---|
| RK3576 | 70mm × 50mm | Self-integrated design | Suitable for industrial embedded devices, flexible layout adjustment |
| Orin Nano | About 100mm × 80mm | Must use NVIDIA official module; carrier board can be customized but core board is fixed | Size cannot be adjusted |

Conclusion:

Comparing core boards alone, the RK3576 core board is also smaller (60×42mm vs. 70×45mm for the Orin Nano module), and it supports self-designed carrier boards, offering higher flexibility.