The increasing intelligence in vehicles is leading to a greater reliance on some technologies that were previously marginalized.

The level of autonomy in vehicles is continuously increasing, fundamentally changing the technologies people choose, how they are used, how they interact, and how they will evolve throughout the vehicle’s lifecycle.

The entire vehicle architecture is being reshaped to enable artificial intelligence to be applied across a wide range of functions, prompting increased investment in previously marginalized technologies and a closer integration of the perception sensors needed by both human drivers and machines. Therefore, while all technologies will improve, especially in terms of safety, the combination and application of these technologies are changing.

One of the most obvious changes involves radar—an acronym for radio detection and ranging—a technology that is well-established and understood by most engineers. In the past, radar was sidelined due to a lack of resolution. However, it is increasingly becoming a hallmark of automotive transformation.

Radar, LiDAR, and Cameras

Unlike cameras and LiDAR, which are based on optical principles, radar operates on the propagation of radio waves. Amit Kumar, Director of Product Management at Cadence’s Computational Solutions Division, states, “Radar works by continuously transmitting radio waves, with the frequency increasing/decreasing in a known pattern over time. The radio waves propagate until they encounter an object and reflect back to the radar receiver. The advantage of radar over vision-based systems is its inherent ability to detect Doppler or frequency shifts. This helps the backend system calculate the distance, direction, and speed of objects, which are fundamental elements of several ADAS functions the vehicle will perform.”
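As a rough illustration of the relationships Kumar describes, the sketch below applies the standard linear-FMCW formulas: the beat frequency between the transmitted and received chirp is proportional to range, and the Doppler shift is proportional to radial speed. The function names and the example numbers (77 GHz carrier, 1 GHz sweep over 50 microseconds) are assumptions chosen for illustration and do not come from the article.

```python
C = 299_792_458.0  # speed of light in m/s


def range_from_beat(beat_hz, bandwidth_hz, chirp_s):
    """Target range from the beat frequency of a linear FMCW chirp."""
    slope = bandwidth_hz / chirp_s  # chirp slope in Hz per second
    return C * beat_hz / (2.0 * slope)


def velocity_from_doppler(doppler_hz, carrier_hz):
    """Radial speed from the Doppler shift (positive means approaching)."""
    wavelength = C / carrier_hz
    return doppler_hz * wavelength / 2.0


# Assumed example values, not taken from any specific sensor.
r = range_from_beat(beat_hz=13.34e6, bandwidth_hz=1e9, chirp_s=50e-6)
v = velocity_from_doppler(doppler_hz=5133.0, carrier_hz=77e9)
print(f"range ~ {r:.1f} m, radial speed ~ {v:.2f} m/s")
```

The factor of two in both formulas accounts for the round trip of the wave to the object and back.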

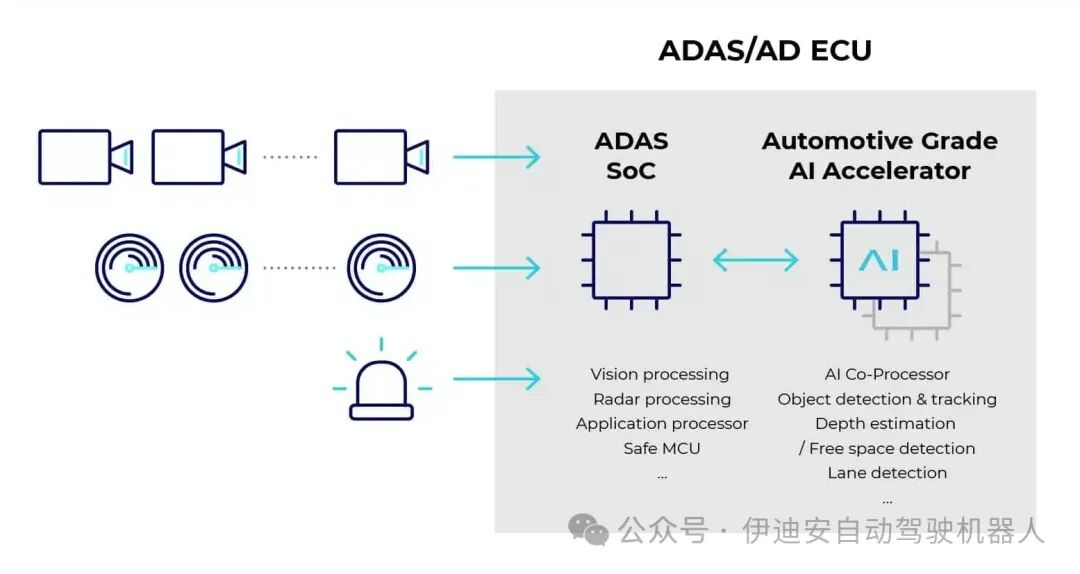

A classic radar processing chain starts with a multi-antenna front end (MIMO, or multiple input, multiple output) that transmits radio waves and receives their reflections. Range information (via a fast Fourier transform, or FFT), Doppler information (via a second FFT), and angle information (azimuth and elevation) are then extracted on processing blocks such as DSPs or hardware accelerators. The result is a 3D point cloud that tells the vehicle where it is relative to its surroundings and identifies the objects within that frame.
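The range and Doppler stages of such a chain can be sketched in a few lines. This is a minimal toy, not a production pipeline: it uses a naive DFT in place of an FFT, works on synthetic single-target data, and omits angle estimation entirely. All names and sizes (`range_doppler_map`, 8 chirps of 16 samples, the target bins) are hypothetical.

```python
import cmath


def dft(x):
    """Naive discrete Fourier transform (stand-in for an FFT on small inputs)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def range_doppler_map(cube):
    """cube[chirp][sample] -> magnitudes indexed [doppler_bin][range_bin]."""
    num_chirps, num_bins = len(cube), len(cube[0])
    # Range transform: along the samples within each chirp (fast time).
    profiles = [dft(chirp) for chirp in cube]
    # Doppler transform: across chirps for each range bin (slow time).
    columns = [dft([profiles[m][r] for m in range(num_chirps)])
               for r in range(num_bins)]
    return [[abs(columns[r][d]) for r in range(num_bins)]
            for d in range(num_chirps)]


def strongest_cell(rd_map):
    """Return (doppler_bin, range_bin) of the largest magnitude."""
    cells = ((d, r) for d in range(len(rd_map)) for r in range(len(rd_map[0])))
    return max(cells, key=lambda dr: rd_map[dr[0]][dr[1]])


# Synthetic single target: values chosen purely for illustration.
NUM_CHIRPS, NUM_SAMPLES = 8, 16
TRUE_RANGE_BIN, TRUE_DOPPLER_BIN = 5, 2
cube = [[cmath.exp(2j * cmath.pi * (TRUE_RANGE_BIN * n / NUM_SAMPLES
                                    + TRUE_DOPPLER_BIN * m / NUM_CHIRPS))
         for n in range(NUM_SAMPLES)]
        for m in range(NUM_CHIRPS)]

peak = strongest_cell(range_doppler_map(cube))
print(peak)
```

In a real sensor the peak cells would be converted to physical range and speed, and a further transform across the antenna channels would supply the angle for each point in the cloud.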

“As autonomous driving (AD) technology matures and consumer understanding increases, the specific conditions under which AD functions can operate safely (also known as the operational design domain (ODD)) will determine their practicality and desirability,” notes Guilherme Marshall, Director of Automotive Go-to-Market for Arm in Europe, the Middle East, and Africa. “For example, in areas where fog, rain, or snow are prevalent, you want your car to incorporate these conditions into its AD ODD. However, despite recent technological advances in image-based perception, low visibility significantly reduces its performance.”

Radar, however, is a cost-effective complement to cameras. In the primary compute path, multimodal camera-plus-radar systems can improve perception performance under challenging conditions. At SAE levels 3 and above, high-definition radar (and/or LiDAR) can also be used for redundancy.

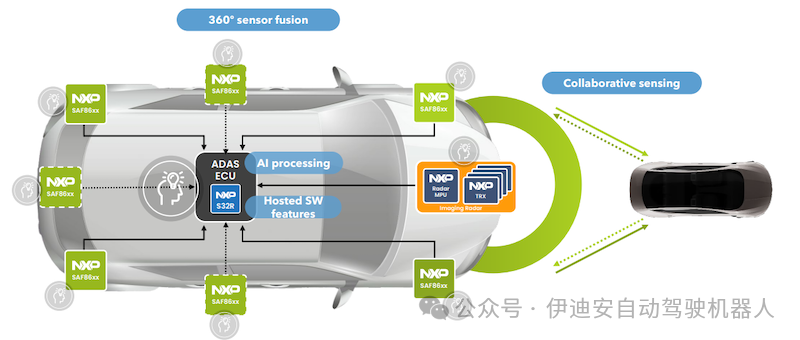

In a few years, SAE level 3 and 4 vehicles may be equipped with up to nine radars, including those for internal sensing. “To control the bill of materials while also paving the way for software-defined sensors, OEMs are increasingly seeking to ‘simplify’ radar sensor nodes. In future E/E architectures, radar preprocessing algorithms may be executed more centrally in existing HPCs (such as regional and/or AD controllers),” Marshall says.

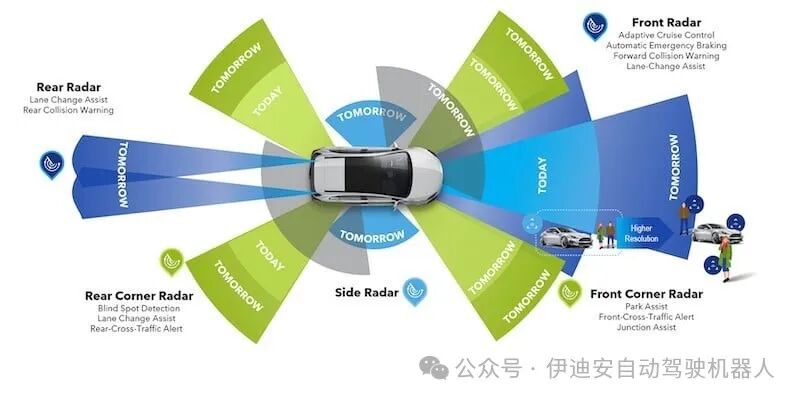

The ADAS functions a vehicle can support depend on the type of radar installed. For example:

-

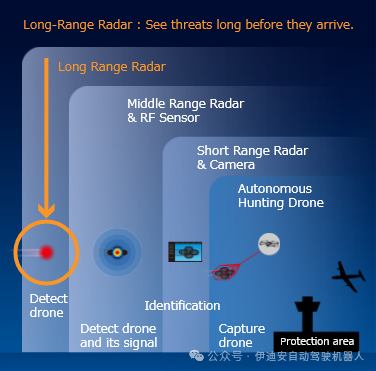

Long-range radar (LRR) will enable functions such as long-distance object detection (e.g., 300 meters at narrow angles) and assist with automatic emergency braking (AEB) collision warnings and adaptive cruise control.

-

Mid-range radar (MRR) can typically detect objects up to 150 meters away and has a wider angle range, aiding in issuing cross-traffic alerts or detecting vehicles approaching intersections.

-

Short-range radar (SRR) has a very wide angle range but does not see far. It is used for functions closer to the vehicle, such as cyclist and pedestrian detection, rear collision warnings, lane change assistance, etc.
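The three radar classes above can be captured in a small lookup table. The sketch below is only an illustration of that mapping: the class and function names are hypothetical, the LRR and MRR ranges come from the text, and the 30 m figure for SRR is an assumed placeholder because the text gives no number.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RadarClass:
    name: str
    max_range_m: float
    field_of_view: str
    functions: tuple


# Ordered from shortest to longest reach.
RADAR_CLASSES = (
    # 30 m is an ASSUMED placeholder; the article states no SRR range.
    RadarClass("SRR", 30.0, "very wide",
               ("cyclist and pedestrian detection", "rear collision warning",
                "lane change assistance")),
    RadarClass("MRR", 150.0, "wide",
               ("cross-traffic alert", "intersection approach detection")),
    RadarClass("LRR", 300.0, "narrow",
               ("automatic emergency braking", "adaptive cruise control")),
)


def shortest_class_covering(distance_m):
    """Return the shortest-range class that still reaches distance_m, or None."""
    for radar in RADAR_CLASSES:
        if distance_m <= radar.max_range_m:
            return radar
    return None


for d in (10, 100, 200, 400):
    match = shortest_class_covering(d)
    print(d, match.name if match else "out of range")
```

A table like this is one way a configurable platform could expose its range options, though real products will define their own parameters.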

“Frequency Modulated Continuous Wave (FMCW) radar is currently the most commonly used in passenger and commercial vehicles,” says Kumar from Cadence. “It is very precise, allowing for more accurate distance and speed measurements, thereby reducing false positives. It can also measure the distance and speed of multiple objects simultaneously. When deployed alongside vision systems and LiDAR, it can create a complete perception sensor suite. Today’s vehicle architectures employ multiple sensor redundancies, enabling vehicles to operate safely in semi-automated or fully automated modes.”

Radar is a key part of ADAS applications. Although mandates do not specify how ADAS should be implemented, ADAS itself is becoming both more prevalent and more frequently mandated. Implementations may be all-camera systems or a mix of cameras and radar. “Unless the application can be safely implemented with just one system (camera-based or radar-based), the industry predicts that both types of sensors will continue to advance,” says Ron DiGiuseppe, Manager of Automotive IP at Synopsys. “If we look at some forecasts, we see that the shipment of radar in vehicles continues to grow. In my view, OEMs will use both cameras and radar simultaneously, and this trend will continue, so the use of radar will not decrease.”

The number of radars in vehicles varies depending on the OEM and application. For certain autonomous driving implementations, Cruise includes over ten radars, while Google Waymo has six. “The number varies by vehicle, but six is the standard number—one forward radar, one rear radar, and then short-range radars for blind spot detection,” DiGiuseppe says. “If you want internal digital cockpit occupant monitoring, that number will change. You could add two more internally, so it varies based on the number of applications.”

Better Radar

Scalable radar solutions are also evolving alongside traditional radar solutions. “For example, you might install mid-range radar at the corners of the vehicle, and at the front of the vehicle, you are likely to install long-range radar because the goal is to have very good predictions of what is about to happen,” says Adiel Bahrouch, Director of Business Development for Silicon IP at Rambus. “The same goes for rear radar; on the sides, you might install mid-range or short-range radar. These are all radars, but you will see different technologies because the purposes and targets of the radar are slightly different. I have also seen some solutions where developers are trying to provide a configurable platform, meaning that by changing the configuration, you will also change the radar’s range to support certain purposes or targets, which means a scalable solution. You have a baseline, and then you can choose all options in a single package or chip set, which OEMs really like because it gives them a single point of contact, a solution, a configurable platform. Then, depending on the purpose, you will serve certain applications.”

Radar is a mature technology, but in the automotive field, especially in autonomous driving, radar alone is not enough, so further development and efforts to improve radar performance are needed. “For example, when we compare cameras and radar, the information content of cameras is very rich, and the resolution is very high,” Bahrouch explains. “It can distinguish colors, shapes, etc. Radar has some unique characteristics, but in terms of resolution and quality, it is very poor. However, cameras have many good features and are inexpensive. OEMs like cameras; they perform well, but only if the lighting and weather conditions are good. When faced with extreme weather or lighting conditions, cameras perform poorly, and that is where radar excels. Radar is not sensitive to lighting or weather conditions. It is reliable. This technology is well-known. But that said, it performs poorly in terms of resolution. For example, when we talk about 3D radar (the traditional radar in use), 3D refers to radar that can detect distance, angle/direction, and speed. Radar can provide this information in a single measurement.”

Radar's advantage comes from transmitting radio-frequency electromagnetic waves and measuring their reflections in terms of delay and frequency shift, which yield distance and speed. Because it works at radio rather than optical wavelengths, it is largely insensitive to light, fog, or rain. However, because radar carries less information than cameras or LiDAR, the industry has invested heavily in developing the next generation, 4D radar, which can also measure height. “With 3D radar, you cannot detect whether it is a large vehicle or a small vehicle, a building or a non-building,” Bahrouch points out. “With cameras, you can also obtain this information, which is why 4D radar is being introduced. It is also known as imaging radar, which improves the performance of traditional radar in terms of quality, resolution, and information because now you can also detect or distinguish buildings, cars, tunnels, pedestrians, etc.”
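To make the 3D versus 4D distinction concrete, the sketch below converts a detection into Cartesian coordinates. It is a simplified model under stated assumptions: the `Detection` type, the axis convention (x forward, y left, z up), and the example targets are all hypothetical. Without an elevation angle, a stopped car and an overhead sign at the same range and azimuth are indistinguishable; with elevation, their heights differ.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Detection:
    range_m: float
    azimuth_deg: float
    radial_speed_mps: float
    elevation_deg: Optional[float] = None  # reported only by 4D (imaging) radar


def to_cartesian(det):
    """Return (x forward, y left, z up) in meters; z is None without elevation."""
    az = math.radians(det.azimuth_deg)
    if det.elevation_deg is None:
        # 3D radar: height is unknown, so only the horizontal position is given.
        return (det.range_m * math.cos(az), det.range_m * math.sin(az), None)
    el = math.radians(det.elevation_deg)
    ground = det.range_m * math.cos(el)  # projection onto the horizontal plane
    return (ground * math.cos(az), ground * math.sin(az),
            det.range_m * math.sin(el))


# Illustrative targets: a stopped car and an overhead sign, both 50 m ahead.
car_3d = Detection(50.0, 0.0, 0.0)
sign_3d = Detection(50.0, 0.0, 0.0)
car_4d = Detection(50.0, 0.0, 0.0, elevation_deg=0.0)
sign_4d = Detection(50.0, 0.0, 0.0, elevation_deg=6.0)

print(to_cartesian(car_3d) == to_cartesian(sign_3d))  # same point for 3D radar
print(to_cartesian(car_4d)[2], to_cartesian(sign_4d)[2])
```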

Figure: Example of NXP Radar SoC

Integration with Other Technologies

How well radar will hold its position over time remains to be seen, especially as vehicle intelligence increases and more AI-driven advanced features enter automotive architectures. But it does indicate how much change is still possible in vehicles.

David Fritz, Vice President of Hybrid and Virtual Systems at Siemens Digital Industries Software, states, “If you just want to implement ADAS functions, then there is no doubt that radar plays a role, and it has been around for a long time. Humans cannot perceive certain things under certain conditions, but radar can, which can be very useful. It is excellent for emergency braking, cars in blind spots, etc., so there is no doubt that radar is useful. But as we move from ADAS to autonomous driving, the complexity of the sensor array is inversely proportional to the intelligence of the vehicle. If you have one of these new Focused Transformer (FoT) AI solutions, we are now seeing these solutions emerge, they will infer, ‘I see snow. There is fog. I should slow down.’ But in ADAS, you still need driver involvement. The driver may not be good at driving in the snow; they may think that just because it is snowing, they can still drive at 45 miles per hour because that is the speed limit. Having additional sensors to compensate for experiential differences is a good thing.”

In making vehicles smarter, the trend is that the complexity and cost of the sensor array decrease in proportion to the increase in intelligence. “This is because you are competing with human drivers,” Fritz points out. “We don’t have radar. We have experience to take on that role, and if we can enhance our vision, wear glasses or something, then we have a smarter camera. Additionally, other sensing methods like cameras are also evolving to prevent them from failing in adverse weather conditions. There is a lot happening, and cameras may be the most cost-effective method. We are now seeing vehicles equipped with 12 to 18 cameras, and if we look at things from multiple angles and have intelligence to process that information, overall, the demand for radar seems to be decreasing.”

Bahrouch from Rambus also hopes to see AI technology in ADAS used to enhance camera or radar information. “Assuming the camera can recognize patterns and speed tags, etc., but to recognize them, you need to train the system to be able to recognize them. The training part is where AI technology comes in to achieve this. For radar, there is some research around how to train this data, learning from existing history and information, and predicting what will happen in the future. The same goes for camera pattern recognition, and a lot of training has been done there. Then, when we combine these different technologies, there will also be a lot of training, and AI will play a role.”

Looking ahead to level 4 and 5 autonomous vehicles, AI will be a key technology driver. For now, although it will not always be this way, development is heading toward extremes. “We are seeing systems with 64 and 128 CPU cores,” Fritz observes. “This is inversely proportional to intelligence. In other words, if you have many heuristic algorithms, many algorithms running and checking each other, then regardless of what the sensor array provides, you may need many CPU cores to gain that knowledge to make informed decisions. But for many new AI technologies, this is not an effective approach. You may need four or eight CPU cores, but you also have other fine-tuned accelerators that can process this information more efficiently and then feed that data into the CPU complex so it can make the informed decisions that need to be made. AI models will change the number of cores and push the necessary intelligence to the edge of the vehicle, closer to the sensors. We see this happening everywhere. The benefit of this solution is that if you have a minor collision, it is not a $20,000 problem. You replace a camera that costs $1.50, so in the long run, this is more cost-effective for the vehicle, maintenance, and fleet survival.”

Figure: Introduction to NXP Radar Solutions

Connecting Everything Together, but Differently

In short, this means applying AI locally starting from the sensors. Radar, cameras, and LiDAR will increasingly connect to other systems, all of which need to be adjusted based on whether the vehicle provides driving assistance, limited autonomous driving capabilities, or full autonomous driving. For example, touch screens need to be managed very differently at each level.

“Now, everyone thinks AI is just asking the co-driver what to do,” says Satish Ganesan, Senior Vice President and General Manager of Synaptics’ Intelligent Sensing Division. “It is much more than that. We want to use distributed AI to enhance the usability of products, making the end-user feel better. Therefore, we have AI processors primarily used for different applications. For example, in the automotive field, we will do localized dimming of glass. But we also developed a technology that if the driver touches the passenger screen, it will not respond. It enhances safety by saying, ‘Oh no, you cannot reach out to do that.’ If the road is icy, it won’t let you play with anything. We will detect the angle of the touch and sensors on the seat and then say, ‘Hey, it is not coupled with the right person. We won’t let you touch that screen.’”

As vehicle autonomy continues to increase, these interactions may undergo significant changes. “There are two paths to take,” says Peter Schaefer, Executive Vice President and Chief Strategy Officer at Infineon Technologies. “The first path is to continuously improve and provide customers with autonomous driving capabilities. The second path is fully autonomous vehicles. We must prepare the vehicle architecture to become a software-defined car, which means we can bring software and services to the vehicle and to consumers throughout the vehicle’s lifecycle. As consumers, we want to upgrade after purchasing a product. We also want the vehicle to have all these innovative features and strong performance. We do not want it to stagnate or become non-functional. It needs to remain functional at all times.”

Original article: semiengineering.com/radar-ai-and-increasing-autonomy-are-redefining-auto-ic-designs

Author: Ann Mutschler. Translated by: Joyce. Edited by: Mike.

This article is based on the Creative Commons text sharing agreement: https://creativecommons.org/licenses/by-sa/4.0/