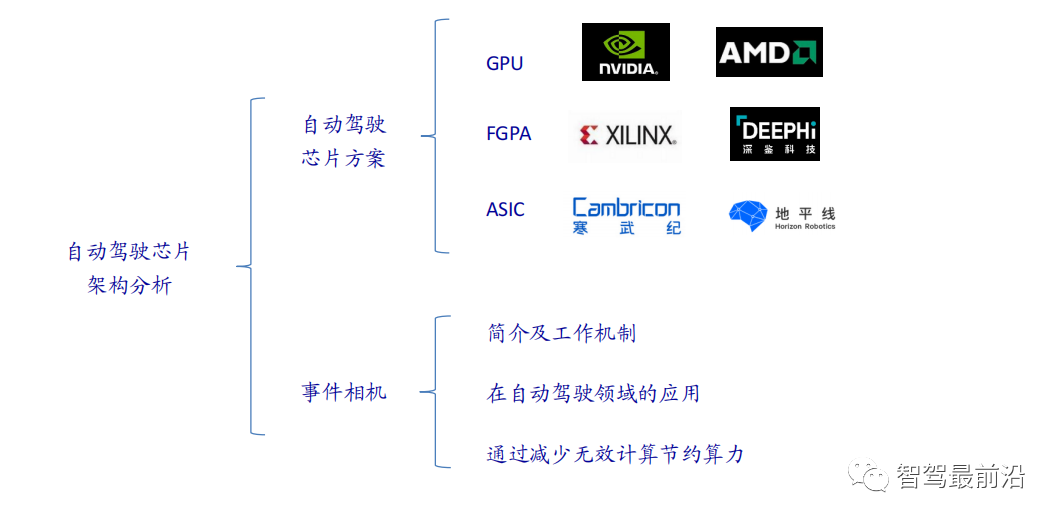

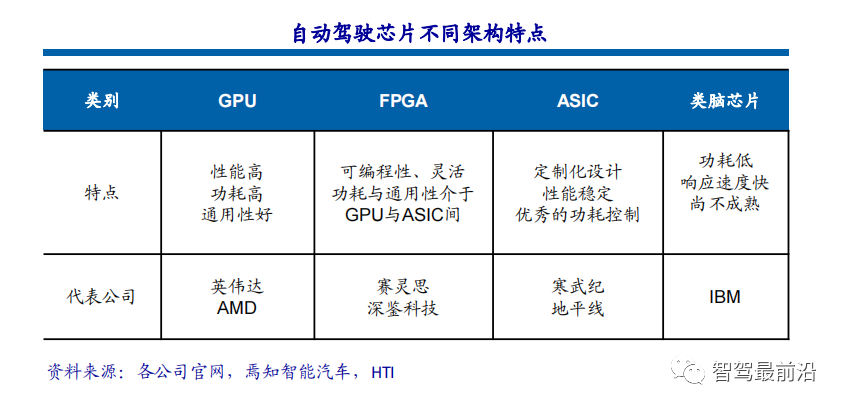

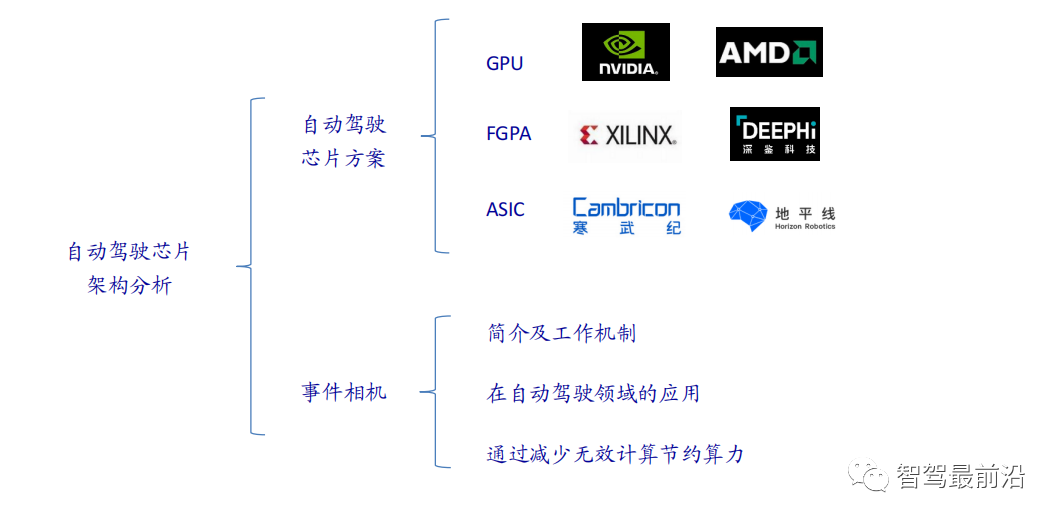

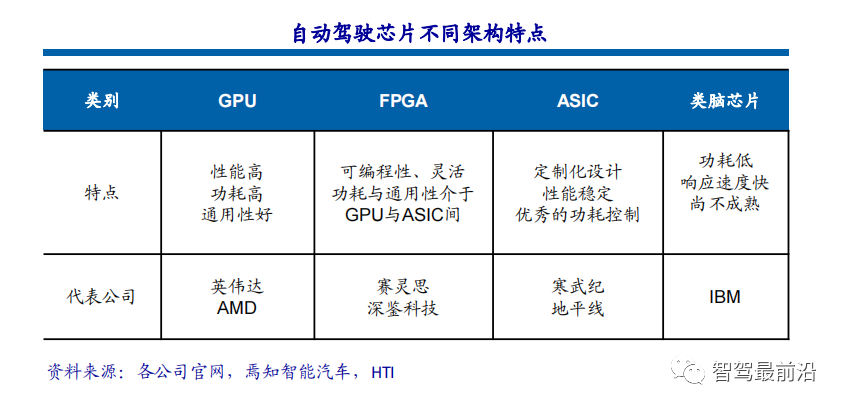

Mainstream AI chips currently fall into three categories: GPU, FPGA, and ASIC. GPUs and FPGAs are relatively mature architectures and count as general-purpose chips, while an ASIC is customized for a specific AI scenario. The industry broadly agrees that the CPU is poorly suited to AI computation itself, yet it remains indispensable in AI applications.

Comparison between GPU and CPU Architectures

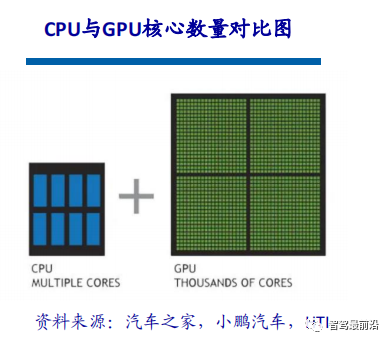

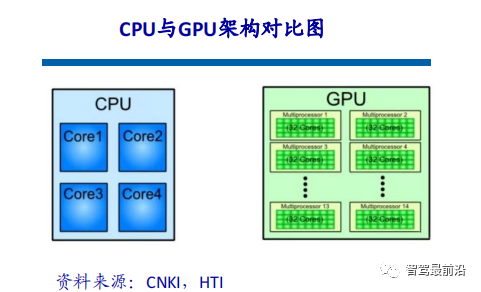

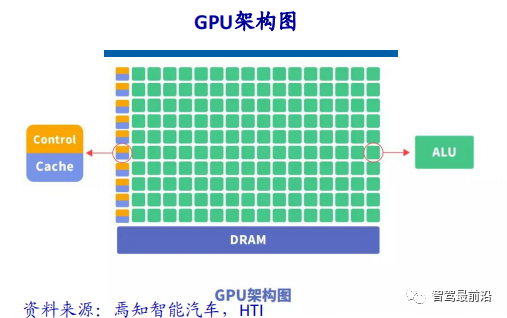

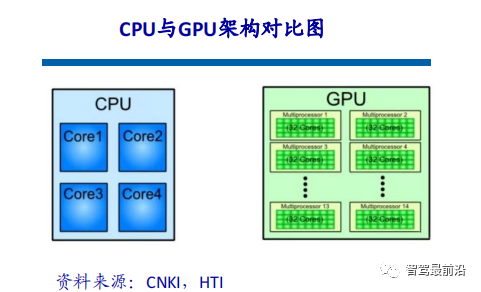

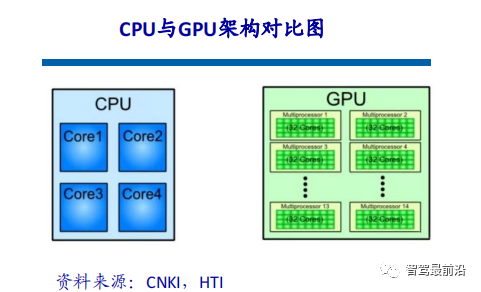

The CPU follows the von Neumann architecture, whose core idea is to store programs and data and execute instructions serially. The CPU therefore devotes a large share of its die area to storage units (Cache) and control units (Control), while the computational units (ALU) occupy only a small part. This limits the CPU’s capacity for large-scale parallel computation and makes it comparatively better at logical control.

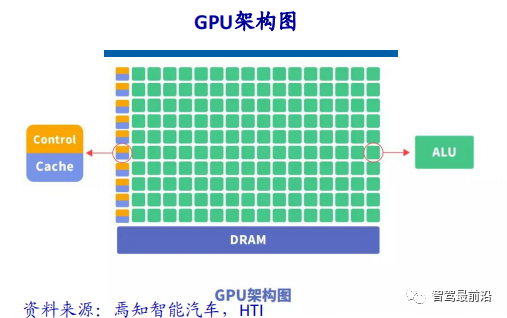

The GPU (Graphics Processing Unit) is a massively parallel architecture built from a large number of computing units. It was originally split off from the CPU to handle the data-parallel workloads of image processing and is designed to run many parallel computing tasks at once. The GPU also contains basic computing units, control units, and storage units, but its architecture differs significantly from that of the CPU, as shown in the architecture diagram below.

ALUs occupy less than 20% of the chip area in a CPU, but more than 80% in a GPU. The GPU therefore has far more ALUs available for data-parallel processing.

Differences between GPU and CPU

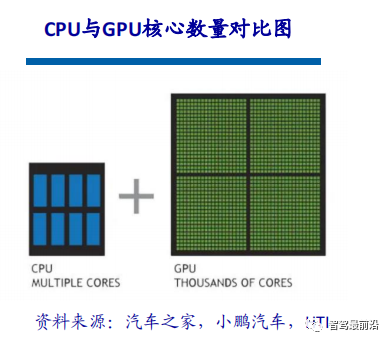

The CPU consists of several cores optimized for sequential serial processing, while the GPU has a large-scale parallel computing architecture composed of thousands of smaller, more efficient cores designed for simultaneous processing of multiple tasks.

The significant differences between CPU and GPU arise from their design goals, targeting two different application scenarios. The CPU requires strong generality to handle various data types, while logical judgments introduce numerous branch jumps and interrupts. This complexity makes the internal structure of the CPU exceptionally complicated. Conversely, the GPU is faced with highly uniform, independent large-scale data and a pure computing environment that does not require interruptions.

Overview of GPU Acceleration Technology

For deep learning, hardware acceleration currently relies primarily on graphics processing units. Compared with a traditional CPU, a GPU offers core computing capability several orders of magnitude greater and is better suited to parallel computing.

The multi-core architecture of GPU contains thousands of stream processors that can execute computations in parallel, significantly reducing model computation time. With companies like NVIDIA and AMD continuously advancing their GPU support for large-scale parallel architectures, GPU for general computing has become a crucial means to accelerate parallel applications.

Currently, GPU has reached a relatively mature stage. Utilizing GPU to train deep neural networks can fully leverage the efficient parallel computing power of its thousands of computing cores, drastically reducing time and requiring fewer servers in scenarios that use massive training data. If appropriately optimized for suitable deep neural networks, a single GPU card can equate to the computing power of dozens or even hundreds of CPU servers, making GPU the preferred solution in the industry for training deep learning models.

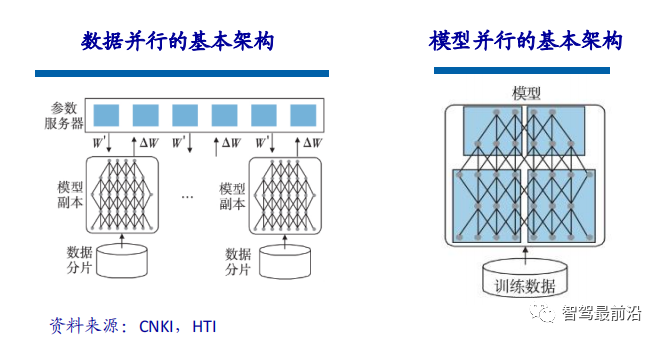

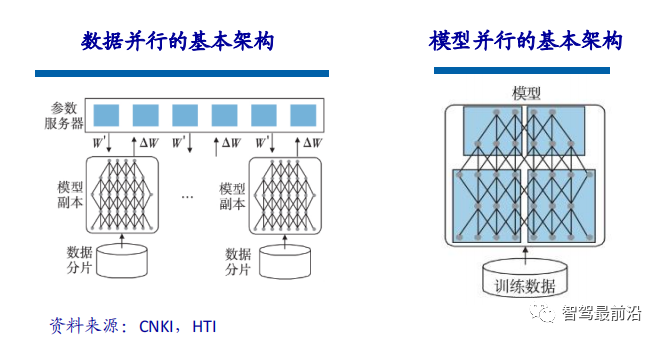

When the scale of the trained model is relatively large, model training can be accelerated through data parallelism, which involves partitioning the training data and using multiple model instances to train multiple chunks of data simultaneously. In the implementation of data parallelism, since the same model is used for training with different data, the bottleneck affecting model performance lies in parameter exchanges between multiple CPUs or GPUs. According to the parameter update formula, all gradients computed by the models need to be submitted to the parameter server and updated to the corresponding parameters. Therefore, the division of data chunks and the bandwidth with the parameter server may become bottlenecks limiting the efficiency of data parallelism.
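The data-parallel scheme described above can be sketched in a few lines. This is a minimal illustration, not any particular framework's implementation: the one-parameter model, the round-robin `split`, the learning rate, and all function names are assumptions made for the example, and the "workers" run sequentially in one process.

```python
# Minimal sketch of synchronous data parallelism with a parameter server.
# Model: y = w * x fitted with squared error; all names are illustrative.

def gradient(w, chunk):
    """Mean gradient of 0.5 * (w*x - y)^2 over one data chunk."""
    return sum((w * x - y) * x for x, y in chunk) / len(chunk)

def split(data, n_workers):
    """Partition the training data into n_workers chunks (round-robin)."""
    return [data[i::n_workers] for i in range(n_workers)]

def train(data, n_workers=4, lr=0.05, steps=200):
    w = 0.0  # the single parameter held by the parameter server
    chunks = split(data, n_workers)
    for _ in range(steps):
        # Each worker computes a gradient on its own chunk using the same w.
        grads = [gradient(w, chunk) for chunk in chunks]
        # The server averages the submitted gradients and updates w.
        w -= lr * sum(grads) / len(grads)
    return w

data = [(x, 3.0 * x) for x in range(1, 9)]  # targets generated with w = 3
w_fit = train(data)
```

Every step requires all workers to send a gradient to the server and receive the updated parameter back, which is why the exchange bandwidth, rather than the arithmetic, tends to limit scaling.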

In addition to data parallelism, model parallelism can also be used to accelerate model training. Model parallelism refers to splitting a large model into several partitions, held by multiple training units, which collaborate to complete the training of the large model.

GPU Accelerated Computing

GPU accelerated computing refers to the simultaneous use of graphics processing units (GPUs) and CPUs to accelerate the running speed of scientific, analytical, engineering, consumer, and enterprise applications. The GPU accelerator was first introduced by NVIDIA in 2007 and has since supported high-efficiency data centers for government laboratories, universities, companies, and small to medium-sized enterprises worldwide. GPUs can accelerate applications running on platforms ranging from cars, mobile phones, and tablets to drones and robots.

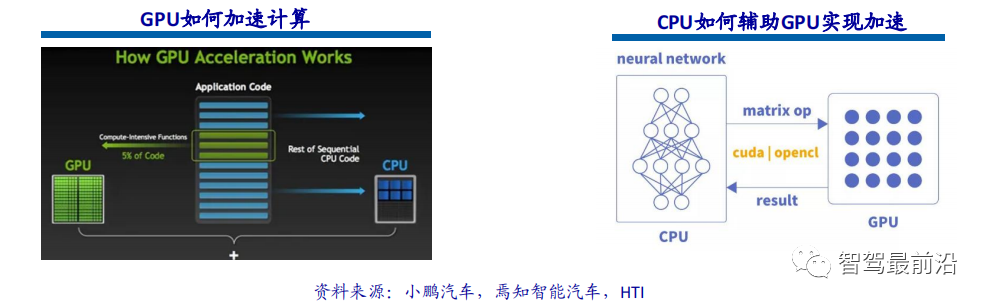

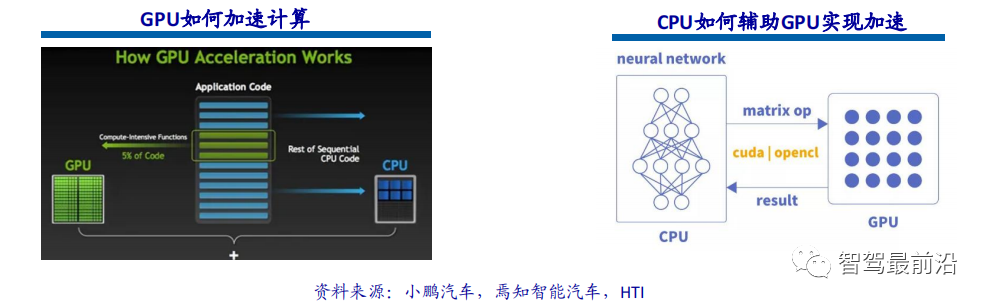

GPU accelerated computing can provide extraordinary application performance by offloading compute-intensive portions of application workloads to the GPU, while the CPU continues to run the rest of the program code. From the user’s perspective, the application runs noticeably faster.

Currently, GPU is only used for parallel matrix multiplication and addition operations, while the construction of neural network models and data flow transmission still occurs on the CPU. The interaction process between CPU and GPU includes: obtaining GPU information, configuring GPU ID, loading neuron parameters to GPU, GPU accelerating neural network computation, and receiving GPU computation results.

Why GPUs Are So Important in Autonomous Driving

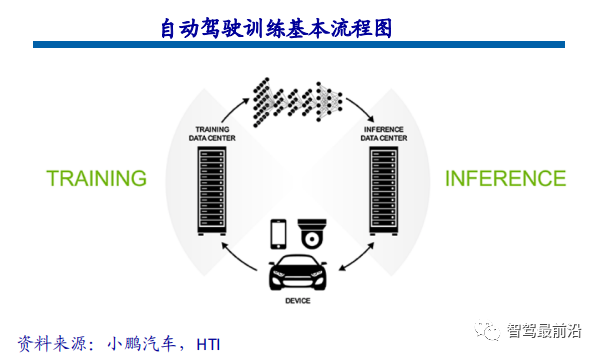

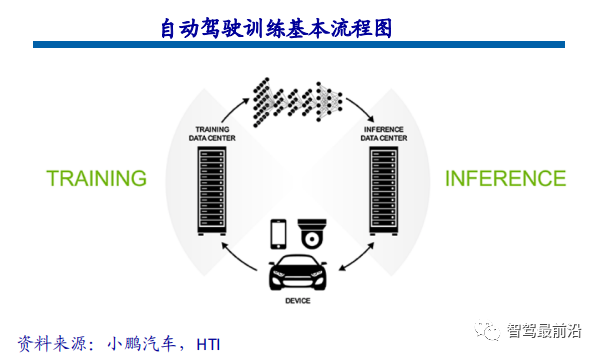

One of the most critical technical domains in autonomous driving technology is deep learning. AI based on deep learning architectures is now widely applied in various fields of the automotive industry, including computer vision, natural language processing, sensor fusion, target recognition, and autonomous driving. From autonomous driving startups to internet companies and major OEM manufacturers, all are actively exploring the use of GPUs to build neural networks for achieving ultimate autonomous driving.

Since its birth, GPU accelerated computing has provided a multi-core parallel computing architecture for enterprise data, supporting data sources that earlier CPU architectures could not handle. By comparison, for the same deep learning training task, a GPU computing cluster costs only one two-hundredth (1/200) as much as a CPU computing cluster.

GPUs Are the Key to Autonomous Driving and Deep Learning

Whether enabling cars to perceive their surrounding environment in real-time or quickly plan driving routes and actions, all these require the rapid response of a car’s brain, posing a significant challenge to computer hardware manufacturers. The process of autonomous driving constantly requires deep learning or AI algorithms to cope with infinite possible situations, and the vigorous development of AI, deep learning, and autonomous driving has ushered in a golden age for GPU computing.

Another important parameter of a GPU is its floating-point computing capability. Floating-point arithmetic represents a number with a mantissa and an exponent, in formats of different bit lengths, whereas fixed-point arithmetic keeps the position of the radix point fixed. The iterative process of autonomous driving algorithms requires a high degree of precision, which necessitates support for floating-point operations.
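A small experiment illustrates why iterative algorithms can be sensitive to the number format. The sketch below assumes a fixed-point format with 8 fractional bits and an update step of 0.001; both values are chosen only for illustration and do not describe any specific chip.

```python
def to_fixed(value, frac_bits):
    """Quantize a value to a fixed-point grid with frac_bits fractional bits."""
    scale = 1 << frac_bits
    return round(value * scale) / scale

step = 0.001        # a small per-iteration update (assumed value)
float_acc = 0.0
fixed_acc = 0.0
for _ in range(1000):
    float_acc += step
    # With 8 fractional bits the resolution is 1/256, so the step rounds to 0.
    fixed_acc = to_fixed(fixed_acc + to_fixed(step, 8), 8)
```

In this setup the floating-point accumulator ends close to 1.0, while the fixed-point accumulator never moves, because each update is smaller than half of the grid resolution. A wider fixed-point format would narrow the gap at the cost of more bits.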

Definition and Structure of FPGA Chips

FPGA (Field-Programmable Gate Array) is a product further developed from programmable devices such as PAL, GAL, and CPLD. It emerged as a semi-custom circuit in the field of application-specific integrated circuits, solving the shortcomings of custom circuits while overcoming the limitations of the number of gate circuits in original programmable devices.

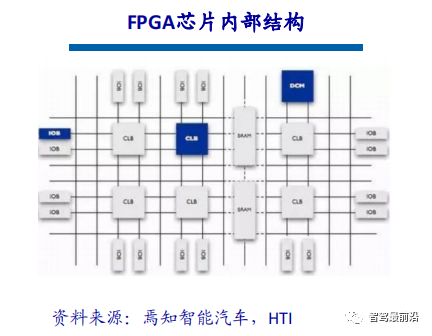

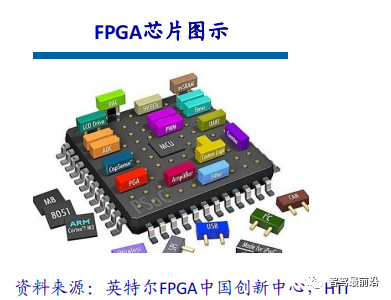

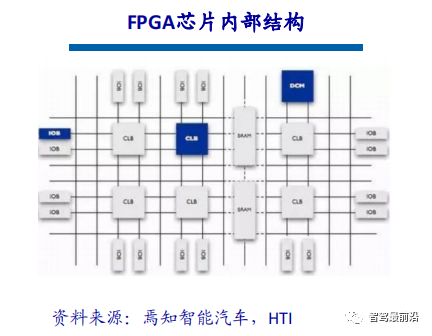

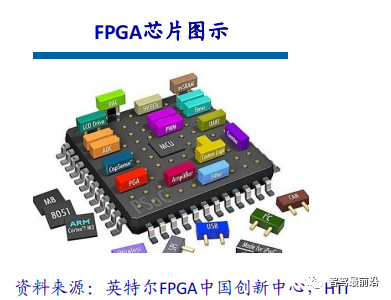

FPGA chips are mainly composed of six parts: programmable input/output units, basic programmable logic units, complete clock management, embedded block RAM, rich routing resources, and embedded lower-level functional units and dedicated hardware modules. Mainstream FPGAs are still based on lookup table technology, but they far exceed the basic performance of earlier generations and integrate commonly used functions (such as RAM, clock management, and DSP) as hard-core (ASIC-type) modules.

Working Principle of FPGA

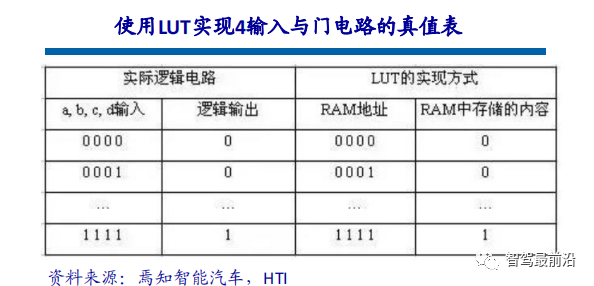

Since FPGA needs to be reprogrammed repeatedly, its basic structure for implementing combinational logic cannot be completed using fixed NAND gates like ASIC, but must adopt a structure that is easy to reconfigure. Lookup tables can meet this requirement well, and currently mainstream FPGAs use SRAM-based lookup table structures, while some military and aerospace-grade FPGAs use Flash or fuse and anti-fuse technology for their lookup table structures. The repeated configuration of FPGA is achieved by altering the contents of the lookup tables through a programming file.

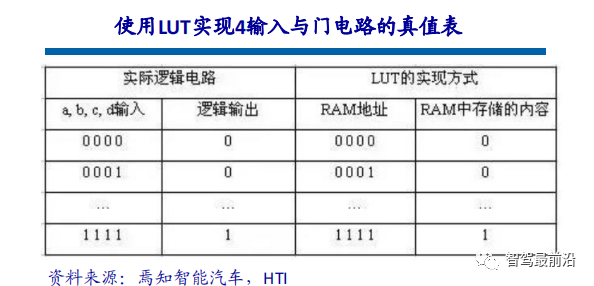

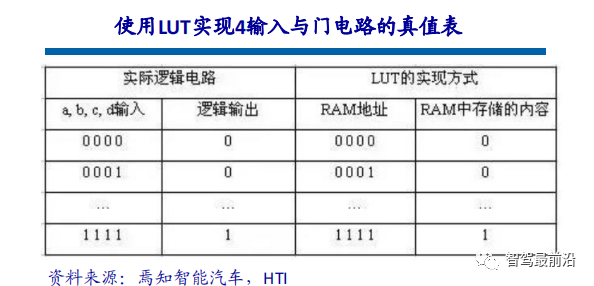

A lookup table (LUT) is essentially a RAM. FPGAs currently mostly use 4-input LUTs, so each LUT can be viewed as a RAM with 4 address lines (16 one-bit entries). After the user describes a logic circuit with a schematic or an HDL, the PLD/FPGA development software automatically computes every possible result of the logic circuit and writes the truth table (i.e., the results) into the RAM in advance. Each time signals are applied for a logic operation, this is equivalent to supplying an address, looking up the table, and outputting the content stored there.
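The lookup-table idea can be modelled in software. The sketch below is only an analogy for the hardware: `build_lut` plays the role of the development software that precomputes the truth table, and `lut_read` treats the four inputs as address lines. The example circuit `(a AND b) OR (c XOR d)` and both function names are hypothetical.

```python
from itertools import product

def build_lut(logic_fn, n_inputs=4):
    """Precompute the truth table for every input combination."""
    return [int(logic_fn(*bits)) for bits in product((0, 1), repeat=n_inputs)]

def lut_read(lut, *bits):
    """Use the input signals as address lines and read the stored bit."""
    address = 0
    for bit in bits:
        address = (address << 1) | bit  # first input is the most significant bit
    return lut[address]

# Hypothetical example circuit: (a AND b) OR (c XOR d)
lut = build_lut(lambda a, b, c, d: (a and b) or (c ^ d))
result = lut_read(lut, 1, 1, 0, 0)
```

Reconfiguring the "circuit" means nothing more than calling `build_lut` with a different function, which mirrors how a programming file rewrites the LUT contents without changing the silicon.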

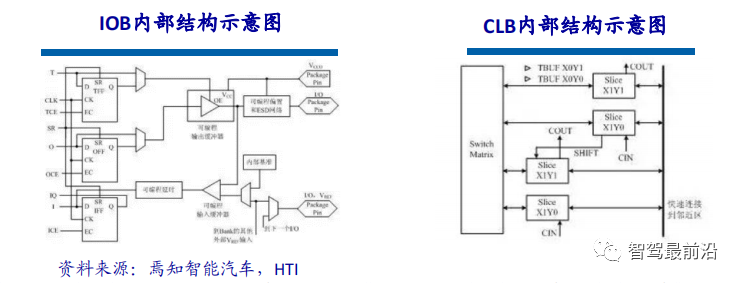

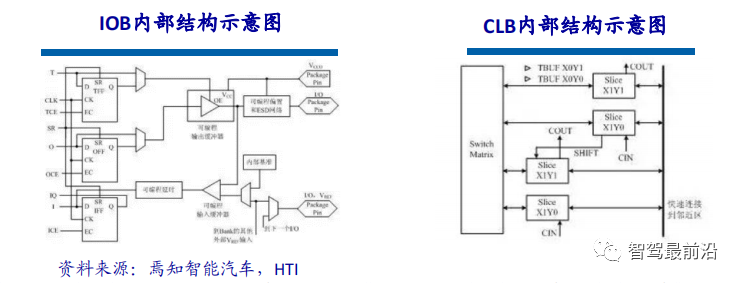

The programmable input/output units, referred to as I/O units, are the interface part between the chip and external circuits, meeting the driving and matching requirements of input/output signals under different electrical characteristics. The I/O in FPGA is categorized into groups, with each group capable of independently supporting different I/O standards. Through flexible software configuration, it can adapt to different electrical standards and I/O physical characteristics, adjusting driving currents and changing pull-up and pull-down resistors. Currently, the frequency of I/O ports is also increasing, with some high-end FPGAs supporting data rates up to 2Gbps through DDR register technology.

CLB is the basic logic unit within FPGA. The actual quantity and characteristics of CLB will vary by device, but each CLB contains a configurable switch matrix, which consists of 4 or 6 inputs, some selection circuits (such as multiplexers), and flip-flops. The switch matrix is highly flexible and can be configured to process combinational logic, shift registers, or RAM. In Xilinx FPGA devices, CLBs are composed of multiple (generally 4 or 2) identical slices and additional logic.

The “Chip” Killer in Autonomous Driving

Autonomous driving and advanced driver assistance systems (ADAS) are undergoing transformation, presenting new complex demands for computing and sensor functionalities. FPGA possesses unique advantages incomparable to other chip solutions, making it an excellent choice to meet the evolving needs of the autonomous driving industry. FPGA is a special technology in the chip field that can be repeatedly configured through software tools while having rich I/O interfaces and computing units. Therefore, FPGA can simultaneously handle both pipeline parallelism and data parallelism according to the specific needs of application scenarios, inherently featuring high computing performance, low latency, and low power consumption.

FPGA offers multiple advantages, including high throughput, high energy efficiency, and real-time processing, making it highly compatible with the technical demands of autonomous driving. The standards and requirements for advanced driver assistance systems (ADAS) and in-vehicle experience (IVE) applications are evolving rapidly. System designers are primarily concerned with excellent flexibility and faster development cycles while maintaining a higher performance-to-power ratio. By combining reprogrammable FPGAs with an increasing number of automotive-grade products, automotive designers can meet design requirements and stay ahead in the ever-changing automotive industry.

The true value of autonomous driving chips lies in the utilization of the computing engine, which is the difference between theoretical performance and actual performance. FPGA contains numerous routing links and a large number of small storage units. This combination of resources allows designers to create customized data feed networks for their computing engines to achieve higher utilization levels. Programmable logic provides customers with a high degree of flexibility to adapt to the ever-changing demands of emerging application areas such as ADAS and autonomous driving. Improved interface standards, algorithm innovations, and new sensor technologies all require adaptable platforms that can support both software and hardware changes, which is precisely the strength of FPGA chips.

FPGA chips are scalable. Devices within a scalable family differ in the amount of programmable logic and mostly use pin-compatible packages. This means that developers can create a single ECU platform to host low-end, mid-range, and high-end versions of an ADAS feature package, and scale cost by selecting the lowest-density chip that meets the requirement.

FPGA chips allow developers to create unique differentiated processing solutions that can be optimized for specific applications or sensors. This is not achievable with ASSP chips, even those providing dedicated accelerators; their usage is limited and fundamentally available to all competitors. For example, long-term customers of Xilinx have created high-value IP libraries that are accessible only to them, and these functionalities can be utilized across various products of the company. Since the 90nm node, Xilinx chips have been highly cost-effective for large-volume automotive applications, with over 160 million Xilinx chips applied in the industry.

Definition and Characteristics of ASIC

ASIC chips can be classified by end function into TPU, DPU, and NPU chips. TPU stands for Tensor Processing Unit, a chip designed specifically for machine learning. For example, in May 2016 Google announced a programmable AI accelerator developed for the TensorFlow platform, whose internal instruction set lets it keep running when programs change or algorithms are updated. DPU stands for Data Processing Unit, which provides engines for computing scenarios such as data centers. NPU stands for Neural Processing Unit, which simulates human neurons and synapses at the circuit level and processes large-scale neuron and synapse data directly with a deep learning instruction set.

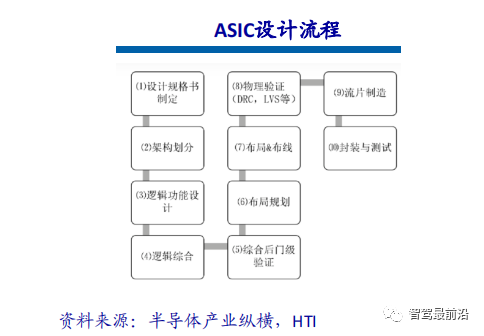

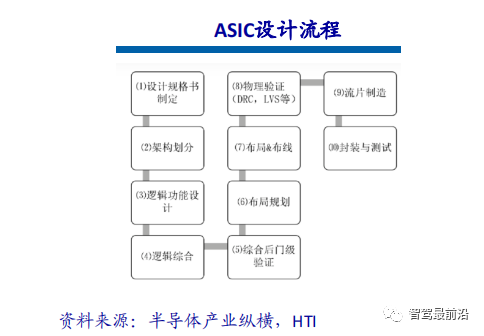

ASIC has two design methods: full custom and semi-custom. Full-custom design relies on a massive investment of human resources and time to complete the entire integrated circuit design flow independently. It is more flexible and performs better than semi-custom ASIC design, but its development efficiency is significantly lower.

Significant Performance Improvements

ASIC chips are very well suited to artificial intelligence application scenarios. For example, the Tesla P100, NVIDIA’s first chip designed from scratch for deep learning, processes data 12 times faster than the GPU series the company launched in 2014. Google’s TPU, customized for machine learning, raises hardware performance to the level that chips would otherwise reach only after a further seven years of Moore’s Law scaling. Just as the CPU transformed the room-sized computers of the past, AI ASIC chips will significantly alter the landscape of today’s AI hardware. For instance, the renowned AlphaGo used about 170 GPUs and 1200 CPUs, which required an entire machine room with high-power air conditioning and several experts for system maintenance. If dedicated chips were used throughout, the system would likely need only the space of an ordinary storage box, with significantly lower power consumption.

The ASIC technology route offers limited openness. Chip companies need to develop for the mainstream networks, models, and operators used in driving. At the same performance, an ASIC has a smaller chip area, lower cost, and lower power consumption. The future potential of the ASIC route is significant, and choosing it does not mean developing a different ASIC, or running a different verification, for each vehicle model. The functions that different vehicle models need are roughly the same, and because the chip is open to a limited set of models and operators, algorithms can iterate rapidly without affecting the chip’s support for upper-level functions. Collaboration between car manufacturers and chip design companies on differentiated customization is a better choice. Even with differentiated customization, 50% of the chip’s internal components remain general-purpose, so chip design companies can build differentiated designs on top of existing versions to deliver the functions that differ.

Comparison of Mainstream Architecture Solutions: Three Mainstream Architectures

FPGA is a product further developed from programmable devices such as PAL and GAL. It emerged as a semi-custom circuit in the field of application-specific integrated circuits, solving the shortcomings of custom circuits while overcoming the limited gate count of earlier programmable devices. Advantages: can be reprogrammed an unlimited number of times, has lower latency, supports both pipeline and data parallelism, and offers the strongest real-time performance and highest flexibility. Disadvantages: difficult to develop for, only suitable for fixed-point operations, and relatively expensive.

The graphics processing unit (GPU), also known as the display core, visual processor, or display chip, is a microprocessor specialized in image and graphics-related calculations on personal computers, workstations, game consoles, and some mobile devices (such as tablets and smartphones). Advantages: provides a foundation for multi-core parallel computing with an extremely high number of cores, supporting large-scale data-parallel computation with higher floating-point capability. Disadvantages: the weakest management and control capability and the highest power consumption.

ASIC, or application-specific integrated circuit, refers to integrated circuits designed and manufactured to meet the specific needs of particular users and electronic systems. Currently, using CPLD (Complex Programmable Logic Devices) and FPGA (Field Programmable Gate Arrays) for ASIC design is one of the most popular methods. Advantages: as a product closely integrated with integrated circuit technology and specific user systems, it has advantages such as smaller size, lighter weight, lower power consumption, improved reliability, enhanced performance, increased confidentiality, and reduced cost compared to general-purpose integrated circuits. Disadvantages: insufficient flexibility and higher costs than FPGA.

Limitations of the Computing Power Argument: TOPS Does Not Equate to Actual Performance

With the rise of ADAS and autonomous driving technologies, along with the gradual advancement of software-defined vehicles, the demand for computing power and massive data processing capabilities in smart cars has surged. The traditional chip “stacking” solution can no longer meet the computing power requirements for autonomous driving. Chips ultimately serve the vehicle’s onboard computing platform, and in the context of “software-defined vehicles,” addressing the support issue for intelligent driving system computing platforms cannot be achieved solely through chip power stacking.

Chips are the stage on which software runs; the measure of a chip’s quality is whether the software running on it can be used to full effect. Among chips with the same computing power, those that run software more efficiently are the “better chips.” The main factors that determine how much of the nominal computing power is actually delivered are memory (SRAM and DRAM) bandwidth, actual operating frequency (which depends on supply voltage and temperature), and the batch size of the algorithm.
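One common way to reason about the gap between nominal and delivered computing power is the roofline model, which the source does not name and which is used here only as an illustration. It caps attainable throughput at the smaller of the peak compute rate and the rate at which memory can feed the compute units. The chip figures below (100 TOPS peak, 200 GB/s bandwidth) and the operations-per-byte values are hypothetical.

```python
def attainable_tops(peak_tops, bandwidth_gb_s, ops_per_byte):
    """Roofline estimate: the lower of the compute bound and the memory bound."""
    # GB/s * ops/byte = Gops/s; divide by 1000 to express it in TOPS.
    memory_bound_tops = bandwidth_gb_s * ops_per_byte / 1000.0
    return min(peak_tops, memory_bound_tops)

def utilization(peak_tops, bandwidth_gb_s, ops_per_byte):
    """Fraction of the nominal TOPS that the workload can actually use."""
    return attainable_tops(peak_tops, bandwidth_gb_s, ops_per_byte) / peak_tops

# Hypothetical chip: 100 TOPS peak, 200 GB/s memory bandwidth.
small_batch = utilization(100.0, 200.0, ops_per_byte=50.0)    # memory-bound
large_batch = utilization(100.0, 200.0, ops_per_byte=1000.0)  # compute-bound
```

Under these assumptions the small-batch workload reaches only 10% of the nominal TOPS, while the large-batch workload, which reuses each loaded weight many more times, can reach the peak. This is one reason batch size and memory bandwidth matter as much as the headline figure.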

The TOPS of a single chip is a key indicator but not the only one. Autonomous driving is a complex system requiring collaboration between vehicles, roads, clouds, and edges. Therefore, its competition involves not only chips but also hardware-software collaboration, platforms, and toolchains, among others. The infinite expansion of chip computing power and hardware embedding will not be the trend of the future; hardware must also match reality. High performance is accompanied by high power consumption and low utilization issues.

Introduction to Event Cameras

Introduction and Working Mechanism

Event cameras take their inspiration from the vision of humans and animals and are also referred to as silicon retinas. Biological vision is sensitive only to regions that change, and event cameras likewise capture the occurrence or change of events.

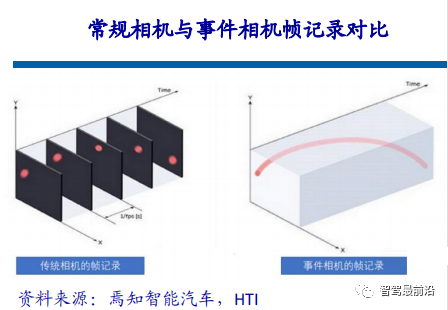

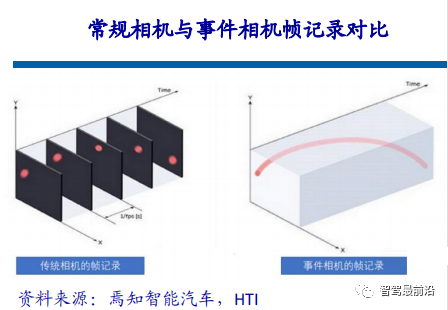

In traditional vision, the information returned by a camera is synchronous: at a certain moment t, the camera exposes, fills every pixel of a matrix, and returns a photograph. All pixels in a photo correspond to the same moment. Video is merely a series of such images, with the time interval between adjacent images (the latency) determined by the frame rate. Event cameras, like the human brain and eyes, skip irrelevant background and directly perceive the core of a scene, producing pure events rather than full frames of data.

The working mechanism of an event camera is as follows: when the brightness at a pixel changes by more than a certain threshold, the camera returns an event of the form (x, y, t, p), where the first two items are the pixel coordinates of the event, the third is its timestamp, and the last is the polarity (0 or 1, or -1 or 1), indicating whether the brightness increased or decreased.

Thus, within the camera’s field of view, as long as there is a change in pixel values, an event will be returned. All these events occur asynchronously (even the smallest time interval cannot be completely simultaneous), so the timestamps of the events are all different. Due to the simplicity of the return process, it possesses lower latency characteristics compared to traditional cameras, capturing pixel changes within very short time intervals, with latencies at the microsecond level.
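The event format and threshold rule described above can be imitated by comparing two brightness grids. This is a simplified, frame-based simulation: a real sensor fires each pixel asynchronously, and the use of log-brightness differences, the threshold of 0.2, and the `Event` and `events_between` names are assumptions made for the sketch.

```python
import math
from dataclasses import dataclass

@dataclass
class Event:
    x: int         # pixel column
    y: int         # pixel row
    t: float       # timestamp
    polarity: int  # +1 if brightness increased, -1 if it decreased

def events_between(prev, curr, t, threshold=0.2):
    """Emit an event for each pixel whose log-brightness change reaches threshold."""
    events = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            delta = math.log(c) - math.log(p)  # brightness values must be positive
            if abs(delta) >= threshold:
                events.append(Event(x, y, t, 1 if delta > 0 else -1))
    return events

prev = [[100, 100, 100], [100, 100, 100]]
curr = [[100, 150, 100], [100, 100, 60]]
events = events_between(prev, curr, t=0.001)
```

Only the two pixels that changed produce output here; the four unchanged pixels generate nothing, which is the source of the data reduction discussed in this section.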

Applications in Autonomous Driving

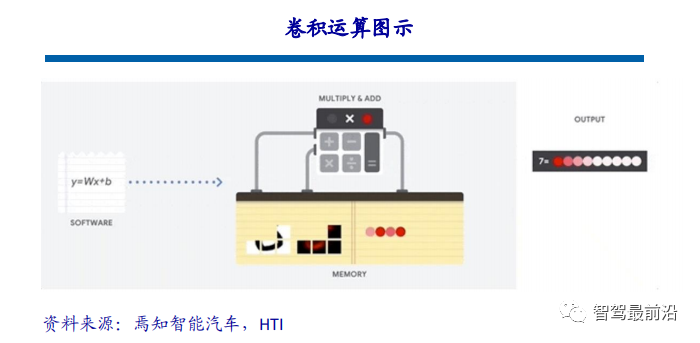

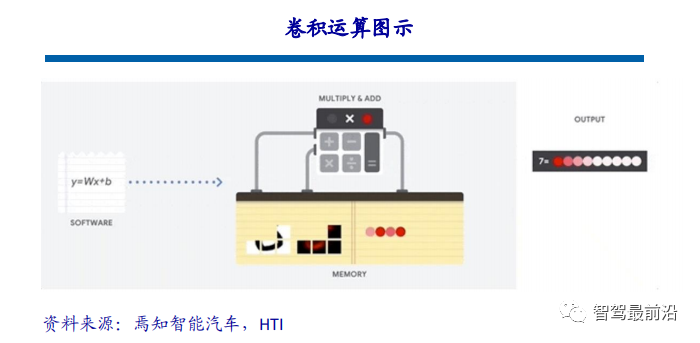

Currently, the visual recognition algorithms used in autonomous driving are primarily based on convolutional neural networks, and the essence of their computation is repeated convolution. The computation itself is not complex: it consists essentially of multiply-accumulate (MAC) operations. However, a convolutional neural network performs this simple operation in enormous numbers, which places high demands on processor performance.
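
To make the multiply-accumulate point concrete, here is a minimal pure-Python sketch of a single-channel convolution that also counts the MAC operations it performs. The function name `conv2d_valid` is hypothetical, and the code uses the unflipped (cross-correlation) form with no padding or stride; it is meant only to show where the operation count comes from, not how production libraries implement convolution.

```python
def conv2d_valid(image, kernel):
    """Naive 2D convolution without padding; returns (output, mac_count)."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = ih - kh + 1, iw - kw + 1
    output = [[0.0] * ow for _ in range(oh)]
    macs = 0
    for oy in range(oh):
        for ox in range(ow):
            acc = 0.0
            for ky in range(kh):
                for kx in range(kw):
                    # One multiply-accumulate: a product added to a running sum.
                    acc += image[oy + ky][ox + kx] * kernel[ky][kx]
                    macs += 1
            output[oy][ox] = acc
    return output, macs


image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
kernel = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
output, macs = conv2d_valid(image, kernel)
print(output, macs)
```

Even this 4×4 input with one 3×3 kernel needs 36 MACs; the count grows with image size, kernel size, channel count, and depth, which is why the totals for real networks reach the billions.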

Take ResNet-152 as an example: this 152-layer convolutional neural network requires about 22.6 billion operations to process a single 224×224-pixel image. If the network processes the output of a 1080p camera at 30 frames per second, the required computing power rises to about 33 trillion operations per second, which is enormous.
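
The order of magnitude can be checked with a short calculation. The sketch below assumes that the operation count scales linearly with the number of pixels, which the article does not state; the function name `scaled_ops_per_second` is hypothetical. Under that assumption the estimate comes out at roughly 28 trillion operations per second, the same order of magnitude as the 33 trillion figure quoted above, whose exact derivation is not given.

```python
def scaled_ops_per_second(ops_per_image, base_width, base_height, width, height, fps):
    """Scale a per-image operation count by pixel count, then by frame rate.

    Assumes cost grows linearly with the number of input pixels.
    """
    pixel_ratio = (width * height) / (base_width * base_height)
    return ops_per_image * pixel_ratio * fps


# 22.6 billion operations for one 224x224 image, scaled to 1920x1080 at 30 fps.
estimate = scaled_ops_per_second(22.6e9, 224, 224, 1920, 1080, 30)
print(f"{estimate / 1e12:.1f} trillion operations per second")
```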

Saving Computing Power by Reducing Ineffective Calculations

In autonomous driving, 99% of the visual data processed by AI is useless background. For example, detecting a “ghost probe” (a pedestrian or vehicle that suddenly emerges from behind an obstruction) involves only a very small area of change, yet traditional visual processing still has to handle the 99% of the image that has not changed, which wastes both computing power and time. It is like finding a diamond in gravel: AI chips and traditional cameras must examine every grain of sand to pick out the diamond, while a human can spot it at a glance. The time taken by AI chips and traditional cameras can be 100 or even 1000 times that of a human.

Beyond reducing redundant information, event cameras have very low latency, which prevents blurring when capturing high-speed objects. Traditional cameras need a certain exposure time and therefore blur fast motion, while event cameras almost never do. Event cameras also have true high dynamic range: a traditional camera may “go blind” in very strong or very weak light, but pixel changes still occur in those conditions, so an event camera can still see the objects in front of it.

The true value of an autonomous driving chip lies in the utilization of its computing engine, that is, in how close actual performance comes to theoretical performance. An FPGA contains abundant routing links and a large number of small storage units. This combination of resources lets designers build customized data feed networks for their computing engines and so achieve higher utilization. Programmable logic gives customers a high degree of flexibility to adapt to the ever-changing demands of emerging application areas such as ADAS and autonomous driving. Improved interface standards, algorithm innovations, and new sensor technologies all require adaptable platforms that can support both software and hardware changes, which is precisely the strength of FPGA chips.

FPGA chips are scalable. Within a scalable chip family the amount of programmable logic varies, mostly in pin-compatible packages. Developers can therefore create a single ECU platform that hosts low-end, mid-range, and high-end versions of an ADAS function package, and control cost by selecting the lowest-density chip that meets the requirements.

FPGA chips allow developers to create unique, differentiated processing solutions optimized for specific applications or sensors. This is not achievable with ASSP chips: even those that provide dedicated accelerators are limited in how the accelerators can be used, and the same chips are available to every competitor. For example, long-term Xilinx customers have built high-value IP libraries that only they can access, and they can reuse these functions across their own product lines. Since the 90nm node, Xilinx chips have been highly cost-effective for high-volume automotive applications, with over 160 million Xilinx chips deployed in the industry.

Definition and Characteristics of ASIC

ASIC chips can be classified by end function into TPU, DPU, and NPU chips. A TPU (Tensor Processing Unit) is designed specifically for machine learning. For example, in May 2016 Google announced a programmable AI accelerator for the TensorFlow platform that remains usable when its internal instruction set changes or its algorithms are updated. A DPU (Data Processing Unit) provides engines for computing scenarios such as data centers. An NPU (Neural Processing Unit) simulates human neurons and synapses at the circuit level and processes large-scale electronic neuron and synapse data directly with a deep learning instruction set.

ASICs have two design methods: fully customized and semi-customized. Fully customized design relies on a massive investment of human resources and time to complete the entire integrated circuit design process independently. Although it is more flexible and performs better than a semi-customized ASIC, its development efficiency is significantly lower.

Significant Performance Improvements

ASIC chips are very well suited to artificial intelligence applications. For example, the Tesla P100, NVIDIA’s first chip designed from scratch for deep learning, processes data 12 times faster than the GPU series the company launched in 2014. Google’s TPU, customized for machine learning, raised hardware performance to a level that conventional chips would reach only after about seven more years of progress under Moore’s Law. Just as the CPU transformed the massive computers of the past, AI ASIC chips will significantly alter the landscape of today’s AI hardware. For instance, the renowned AlphaGo used about 170 GPUs and 1200 CPUs, requiring an entire machine room with high-power air conditioning and multiple experts for system maintenance. If it ran entirely on dedicated chips, it would likely need only the space of a standard storage box, with significantly reduced power consumption.

The ASIC technology route offers limited openness: chip companies need to target the mainstream networks, models, and operators used in driving. For the same performance, an ASIC has a smaller chip area, lower cost, and lower power consumption. The route has significant future potential. Choosing it does not mean developing a different ASIC, or running a different verification, for each vehicle model, because the functions that different vehicle models need are roughly the same. And because the chip is open, within limits, to models and operators, algorithms can iterate rapidly without affecting the chip’s support for upper-level functions. Collaboration between car manufacturers and chip design companies on differentiated customization is the better choice. Even then, 50% of the chip’s internal components remain general-purpose, so chip design companies can derive differentiated designs from existing versions to deliver partially differentiated functions.

Comparison of Mainstream Architecture Solutions: Three Mainstream Architectures

The FPGA was developed from earlier programmable devices such as PAL and GAL. It emerged as a semi-custom circuit within the field of application-specific integrated circuits, addressing the shortcomings of custom circuits while overcoming the limited gate count of the original programmable devices. Advantages: it can be reprogrammed an unlimited number of times, has low latency, and supports both pipeline parallelism and data parallelism, giving it the strongest real-time performance and the highest flexibility. Disadvantages: it is difficult to develop for, suited only to fixed-point operations, and relatively expensive.

The graphics processing unit (GPU), also known as the display core, visual processor, or display chip, is a microprocessor specialized in image and graphics calculations on personal computers, workstations, game consoles, and some mobile devices (such as tablets and smartphones). Advantages: it provides the basic structure for many-core parallel computing, with an extremely high core count that supports large-scale data-parallel computation and high floating-point throughput. Disadvantages: it has the weakest management and control capability and the highest power consumption of the three.

ASIC, or application-specific integrated circuit, refers to integrated circuits designed and manufactured to meet the specific needs of particular users and electronic systems. Currently, using CPLD (Complex Programmable Logic Devices) and FPGA (Field Programmable Gate Arrays) for ASIC design is one of the most popular methods. Advantages: as a product closely integrated with integrated circuit technology and specific user systems, it has advantages such as smaller size, lighter weight, lower power consumption, improved reliability, enhanced performance, increased confidentiality, and reduced cost compared to general-purpose integrated circuits. Disadvantages: insufficient flexibility and higher costs than FPGA.

Limitations of Computing Power Theory: TOPS Performance Does Not Equate to Actual Performance

With the rise of ADAS and autonomous driving, along with the gradual advance of software-defined vehicles, smart cars’ demand for computing power and massive data processing has surged. The traditional approach of “stacking” chips can no longer meet the computing power requirements of autonomous driving. Chips ultimately serve the vehicle’s onboard computing platform, and in the context of “software-defined vehicles,” the problem of supporting the intelligent driving computing platform cannot be solved by stacking chip computing power alone.

Chips are the stage for software; the measure of a chip’s quality is whether the software running on it can be used to full effect. Among chips with the same nominal computing power, those that run software more efficiently are the “better chips.” The primary factors that determine the true value of computing power are memory (SRAM and DRAM) bandwidth, actual operating frequency (which depends on supply voltage and temperature), and the batch size of the algorithm.
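
One common way to reason about the gap between nominal and achieved computing power is the roofline model, which the article does not name but which captures the memory bandwidth factor listed above. The sketch below is a simplified illustration: the function names and all numbers are hypothetical and do not describe any real chip. Attainable throughput is the smaller of the peak rate and the rate at which memory can feed the compute units.

```python
def attainable_tops(peak_tops, bandwidth_gb_s, ops_per_byte):
    """Roofline estimate: the lower of the compute roof and the memory roof.

    bandwidth_gb_s * ops_per_byte gives giga-operations per second;
    dividing by 1000 converts that to tera-operations per second (TOPS).
    """
    memory_bound_tops = bandwidth_gb_s * ops_per_byte / 1000.0
    return min(peak_tops, memory_bound_tops)


def utilization(peak_tops, bandwidth_gb_s, ops_per_byte):
    """Fraction of the nominal peak that the workload can actually use."""
    return attainable_tops(peak_tops, bandwidth_gb_s, ops_per_byte) / peak_tops


# Hypothetical chip: 100 TOPS peak, 200 GB/s memory bandwidth.
low_reuse = utilization(100.0, 200.0, ops_per_byte=100.0)
high_reuse = utilization(100.0, 200.0, ops_per_byte=1000.0)
print(f"low data reuse:  {low_reuse:.0%} of peak")
print(f"high data reuse: {high_reuse:.0%} of peak")
```

In this toy model, a workload that performs few operations per byte fetched reaches only a fifth of the nominal TOPS. Larger batch sizes typically raise the operations performed per byte of weights loaded, which is one reason batch size appears among the factors above.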
