Since the launch of ChatGPT, many first-wave generative AI applications have been variants of chat systems that apply Retrieval-Augmented Generation (RAG) to a document corpus. While significant effort is still going into making RAG systems more robust, teams are beginning to build the next generation of AI applications around a common theme: agents. A plain large language model responds to a zero-shot prompt, where the user types into an open text field and supplies no additional input; agents allow for more complex interaction and orchestration. In particular, agent systems incorporate planning, loops, reflection, and other control structures that leverage the model's inherent reasoning capabilities to complete tasks end-to-end. Combined with the ability to use tools, plugins, and function calls, this lets agents perform more general-purpose tasks.

Single agent architectures excel when the problem is well defined and needs no feedback from other agent roles or from users, whereas multi-agent architectures tend to thrive when collaboration and multiple execution paths are needed.

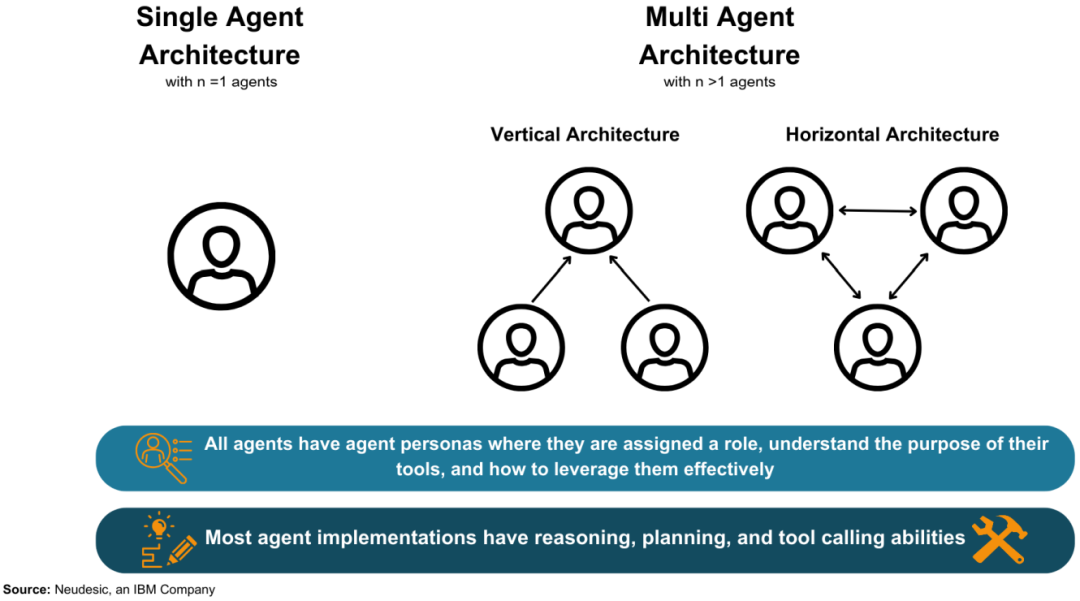

Classification of Agents

AI agents are defined as language model-driven entities capable of planning and taking actions to achieve goals over multiple iterations. Agent architectures can consist of a single agent or multiple agents working collaboratively to solve problems.

Figure 1: A visual representation of single agent and multi-agent architectures and their underlying characteristics and capabilities.

- Definition of AI Agents: AI agents are language model-driven entities capable of planning and taking actions to achieve goals over multiple iterations.
- Single Agent vs. Multi-Agent Architectures: An AI agent architecture can consist of a single agent or of multiple collaborating agents that address a specific problem.
- Agent Roles (Agent Persona): Each agent is assigned a role or persona, which includes any instructions specific to that agent. The role also includes descriptions of the tools the agent can use.
- Tools: In the context of AI agents, tools are any functions that the model can invoke, allowing agents to interact with external data sources.
- Single Agent Architecture: Driven by a single language model that independently performs all reasoning, planning, and tool execution. A single agent receives no feedback from other AI agents but may include options for human-provided feedback.
- Multi-Agent Architecture: Involves two or more agents, which can use the same language model or different sets of language models. Each agent typically has its own role.
- Vertical vs. Horizontal Architectures: Multi-agent architectures are further divided into vertical and horizontal types. In a vertical architecture, one agent acts as the leader; in a horizontal architecture, all agents participate equally, sharing information and tasks.
- Components of an Agent: According to the survey's definitions, an agent consists of three fundamental components, "brain, perception, and action", which together meet the minimum requirements for understanding, reasoning about, and acting upon its environment.
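To make the role and tool definitions above more concrete, here is a minimal sketch of how a persona and its tools might be represented. The class names `Tool` and `AgentRole`, the `lookup` tool, and the in-memory dictionary are all hypothetical illustrations, not part of the survey or of any particular framework.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Tool:
    """A function the model may invoke, plus the description shown to it."""
    name: str
    description: str
    func: Callable[[str], str]


@dataclass
class AgentRole:
    """A persona: role-specific instructions and the tools the agent may use."""
    name: str
    instructions: str
    tools: Dict[str, Tool] = field(default_factory=dict)

    def system_prompt(self) -> str:
        # The role text includes a description of every available tool.
        lines = [f"You are {self.name}. {self.instructions}", "Tools:"]
        lines += [f"- {t.name}: {t.description}" for t in self.tools.values()]
        return "\n".join(lines)

    def call_tool(self, name: str, argument: str) -> str:
        # Tools are how the agent reaches external data sources.
        if name not in self.tools:
            return f"error: unknown tool {name!r}"
        return self.tools[name].func(argument)


# Hypothetical tool backed by an in-memory dictionary instead of a real API.
_DOCS = {"rag": "Retrieval-Augmented Generation"}
lookup = Tool("lookup", "Expand an acronym.", lambda q: _DOCS.get(q.lower(), "not found"))

researcher = AgentRole("Researcher", "Answer questions using the tools.", {"lookup": lookup})
print(researcher.system_prompt())
print(researcher.call_tool("lookup", "RAG"))
```

In a real system the system prompt would be sent to a language model, and the model's output would decide which tool to call; here the call is made by hand to keep the sketch self-contained.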

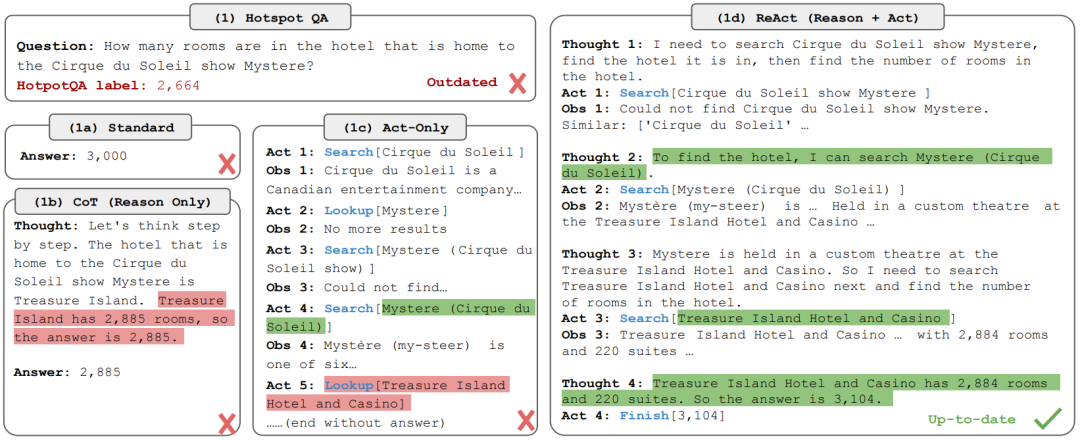

Single Agent Architecture

A single agent architecture is driven by one language model that independently performs all reasoning, planning, and tool execution. Whether a single agent achieves its goal depends on appropriate planning and the ability to self-correct. Single agents are particularly useful for tasks that involve direct function calls and do not require feedback from other agents.

Examples of the single agent approach:

ReAct: The agent first writes down a thought about the given task, then performs an action based on that thought and observes the output. This loop can repeat until the task is completed.

Figure 2: An example comparing the ReAct method with other methods

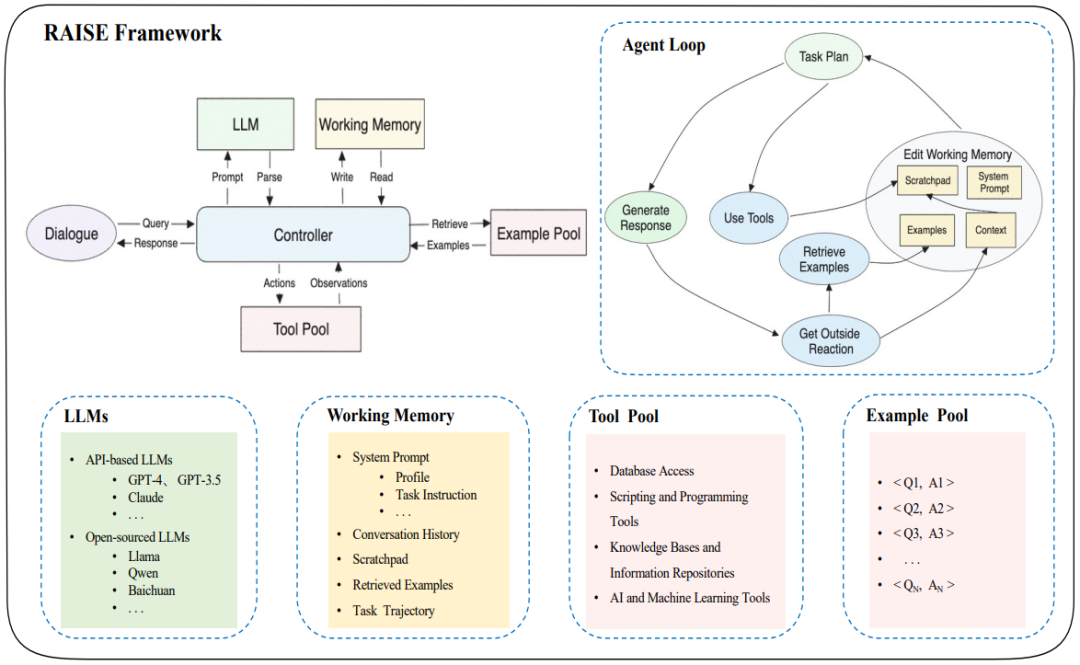
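The thought, action, observation cycle that ReAct describes can be sketched as a short loop. This is only an illustration under stated assumptions: `react_loop`, `scripted_model`, and the `add` tool are hypothetical names, and the scripted function stands in for a real language model call.

```python
from typing import Callable, Dict, List, Tuple

# A step is (thought, action, action_input); the action "finish" ends the loop.
Step = Tuple[str, str, str]


def react_loop(
    model: Callable[[List[str]], Step],
    tools: Dict[str, Callable[[str], str]],
    task: str,
    max_steps: int = 5,
) -> Tuple[str, List[str]]:
    """Run thought -> action -> observation until the model finishes."""
    transcript = [f"Task: {task}"]
    for _ in range(max_steps):
        thought, action, action_input = model(transcript)
        transcript.append(f"Thought: {thought}")
        if action == "finish":
            return action_input, transcript
        transcript.append(f"Action: {action}[{action_input}]")
        tool = tools.get(action)
        observation = tool(action_input) if tool else f"unknown tool {action}"
        transcript.append(f"Observation: {observation}")
    return "no answer within step budget", transcript


def scripted_model(transcript: List[str]) -> Step:
    """Stand-in for an LLM: acts once, then answers from the observation."""
    prefix = "Observation: "
    observations = [line for line in transcript if line.startswith(prefix)]
    if not observations:
        return ("I need to compute the sum.", "add", "2 3")
    return ("I have the result.", "finish", observations[-1][len(prefix):])


def add(args: str) -> str:
    """Hypothetical tool: sums whitespace-separated integers."""
    return str(sum(int(x) for x in args.split()))


answer, trace = react_loop(scripted_model, {"add": add}, "What is 2 + 3?")
print(answer)
print("\n".join(trace))
```

The `max_steps` budget is a design choice of this sketch: it keeps the loop from running forever if the model never emits a finishing action.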

RAISE: Builds on the ReAct method by adding a memory mechanism that mimics human short-term and long-term memory. A scratchpad serves as short-term storage, and a dataset of similar previous examples serves as long-term storage.

Figure 3: A diagram illustrating the RAISE method

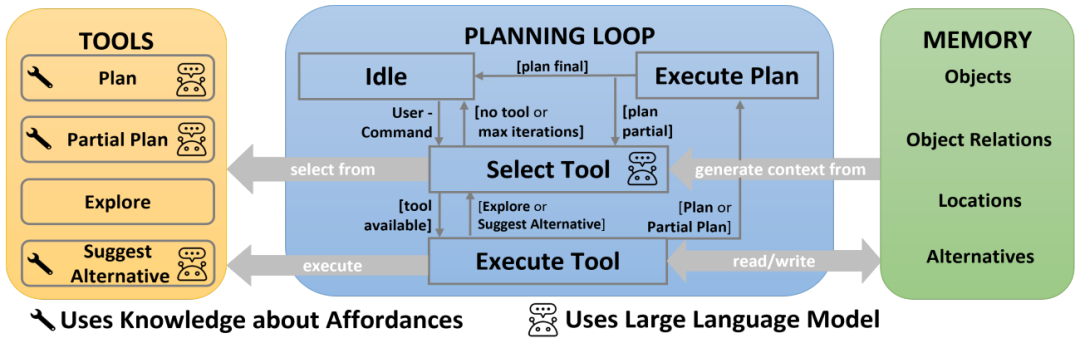
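The two memory tiers described for RAISE can be sketched roughly as follows. This is an assumption-laden illustration, not the RAISE implementation: `AgentMemory` and `overlap` are hypothetical names, and word-overlap similarity is a simple stand-in for whatever retrieval method a real system would use.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


def overlap(a: str, b: str) -> float:
    """Jaccard similarity over lowercase words (a stand-in for embeddings)."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    return len(wa & wb) / len(wa | wb) if wa | wb else 0.0


@dataclass
class AgentMemory:
    # Long-term memory: (query, response) pairs from earlier, similar cases.
    examples: List[Tuple[str, str]]
    # Short-term memory: notes that apply to the current task only.
    scratchpad: List[str] = field(default_factory=list)

    def note(self, entry: str) -> None:
        self.scratchpad.append(entry)

    def recall(self, query: str, k: int = 1) -> List[Tuple[str, str]]:
        # Return the k stored examples most similar to the query.
        ranked = sorted(self.examples, key=lambda ex: overlap(query, ex[0]), reverse=True)
        return ranked[:k]

    def build_prompt(self, query: str) -> str:
        parts = ["Similar past cases:"]
        parts += [f"Q: {q} A: {a}" for q, a in self.recall(query)]
        parts += ["Scratchpad:"] + self.scratchpad + [f"Current query: {query}"]
        return "\n".join(parts)


memory = AgentMemory(examples=[
    ("how do I reset my password", "Use the account settings page."),
    ("what is the refund policy", "Refunds are accepted within 30 days."),
])
memory.note("User is asking about account access.")
prompt = memory.build_prompt("I forgot my password and need to reset it")
print(prompt)
```

The resulting prompt would be passed to the model at each ReAct step, so the agent sees both its working notes and the most relevant earlier case.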

Reflexion: A single agent pattern that uses linguistic feedback for self-reflection. Drawing on signals such as the success state, the current trajectory, and persistent memory, it gives the agent specific and relevant feedback.

AutoGPT+P: A method that addresses the reasoning limitations of agents that command robots in natural language. It combines object detection and Object Affordance Mapping (OAM) with an LLM-driven planning system.

Figure 4: A diagram of the AutoGPT+P method

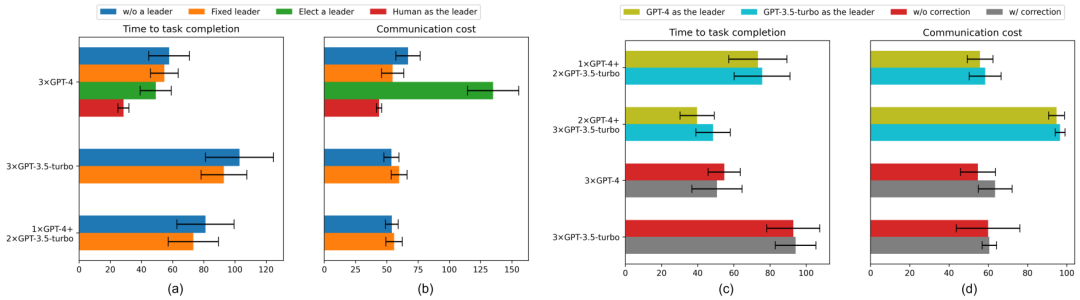
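Returning to Reflexion, its retry-with-feedback idea can be sketched as a loop that stores verbal lessons between trials. All names here (`reflexion_loop`, `toy_actor`, `toy_evaluator`) are hypothetical, and the two toy functions are rule-based stand-ins for the language model calls a real system would make.

```python
from typing import Callable, List, Tuple


def reflexion_loop(
    actor: Callable[[str, List[str]], str],
    evaluator: Callable[[str], Tuple[bool, str]],
    task: str,
    max_trials: int = 3,
) -> Tuple[str, List[str]]:
    """Retry a task, carrying verbal feedback forward between trials."""
    reflections: List[str] = []  # persistent memory across trials
    attempt = ""
    for trial in range(1, max_trials + 1):
        attempt = actor(task, reflections)
        success, feedback = evaluator(attempt)
        if success:
            break
        # Store the feedback as a natural-language lesson for the next trial.
        reflections.append(f"Trial {trial} failed: {feedback}")
    return attempt, reflections


def toy_actor(task: str, reflections: List[str]) -> str:
    """Stand-in for an LLM: changes its answer only after being told why it failed."""
    if any("uppercase" in r for r in reflections):
        return "HELLO"
    return "hello"


def toy_evaluator(attempt: str) -> Tuple[bool, str]:
    """Stand-in for an evaluator: returns a success state and feedback text."""
    if attempt.isupper():
        return True, "ok"
    return False, "the answer must be uppercase"


result, lessons = reflexion_loop(toy_actor, toy_evaluator, "Shout hello")
print(result, lessons)
```

The key point of the sketch is that the feedback is kept as text and handed back to the actor, rather than being used to update model weights.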

LATS (Language Agent Tree Search): A single agent method that uses a tree to combine planning, acting, and reasoning. It selects actions with a tree search algorithm and, after executing an action, uses both environmental feedback and language model feedback for self-reflection.

Despite these advances, single agent architectures still face challenges in understanding complex logic, avoiding hallucinations, and improving performance on tasks that require diversity, exploration, and reasoning.

Multi-Agent Architecture

A multi-agent architecture involves two or more agents, each of which can use the same language model or a different set of language models. Multi-agent architectures achieve goals through communication and collaborative planning among agents. They often involve dynamic team building and an intelligent division of labor among team members during the planning, execution, and evaluation phases.

Multi-agent architectures fall into two main categories: vertical and horizontal. In a vertical architecture, one agent leads; in a horizontal architecture, all agents participate equally in discussing the task.

Examples of multi-agent architectures:

Embodied LLM Agents Learn to Cooperate in Organized Teams: Investigated the impact of a lead agent on overall team effectiveness, finding that teams organized under a leader completed tasks faster than leaderless teams.

Figure 5: Teams of agents with designated leaders achieved superior performance

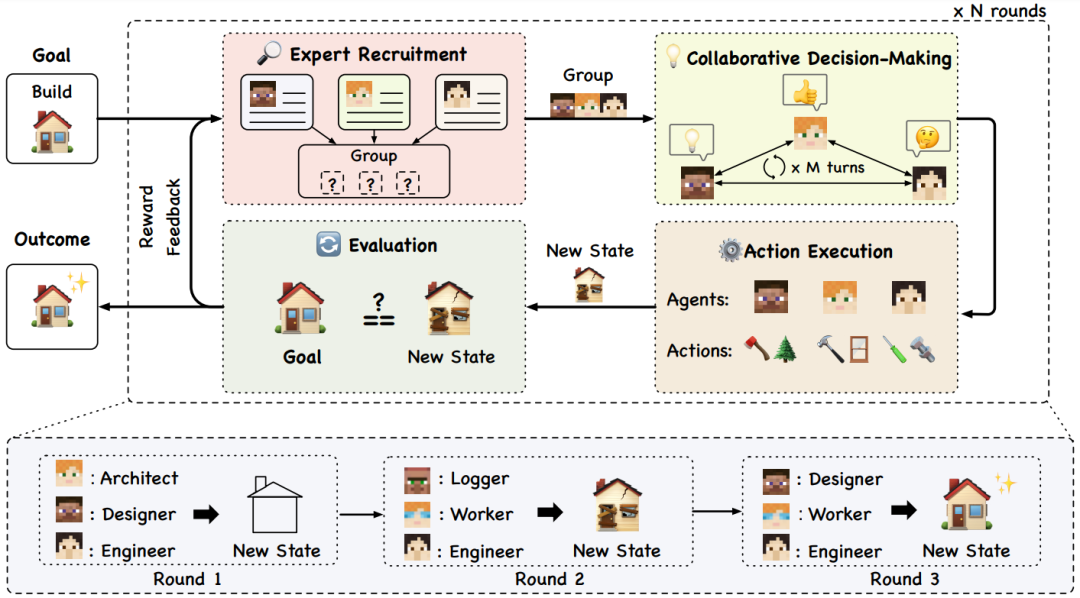
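The difference between vertical and horizontal multi-agent architectures can be illustrated with a small sketch. The functions `run_vertical` and `run_horizontal` and the two lambda agents are hypothetical; each agent here is a plain function standing in for a language model call.

```python
from typing import Callable, Dict, List

Agent = Callable[[str], str]  # maps an incoming message to a reply


def run_vertical(leader_plan: Dict[str, str], workers: Dict[str, Agent]) -> Dict[str, str]:
    """Vertical: a lead agent assigns subtasks; workers report back only to it."""
    return {name: workers[name](subtask) for name, subtask in leader_plan.items()}


def run_horizontal(task: str, agents: Dict[str, Agent], rounds: int = 2) -> List[str]:
    """Horizontal: every agent reads the shared thread and contributes as a peer."""
    thread = [f"task: {task}"]
    for _ in range(rounds):
        for name, agent in agents.items():
            # Each agent responds to the latest message in the shared thread.
            thread.append(f"{name}: {agent(thread[-1])}")
    return thread


# Hypothetical rule-based agents standing in for LLM calls.
agents: Dict[str, Agent] = {
    "coder": lambda msg: f"code for <{msg}>",
    "tester": lambda msg: f"tests for <{msg}>",
}

reports = run_vertical({"coder": "parse input", "tester": "check parser"}, agents)
thread = run_horizontal("build a parser", agents, rounds=1)
print(reports)
print(thread)
```

In the vertical case the plan is fixed by the leader and workers never see each other's output; in the horizontal case every contribution lands in one shared thread that all agents can build on.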

DyLAN (Dynamic LLM-Agent Network): Creates a dynamic agent structure aimed at complex tasks such as reasoning and code generation, and optimizes the team by dynamically evaluating and ranking each agent's contribution.

AgentVerse: Guides agents to reason, discuss, and execute more effectively by defining strict phases for task execution: recruitment, collaborative decision-making, independent action execution, and evaluation.

Figure 6: A diagram of the AgentVerse method
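The four phases listed for AgentVerse can be sketched as a loop that repeats until the evaluation phase is satisfied. This is a rough illustration only and does not reflect the actual AgentVerse API: `run_phases` and the stub functions for each phase are hypothetical.

```python
from typing import Callable, Dict, List

Agent = Callable[[str], str]  # maps an assigned subtask to a result


def run_phases(
    goal: str,
    pool: Dict[str, Agent],
    recruit: Callable[[str, Dict[str, Agent]], List[str]],
    decide: Callable[[str, List[str]], Dict[str, str]],
    evaluate: Callable[[str, Dict[str, str]], bool],
    max_rounds: int = 3,
) -> Dict[str, str]:
    """Repeat the four phases until evaluation passes or rounds run out."""
    results: Dict[str, str] = {}
    for _ in range(max_rounds):
        team = recruit(goal, pool)                          # 1. recruitment
        plan = decide(goal, team)                           # 2. collaborative decision-making
        results = {name: pool[name](plan[name]) for name in team}  # 3. independent action execution
        if evaluate(goal, results):                         # 4. evaluation
            break
    return results


# Hypothetical stubs standing in for LLM-driven phases.
pool: Dict[str, Agent] = {
    "writer": lambda subtask: subtask.upper(),
    "checker": lambda subtask: f"checked {subtask}",
}
results = run_phases(
    "draft a summary",
    pool,
    recruit=lambda goal, agents: sorted(agents),
    decide=lambda goal, team: {name: f"{goal} ({name})" for name in team},
    evaluate=lambda goal, outputs: all(outputs.values()),
)
print(results)
```

Keeping the phases as separate callables mirrors the idea of strict phase boundaries: each stage has one job, and the team can be re-recruited on the next round if evaluation fails.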

MetaGPT: Addresses unproductive chatter between agents by requiring them to produce structured outputs (such as documents and diagrams) instead of exchanging unstructured chat messages.

Despite the promise of AI agent technology, challenges remain, including establishing comprehensive benchmarks, ensuring real-world applicability, and mitigating harmful biases in language models. By tracing the transition from static language models to more dynamic, autonomous agents, the survey aims to provide a comprehensive understanding and guidance for those using existing agent architectures or developing custom ones.

THE LANDSCAPE OF EMERGING AI AGENT ARCHITECTURES FOR REASONING, PLANNING, AND TOOL CALLING: A SURVEY
https://arxiv.org/pdf/2404.11584.pdf