Produced by Zhien Intelligent Chip

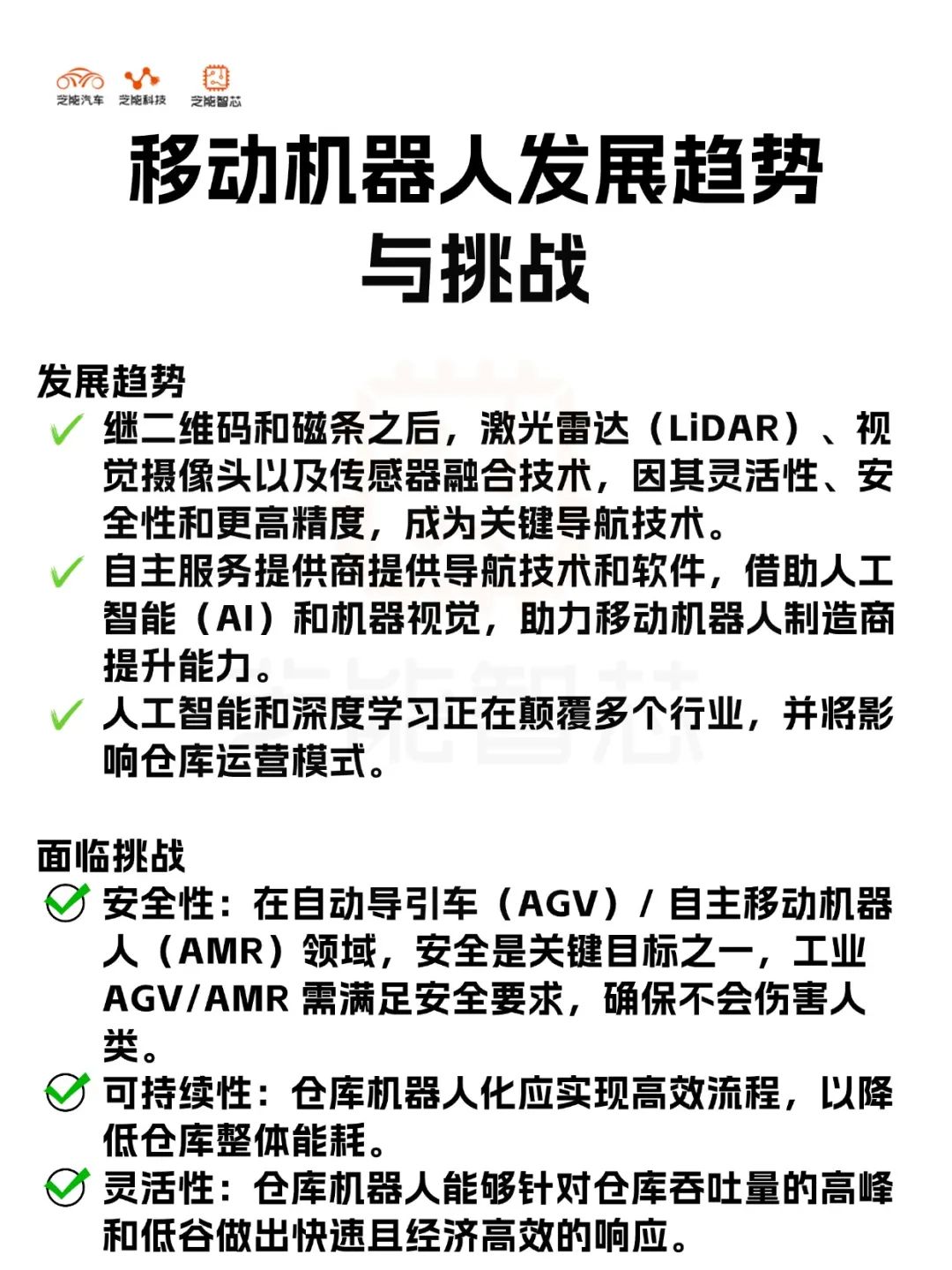

With the rapid development of sensors, electronic devices, and artificial intelligence technologies, mobile robots are evolving from single-function automation tools into complex systems with high autonomy and intelligence.

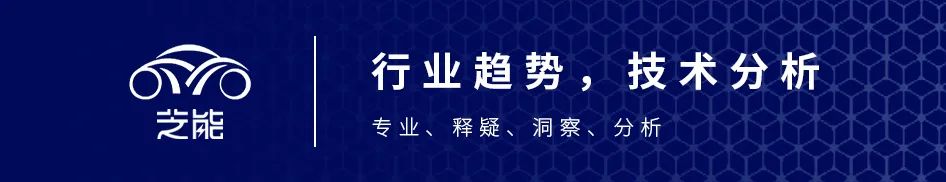

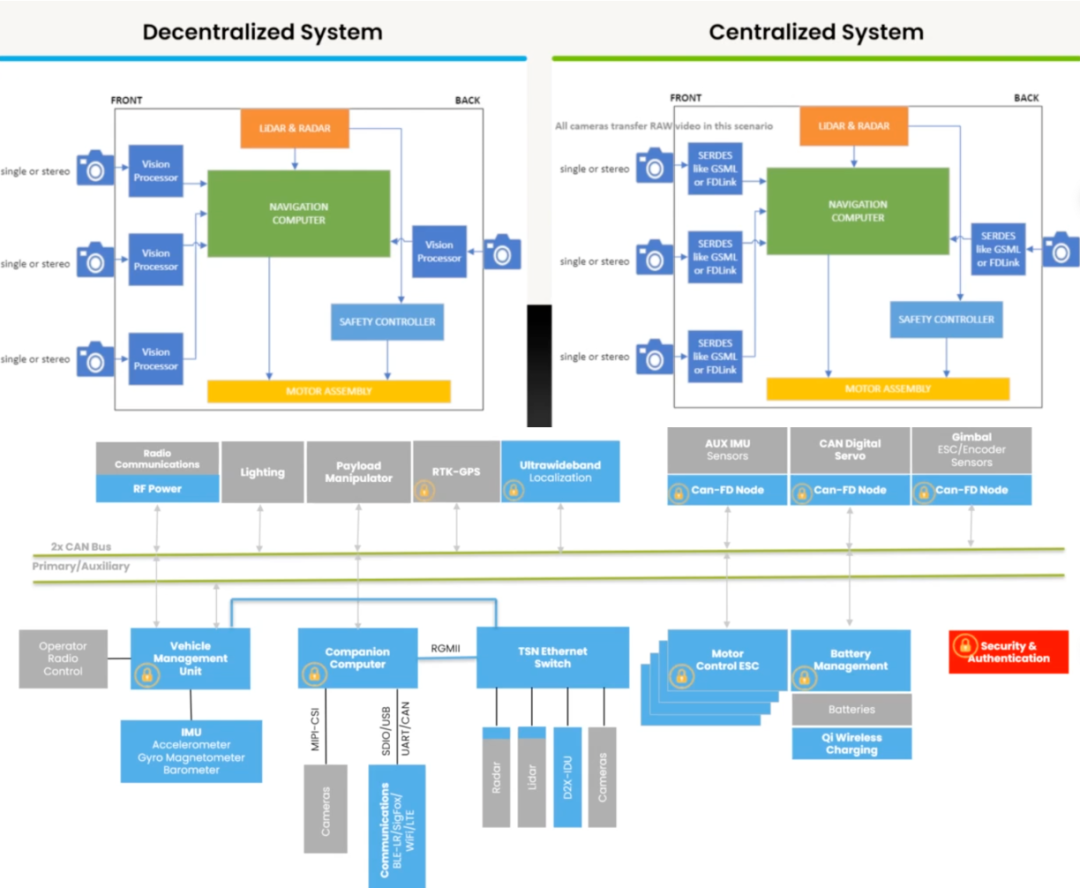

Based on NXP’s “Exploring Mobile Robotics: Real-Time Control, Autonomy, and Performance Optimization,” we will examine the four enabling technologies for mobile robots—edge processing, power, sensors, and communication—and focus on NXP’s innovative solutions in these areas.

NXP’s MCX series microcontrollers bring efficient edge computing and high reliability to mobile robots by integrating a Neural Processing Unit (NPU) and error correction (ECC) features. Gallium nitride (GaN) technology and emerging battery chemistries improve power efficiency and endurance, and advances in sensors and SLAM algorithms improve environmental perception, while 5G and mesh networking enable collaboration and remote control among robots.

The integration of these technologies not only drives leaps in mobile robot performance but also opens up broad prospects for industrial automation, service robots, and other applications.

This article will explore the working principles of these technologies, NXP’s specific contributions, and future trends.

Part 1

The Technological Foundation of Mobile Robots

NXP’s Edge Processing and Power Solutions

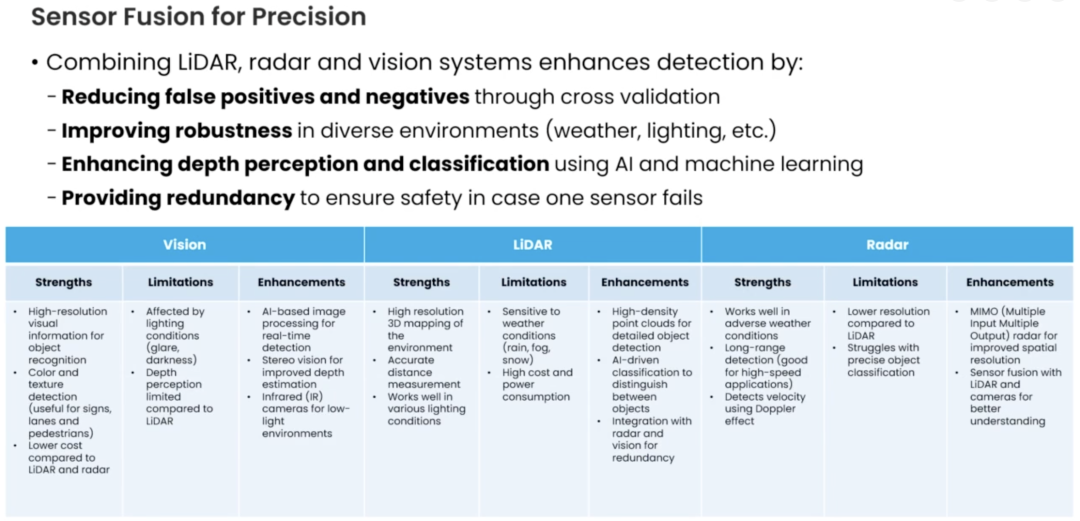

The field of mobile robotics is undergoing a technological revolution: LiDAR, cameras, and sensor fusion are gradually replacing traditional QR codes and magnetic strips as the key navigation technologies, delivering greater flexibility, safety, and accuracy.

As autonomy solution providers offer advanced navigation solutions and software support built on artificial intelligence and machine vision, mobile robot manufacturers can improve the performance and functionality of their products. In warehouse operations in particular, the adoption of artificial intelligence and deep learning marks a major shift in how the industry operates.

● These development trends also bring numerous challenges:

◎ Safety comes first, especially where Automated Guided Vehicles (AGVs) and Autonomous Mobile Robots (AMRs) operate, since protecting personnel is crucial;

◎ Sustainability is also a core issue: automation aims to make warehouse processes more efficient and thereby reduce overall energy consumption;

◎ To cope with fluctuations in warehouse throughput, it is critical to develop flexible robotic systems that can adapt to change quickly and cost-effectively.

● Edge Processing: The Intelligent Leap from MCU to NPU

The core of mobile robots lies in real-time decision-making and autonomy, and advancements in edge processing technology are key to achieving this goal.

Twenty years ago, Arm launched the Cortex-M series, laying the technological foundation for low-power microcontrollers (MCUs). Today, NXP’s MCX N series MCUs, based on the Cortex-M33, take edge processing considerably further.

This series integrates the eIQ Neutron Neural Processing Unit (NPU), which supports complex models such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) and delivers up to 42 times the machine learning throughput of the CPU core alone, while reducing both the time the device spends awake and its overall power consumption.

MR-VMU-RT1176 is a vehicle management controller that integrates IMU sensing and network interfaces. It is based on the high-performance i.MX RT1176 dual-core MCU, with a Cortex-M7 core running at 1.0 GHz and a Cortex-M4 core at 400 MHz.

● On the software side, it runs the Zephyr RTOS with CogniPilot vehicle control software, while NuttX RTOS and the PX4 autopilot are available for drone applications.

● The module and carrier board comply with the Dronecode Pixhawk V6X-RT standard. It carries a rich set of sensors, including dual 6-axis IMUs, barometers, 3-axis magnetometers, and GNSS modules.

● It uses external encrypted QSPI flash, supports ECC for fault-tolerant operation of the cache and TCM, and includes an SD card interface.

● For network connectivity, it supports two-wire 100BASE-T1 Ethernet (with TSN) and has three CAN FD buses (with CAN SIC transceivers).

It also integrates an EdgeLock SE050/SE051 secure element and provides USB-C, I²C, SPI, UART, and dual GPS interfaces, 12 PWM outputs, and dual redundant power inputs, along with ADC, RC, DSM, NFC, and other functions.
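ECC is what lets a memory detect and correct bit flips (for example, from radiation or electrical noise) before corrupted data reaches the CPU. As an illustration of the principle only, and not the scheme the i.MX RT1176 actually implements, the sketch below uses the textbook Hamming(7,4) code, which adds three parity bits to every four data bits and can correct any single flipped bit. The function names are hypothetical.

```python
def hamming74_encode(data: int) -> int:
    """Encode a 4-bit value as a 7-bit Hamming(7,4) codeword.

    Codeword bit k (LSB = bit 0) holds position k + 1. Parity bits sit at
    positions 1, 2 and 4; data bits d0..d3 sit at positions 3, 5, 6 and 7.
    """
    if not 0 <= data < 16:
        raise ValueError("data must fit in 4 bits")
    d = [(data >> i) & 1 for i in range(4)]
    p1 = d[0] ^ d[1] ^ d[3]  # parity over positions 3, 5, 7
    p2 = d[0] ^ d[2] ^ d[3]  # parity over positions 3, 6, 7
    p4 = d[1] ^ d[2] ^ d[3]  # parity over positions 5, 6, 7
    bits = [p1, p2, d[0], p4, d[1], d[2], d[3]]  # positions 1..7
    return sum(bit << k for k, bit in enumerate(bits))


def hamming74_decode(codeword: int) -> tuple[int, int]:
    """Return (data, syndrome). A non-zero syndrome is the position of the
    single bit that was flipped and has been corrected."""
    bits = [(codeword >> k) & 1 for k in range(7)]
    syndrome = 0
    for pos in range(1, 8):
        if bits[pos - 1]:
            syndrome ^= pos  # XOR of the positions of all set bits
    if syndrome:
        bits[syndrome - 1] ^= 1  # correct the single-bit error
    data = bits[2] | (bits[4] << 1) | (bits[5] << 2) | (bits[6] << 3)
    return data, syndrome


# Simulate a bit flip in "memory" and recover the original value.
stored = hamming74_encode(0b1011)
corrupted = stored ^ (1 << 4)          # flip the bit at position 5
print(hamming74_decode(corrupted))     # (11, 5)
```

Hardware memory ECC commonly uses a SECDED variant of this idea on wider words (for example, 64 data bits plus 8 check bits), adding an overall parity bit so that double-bit errors are detected rather than miscorrected.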

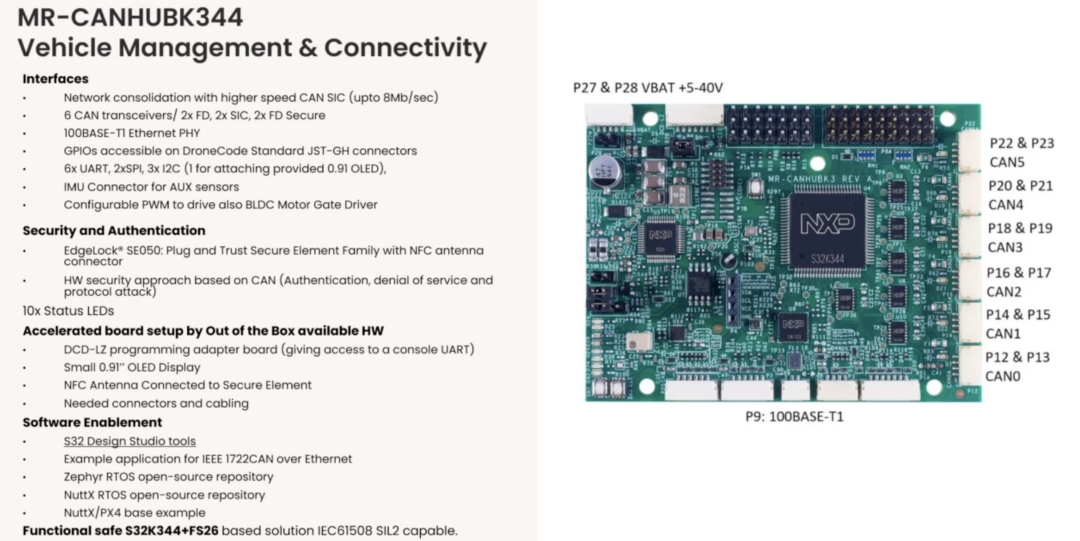

MR-CANHUBK344 is a vehicle management and connectivity board based on the S32K344 MCU.

● In terms of interfaces, it supports high-speed CAN SIC (up to 8 Mbit/s) for network integration, with six CAN transceivers (2 CAN FD, 2 CAN SIC, and 2 secure CAN FD) and a 100BASE-T1 Ethernet PHY. GPIO is accessible through Dronecode-standard JST-GH connectors, along with 6 UARTs, 2 SPIs, and 3 I²C buses (one of which connects a 0.91-inch OLED). There is also an IMU connector for auxiliary sensors and configurable PWM for driving BLDC motor drivers.

● Regarding security and certification, it uses the EdgeLock® SE050 secure element (with an NFC antenna connector) and provides hardware-based security for CAN, covering authentication and protection against denial-of-service and protocol attacks.

The board has 10 status LEDs. Its out-of-the-box hardware speeds up board setup and includes the DCD-LZ programming adapter board (for access to the console UART), a 0.91-inch OLED display, an NFC antenna that connects to the secure element, and the necessary connectors and cables.

● On the software side, it is supported by the S32 Design Studio tools and IEEE 1722 CAN-over-Ethernet example applications, and it builds on the open-source Zephyr RTOS and NuttX RTOS codebases, with basic NuttX/PX4 examples.

Additionally, a functional safety solution based on the S32K344 and the FS26 safety system basis chip complies with IEC 61508 SIL 2.
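CAN FD frames can carry only certain payload sizes (up to 64 bytes), encoded by a 4-bit data length code (DLC). When packing sensor or motor data onto CAN FD or CAN SIC buses like those described above, a small helper along these lines is useful. The function names are hypothetical, and the timing function deliberately ignores frame overhead, so it gives only a lower bound; the 8 Mbit/s example matches the CAN SIC data rate quoted for this board.

```python
# CAN FD data length code (DLC) to payload size in bytes, per ISO 11898-1.
CANFD_DLC_TO_LEN = (0, 1, 2, 3, 4, 5, 6, 7, 8, 12, 16, 20, 24, 32, 48, 64)


def canfd_dlc_to_len(dlc: int) -> int:
    if not 0 <= dlc <= 15:
        raise ValueError("DLC is a 4-bit field")
    return CANFD_DLC_TO_LEN[dlc]


def canfd_len_to_dlc(length: int) -> int:
    """Smallest DLC whose payload holds `length` bytes; the remainder is padding."""
    for dlc, size in enumerate(CANFD_DLC_TO_LEN):
        if size >= length:
            return dlc
    raise ValueError("a CAN FD frame carries at most 64 bytes")


def payload_time_us(length: int, data_bitrate_bps: float) -> float:
    """Time to clock out just the (padded) payload bits in the data phase.

    Arbitration, header, CRC and stuff bits are ignored, so this is a lower bound.
    """
    padded = canfd_dlc_to_len(canfd_len_to_dlc(length))
    return padded * 8 * 1e6 / data_bitrate_bps


print(canfd_len_to_dlc(9), payload_time_us(64, 8e6))  # 9 64.0
```

A 9-byte message, for instance, must be sent in a 12-byte frame, so grouping signals to land exactly on the allowed sizes avoids wasting bus time on padding.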

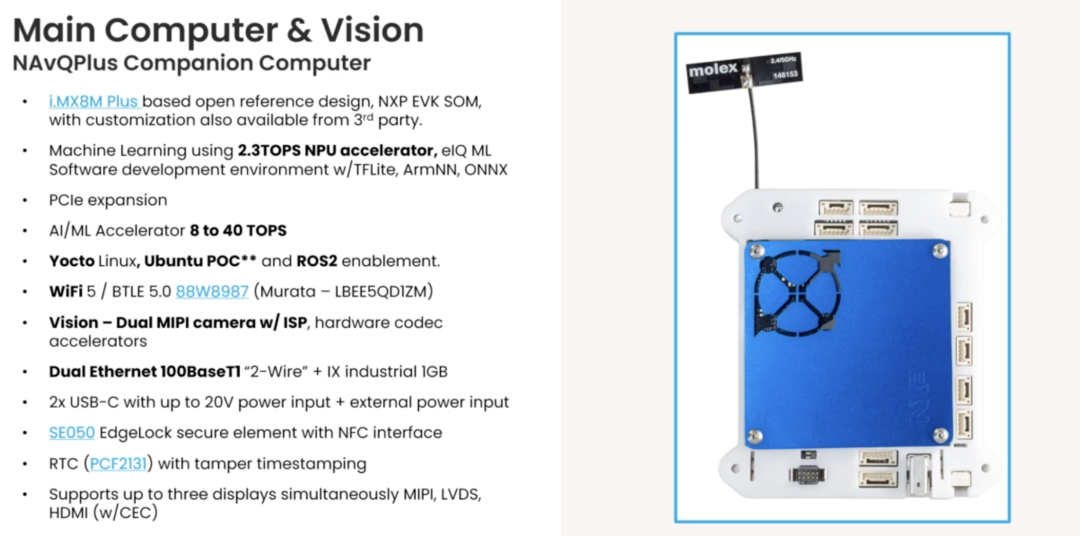

NavQPlus is a companion computer with vision capabilities.

It is based on the i.MX 8M Plus open reference design and uses the NXP EVK SOM; the design can also be customized by third parties.

● For machine learning, it provides a 2.3 TOPS NPU accelerator together with the eIQ ML software development environment, which supports TensorFlow Lite, Arm NN, and ONNX. PCIe expansion allows external AI/ML accelerators delivering 8 to 40 TOPS to be added.

● On the system side, it supports Yocto Linux, an Ubuntu proof of concept (POC), and ROS 2.

● For wireless connectivity, it is equipped with Wi-Fi 5 and Bluetooth LE 5.0 (Murata LBEE5QDIZM module).

● Vision is a particular strength: it offers dual MIPI camera interfaces, an integrated ISP, and hardware codec accelerators.

● It has dual Ethernet interfaces: a two-wire 100BASE-T1 port and a 1 Gbit/s port using an IX Industrial connector. It also has two USB-C ports that accept up to 20 V of power input, plus a separate external power input.

● On the security front, it integrates the EdgeLock SE050 secure element with an NFC interface, plus a PCF2131 RTC that provides tamper timestamping.

● For display, it supports simultaneous connections to MIPI, LVDS, and HDMI (with CEC) display devices.

Part 2

Breakthroughs and Synergies in Sensor and Communication Technologies

Sensor technology is the core enabler of a mobile robot’s ability to perceive its environment.

Advances in vision systems benefit from NPU support, which makes real-time image inference possible. For example, the MCX N series NPU can process dozens of high-definition images per second, enabling object detection and classification for warehouse picking robots or service robot interactions.

LiDAR plays a crucial role in 3D depth perception. Because GaN devices switch extremely fast, they can drive very short laser pulses, which brings LiDAR resolution down to the centimeter level; combined with Simultaneous Localization and Mapping (SLAM) algorithms, this improves navigation accuracy by approximately 50%.

For instance, in unconstrained environments (such as outdoor inspections), the fusion of SLAM and LiDAR allows robots to dynamically adjust their paths and avoid obstacles.
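Why short pulses matter follows from the standard pulsed time-of-flight relations d = c·t/2 (distance from round-trip time t) and ΔR = c·τ/2 (range resolution from pulse width τ). The sketch below simply evaluates them; it is illustrative arithmetic with hypothetical function names, not a model of any specific NXP or GaN LiDAR design.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tof_distance_m(round_trip_s: float) -> float:
    """Target distance from the round-trip time of a laser pulse (out and back)."""
    return SPEED_OF_LIGHT * round_trip_s / 2


def range_resolution_m(pulse_width_s: float) -> float:
    """Smallest separation at which two targets along one beam give separate echoes."""
    return SPEED_OF_LIGHT * pulse_width_s / 2


for pulse_ns in (10.0, 2.0, 1.0):
    print(f"{pulse_ns:4.1f} ns pulse -> {range_resolution_m(pulse_ns * 1e-9) * 100:5.1f} cm")
```

A 1 ns pulse corresponds to roughly 15 cm of range resolution, and every 100 ps of timing uncertainty in the receiver adds about 1.5 cm of range uncertainty, which is why both fast pulse drivers and precise timing matter for centimeter-level results.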

Machine learning further optimizes 3D data processing by training models to recognize complex scenes (such as crowded areas), enhancing situational awareness capabilities.

From an engineering perspective, the challenges of sensor technology lie in data fusion and real-time performance. NXP’s edge processing solutions address the latency issues of multi-sensor data processing through hardware acceleration (such as DSP co-processors) and low-power designs, ensuring rapid responses of robots to their environments.
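Data fusion often starts from a simple principle: weight each sensor by how much you trust it. A minimal sketch of that principle is a scalar Kalman filter that fuses two distance readings of different quality. The class name, the random-walk model, and the sensor variances are illustrative assumptions, not figures from NXP hardware or software.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """1-D Kalman filter for a quantity modeled as a random walk."""
    x: float  # current estimate (e.g., distance to a wall in meters)
    p: float  # variance of the estimate
    q: float  # process-noise variance added at each prediction step

    def predict(self) -> None:
        # Without a motion model the estimate stays put, but its uncertainty grows.
        self.p += self.q

    def update(self, z: float, r: float) -> None:
        """Fuse one measurement z whose noise variance is r."""
        k = self.p / (self.p + r)   # gain: near 1 for precise sensors, near 0 for noisy ones
        self.x += k * (z - self.x)
        self.p *= 1 - k


# Fuse a precise LiDAR reading with a noisier ultrasonic one (illustrative variances).
f = ScalarKalman(x=0.0, p=1e6, q=0.01)   # start with almost no prior knowledge
f.predict()
f.update(z=1.20, r=0.0004)               # LiDAR: standard deviation of 2 cm
f.update(z=1.30, r=0.01)                 # ultrasonic: standard deviation of 10 cm
print(round(f.x, 3))
```

After both updates the estimate sits much closer to the LiDAR reading, because its variance is 25 times smaller. Full multi-sensor systems generalize the same update to state vectors and covariance matrices.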

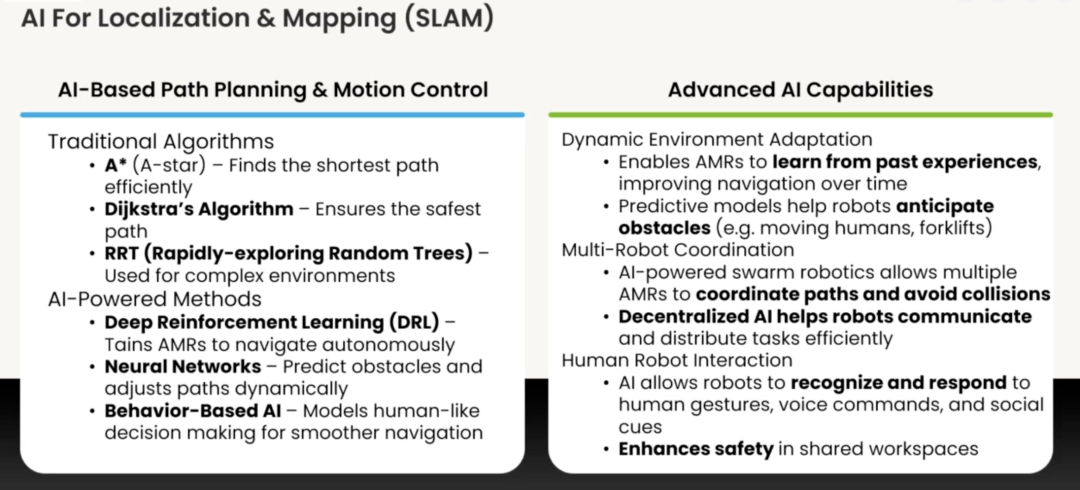

In AI applications built on Simultaneous Localization and Mapping (SLAM), path planning and motion control are critical components. Among traditional algorithms, A* efficiently finds the shortest path, Dijkstra’s algorithm guarantees a lowest-cost path (so safety margins can be built in by raising the cost of cells near obstacles), and Rapidly-exploring Random Tree (RRT) algorithms are suited to complex environments.
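A* and Dijkstra’s algorithm are closely related: A* is Dijkstra’s algorithm guided by a heuristic estimate of the remaining distance. The sketch below shows both on a small occupancy grid with 4-connected moves and unit step costs; the grid encoding and the function name are assumptions for illustration, not part of any NXP software.

```python
import heapq
from typing import Optional

Cell = tuple[int, int]


def plan(grid: list[list[int]], start: Cell, goal: Cell,
         use_heuristic: bool = True) -> Optional[list[Cell]]:
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = obstacle).

    use_heuristic=True runs A* with the Manhattan-distance heuristic, which never
    overestimates on this grid, so the path is still optimal. use_heuristic=False
    sets the heuristic to zero, which turns the same loop into Dijkstra's algorithm.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        if not use_heuristic:
            return 0
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]   # entries are (g + h, g, cell)
    best_g = {start: 0}
    came_from: dict[Cell, Cell] = {}
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:                 # rebuild the path by walking parents back
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > best_g[cell]:
            continue                     # outdated entry; a cheaper route was found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1               # every move costs 1
                if ng < best_g.get(nxt, float("inf")):
                    best_g[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (ng + h(nxt), ng, nxt))
    return None                          # goal unreachable


warehouse = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],   # a shelf row the robot must drive around
    [0, 0, 0, 0],
]
print(plan(warehouse, (0, 0), (2, 0)))
```

Replacing the flat step cost of 1 with a higher cost for cells next to obstacles is a common way to make the lowest-cost path keep a safety margin. RRT takes a different approach, growing a tree of random samples, and is typically used where a fine grid would be impractical.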

AI-enabled methods include Deep Reinforcement Learning (DRL), which trains Autonomous Mobile Robots (AMRs) to navigate autonomously; neural networks that predict obstacles and adjust paths dynamically; and behavior-based AI that mimics human decision-making to make navigation smoother.
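DRL replaces the lookup table of classic Q-learning with a neural network, but the underlying update rule is the same. The sketch below uses plain tabular Q-learning (a deliberately simpler stand-in, not DRL itself) on a hypothetical six-cell corridor where every step costs a reward of -1, so the agent learns to reach the goal in as few moves as possible. All names and hyperparameters are illustrative assumptions.

```python
import random

N_CELLS = 6                 # hypothetical corridor, cells 0..5; cell 5 is the goal
MOVES = (-1, +1)            # action 0 = move left, action 1 = move right


def best_action(q_row: list[float]) -> int:
    return 0 if q_row[0] >= q_row[1] else 1   # ties go to "left"


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.2, seed: int = 0) -> list[list[float]]:
    """Tabular Q-learning: q[s][a] estimates the return of action a in cell s."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_CELLS)]
    for _ in range(episodes):
        s = 0                                          # every episode starts at cell 0
        while s != N_CELLS - 1:
            if rng.random() < epsilon:                 # explore
                a = rng.randrange(2)
            else:                                      # exploit current knowledge
                a = best_action(q[s])
            s_next = min(max(s + MOVES[a], 0), N_CELLS - 1)   # walls at both ends
            reward = -1.0                              # each step costs time and energy
            future = 0.0 if s_next == N_CELLS - 1 else max(q[s_next])
            q[s][a] += alpha * (reward + gamma * future - q[s][a])
            s = s_next
    return q


q_table = train()
policy = ["left" if best_action(q_table[s]) == 0 else "right" for s in range(N_CELLS - 1)]
print(policy)
```

In DRL the table `q` becomes a network that maps sensor observations to action values, which is what lets the approach scale to the continuous, high-dimensional inputs a real AMR sees.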

Among advanced AI capabilities, dynamic environmental adaptation lets AMRs learn from past experience and optimize their navigation over time, while predictive models help robots anticipate obstacles such as moving personnel and forklifts.
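A learned predictor is beyond a short example, but the simplest predictive model, extrapolating each tracked obstacle at constant velocity, already shows the idea: estimate when and how closely the robot and the obstacle will meet, and yield early if the gap gets too small. The constant-velocity assumption, the 3 s horizon, the 1 m safety radius, and the function names are hypothetical choices for illustration.

```python
import math

Vec = tuple[float, float]


def closest_approach(p_robot: Vec, v_robot: Vec, p_obst: Vec, v_obst: Vec,
                     horizon_s: float) -> tuple[float, float]:
    """Time (s) and distance (m) of closest approach within [0, horizon_s],
    assuming robot and obstacle both keep their current velocity."""
    rx, ry = p_obst[0] - p_robot[0], p_obst[1] - p_robot[1]   # relative position
    vx, vy = v_obst[0] - v_robot[0], v_obst[1] - v_robot[1]   # relative velocity
    speed_sq = vx * vx + vy * vy
    # |r + v t| is minimized at t = -(r . v) / |v|^2; clamp it to the horizon.
    t = 0.0 if speed_sq == 0 else -(rx * vx + ry * vy) / speed_sq
    t = min(max(t, 0.0), horizon_s)
    return t, math.hypot(rx + vx * t, ry + vy * t)


def must_yield(p_robot: Vec, v_robot: Vec, p_obst: Vec, v_obst: Vec,
               horizon_s: float = 3.0, safety_radius_m: float = 1.0) -> bool:
    """True if the predicted gap drops below the safety radius within the horizon."""
    _, gap = closest_approach(p_robot, v_robot, p_obst, v_obst, horizon_s)
    return gap < safety_radius_m


# AMR heading east at 1 m/s; a worker 3 m ahead and 3 m to the side walks toward its path.
print(closest_approach((0, 0), (1, 0), (3, -3), (0, 1), horizon_s=5.0))  # (3.0, 0.0)
print(must_yield((0, 0), (1, 0), (3, -3), (0, 1)))                       # True
```

A learned model would replace the straight-line extrapolation with predicted trajectories, but the downstream check (predicted gap versus safety radius) can stay the same.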

In multi-robot coordination, AI-driven swarm robotics technology enables multiple AMRs to coordinate paths and avoid collisions, while decentralized AI facilitates efficient communication and task allocation among robots.
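Decentralized task allocation is often done with market-style auctions, in which robots bid on tasks and the best bid wins. The sketch below is one such scheme, a greedy sequential single-item auction, chosen purely as an illustration; the article does not say which allocation method is used in practice. In a real fleet the bids would be exchanged over the network between robots; here they are computed in one process, and the robot names and coordinates are hypothetical.

```python
import math

Point = tuple[float, float]


def auction_tasks(robots: dict[str, Point], tasks: list[Point]) -> dict[str, list[int]]:
    """Sequential single-item auction for task allocation.

    Tasks are auctioned one at a time. Each robot bids the straight-line distance
    from where it will be after finishing the tasks it has already won; the lowest
    bid wins, and ties go to the robot whose name sorts first.
    """
    position = dict(robots)                      # expected position after won tasks
    assignment: dict[str, list[int]] = {name: [] for name in robots}
    for task_id, task_pos in enumerate(tasks):
        bids = {name: math.dist(pos, task_pos) for name, pos in position.items()}
        winner = min(sorted(bids), key=lambda name: bids[name])
        assignment[winner].append(task_id)
        position[winner] = task_pos              # the winner ends up at this task
    return assignment


fleet = {"amr_a": (0.0, 0.0), "amr_b": (10.0, 0.0)}
picks = [(1.0, 0.0), (9.0, 0.0), (2.0, 0.0)]
print(auction_tasks(fleet, picks))  # {'amr_a': [0, 2], 'amr_b': [1]}
```

Because each robot only needs its own position and the task location to compute a bid, the scheme maps naturally onto a decentralized fleet where robots broadcast bids instead of relying on a central planner.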

In human-robot interaction, AI enables robots to recognize and respond to human gestures, voice commands, and social cues, enhancing safety in shared workspaces.

Conclusion

Mobile robots are at the forefront of technological innovation, and breakthroughs in edge processing, power, sensors, and communication technologies are driving their development.

NXP enhances the intelligence and reliability of robots through its MCX series MCUs, GaN solutions, and communication modules, promoting their widespread use in industrial, service, and medical fields. From error correction that protects memory integrity, to NPU-accelerated machine learning, to 5G-enabled swarm collaboration, NXP’s solutions provide solid support for the mobile robot ecosystem.