One

Background Introduction

Human perception of the physical world has evolved from subjective feelings to sensors and then to sensor networks, as shown in Figure 1. As sensors shrink and data collection becomes more widespread, a prominent problem has emerged: the high cost of deploying sensing systems. As sensing systems grow in range and scale, deploying and maintaining them for long-term, stable operation becomes increasingly difficult and expensive. Take indoor positioning as an example. We can already deploy devices and systems that achieve high-precision multi-target positioning within specific indoor areas. However, such areas are a drop in the bucket compared with the entire physical world in which human activity takes place, so we are still far from truly ubiquitous computing.

Figure 1: Changes in Human Perception Methods

On the other hand, with the development of the Internet of Things, our living and working spaces are now filled with wireless signals (such as WiFi, LoRa, and RFID). Beyond carrying communication, these signals are reflected, scattered, and refracted as they propagate through space, so their propagation characteristics depend on the objects they encounter. Measuring these characteristics indoors therefore makes it possible to sense the state of surrounding objects. This approach is known as wireless sensing.
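The sensing principle can be made concrete with the standard multipath model, in which the channel at frequency f is the sum of all propagation paths: H(f) = Σ_k a_k · exp(-j2πf·d_k/c). The minimal Python sketch below assumes ideal point reflectors, no noise, and illustrative values that are not from this article: a 5 GHz carrier, 312.5 kHz subcarrier spacing, a 3 m direct path, and one path reflected by a person. Moving the person so that the reflected path grows by 3 cm (about half a wavelength at 5 GHz) visibly changes the channel amplitude on every subcarrier.

```python
import cmath
import math

C = 3e8  # speed of light, m/s

def channel_response(freq_hz, paths):
    """Multipath sum H(f) = sum_k a_k * exp(-j*2*pi*f*d_k/c).

    `paths` is a list of (amplitude, path_length_m) pairs; each path's delay
    is its length divided by the speed of light.
    """
    return sum(a * cmath.exp(-2j * math.pi * freq_hz * d / C) for a, d in paths)

# Hypothetical setup: a fixed line-of-sight path plus one path reflected by a person.
carrier = 5.0e9                    # illustrative 5 GHz WiFi carrier
spacing = 312.5e3                  # illustrative subcarrier spacing, Hz
subcarriers = [carrier + k * spacing for k in range(-4, 5)]
los = (1.0, 3.0)                   # amplitude 1.0, 3 m direct path

def csi_amplitudes(reflection_length_m):
    """Channel amplitude on each subcarrier for a given reflected-path length."""
    paths = [los, (0.4, reflection_length_m)]
    return [abs(channel_response(f, paths)) for f in subcarriers]

before = csi_amplitudes(5.00)      # person at one position
after = csi_amplitudes(5.03)       # reflected path 3 cm longer after the person moves
for f, a0, a1 in zip(subcarriers, before, after):
    print(f"{f / 1e9:.6f} GHz  |H| before={a0:.3f}  after={a1:.3f}")
```

With only the direct path, |H| would stay constant; the change comes entirely from the moving reflector, which is the information a wireless sensing system extracts.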

Over the past two decades, ubiquitous computing research has drawn on signal processing, machine learning, wireless communications, and other fields, and researchers have developed many interesting applications based on wireless radio-frequency signals. For example, Professor Liu Yunhao of Tsinghua University and colleagues proposed the LANDMARC algorithm [1], which opened the chapter of high-precision indoor positioning with RFID; and Professor Dina Katabi of MIT and colleagues designed the EQ-Radio system, which uses wireless signals to recognize human emotions accurately [2]. Recently, deep learning (DL), a branch of artificial intelligence (AI), has achieved tremendous success in computer vision, and recent work [3-5] has shown that it can also benefit wireless sensing, bringing us one step closer to a new generation of ubiquitous computing.

Two

Method Overview

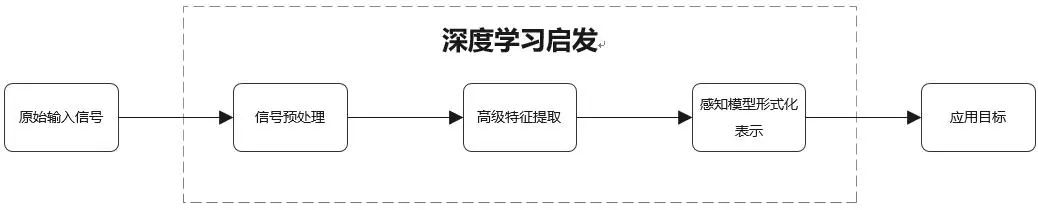

In recent years, deep learning (e.g., CNNs, RNNs, and GANs) has succeeded in many visual and audio applications, such as speech recognition, object detection, and face recognition. Compared with traditional machine learning (ML) models (e.g., k-NN, SVM, boosting, decision trees, and Bayesian networks), deep learning (DL) frameworks can exploit large amounts of complex, heterogeneous input data, suppress noise effectively, and extract salient features, which yields accurate results in general application scenarios. Because wireless sensing and visual perception have similar inputs and outputs, wireless sensing systems are well placed to benefit from deep learning as well. As shown in Figure 2, deep learning can be used to enhance the various modules of a wireless sensing system.

Figure 2: General Model of Wireless Sensing

Using deep learning to improve the performance of wireless sensing models mainly involves three steps: signal preprocessing, advanced feature extraction, and formal representation of the sensing model. The three parts are briefly reviewed below:
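To make the three-step structure concrete, here is a minimal Python sketch. Every stage is a hypothetical stand-in rather than a method from this article: a moving-average filter for preprocessing, three hand-picked statistics for feature extraction, and a nearest-centroid classifier in place of a deep network. The function names and the toy "empty room" and "walking" amplitude traces are illustrative only.

```python
from statistics import mean, pvariance

def preprocess(signal, window=3):
    """Step 1 (stand-in): moving-average denoising of one amplitude stream."""
    half = window // 2
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - half), min(len(signal), i + half + 1)
        out.append(mean(signal[lo:hi]))
    return out

def extract_features(signal):
    """Step 2 (stand-in): hand-picked statistics in place of learned features."""
    diffs = [b - a for a, b in zip(signal, signal[1:])]
    return [mean(signal), pvariance(signal), mean([abs(d) for d in diffs])]

class NearestCentroidModel:
    """Step 3 (stand-in): a tiny classifier in place of a deep network."""

    def fit(self, feature_rows, labels):
        self.centroids = {}
        for label in set(labels):
            rows = [r for r, l in zip(feature_rows, labels) if l == label]
            self.centroids[label] = [mean(col) for col in zip(*rows)]
        return self

    def predict(self, features):
        def dist2(centroid):
            return sum((f - c) ** 2 for f, c in zip(features, centroid))
        return min(self.centroids, key=lambda lbl: dist2(self.centroids[lbl]))

def run_pipeline(model, raw_signal):
    """Chain the three steps: preprocess -> extract features -> predict."""
    return model.predict(extract_features(preprocess(raw_signal)))

# Hypothetical training data: flat amplitude ("empty") vs. fluctuating ("walking").
empty = [[1.0, 1.01, 0.99, 1.0, 1.0, 1.01], [1.0, 0.99, 1.0, 1.01, 1.0, 1.0]]
walking = [[1.0, 1.6, 0.5, 1.7, 0.4, 1.5], [0.9, 1.5, 0.4, 1.6, 0.6, 1.4]]
X = [extract_features(preprocess(s)) for s in empty + walking]
y = ["empty"] * 2 + ["walking"] * 2
model = NearestCentroidModel().fit(X, y)
print(run_pipeline(model, [1.0, 1.55, 0.45, 1.65, 0.5, 1.5]))
```

In a deep learning system each stand-in would be replaced by a learned component, but the interfaces between the three stages stay the same.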

2.1 Signal Preprocessing

In the signal preprocessing module of a wireless sensing system, the main tasks are data denoising and data adaptation. Compared with earlier methods (such as numerical analysis, background subtraction, statistical analysis, and classical machine learning algorithms), deep learning-based preprocessing modules can more effectively separate the “signal of interest” from environment-dependent noise. Three typical approaches are:

a) Domain-Related Noise Elimination: Wang et al. [6] proposed a min-max adversarial learning method that transfers features across scenarios, enabling cross-scenario activity recognition based on millimeter waves. Ma et al. [7] designed a deep similarity network to assess how similar the data from a new scenario is to the training set, and hence how well the model transfers. More generally, to reduce the high training cost of cross-scenario deployment, transfer learning and adversarial structures have been introduced into the signal preprocessing module to extract the environment-independent parts of the signal.

b) Attention Models: Attention models make the neural network focus on selected parts of its input, which can further improve the computational efficiency of sensing tasks in complex scenarios. They have made significant progress in computer vision and are expected to be effective in wireless sensing as well.

c) Region of Interest (ROI) Detection: Deep neural networks for wireless sensing use ROI detection to focus on the parts of the signal that are of interest; in essence, the principle builds on beamforming with directional antennas.
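The beamforming idea behind item c) can be sketched with a conventional (delay-and-sum) beam scan: steer a receive array across candidate angles and keep the direction with the highest output power as the region of interest. This is a minimal Python sketch under stated assumptions, not the method of any cited system: a narrowband plane wave, a hypothetical 8-element uniform linear array with half-wavelength spacing, and a single noiseless reflector at 30°.

```python
import cmath
import math

def steering_vector(theta_deg, num_antennas, spacing_wavelengths=0.5):
    """Per-antenna phase of a plane wave arriving from theta at a uniform linear array."""
    phase_step = 2 * math.pi * spacing_wavelengths * math.sin(math.radians(theta_deg))
    return [cmath.exp(1j * phase_step * m) for m in range(num_antennas)]

def beam_power(snapshot, theta_deg):
    """Delay-and-sum beamformer output power, normalised so a perfect match gives 1."""
    a = steering_vector(theta_deg, len(snapshot))
    y = sum(ai.conjugate() * xi for ai, xi in zip(a, snapshot))
    return abs(y) ** 2 / len(snapshot) ** 2

def scan(snapshot, angles):
    """Beam power for every candidate angle (degrees)."""
    return {theta: beam_power(snapshot, theta) for theta in angles}

# Hypothetical scene: one reflector at 30 degrees seen by an 8-antenna array.
received = steering_vector(30, 8)
spectrum = scan(received, range(-90, 91))
roi_angle = max(spectrum, key=spectrum.get)
print(roi_angle, round(spectrum[roi_angle], 3))
```

A downstream network could then process only the signal from around `roi_angle`, which is the sense in which ROI detection narrows the input to the part that matters.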

2.2 Advanced Feature Extraction

After preprocessing, the next step is to select appropriate features for the formal representation of the model. Because deep learning can learn high-level features, it has been widely used for feature extraction. By purpose, these features fall into three categories:

a) Spatial Information Extraction: Multi-layer networks are used to extract spatial information from signals, including multi-layer perceptrons, convolutional neural networks, and derived architectures (e.g., encoder-decoder networks).

b) Temporal Information Extraction: Deep learning is also used to extract time series information from wireless signals, with commonly used methods including RNN, LSTM, and GRU.

c) Intrinsic Physical Information: To extract physical features effectively, adversarial architectures such as GANs can be used to learn the implicit relationship between source inputs and application outputs.
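Items a) and b) are often combined into a convolutional-recurrent feature extractor. The toy Python sketch below assumes CSI-like input arranged as frames (time) by subcarriers (space), a hand-set slope-detecting kernel, max pooling, and a single-unit GRU with hypothetical fixed weights. A real system would learn these weights and use many channels and hidden units.

```python
import math

def conv1d(signal, kernel):
    """'Valid' 1-D convolution along one axis (implemented as cross-correlation,
    as most deep learning libraries do)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def gru_step(x, h, p):
    """One update of a single-unit GRU (Cho et al. convention:
    h_new = (1 - z) * h + z * h_candidate; some libraries swap the roles of z)."""
    z = sigmoid(p["wz"] * x + p["uz"] * h + p["bz"])                    # update gate
    r = sigmoid(p["wr"] * x + p["ur"] * h + p["br"])                    # reset gate
    h_candidate = math.tanh(p["wh"] * x + p["uh"] * (r * h) + p["bh"])  # candidate state
    return (1 - z) * h + z * h_candidate

def extract_features(frames, kernel, params):
    """Spatial features per frame (conv + max-pool), then temporal features (GRU)."""
    h = 0.0
    for frame in frames:                    # iterate over time
        spatial = conv1d(frame, kernel)     # filter across subcarriers
        h = gru_step(max(spatial), h, params)  # max-pool, then recurrent update
    return h

# Hypothetical weights and data (frames x subcarriers); a real model learns the weights.
kernel = [-1.0, 0.0, 1.0]                   # responds to amplitude slopes
params = {"wz": 1.0, "uz": 0.5, "bz": 0.0,
          "wr": 1.0, "ur": 0.5, "br": 0.0,
          "wh": 2.0, "uh": 1.0, "bh": 0.0}
static = [[1.0, 1.0, 1.0, 1.0, 1.0]] * 4
moving = [[1.0, 1.2, 1.5, 1.3, 1.0], [1.0, 1.4, 1.8, 1.2, 0.9],
          [1.1, 1.3, 1.6, 1.4, 1.0], [1.0, 1.5, 1.9, 1.3, 0.8]]
print(extract_features(static, kernel, params), extract_features(moving, kernel, params))
```

A flat, unchanging channel leaves the hidden state at zero, while a channel whose shape across subcarriers keeps changing drives it upward; the convolution captures the spatial pattern and the GRU accumulates it over time.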

2.3 Formal Representation of the Sensing Model

In wireless sensing applications, compared with traditional approaches based on geometric, statistical, or machine learning models, deep learning more effectively maps the preprocessed signals and extracted high-level features to the outputs of the sensing application, especially for high-precision tasks such as multi-person behavior recognition. In addition, techniques from other deep learning fields, such as multi-task learning and transfer learning, can be borrowed to improve the accuracy and robustness of wireless sensing tasks.
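Multi-task learning typically attaches several output heads to one shared representation and minimises a weighted sum of the per-task losses. The minimal Python sketch below assumes two hypothetical tasks (activity classification and person-count regression), hand-picked head weights, and loss weights of 1.0 and 0.5; none of these values come from this article.

```python
import math

def cross_entropy(logits, target_index):
    """Softmax cross-entropy for one sample (numerically stabilised)."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum - logits[target_index]

def squared_error(prediction, target):
    return (prediction - target) ** 2

def multitask_loss(activity_logits, activity_label, count_pred, count_true,
                   w_activity=1.0, w_count=0.5):
    """Weighted sum of per-task losses computed from one shared representation."""
    return (w_activity * cross_entropy(activity_logits, activity_label)
            + w_count * squared_error(count_pred, count_true))

def linear(features, weights, bias):
    return sum(f * w for f, w in zip(features, weights)) + bias

# Hypothetical shared features and task heads.
shared = [0.8, -0.2, 0.5]                                           # shared encoder output
activity_heads = [([1.0, 0.0, 0.5], 0.0), ([-0.5, 1.0, 0.0], 0.0)]  # two activity classes
logits = [linear(shared, w, b) for w, b in activity_heads]
count = linear(shared, [1.0, 1.0, 1.0], 0.0)                        # person-count head
print(round(multitask_loss(logits, 0, count, 1.0), 4))
```

Because both losses backpropagate into the same shared features during training, each task acts as a regulariser for the other; the loss weights control how strongly each task shapes the shared representation.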

Three

Problems and Challenges

Despite the strong potential of deep learning for wireless sensing systems, several important problems and challenges remain to be addressed. These mainly include:

a) Scalability and Generalization: DL relies on massive amounts of high-quality data to achieve scalable and generalizable performance. As architectures grow more complex, they require more data of higher quality and have more parameters to learn and configure. Compared with fields such as computer vision and natural language processing, collecting wireless sensing data is more time-consuming and labor-intensive, and data quality is often inconsistent across collections, which makes it harder for training to scale and generalize. For example, when a trained wireless sensing system is moved to a large-scale scenario, it often suffers interference from multiple targets, multiple transceivers, and background noise.

b) Security and Privacy: Although contactless wireless sensing systems provide a non-intrusive way to sense people in the real world, they also raise many privacy and security concerns, because they enable monitoring applications such as tracking daily activities, monitoring breathing and estimating breathing rate, and estimating posture. Deep learning has been a hot topic in AI security and can therefore also be used to strengthen the security of wireless sensing, with AI autonomously identifying and responding to potential threats based on similar or past activity. However, introducing deep learning may also worsen privacy and security problems. For instance, an attacker can use spoofed signals to deceive a wireless sensing system, especially one built on deep neural networks: most mainstream neural networks can be made to misclassify an input by adding only a small, carefully crafted perturbation to the original data. Moreover, wireless signals themselves can be deliberately forged, as with the fake CSI spectrograms generated by XModal-ID [8].

c) Robustness and Perception Capability: For neural networks, the robustness and the perception capability of a wireless sensing system pull in opposite directions. On the one hand, improving robustness calls for removing redundant parts of the network with techniques such as model distillation and network pruning; on the other hand, improving sensing accuracy calls for complex heterogeneous models, such as a CNN combined with an LSTM. Practical sensing tasks therefore require a balance between the two.
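The adversarial weakness described in item b) can be shown on the simplest possible model. The sketch below applies the fast gradient sign method (FGSM), a standard attack from the adversarial-examples literature rather than a technique from this article, to a hypothetical logistic-regression classifier over 20 signal features. The weights, the clean input, and the perturbation budget ε = 0.15 are all illustrative.

```python
import math

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def predict_proba(x, w, b):
    """P(class 1) under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(x, y, w, b, eps):
    """Fast gradient sign method: move every input feature by eps in the
    direction that increases the loss. For logistic regression with binary
    cross-entropy, the gradient of the loss with respect to x is (p - y) * w."""
    p = predict_proba(x, w, b)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

# Hypothetical model over 20 signal features, with alternating-sign weights.
w = [0.5, -0.5] * 10
b = 0.0
x = [0.2, 0.0] * 10            # clean input, classified as class 1
x_adv = fgsm(x, 1, w, b, eps=0.15)

print(round(predict_proba(x, w, b), 3), round(predict_proba(x_adv, w, b), 3))
print(max(abs(a - c) for a, c in zip(x_adv, x)))
```

No single feature moves by more than ε, yet the small changes add up across all 20 dimensions and flip the prediction from class 1 to class 0. This accumulation across many input dimensions is one common explanation of why high-dimensional inputs, such as CSI spectrograms, leave models exposed to small crafted perturbations.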

Four

Future Research Trends

Deep learning-based wireless sensing is a promising research direction toward truly realizing ubiquitous computing. Future research trends mainly fall into three areas:

Multi-modal Sensing: Different input signals provide complementary measurements along multiple dimensions for realizing ubiquitous computing. Fusing multi-modal information, such as RFID, ultrasound, visible light, Bluetooth, millimeter waves, computer vision, and inertial measurement units, is therefore a promising research direction.

Cross-domain Sensing: Cross-domain sensing can not only broaden the range of wireless sensing applications but also contribute more information-processing techniques to the field, helping to realize the vision of ubiquitous computing.

Interdisciplinary Sensing: Wireless signals resemble audio and video signals in their time and space dimensions, so many mature deep learning frameworks from computer vision and natural language processing can be transferred to, or adapted for, wireless sensing. Drawing inspiration from other research fields can therefore help accomplish wireless sensing tasks more effectively.
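One simple way to combine the complementary modalities mentioned under multi-modal sensing is late (decision-level) fusion: each modality has its own classifier, and their class probabilities are combined by a weighted average. The Python sketch below is hypothetical throughout; the modality names, probabilities, and weights are made up for illustration. Feature-level (early) fusion, which concatenates features before a single model, is the common alternative.

```python
def late_fusion(per_modality_probs, weights):
    """Weighted average of per-modality class-probability vectors."""
    total = sum(weights[m] for m in per_modality_probs)
    num_classes = len(next(iter(per_modality_probs.values())))
    fused = [0.0] * num_classes
    for modality, probs in per_modality_probs.items():
        for c, p in enumerate(probs):
            fused[c] += weights[modality] * p / total
    return fused

# Hypothetical two-class outputs from three independent per-modality classifiers.
probs = {
    "wifi": [0.6, 0.4],
    "mmwave": [0.3, 0.7],
    "imu": [0.2, 0.8],
}
weights = {"wifi": 1.0, "mmwave": 1.0, "imu": 0.5}  # e.g. lower trust in one sensor
fused = late_fusion(probs, weights)
print(fused, fused.index(max(fused)))
```

Here the WiFi classifier alone would choose class 0, but the fused decision follows the agreement of the other two modalities and picks class 1.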

Five

Conclusion

Deep learning methods are increasingly applied across many fields and show great potential. For wireless sensing systems to truly realize ubiquitous computing, deep learning is an essential technology. In this article, we first discussed the overall processing framework of deep learning-based wireless sensing, then summarized the existing problems and challenges, and finally outlined research trends in the field.

References

[1] Lionel M. Ni, Yunhao Liu, Yiu Cho Lau, et al. LANDMARC: Indoor location sensing using active RFID. In Proceedings of the First IEEE International Conference on Pervasive Computing and Communications (PerCom 2003). IEEE, 2003.

[2] Mingmin Zhao, Fadel Adib, and Dina Katabi. Emotion recognition using wireless signals. Communications of the ACM, 2018.

[3] Lijie Fan, Tianhong Li, Rongyao Fang, Rumen Hristov, Yuan Yuan, and Dina Katabi. Learning longterm representations for person re-identification using radio signals. In Proceedings of the IEEE CVPR, 2020.

[4] Yaroslav Ganin and Victor Lempitsky. Unsupervised domain adaptation by backpropagation. In Proceedings of ICML. JMLR.org, 2015.

[5] Yue Zheng, Yi Zhang, Kun Qian, Guidong Zhang, Yunhao Liu, Chenshu Wu, and Zheng Yang. Zero-effort cross-domain gesture recognition with WiFi. In Proceedings of ACM MobiSys, 2019.

[6] Jie Wang, Liang Zhang, Changcheng Wang, Xiaorui Ma, Qinghua Gao, and Bin Lin. Device-free human gesture recognition with generative adversarial networks. IEEE Internet of Things Journal, 2020.

[7] Xiaorui Ma, Yunong Zhao, Liang Zhang, Qinghua Gao, Miao Pan, and Jie Wang. Practical device-free gesture recognition using WiFi signals based on metalearning. IEEE Transactions on Industrial Informatics, 2020.

[8] Belal Korany, Chitra R. Karanam, Hong Cai, and Yasamin Mostofi. XModal-ID: Using WiFi for through-wall person identification from candidate video footage. In Proceedings of ACM MobiCom, 2019.

China Confidentiality Association

Science and Technology Branch

Author: Jiang Shang

Editor: Xiang Lingzi