Virtualization abstracts physical hardware by creating multiple virtual machines (VMs), each with its own operating system and tasks. The technique has existed in computing since the 1960s. Now, driven by the need to make full use of large AI and DSP modules in automotive SoCs and by the growing demand for functional safety in autonomous driving, virtualization is entering power- and area-sensitive embedded automotive systems.

Two recent developments shape AI in automotive embedded systems. First, the urgency of adding AI to embedded designs is growing. Several factors drive this: rapid advances in AI research keep expanding the set of use cases; automotive systems carry more cameras and other sensors; the image resolution of those cameras and sensors keeps improving; AI delivers impressive capabilities compared with previous solutions; and AI's position in the hype cycle fuels new research and enthusiasm for business cases. Second, the neural networks that implement AI are compute-intensive algorithms that need a lot of silicon area for the multiply-accumulate (MAC) units required to reach the performance that L2 and higher autonomy levels demand. As neural processing units (NPUs) become a critical component of automotive SoCs, system designers want to be sure they are making full use of that resource.

Virtualization allows expensive hardware to be shared among multiple VMs by abstracting the physical hardware functionality into software. Without virtualization, resources can be allocated to only one operating system at a time, and whatever capacity that operating system cannot use is wasted.

Virtual Machines and Hypervisor

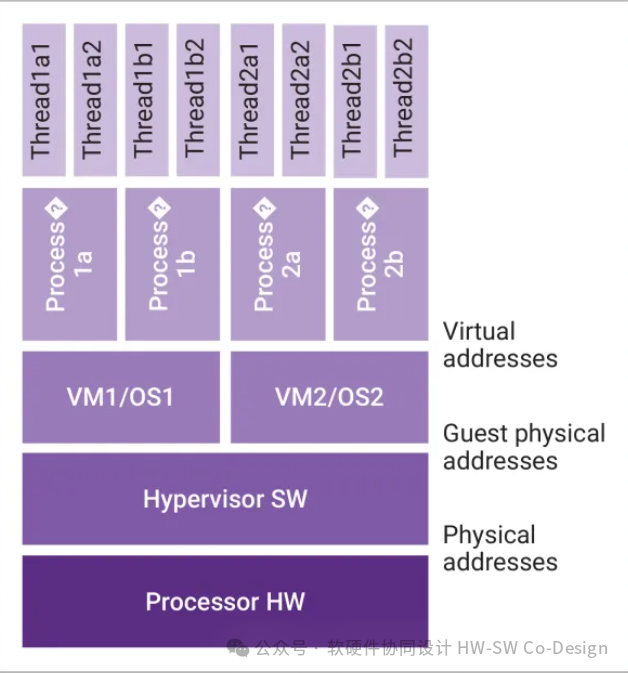

A neural network VM is a virtual representation or emulation of a neural network accelerator, with its own neural network computing resources, memory, and interfaces. Each virtual machine runs an operating system; each operating system runs multiple software processes; each process has one or more threads. VMs are isolated from each other spatially (memory protection ensures that one VM cannot access another VM's memory) and temporally (each VM has predictable execution times).

In Figure 1, the bottom layer, marked as processor hardware, is the physical layer. Software called a hypervisor separates the VMs' resources from the hardware. This thin software layer allows multiple VM/operating system instances to run side by side, each with its own portion of the underlying physical hardware. This prevents VMs from interfering with each other.

For example, if two VMs run on the same physical processor, as shown in Figure 1, VM1 might run a critical application while VM2 runs a non-critical one. The hypervisor ensures that VM2 cannot access any of VM1's memory or affect VM1's worst-case execution time. It also guarantees that a crash in VM2 will not affect VM1.

Figure 1: Hypervisor software separates physical hardware (processor hardware) from VMs.
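
The isolation guarantees in the two-VM example can be modeled with a toy sketch. The `ToyHypervisor` class, its method names, and the address ranges are hypothetical and chosen only for illustration; a real hypervisor enforces these rules with hardware memory protection, not with Python checks.

```python
from dataclasses import dataclass, field


@dataclass
class VM:
    name: str
    mem_start: int          # first address owned by this VM (hypothetical layout)
    mem_end: int            # one past the last owned address
    running: bool = True


@dataclass
class ToyHypervisor:
    vms: dict = field(default_factory=dict)

    def add_vm(self, vm: VM) -> None:
        # Refuse overlapping ranges so no two VMs ever share an address.
        for other in self.vms.values():
            if vm.mem_start < other.mem_end and other.mem_start < vm.mem_end:
                raise ValueError(f"{vm.name} overlaps {other.name}")
        self.vms[vm.name] = vm

    def can_access(self, vm_name: str, address: int) -> bool:
        # Spatial isolation: a VM may only touch its own range.
        vm = self.vms[vm_name]
        return vm.mem_start <= address < vm.mem_end

    def crash(self, vm_name: str) -> None:
        # A crash only changes the state of the VM that crashed.
        self.vms[vm_name].running = False


hv = ToyHypervisor()
hv.add_vm(VM("VM1", 0x0000, 0x8000))   # critical application
hv.add_vm(VM("VM2", 0x8000, 0xC000))   # non-critical application

print(hv.can_access("VM2", 0x1000))    # False: the address belongs to VM1
hv.crash("VM2")
print(hv.vms["VM1"].running)           # True: VM1 keeps running
```

The sketch covers only spatial isolation and crash containment. Temporal isolation (bounding VM1's worst-case execution time) depends on scheduling and is not modeled here.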

Virtualization and Autonomous Vehicles

As the demand for autonomous vehicles increases, the need to ensure their safety also rises. The ISO 26262 standard defines functional safety (FuSa) requirements for detecting systematic and random (permanent and transient) faults at various automotive safety integrity levels (ASILs). Forward collision warning or engine control functions require a higher ASIL than managing the in-vehicle entertainment system. Different tasks may therefore require different FuSa quality levels.

Virtualization can guarantee that applications and operating systems with different quality levels running on shared resources do not interfere with each other. Separating tasks that require higher ASIL levels from those that do not can make the system more composable (modular), simplifying the task of certifying automotive systems. Certification is needed to verify that your hardware meets the appropriate automotive standards. Each safety-critical VM and hypervisor software must be certified. Non-safety-critical VMs do not require certification but must be guaranteed not to compromise certified software.

Virtualization also reduces VM downtime, which is crucial in the automotive industry, because multiple redundant VMs can run simultaneously. Another benefit for autonomous vehicles is the ability to recover from safety errors. Suppose VM1 runs a safety-critical task and VM2 does not. If a fault occurs on VM1, VM1 can take over resources from VM2 so that the safety-critical task keeps priority, and the system can “recover” from the fault.
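
The recovery scenario above can be sketched as a resource handover. The source does not say which resources are moved or how; the sketch assumes, purely for illustration, that the resource is a compute core and that `recover` and the core numbers are hypothetical names.

```python
def recover(partitions, critical, donor, faulty_core):
    """Replace a faulty core in the critical VM with a core taken from the donor VM."""
    if faulty_core not in partitions[critical]:
        raise ValueError("faulty core is not assigned to the critical VM")
    if not partitions[donor]:
        raise RuntimeError("donor VM has no cores left to give")
    partitions[critical].remove(faulty_core)   # retire the faulty core
    spare = min(partitions[donor])             # deterministic choice for the example
    partitions[donor].remove(spare)
    partitions[critical].add(spare)            # safety-critical task keeps its capacity
    return spare


# Hypothetical assignment: VM1 is safety-critical, VM2 is not.
partitions = {"VM1": {0, 1, 2, 3}, "VM2": {4, 5, 6, 7}}
moved = recover(partitions, critical="VM1", donor="VM2", faulty_core=2)
print(moved, sorted(partitions["VM1"]), sorted(partitions["VM2"]))
# 4 [0, 1, 3, 4] [5, 6, 7]
```

The non-critical VM loses capacity so that the safety-critical VM keeps the number of cores it started with.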

Virtualization and NPU

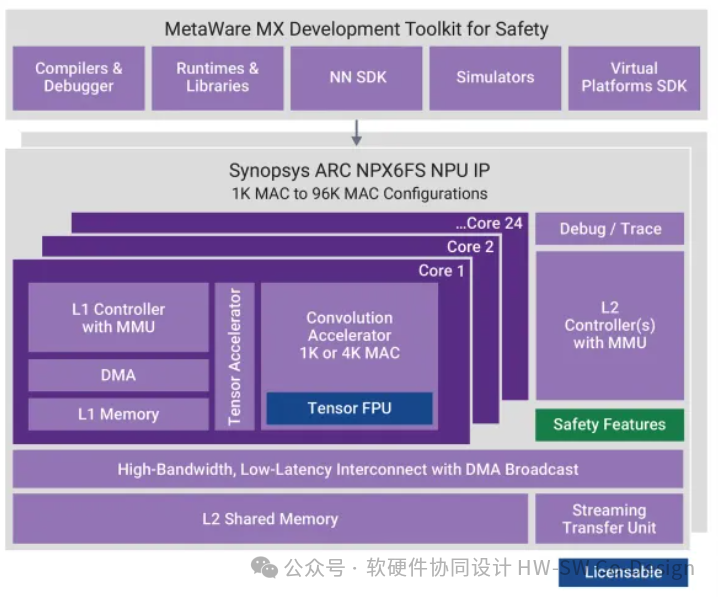

Figure 2: Synopsys NPX6 NPU IP series supports hardware and software virtualization.

The Synopsys ARC NPX6FS neural processing unit (NPU) IP (Figure 2) is an AI accelerator that host processors use to get area- and power-efficient neural network performance. The NPX6FS series scales from 1K MAC to 96K MAC and is designed for automotive safety, supporting ASIL B and ASIL D quality levels. Its built-in safety mechanisms include dual-core lockstep on the memory controllers and the interconnect control structure, error detection codes (EDC) on registers, error-correcting codes (ECC) on the interconnect and memories, and watchdog timers.

Because the NPX is an accelerator, the host CPU schedules VMs on it, but tasks within a VM that run on multiple cores can synchronize without host interaction. A local hypervisor runs on the ARC NPX NPU. To achieve spatial isolation, each VM on the ARC NPX runs in a dedicated guest physical address space. The NPX's L1 and L2 memory controllers include an MMU that protects the controllers' load/store accesses. The NPX must be integrated with an IOMMU to protect the L2 controllers' DMA accesses.
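
The idea of giving each VM its own address space can be illustrated with a simplified translation check. This is a minimal sketch, not the NPX MMU or any real IOMMU: real units typically use page tables, while the sketch assumes one contiguous window per VM. `Window`, `IsolationFault`, `translate`, and all addresses are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Window:
    host_base: int   # where the VM's memory starts in host physical space
    size: int        # number of bytes visible to the VM


class IsolationFault(Exception):
    """Raised when a VM addresses memory outside its own window."""


def translate(windows, vm, guest_addr):
    # Every access is checked against the window of the VM that issued it,
    # so a VM can never produce a host address inside another VM's window.
    w = windows[vm]
    if not 0 <= guest_addr < w.size:
        raise IsolationFault(f"{vm}: address {guest_addr:#x} is out of range")
    return w.host_base + guest_addr


windows = {
    "VM1": Window(host_base=0x1000_0000, size=0x0010_0000),
    "VM2": Window(host_base=0x2000_0000, size=0x0008_0000),
}

print(hex(translate(windows, "VM1", 0x40)))   # 0x10000040
```

Both VMs can use the same address `0x40` internally, yet the two accesses land in different, non-overlapping host regions.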

Performance of the NPX6FS series scales from as low as 1 TOPS to thousands of TOPS. This range matters because ADAS requirements grow from hundreds of TOPS at L2 autonomy to thousands of TOPS at L3 and above. Consider the NPX6-64KFS, a functionally safe configuration with 64K (65,536) INT MACs per cycle. That is a large pool of parallel compute for executing vision neural networks such as CNNs or transformers.
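
To relate MACs per cycle to TOPS, a common convention counts each MAC as two operations (one multiply, one add). The sketch below applies that convention. The 1 GHz clock is a hypothetical value chosen for round numbers, not a Synopsys specification, and the result is a dense peak figure that ignores utilization and sparsity.

```python
def peak_tops(macs_per_cycle: int, clock_hz: float) -> float:
    """Dense peak throughput in TOPS, counting one MAC as two operations."""
    return 2 * macs_per_cycle * clock_hz / 1e12


# 65,536 MACs per cycle at an assumed 1 GHz clock.
print(round(peak_tops(65_536, 1.0e9), 3))   # 131.072
```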

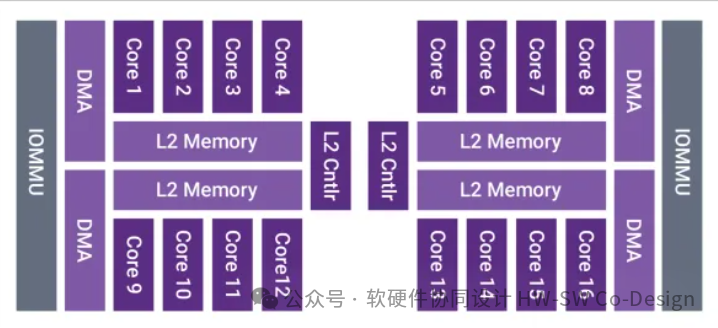

The NPX6-64K has sixteen 4K MAC cores for neural network computing (Figure 3a). Each group of four cores (16K MAC per group) is supported by a portion of L2 tightly coupled memory (SRAM). Each group of eight cores (32K MAC) is supported by one L2 controller. (Configurations smaller than 32K MAC have only one L2 controller.) An IOMMU is not part of the NPX6FS and must be integrated into the system design.

Figure 3a: Non-partitioned NPX6-64KFS example with an external IOMMU. The NPX6-64KFS has sixteen 4K MAC cores for neural network computing.
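
The grouping described for the 64K configuration can be written down as a small lookup. The core numbering (0 to 15, with consecutive cores grouped together) and the function names are assumptions made for illustration; only the group sizes come from the text.

```python
CORES = 16                     # sixteen 4K MAC cores
CORES_PER_L2_MEMORY_GROUP = 4  # four cores share a portion of L2 memory
CORES_PER_L2_CONTROLLER = 8    # eight cores share one L2 controller


def l2_memory_group(core: int) -> int:
    """Index of the L2 memory portion that serves this core."""
    return core // CORES_PER_L2_MEMORY_GROUP


def l2_controller(core: int) -> int:
    """Index of the L2 controller that serves this core."""
    return core // CORES_PER_L2_CONTROLLER


# Print core, L2 memory group, and L2 controller for a few cores.
for core in (0, 3, 4, 7, 8, 15):
    print(core, l2_memory_group(core), l2_controller(core))
```

Under this numbering there are four L2 memory groups and two L2 controllers, which is what makes the two-way and three-way partitions discussed next possible.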

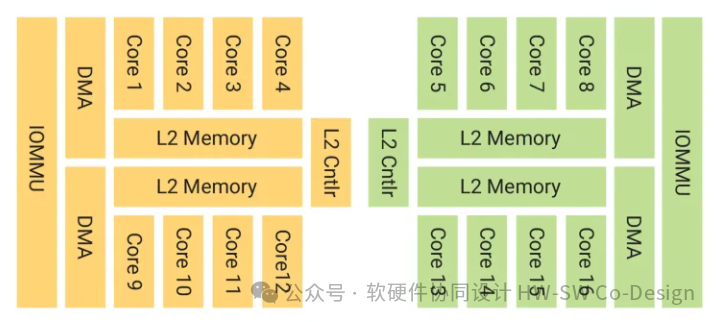

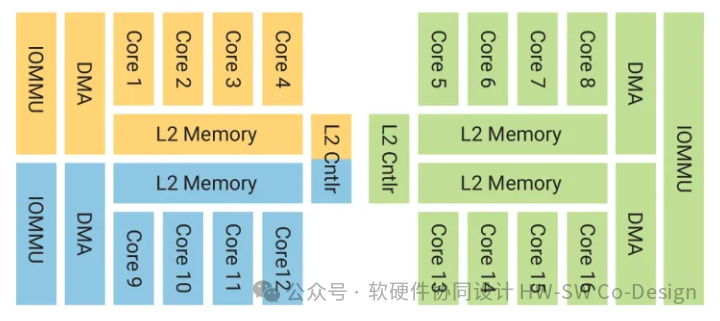

Although the NPX6-64KFS neural network processor is treated as a single engine (software development tools treat it this way as well), its resources can be easily allocated to different VMs. Figure 3b shows one possible configuration that creates two partitions from the NPX6-64KFS. In this case, each partition has eight 4K MAC cores and is controlled by one L2 memory controller. One partition can run a safety-critical VM, while the other can run a non-safety-critical VM.

Figure 3b: NPX6-64KFS partitioned for two VMs.

Figure 3c shows the physical resources of the NPX6-64KFS divided into three partitions. In this case, the two smaller partitions are managed by the same L2 controller. Partition A can run a safety-critical VM while Partition B runs a non-safety-critical VM, with both sharing the resources of one L2 controller. Partition C can run another non-safety-critical VM using the second L2 controller.

Figure 3c: NPX6-64KFS partitioned for three VMs.
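
The two partitioning schemes in Figures 3b and 3c can be expressed as core assignments and checked for consistency. This is a sketch under stated assumptions: cores are numbered 0 to 15, cores 0 to 7 sit behind L2 controller 0, and in the three-partition case Partitions A and B are assumed to hold four cores each (the text does not give their sizes). `check_partitions` is a hypothetical helper, not part of any Synopsys tool.

```python
def controllers_used(cores):
    # Assumed mapping: eight consecutive cores per L2 controller.
    return sorted({c // 8 for c in cores})


def check_partitions(partitions, total_cores=16):
    """Verify partitions are non-empty, in range, and disjoint.

    Returns the L2 controller indices each partition relies on.
    """
    seen = set()
    for name, cores in partitions.items():
        cores = set(cores)
        if not cores:
            raise ValueError(f"partition {name} is empty")
        if not cores <= set(range(total_cores)):
            raise ValueError(f"partition {name} uses a core that does not exist")
        if seen & cores:
            raise ValueError(f"partition {name} shares a core with another partition")
        seen |= cores
    return {name: controllers_used(cores) for name, cores in partitions.items()}


fig_3b = {"A": range(0, 8), "B": range(8, 16)}
fig_3c = {"A": range(0, 4), "B": range(4, 8), "C": range(8, 16)}

print(check_partitions(fig_3b))   # {'A': [0], 'B': [1]}
print(check_partitions(fig_3c))   # {'A': [0], 'B': [0], 'C': [1]}
```

In the three-partition result, A and B both map to controller 0, which matches the description of two smaller partitions sharing one L2 controller.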

Partitioning a large NPX6 by cores into separate partitions and VMs is straightforward, but even the single-core NPX6-1KFS or NPX6-4KFS can use virtualization to create multiple VMs. The local hypervisor handles the resource partitioning. Virtualization is a tool for creating flexible ASIL systems and is a crucial feature of the NPX6FS series.

For designs targeting autonomous vehicles, virtualization has rapidly become a must-have feature of embedded automotive solutions. Adding virtualization capabilities helps maximize the efficiency of the numerous DSPs and AI accelerators required for L3 and higher autonomous driving. Virtualization can also improve the system's ability to recover from faults, and it simplifies certification by confining the certification effort to the safety-critical tasks. For future ADAS designs, it will be essential to choose DSP and neural network processor IP with hardware and software virtualization capabilities.