

Data centers & AI are the third-largest application market for FPGA chips worldwide, accounting for about 15% of the market. As an emerging market, it is expected to keep growing at a high rate of about 18%. The FPGAs this market requires are relatively high-end, so domestic (Chinese) manufacturers are stepping up R&D investment to produce more advanced products and strengthen their competitiveness.

Data Centers:

Global data center workloads are rising sharply, and FPGAs can provide computation acceleration, system management, and other functions. A data center is a network of equipment that works together to transmit, accelerate, display, compute, and store data over network infrastructure. The growth in data volume, especially real-time data, has greatly increased the demand for computing and processing capability in data center servers. To cope with complex and changing application scenarios, servers and storage (the general infrastructure of a data center) rely on FPGA chips for logic control, data conversion, functional expansion, and system upgrades, where the FPGAs act as computation accelerators, power managers, and interface controllers.

In data centers, FPGA chips are used primarily for hardware acceleration, a segment with a high entry barrier. When data centers replaced traditional CPU solutions with FPGAs, they achieved significant speedups on their custom algorithms. As a result, since 2016, FPGA accelerators have been deployed in the servers of Microsoft Azure, Amazon AWS, and Alibaba Cloud. With cloud computing in widespread use, data centers' demand for chip performance will keep rising, more data centers will adopt FPGA solutions, and FPGAs will account for a larger share of the value of data center chips.

Microsoft Azure’s server architecture using FPGA acceleration


Artificial Intelligence:

Deep learning based on artificial neural networks is the main direction of current AI research. Deep learning algorithms are data-centric and involve a very large number of computational operations, so they typically require hardware acceleration. With their flexibility, relatively low development barriers, and short development cycles, FPGAs have become an excellent choice of hardware accelerator.

Hardware for AI algorithms is divided into cloud-side and edge-side processing, and cloud-side processing is further divided into training and inference. Training consists mostly of single-instruction, multiple-data (SIMD) computation, which maps well onto GPU architectures. Inference typically involves a single data stream handled by multiple instructions, and it requires accelerators with low power consumption, high performance, and low latency. Because FPGAs deliver a higher energy efficiency ratio (performance per watt) than GPUs, they are better suited to inference.

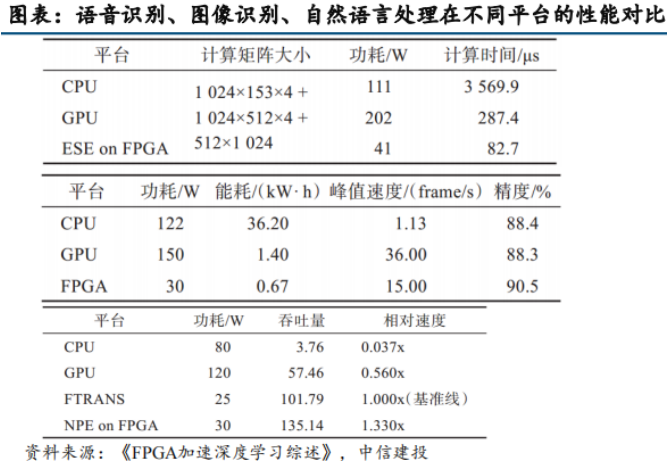

According to experimental data reported in published papers, across three specific AI fields, FPGAs show stronger acceleration in speech recognition and natural language processing, where they outperform both CPUs and GPUs in power consumption and speed. In image recognition, by contrast, FPGAs do not reach the peak speed of GPUs and only slightly surpass them in recognition accuracy.

AI algorithms have two characteristics: models iterate quickly, and both the volume of data they process and their complexity keep increasing. The demand for hardware acceleration therefore keeps growing. Being field-programmable, FPGAs are well suited to the rapid iteration of AI algorithms. Moreover, the acceleration solutions offered by GPUs require the algorithm to adapt to the hardware: AI algorithms often have to be adjusted to fit the GPU's hardware structure and execution model. FPGAs, by contrast, combine software and hardware design so that the hardware structure can be adapted to the algorithm, preserving as much of the algorithm's inherent character as possible. In addition, FPGA development environments have become much richer in recent years, and developers can now use high-level languages such as C, C++, or OpenCL. This significantly lowers the difficulty of FPGA development, shortens the development cycle, and makes FPGAs more convenient to use.

In summary:

The main application scenarios of FPGAs in the data center & AI fields, and the reasons behind them, are as follows:

Highly parallel, low-latency processing (computation acceleration, storage control, edge AI);

Highly flexible solutions (on-board function expansion & bridging).

Additionally, the growth drivers are:

Data center speed upgrades → greater demand for high-bandwidth processing → higher FPGA value;

Expansion of edge AI scenarios → greater demand for low-latency inference → higher FPGA penetration and value.

Risk Warning: This content represents only the analysis, speculation, and judgment of the Breaking Research team, and is published here solely to convey information, not as a basis for specific investment decisions. Investment carries risks; please proceed with caution!

Copyright Statement: This content is copyrighted by the original party or author. If reproducing it, please cite the source and author, retain the original title, keep the article content intact, and bear legal responsibility for copyright and related matters.
