
Edge Intelligence in Vehicle Networks: Concepts, Architecture, Issues, Implementation, and Prospects

Jiang Kai1, Cao Yue1, 2, Zhou Huan3, Ren Xuefeng2, Zhu Yongdong4, Lin Hai1

1. Wuhan University, Wuhan, Hubei 430072;

2. Huali Zhixing (Wuhan) Technology Co., Ltd., Wuhan, Hubei 430056;

3. Three Gorges University, Yichang, Hubei 443002;

4. Zhijiang Laboratory, Hangzhou, Zhejiang 310100

Abstract:

As an emerging interdisciplinary field, edge intelligence pushes artificial intelligence closer to traffic data sources, utilizing edge computing power, storage resources, and perception capabilities to provide real-time responses, intelligent decision-making, and network autonomy. This enables a more intelligent and efficient resource allocation and processing mechanism, facilitating the transition of vehicle networks from an access “pipeline” to an intelligent information-enabling platform. However, the implementation of edge intelligence in vehicle networks is still in its infancy, so a comprehensive review of this emerging field from a broader perspective is needed. This paper introduces the background, concepts, and key technologies of edge intelligence, focusing on vehicle network application scenarios. It gives an overview of service types based on edge intelligence and details the deployment and implementation of edge intelligence models. Finally, it analyzes the key open challenges of edge intelligence in vehicle networks, discusses strategies to address them, and outlines potential research directions.

Keywords: Artificial Intelligence; Vehicle Networks; Edge Intelligence

Citation format:

Jiang K, Cao Y, Zhou H, et al. Edge intelligence empowered internet of vehicles: concept, framework, issues, implementation, and prospect[J]. Chinese Journal on Internet of Things, 2023, 7(1): 37-48.


0 Introduction

Under the dual influence of technological development and business demands, transportation systems are gradually evolving into data-driven intelligent systems. The automotive industry is gaining new vitality: emerging vehicle network technologies enable vehicles to provide reliable in-vehicle multimedia services, adaptive cruise control, intelligent traffic information management, and other informatization capabilities, promoting the development of intelligent transportation and effectively enhancing passenger safety and travel comfort.

With the vigorous development of informatization, in-vehicle applications place higher demands on processing capability and quality of service (QoS), and they inevitably consume more resources and energy than traditional mobile applications. However, vehicles themselves have limited processing capability, and cloud computing platforms depend on long-distance backhaul, so a cloud computing architecture suffers from high latency and low reliability and struggles to meet the QoS guarantees required by real-time in-vehicle applications. Additionally, the linear growth of cloud computing capability cannot keep pace with the rapid growth of edge data in vehicle networks.

Therefore, core functions of cloud computing should be deployed closer to data sources, with the network edge treated as an emerging technical architecture. Edge computing has emerged as the core computational support for vehicle-road interconnection applications. Specifically, edge computing can be understood as a complement to and extension of the cloud computing model: it does not rely entirely on cloud capabilities but promotes the collaborative unity of cloud and edge capabilities. This technology deploys edge servers at roadside units (RSUs), extending network functions such as computing, communication, storage, control, and management from the centralized cloud to the network edge, and it leverages the physical proximity of the network edge to vehicles to alleviate the latency and unreliability caused by transmission distances. From a technical perspective, edge computing can migrate computing tasks to RSUs, providing computational resource support for real-time in-vehicle applications. Moreover, because of its distributed structure and small-scale nature, deploying edge computing functions in vehicle networks brings additional technical advantages, including agile connectivity, privacy protection, scalability, and context awareness.

Furthermore, given the relatively limited processing capabilities of edge servers and the occasional imbalance between supply and demand, on-demand service optimization is often a key factor in achieving vehicle service gains. The core of the optimization is the efficient allocation of computing, storage, and network resources in the edge environment: orchestrating and scheduling limited network resources across the user demand side and the resource supply side to achieve dynamic deployment of computing tasks. It is worth emphasizing that service optimization must consider scalability, flexibility, and efficiency to adapt to development trends and diverse application demands. Traditional service optimization problems are typically NP-hard, and the high dynamism and demand uncertainty of vehicle networks place additional requirements on adaptive resource scheduling, so model-based service optimization methods are often unsuitable. Therefore, there is an urgent need to design edge service optimization methods that do not rely on generalized models.

Meanwhile, after two troughs and three upswings, the development of artificial intelligence (AI) has advanced rapidly over the past decade. Thanks to hardware upgrades and the generalization of neural networks, AI, with its advantages in data analysis and insight extraction, supports technological innovation in dynamic environments and raises the cognitive and intelligent levels of networks. Pushing AI closer to traffic data sources at the edge of vehicle networks has given rise to a new learning paradigm known as edge intelligence.

Edge intelligence is widely regarded as the practical deployment of intelligent edge computing, with the key being to leverage the advantages of both edge computing and AI. Edge intelligence sinks cloud processing capabilities to the edge of the access network and introduces AI technology at the network edge close to vehicles. By integrating the computing power, communication, storage resources, and perception capabilities of wireless edge networks, it provides real-time responses, intelligent decision-making, and network autonomy while enabling a more intelligent and efficient resource allocation and processing mechanism, ultimately achieving the transition of vehicle networks from an access “pipeline” to an intelligent information-enabling platform.

However, despite the growing attention edge intelligence has received in recent years, its implementation in vehicle networks is still in its infancy, so a comprehensive review of this emerging field from a broader perspective is needed. Therefore, this paper introduces the background, concepts, and key technologies of edge intelligence, focusing on vehicle network application scenarios. It gives an overview of service types based on edge intelligence and details the deployment and implementation of edge intelligence models. Finally, it analyzes the key open challenges of edge intelligence in vehicle networks, discusses strategies to address them, and outlines potential research directions.

1 Basic Concepts and Key Enabling Technologies

1.1 Basic Concept of Edge Intelligence

Edge intelligence arises from combining the advantages of edge computing and AI technology, and in recent years operators have regarded it as a powerful means of breaking free from the “pipeline” nature of 5G networks. The emergence of edge intelligence is significant for the efficiency, service response, scheduling optimization, and privacy protection of vehicle network systems. Essentially, introducing AI technology at the network edge allows RSUs to perform localized model training and inference, thereby avoiding frequent communication with remote cloud servers. Edge intelligence emphasizes bringing computational decisions closer to data sources while pushing intelligent services from the cloud to the edge, reducing service delivery distances and latency and enhancing the service experience for vehicle access. Meanwhile, AI models extract features from the actual edge environment of vehicle networks and provide high-quality edge computing services through repeated interaction with the environment. Over the past decade, deep learning and reinforcement learning have gradually become the mainstream AI technologies in edge intelligence. Deep learning can automatically extract features and detect edge anomalies from data, while reinforcement learning can achieve goals through Markov decision processes and appropriate policy-gradient strategies, playing an increasingly important role in real-time decision-making in networks.
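To make the reinforcement learning loop over a Markov decision process more concrete, the following is a minimal sketch of one learning step. It uses tabular Q-learning, a value-based method chosen here for brevity rather than the gradient-based strategies mentioned above. The two RSU states, the two actions, the reward value, and the names `q_update` and `choose_action` are illustrative assumptions, not part of the surveyed work.

```python
import random

def q_update(q, state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """One tabular Q-learning step: move Q(s, a) toward the bootstrapped target."""
    best_next = max(q[next_state].values())
    target = reward + gamma * best_next
    q[state][action] += alpha * (target - q[state][action])
    return q[state][action]

def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy selection: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.choice(sorted(q[state]))
    return max(q[state], key=q[state].get)

# Hypothetical toy problem: an RSU is either "idle" or "busy",
# and a vehicle chooses to run a task locally or offload it.
STATES = ("idle", "busy")
ACTIONS = ("local", "offload")
q_table = {s: {a: 0.0 for a in ACTIONS} for s in STATES}

# Assumed transition: offloading to an idle RSU earns reward 1.0 and leaves it busy.
new_value = q_update(q_table, "idle", "offload", reward=1.0, next_state="busy")

# With epsilon = 0 the agent always exploits the current estimates.
greedy = choose_action(q_table, "idle", epsilon=0.0, rng=random.Random(0))
```

In a real deployment the table would typically be replaced by a neural network, since the state space of a vehicle network is far too large to enumerate.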

1.2 Key Enabling Technologies of Edge Intelligence

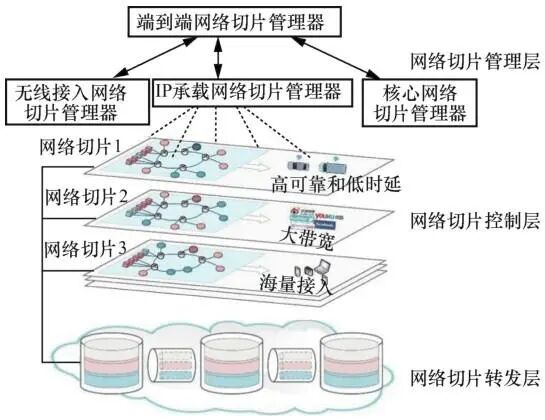

1.2.1 Network SlicingNetwork slicing is an important technology to address the “one-size-fits-all” network model by virtually dividing the network into multiple segments, allowing the creation of multiple logically independent self-contained network instances (i.e., network slices) on a single shared physical infrastructure. As a virtualized end-to-end logical network, any slice can be used to meet specific business types, thereby achieving differentiated service requirements for vehicles through customized network functions, hierarchical abstraction, and different categories of isolation (resource isolation, business isolation, operation and maintenance isolation). An example of the architecture and functions of network slicing is shown in Figure 1. Figure 1 Architecture and Function Example of Network SlicingTypically, to customize the heterogeneous resource combinations for in-vehicle services, a network resource allocation framework needs to be constructed for vehicle-to-infrastructure (V2I) access control. Deploying edge network slices with differentiated performance requirements at appropriate locations can improve the overall resource utilization of vehicle networks. Additionally, the inherent dynamism and openness of vehicle networks make network slicing indispensable for ultra-reliable low-latency communication (URLLC) and can support flexible dedicated resource allocation and performance guarantee strategies across multiple wireless access networks based on differentiated service level agreement requirements. Enabling network slicing in vehicle networks can not only reduce latency for in-vehicle applications but also support traffic prioritization for in-vehicle services.1.2.2 Software Defined NetworkingSoftware Defined Networking (SDN) is an infrastructure approach that abstracts network resources into a virtualized system, fundamentally simplifying network management and operation through network software. 
SDN can build a virtual logical network layer on existing physical networks, decoupling control layer functions from the data layer and migrating them to the virtual network layer, ultimately being uniformly processed by a logically centralized and programmable controller.In recent years, the combination of SDN and edge computing has made the logical centralized control of vehicle networks more reliable, simplifying data forwarding functions through flexibility, scalability, and programmability. Related optimizations involve service guarantees, custom development, and the expansion and contraction of network resources. Specifically, given the need to deploy more RSUs in vehicle networks to cope with the overall cost surge caused by the increase in vehicle ownership, SDN serves as a key driving force, providing potential solutions for global network configuration, cost-effective adaptive resource allocation, and the aggregation of heterogeneous elements in vehicle networks. The heterogeneous SDN/NFV architecture in vehicle networks is shown in Figure 2, where the SDN architecture is divided into data layer, control layer, and application layer from bottom to top. Generally, the centralized controller is deployed in the RSU edge server, collecting global network information, including traffic load, vehicle density, service types, node resource capacity, etc. Utilizing the collected information, the centralized controller can deploy adaptive routing protocols for business flows while configuring network-level resource slicing and access control through southbound interfaces to improve resource utilization and reduce overall operational costs. However, since the controller relies on a global control view, how to apply signaling-free SDN under highly dynamic network topology conditions is worth exploring.
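As a rough illustration of how a centralized controller might turn differentiated service level requirements into per-slice bandwidth grants, the sketch below allocates a shared link capacity in strict priority order. The `SliceRequest` type, the priority convention (lower number served first), the slice names, and all numbers are hypothetical; real controllers apply far richer policies and act through southbound interfaces that are not modeled here.

```python
from dataclasses import dataclass

@dataclass
class SliceRequest:
    name: str           # slice label, e.g. "URLLC" for safety messages
    priority: int       # assumed convention: lower number is served first
    demand_mbps: float  # bandwidth requested under the slice's SLA

def allocate_slices(capacity_mbps, requests):
    """Grant bandwidth to slices in priority order until capacity runs out."""
    grants = {}
    remaining = capacity_mbps
    for req in sorted(requests, key=lambda r: r.priority):
        granted = min(req.demand_mbps, remaining)
        grants[req.name] = granted
        remaining -= granted
    return grants

# Hypothetical demand on one RSU with 100 Mbps of shared capacity.
requests = [
    SliceRequest("infotainment", priority=3, demand_mbps=60.0),
    SliceRequest("URLLC", priority=1, demand_mbps=20.0),
    SliceRequest("map-update", priority=2, demand_mbps=40.0),
]
grants = allocate_slices(100.0, requests)
```

Here the two higher-priority slices receive their full demand and the infotainment slice receives only the 40 Mbps that remain, which is one simple way to express traffic prioritization.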

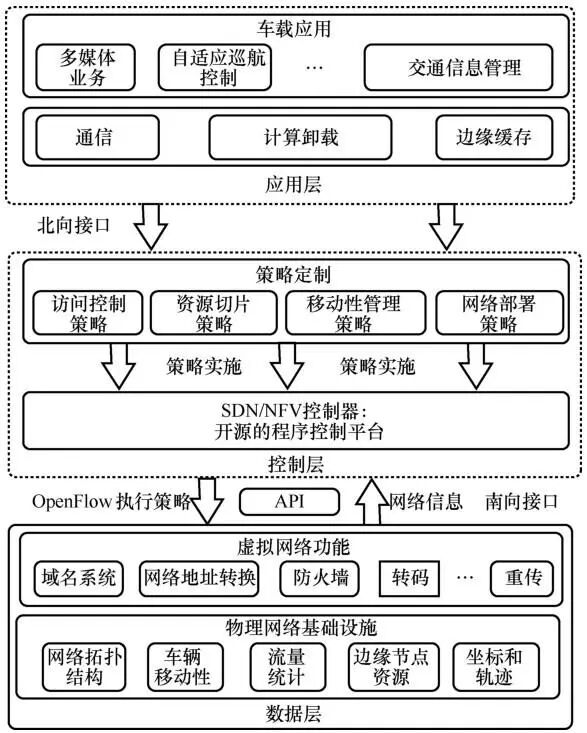

Figure 2 Heterogeneous SDN/NFV Architecture in Vehicle Networks

1.2.3 Network Function Virtualization

Network Function Virtualization (NFV) complements SDN and is primarily used to decouple network functions such as load balancing, domain name system, network address translation, and video transcoding from the underlying hardware servers. Its purpose is to implement network functions as software instances through virtualization technology, providing a runnable infrastructure for SDN software.

Generally, NFV operates on edge servers connected to RSUs to achieve computation-oriented service customization and distribution, where the programmable software instances on edge servers are typically referred to as virtual network function units. Through NFV, edge service operators can flexibly run functions on different servers or move some functions in response to changes in vehicle service demands, thereby reducing overall operational costs and improving service delivery efficiency. SDN and NFV are technically complementary and mutually beneficial, together creating a more flexible, programmable, and resource-efficient network architecture. As shown in Figure 2, in the context of vehicle networks, the integrated SDN and NFV architecture can achieve flexible traffic routing management, network-level resource slicing, and efficient resource allocation through channel access control executed at the vehicle end.
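The idea of running virtual network function units on whichever edge server has room can be sketched with a simple first-fit placement. This is only an illustrative heuristic under assumed inputs: the function name `place_vnfs`, the CPU-core figures, and the server names are hypothetical, and production orchestrators consider many more constraints (memory, latency, affinity).

```python
def place_vnfs(vnf_cpu, server_cpu):
    """First-fit placement: put each VNF on the first server with enough free CPU."""
    free = dict(server_cpu)  # copy so the caller's capacities are not mutated
    placement = {}
    for vnf, need in vnf_cpu.items():
        for server in free:
            if free[server] >= need:
                placement[vnf] = server
                free[server] -= need
                break
        else:
            placement[vnf] = None  # no edge server can host this VNF
    return placement, free

# Hypothetical CPU-core demands for the network functions named in the text.
vnf_cpu = {"load_balancer": 2, "dns": 1, "nat": 1, "transcoder": 4}
server_cpu = {"rsu_a": 4, "rsu_b": 4}
placement, free = place_vnfs(vnf_cpu, server_cpu)
```

Re-running the placement with updated demands is a crude stand-in for moving functions between servers as vehicle service demand changes.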


2 Service Types Based on Edge Intelligence

To build a robust edge intelligence application system, computing, caching, and cloud-edge-end collaborative services are widely applied in vehicle networks. This section gives an overview of these three research issues and their related work.

2.1 Computing Offloading and Resource Allocation

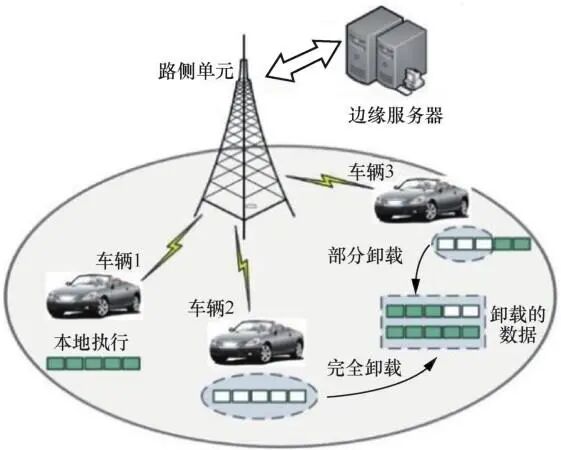

Computing offloading is one of the widely discussed features of edge intelligence in vehicle networks. Resource-constrained vehicles can offload compute-intensive or latency-sensitive workloads directly to nearby RSU edge servers through in-vehicle wireless communication technology, utilizing the computing resources of edge servers to assist in computation. This model balances the limitations of cloud computing technology while meeting the computational expansion needs of vehicles, effectively improving the QoS of vehicle network applications.In multi-user service scenarios, service optimization based on computing offloading needs to comprehensively consider various constraints such as task data volume, task timeliness, channel transmission, and edge server computing capabilities. Additionally, the main factors influencing offloading decisions include task execution latency and energy consumption, so the optimization objectives of computing offloading mainly fall into three aspects: reducing latency, minimizing energy consumption, and balancing latency and energy consumption. Literature has reviewed the relationship between low-latency, high-efficiency computing demands of vehicles and computing offloading, elucidating the development potential of computing offloading in vehicle networks. Mao et al. studied the joint optimization of power control, processor frequency, and computing offloading under a discrete model in edge systems based on non-orthogonal multiple access, solving the planned non-convex problem using block coordinate descent methods. Zhang et al. proposed a multi-layer vehicle edge network framework based on cloud computing, designing a hierarchical offloading scheme aimed at minimizing in-vehicle application latency using Stackelberg game theory. 
Furthermore, literature has proposed an adaptive computing offloading framework based on the asynchronous advantage actor-critic algorithm to achieve joint optimization of computing task energy consumption and latency.Moreover, depending on whether a single task can be divided, two types of offloading models can be deployed on the edge side, as shown in Figure 3. One type is called complete offloading, where the entire process or task is offloaded to the edge server; the other type is called partial offloading, where the task or process is divided into multiple fine-grained subtasks and offloaded to different edge servers for distributed processing. Based on partial offloading, Ning et al. considered resource competition among mobile users and proposed an iterative heuristic method for adaptive allocation of collaborative offloading decisions in the system. Wang et al. studied the application and deployment of partial offloading in vehicle networks and utilized deep reinforcement learning algorithms to investigate the benefit optimization problem of multi-vehicle computing offloading under QoS constraints. Meanwhile, with the development of wireless access technologies, richer computing resources can be fully utilized through vehicle-to-vehicle (V2V) communication technology. In this case, nearby vehicles with idle resources can be scheduled on demand to assist edge servers in serving designated vehicles executing computing offloading. Zhou et al. incentivized edge nodes to participate in V2V offloading through a reverse auction mechanism, modeling the incentive-driven V2V offloading process as an integer nonlinear programming problem to reduce costs for edge service providers. Therefore, research on computing offloading mainly focuses on optimal offloading decisions, requiring a global consideration of various factors such as whether tasks need to be offloaded, where to offload, and how much to offload. 
Figure 3 Two Types of Offloading Modes under V2I Computing Offloading ArchitectureFinally, a comprehensive resource allocation scheme during the offloading process is also a crucial factor in ensuring overall user QoS and enhancing vehicle network performance. Resource allocation refers to optimizing system configuration by allocating available resources in servers, improving resource utilization while reducing task processing latency or energy consumption, and providing a better user experience. During the resource configuration process, the system can integrate various resources on the vehicle network edge based on dynamic conditions, service types, maximum tolerable task latency, vehicle density, service heterogeneity, and priority, implementing dynamic migration as vehicle network services change. In edge intelligence-driven vehicle networks, predefined resource allocation strategies must possess adaptability and scalability, and the allocation of computing and communication resources should be organically coordinated to cope with the high dynamism of each edge node in space and time. However, previous studies have rarely considered the hidden dynamic characteristics of vehicle networks, so designing adaptive resource allocation schemes during the offloading process is also a key research focus. To this end, Qian et al. proposed a deep reinforcement learning online algorithm for joint optimization of multi-task computing offloading, non-orthogonal multiple access transmission, and resource allocation under time-varying channel conditions. Yan et al. proposed a low-complexity algorithm based on a critic network for the combinatorial offloading and strong coupling problem of tasks relying on models. Tan et al. considered vehicle mobility at different time scales and proposed an online computing offloading and resource allocation framework based on deep Q-networks.
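The trade-off between latency and energy that drives an offloading decision can be sketched with a commonly used simplified cost model. Everything below is an assumption for illustration: local energy is modeled as `kappa * f^2 * cycles`, offloading energy as transmit power times upload time, result download is ignored, and the `Task` fields, parameter names, and numbers are hypothetical rather than taken from any of the cited schemes.

```python
from dataclasses import dataclass

@dataclass
class Task:
    data_bits: float   # input size that must be uploaded when offloading
    cpu_cycles: float  # CPU cycles needed to finish the task

def local_latency_energy(task, f_local_hz, kappa):
    """Latency and energy when the vehicle executes the task itself."""
    latency = task.cpu_cycles / f_local_hz
    energy = kappa * f_local_hz ** 2 * task.cpu_cycles
    return latency, energy

def offload_latency_energy(task, rate_bps, f_edge_hz, tx_power_w):
    """Latency and vehicle-side energy when the task runs on an RSU edge server."""
    tx_time = task.data_bits / rate_bps
    latency = tx_time + task.cpu_cycles / f_edge_hz
    energy = tx_power_w * tx_time  # only the upload costs the vehicle energy
    return latency, energy

def weighted_cost(latency, energy, w_latency):
    """Weighted sum used to balance latency against energy consumption."""
    return w_latency * latency + (1.0 - w_latency) * energy

def decide(task, f_local_hz, kappa, rate_bps, f_edge_hz, tx_power_w, w_latency=0.5):
    """Pick whichever option has the lower weighted cost."""
    cost_local = weighted_cost(*local_latency_energy(task, f_local_hz, kappa), w_latency)
    cost_offload = weighted_cost(
        *offload_latency_energy(task, rate_bps, f_edge_hz, tx_power_w), w_latency
    )
    choice = "offload" if cost_offload < cost_local else "local"
    return choice, cost_local, cost_offload

# Hypothetical task: 1 MB of input and 1e9 CPU cycles.
task = Task(data_bits=8e6, cpu_cycles=1e9)
choice, cost_local, cost_offload = decide(
    task, f_local_hz=1e9, kappa=1e-27, rate_bps=2e7, f_edge_hz=1e10, tx_power_w=0.5
)
```

Setting `w_latency` to 1 or 0 recovers the pure latency-minimizing and pure energy-minimizing objectives, and a partial-offloading variant would apply the same cost to a fraction of the task on each side.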


2.2 Edge Caching

In-vehicle applications require vehicles to access large amounts of internet data (such as real-time traffic information downloads and navigation map updates), leading to significant redundant traffic loads and long access latencies in vehicle networks. However, the centralized cloud computing architecture suffers from long transmission distances and limited channel bandwidth, making it difficult to support large-scale content delivery for in-vehicle applications while meeting demanding network QoS requirements. Research indicates that content with different popularity levels typically needs to be assigned corresponding priorities. Only a small set of popular content is requested repeatedly by most vehicles, while the majority of content has relatively low access demand.

Edge caching can effectively alleviate the redundant backhaul traffic caused by repeated content transmission within vehicle networks, reducing content access latency and cost while improving content delivery reliability. A distributed edge caching architecture is shown in Figure 4, where edge caching uses low-cost caching units (such as RSUs) to store popular content close to vehicles. Vehicles can therefore access popular content directly from nearby RSUs that provide caching services, without repeatedly downloading it from remote cloud servers. Additionally, adjacent RSUs can typically communicate with each other and share content, so collaboration between edge nodes must also be considered to improve the overall cache utilization of the system. Meanwhile, V2V communication enables the storage units of vehicles to share content opportunistically through interactions with other vehicles. In this regard, Alnagar et al. considered the impact of vehicle speed and content popularity on caching decisions, developing a caching strategy based on suboptimal relaxation and the knapsack problem to improve the cache hit rate of edge nodes. Ao et al. studied the interdependence between distributed caching and collaborative multipoint technology, investigating physical-layer resource allocation and caching strategies. For the hybrid V2V and V2I communication mode, Wu et al. considered the geographical distribution of vehicles and RSUs and the size of the transmitted content, proposing an energy-aware content caching scheme.

Figure 4 Distributed Edge Caching Architecture
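The observation that a small set of popular content attracts most requests can be illustrated numerically. The sketch below assumes that request popularity follows a Zipf distribution, which is a common modeling assumption and not something stated in the text, and computes the hit ratio an RSU would see if it cached only the most popular items. The function names, catalog size, and exponent are hypothetical.

```python
def zipf_popularity(num_items, exponent=1.0):
    """Request probability of each item, ranked from most to least popular."""
    weights = [1.0 / (rank ** exponent) for rank in range(1, num_items + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def top_k_hit_ratio(popularity, cache_size):
    """Fraction of requests served locally when the top-k items are cached."""
    return sum(sorted(popularity, reverse=True)[:cache_size])

# Hypothetical catalog of 1000 items; the RSU can hold 100 of them.
popularity = zipf_popularity(1000, exponent=1.0)
hit_ratio = top_k_hit_ratio(popularity, cache_size=100)
```

Under these assumptions, caching a tenth of the catalog serves roughly 69% of requests, which is why popularity-aware placement is attractive for capacity-limited RSUs.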

Currently, research on edge caching focuses on content delivery and caching replacement. Content delivery refers to an RSU making delivery decisions for content requests within its communication range based on the timeliness of the content and its own caching status. In the content delivery phase, the RSU first searches its cache space for the requested content; if the content is not found, the RSU obtains it from adjacent RSUs or downloads it directly from a remote cloud service center, and finally delivers it to the requesting vehicle. Caching replacement refers to the adaptive replacement of cached content by cooperating RSUs based on content popularity. In the caching replacement phase, RSUs can update their cached content based on vehicle requests to raise their cache hit rate and effectiveness while minimizing content delivery costs in the system. Therefore, the local content replacement mechanism that an RSU applies when its cache capacity is fully occupied is also an important and urgent research topic in edge caching.

To address the lack of adaptability of traditional methods in the dynamic environment of vehicle networks, AI-based methods have been applied to enhance the intelligence of edge caching. Tan et al. applied deep Q-networks to vehicle-to-vehicle caching and coding, optimizing caching decisions under vehicle mobility at different time scales. Qian et al. used long short-term memory networks to predict content popularity and proposed a deep learning-based content replacement strategy to improve cache hit rates. Wang et al. extended the Markov decision process in reinforcement learning to multi-agent systems, proposing a collaborative edge caching framework based on multi-agent reinforcement learning. Additionally, to keep the training of vehicle data local, Wang et al. proposed a collaborative edge caching framework based on federated deep reinforcement learning to effectively reduce the resource consumption of centralized methods during model training and data transmission.
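The delivery and replacement phases described above can be sketched as a small class. This is a minimal illustration under stated assumptions: popularity is approximated by a local request counter, eviction removes the least-requested cached item only when the newcomer has been requested more often, and the class name `RsuCache`, its methods, and the content identifiers are all hypothetical.

```python
from collections import Counter

class RsuCache:
    """Toy RSU cache: local lookup, then neighbor RSUs, then the remote cloud."""

    def __init__(self, capacity, neighbors=None):
        self.capacity = capacity
        self.store = set()         # content identifiers currently cached
        self.requests = Counter()  # per-content request counts (popularity proxy)
        self.neighbors = neighbors or []

    def deliver(self, content_id):
        """Serve a request and report the source: 'local', 'neighbor', or 'cloud'."""
        self.requests[content_id] += 1
        if content_id in self.store:
            return "local"
        in_neighbor = any(content_id in n.store for n in self.neighbors)
        source = "neighbor" if in_neighbor else "cloud"
        self._admit(content_id)
        return source

    def _admit(self, content_id):
        """Cache the content, replacing the least-requested item if that helps."""
        if self.capacity <= 0:
            return
        if len(self.store) >= self.capacity:
            victim = min(self.store, key=lambda c: (self.requests[c], c))
            if self.requests[victim] >= self.requests[content_id]:
                return  # newcomer is not more popular than anything cached
            self.store.remove(victim)
        self.store.add(content_id)

# Hypothetical scenario: a neighbor RSU already holds the map update.
neighbor = RsuCache(capacity=2)
neighbor.store.add("map")
rsu = RsuCache(capacity=1, neighbors=[neighbor])
sources = [rsu.deliver(c) for c in ["map", "map", "news", "news", "news"]]
```

In this run the map is fetched from the neighbor once and then served locally, while the news item keeps coming from the cloud until its request count exceeds that of the cached map and it takes over the single cache slot.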

2.3 Cloud-Edge-End Collaboration

As business operations progress into the collaborative phase, the scope of edge intelligence is no longer limited to a single level. Although many existing studies have integrated “cloud-edge” collaboration into the design of edge intelligence paradigms, in-depth research on macro-level collaborative perception mechanisms and the coordinated control of perceived situations is still lacking, and such designs face challenges from heterogeneous resources, hierarchical management, and business collaboration.

2.3.1 Cloud-Edge Collaboration

In recent years, “cloud-edge” collaboration has developed into a relatively mature collaborative model, attracting widespread attention from academia and industry. In this model, the edge end is responsible for local data computation and caching, or further aggregating collected data to the cloud for processing, effectively supporting local, short-cycle intelligent decision-making and execution. In contrast, the cloud end is responsible for data analysis and mining, model training and upgrading, utilizing its powerful computing capabilities to establish a management platform for long-term, large-scale intelligent processing and resource scheduling, supporting intelligent vehicles and diversified data services. This collaborative model effectively promotes the full utilization of resources among cloud, edge, and end, providing seamless and smooth network services for cross-regional and cross-network real-time traffic optimization, dynamic traffic control, and driving assistance support. Taleb et al. introduced the reference architecture and main application scenarios of “cloud-edge” collaboration. Tang et al. proposed a task offloading and resource allocation framework based on “cloud-edge” collaboration, considering uncertain factors in dynamic networks. Song et al. designed a resource scheduling scheme for 5G front-end network slicing based on a multi-layer “cloud-edge” collaborative architecture, enhancing resource utilization in service distribution.

2.3.2 Edge-Edge Collaboration

A single edge node may face limitations in computing power, storage, and communication resources when handling large-scale applications, while other nodes may have a certain amount of idle resources due to differences in service spatial and temporal distribution. To improve the overall resource utilization of the system, there is an urgent need to establish extensive interconnections among clustered edge nodes for collaborative perception and result sharing to break down information “silos.” Therefore, in the “edge-edge” collaboration model, it is necessary to fully utilize the collaboration among edge nodes to jointly ensure the optimization of computation. It is important to note that when processing large-scale applications in the “edge-edge” collaboration model, there are usually dynamic changes in resource demands, heterogeneous system conditions, and access to edge nodes, while the resource capacity of individual edge nodes also exhibits time-varying characteristics. Therefore, effective collaborative scheduling strategies need to be designed to support the controllable and orderly flow of data among edges, completing the autonomous learning loop. Jiang et al. designed a cooperative caching scheme based on “edge-edge” collaboration using multi-agent reinforcement learning within a framework of stochastic games. Zhang Xingzhou et al. proposed an electric vehicle battery fault detection system based on “edge-edge” collaboration, leveraging federated learning algorithms and long short-term memory networks.

2.3.3 Edge-End Collaboration

In “edge-end” collaboration, the “end” specifically refers to terminal devices, typically consisting of a series of IoT devices and vehicles on the road. “Edge-end” collaboration is a lightweight model that can effectively enhance the processing capabilities of edge nodes, alleviating the increasing conflict between request diversity and the singular processing capabilities of edge devices. Meanwhile, the high relevance between terminal devices and specific application scenarios makes “edge-end” collaboration focus more on the efficient scheduling and secure access of terminal devices. In “edge-end” collaboration, terminal devices collect real-time perception data and offload it to edge servers. Edge servers perform centralized computation and analysis on multi-source data and provide reliable services by sending operation identifiers to terminal devices. In summary, based on the close relationship between terminal devices and users, “edge-end” collaboration is considered a necessary step for implementing edge intelligence applications. Khan et al. discussed the application scenarios of “edge-end” collaboration in vehicle networks and the security and privacy issues it faces. Zhou et al. studied a computing offloading system based on “edge-end” collaboration, aiming to minimize the computing overhead of all users under specific constraints.

The limitations of RSU performance and collaboration capabilities in a distributed architecture make it challenging for “edge-edge” and “edge-end” collaboration methods to globally allocate tasks for all vehicles. Related research has begun to explore the effective combination of “cloud-edge-end,” primarily focusing on system architectures for information services, computational power migration oriented towards “cloud-edge,” traffic flow state estimation, system-level access technologies, and service migration continuity mechanisms. However, most studies still lack the integrated concept of “cloud-edge-end” that supports the fusion of perception, decision-making, and control in vehicle-connected scenarios, making it difficult to meet diverse business needs. Therefore, Li Keqiang et al. introduced a “cloud-edge” collaborative system architecture based on cyber-physical systems, developing a cloud control system for “cloud-edge-end” fusion perception and vehicle control technologies. This system integrates the physical system layer, information mapping layer, and fusion application layer through “cloud-edge-end” fusion perception, providing a real-time operating environment, digital mapping of all traffic elements, standardized communication mechanisms, and resource platform data, thereby enabling hierarchical fusion decision-making based on business needs and achieving unified orchestration and management of vehicle task processing.

3 Deployment and Implementation of Edge Intelligence Models

Edge intelligence has emerged in the vehicle network field, with its value reflected in numerous scenarios. This section elaborates on the training and inference processes of AI models in vehicle networks.

3.1 Model Training in Edge Intelligence Vehicle Systems

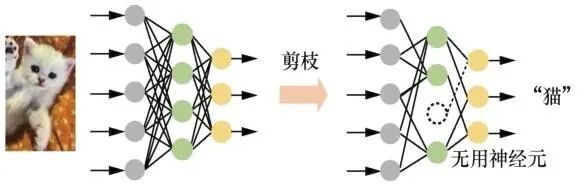

The integration of edge computing and AI in the vehicle network environment relies on efficient model training and inference in the edge-cloud continuum, which is crucial for achieving high-quality service deployment. Training modes can be divided into centralized training, decentralized training, and hybrid training.

3.1.1 Centralized Training Mode

In the centralized training mode, the trained model is deployed on a cloud computing platform, while data preprocessing, model training, message brokering, and other tasks are primarily executed by the cloud computing platform. Specifically, model training is mainly achieved through cloud-edge collaboration, and its performance largely depends on the quality of network connections. During the training phase, RSUs are responsible for collecting road information within their coverage area (such information is generated by vehicles and involves in-vehicle services, sensors, wireless channel, and traffic flow data) and uploading it in real-time to the cloud for immediate processing. Based on data analysis and storage, the cloud continuously trains the model using aggregated data in a centralized training cluster. It is worth noting that although the centralized training mode has the potential to retrieve the global optimal solution of the system, the complexity of model training increases exponentially with the growth of network scale, as the system must rely on global network state information. Additionally, since the model deployed on the cloud computing platform is spatially distant from vehicles, data must be transmitted through wide-area networks. Therefore, data transmission and unpredictable network connection states may lead to high latency, low efficiency, and high bandwidth costs. Furthermore, the high concentration of vehicle data resources makes this mode more susceptible to network attacks, leading to the leakage and loss of sensitive traffic data.

3.1.2 Decentralized Training Mode

Compared to relying on a centralized node (the cloud) for global model updates, a decentralized training architecture based on mutual trust and communication of parameters and gradients among nodes may be more suitable for edge environments. In fact, each RSU acts as an independent node, generating its own inferences based on its perceived local state, but significant differences may exist between the decisions of different RSUs. Meanwhile, vehicle networks hope to benefit from edge intelligence deployment while protecting privacy. Therefore, to avoid related defects, models need to be trained in a highly reliable and decentralized manner at the edge. In this integrated architecture, the training of all RSUs is equivalent, fundamentally avoiding the single point of failure risk while enhancing the scalability and security of the network. However, due to the temporal correlation of data sources, the model training process independently deployed on RSUs faces overfitting issues, and the inferences among multiple RSUs in vehicle networks often influence each other. Therefore, multiple RSUs must collaboratively train models or analyze data to share local training and build and improve the global training model.

Compared to centralized training, decentralized training has advantages in privacy protection, personalized learning, and strong scalability. However, in the absence of cloud, it lacks the process of aggregating global parameters, meaning that the topology of parameter exchange is crucial for the convergence performance and training effectiveness of the model, which is significantly influenced by limited resources, dynamic environments, data distribution, and device heterogeneity. Additionally, the methods of coordinating information for model training among nodes in a decentralized environment include different levels, with varying requirements for RSU computing power, necessitating research on the deployment of training models based on multiple quantifiable indicators such as service deployment costs and deployment benefits. Furthermore, federated learning can be applied in data-sensitive fields under decentralized training. It is important to note that decentralized training focuses on parsing data at the edge, while federated learning emphasizes distributed privacy protection.

3.1.3 Hybrid Training Mode

In practice, due to energy consumption, computing power, and storage resource limitations, the scale of AI parameters independently trained and deployed by a single RSU is limited. Therefore, various training modes should consider compatibility with each other. To better leverage the advantages of multi-point coordination, hybrid training modes combining centralized and decentralized training are commonly adopted in vehicle networks, aiming to break the performance bottleneck of single training modes. In this mode, RSUs collaboratively train AI models through distributed updates among each other or centralized training on the cloud platform. Specifically, each RSU can train part of the parameters based on its local data and aggregate the parameters or gradients to a central node for global model upgrades, after which the central node distributes the global model to each RSU. After multiple rounds of communication and iteration between RSUs and the central node, the global model can achieve performance comparable to centralized training. At this point, private data is always stored locally during training, making the privacy protection of the hybrid training mode weaker than that of decentralized modes but undoubtedly stronger than that of centralized training modes.

Moreover, current efforts to reduce the complexity of model training (including sample complexity and computational complexity) are mainly conducted from both system-level and method-level perspectives. System-level solutions aim to allocate better decision-making and training methods, while method-level solutions tend to establish better communication system models or introduce prior knowledge of the environment. Additionally, to reduce the dependency of the model training process on computing resources, related research considers pruning the model without affecting accuracy, with typical methods including discarding unnecessary weights and neurons during training, sparse training, and output reconstruction error. An example of model pruning is shown in Figure 5, where many neurons in this multilayer perceptron have values of zero, meaning these neurons do not play a role in the computation process and can be pruned to reduce the computational and storage demands during training. Furthermore, regarding model pruning optimization, literature has compared partial weight decomposition and pruning methods, discussing the advantages and bottlenecks of these methods in terms of accuracy, parameter size, intermediate feature size, processing latency, and energy consumption.
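The round-based exchange in the hybrid training mode (local training at each RSU, aggregation at a central node, redistribution of the global model) resembles the widely used federated averaging pattern. The following is a minimal sketch under that assumption; the source does not name a specific algorithm, and the linear model, learning rate, function names, and sample-count weighting are illustrative choices only.

```python
def local_update(model, data, lr=0.1, epochs=5):
    """Run a few least-squares gradient steps on one RSU's private data."""
    w, b = model
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return (w, b)


def aggregate(updates, sizes):
    """Central node: average RSU models, weighted by local sample count."""
    total = sum(sizes)
    w = sum(u[0] * s for u, s in zip(updates, sizes)) / total
    b = sum(u[1] * s for u, s in zip(updates, sizes)) / total
    return (w, b)


def train(rsu_datasets, rounds=100):
    """Alternate local training and central aggregation for several rounds."""
    global_model = (0.0, 0.0)
    for _ in range(rounds):
        # Only model parameters leave each RSU; raw samples stay local.
        updates = [local_update(global_model, d) for d in rsu_datasets]
        global_model = aggregate(updates, [len(d) for d in rsu_datasets])
    return global_model
```

For example, two RSUs holding disjoint samples of the same relation `y = 2x + 1` would be expected to recover parameters close to `(2, 1)` without either one sharing its raw data.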

Figure 5 Example of Model Pruning

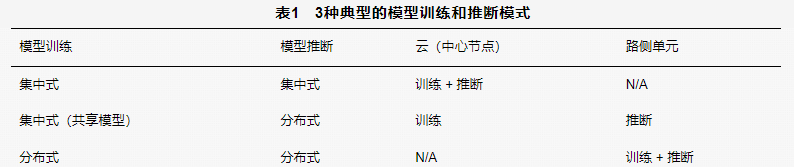

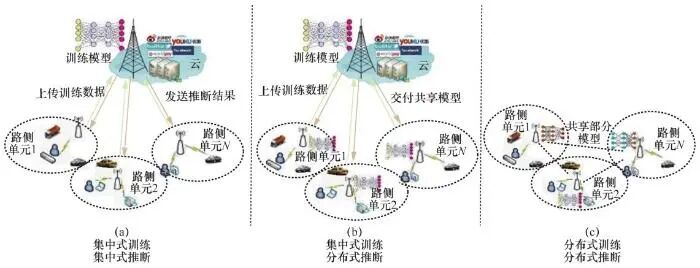
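The pruning idea illustrated in Figure 5 can be sketched for a two-layer perceptron as follows. This is an illustrative example, not the method of any cited work: the function names and the threshold are assumptions. Zeroing small weights is an approximation that may change the output slightly, whereas removing a hidden neuron whose outgoing weights are all zero leaves the output unchanged.

```python
def prune_small_weights(matrix, threshold):
    """Zero out weights whose magnitude is below the threshold."""
    return [[0.0 if abs(w) < threshold else w for w in row] for row in matrix]


def remove_dead_neurons(w1, b1, w2):
    """Drop hidden neurons whose outgoing weights are all zero.

    w1: hidden x input weights, b1: hidden biases, w2: output x hidden weights.
    """
    keep = [j for j in range(len(w1)) if any(row[j] != 0.0 for row in w2)]
    return ([w1[j] for j in keep],
            [b1[j] for j in keep],
            [[row[j] for j in keep] for row in w2])


def forward(w1, b1, w2, x):
    """Two-layer perceptron with ReLU hidden units and a linear output."""
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]
```

After `prune_small_weights` is applied to the output layer, `remove_dead_neurons` shrinks both weight matrices, so the smaller network needs less storage and fewer multiplications per forward pass.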

3.2 Model Inference in Edge Intelligence Vehicle Systems

Model inference occurs after model training, primarily predicting unknown results based on the trained model. Model inference and model training are interrelated, forming a cyclical and continuously improving process. Effective model inference is crucial for the implementation of edge intelligence in vehicle networks. According to the aforementioned model categories, inference can be executed either on the cloud or on independent RSUs. Three typical modes of model training and inference are shown in Table 1.

Specifically, typical model inference methods include centralized and decentralized. In centralized inference, the cloud needs to collect information from all RSUs (i.e., global information), and both model training and inference will be completed in the cloud, with inference results distributed to each RSU; in decentralized inference, each RSU only needs to perform local model inference based on its own information (i.e., local information), and any RSU can exchange partial model information with other RSUs to enhance decentralized inference performance. Compared to centralized inference, decentralized inference has advantages such as low computational and communication energy consumption, short decision response times, and strong scalability, making it more suitable for vehicle network scenarios with rapid network state changes and high demands for latency and energy consumption. Additionally, in centralized training modes, the cloud can maintain a global training model for centralized inference across all RSUs, or it can first train a shared model for all RSUs and then distribute the trained shared model to each RSU for decentralized inference. Generally, common methods for centralized inference include supervised learning, unsupervised learning, and single-agent reinforcement learning, while common methods for decentralized inference include unsupervised learning and multi-agent reinforcement learning. The model training and inference processes in vehicle networks are illustrated in Figure 6.

Furthermore, accelerating model inference is also a major direction for optimizing edge services. Optimization methods for adjusting inference time include model simplification and model partitioning. Model simplification refers to dynamically selecting the most suitable model for a node based on the characteristics of the node, task, and model, i.e., choosing a “small model” that requires lower resource demands and completes faster at the expense of some model accuracy. The main differences among various model simplification methods lie in the differentiation of evaluation metrics; Xu et al. considered user service level agreement requirements and node resource usage, while Taylor et al. used task characteristics and expected accuracy as evaluation metrics. Model partitioning refers to partitioning computational tasks based on the hierarchical computational structure of neural networks, which can fully utilize the computational characteristics of both edge and cloud for collaborative inference to achieve inference acceleration. At this point, selecting different model partitioning points will lead to different computation times, and the optimal model partitioning point can maximize the advantages of collaborative inference. Kang et al. designed a hierarchical structure partitioning method that determines the optimal task partitioning point between mobile devices and data centers based on the granularity of neural network layers. Karsavuran et al. proposed a general medium-grained partitioning hypergraph model using alternating least squares of sparse tensors, which imposes no topological constraints on task partitioning.
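The effect of the partitioning point on end-to-end latency can be illustrated with a small exhaustive search at layer granularity. This is a simplified sketch, not the algorithm of Kang et al. or Karsavuran et al.: the per-layer latencies, output sizes, and bandwidth are hypothetical inputs that would have to be profiled in practice, and the cost of returning the result to the vehicle is ignored.

```python
def best_partition(edge_ms, cloud_ms, out_kb, input_kb, bandwidth_kb_per_ms):
    """Return (k, latency_ms): layers [0, k) run at the edge, the rest in the cloud.

    edge_ms[i] / cloud_ms[i]: latency of layer i on the edge / in the cloud.
    out_kb[i]: size of layer i's output; input_kb: size of the raw input.
    """
    n = len(edge_ms)
    best = None
    for k in range(n + 1):
        if k == n:
            transfer = 0.0  # the whole model runs at the edge, nothing is sent
        else:
            payload = input_kb if k == 0 else out_kb[k - 1]
            transfer = payload / bandwidth_kb_per_ms
        latency = sum(edge_ms[:k]) + transfer + sum(cloud_ms[k:])
        if best is None or latency < best[1]:
            best = (k, latency)
    return best
```

With a slow uplink the search tends to place the cut after a layer with a small output, while with a fast uplink it tends to push all layers to the cloud, which matches the observation above that different partitioning points lead to different computation times.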


Figure 6 Model Training and Inference Processes in Vehicle Networks

4 Challenges and Prospects

This section discusses open challenges and subsequent research directions, providing theoretical support for the deployment of edge intelligence in vehicle networks.

4.1 System Dynamics and Openness

The deployment of edge intelligence in vehicle networks must consider the dynamics of network structures and the openness of channels. On one hand, the service environment of vehicle networks is non-stationary, with frequent switching between multiple RSUs due to vehicle mobility, which may lead to dynamic changes in network topology among nodes and spatiotemporal migration of vehicle network services, making it difficult to adapt to the edge resource demands of different road segments and service node groups. Meanwhile, mobility differences result in time-varying connectivity for specific vehicles within the coverage of RSUs. These dynamic factors impose stricter requirements on the training time and energy consumption of AI models, especially in certain technologies that require cumulative residuals, where mobility can hinder effective accumulation of residuals, significantly slowing down the convergence speed of models. On the other hand, considering that RSUs are deployed in open environments, the communication quality between vehicles and RSUs is closely related to model accuracy and convergence speed. Channel quality is often influenced by various factors such as the transmission distance between vehicles and RSUs, bandwidth resource status, access methods, and network topology, exhibiting high instability. The mixed effects of various communication modes in V2X will further increase the complexity of data transmission processes. In summary, the architecture of vehicle networks driven by edge intelligence needs to possess generalization and robust control capabilities under complex and variable conditions.

4.2 Hardware and Network Architecture Support

Resource-intensive and latency-sensitive in-vehicle services often require the adaptation of a large number of resources for high-dimensional system parameter configurations. The differences in edge device models, structures, operating systems, and development environments will lead to the inability to manage and operate existing platforms uniformly, making it important to consider compatibility and coordination among heterogeneous hardware devices. Additionally, given the competitive channel access conditions and the limited communication coverage of RSUs, there is an urgent need to design resource-friendly network architectures to alleviate the pressure of model training and inference under limited resources. In fact, advanced network architectures can ensure ultra-reliable low-latency communication (URLLC) between vehicles and RSUs through effective resource optimization and task scheduling for edge nodes. However, managing and coordinating AI loads under diverse demands remains one of the urgent issues to be addressed, as network architectures need to provide new resource abstractions for AI loads to meet their requirements for chips, capacity expansion, and task dependency management. Finally, further research is needed on how to break down barriers between various communication technologies to achieve controllability of network architectures.

4.3 Lightweight Training Models

Edge intelligence models in vehicle networks are typically deployed across different RSUs, utilizing complex neural network structures to achieve advanced functions such as decision control, resource allocation, and network management. During model inference, the optimization effect of a single AI technology may be limited and may generalize poorly. Although multiple AI technologies can be run in parallel during model training to address overfitting or underfitting issues, integrating such technologies may further complicate the network structure. Therefore, it is necessary to study how to deploy and execute AI loads on resource-constrained RSUs. Across different technical routes, existing work mostly aims to construct resource-efficient, fast-converging, lightweight AI models to adapt to resource constraints. Early works include model compression techniques such as sparsification, knowledge distillation, pruning, and quantization, which can support lightweight AI models in vehicle networks. However, while adjusting model scales to support edge-side AI operations, such measures come with a loss of model accuracy. In fact, static model compression methods are no longer suitable for dynamic hardware configurations and edge node loads, and context-aware compression technologies are expected to be applied. Finally, based on application characteristics, lightweight virtualization and neural architecture search technologies can be explored to enhance model deployment capabilities in resource-constrained environments.

4.4 Enhancing Security and Privacy Protection

Ensuring a secure operating environment for the edge is also a prerequisite for supporting the supply of vehicle network services. The mobility of vehicles and frequent switching between communication units may affect the overall stability of the system, thereby disrupting the stable supply of communication access and posing security threats to distributed systems. Such openness places high demands on the trustworthiness of services provided by decentralized RSUs, focusing on the credibility of services offered by distributed learning. More importantly, as an open network with ubiquitous wireless access capabilities, data aggregated to the edge or cloud for model training is vulnerable to global attackers. In this case, hackers’ interference with node and channel information and data attacks can lead to severe privacy breaches. Therefore, data desensitization and privacy protection are crucial for ensuring user authentication, access control, data integrity, and cross-platform verification in vehicle networks. Although technologies such as federated learning have been applied to privacy-friendly distributed training, model parameters or original datasets can still be inferred and reconstructed by untrusted third parties. Existing solutions include adding noise to protect original data, communication authentication, homomorphic encryption, and differential privacy. Additionally, as malicious nodes may tamper with and forge normal business processes at the edge, identifying bad behaviors of nodes and removing malicious nodes is of great importance. At the same time, it is necessary to verify and re-encrypt information such as decision instructions and interaction records, fully utilizing the predictability and spatiotemporal correlation of vehicle traffic flows to meet service delivery requirements.
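One of the solutions listed above, adding noise to protect original data, is often applied to model updates before they leave a node. The sketch below clips an update and adds Gaussian noise, in the style commonly used for differentially private training. The function names and parameters are illustrative assumptions, and choosing `noise_std` to meet a formal privacy budget is outside the scope of this sketch.

```python
import math
import random


def clip(update, max_norm):
    """Scale the update so that its L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]


def privatize(update, max_norm, noise_std, rng):
    """Clip the update, then add independent Gaussian noise per coordinate."""
    return [v + rng.gauss(0.0, noise_std) for v in clip(update, max_norm)]
```

Clipping bounds the influence of any single node's update, and the added noise makes it harder for an untrusted party to reconstruct the underlying data, at the cost of some model accuracy.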

4.5 Incentive Mechanisms Based on Network Economics

For collaborative distributed systems, an important issue to consider is the incentive mechanism based on network economics. Since edge ecosystems often involve different service providers and vehicles, service operations must consider collaboration and integration across service providers in vehicle networks. Meanwhile, vehicles in vehicle networks belong to different individuals, and the profit-seeking characteristics of individuals will drive them to pursue more powerful computing power and faster processing responses, potentially leading to disorderly resource competition and channel interference among multiple vehicles and RSUs. Although joint allocation of resources among multiple RSUs can effectively enhance the utilization of edge resources, it is challenging to ensure that in-vehicle applications unconditionally adhere to relevant strategies during implementation. Therefore, it is necessary to explore how to price computing and communication resources for edge intelligence platforms based on the unique characteristics of in-vehicle applications and individual potential factors, as well as how to design incentive mechanisms with intrinsic driving capabilities to promote orderly cooperation among multiple entities. Finally, when conducting multi-agent games, it is essential to consider the trade-off between fairness and optimization, necessitating the design of resource-friendly lightweight blockchain consensus protocols to balance relevant indicators among multiple agents.

5 Conclusion

This paper provides a comprehensive review of edge intelligence-driven vehicle networks from a broader perspective, focusing on application scenarios. It first introduces the background, concepts, and key technologies of edge intelligence; then, it provides an overview of service types based on edge intelligence in vehicle network application scenarios, detailing the deployment and implementation processes of edge intelligence models; finally, it discusses the key open challenges of edge intelligence in vehicle network application scenarios to promote potential research directions.

About Authors

Jiang Kai (1995-), male, PhD student at Wuhan University, main research directions include edge intelligence, multi-agent/deep reinforcement learning, intelligent transportation systems, etc.

Cao Yue (1984-), male, PhD, professor and doctoral supervisor at Wuhan University, main research directions include security protection, network computing, traffic control, etc.

Zhou Huan (1986-), male, PhD, professor and doctoral supervisor at Three Gorges University, main research directions include mobile social networks, mobile data offloading, vehicle networks, etc.

Ren Xuefeng (1979-), male, vice president and director of the New Technology Research Institute at Huali Zhixing (Wuhan) Technology Co., Ltd., main research directions include intelligent connected vehicles, smart transportation, etc.

Zhu Yongdong (1974-), male, PhD, researcher at Zhijiang Laboratory, main research directions include future networks and communications, IoT, vehicle networks, etc.

Lin Hai (1976-), male, PhD, associate professor at Wuhan University, main research directions include network security, IoT, etc.

Contact Us:

Tel: (010) 53878076, 53879096, 53879098

Email: [email protected]

Journal Introduction The Chinese Journal on Internet of Things is an academic journal supervised by the Ministry of Industry and Information Technology and sponsored by the People’s Posts and Telecommunications Press. The purpose of the journal is to serve scientific development, disseminate scientific knowledge, promote technological innovation, and cultivate scientific and technological talents.

Core Journal of Chinese Science and Technology

Scopus Indexed

Recommended Chinese Science and Technology Journal by the China Communications Society (T1)

Recommended Chinese Science and Technology Journal by the China Computer Society (T2)

Recommended Chinese Science and Technology Journal by the China Electronics Society (T2)

Ulrichsweb Indexed

DOAJ Indexed

EBSCO Database Indexed

Journal Homepage

http://www.wlwxb.com.cn

