Author: element14 (e络盟) Technical Team

The integration of AI and IoT is transforming how data is processed, analyzed, and used. For many years, AI solutions were deployed mainly in the cloud, but the rise of edge AI now offers a way to improve operational efficiency, strengthen security, and increase reliability. This article examines edge AI in detail: its core components, its practical advantages, and the rapidly evolving hardware ecosystem that supports it.

AI Evolution: From Cloud to Edge

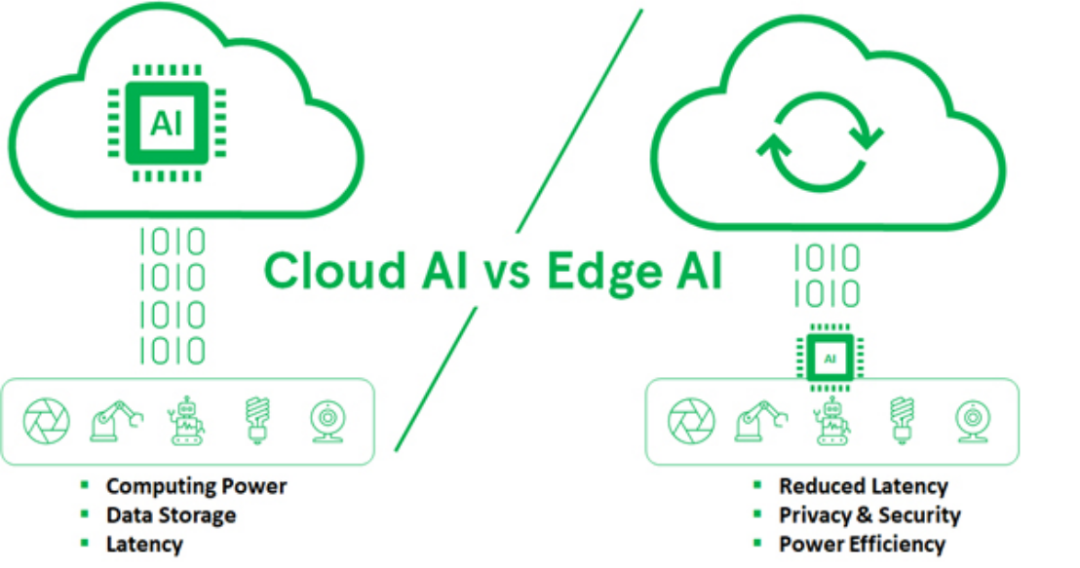

Traditional IoT devices rely on cloud infrastructure for AI processing: the data generated by sensors on edge devices must be transmitted to the cloud for analysis and inference. However, as IoT applications demand more real-time decision-making at the network edge, this model faces significant challenges. Massive data volumes, latency, and limited bandwidth make cloud processing impractical in many scenarios.

The emergence of edge AI brings processing capabilities closer to the data source—the IoT devices themselves. This shift reduces the need for continuous data transmission to the cloud and enables real-time processing, which is crucial for many applications such as autonomous vehicles, industrial automation, and healthcare.
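To make the bandwidth argument concrete, here is a back-of-the-envelope calculation comparing an uncompressed camera stream sent to the cloud with a device that sends only its inference results. The resolution, frame rate, and 64-byte result size are hypothetical assumptions. Real systems usually compress video, which shrinks the raw figure considerably but typically still leaves it far larger than a stream of results.

```python
# Back-of-the-envelope uplink comparison: streaming raw camera frames to the
# cloud vs. sending only on-device inference results. All figures are
# illustrative assumptions, not measurements.


def raw_video_bps(width: int, height: int, bytes_per_pixel: int, fps: int) -> int:
    """Uncompressed video data rate in bits per second."""
    return width * height * bytes_per_pixel * fps * 8


def results_bps(bytes_per_result: int, results_per_second: int) -> int:
    """Data rate when only inference results (e.g. a label and a score) leave the device."""
    return bytes_per_result * results_per_second * 8


if __name__ == "__main__":
    raw = raw_video_bps(1920, 1080, 3, 30)  # hypothetical 1080p RGB camera at 30 fps
    edge = results_bps(64, 30)              # hypothetical 64-byte result per frame
    print(f"raw stream : {raw / 1e9:.2f} Gbit/s")   # 1.49 Gbit/s
    print(f"edge output: {edge / 1e3:.2f} kbit/s")  # 15.36 kbit/s
    print(f"reduction  : {raw // edge}x")           # 97200x
```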

Core Components of Edge AI Systems

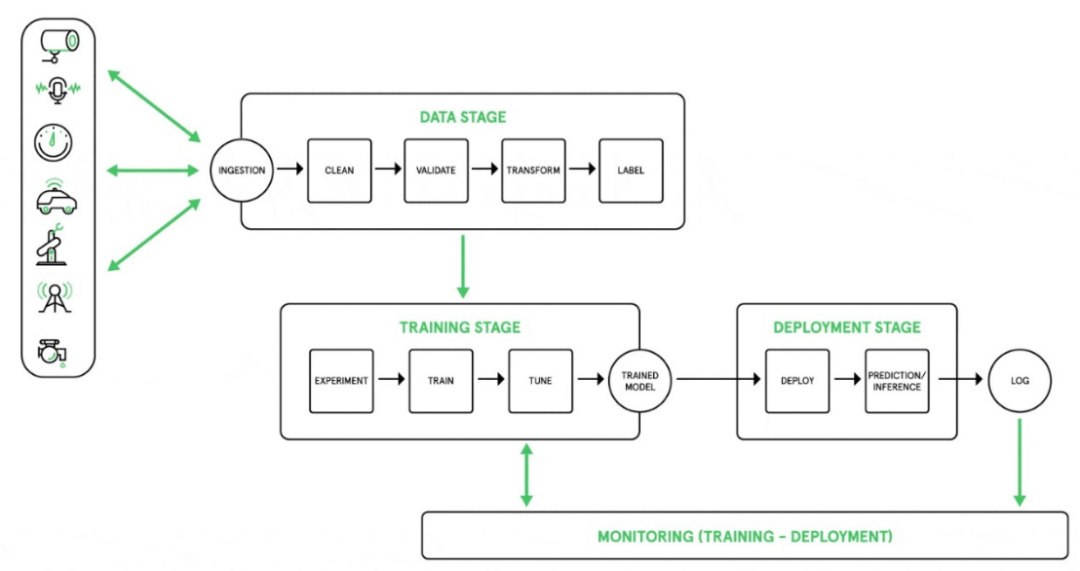

Edge AI systems combine dedicated hardware and software that together provide the core capabilities of local data acquisition, processing, and analysis. They typically include the following elements:

- Data Acquisition Hardware: Data acquisition relies on dedicated sensors integrated with processing units and memory. Modern sensors often include built-in processing that performs preliminary filtering and transformation of the data.

- Training and Inference Models: Edge devices must be equipped with pre-trained models specialized for their application scenario. Because edge devices have limited computational resources, feature selection and transformation are applied during the training phase to improve model performance within those constraints.

- Application Software: Software on the edge device triggers AI processing through microservices, which are typically invoked in response to user requests. This software runs the AI models that were customized and aggregated during the training phase.

Figure 1: Edge AI Workflow
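The three elements above can be sketched as a tiny on-device pipeline: a sensor-side moving-average filter (data acquisition), a small model whose parameters were learned offline (inference), and a request handler that application software might expose as a microservice. The moving-average filter, the logistic-regression model, its weights, and all function names are hypothetical choices made for illustration, not a description of any particular product.

```python
import math
from collections import deque
from statistics import fmean

# Hypothetical parameters of a tiny model trained offline and deployed to the
# device. The feature choice and all numbers are made up for illustration.
WEIGHTS = (0.8, 1.5)  # (smoothed temperature in deg C, vibration in mm/s)
BIAS = -60.0


class MovingAverageFilter:
    """Data acquisition: preliminary on-sensor smoothing over a sliding window."""

    def __init__(self, window: int) -> None:
        self._samples = deque(maxlen=window)  # oldest sample drops out automatically

    def update(self, sample: float) -> float:
        self._samples.append(sample)
        return fmean(self._samples)


def fault_probability(features: tuple[float, float]) -> float:
    """Inference: logistic regression evaluated locally, with no cloud round trip."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def handle_request(raw_temp: float, vibration: float, filt: MovingAverageFilter) -> dict:
    """Application software: a request handler (e.g. behind a microservice endpoint)."""
    smoothed = filt.update(raw_temp)
    p = fault_probability((smoothed, vibration))
    return {"smoothed_temp": smoothed, "fault_probability": p, "alert": p > 0.5}


if __name__ == "__main__":
    filt = MovingAverageFilter(window=3)
    for temp, vib in [(70.0, 2.0), (72.0, 2.5), (74.0, 4.0), (76.0, 5.0)]:
        print(handle_request(temp, vib, filt))
```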

Advantages of Edge AI

Compared to traditional cloud models, edge AI offers several significant advantages:

- Enhanced Security: Processing data locally reduces the risk of sensitive information leaking while in transit to the cloud.

- Increased Operational Reliability: Edge AI systems depend less on network connectivity, so they keep operating reliably even when the network is intermittent or has low bandwidth.

- Flexibility: Edge AI allows models and functions to be customized for specific application needs, which is essential given the diversity of IoT environments.

- Low Latency: Processing data where it is generated minimizes the time needed to reach a decision, a key requirement for real-time applications such as autonomous driving and medical diagnostics.

Figure 2
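The reliability and latency advantages can be illustrated with store-and-forward, a common pattern chosen here as an example (the article does not prescribe one): the device makes its decisions locally, so it can act immediately even when the network is down, and it queues events for the cloud until the link returns. `SimulatedLink` stands in for a real network client, and all names and thresholds are hypothetical.

```python
from collections import deque
from typing import Callable


def decide_locally(reading: float, limit: float) -> str:
    """Local decision: runs on the device whether or not the network is up."""
    return "stop" if reading > limit else "ok"


class StoreAndForward:
    """Queues outgoing events while the uplink is down and sends them in order later."""

    def __init__(self, uplink: Callable[[dict], bool], capacity: int = 1000) -> None:
        self._uplink = uplink                   # returns True if delivery succeeded
        self._pending = deque(maxlen=capacity)  # when full, the oldest event is dropped

    @property
    def backlog(self) -> int:
        return len(self._pending)

    def publish(self, event: dict) -> None:
        self._pending.append(event)
        self.flush()

    def flush(self) -> int:
        """Try to deliver queued events in order; stop at the first failure."""
        sent = 0
        while self._pending:
            if not self._uplink(self._pending[0]):
                break  # link still down: keep the event for the next attempt
            self._pending.popleft()
            sent += 1
        return sent


class SimulatedLink:
    """Stand-in for a real network client, toggled online/offline by hand."""

    def __init__(self) -> None:
        self.online = False
        self.delivered: list[dict] = []

    def send(self, message: dict) -> bool:
        if self.online:
            self.delivered.append(message)
        return self.online


if __name__ == "__main__":
    link = SimulatedLink()
    sf = StoreAndForward(link.send)
    for reading in (10.0, 95.0, 20.0):  # network is down during these readings
        sf.publish({"reading": reading, "action": decide_locally(reading, limit=90.0)})
    print("queued while offline:", sf.backlog)  # 3
    link.online = True
    print("sent after reconnect:", sf.flush())  # 3
```

Bounding the queue with `deque(maxlen=...)` means a long outage drops the oldest events rather than exhausting memory; a real device might persist the queue to flash instead.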

Challenges in Implementing Edge AI

Despite its advantages, implementing edge AI poses several challenges. Developing machine learning models for edge devices means handling large volumes of data, selecting suitable algorithms, and optimizing models to fit constrained hardware. For many manufacturers, especially those mass-producing low-cost devices, the investment required to build these capabilities from scratch can be daunting.
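Optimizing a model to fit constrained hardware often means quantization. A common approach (chosen here as an example; the article does not name a specific technique) is post-training int8 quantization: each float weight is replaced by an 8-bit integer plus a shared scale factor, cutting storage by 4x relative to 32-bit floats and allowing integer-only multiply-accumulate on small processors. The sketch below uses a single symmetric scale per tensor; production toolchains typically add refinements such as per-channel scales and calibration on representative data. The function names and sample values are hypothetical.

```python
# Symmetric per-tensor int8 post-training quantization, in plain Python.


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] with a single shared scale factor."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the integers and their scale."""
    return [v * scale for v in q]


def int8_dot(qa: list[int], sa: float, qb: list[int], sb: float) -> float:
    """Dot product using integer multiply-accumulate, rescaled once at the end."""
    acc = sum(x * y for x, y in zip(qa, qb))  # integer-only inner loop
    return acc * sa * sb


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]  # hypothetical layer weights
    inputs = [1.0, 0.5, -2.0, 3.0]    # hypothetical activations
    qw, sw = quantize_int8(weights)
    qx, sx = quantize_int8(inputs)
    exact = sum(w * x for w, x in zip(weights, inputs))
    print("float result:", exact)
    print("int8 result :", round(int8_dot(qw, sw, qx, sx), 4))
```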

This dilemma has created demand for programmable platforms. The industry is now accelerating its move toward specialized AI architectures that scale flexibly across a wide range of power and performance points. These architectures retain much of the flexibility of general-purpose designs while meeting the specific processing needs of AI workloads.

The Role of Dedicated Hardware in Edge AI

As AI and machine learning applications expand, demand for customized hardware is growing, because specialized hardware can address the unique needs of AI workloads. General-purpose processors still offer significant value thanks to mature manufacturing and general-purpose toolchains, but they struggle to meet the specific demands of AI, particularly neural network processing.

To fill this gap, semiconductor manufacturers are launching new AI accelerators that enhance the performance of general-purpose processors while retaining their advantages. These accelerators are designed for the parallel processing required by neural networks, providing a more efficient execution path for AI computations.

- Parallel Architectures and Matrix Processors: Parallel architectures, such as those found in graphics processors, are very effective for training neural networks. Matrix processors build on the same principle; Google's Tensor Processing Unit (TPU), for example, was developed to accelerate matrix operations, the core computation in neural network processing.

- In-Memory Computing: This technology connects variable resistors with memory cells so that the memory array itself acts as a neural network structure. Because computation happens where the data is stored, it avoids the bottleneck of moving data between memory and processor, yielding substantial gains in speed and energy efficiency.
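Both approaches accelerate the same basic operation. A fully connected neural network layer is a matrix-vector product, that is, many multiply-accumulate operations, which is what matrix processors parallelize. One commonly described form of in-memory computing uses a crossbar of resistive cells: weights are stored as conductances, inputs are applied as voltages, and Ohm's and Kirchhoff's laws produce the column sums directly. The sketch below is an idealized model chosen for illustration (no noise, wire resistance, or converter effects, and non-negative weights only, since a conductance cannot be negative); it does not describe the TPU or any specific in-memory product.

```python
# A dense layer is a matrix-vector product; an idealized resistive crossbar
# computes the same product as analog currents.


def dense_layer(W: list[list[float]], x: list[float], b: list[float]) -> list[float]:
    """y[j] = sum_i W[j][i] * x[i] + b[j]: one multiply-accumulate per weight."""
    return [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]


def crossbar_currents(G: list[list[float]], V: list[float]) -> list[float]:
    """Idealized crossbar: voltage V[i] drives row i, and the cell at (i, j) has
    conductance G[i][j]. Ohm's law gives a cell current G[i][j] * V[i], and
    Kirchhoff's current law sums those currents along each column j."""
    return [sum(G[i][j] * V[i] for i in range(len(V))) for j in range(len(G[0]))]


def transpose(M: list[list[float]]) -> list[list[float]]:
    return [list(col) for col in zip(*M)]


if __name__ == "__main__":
    W = [[1.0, 2.0], [3.0, 4.0]]  # hypothetical non-negative weights (2 outputs x 2 inputs)
    x = [0.5, 1.0]
    digital = dense_layer(W, x, b=[0.0, 0.0])
    analog = crossbar_currents(transpose(W), x)  # weights stored as conductances
    print(digital, analog)  # [2.5, 5.5] [2.5, 5.5]
```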

The Future of Edge AI: Innovation and Opportunities

As edge AI evolves, new technologies and architectures are emerging to meet the growing demand for AI processing. Progress in Tiny Machine Learning (TinyML) is particularly noteworthy, as it extends AI capabilities to ultra-low-power devices. TinyML is not suitable for every application, but it is helping bring AI to a much wider range of devices.

- Field-Programmable Gate Arrays (FPGAs): FPGAs have reconfigurable architectures that are well suited to the rapid pace of AI development. Compared with GPUs and CPUs, FPGAs let designers quickly build and test neural networks and customize the hardware for specific application needs. This flexibility is crucial in safety-critical fields such as aerospace, defense, and medical devices, where product lifecycles are long and new algorithms must often be deployed in the field.

- Graphics Processing Units (GPUs): GPUs offer powerful parallel computing, but at a high cost in power consumption and thermal management. Even so, they remain the preferred solution for compute-intensive applications such as virtual reality and machine vision.

- Central Processing Units (CPUs): Despite their inherent limitations in parallel processing, CPUs are integrated into a wide range of devices. Innovations such as Arm's SIMD (Single Instruction, Multiple Data) extensions have improved CPU performance on AI algorithms, but CPUs are typically slower and less energy-efficient on these workloads than GPUs and FPGAs.

Conclusion

The transition from cloud AI to edge AI is profoundly changing how IoT systems process and use data. By moving AI processing to the data source, edge AI significantly improves security, reliability, and flexibility, driving adoption across a wide range of applications. Implementing edge AI, however, requires careful attention to how hardware and software components work together, as well as to the unique challenges of deploying AI in resource-constrained environments.

As AI becomes more prevalent, the market increasingly demands specialized hardware that can address the unique challenges of edge computing. From matrix processors and in-memory computing to FPGAs and TinyML, these emerging technologies will shape the next generation of edge AI solutions. Understanding them enables application engineers to keep pace with technological advances, fully unlock the potential of edge AI, and build more innovative and competitive solutions.

In the rapidly evolving landscape of AI, engineers and developers need to stay current with the latest trends. To explore AI in depth, master its core elements, and learn how to apply it in practical projects, we invite you to visit our AI Hub. Whether your application involves image classification, voice and gesture recognition, or condition monitoring and predictive maintenance, the AI Hub offers a full range of product solutions, technical resources, and expertise to help you get the most out of AI technology.

Disclaimer

This article is an observational or commentary piece by a third-party author, and all text and images are copyrighted by the author. It is shared for informational purposes only; for any copyright concerns, please contact us for resolution or removal.

About Us

The “Industrial AI” magazine is published by Yashi International Business News, focusing on the technical applications of AI in the industrial field, aiming to provide Chinese process and manufacturing engineers, managers, and manufacturing executives with timely access to the latest news and technical information regarding the integration of artificial intelligence into the manufacturing sector. Industrial AI is also committed to creating an industry-leading comprehensive media and value platform, providing practical communication opportunities for the implementation of AI in the industrial field.

Your “shares”, “likes”, and “views” contribute to the advancement of technology in China!