Build a network video conferencing system with C++, ushering in a new era of efficient communication!

Why Use C++ to Develop a Network Video Conferencing System?

Among many programming languages, C++ stands out due to its excellent performance and efficient control of system resources, making it an ideal choice for developing network video conferencing systems.

Because C++ sits close to the hardware, it gives developers precise control over resources such as memory allocation and threads, which lays a solid foundation for a smoothly running video conferencing system. When many participants join at once and large volumes of audio and video data pour in, carefully written C++ code can keep processing latency low and predictable. This helps the system avoid stutter and delay, so real-time interaction stays fluid.

Moreover, C++’s rich library ecosystem and its template mechanism make code highly reusable and modular. Audio and video processing, network communication, and the user interface can each be encapsulated in an independent module, which eases team collaboration as well as later maintenance and extension. When new features such as virtual backgrounds or real-time subtitles are needed, a well-modularized C++ architecture can accommodate them with limited changes to existing code.

1. Preliminary Preparations

Developing a network video conferencing system starts with some crucial preparation. On the hardware side, you need a server with enough CPU, memory, and network bandwidth to handle many simultaneous users and the heavy flow of audio and video data, so that the system stays stable without stuttering or dropped connections. Cameras, microphones, and speakers should also be of good quality so that capture and playback are clear and smooth, giving participants a good experience.

For the software environment, mainstream operating systems such as Windows and Linux both work as development platforms. Visual Studio and Qt Creator are recommended as IDEs. Both offer comprehensive C++ support, and their convenient editing and debugging features speed up development considerably.

For libraries, OpenCV is a powerful image-processing toolkit that handles camera capture and frame preprocessing with little effort. After frames are captured, operations such as noise reduction and contrast enhancement can improve image quality. FFmpeg specializes in audio and video encoding, decoding, and format conversion, so the system can work with many different codecs and container formats, which lays a solid foundation for compatibility. With these preparations in place, the rest of development can proceed smoothly.
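To make “contrast enhancement” concrete, here is a minimal standard-library sketch of a linear min-max contrast stretch on an 8-bit grayscale image. In OpenCV this is a single call (for example cv::normalize with cv::NORM_MINMAX). The flat std::vector buffer and the name stretch_contrast are assumptions standing in for a cv::Mat, not part of any library.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Linear (min-max) contrast stretch for an 8-bit grayscale image stored as a
// flat buffer (hypothetical layout): the darkest pixel maps to 0, the
// brightest to 255, and values in between are scaled linearly.
std::vector<std::uint8_t> stretch_contrast(const std::vector<std::uint8_t>& pixels) {
    if (pixels.empty()) return {};
    const auto [min_it, max_it] = std::minmax_element(pixels.begin(), pixels.end());
    const int lo = *min_it;
    const int hi = *max_it;
    if (hi == lo) return pixels;  // flat image: nothing to stretch

    const int range = hi - lo;
    std::vector<std::uint8_t> out(pixels.size());
    for (std::size_t i = 0; i < pixels.size(); ++i) {
        // Map [lo, hi] onto [0, 255], rounding to the nearest integer.
        out[i] = static_cast<std::uint8_t>(((pixels[i] - lo) * 255 + range / 2) / range);
    }
    return out;
}
```

For instance, stretch_contrast({50, 100, 150}) returns {0, 128, 255}: the narrow 50 to 150 range is spread across the full 0 to 255 scale.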

2. Key Technology Analysis

(1) Audio and Video Capture

To enable the video conferencing system to “see” and “hear,” audio and video capture technology is essential. In the C++ world, using the OpenCV library makes video capture straightforward. Here’s a simple piece of code:

```cpp
#include <opencv2/opencv.hpp>
#include <iostream>

int main() {
    cv::VideoCapture cap(0);  // Open the default camera (device index 0)
    if (!cap.isOpened()) {
        std::cerr << "Error opening video capture" << std::endl;
        return -1;
    }

    cv::namedWindow("Video", cv::WINDOW_AUTOSIZE);
    cv::Mat frame;
    while (true) {
        // read() grabs and decodes the next frame; stop if it fails or
        // returns an empty frame (e.g. the camera was disconnected).
        if (!cap.read(frame) || frame.empty()) {
            std::cerr << "Error reading frame" << std::endl;
            break;
        }
        cv::imshow("Video", frame);  // Display the frame

        // waitKey() also services the GUI event loop; wait up to 25 ms for a key.
        char c = static_cast<char>(cv::waitKey(25));
        if (c == 'q' || c == 27) break;  // Press 'q' or Esc to exit
    }

    cap.release();
    cv::destroyAllWindows();
    return 0;
}
```

VideoCapture opens the camera, and the loop reads and displays one frame at a time until the user presses q or Esc, giving the system a keen eye.

Audio capture is similarly straightforward. With the PortAudio library, for example, you open an input stream on the microphone and register a callback function. PortAudio then invokes the callback with each new buffer of captured samples, which lays the groundwork for transmitting audio during the conference.
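PortAudio may run its callback on a high-priority audio thread, and its documentation advises against slow or blocking work there (memory allocation, locking, file I/O). A common pattern is therefore to only copy samples into a lock-free buffer that another thread drains for encoding. The sketch below shows that pattern with a single-producer/single-consumer ring buffer using only the standard library. It does not call PortAudio: on_audio_input is a hypothetical, simplified stand-in for the real callback (which also receives an output buffer, timing information, and status flags), and SampleRing is an illustrative name.

```cpp
#include <array>
#include <atomic>
#include <cstddef>
#include <cstdint>

// Lock-free single-producer/single-consumer ring buffer of 16-bit samples.
// Producer: the audio callback calls write(). Consumer: an encoder thread
// calls read(). Storage is fixed, so the callback never allocates or locks.
template <std::size_t Capacity>
class SampleRing {
    static_assert(Capacity > 0 && (Capacity & (Capacity - 1)) == 0,
                  "Capacity must be a power of two");

public:
    // Copies up to n samples in; returns how many fit (the rest are dropped).
    std::size_t write(const std::int16_t* data, std::size_t n) {
        const std::size_t head = head_.load(std::memory_order_relaxed);
        const std::size_t tail = tail_.load(std::memory_order_acquire);
        const std::size_t space = Capacity - (head - tail);
        const std::size_t count = n < space ? n : space;
        for (std::size_t i = 0; i < count; ++i) buf_[(head + i) & kMask] = data[i];
        head_.store(head + count, std::memory_order_release);  // publish samples
        return count;
    }

    // Copies up to n samples out; returns how many were available.
    std::size_t read(std::int16_t* out, std::size_t n) {
        const std::size_t tail = tail_.load(std::memory_order_relaxed);
        const std::size_t head = head_.load(std::memory_order_acquire);
        const std::size_t available = head - tail;
        const std::size_t count = n < available ? n : available;
        for (std::size_t i = 0; i < count; ++i) out[i] = buf_[(tail + i) & kMask];
        tail_.store(tail + count, std::memory_order_release);  // free the slots
        return count;
    }

private:
    static constexpr std::size_t kMask = Capacity - 1;
    std::array<std::int16_t, Capacity> buf_{};
    std::atomic<std::size_t> head_{0};  // total samples ever written
    std::atomic<std::size_t> tail_{0};  // total samples ever read
};

using Ring = SampleRing<4096>;

// Hypothetical, simplified stand-in for a PortAudio input callback: it only
// copies the captured mono samples into the ring and returns immediately.
void on_audio_input(const std::int16_t* input, unsigned long frame_count, Ring& ring) {
    ring.write(input, frame_count);
}
```

The head and tail counters only ever increase, and the power-of-two capacity lets indices wrap with a bit mask. When the buffer is full, write() drops the excess samples rather than blocking the audio thread.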

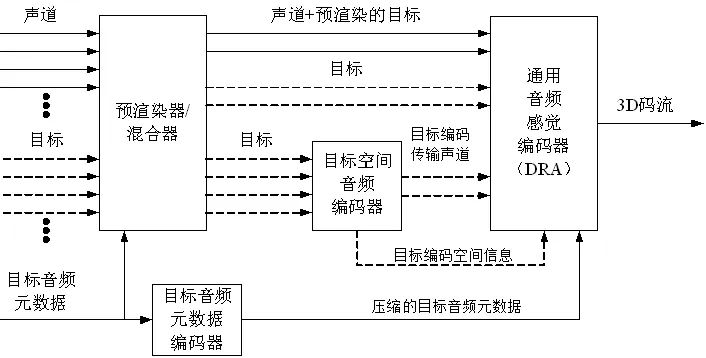

(2) Audio and Video Encoding

Raw captured audio and video data is far too large to send over a network as-is, so encoding is key to shrinking it. For video, H.264 has become mainstream thanks to its high compression ratio and good picture quality, while the newer H.265 compresses even more efficiently. For audio, AAC stands out for delivering high quality at a low bitrate.
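A little arithmetic shows why encoding is unavoidable. The helpers below compute uncompressed bitrates, assuming 8-bit YUV 4:2:0 video (1.5 bytes per pixel) and 16-bit PCM audio; the function names are illustrative.

```cpp
#include <cstdint>

// Uncompressed video bitrate in bits per second, assuming 8-bit YUV 4:2:0:
// a full-resolution luma plane plus two quarter-size chroma planes,
// i.e. 1.5 bytes per pixel.
constexpr std::uint64_t raw_video_bps(std::uint64_t width, std::uint64_t height,
                                      std::uint64_t fps) {
    return width * height * 3 / 2 * 8 * fps;
}

// Uncompressed PCM audio bitrate in bits per second.
constexpr std::uint64_t raw_audio_bps(std::uint64_t sample_rate,
                                      std::uint64_t bits_per_sample,
                                      std::uint64_t channels) {
    return sample_rate * bits_per_sample * channels;
}

// 720p at 30 fps: 1280 * 720 * 1.5 bytes * 8 bits * 30 = 331,776,000 bit/s (~332 Mbit/s)
static_assert(raw_video_bps(1280, 720, 30) == 331'776'000);
// 48 kHz, 16-bit stereo: 1,536,000 bit/s (~1.5 Mbit/s)
static_assert(raw_audio_bps(48'000, 16, 2) == 1'536'000);
```

At roughly 332 Mbit/s, raw 720p video from a single participant would swamp most network links. An H.264 encoder typically carries conference video at a few Mbit/s or less, a reduction of about two orders of magnitude.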

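In C++, the FFmpeg library is a powerhouse for encoding. Transcoding an input video to H.264 takes a sequence of steps: open the input file (avformat_open_input()), locate the video stream and its decoder, find the H.264 encoder (avcodec_find_encoder()) and allocate a codec context for it, configure parameters such as resolution, pixel format, time base, and bit rate, open the encoder (avcodec_open2()), and create the output file. Then loop: read and decode input frames, pass them to the encoder (avcodec_send_frame() and avcodec_receive_packet()), and write the resulting packets to the output. Once laid out in code, the process is clear, and the compressed audio and video data can “fly” lightly across the network.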

(3) Network Transmission

The network transmission protocol is the “highway” of the video conferencing system. TCP is reliable and ordered and guarantees complete delivery, which makes it well suited to control commands and file transfers. UDP is lightweight and fast: it may occasionally lose packets, but its low latency makes it the usual choice for real-time audio and video streams, where a packet that arrives too late is as useless as a lost one. WebRTC is built specifically for real-time communication. It bundles NAT traversal, encrypted media transport, and congestion control to achieve low-latency communication in browsers and on mobile devices, which makes cross-platform calls easier.
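UDP itself carries no sequence numbers or timestamps, so real-time media is normally sent over RTP (RFC 3550) on top of UDP, and WebRTC does the same. As a minimal sketch, the code below builds RTP's 12-byte fixed header in network byte order and uses the 16-bit sequence number to count lost packets. The struct and function names are illustrative, and header extensions, CSRC lists, and padding are omitted.

```cpp
#include <array>
#include <cstdint>

// Fields of the RTP fixed header (RFC 3550, section 5.1) used in this sketch.
struct RtpHeader {
    bool marker = false;            // meaning is defined by the payload format
    std::uint8_t payload_type = 0;  // 7 bits, identifies the codec
    std::uint16_t sequence = 0;     // +1 per packet: reveals loss and reordering
    std::uint32_t timestamp = 0;    // media clock: drives playout and A/V sync
    std::uint32_t ssrc = 0;         // identifies the sending stream
};

// Serializes the 12-byte fixed header in network byte order (big-endian),
// with version 2, no padding, no extension, and no CSRC entries.
std::array<std::uint8_t, 12> serialize(const RtpHeader& h) {
    std::array<std::uint8_t, 12> b{};
    b[0] = 0x80;  // V=2 (top two bits), P=0, X=0, CC=0
    b[1] = static_cast<std::uint8_t>((h.marker ? 0x80 : 0x00) | (h.payload_type & 0x7F));
    b[2] = static_cast<std::uint8_t>(h.sequence >> 8);
    b[3] = static_cast<std::uint8_t>(h.sequence & 0xFF);
    for (int i = 0; i < 4; ++i) {
        const int shift = 24 - 8 * i;  // most significant byte first
        b[4 + i] = static_cast<std::uint8_t>(h.timestamp >> shift);
        b[8 + i] = static_cast<std::uint8_t>(h.ssrc >> shift);
    }
    return b;
}

// Packets missing between the previous and current sequence numbers, with
// 16-bit wraparound (65535 -> 0 counts as consecutive). Assumes packets arrive
// in order; a late or duplicate packet would show up as a very large gap.
std::uint16_t packets_lost(std::uint16_t previous_seq, std::uint16_t current_seq) {
    return static_cast<std::uint16_t>(current_seq - previous_seq - 1);
}
```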

In C++, socket programming is the foundation of this layer. For TCP, the server creates a socket, binds it to an IP address and port, listens, and accepts incoming connections, while the client creates a socket and connects to the server. Once the connection is established, data can flow in both directions, like a sturdy bridge carrying audio and video data. UDP sockets need no listen or accept step; they exchange individual datagrams, typically with sendto() and recvfrom().
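The TCP socket sequence can be exercised end to end on one machine. This is a minimal POSIX sketch (Linux or macOS; Windows uses Winsock, which additionally requires WSAStartup() and closesocket()) that runs the server and client in a single thread over 127.0.0.1. The helper name tcp_loopback_echo is hypothetical, and error handling is deliberately minimal.

```cpp
#include <arpa/inet.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>
#include <string>

namespace {
void close_if_open(int fd) {
    if (fd >= 0) close(fd);
}
}  // namespace

// Server and client in one thread over 127.0.0.1: the client sends `message`,
// the server echoes it, and the reply the client receives is returned
// (empty string on any error).
std::string tcp_loopback_echo(const std::string& message) {
    // Server: socket() -> bind() -> listen()
    int server = socket(AF_INET, SOCK_STREAM, 0);
    sockaddr_in addr{};
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);  // 127.0.0.1
    addr.sin_port = 0;                               // 0 = let the OS pick a free port
    auto* sa = reinterpret_cast<sockaddr*>(&addr);
    socklen_t len = sizeof(addr);
    if (server < 0 || bind(server, sa, sizeof(addr)) < 0 || listen(server, 1) < 0 ||
        getsockname(server, sa, &len) < 0) {  // getsockname() fills in the chosen port
        close_if_open(server);
        return "";
    }

    // Client: socket() -> connect(). The kernel completes the handshake and
    // queues the connection, so connect() returns before the server calls accept().
    int client = socket(AF_INET, SOCK_STREAM, 0);
    int conn = -1;
    std::string reply;
    if (client >= 0 && connect(client, sa, sizeof(addr)) == 0 &&
        (conn = accept(server, nullptr, nullptr)) >= 0) {
        char buf[256];
        // In practice a short message arrives in one recv() here; production code
        // must loop, because TCP is a byte stream with no message boundaries.
        if (send(client, message.data(), message.size(), 0) >= 0) {
            ssize_t n = recv(conn, buf, sizeof(buf), 0);  // server receives...
            if (n > 0 && send(conn, buf, static_cast<size_t>(n), 0) == n) {  // ...and echoes
                ssize_t m = recv(client, buf, sizeof(buf), 0);  // client reads the reply
                if (m > 0) reply.assign(buf, static_cast<size_t>(m));
            }
        }
    }
    close_if_open(conn);
    close_if_open(client);
    close_if_open(server);
    return reply;
}
```

In a real conferencing server, the accept step would sit in a loop (or an event loop such as epoll) so that many clients can connect concurrently.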

(4) Real-Time Synchronization and Optimization

If audio and video are out of sync, the conference becomes a “mess.” Timestamps are the key to synchronization: each audio and video frame is stamped with its capture time, and the receiver schedules playback against a common clock (often the audio clock), delaying or dropping video frames that drift too far from it. Buffer management matters too. A receive buffer (often called a jitter buffer) smooths out uneven packet arrival, and its size must balance two risks: too small and playback stutters, too large and latency grows.
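Both ideas can be sketched in a few lines. The code below is a minimal illustration rather than a production design. It assumes millisecond timestamps that do not wrap around and a fixed buffer depth (real jitter buffers usually adapt their depth to measured network jitter). JitterBuffer, sync_video_to_audio, and the 40 ms tolerance used in the example are illustrative choices.

```cpp
#include <cstdint>
#include <iterator>
#include <map>
#include <optional>
#include <string>
#include <utility>

// Fixed-depth jitter buffer: stores packets ordered by timestamp (ms) and
// releases the oldest one only after target_depth_ms of media has piled up
// behind it. Assumes timestamps do not wrap around.
class JitterBuffer {
public:
    explicit JitterBuffer(std::uint32_t target_depth_ms) : target_depth_ms_(target_depth_ms) {}

    // Packets may arrive out of order; std::map keeps them sorted by timestamp.
    void push(std::uint32_t timestamp_ms, std::string payload) {
        packets_[timestamp_ms] = std::move(payload);
    }

    // Returns the oldest packet if enough media is buffered, otherwise nothing.
    std::optional<std::pair<std::uint32_t, std::string>> pop() {
        if (packets_.empty()) return std::nullopt;
        auto oldest = packets_.begin();
        auto newest = std::prev(packets_.end());
        if (newest->first - oldest->first < target_depth_ms_) return std::nullopt;
        std::pair<std::uint32_t, std::string> packet{oldest->first, std::move(oldest->second)};
        packets_.erase(oldest);
        return packet;
    }

private:
    std::uint32_t target_depth_ms_;
    std::map<std::uint32_t, std::string> packets_;
};

enum class SyncAction { Show, Wait, Drop };

// Audio-master synchronization: compare a video frame's presentation
// timestamp with the current audio playback clock (both in seconds).
SyncAction sync_video_to_audio(double video_pts_s, double audio_clock_s, double tolerance_s) {
    const double diff = video_pts_s - audio_clock_s;
    if (diff > tolerance_s) return SyncAction::Wait;   // video early: hold the frame
    if (diff < -tolerance_s) return SyncAction::Drop;  // video late: skip the frame
    return SyncAction::Show;                           // close enough: display now
}
```

With a 40 ms tolerance, a video frame stamped 1.10 s while the audio clock reads 1.00 s is held back (Wait), and one stamped 0.90 s is skipped (Drop).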

For performance, optimization happens at several levels. Encoding and decoding algorithms and settings can be tuned to reduce computational cost. Multithreading lets capture, encoding, transmission, and playback run in parallel as separate pipeline stages, making full use of multi-core CPUs. Well-designed caching strategies cut down on redundant reads and transmissions. Together these improve overall efficiency and give participants a smooth experience.
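The multithreaded pipeline can be sketched with a thread-safe queue between each pair of stages. In this minimal standard-library sketch the “frames” are plain integers and the “encoder” just multiplies by 10; BlockingQueue, run_pipeline, and the stage logic are hypothetical placeholders for real capture, encoding, and network code.

```cpp
#include <condition_variable>
#include <mutex>
#include <optional>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Thread-safe FIFO: push() never blocks; pop() waits until an item arrives,
// or returns std::nullopt once the queue is closed and drained.
template <typename T>
class BlockingQueue {
public:
    void push(T value) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            items_.push(std::move(value));
        }
        ready_.notify_one();
    }

    // Signals that no more items will be pushed.
    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        ready_.notify_all();
    }

    std::optional<T> pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return !items_.empty() || closed_; });
        if (items_.empty()) return std::nullopt;  // closed and drained
        T value = std::move(items_.front());
        items_.pop();
        return value;
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::queue<T> items_;
    bool closed_ = false;
};

// Three stages on three threads: capture -> encode -> transmit.
std::vector<int> run_pipeline(int frame_count) {
    BlockingQueue<int> captured;  // capture -> encode
    BlockingQueue<int> encoded;   // encode -> transmit
    std::vector<int> sent;        // written only by the transmit thread

    std::thread capture([&] {
        for (int i = 0; i < frame_count; ++i) captured.push(i);  // placeholder "frames"
        captured.close();
    });
    std::thread encode([&] {
        while (auto frame = captured.pop()) encoded.push(*frame * 10);  // placeholder "encoding"
        encoded.close();
    });
    std::thread transmit([&] {
        while (auto packet = encoded.pop()) sent.push_back(*packet);  // placeholder "send"
    });

    capture.join();
    encode.join();
    transmit.join();
    return sent;
}
```

Because each stage waits only on its own input queue, a momentarily slow encoder does not stall capture. These queues are unbounded, so a real system would cap their size (or drop stale frames) to keep memory use and latency predictable.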

3. Walking Through the Development Process

(1) System Architecture Design

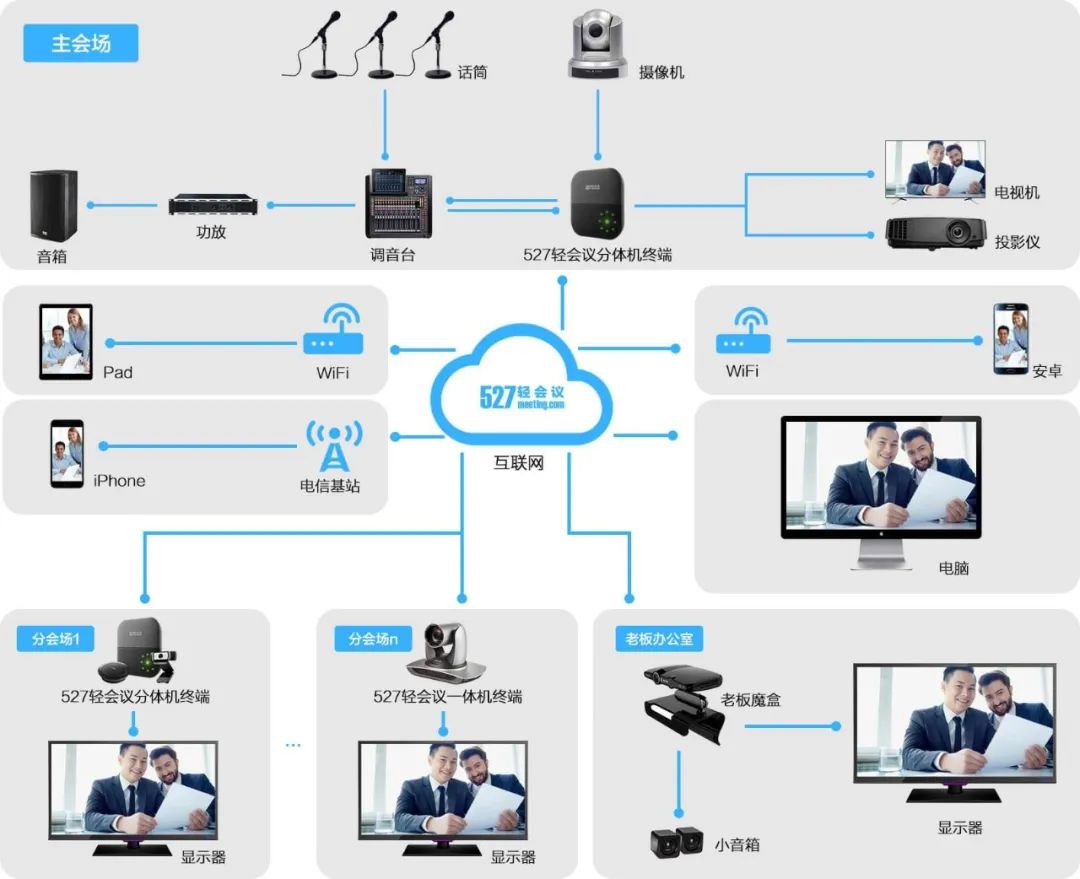

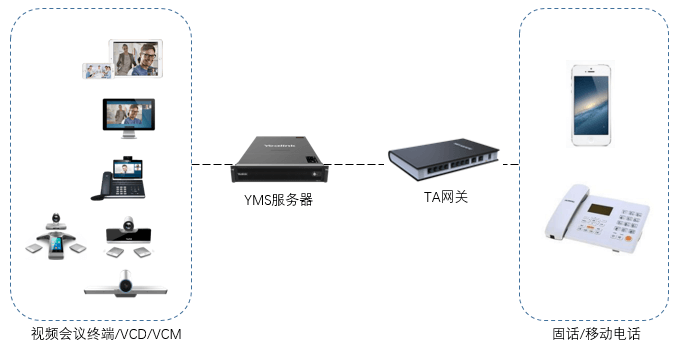

The architecture of a network video conferencing system is like a precisely engineered building whose foundation is the client/server model. The server acts as the central nervous system: it manages users, schedules meetings, and relays media data. The client is each participant’s “window,” providing the interface that displays audio and video and sends the user’s commands.

The system divides into several modules. The streaming media server is the core engine: it receives, processes, and distributes audio and video streams so that data flows smoothly. The client interface module shapes the participant experience, and every detail matters, from displaying the meeting list and joining a meeting to video layout and volume control. A user authentication module safeguards security, and a database module stores user and meeting information. Working together, these modules keep the system robust.
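As an illustration of the streaming server’s distribution role, the sketch below keeps an in-memory registry of meeting rooms and computes where each participant’s stream should be forwarded. It assumes a selective forwarding unit (SFU) design, in which the server relays each sender’s stream to every other participant without mixing. MeetingRegistry and its methods are hypothetical, and a real system would back this with the authentication and database modules.

```cpp
#include <cstddef>
#include <map>
#include <set>
#include <string>
#include <vector>

// Hypothetical in-memory registry on the server: which users are in which
// meeting, and (SFU-style) who should receive a given sender's stream.
class MeetingRegistry {
public:
    void join(const std::string& room, const std::string& user) {
        rooms_[room].insert(user);  // creates the room on first join
    }

    void leave(const std::string& room, const std::string& user) {
        auto it = rooms_.find(room);
        if (it == rooms_.end()) return;
        it->second.erase(user);
        if (it->second.empty()) rooms_.erase(it);  // close empty meetings
    }

    // Everyone in the room except the sender receives the sender's stream.
    std::vector<std::string> forward_targets(const std::string& room,
                                             const std::string& sender) const {
        std::vector<std::string> targets;
        auto it = rooms_.find(room);
        if (it == rooms_.end()) return targets;
        for (const auto& user : it->second) {
            if (user != sender) targets.push_back(user);
        }
        return targets;
    }

    std::size_t room_count() const { return rooms_.size(); }

private:
    std::map<std::string, std::set<std::string>> rooms_;  // room -> participants
};
```

Because an SFU forwards rather than decodes and re-mixes, its CPU cost stays low, while its outgoing bandwidth grows with the number of participants in each room.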