Introduction:

This article was written by Abao1990 and is published with the author's authorization.

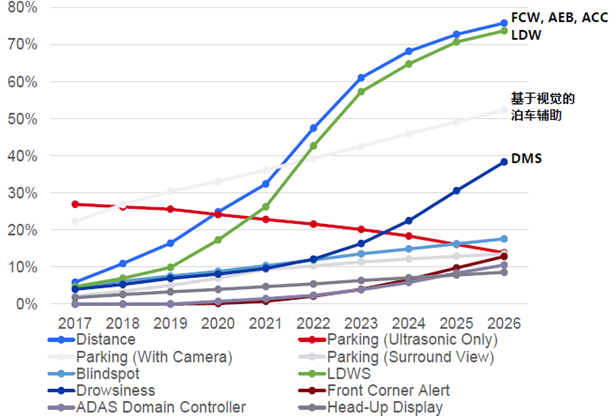

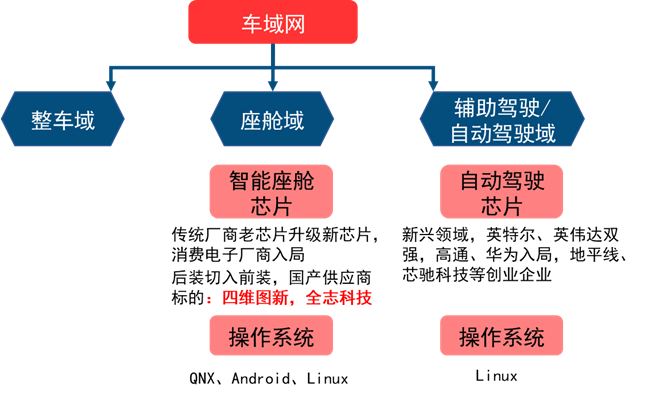

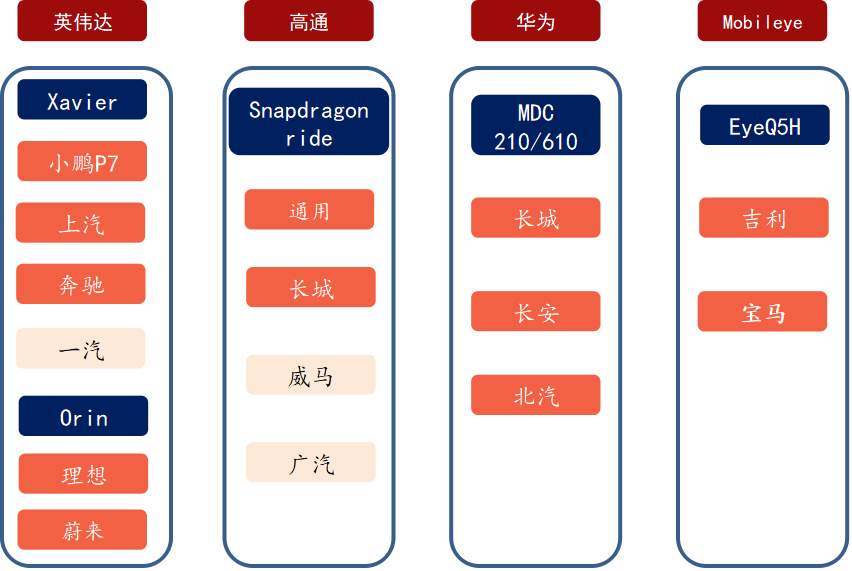

Declining component costs and intensified competition in the mid-to-low-end vehicle market have rapidly raised the penetration rate of ADAS in China, with installations in domestic-brand vehicles rising significantly. Let's take a look at the mainstream chips and platform architectures used by major car manufacturers.

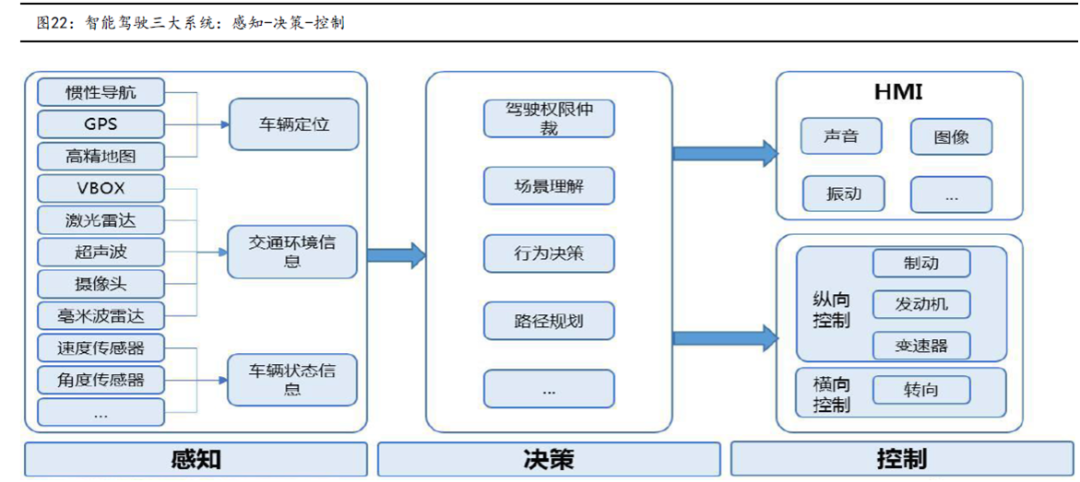

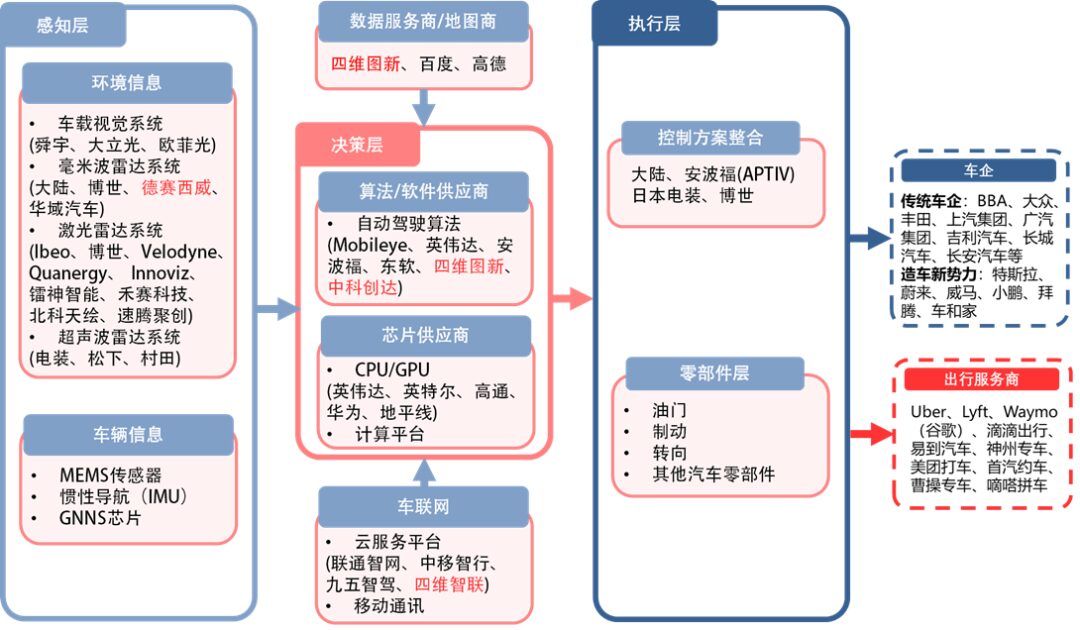

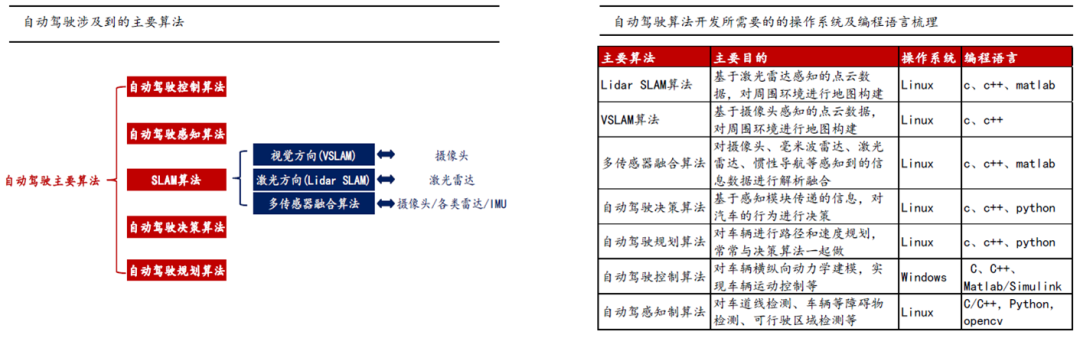

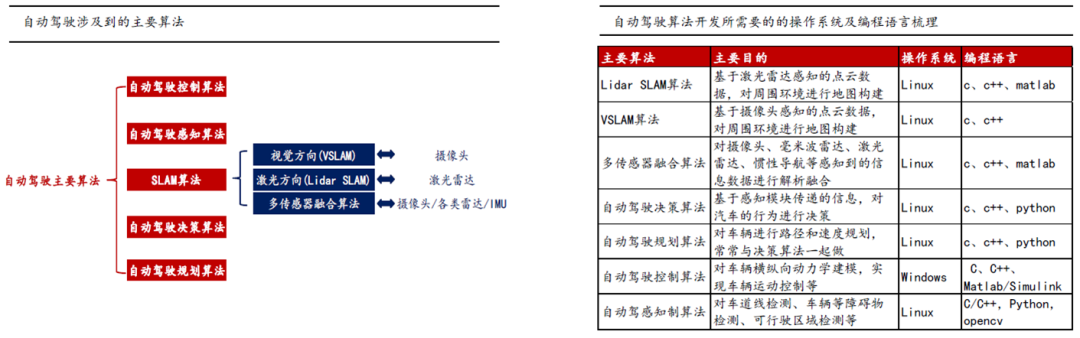

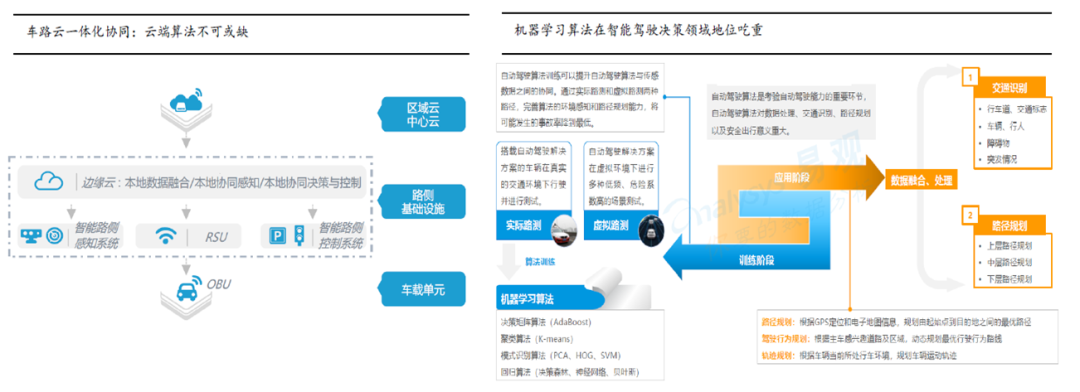

Introduction to Autonomous Driving Components and Key Technologies

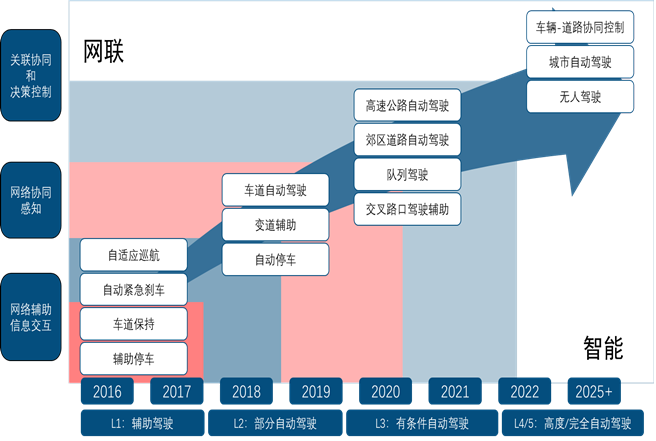

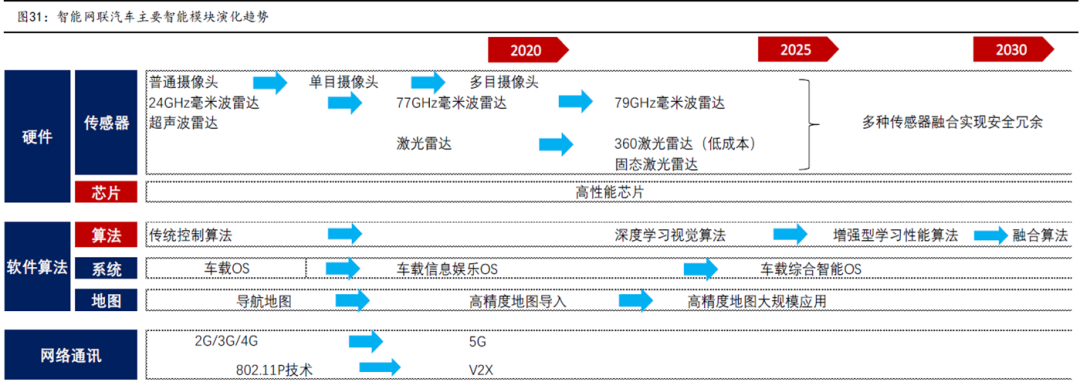

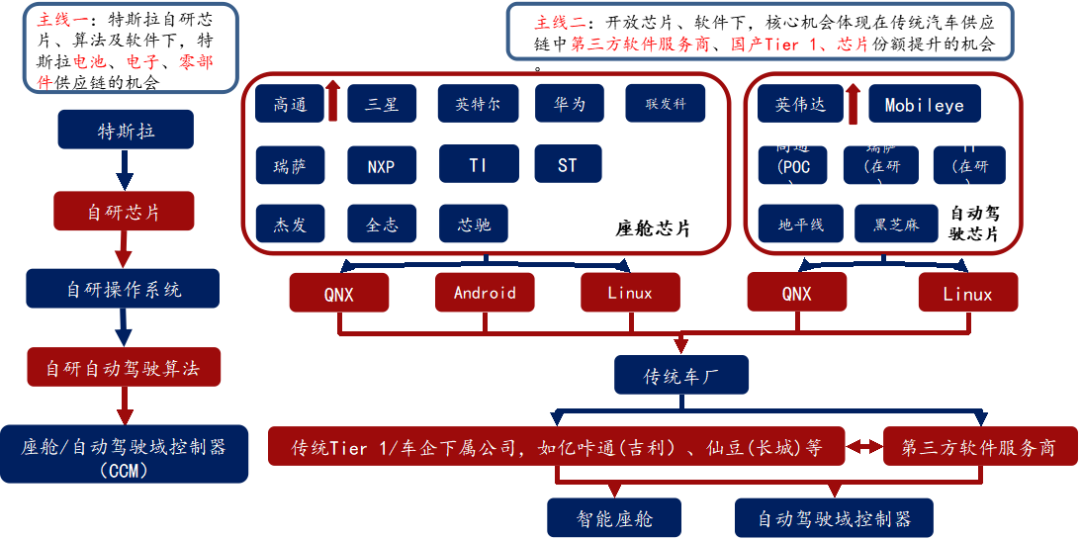

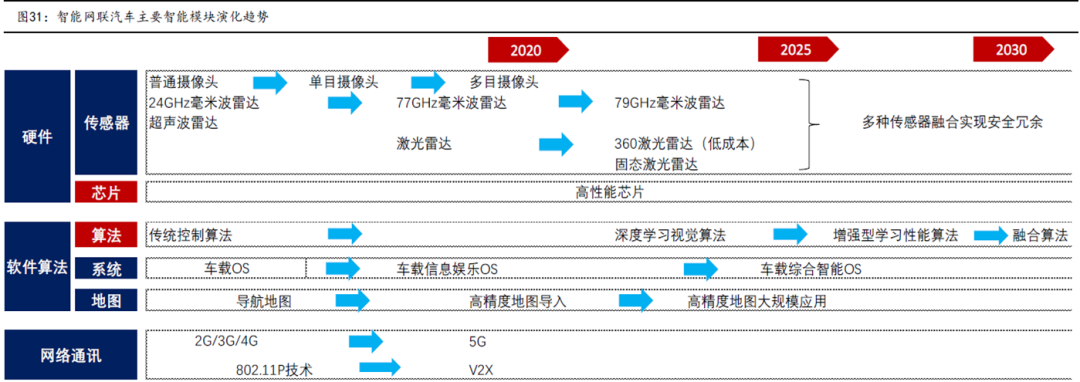

The semiconductor and energy revolutions are driving this wave of automotive intelligence and electrification, and the resulting landscape mirrors the structure of the semiconductor industry chain.

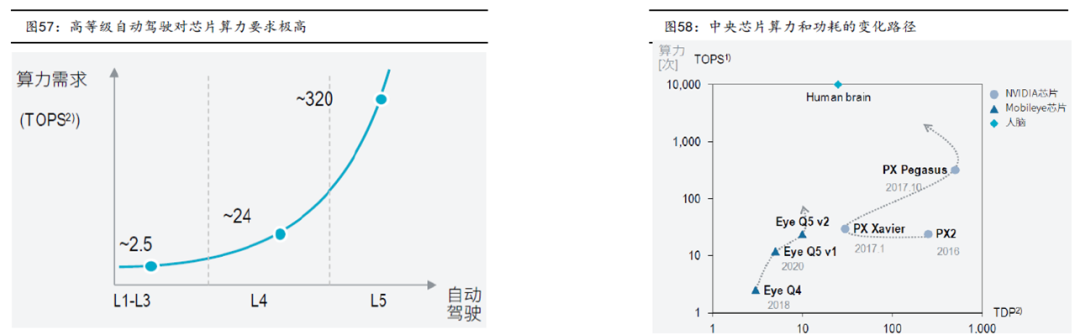

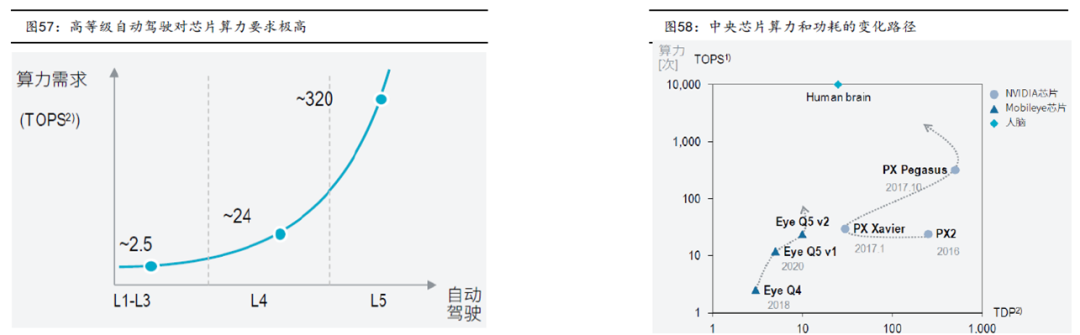

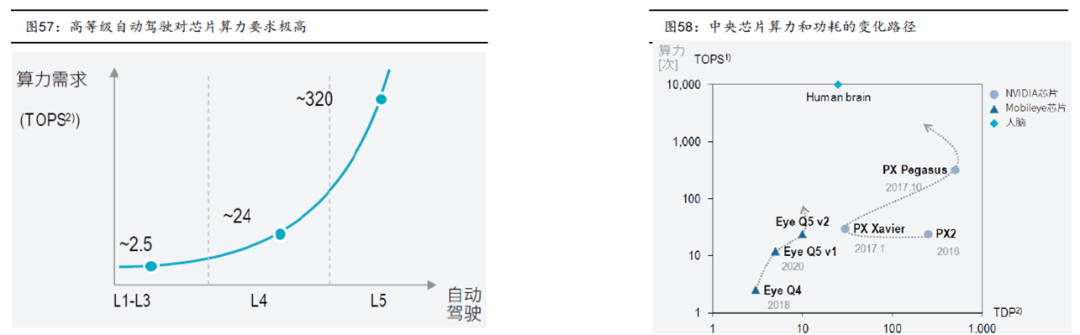

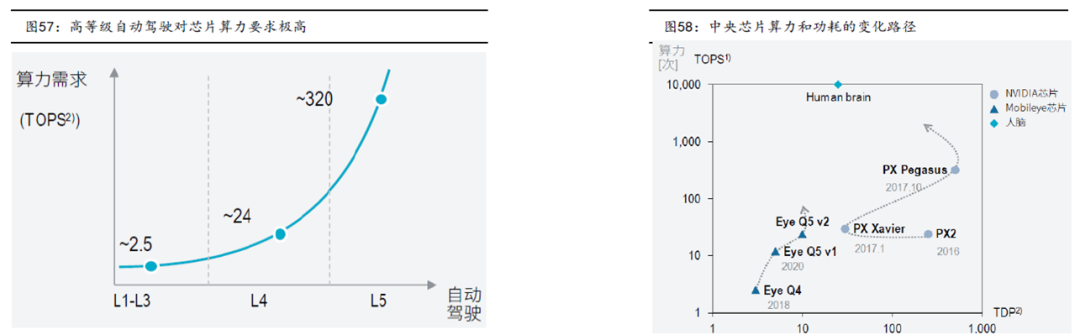

Introduction to Autonomous Driving Chip Performance

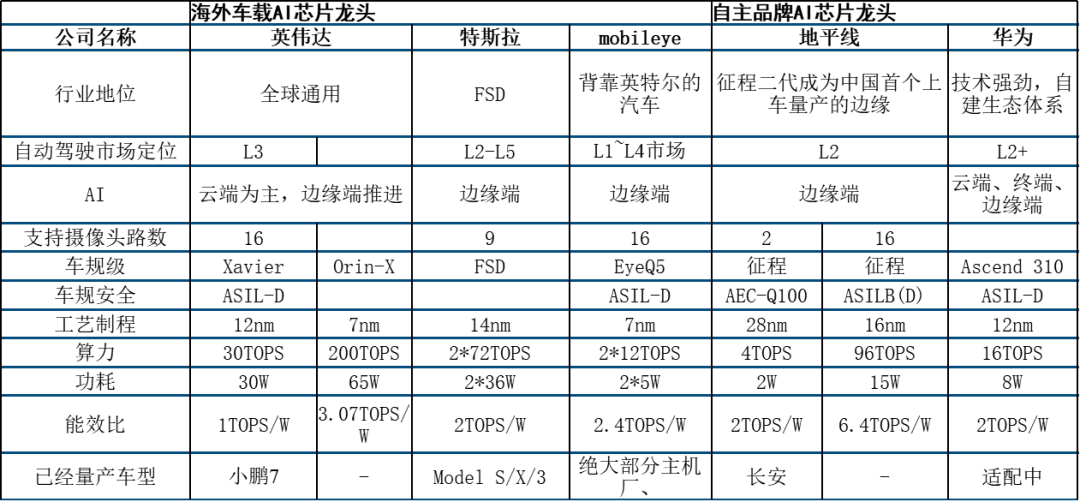

Comparison of Autonomous Driving Platforms and Selection Considerations

Conclusion
