(This article is translated from Electronic Design.)

Artificial intelligence has now permeated many industries. In medical diagnosis, the fundus disease recognition system developed by Google DeepMind analyzes hundreds of thousands of retinal images and reaches a diagnostic accuracy of 97.5% for diabetic retinopathy, surpassing the average human doctor. AlphaFold took just two years to solve the protein structure prediction problem that had puzzled biologists for half a century, saving decades of exploratory work in new drug development.

In the technology sector, AI is being applied to everything from automated design flows to code generation, and its use is expected to grow exponentially. Recent reports indicate that AI is replacing thousands of tech jobs at hundreds of major companies, including Duolingo, Google, Intel, Microsoft, NVIDIA, and TikTok.

AI is often described as a helpful tool for engineers and developers, yet the recent wave of mass layoffs in the tech industry raises questions: Are these AI tools good enough to replace workers? Can AI be fully trusted in the design or coding process?

AI assistants such as ChatGPT, Copilot, and Gemini have worrying accuracy problems. In one evaluation, 51% of their responses contained significant issues, and 19% contained factual errors. Similar problems have been reported in AI search tools, translation applications, image recognition, healthcare, and even the criminal justice system.

So what is the situation in the tech industry itself, which depends heavily on human innovation and skill? Engineers and developers already use AI-based Electronic Design Automation (EDA) tools for printed circuit board (PCB) and circuit design. However, given the complexity of these designs and the judgment that the decision-making process demands, AI still falls short of human capabilities here.

AI: A Powerful Yet Concerning Tool

A recent paper published by the Institute of Robotics and Artificial Intelligence Excellence examines the trustworthiness of AI in safety-critical, real-time embedded systems, particularly those used in autonomous vehicles, robotics, and medical devices. The researchers point out that while AI (specifically, deep neural networks) is highly capable, it also raises serious concerns about temporal unpredictability, security vulnerabilities, and decision transparency, and they argue that these issues must be addressed before deployment.
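To make "temporal unpredictability" concrete: a safety-critical control loop cares not only about average latency but about the slowest case. The Python sketch below times repeated calls of a stand-in workload and reports the spread. The function names and the dummy `fake_inference` workload are hypothetical, and measurement of this kind only shows observed behavior; it cannot prove a worst-case execution time bound.

```python
import statistics
import time


def measure_latency(fn, runs=200):
    """Call fn() `runs` times and summarize the observed latencies in seconds.

    This only reports what happened during these runs; it does not bound
    the true worst-case execution time (WCET).
    """
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    fastest, slowest = min(samples), max(samples)
    return {
        "min": fastest,
        "mean": statistics.mean(samples),
        "max": slowest,
        # Jitter here is simply the spread between the fastest and slowest run.
        "jitter": slowest - fastest,
    }


def fake_inference():
    # Hypothetical stand-in for a DNN forward pass: a fixed amount of arithmetic.
    total = 0.0
    for i in range(20_000):
        total += (i % 7) * 0.5
    return total


if __name__ == "__main__":
    stats = measure_latency(fake_inference)
    for key, value in stats.items():
        print(f"{key:>6}: {value * 1e3:.3f} ms")
```

On a general-purpose OS the `max` figure often sits noticeably above the mean because of interrupts, caching, and scheduling; that gap is exactly what a hard real-time system has to bound.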

That said, modern embedded platforms keep evolving toward heterogeneous architectures that integrate Central Processing Units (CPUs), Graphics Processing Units (GPUs), Field-Programmable Gate Arrays (FPGAs), and dedicated accelerators to meet the growing demands of AI workloads. Nevertheless, resource management (such as process and thread management), temporal predictability, and task optimization remain significant obstacles.

GPUs running complex AI frameworks suffer from non-preemptive scheduling, high power consumption, and large form factors, which limit their use in embedded applications. FPGAs, with higher predictability, lower power consumption, and smaller form factors, offer a better alternative, but they come with their own trade-offs, including greater programming difficulty and limited resources.
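The cost of non-preemptive scheduling can be illustrated with a textbook blocking argument. If the device cannot preempt a running job, a newly released high-priority task may have to wait for an entire lower-priority job to finish. The sketch below computes that upper bound for the highest-priority task on a single device; the task names and timings are invented for illustration, and the model ignores release jitter, memory transfers, and other real GPU effects.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    wcet_ms: float  # worst-case execution time of one job on the device
    priority: int   # larger number = higher priority


def worst_case_response_of_top(tasks, preemptive):
    """Upper bound on the response time of the highest-priority task.

    Preemptive: the task starts as soon as it is released, so the bound is
    its own WCET. Non-preemptive: it may be released just after a
    lower-priority job has started and must wait for that job to finish,
    so the longest lower-priority WCET is added as a blocking term.
    Assumes a single device and that the top task's previous job always
    finishes before its next release.
    """
    top = max(tasks, key=lambda t: t.priority)
    if preemptive:
        return top.wcet_ms
    blocking = max((t.wcet_ms for t in tasks if t is not top), default=0.0)
    return top.wcet_ms + blocking


if __name__ == "__main__":
    # Hypothetical perception workload sharing one accelerator.
    tasks = [
        Task("obstacle_check", 2.0, 3),
        Task("lane_detect", 15.0, 2),
        Task("scene_segmentation", 40.0, 1),
    ]
    print("preemptive:    ", worst_case_response_of_top(tasks, True), "ms")
    print("non-preemptive:", worst_case_response_of_top(tasks, False), "ms")
```

In this toy example the 2 ms obstacle check can take up to 42 ms to complete simply because a 40 ms segmentation job cannot be interrupted. Strictly, the blocking term is slightly less than the longest lower-priority WCET, so the bound is safe but slightly pessimistic.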

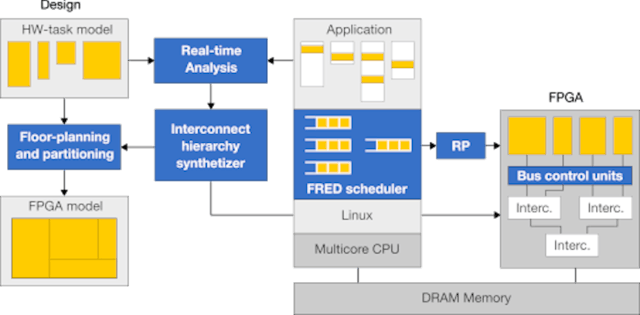

Recent technological advancements, including FPGA virtualization technologies like the FRED framework (as shown in Figure 1), help alleviate some of these challenges by supporting dynamic partial reconfiguration and better resource management.

Figure 1: The FRED framework supports the design, development, and execution of software for FPGAs.
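Dynamic partial reconfiguration lets several hardware tasks time-share one reconfigurable region of an FPGA, at the cost of loading a new partial bitstream whenever the task changes. The toy model below serves requests in FIFO order on a single slot and counts that overhead. It is not FRED's scheduler or API; the function, task names, and timings are hypothetical.

```python
from collections import deque


def simulate_slot(requests, exec_ms, reconfig_ms):
    """Serve hardware-task requests in FIFO order on one reconfigurable slot.

    requests: task names in arrival order (all assumed pending at t = 0).
    exec_ms: execution time of each hardware task once its bitstream is loaded.
    reconfig_ms: time to load a different partial bitstream into the slot.
    Returns (completion time of the last request, number of reconfigurations).
    """
    queue = deque(requests)
    loaded = None  # task whose bitstream currently occupies the slot
    t = 0.0
    reconfigs = 0
    while queue:
        task = queue.popleft()
        if task != loaded:
            # Pay the reconfiguration cost only when a different task is needed.
            t += reconfig_ms
            reconfigs += 1
            loaded = task
        t += exec_ms[task]
    return t, reconfigs


if __name__ == "__main__":
    # Hypothetical accelerators and timings, for illustration only.
    exec_ms = {"conv": 3.0, "fft": 2.0}
    finish, n = simulate_slot(["conv", "conv", "fft", "conv"], exec_ms, reconfig_ms=4.0)
    print(f"finished at {finish} ms after {n} reconfigurations")
```

With these numbers the last request completes at 23 ms, and 12 ms of that is reconfiguration, which is why the ordering and grouping of requests matter when hardware tasks share FPGA area.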

What About Safety and Reliability?

Security is another major reason AI is hard to trust in circuit and embedded system design. Most AI systems today are built on general-purpose operating systems such as Linux, which are exposed to cyberattacks. The Jeep Cherokee hack is a typical example: hackers Charlie Miller and Chris Valasek remotely took control of the vehicle's wipers, radio, steering, and engine, and brought the vehicle to a complete stop.

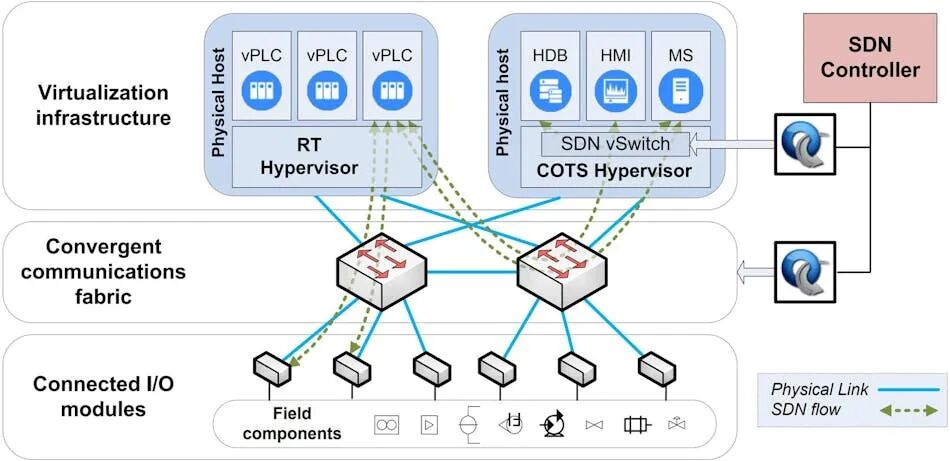

To prevent such incidents, some researchers have turned to hypervisor-based architectures, including commercial off-the-shelf (COTS) hypervisors and real-time (RT) hypervisors (as shown in Figure 2). These architectures isolate AI and safety-critical functions in separate domains and use built-in hardware security features, such as ARM's TrustZone technology, so that a failure in one domain cannot affect another.

Figure 2: Comparison of commercial off-the-shelf (COTS) hypervisors and real-time (RT) hypervisors.
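Some real-time hypervisors enforce isolation in time as well as in memory, for example by giving each domain fixed windows inside a repeating major frame. The sketch below validates such a static table and reports each domain's CPU share. The `Window` type, the domain names, and the 10 ms frame are illustrative assumptions, not the configuration format of any particular hypervisor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Window:
    domain: str
    start_ms: float
    length_ms: float


def check_schedule(windows, major_frame_ms):
    """Validate a static time-partitioning table; return each domain's CPU share.

    Raises ValueError if a window falls outside the major frame or overlaps
    another window, since either would let one domain eat into another's time.
    """
    ordered = sorted(windows, key=lambda w: w.start_ms)
    for w in ordered:
        if w.start_ms < 0 or w.length_ms <= 0 or w.start_ms + w.length_ms > major_frame_ms:
            raise ValueError(f"invalid window for domain {w.domain!r}")
    # With positive lengths and windows sorted by start, checking neighbors suffices.
    for prev, cur in zip(ordered, ordered[1:]):
        if prev.start_ms + prev.length_ms > cur.start_ms:
            raise ValueError(f"{prev.domain!r} overlaps {cur.domain!r}")
    share = {}
    for w in ordered:
        share[w.domain] = share.get(w.domain, 0.0) + w.length_ms / major_frame_ms
    return share


if __name__ == "__main__":
    # Hypothetical 10 ms major frame: the safety domain runs at both ends,
    # the AI domain gets the middle.
    table = [
        Window("safety", 0.0, 2.0),
        Window("ai", 2.0, 6.0),
        Window("safety", 8.0, 2.0),
    ]
    print(check_schedule(table, major_frame_ms=10.0))
```

Because the table is fixed and checked up front, a misbehaving AI workload can at worst exhaust its own 60% of the frame; it cannot delay the safety domain's windows.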

Of course, the reliability of AI itself is also a concern. Deep learning systems can easily be misled by erroneous inputs, by subtle, deliberately crafted changes to the data, or by out-of-distribution (OOD) inputs unlike anything they encountered during training. Such situations can lead the system to make wrong decisions, with potentially catastrophic consequences. In embedded systems, engineers need to understand why an AI made a particular decision, not just what decision it made.
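Subtle, deliberately crafted input changes of this kind are known as adversarial examples. A standard way to craft one is the Fast Gradient Sign Method (FGSM), which moves every input feature by a small amount `eps` in the direction that most increases the model's loss. The sketch below applies it to a tiny logistic-regression model instead of a deep network, so the input gradient can be written in closed form; the weights and input values are made up.

```python
import math


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method for a logistic-regression model.

    With binary cross-entropy loss, the gradient of the loss with respect to
    the input is (p - y) * w, so each feature is shifted by eps in the
    direction that increases the loss.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


if __name__ == "__main__":
    # Made-up model and input, for illustration only.
    w, b = [2.0, -3.0, 1.0], 0.0
    x, y = [0.5, 0.1, 0.2], 1
    x_adv = fgsm(w, b, x, y, eps=0.2)
    print("clean:      ", round(predict_proba(w, b, x), 3))
    print("adversarial:", round(predict_proba(w, b, x_adv), 3))
```

The clean input is assigned class 1 with probability of about 0.71; after each feature moves by at most 0.2, the probability drops to about 0.43 and the predicted class flips. For deep networks the same idea applies, with the input gradient obtained by backpropagation.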

Explainable AI (XAI) techniques help open up what was previously a black box, revealing how a model behaves and uncovering hidden biases in its training data. This provides valuable insight for any toolchain, but XAI is not a panacea, and no single solution covers every case. Combining these approaches, however, may help train AI to design circuits and embedded systems safely and transparently.
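One simple, model-agnostic XAI technique is occlusion: replace one input feature at a time with a neutral baseline and see how much the output changes. The sketch below applies it to a hypothetical defect-scoring model for PCB traces; the feature names, weights, and baseline are invented. In this toy case the attribution shows that the score is driven mostly by a manufacturing-batch flag rather than by the physical trace geometry, the kind of hidden bias XAI is meant to surface.

```python
def occlusion_attribution(model, x, baseline):
    """Attribute model(x) to each input feature by occlusion.

    Each feature is replaced in turn by its baseline value; the resulting
    drop in the model's output is reported as that feature's contribution.
    """
    reference = model(x)
    scores = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline[i]
        scores.append(reference - model(occluded))
    return scores


def defect_score(x):
    # Hypothetical trained model: features are trace width and spacing
    # (scaled to [0, 1]) plus a 0/1 flag for boards from manufacturing batch B.
    width, spacing, from_batch_b = x
    return 0.5 * width + 0.2 * spacing + 3.0 * from_batch_b


if __name__ == "__main__":
    names = ["width", "spacing", "from_batch_b"]
    x = [1.0, 1.0, 1.0]
    baseline = [0.0, 0.0, 0.0]
    for name, score in zip(names, occlusion_attribution(defect_score, x, baseline)):
        print(f"{name:>13}: {score:.2f}")
```

For a linear model like this one, occlusion against a zero baseline recovers each weight times its feature value exactly; for deep networks it is only an approximation, and the result depends on the chosen baseline.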

AI cannot yet handle every task, nor could it pass an ethics committee's review. The situation may change dramatically over the next ten to twenty years, however, as more advanced training models, architectures, and security measures emerge. We need to recognize the efficiency revolution AI brings without shying away from its hidden risks; only by rationally delineating the boundaries of trust can technology truly serve humanity.
